Statistics Made Easy

What is Univariate Analysis? (Definition & Example)

The term univariate analysis refers to the analysis of one variable. You can remember this because the prefix “uni” means “one.”

The purpose of univariate analysis is to understand the distribution of values for a single variable. You can contrast this type of analysis with the following:

- Bivariate Analysis : The analysis of two variables.

- Multivariate Analysis: The analysis of two or more variables.

For example, suppose we have the following dataset:

We could choose to perform univariate analysis on any of the individual variables in the dataset to gain a better understanding of its distribution of values.

For example, we may choose to perform univariate analysis on the variable Household Size :

There are three common ways to perform univariate analysis:

1. Summary Statistics

The most common way to perform univariate analysis is to describe a variable using summary statistics .

There are two popular types of summary statistics:

- Measures of central tendency : these numbers describe where the center of a dataset is located. Examples include the mean and the median .

- Measures of dispersion : these numbers describe how spread out the values are in the dataset. Examples include the range , interquartile range , standard deviation , and variance .

2. Frequency Distributions

Another way to perform univariate analysis is to create a frequency distribution , which describes how often different values occur in a dataset.

Yet another way to perform univariate analysis is to create charts to visualize the distribution of values for a certain variable.

Common examples include:

- Density Curves

The following examples show how to perform each type of univariate analysis using the Household Size variable from our dataset mentioned earlier:

Summary Statistics

We can calculate the following measures of central tendency for Household Size:

- Mean (the average value): 3.8

- Median (the middle value): 4

These values give us an idea of where the “center” value is located.

We can also calculate the following measures of dispersion:

- Range (the difference between the max and min): 6

- Interquartile Range (the spread of the middle 50% of values): 2.5

- Standard Deviation (an average measure of spread): 1.87

These values give us an idea of how spread out the values are for this variable.

Frequency Distributions

We can also create the following frequency distribution table to summarize how often different values occur:

This allows us to quickly see that the most frequent household size is 4 .

Resource: You can use this Frequency Calculator to automatically produce a frequency distribution for any variable.

We can create the following charts to help us visualize the distribution of values for Household Size:

A boxplot is a plot that shows the five-number summary of a dataset.

The five-number summary includes:

- The minimum value

- The first quartile

- The median value

- The third quartile

- The maximum value

Here’s what a boxplot would look like for the variable Household Size:

Resource: You can use this Boxplot Generator to automatically produce a boxplot for any variable.

2. Histogram

A histogram is a type of chart that uses vertical bars to display frequencies. This type of chart is a useful way to visualize the distribution of values in a dataset.

Here’s what a histogram would look like for the variable Household Size:

3. Density Curve

A density curve is a curve on a graph that represents the distribution of values in a dataset.

It’s particularly useful for visualizing the “shape” of a distribution, including whether or not a distribution has one or more “peaks” of frequently occurring values and whether or not the distribution is skewed to the left or the right .

Here’s what a density curve would look like for the variable Household Size:

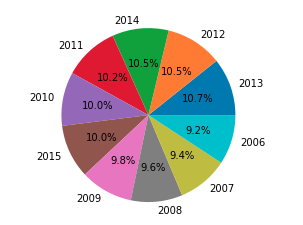

4. Pie Chart

A pie chart is a type of chart that is shaped like a circle and uses slices to represent proportions of a whole.

Here’s what a pie chart would look like for the variable Household Size:

Depending on the type of data, one of these charts may be more useful for visualizing the distribution of values than the others.

Hey there. My name is Zach Bobbitt. I have a Master of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.

Leave a Reply Cancel reply

Your email address will not be published. Required fields are marked *

- Memberships

Univariate Analysis: basic theory and example

Univariate analysis: this article explains univariate analysis in a practical way. The article begins with a general explanation and an explanation of the reasons for applying this method in research, followed by the definition of the term and a graphical representation of the different ways of representing univariate statistics. Enjoy the read!

Introduction

Research is a dynamic process that carefully uses different techniques and methods to gain insights, validate hypotheses and make informed decisions.

Using a variety of analytical methods, researchers can gain a thorough understanding of their data, revealing patterns, trends, and relationships.

One of the main approaches or methods for research is the univariate analysis, which provides valuable insights into individual variables and their characteristics.

In this article, we dive into the world of univariate analysis, its definition, importance and applications in research.

Techniques and methods in research

Research methodologies encompass a wide variety of techniques and methods that help researchers extract meaningful information from their data. Some common approaches are:

Descriptive statistics

Summarizing data using measures such as mean, median, mode, variance, and standard deviation.

Inferential statistics

Drawing conclusions about a broader population based on a sample. Methods such as hypothesis testing and confidence intervals are used for this.

Multivariate analysis

Exploring relationships between multiple variables simultaneously, allowing researchers to explore complex interactions and dependencies. A bivariate analysis is when the relationship between two variables is explored.

Qualitative analysis

Discovering insights and trying to understand subjective type of data, such as interviews, observations and case studies.

Quantitative analysis

Analyzing numerical data using statistical methods to reveal patterns and trends.

What is univariate analysis?

Univariate analysis focuses on the study and interpretation of only one variable on its own, without considering possible relationships with other variables.

The method aims to understand the characteristics and behavior of that specific variable. Univariate analysis is the simplest form of analyzing data.

Definition of univariate

The term univariate consists of two elements: uni, which means one, and variate, which refers to a statistical variable. Therefore, univariate analysis focuses on exploring and summarizing the properties of one variable independently.

Importance of univariate analysis

Univariate analysis serves as an important first step in many research projects, as it provides essential insights and lays a foundation for further research. It offers researchers the following benefits:

Data exploration

Univariate analysis allows researchers to understand the distribution, central tendency, and variability of a variable.

Identification of outliers

By detecting anomalous values, univariate analysis helps identify outliers that require further investigation or treatment during the data analysis phase.

Data cleaning

Univariate analysis helps identify missing data, inconsistencies or errors within a variable, allowing researchers to refine and optimize their data set before moving on to more complex analyses.

Variable selection

Researchers can use the univariate analysis to determine which variables are most promising for further research. This enables efficient allocation of resources and hypothesis testing.

Reporting and visualization

Summarizing and visualizing univariate statistics facilitates clear and concise reporting of research results. This makes complex data more accessible to a wider audience.

Research Methods For Business Students Course A-Z guide to writing a rockstar Research Paper with a bulletproof Research Methodology! More information

Applications of univariate analysis

Univariate analysis is used in various research areas and disciplines. It is often used in:

- Epidemiological studies to analyze risk factors

- Social science research to investigate attitudes, behaviors or socio-economic variables

- Market research to understand consumer preferences, buying patterns or market trends

- Environmental studies to investigate pollution, climate data or species distributions

By using univariate analysis, researchers can uncover valuable insights, detect trends, and lay the groundwork for more comprehensive statistical analysis.

Types of univariate analyses

The most common method of performing univariate analysis is summary statistics. The correct statistics are determined by the level of measurement or the nature of the information in the variabels. The following are the most common types of summary statistics:

- Measures of dispersion: these numbers describe how evenly the values are distributed in a dataset. The range, standard deviation, interquartile range, and variance are some examples.

- Range: the difference between the highest and lowest value in a data set.

- Standard deviation: an average measure of the spread.

- Interquartile range: the spread of the middle 50% of the values.

- Measures of central tendency: these numbers describe the location of the center point of a data set or the middle value of the data set. The mean, median and mode are the three main measures of central tendency.

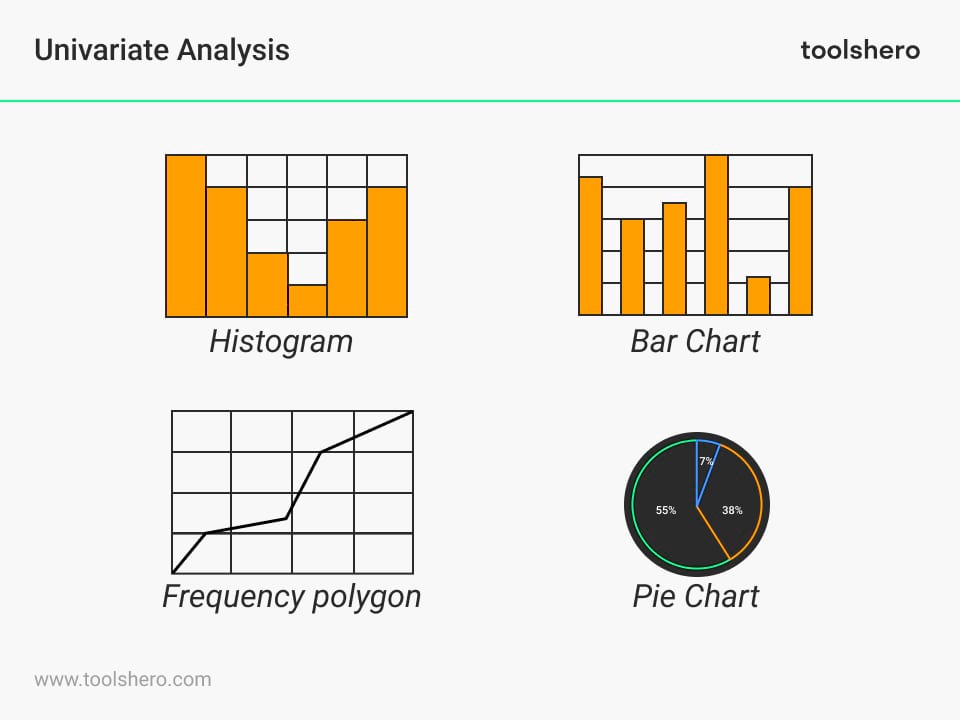

Figure 1. Univariate Analysis – Types

Frequency table

Frequency indicates how often something occurs. The frequency of observation thus indicates the number of times an event occurs.

The frequency distribution table can display qualitative and numerical or quantitative variables. The distribution provides an overview of the data and allows you to spot patterns.

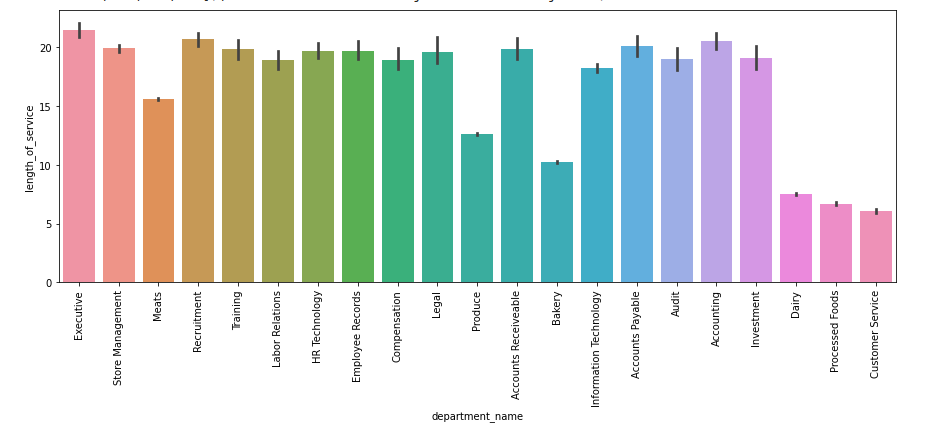

The bar chart is displayed in the form of rectangular bars. The chart compares different categories. The chart can be plotted vertically or horizontally.

In most cases, the bar is plotted vertically.

The horizontal or x-axis represents the category and the vertical y-axis represents the value of the category.

This diagram can be used, for example, to see which part of a budget is the largest.

A histogram is a graph that shows how often certain values occur in a data set. It consists of bars whose height indicates how often a certain value occurs.

Frequency polygon

The frequency polygon is very similar to the histogram. It is used to compare data sets or to display the cumulative frequency distribution.

The frequency polygon is displayed as a line graph.

The pie chart displays the data in a circular format. The diagram is divided into pieces where each piece is proportional to its part of the complete category. So each “pie slice” in the pie chart is a portion of the total. The total of the pieces should always be 100.

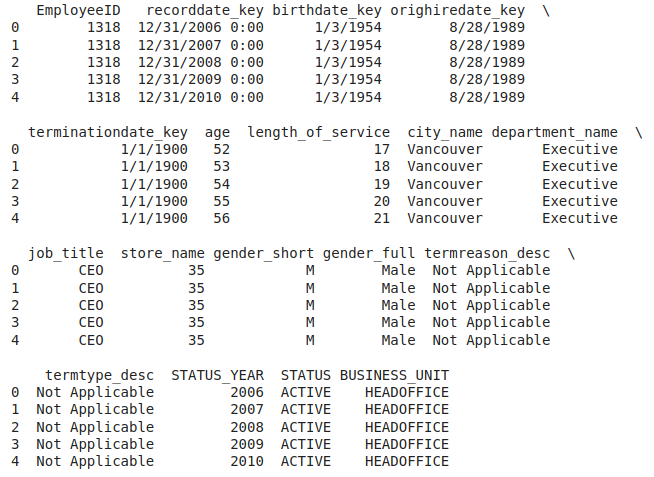

Example situation of an Univariate Analysis

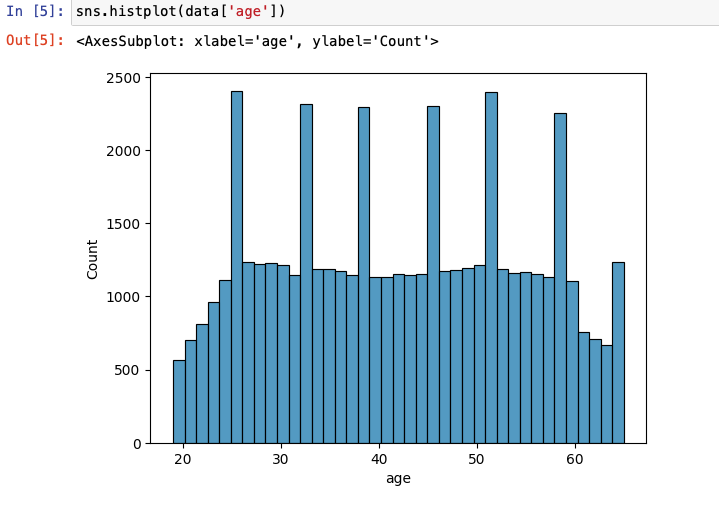

An example of univariate analysis might be examining the age of employees in a company.

Data is collected on the age of all employees and then a univariate analysis is performed to understand the characteristics and distribution of this single variable.

We can calculate summary statistics, such as the mean, median, and standard deviation, to get an idea of the central tendency and range of ages.

Histograms can also be used to visualize the frequency of different age groups and to identify any patterns or outliers.

Now it’s your turn

What do you think? Do you recognize the explanation about the univariate analysis? Have you ever heard of univariate analysis? Have you applied it yourself during any of the studies you have conducted? Do you know of any other methods or techniques used in conjunction with univariate analysis? Are you familiar with the visual graphs used in univariate analysis?

Share your experience and knowledge in the comments box below.

More information about the Univariate Analysis

- Barick, R. (2021). Research Methods For Business Students . Retrieved 02/16/2024 from Udemy.

- Dowdy, S., Wearden, S., & Chilko, D. (2011). Statistics for research . John Wiley & Sons.

- Garfield, J., & Ben‐Zvi, D. (2007). How students learn statistics revisited: A current review of research on teaching and learning statistics . International statistical review, 75(3), 372-396.

- Ostle, B. (1963). Statistics in research . Statistics in research., (2nd Ed).

- Wagner III, W. E. (2019). Using IBM® SPSS® statistics for research methods and social science statistics . Sage Publications .

How to cite this article: Janse, B. (2024). Univariate Analysis . Retrieved [insert date] from Toolshero: https://www.toolshero.com/research/univariate-analysis/

Original publication date: 03/22/2024 | Last update: 03/22/2024

Add a link to this page on your website: <a href=”https://www.toolshero.com/research/univariate-analysis/”>Toolshero: Univariate Analysis</a>

Did you find this article interesting?

Your rating is more than welcome or share this article via Social media!

Average rating 4.2 / 5. Vote count: 5

No votes so far! Be the first to rate this post.

We are sorry that this post was not useful for you!

Let us improve this post!

Tell us how we can improve this post?

Ben Janse is a young professional working at ToolsHero as Content Manager. He is also an International Business student at Rotterdam Business School where he focusses on analyzing and developing management models. Thanks to his theoretical and practical knowledge, he knows how to distinguish main- and side issues and to make the essence of each article clearly visible.

Related ARTICLES

Respondents: the definition, meaning and the recruitment

Market Research: the Basics and Tools

Gartner Magic Quadrant report and basics explained

Bivariate Analysis in Research explained

Contingency Table: the Theory and an Example

Content Analysis explained plus example

Also interesting.

Field Research explained

Observational Research Method explained

Research Ethics explained

Leave a reply cancel reply.

You must be logged in to post a comment.

BOOST YOUR SKILLS

Toolshero supports people worldwide ( 10+ million visitors from 100+ countries ) to empower themselves through an easily accessible and high-quality learning platform for personal and professional development.

By making access to scientific knowledge simple and affordable, self-development becomes attainable for everyone, including you! Join our learning platform and boost your skills with Toolshero.

POPULAR TOPICS

- Change Management

- Marketing Theories

- Problem Solving Theories

- Psychology Theories

ABOUT TOOLSHERO

- Free Toolshero e-book

- Memberships & Pricing

Want to create or adapt books like this? Learn more about how Pressbooks supports open publishing practices.

23 14. Univariate analysis

Chapter outline.

- Where do I start with quantitative data analysis? (12 minute read time)

- Measures of central tendency (17 minute read time, including 5-minute video)

- Frequencies and variability (13 minute read time)

People often dread quantitative data analysis because – oh no – it’s math. And true, you’re going to have to work with numbers. For years, I thought I was terrible at math, and then I started working with data and statistics, and it turned out I had a real knack for it. (I have a statistician friend who claims statistics is not math, which is a math joke that’s way over my head, but there you go.) This chapter, and the subsequent quantitative analysis chapters, are going to focus on helping you understand descriptive statistics and a few statistical tests, NOT calculate them (with a couple of exceptions). Future research classes will focus on teaching you to calculate these tests for yourself. So take a deep breath and clear your mind of any doubts about your ability to understand and work with numerical data.

In this chapter, we’re going to discuss the first step in analyzing your quantitative data: univariate data analysis. Univariate data analysis is a quantitative method in which a variable is examined individually to determine its distribution , or “the way the scores are distributed across the levels of that variable” (Price et. al, Chapter 12.1, para. 2). When we talk about levels , what we are talking about are the possible values of the variable – like a participant’s age, income or gender. (Note that this is different than our earlier discussion in Chaper 10 of levels of measurement , but the level of measurement of your variables absolutely affects what kinds of analyses you can do with it.) Univariate analysis is n on-relational , which just means that we’re not looking into how our variables relate to each other. Instead, we’re looking at variables in isolation to try to understand them better. For this reason, univariate analysis is best for descriptive research questions.

So when do you use univariate data analysis? Always! It should be the first thing you do with your quantitative data, whether you are planning to move on to more sophisticated statistical analyses or are conducting a study to describe a new phenomenon. You need to understand what the values of each variable look like – what if one of your variables has a lot of missing data because participants didn’t answer that question on your survey? What if there isn’t much variation in the gender of your sample? These are things you’ll learn through univariate analysis.

14.1 Where do I start with quantitative data analysis?

Learning objectives.

Learners will be able to…

- Define and construct a data analysis plan

- Define key data management terms – variable name, data dictionary, primary and secondary data, observations/cases

No matter how large or small your data set is, quantitative data can be intimidating. There are a few ways to make things manageable for yourself, including creating a data analysis plan and organizing your data in a useful way. We’ll discuss some of the keys to these tactics below.

The data analysis plan

As part of planning for your research, and to help keep you on track and make things more manageable, you should come up with a data analysis plan. You’ve basically been working on doing this in writing your research proposal so far. A data analysis plan is an ordered outline that includes your research question, a description of the data you are going to use to answer it, and the exact step-by-step analyses, that you plan to run to answer your research question. This last part – which includes choosing your quantitative analyses – is the focus of this and the next two chapters of this book.

A basic data analysis plan might look something like what you see in Table 14.1. Don’t panic if you don’t yet understand some of the statistical terms in the plan; we’re going to delve into them throughout the next few chapters. Note here also that this is what operationalizing your variables and moving through your research with them looks like on a basic level.

An important point to remember is that you should never get stuck on using a particular statistical method because you or one of your co-researchers thinks it’s cool or it’s the hot thing in your field right now. You should certainly go into your data analysis plan with ideas, but in the end, you need to let your research question and the actual content of your data guide what statistical tests you use. Be prepared to be flexible if your plan doesn’t pan out because the data is behaving in unexpected ways.

Managing your data

Whether you’ve collected your own data or are using someone else’s data, you need to make sure it is well-organized in a database in a way that’s actually usable. “Database” can be kind of a scary word, but really, I just mean an Excel spreadsheet or a data file in whatever program you’re using to analyze your data (like SPSS, SAS, or r). (I would avoid Excel if you’ve got a very large data set – one with millions of records or hundreds of variables – because it gets very slow and can only handle a certain number of cases and variables, depending on your version. But if your data set is smaller and you plan to keep your analyses simple, you can definitely get away with Excel.) Your database or data set should be organized with variables as your columns and observations/cases as your rows. For example, let’s say we did a survey on ice cream preferences and collected the following information in Table 14.2:

There are a few key data management terms to understand:

- Variable name : Just what it sounds like – the name of your variable. Make sure this is something useful, short and, if you’re using something other than Excel, all one word. Most statistical programs will automatically rename variables for you if they aren’t one word, but the names are usually a little ridiculous and long.

- Observations/cases : The rows in your data set. In social work, these are often your study participants (people), but can be anything from census tracts to black bears to trains. When we talk about sample size, we’re talking about the number of observations/cases. In our mini data set, each person is an observation/case.

- Primary data : Data you have collected yourself.

- Secondary data : Data someone else has collected that you have permission to use in your research. For example, for my student research project in my MSW program, I used data from a local probation program to determine if a shoplifting prevention group was reducing the rate at which people were re-offending. I had data on who participated in the program and then received their criminal history six months after the end of their probation period. This was secondary data I used to determine whether the shoplifting prevention group had any effect on an individual’s likelihood of re-offending.

- Data dictionary (sometimes called a code book) : This is the document where you list your variable names, what the variables actually measure or represent, what each of the values of the variable mean if the meaning isn’t obvious (i.e., if there are numbers assigned to gender), the level of measurement and anything special to know about the variables (for instance, the source if you mashed two data sets together). If you’re using secondary data, the data dictionary should be available to you.

When considering what data you might want to collect as part of your project, there are two important considerations that can create dilemmas for researchers. You might only get one chance to interact with your participants, so you must think comprehensively in your planning phase about what information you need and collect as much relevant data as possible. At the same time, though, especially when collecting sensitive information, you need to consider how onerous the data collection is for participants and whether you really need them to share that information. Just because something is interesting to us doesn’t mean it’s related enough to our research question to chase it down. Work with your research team and/or faculty early in your project to talk through these issues before you get to this point. And if you’re using secondary data , make sure you have access to all the information you need in that data before you use it.

Let’s take that mini data set we’ve got up above and I’ll show you what your data dictionary might look like in Table 14.3.

Key Takeaways

- Getting organized at the beginning of your project with a data analysis plan will help keep you on track. Data analysis plans should include your research question, a description of your data, and a step-by-step outline of what you’re going to do with it.

- Be flexible with your data analysis plan – sometimes data surprises us and we have to adjust the statistical tests we are using.

- Always make a data dictionary or, if using secondary data, get a copy of the data dictionary so you (or someone else) can understand the basics of your data.

- Make a data analysis plan for your project. Remember this should include your research question, a description of the data you will use, and a step-by-step outline of what you’re going to do with your data once you have it, including statistical tests (non-relational and relational) that you plan to use. You can do this exercise whether you’re using quantitative or qualitative data! The same principles apply.

- Make a data dictionary for the data you are proposing to collect as part of your study. You can use the example above as a template.

14.2 Measures of central tendency

- Explain measures of central tendency – mean, median and mode – and when to use them to describe your data

- Explain the importance of examining the range of your data

- Apply the appropriate measure of central tendency to a research problem or question

A measure of central tendency is one number that can give you an idea about the distribution of your data. The video below gives a more detailed introduction to central tendency. Then we’ll talk more specifically about our three measures of central tendency – mean, median and mode.

One quick note: the narrator in the video mentions skewness and kurtosis . Basically, these refer to a particular shape for a distribution when you graph it out.e.That gets into some more advanced multivariate analysis that we aren’t tackling in this book, so just file them away for a more advanced class, if you ever take on.

There are three key measures of central tendency, which we’ll go into now.

The mean , also called the average, is calculated by adding all your cases and dividing the sum by the number of cases. You’ve undoubtedly calculated a mean at some point in your life. The mean is the most widely used measure of central tendency because it’s easy to understand and calculate. It can only be used with interval/ratio variables, like age, test scores or years of post-high school education. (If you think about it, using it with a nominal or ordinal variable doesn’t make much sense – why do we care about the average of our numerical values we assigned to certain races?)

The biggest drawback of using the mean is that it’s extremely sensitive to outliers , or extreme values in your data. And the smaller your data set is, the more sensitive your mean is to these outliers. One thing to remember about outliers – they are not inherently bad, and can sometimes contain really important information. Don’t automatically discard them because they skew your data.

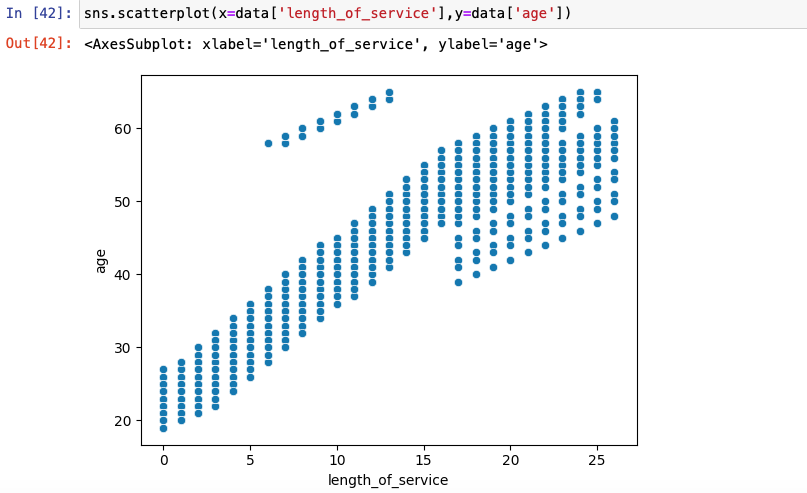

Let’s take a minute to talk about how to locate outliers in your data. If your data set is very small, you can just take a look at it and see outliers. But in general, you’re probably going to be working with data sets that have at least a couple dozen cases, which makes just looking at your values to find outliers difficult. The best way to quickly look for outliers is probably to make a scatter plot with excel or whatever database management program you’re using.

Let’s take a very small data set as an example. Oh hey, we had one before! I’ve re-created it in Table 14.5. We’re going to add some more cases to it so it’s a little easier to illustrate what we’re doing.

Let’s say we’re interested in knowing more about the distribution of participant age. Let’s see a scatterplot of age (Figure 14.1). On our y-axis (the vertical one) is the value of age, and on our x-axis (the horizontal one) is the frequency of each age, or the number of times it appears in our data set.

Do you see any outliers in the scatter plot? There is one participant who is significantly older than the rest at age 54. Let’s think about what happens when we calculate our mean with and without that outlier. Complete the two exercises below by using the ages listed in our mini-data set in this section.

Next, let’s try it without the outlier.

With our outlier, the average age of our participants is 28, and without it, the average age is 25. That might not seem enormous, but it illustrates the effects of outliers on the mean.

Just because Tom is an outlier at age 54 doesn’t mean you should exclude him. The most important thing about outliers is to think critically about them and how they could affect your analysis. Finding outliers should prompt a couple of questions. First, could the data have been entered incorrectly? Is Tom actually 24, and someone just hit the “5” instead of the “2” on the number pad? What might be special about Tom that he ended up in our group, given how that he is different? Are there other relevant ways in which Tom differs from our group (is he an outlier in other ways)? Does it really matter than Tom is much older than our other participants? If we don’t think age is a relevant factor in ice cream preferences, then it probably doesn’t. If we do, then we probably should have made an effort to get a wider range of ages in our participants.

The median (also called the 50th percentile) is the middle value when all our values are placed in numerical order. If you have five values and you put them in numerical order, the third value will be the median. When you have an even number of values, you’ll have to take the average of the middle two values to get the median. So, if you have 6 values, the average of values 3 and 4 will be the median. Keep in mind that for large data sets, you’re going to want to use either Excel or a statistical program to calculate the median – otherwise, it’s nearly impossible logistically.

Like the mean, you can only calculate the median with interval/ratio variables, like age, test scores or years of post-high school education. The median is also a lot less sensitive to outliers than the mean. While it can be more time intensive to calculate, the median is preferable in most cases to the mean for this reason. It gives us a more accurate picture of where the middle of our distribution sits in most cases. In my work as a policy analyst and researcher, I rarely, if ever, use the mean as a measure of central tendency. Its main value for me is to compare it to the median for statistical purposes. So get used to the median, unless you’re specifically asked for the mean. (When we talk about t- tests in the next chapter, we’ll talk about when the mean can be useful.)

Let’s go back to our little data set and calculate the median age of our participants (Table 14.6).

Remember, to calculate the median, you put all the values in numerical order and take the number in the middle. When there’s an even number of values, take the average of the two middle values.

What happens if we remove Tom, the outlier?

With Tom in our group, the median age is 27.5, and without him, it’s 27. You can see that the median was far less sensitive to him being included in our data than the mean was.

The mode of a variable is the most commonly occurring value. While you can calculate the mode for interval/ratio variables, it’s mostly useful when examining and describing nominal or ordinal variables. Think of it this way – do we really care that there are two people with an income of $38,000 per year, or do we care that these people fall into a certain category related to that value, like above or below the federal poverty level?

Let’s go back to our ice cream survey (Table 14.7).

We can use the mode for a few different variables here: gender, hometown and fav_ice_cream. The cool thing about the mode is that you can use it for numeric/quantitative and text/quantitative variables.

So let’s find some modes. For hometown – or whether the participant’s hometown is the one in which the survey was administered or not – the mode is 0, or “no” because that’s the most common answer. For gender, the mode is 0, or “female.” And for fav_ice_cream, the mode is Chocolate, although there’s a lot of variation there. Sometimes, you may have more than one mode, which is still useful information.

One final thing I want to note about these three measures of central tendency: if you’re using something like a ranking question or a Likert scale, depending on what you’re measuring, you might use a mean or median, even though these look like they will only spit out ordinal variables. For example, say you’re a car designer and want to understand what people are looking for in new cars. You conduct a survey asking participants to rank the characteristics of a new car in order of importance (an ordinal question). The most commonly occurring answer – the mode – really tells you the information you need to design a car that people will want to buy. On the flip side, if you have a scale of 1 through 5 measuring a person’s satisfaction with their most recent oil change, you may want to know the mean score because it will tell you, relative to most or least satisfied, where most people fall in your survey. To know what’s most helpful, think critically about the question you want to answer and about what the actual values of your variable can tell you.

- The mean is the average value for a variable, calculated by adding all values and dividing the total by the number of cases. While the mean contains useful information about a variable’s distribution, it’s also susceptible to outliers, especially with small data sets.

- In general, the mean is most useful with interval/ratio variables.

- The median , or 50th percentile, is the exact middle of our distribution when the values of our variable are placed in numerical order. The median is usually a more accurate measurement of the middle of our distribution because outliers have a much smaller effect on it.

- In general, the median is only useful with interval/ratio variables.

- The mode is the most commonly occurring value of our variable. In general, it is only useful with nominal or ordinal variables.

- Say you want to know the income of the typical participant in your study. Which measure of central tendency would you use? Why?

- Find an interval/ratio variable and calculate the mean and median. Make a scatter plot and look for outliers.

- Find a nominal variable and calculate the mode.

14.3 Frequencies and variability

- Define descriptive statistics and understand when to use these methods.

- Produce and describe visualizations to report quantitative data.

Descriptive statistics refer to a set of techniques for summarizing and displaying data. We’ve already been through the measures of central tendency, (which are considered descriptive statistics) which got their own chapter because they’re such a big topic. Now, we’re going to talk about other descriptive statistics and ways to visually represent data.

Frequency tables

One way to display the distribution of a variable is in a frequency table . Table 14.2, for example, is a frequency table showing a hypothetical distribution of scores on the Rosenberg Self-Esteem Scale for a sample of 40 college students. The first column lists the values of the variable—the possible scores on the Rosenberg scale—and the second column lists the frequency of each score. This table shows that there were three students who had self-esteem scores of 24, five who had self-esteem scores of 23, and so on. From a frequency table like this, one can quickly see several important aspects of a distribution, including the range of scores (from 15 to 24), the most and least common scores (22 and 17, respectively), and any extreme scores that stand out from the rest.

There are a few other points worth noting about frequency tables. First, the levels listed in the first column usually go from the highest at the top to the lowest at the bottom, and they usually do not extend beyond the highest and lowest scores in the data. For example, although scores on the Rosenberg scale can vary from a high of 30 to a low of 0, Table 14.8 only includes levels from 24 to 15 because that range includes all the scores in this particular data set. Second, when there are many different scores across a wide range of values, it is often better to create a grouped frequency table, in which the first column lists ranges of values and the second column lists the frequency of scores in each range. Table 14.9, for example, is a grouped frequency table showing a hypothetical distribution of simple reaction times for a sample of 20 participants. In a grouped frequency table, the ranges must all be of equal width, and there are usually between five and 15 of them. Finally, frequency tables can also be used for nominal or ordinal variables, in which case the levels are category labels. The order of the category labels is somewhat arbitrary, but they are often listed from the most frequent at the top to the least frequent at the bottom.

A histogram is a graphical display of a distribution. It presents the same information as a frequency table but in a way that is grasped more quickly and easily. The histogram in Figure 14.2 presents the distribution of self-esteem scores in Table 14.8. The x- axis (the horizontal one) of the histogram represents the variable and the y- axis (the vertical one) represents frequency. Above each level of the variable on the x- axis is a vertical bar that represents the number of individuals with that score. When the variable is quantitative, as it is in this example, there is usually no gap between the bars. When the variable is nominal or ordinal, however, there is usually a small gap between them. (The gap at 17 in this histogram reflects the fact that there were no scores of 17 in this data set.)

Distribution shapes

When the distribution of a quantitative variable is displayed in a histogram, it has a shape. The shape of the distribution of self-esteem scores in Figure 14.2 is typical. There is a peak somewhere near the middle of the distribution and “tails” that taper in either direction from the peak. The distribution of Figure 14.2 is unimodal , meaning it has one distinct peak, but distributions can also be bimodal , as in Figure 14.3, meaning they have two distinct peaks. Figure 14.3, for example, shows a hypothetical bimodal distribution of scores on the Beck Depression Inventory. I know we talked about the mode mostly for nominal or ordinal variables, but you can actually use histograms to look at the distribution of interval/ratio variables, too, and still have a unimodal or bimodal distribution even if you aren’t calculating a mode. Distributions can also have more than two distinct peaks, but these are relatively rare in social work research.

Another characteristic of the shape of a distribution is whether it is symmetrical or skewed. The distribution in the center of Figure 14.4 is symmetrical . Its left and right halves are mirror images of each other. The distribution on the left is negatively skewed , with its peak shifted toward the upper end of its range and a relatively long negative tail. The distribution on the right is positively skewed, with its peak toward the lower end of its range and a relatively long positive tail.

Range: A simple measure of variability

The variability of a distribution is the extent to which the scores vary around their central tendency. Consider the two distributions in Figure 14.5, both of which have the same central tendency. The mean, median, and mode of each distribution are 10. Notice, however, that the two distributions differ in terms of their variability. The top one has relatively low variability, with all the scores relatively close to the center. The bottom one has relatively high variability, with the scores are spread across a much greater range.

One simple measure of variability is the range , which is simply the difference between the highest and lowest scores in the distribution. The range of the self-esteem scores in Table 12.1, for example, is the difference between the highest score (24) and the lowest score (15). That is, the range is 24 − 15 = 9. Although the range is easy to compute and understand, it can be misleading when there are outliers. Imagine, for example, an exam on which all the students scored between 90 and 100. It has a range of 10. But if there was a single student who scored 20, the range would increase to 80—giving the impression that the scores were quite variable when in fact only one student differed substantially from the rest.

- Descriptive statistics are a way to summarize and display data, and are essential to understand and report your data.

- A frequency table is useful for nominal and ordinal variables and is needed to produce a histogram

- A histogram is a graphic representation of your data that shows how many cases fall into each level of your variable.

- Variability is important to understand in analyzing your data because studying a phenomenon that does not vary for your population does not provide a lot of information.

- Think about the dependent variable in your project. What would you do if you analyzed its variability for people of different genders, and there was very little variability?

- What do you think it would mean if the distribution of the variable were bimodal?

Univariate data analysis is a quantitative method in which a variable is examined individually to determine its distribution.

the way the scores are distributed across the levels of that variable.

The possible values of the variable - like a participant's age, income or gender.

Referring to data analysis that doesn't examine how variables relate to each other.

An ordered outline that includes your research question, a description of the data you are going to use to answer it, and the exact analyses, step-by-step, that you plan to run to answer your research question.

The process of determining how to measure a construct that cannot be directly observed

A group of statistical techniques that examines the relationship between at least three variables

The name of your variable.

The rows in your data set. In social work, these are often your study participants (people), but can be anything from census tracts to black bears to trains.

Data someone else has collected that you have permission to use in your research.

This is the document where you list your variable names, what the variables actually measure or represent, what each of the values of the variable mean if the meaning isn't obvious.

One number that can give you an idea about the distribution of your data.

Also called the average, the mean is calculated by adding all your cases and dividing the total by the number of cases.

Extreme values in your data.

A graphical representation of data where the y-axis (the vertical one along the side) is your variable's value and the x-axis (the horizontal one along the bottom) represents the individual instance in your data.

The value in the middle when all our values are placed in numerical order. Also called the 50th percentile.

The most commonly occurring value of a variable.

A technique for summarizing and presenting data.

A table that lays out how many cases fall into each level of a varible.

a graphical display of a distribution.

A distribution with one distinct peak when represented on a histogram.

A distribution with two distinct peaks when represented on a histogram.

A distribution with a roughly equal number of cases on either side of the median.

A distribution where cases are clustered on one or the other side of the median.

The extent to which the levels of a variable vary around their central tendency (the mean, median, or mode).

The difference between the highest and lowest scores in the distribution.

Graduate research methods in social work Copyright © 2020 by Matthew DeCarlo, Cory Cummings, Kate Agnelli is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Share This Book

User Preferences

Content preview.

Arcu felis bibendum ut tristique et egestas quis:

- Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris

- Duis aute irure dolor in reprehenderit in voluptate

- Excepteur sint occaecat cupidatat non proident

Keyboard Shortcuts

8.1 - the univariate approach: analysis of variance (anova).

In the univariate case, the data can often be arranged in a table as shown in the table below:

The columns correspond to the responses to g different treatments or from g different populations. And, the rows correspond to the subjects in each of these treatments or populations.

- \(Y_{ij}\) = Observation from subject j in group i

- \(n_{i}\) = Number of subjects in group i

- \(N = n_{1} + n_{2} + \dots + n_{g}\) = Total sample size.

Assumptions for the Analysis of Variance are the same as for a two-sample t -test except that there are more than two groups:

- The data from group i has common mean = \(\mu_{i}\); i.e., \(E\left(Y_{ij}\right) = \mu_{i}\) . This means that there are no sub-populations with different means.

- Homoskedasticity : The data from all groups have common variance \(\sigma^2\); i.e., \(var(Y_{ij}) = \sigma^{2}\). That is, the variability in the data does not depend on group membership.

- Independence: The subjects are independently sampled.

- Normality : The data are normally distributed.

The hypothesis of interest is that all of the means are equal. Mathematically we write this as:

\(H_0\colon \mu_1 = \mu_2 = \dots = \mu_g\)

The alternative is expressed as:

\(H_a\colon \mu_i \ne \mu_j \) for at least one \(i \ne j\).

i.e., there is a difference between at least one pair of group population means. The following notation should be considered:

This involves taking an average of all the observations for j = 1 to \(n_{i}\) belonging to the i th group. The dot in the second subscript means that the average involves summing over the second subscript of y .

This involves taking the average of all the observations within each group and over the groups and dividing by the total sample size. The double dots indicate that we are summing over both subscripts of y .

- \(\bar{y}_{i.} = \frac{1}{n_i}\sum_{j=1}^{n_i}Y_{ij}\) = Sample mean for group i .

- \(\bar{y}_{..} = \frac{1}{N}\sum_{i=1}^{g}\sum_{j=1}^{n_i}Y_{ij}\) = Grand mean.

Here we are looking at the average squared difference between each observation and the grand mean. Note that if the observations tend to be far away from the Grand Mean then this will take a large value. Conversely, if all of the observations tend to be close to the Grand mean, this will take a small value. Thus, the total sum of squares measures the variation of the data about the Grand mean.

An Analysis of Variance (ANOVA) is a partitioning of the total sum of squares. In the second line of the expression below, we are adding and subtracting the sample mean for the i th group. In the third line, we can divide this out into two terms, the first term involves the differences between the observations and the group means, \(\bar{y}_i\), while the second term involves the differences between the group means and the grand mean.

\(\begin{array}{lll} SS_{total} & = & \sum_{i=1}^{g}\sum_{j=1}^{n_i}\left(Y_{ij}-\bar{y}_{..}\right)^2 \\ & = & \sum_{i=1}^{g}\sum_{j=1}^{n_i}\left((Y_{ij}-\bar{y}_{i.})+(\bar{y}_{i.}-\bar{y}_{..})\right)^2 \\ & = &\underset{SS_{error}}{\underbrace{\sum_{i=1}^{g}\sum_{j=1}^{n_i}(Y_{ij}-\bar{y}_{i.})^2}}+\underset{SS_{treat}}{\underbrace{\sum_{i=1}^{g}n_i(\bar{y}_{i.}-\bar{y}_{..})^2}} \end{array}\)

The first term is called the error sum of squares and measures the variation in the data about their group means.

Note that if the observations tend to be close to their group means, then this value will tend to be small. On the other hand, if the observations tend to be far away from their group means, then the value will be larger. The second term is called the treatment sum of squares and involves the differences between the group means and the Grand mean. Here, if group means are close to the Grand mean, then this value will be small. While, if the group means tend to be far away from the Grand mean, this will take a large value. This second term is called the Treatment Sum of Squares and measures the variation of the group means about the Grand mean.

The Analysis of Variance results is summarized in an analysis of variance table below:

Hover over the light bulb to get more information on that item.

The ANOVA table contains columns for Source, Degrees of Freedom, Sum of Squares, Mean Square and F . Sources include Treatment and Error which together add up to the Total.

The degrees of freedom for treatment in the first row of the table are calculated by taking the number of groups or treatments minus 1. The total degree of freedom is the total sample size minus 1. The Error degrees of freedom are obtained by subtracting the treatment degrees of freedom from the total degrees of freedom to obtain N - g .

The formulae for the Sum of Squares are given in the SS column. The Mean Square terms are obtained by taking the Sums of Squares terms and dividing them by the corresponding degrees of freedom.

The final column contains the F statistic which is obtained by taking the MS for treatment and dividing it by the MS for Error.

Under the null hypothesis that the treatment effect is equal across group means, that is \(H_{0} \colon \mu_{1} = \mu_{2} = \dots = \mu_{g} \), this F statistic is F -distributed with g - 1 and N - g degrees of freedom:

\(F \sim F_{g-1, N-g}\)

The numerator degrees of freedom g - 1 comes from the degrees of freedom for treatments in the ANOVA table. This is referred to as the numerator degrees of freedom since the formula for the F -statistic involves the Mean Square for Treatment in the numerator. The denominator degrees of freedom N - g is equal to the degrees of freedom for error in the ANOVA table. This is referred to as the denominator degrees of freedom because the formula for the F -statistic involves the Mean Square Error in the denominator.

We reject \(H_{0}\) at level \(\alpha\) if the F statistic is greater than the critical value of the F -table, with g - 1 and N - g degrees of freedom, and evaluated at level \(\alpha\).

\(F > F_{g-1, N-g, \alpha}\)

- How It Works

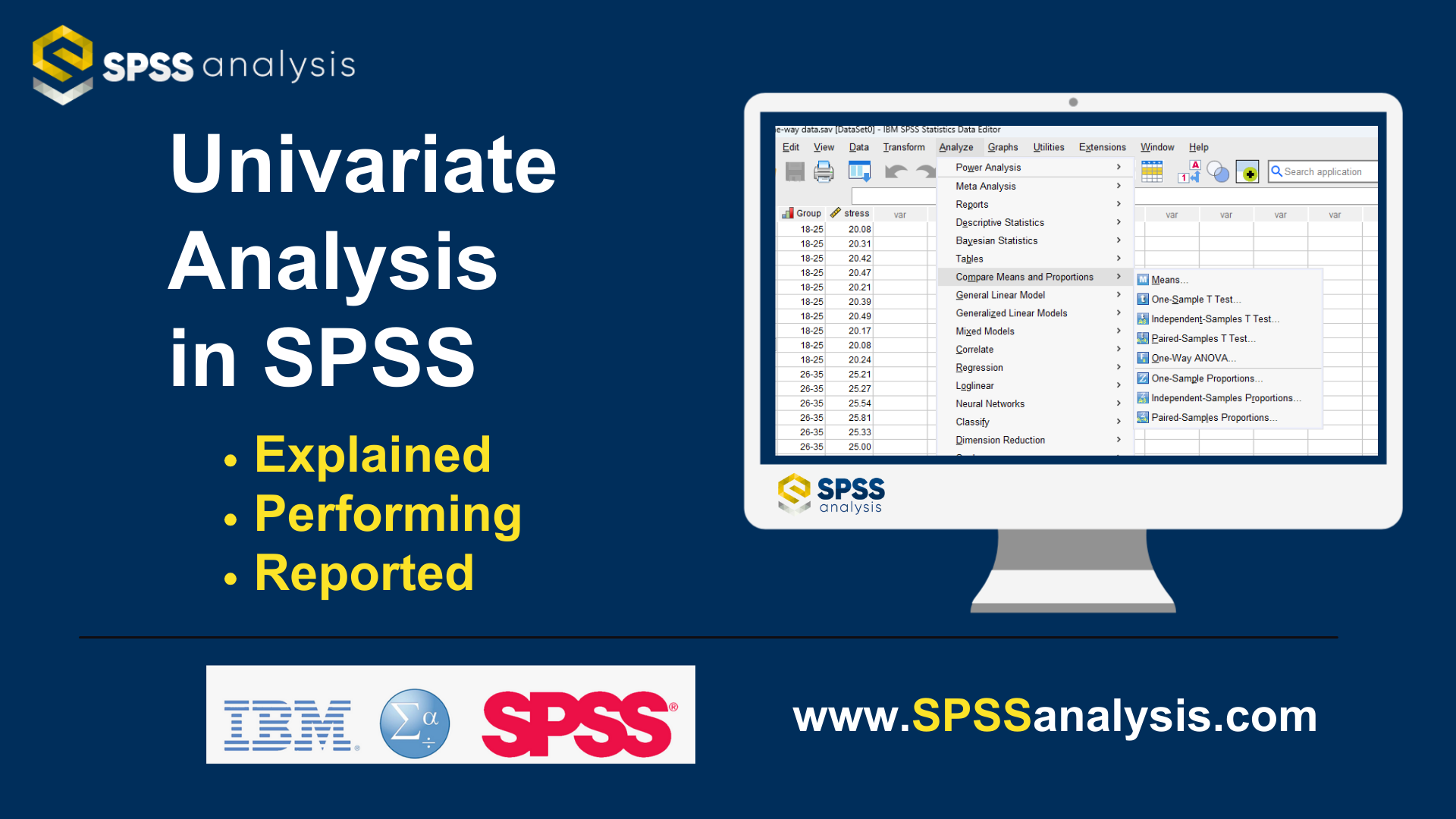

Univariate Analysis of Variance in SPSS

Discover Univariate Analysis of Variance in SPSS ! Learn how to perform, understand SPSS output , and report results in APA style. Check out this simple, easy-to-follow guide below for a quick read!

Struggling with the ANOVA Test in SPSS? We’re here to help . We offer comprehensive assistance to students , covering assignments , dissertations , research, and more. Request Quote Now !

Introduction

Welcome to our exploration of the U nivariate Analysis of Variance Analysis , a statistical method that unlocks valuable insights when comparing means across multiple groups. Whether you’re a student engaged in a research project or a seasoned researcher investigating diverse populations, the One-Way ANOVA Test proves indispensable in discerning if there are significant differences among group means. In this blog post, we’ll traverse the fundamentals of the Univariate Analysis , from its definition to the practical application using SPSS . By the end, you’ll possess not only a solid theoretical understanding but also the practical skills to conduct and interpret this powerful statistical analysis.

What is the Univariate Analysis?

ANOVA stands for Analysis of Variance, and the “ One-Way ” denotes a scenario where there is a single independent variable with more than two levels or groups . Essentially, this test assesses whether the means of these groups are significantly different from each other. It’s a robust method for scenarios like comparing the performance of students in multiple teaching methods or examining the impact of different treatments on a medical condition. The One-Way ANOVA Test yields valuable insights into group variations, providing researchers with a statistical lens to discern patterns and make informed decisions. Now, let’s delve deeper into the assumptions, hypotheses, and the step-by-step process of conducting the One-Way ANOVA Test in SPSS .

Assumption of the One-Way ANOVA Test

Before delving into the intricacies of the One-Way ANOVA Test, let’s outline its critical assumptions:

- Normality : The dependent variable should be approximately normally distributed within each group.

- Homogeneity of Variances : The variances of the groups being compared should be approximately equal. This assumption is crucial for the validity of the test.

- Independence : Observations within each group must be independent of each other.

Adhering to these assumptions ensures the reliability of the One-Way ANOVA Test results, providing a strong foundation for accurate statistical analysis.

Hypothesis of the Univariate Analysis of Variance (ANOVA) Test

Moving on to the formulation of hypotheses in the One-Way ANOVA Test,

- The null hypothesis ( H 0): There is no significant difference in the means of the groups.

- The alternative hypothesis ( H 1): there is a significant difference in the means of the groups.

Clear and specific hypotheses are crucial for the subsequent statistical analysis and interpretation.

Post-Hoc Tests for ANOVA

While the One-Way ANOVA is powerful in detecting overall group differences, it doesn’t provide specific information on which pairs of groups differ significantly. Post-hoc tests become essential in this context to conduct pairwise comparisons and identify the specific groups responsible for the observed overall difference. Without post-hoc tests, researchers might miss crucial nuances in the data, leading to incomplete or inaccurate interpretations.

Here are commonly used Post-hoc Tests for One-Way ANOVA:

- Tukey’s Honestly Significant Difference (HSD): Ideal when there are equal sample sizes and variances across groups. It controls the familywise error rate, making it suitable for multiple comparisons.

- Bonferroni Correction : Helpful when conducting numerous comparisons. It’s more conservative, adjusting the significance level to counteract the increased risk of Type I errors.

- Scheffe Test : Useful for unequal sample sizes and variances. It’s more robust but might be conservative in some situations.

- Dunnett’s Test : Designed for comparing each treatment group with a control group. It’s suitable for situations where there is a control group and multiple treatment groups.

- Games-Howell Test: Useful when sample sizes and variances are unequal across groups. It’s a robust option for situations where assumptions of homogeneity are not met.

Choosing the appropriate post-hoc test depends on the characteristics of your data and the specific research context. Consider factors such as sample sizes, homogeneity of variances, and the number of planned comparisons when deciding on the most suitable post-hoc test for your One-Way ANOVA results.

Example of Univariate Analysis of Variance Analysis

To illustrate the practical application of the One-Way ANOVA Test, let’s consider a hypothetical scenario. Imagine you’re studying the effectiveness of different fertilizers on the growth of plants. You have three groups, each treated with a different fertilizer.

- The null hypothesis: there’s no significant difference in the mean plant growth across the three fertilizers.

- The alternative hypothesis: there is a significant difference in the mean plant growth across the three fertilizers.

By conducting the One-Way ANOVA Test, you can statistically evaluate whether the observed differences in plant growth are likely due to the different fertilizers’ effectiveness or if they could occur by random chance alone. This example demonstrates how the One-Way ANOVA Test can be a valuable tool in diverse fields, providing insights into the impact of various factors on the dependent variable.

How to Perform Univariate Analysis of Variance in SPSS

Step by Step: Running ANOVA Test in SPSS Statistics

Let’s delve into the step-by-step process of conducting the univariate analysis using SPSS. Here’s a step-by-step guide on how to perform Univariate Analysis of Variance in SPSS :

- STEP: Load Data into SPSS

Commence by launching SPSS and loading your dataset, which should encompass the variables of interest – a categorical independent variable. If your data is not already in SPSS format, you can import it by navigating to File > Open > Data and selecting your data file.

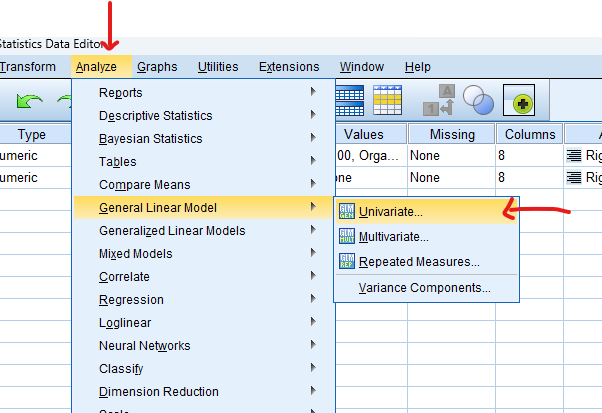

- STEP: Access the Analyze Menu

In the top menu, locate and click on “ Analyze .” Within the “Analyze” menu, navigate to “ General Linear Model ” and choose ” Univariate .” Analyze > General Linear Model> Univariate

- STEP: Specify Variables

In the dialogue box, move the dependent variable to the “ Dependent Variable ” field. Move the variable representing the group or factor to the “ Fixed Factor (s) ” field. This is the independent variable with different levels or groups.

- STEP: Plots Post-Hoc Test

Click on the “ Plot ” button, Move to Facto into the Horizontal Axis, and then click the “ Add ” button.

Go on the “ Post Hoc ” button, Check “ Tukey ” and Adjust as per your analysis requirements.

- STEP: Options

Snap on the “ Options ” button Check “ Descriptive ”, “ Homogeneity Test ” and “ Estimates of effect size ”

- STEP: Generate SPSS Output

Once you have specified your variables and chosen options, click the “ OK ” button to perform the analysis. SPSS will generate a comprehensive output, including the requested frequency table and chart for your dataset.

Conducting a One-Way ANOVA test in SPSS provides a robust foundation for understanding the key features of your data. Always ensure that you consult the documentation corresponding to your SPSS version, as steps might slightly differ based on the software version in use. This guide is tailored for SPSS version 25 , and for any variations, it’s recommended to refer to the software’s documentation for accurate and updated instructions.

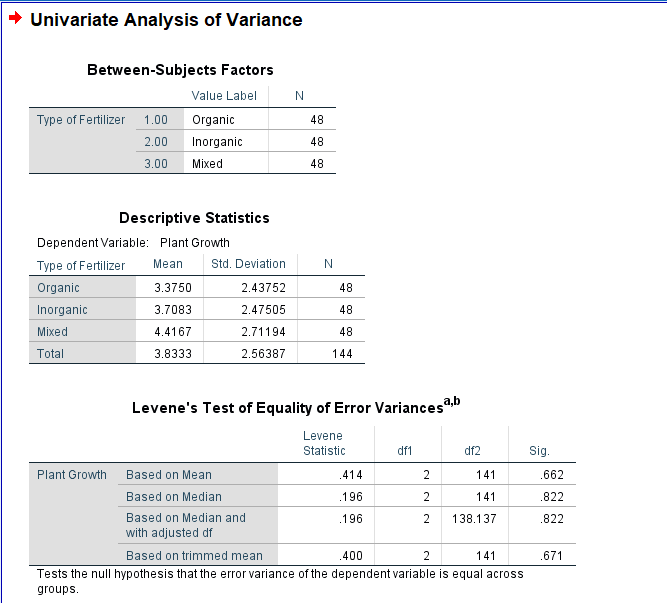

SPSS Output for One Way ANOVA

How to Interpret SPSS Output of Univariate Analysis

SPSS will generate output, including descriptive statistics, the f value, degrees of freedom, and the p-value and post-hoc

Descriptives Table

- Mean and Standard Deviation : Evaluate the means and standard deviations of each group. This provides an initial overview of the central tendency and variability within each group.

- Sample Size (N): Confirm the number of observations in each group. Discrepancies in sample sizes could impact the interpretation.

- 95% Confidence Interval (CI): Review the confidence interval for the mean difference.

Test of Homogeneity of Variances Table

- Levene’s Test: In the Test of Homogeneity of Variances table, look at Levene’s Test statistic and associated p-value. This test assesses whether the variances across groups are roughly equal. A non-significant p-value suggests that the assumption of homogeneity of variances is met.

ANOVA Table

- Between-Groups and Within-Groups Variability: Move on to the ANOVA table, which displays the Between-Groups and Within-Groups sums of squares, degrees of freedom, mean squares, the F-ratio, and the p-value.

- F-Ratio : Focus on the F-ratio. A higher F-ratio indicates larger differences among group means relative to within-group variability.

- Degrees of Freedom : Note the degrees of freedom for Between-Groups and Within-Groups. These values are essential for calculating the critical F-value.

- P-Value: Examine the p-value associated with the F-ratio. If the p-value is below your chosen significance level (commonly 0.05), it suggests that at least one group’s mean is significantly different.

Post Hoc Tests Table

- Specific Group Differences: If you conducted post-hoc tests, examine the results. Look for significant differences between specific pairs of groups. Pay attention to p-values and confidence intervals to identify which groups are significantly different from each other.

Effect Size Measures

- Eta-squared : If available, consider effect size measures in the ANOVA table. Eta-squared indicates the proportion of variance in the dependent variable explained by the group differences.

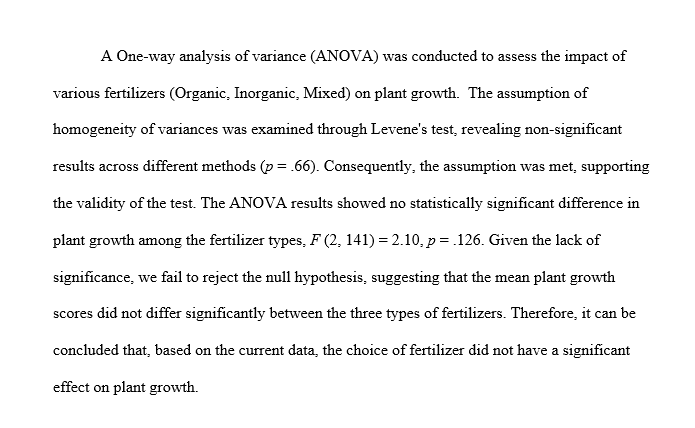

How to Report Results of One-Way ANOVA Test in APA

Reporting the results of a One-Way ANOVA Test in APA style ensures clarity and adherence to established guidelines. Begin with a concise description of the analysis conducted, including the test name, the dependent variable, and the independent variable representing the groups.

For instance, “A One-Way Analysis of Variance (ANOVA) was conducted to examine the differences in plant growth across different fertilizers.”

Present the key statistical findings from the ANOVA table, including the F-ratio, degrees of freedom, and p-value. For example, “The results revealed a significant difference in plant growth among the fertilizers, F(df_between, df_within) = [F-ratio], p = [p-value].”

If the p-value is significant, proceed with post-hoc tests (e.g., Tukey’s HSD) to pinpoint specific group differences. Additionally, report effect size measures to provide a comprehensive overview of the results.

Conclude the report by summarising the implications of the findings in relation to your research question or hypothesis. This structured approach to reporting One-Way ANOVA results in APA format ensures transparency and facilitates the understanding of your research outcomes.

Get Help For Your SPSS Analysis

Embark on a seamless research journey with SPSSAnalysis.com , where our dedicated team provides expert data analysis assistance for students, academicians, and individuals. We ensure your research is elevated with precision. Explore our pages;

- SPSS Data Analysis Help – SPSS Helper ,

- Quantitative Analysis Help ,

- Qualitative Analysis Help ,

- SPSS Dissertation Analysis Help ,

- Dissertation Statistics Help ,

- Statistical Analysis Help ,

- Medical Data Analysis Help .

Connect with us at SPSSAnalysis.com to empower your research endeavors and achieve impactful results. Get a Free Quote Today !

Expert SPSS data analysis assistance available.

Struggling with Statistical Analysis in SPSS? - Hire a SPSS Helper Now!

Want to create or adapt books like this? Learn more about how Pressbooks supports open publishing practices.

Quantitative Data Analysis With SPSS

11 Quantitative Analysis with SPSS: Univariate Analysis

Mikaila Mariel Lemonik Arthur

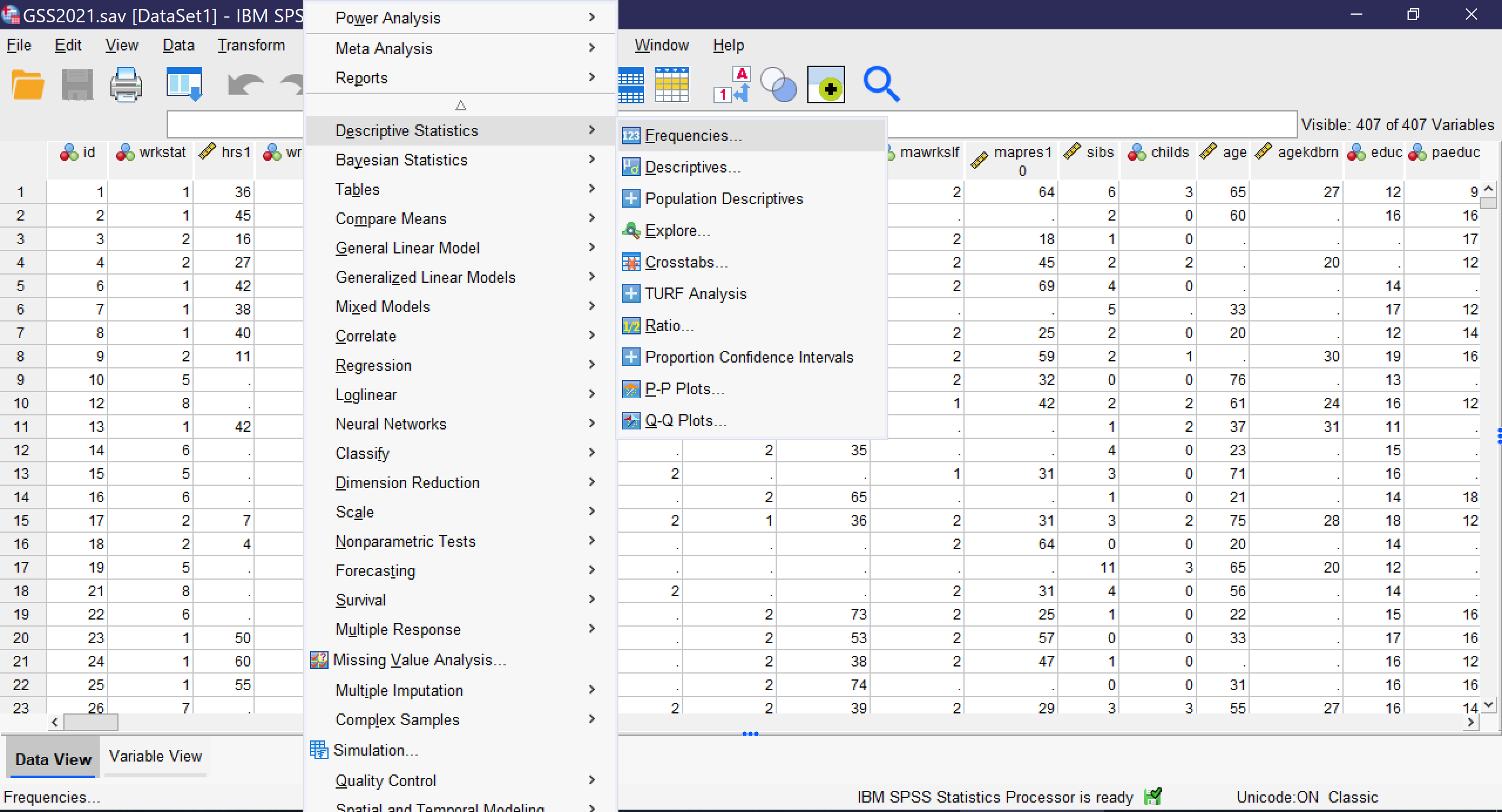

The first step in any quantitative analysis project is univariate analysis, also known as descriptive statistics . Producing these measures is an important part of understanding the data as well as important for preparing for subsequent bivariate and multivariate analysis. This chapter will detail how to produce frequency distributions (also called frequency tables), measures of central tendency , measures of dispersion , and graphs in SPSS. The chapter on Univariate Analysis provides details on understanding and interpreting these measures. To select the correct measures for your variables, first determine the level of measurement of each variable for which you want to produce appropriate descriptive statistics. The distinction between binary and other nominal variables is important here, so you need to determine whether each variable is binary, nominal, ordinal , or continuous . Then, use Table 1 to determine which descriptive statistics you should produce.

Producing Descriptive Statistics

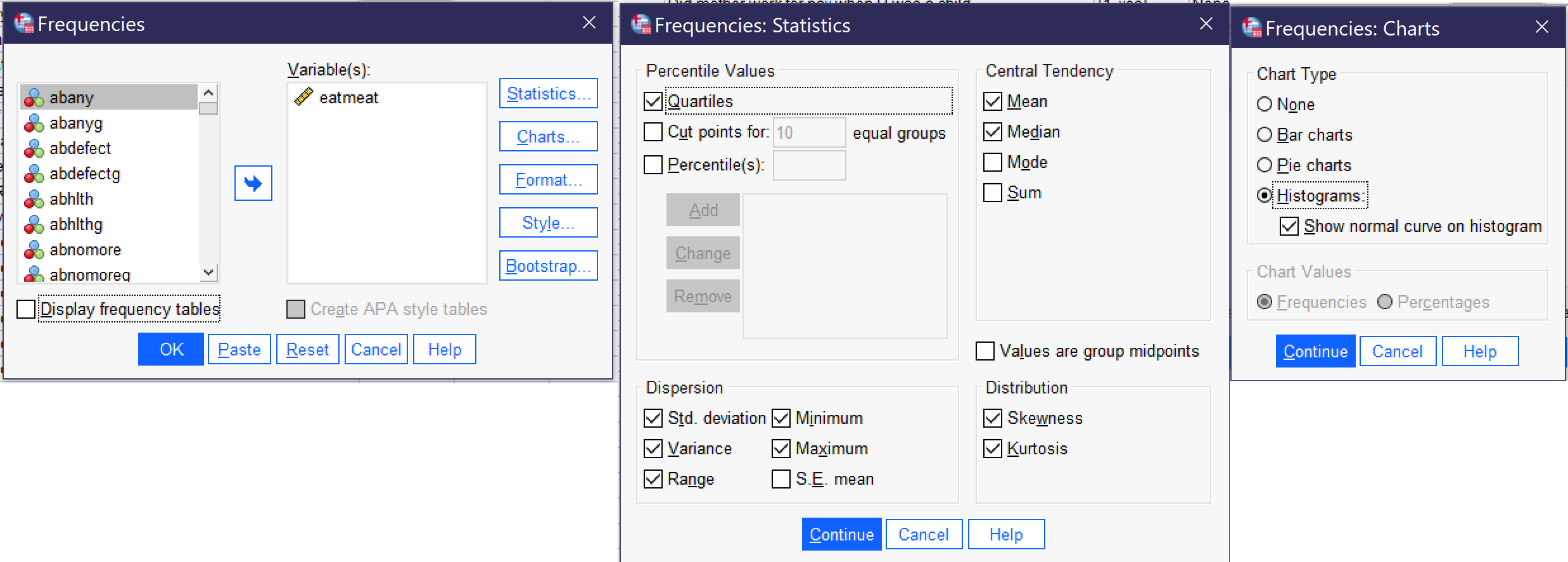

Other than graphs, all of the univariate analyses discussed in this chapter are produced by going to Analyze → Descriptive Statistics → Frequencies, as shown in Figure 1. Note that SPSS also offers a tool called Descriptives; avoid this unless you are specifically seeking to produce Z scores , a topic beyond the scope of this text, as the Descriptives tool provides far fewer options than the Frequencies tool.

Selecting this tool brings up a window called “Frequencies” from which the various descriptive statistics can be selected, as shown in Figure 2. In this window, users select which variables to perform univariate analysis upon. Note that while univariate analyses can be performed upon multiple variables as a group, those variables need to all have the same level of measurement as only one set of options can be selected at a time.

To use the Frequencies tool, scroll through the list of variables on the left side of the screen, or click in the list and begin typing the variable name if you remember it and the list will jump to it. Use the blue arrow to move the variable into the Variables box or grab and drag it over. If you are performing analysis on a binary, nominal, or ordinal variable, be sure the checkbox next to “Display frequency tables” is checked; if you are performing analysis on a continuous variable, leave that box unchecked. The checkbox for “Create APA style tables” slightly alters the format and display of tables. If you are working in the field of psychology specifically, you should select this checkbox, otherwise it is not needed. The options under “Format” specify elements about the display of the tables; in most cases those should be left as the default. The options under “Style” and “Bootstrap” are beyond the scope of this text.

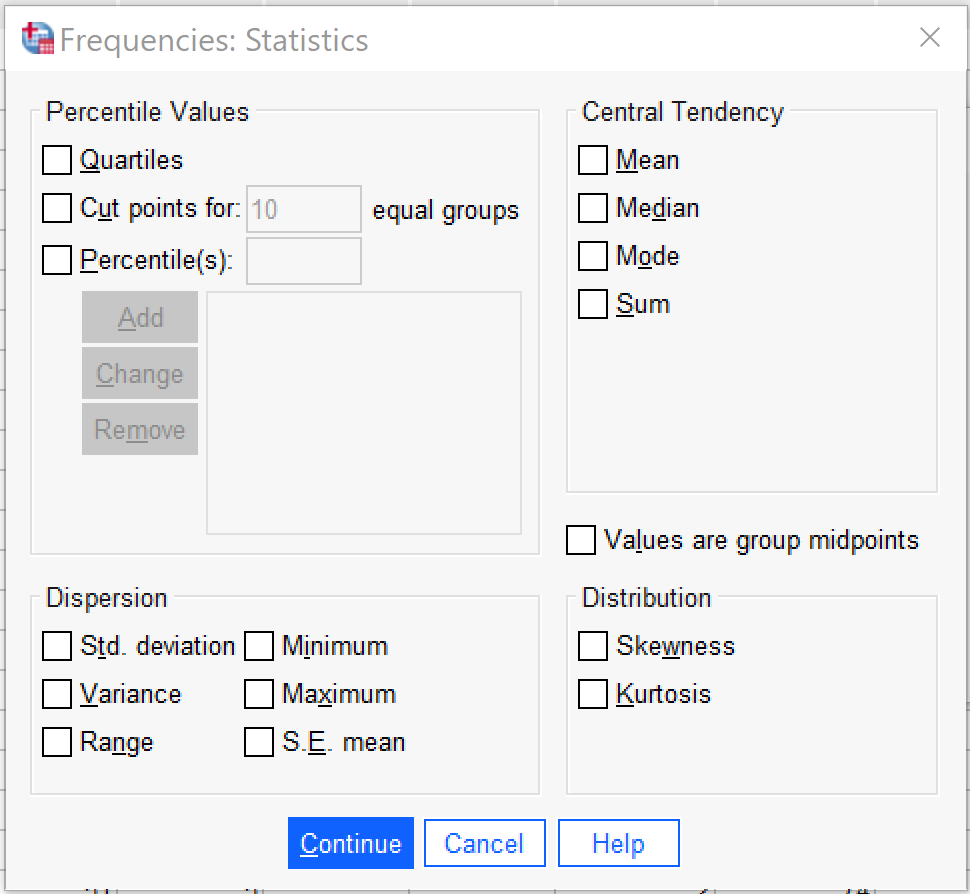

It is under “Statistics” that the specific descriptive statistics to be produced are selected, as shown in Figure 3. First, users can select several different options for producing percentiles, which are usually produced only for continuous variables but occasionally are used for ordinal variables. Quartiles produces the 25th, 50th (median), and 75th percentile in the data. Cut points allows the user to select a specified number of equal groups and see at which values the groups break. Percentiles allows the user to specify specific percentiles to produce—for instance, a user might want to specify 33 and 66 to see where the upper, middle, and lower third of data fall.

Second, users can select measures of central tendency, specifically the mean (used for binary and continuous variables), the median (used for ordinal and continuous variables), and the mode (used for binary, nominal, and ordinal variables). Sum adds up all the values of the variable, and is not typically used. There is also an option to select if values are group midpoints, which is beyond the scope of this text.

Next, users can select measures of dispersion and distribution, including the standard deviation (abbreviated here Std. deviation, and used for continuous variables), the variance (used for continuous variables), the range (used for ordinal and continuous variables), the minimum value (used for ordinal and continuous variables), the maximum value (used for ordinal and continuous variables), and the standard error of the mean (abbreviated here as S.E. mean, this is a measure of sampling error and beyond the scope of this text), as well as skewness and kurtosis (used for continuous variables).

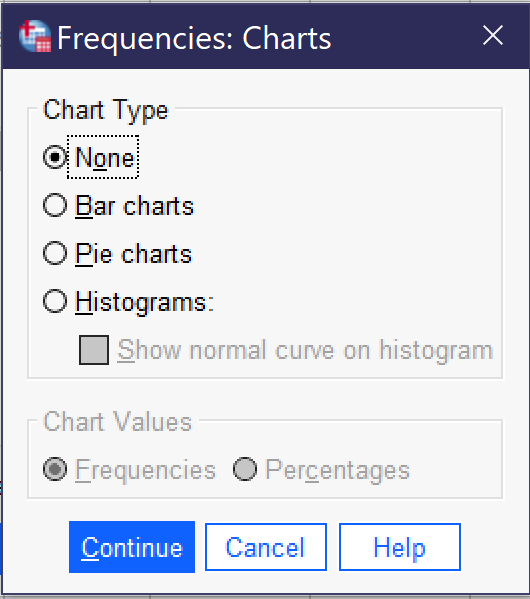

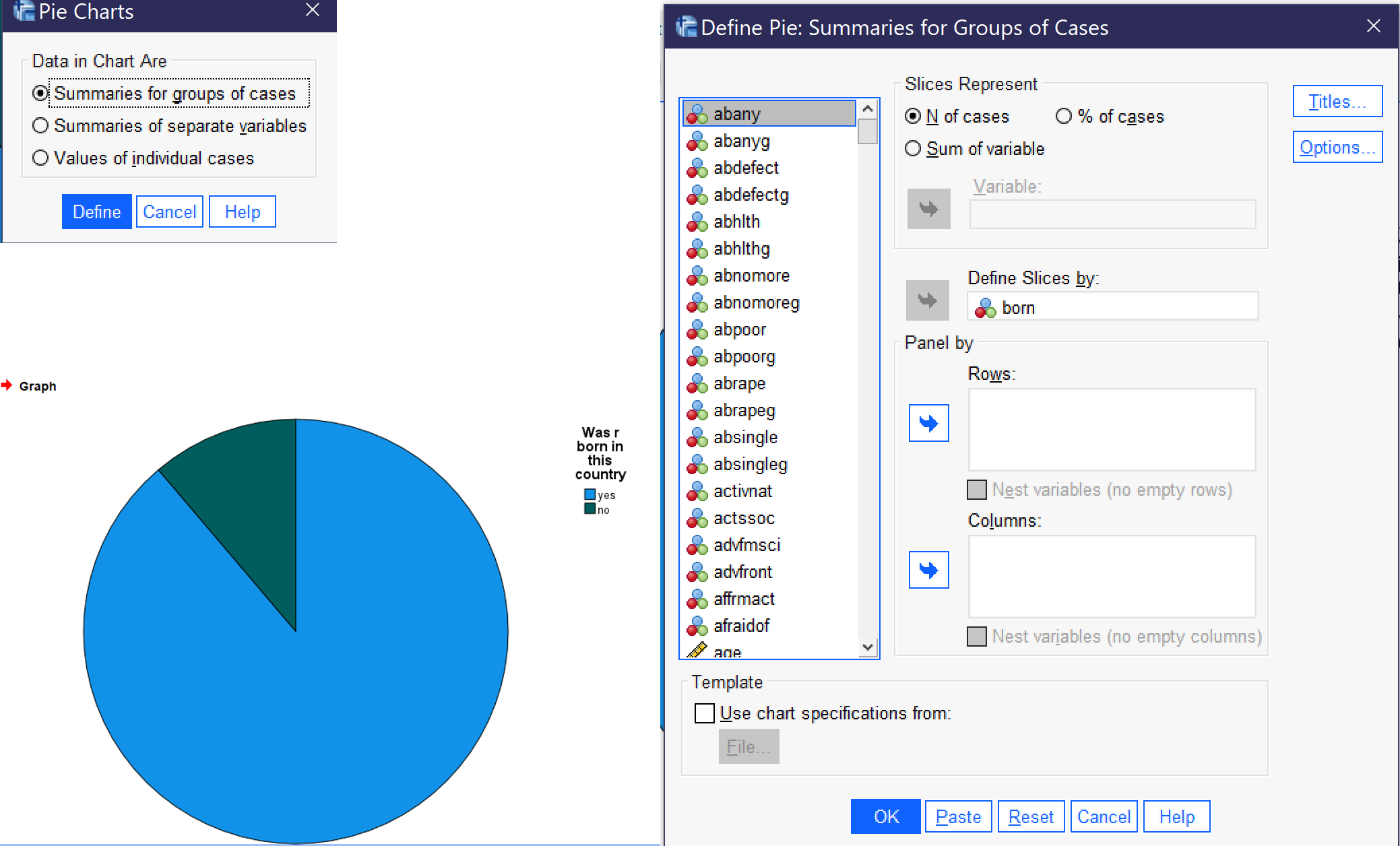

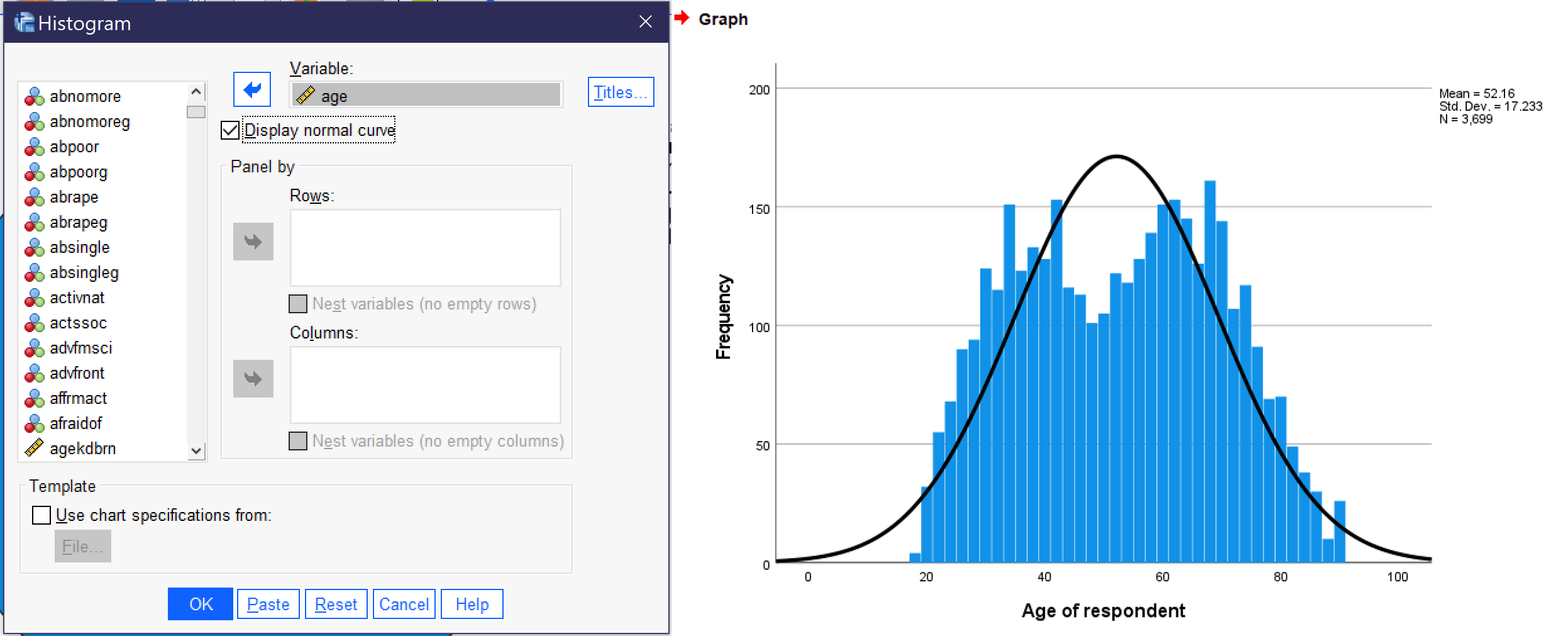

Once all desired tests are selected, click “Continue” to go back to the main frequencies dialog. There, you can also select the Chart button to produce graphs (as shown in Figure 4), though only one graph can be produced at a time (other options for producing graphs will be discussed later in this chapter). Bar charts are appropriate for binary, nominal, and ordinal variables. Pie charts are typically used only for binary variables and nominal variables with just a few categories, though they may at times make sense for ordinal variables with just a few categories. Histograms are used for continuous variables; there is an option to show the normal curve on the histogram, which can help users visualize the distribution more clearly. Users can also choose whether their graphs will be displayed in terms of frequencies (the raw count of values) or percentages.

Examples at Each Level of Measurement

Here, we will produce appropriate descriptive statistics for one variable from the 2021 GSS file at each level of measurement, showing what it looks like to produce them, what the resulting output looks like, and how to interpret that output.

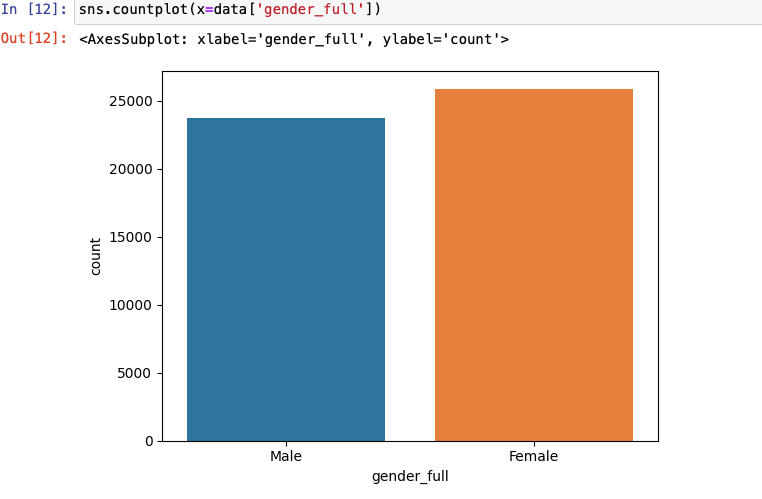

A Binary Variable

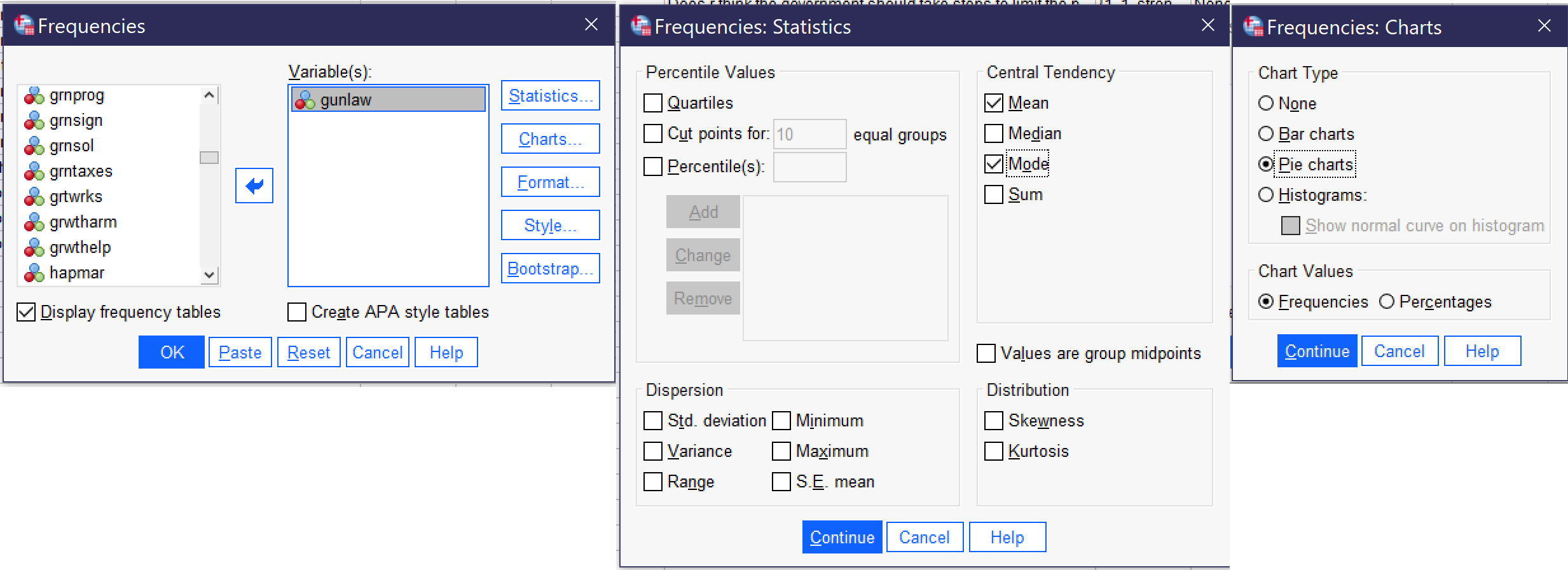

To produce descriptive statistics for a binary variable, be sure to leave Display frequency tables checked. Under statistics, select Mean and Mode and then click continue, and under graphs select your choice of bar graph or pie chart and then click continue. Using the variable GUNLAW, then, the selected option would look as shown in Figure 5. Then click OK, and the results will appear in the Output window.

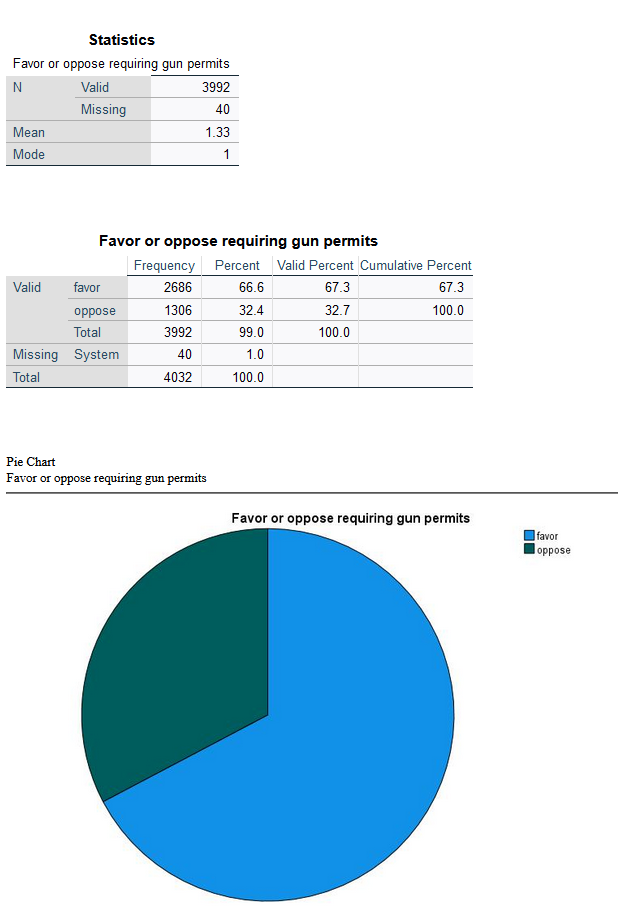

The output for GUNLAW will look approximately like what is shown in Figure 6. GUNLAW is a variable measuring whether the respondent favors or opposes requiring individuals to obtain police permits before buying a gun.

The output shows that 3,992 people gave a valid answer to this question, while responses for 40 people are missing. Of those who provided answers, the mode, or most frequent response, is 1. If we look at the value labels, we will find that 1 here means “favor;” in other words, the largest number of respondents favors requiring permits for gun owners. The mean is 1.33. In the case of a binary variable, what the mean tells us is the approximate proportion of people who have provided the higher-numbered value label—so in this case, about ⅓ of respondents said they are opposed to requiring permits.

The frequency table, then, shows the number and proportion of people who provided each answer. The most important column to pay attention to is Valid Percent. This column tells us what percentage of the people who answered the question gave each answer. So, in this case, we would say that 67.3% of respondents favor requiring permits for gun ownership, while 32.7% are opposed—and 1% are missing.

Finally, we have produced a pie chart, which provides the same information in a visual format. Users who like playing with their graphs can double-click on the graph and then right-click or cmd/ctrl click to change options such as displaying value labels or amounts or changing the color of the graph.

A Nominal Variable

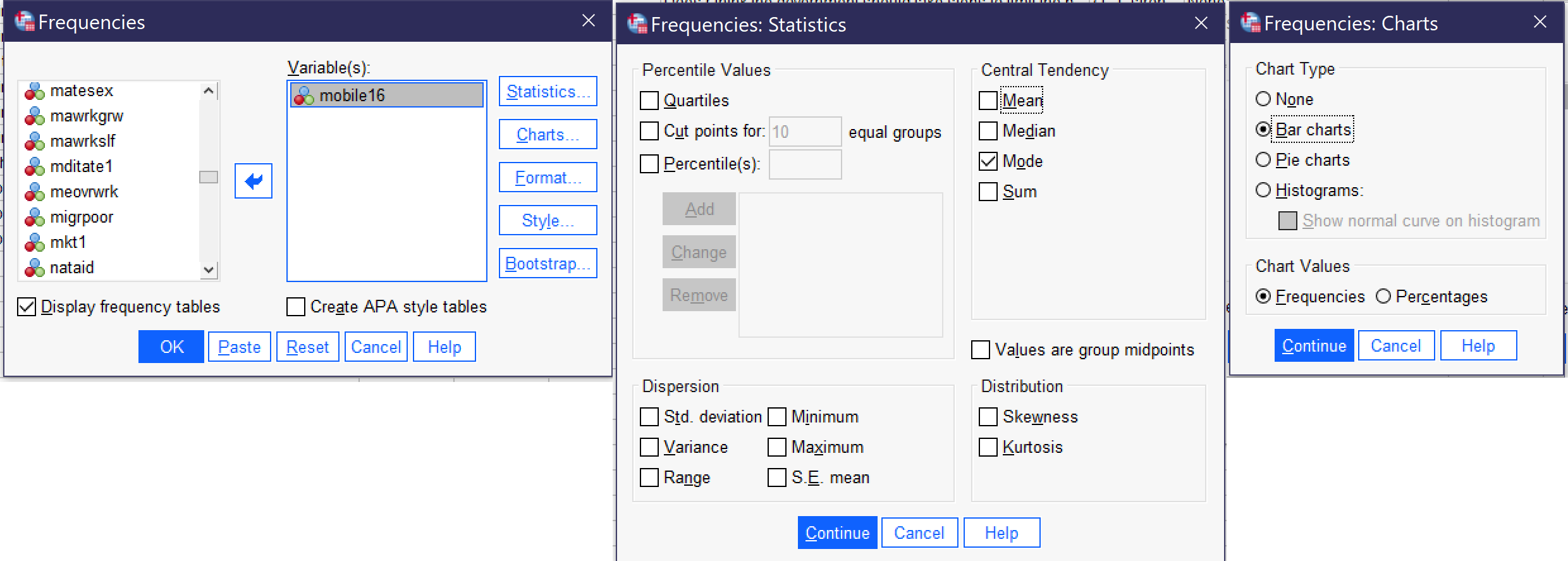

To produce descriptive statistics for a nominal variable, be sure to leave Display frequency tables checked. Under statistics, select Mode and then click continue, and under graphs select your choice of bar graph or pie chart (avoid pie chart if your variable has many categories) and then click continue. Using the variable MOBILE16, then, the selected option would look as shown in Figure 7. Then click OK, and the results will appear in the Output window.

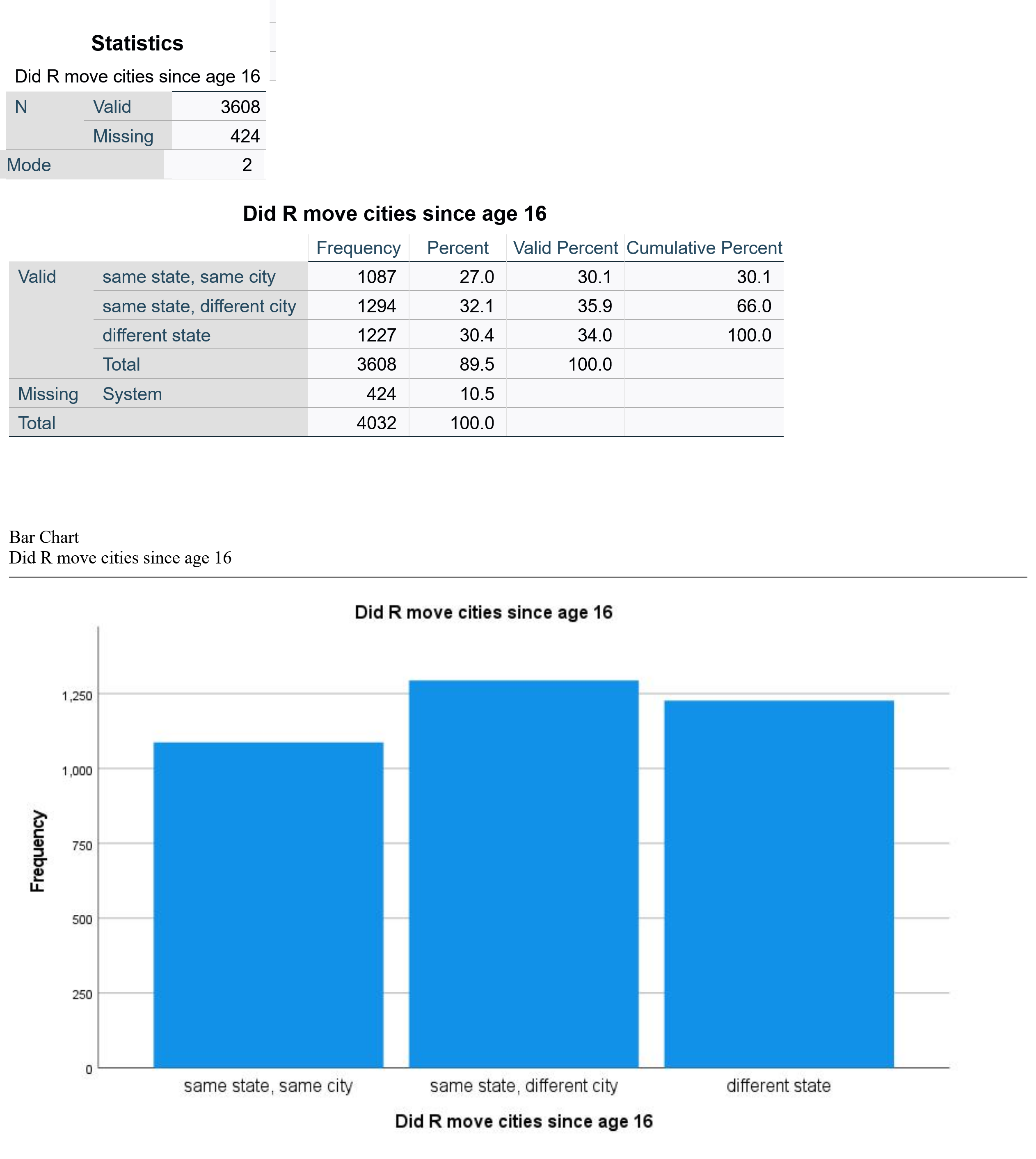

The output will then look approximately like the output shown in Figure 8. MOBILE16 is a variable measuring respondents’ degree of geographical mobility since age 16, asking them if they live in the same city they lived in at age 16; stayed in the same state they lived in at age 16 but now live in a different city; or live in a different state than they lived in at age 16.

The output shows that 3608 respondents answered this survey question, while 424 did not. The mode is 2; looking at the value labels, we conclude that 2 refers to “same state, different city,” or in other words that the largest group of respondents lives in the same state they lived in at age 16 but not in the same city they lived in at age 16. The frequency table shows us the percentage breakdown of respondents into the three categories. Valid percent is most useful here, as it tells us the percentage of respondents in each category after those who have not responded to the question are removed. In this case, 35.9% of people live in the same state but a different city, the largest category of respondents. Thirty-four percent live in a different state, while 30.1% live in the same city in which they lived at age 16. Below the frequency table is a bar graph which provides a visual for the information in the frequency table. As noted above, users can change options such as displaying value labels or amounts or changing the color of the graph.

An Ordinal Variable

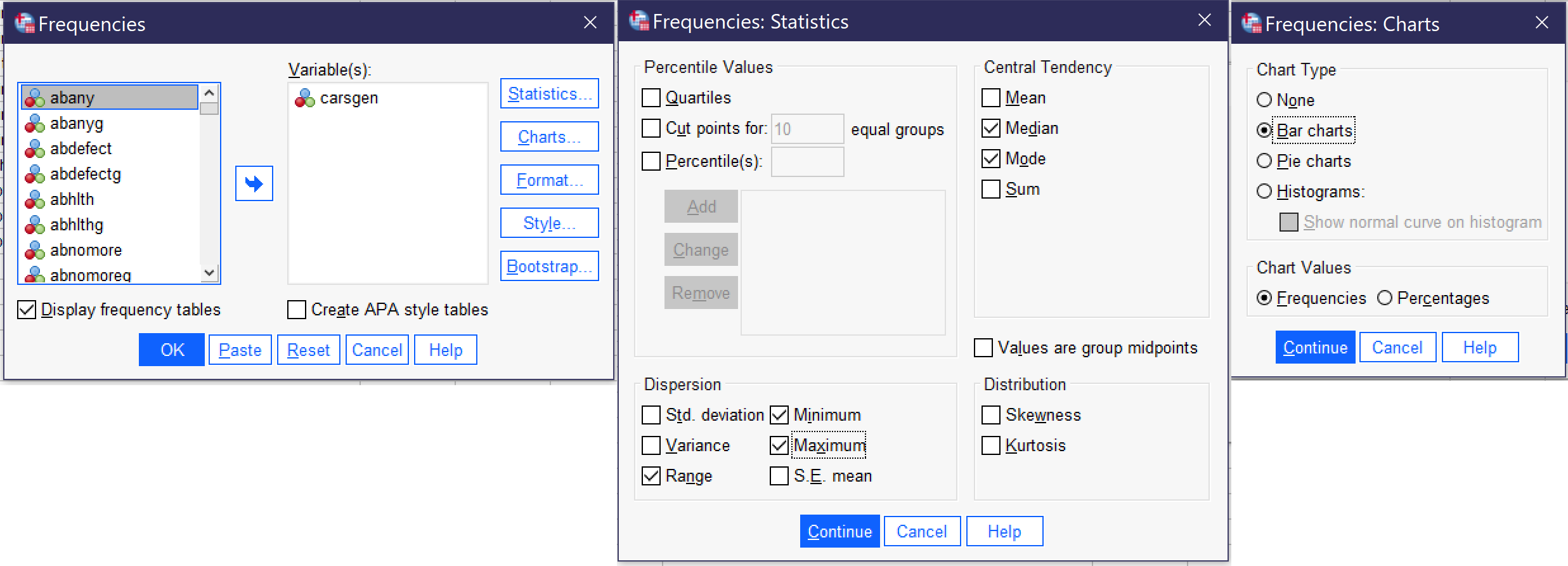

To produce descriptive statistics for an ordinal variable, be sure to leave Display frequency tables checked. Under statistics, select Median, Mode, Range, Minimum, and Maximum, and then click continue, and under graphs select your choice of bar graph and then click continue. Then click OK, and the results will appear in the Output window. Using the variable CARSGEN, then, the selected option would look as shown in Figure 7.

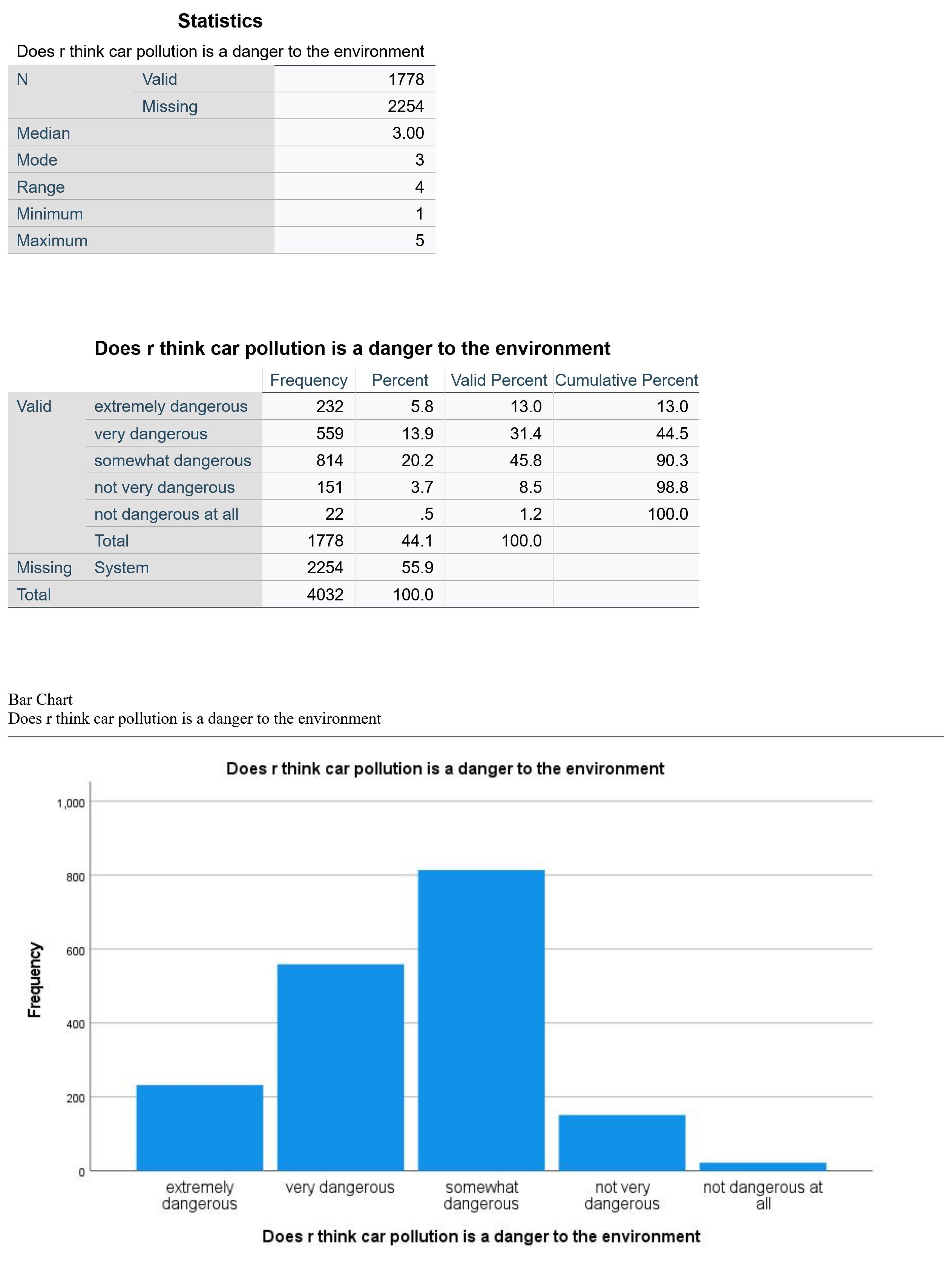

The output will then look approximately like the output shown in Figure 10. CARSGEN is an ordinal variable measuring the degree to which respondents agree or disagree that car pollution is a danger to the environment.

First, we see that 1778 respondents answered this question, while 2254 did not (remember that the GSS has a lot of questions; some are asked of all respondents while others are only asked of a subset, so the fact that a lot of people did not answer may indicate that many were not asked rather than that there is a high degree of nonresponse). The median and mode are both 3. Looking at the value labels tells us that 3 represents “somewhat dangerous.” The range is 4, representing the maximum (5) minus the minimum (1)—in other words, there are five ordinal categories.

Looking at the valid percents, we can see that 13% of respondents consider car pollution extremely dangerous, 31.4% very dangerous, and 45.8%—the biggest category (and both the mode and median)—somewhat dangerous. In contrast only 8.5% think car pollution is not very dangerous and 1.2% think it is not dangerous at all. Thus, it is reasonable to conclude that the vast majority—over 90%—of respondents think that car pollution presents at least some degree of danger. The bar graph at the bottom of the output represents this information visually.

A Continuous Variable

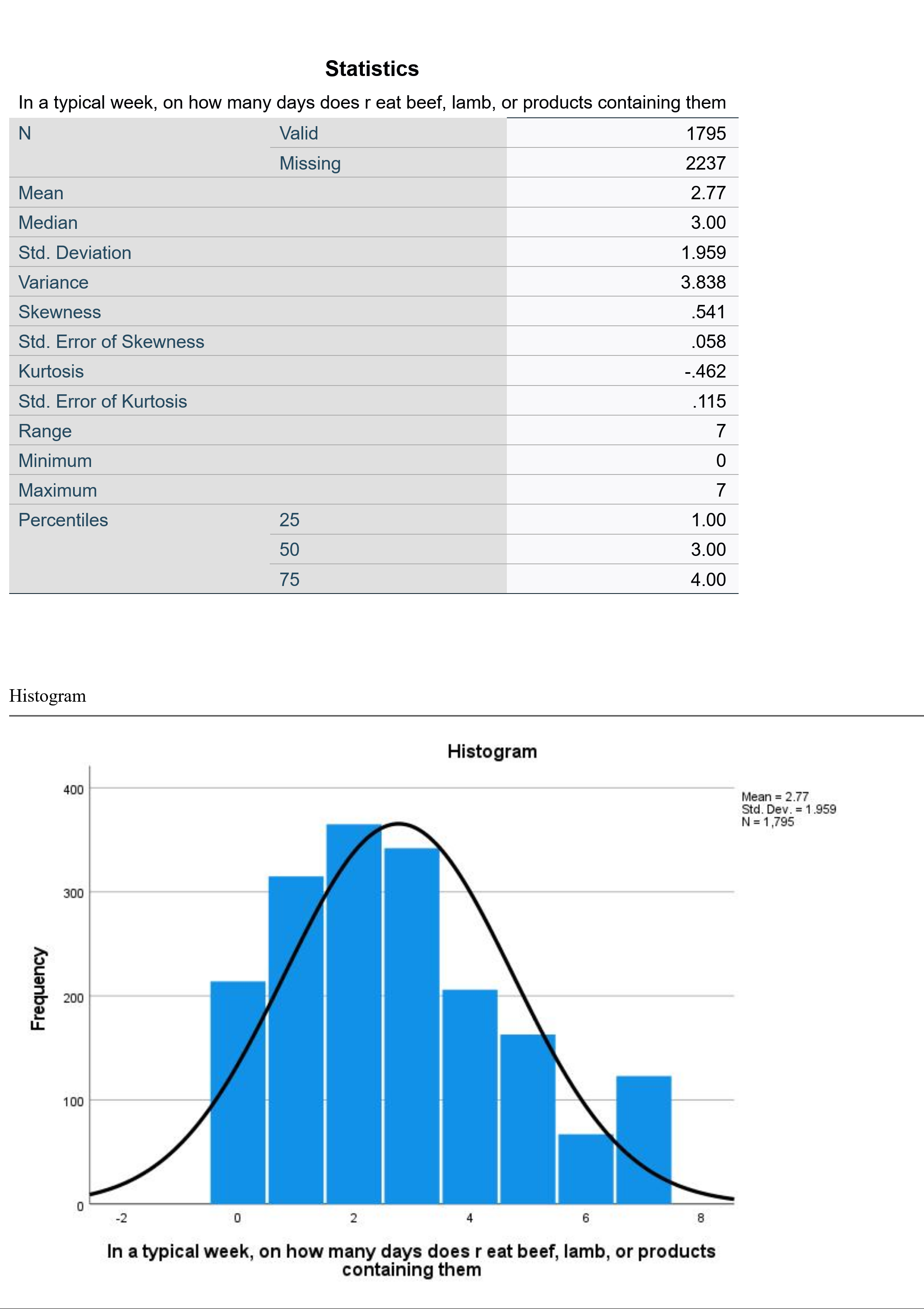

To produce descriptive statistics for a continuous variable, be sure to uncheck Display frequency tables. Under statistics, go to percentile values and select Quartiles (or other percentile options appropriate to your project). Then select Mean, Median, Std. deviation, Variance, Range, Minimum, Maximum, Skewness, and Kurtosis and then click continue, and under graphs select Histograms and turn on Show normal curve on histogram and then click continue. Using the variable EATMEAT, then, the selected option would look as shown in Figure 11. Then click OK, and the results will appear in the Output window.

The output will then look approximately like the output shown in Figure 12. EATMEAT is a continuous variable measuring the number of days per week that the respondent eats beef, lamb, or products containing beef or lamb.

Because this variable is continuous, we have not produced frequency tables, and therefore we jump right into the statistics. 1795 respondents answered this question. On average, they eat beef or lamb 2.77 days per week (that is what the mean tells us). The median respondent eats beef or lamb three days per week. The standard deviation of 1.959 tells us that about 68% of respondents will be found within ±1.959 of the mean of 2.77, or between 0.811 days and 4.729 days. The skewness of 0.541 tells us that the data is mildly skewed to the right, with a longer tail at the higher end of the distribution. The kurtosis of -0.462 tells us that the data is mildly platykurtic, or has little data in the outlying tails. (Note that we have ignored several statistics in the table, which are used to compute or further interpret the figures we are discussing and which are otherwise beyond the scope of this text). The range is 7, with a minimum of 0 and a maximum of 7—sensible, given that this variable is measuring the number of days of the week that something happens. The 25th percentile is at 1, the 50th at 3 (this is the same as the median) and the 75th at 4. This tells us that one quarter of respondents eat beef or lamb one day a week or fewer; a quarter eat it between one and three days a week; a quarter eat it between three and four days a week; and a quarter eat it more than four days per week. The histogram shows the shape of the distribution; note that while the distribution is otherwise fairly normally distributed, more respondents eat beef or lamb seven days a week than eat it six days a week.

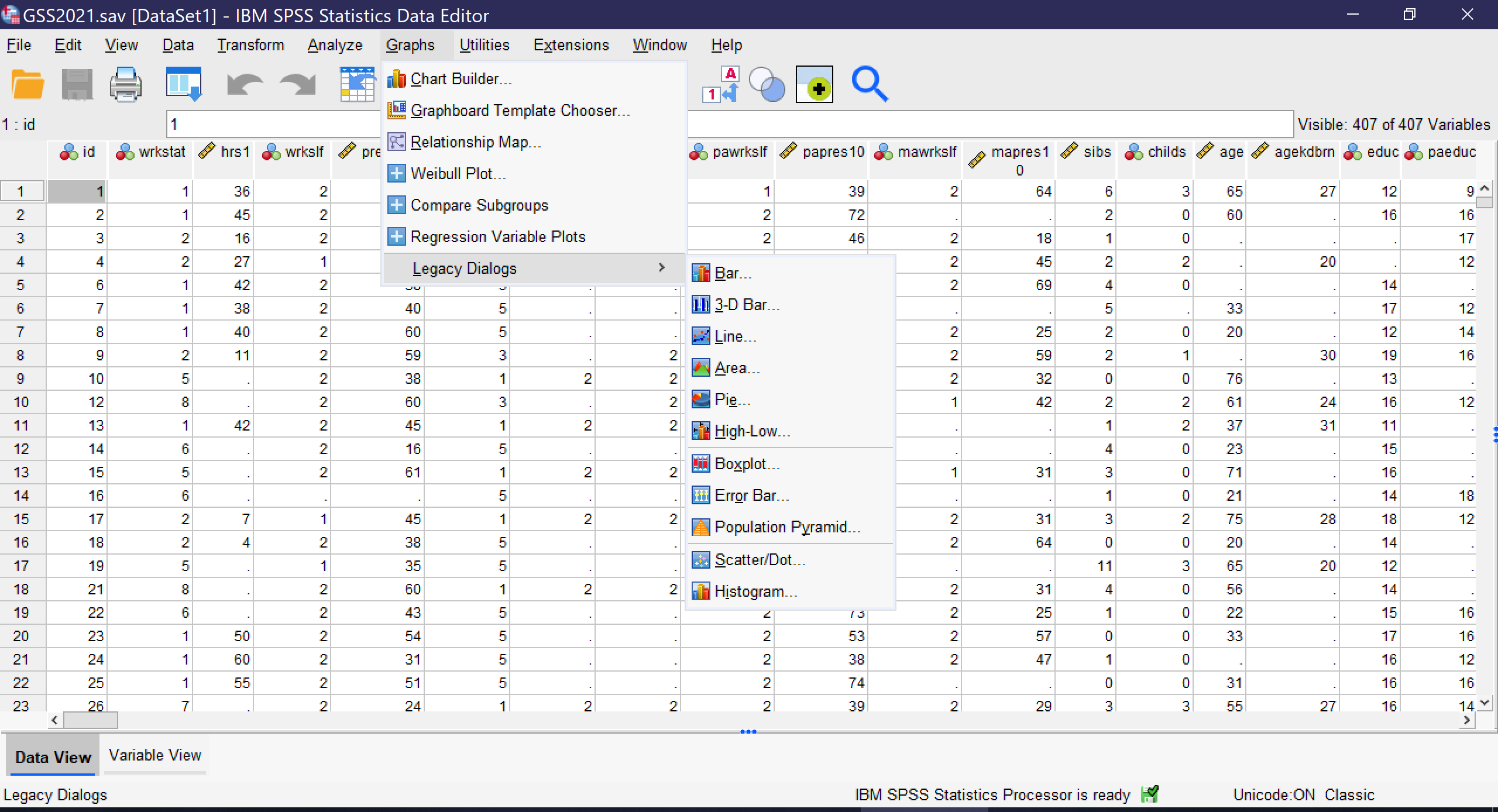

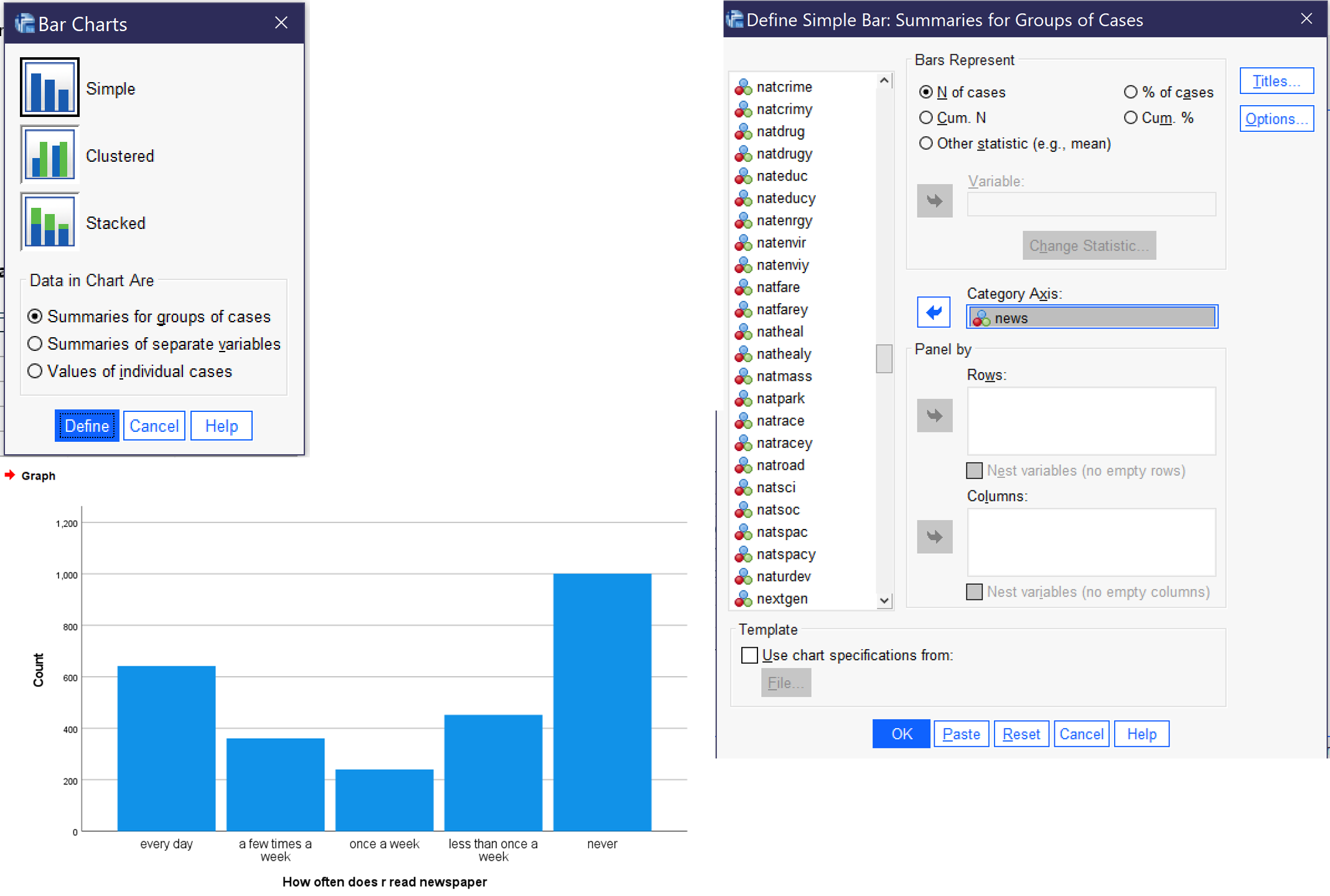

There are several other ways to produce graphs in SPSS. The simplest is to go to Graphs → Legacy Dialogs, where a variety of specific graph types can be selected and produced, including both univariate and bivariate charts. The Legacy Dialogs menu, as shown in Figure 13, permits users to choose bar graphs, 3-D bar graphs, line graphs, area charts, pie charts, high-low plots, boxplots, error bars, population pyramids, scatterplots/dot graphs, and histograms. Users are then presented with a series of options for what data to include in their chart and how to format the chart.

Here, we will review how to produce univariate bar graphs, pie charts, and histograms using the legacy dialogs. Other graphs important to the topics discussed in this text will be reviewed in other chapters.