Critically Analyzing Information Sources: Critical Appraisal and Analysis

Initial Appraisal: Reviewing the source

A. Author

- What are the author's credentials--institutional affiliation (where he or she works), educational background, past writings, or experience? Is the book or article written on a topic in the author's area of expertise? You can use the various Who's Who publications for the U.S. and other countries and for specific subjects and the biographical information located in the publication itself to help determine the author's affiliation and credentials.

- Has your instructor mentioned this author? Have you seen the author's name cited in other sources or bibliographies? Respected authors are cited frequently by other scholars. For this reason, always note those names that appear in many different sources.

- Is the author associated with a reputable institution or organization? What are the basic values or goals of the organization or institution?

B. Date of Publication

- When was the source published? This date is often located on the face of the title page below the name of the publisher. If it is not there, look for the copyright date on the reverse of the title page. On Web pages, the date of the last revision is usually at the bottom of the home page, and sometimes on every page.

- Is the source current or out-of-date for your topic? Topic areas of continuing and rapid development, such as the sciences, demand more current information. On the other hand, topics in the humanities often require material that was written many years ago. At the other extreme, some news sources on the Web now note the hour and minute that articles are posted on their site.

C. Edition or Revision

Is this a first edition of this publication or not? Further editions indicate a source has been revised and updated to reflect changes in knowledge, include omissions, and harmonize with its intended reader's needs. Also, many printings or editions may indicate that the work has become a standard source in the area and is reliable. If you are using a Web source, do the pages indicate revision dates?

D. Publisher

Note the publisher. If the source is published by a university press, it is likely to be scholarly. Although the fact that the publisher is reputable does not necessarily guarantee quality, it does show that the publisher may have high regard for the source being published.

E. Title of Journal

Is this a scholarly or a popular journal? This distinction is important because it indicates different levels of complexity in conveying ideas. If you need help in determining the type of journal, see Distinguishing Scholarly from Non-Scholarly Periodicals. Or you may wish to check your journal title in the latest edition of Katz's Magazines for Libraries (Olin Reference Z 6941 .K21, shelved at the reference desk) for a brief evaluative description.

Critical Analysis of the Content

Having made an initial appraisal, you should now examine the body of the source. Read the preface to determine the author's intentions for the book. Scan the table of contents and the index to get a broad overview of the material it covers. Note whether bibliographies are included. Read the chapters that specifically address your topic. Reading the article abstract and scanning the table of contents of a journal or magazine issue is also useful. As with books, the presence and quality of a bibliography at the end of the article may reflect the care with which the authors have prepared their work.

A. Intended Audience

What type of audience is the author addressing? Is the publication aimed at a specialized or a general audience? Is this source too elementary, too technical, too advanced, or just right for your needs?

B. Objective Reasoning

- Is the information covered fact, opinion, or propaganda? It is not always easy to separate fact from opinion. Facts can usually be verified; opinions, though they may be based on factual information, evolve from the interpretation of facts. Skilled writers can make you think their interpretations are facts.

- Does the information appear to be valid and well-researched, or is it questionable and unsupported by evidence? Assumptions should be reasonable. Note errors or omissions.

- Are the ideas and arguments advanced more or less in line with other works you have read on the same topic? The more radically an author departs from the views of others in the same field, the more carefully and critically you should scrutinize his or her ideas.

- Is the author's point of view objective and impartial? Is the language free of emotion-arousing words and bias?

C. Coverage

- Does the work update other sources, substantiate other materials you have read, or add new information? Does it extensively or marginally cover your topic? You should explore enough sources to obtain a variety of viewpoints.

- Is the material primary or secondary in nature? Primary sources are the raw material of the research process. Secondary sources are based on primary sources. For example, if you were researching Konrad Adenauer's role in rebuilding West Germany after World War II, Adenauer's own writings would be one of many primary sources available on this topic. Others might include relevant government documents and contemporary German newspaper articles. Scholars use this primary material to help generate historical interpretations--a secondary source. Books, encyclopedia articles, and scholarly journal articles about Adenauer's role are considered secondary sources. In the sciences, journal articles and conference proceedings written by experimenters reporting the results of their research are primary documents. Choose both primary and secondary sources when you have the opportunity.

D. Writing Style

Is the publication organized logically? Are the main points clearly presented? Do you find the text easy to read, or is it stilted or choppy? Is the author's argument repetitive?

E. Evaluative Reviews

- Locate critical reviews of books in a reviewing source, such as Articles & Full Text, Book Review Index, Book Review Digest, and ProQuest Research Library. Is the review positive? Is the book under review considered a valuable contribution to the field? Does the reviewer mention other books that might be better? If so, locate these sources for more information on your topic.

- Do the various reviewers agree on the value or attributes of the book or has it aroused controversy among the critics?

- For Web sites, consider consulting this evaluation source from UC Berkeley.

Permissions Information

If you wish to use or adapt any or all of the content of this Guide go to Cornell Library's Research Guides Use Conditions to review our use permissions and our Creative Commons license.

- Last Updated: Jun 21, 2024 3:08 PM

- URL: https://guides.library.cornell.edu/critically_analyzing

Critical Analysis and Evaluation

Many assignments ask you to critique and evaluate a source. Sources might include journal articles, books, websites, government documents, portfolios, podcasts, or presentations.

When you critique, you offer both negative and positive analysis of the content, writing, and structure of a source.

When you evaluate, you assess how successful a source is at presenting information, measured against a standard or certain criteria.

Elements of a critical analysis:

opinion + evidence from the article + justification

Your opinion is your thoughtful reaction to the piece.

Evidence from the article offers some proof to back up your opinion.

The justification is an explanation of how you arrived at your opinion or why you think it’s true.

How do you critique and evaluate?

When critiquing and evaluating someone else’s writing/research, your purpose is to reach an informed opinion about a source. In order to do that, try these three steps:

- How do you feel?

- What surprised you?

- What left you confused?

- What pleased or annoyed you?

- What was interesting?

- What is the purpose of this text?

- Who is the intended audience?

- What kind of bias is there?

- What was missing?

- See our resource on analysis and synthesis (Move From Research to Writing: How to Think) for other examples of questions to ask.

- sophisticated

- interesting

- undocumented

- disorganized

- superficial

- unconventional

- inappropriate interpretation of evidence

- unsound or discredited methodology

- traditional

- unsubstantiated

- unsupported

- well-researched

- easy to understand

- Opinion: This article’s assessment of the power balance in cities is confusing.

- Evidence: It first says that the power to shape policy is evenly distributed among citizens, local government, and business (Rajal, 232).

- Justification: but then it goes on to focus almost exclusively on business. Next, in a much shorter section, it combines the idea of citizens and local government into a single point of evidence. This leaves the reader with the impression that the citizens have no voice at all. It is not helpful in trying to determine the role of the common voter in shaping public policy.

Sample criteria for critical analysis

Sometimes the assignment will specify what criteria to use when critiquing and evaluating a source. If not, consider the following prompts to approach your analysis. Choose the questions that are most suitable for your source.

- What do you think about the quality of the research? Is it significant?

- Did the author answer the question they set out to? Did the author prove their thesis?

- Did you find contradictions to other things you know?

- What new insight or connections did the author make?

- How does this piece fit within the context of your course, or the larger body of research in the field?

- The structure of an article or book is often dictated by standards of the discipline or a theoretical model. Did the piece meet those standards?

- Did the piece meet the needs of the intended audience?

- Was the material presented in an organized and logical fashion?

- Is the argument cohesive and convincing? Is the reasoning sound? Is there enough evidence?

- Is it easy to read? Is it clear and easy to understand, even if the concepts are sophisticated?

How to Write a Literature Review

Critically analyze and evaluate

Tip: read and annotate PDFs.

Ask yourself questions like these about each book or article you include:

- What is the research question?

- What is the primary methodology used?

- How was the data gathered?

- How is the data presented?

- What are the main conclusions?

- Are these conclusions reasonable?

- What theories are used to support the researcher's conclusions?

Take notes on the articles as you read them and identify any themes or concepts that may apply to your research question.
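If you keep your notes electronically, the questions above can double as the fields of a simple record. The following is only a minimal sketch of one way to do that in Python, not a tool provided by this guide: the class name `ArticleNotes`, the `themes` tagging field, and the helper `group_by_theme` are all hypothetical names chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ArticleNotes:
    """One record per source; the fields mirror the questions listed above."""
    citation: str
    research_question: str = ""
    methodology: str = ""
    data_gathering: str = ""
    data_presentation: str = ""
    main_conclusions: str = ""
    conclusions_reasonable: str = ""
    supporting_theories: str = ""
    # Hypothetical extra field: free-form tags for themes you notice while reading.
    themes: List[str] = field(default_factory=list)


def group_by_theme(notes: List[ArticleNotes]) -> Dict[str, List[str]]:
    """Map each theme tag to the citations of the sources that touch on it."""
    grouped = defaultdict(list)
    for note in notes:
        for theme in note.themes:
            grouped[theme].append(note.citation)
    return dict(grouped)
```

Grouping sources by theme in this way may make it easier to see which concepts recur across your reading before you move on to synthesis.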

This sample template (below) may also be useful for critically reading and organizing your articles. Or you can use this online form and email yourself a copy.

- Sample Template for Critical Analysis of the Literature

Opening an article in PDF format in Acrobat Reader will allow you to use "sticky notes" and "highlighting" to make notes on the article without printing it out. Make sure to save the edited file so you don't lose your notes!

Some citation managers, such as Mendeley, also have highlighting and annotation features. Here's a screen capture of a PDF in Mendeley with highlighting, notes, and various colors:

Screen capture from a UO Librarian's Mendeley Desktop app

- Learn more about citation management software in the previous step: 4. Manage Your References

- Last Updated: Aug 12, 2024 11:48 AM

- URL: https://researchguides.uoregon.edu/litreview

Writing Academically

How to write a successful critical analysis

For further queries or assistance in writing a critical analysis, email Bill Wrigley.

What do you critically analyse?

In a critical analysis you do not express your own opinion or views on the topic. You need to develop your thesis, position or stance on the topic from the views and research of others. In academic writing you critically analyse other researchers’:

- concepts, terms

- viewpoints, arguments, positions

- methodologies, approaches

- research results and conclusions

This means weighing up the strength of the arguments or research support on the topic, and deciding who or what has the more or stronger weight of evidence or support.

Therefore, your thesis argues, with evidence, why a particular theory, concept, viewpoint, methodology, or research result(s) is/are stronger, more sound, or more advantageous than others.

What does ‘analysis’ mean?

A critical analysis means analysing or breaking down the parts of the literature and grouping these into themes, patterns or trends.

In an analysis you need to:

1. Identify and separate out the parts of the topic by grouping the various key theories, main concepts, the main arguments or ideas, and the key research results and conclusions on the topic into themes, patterns or trends of agreement, dispute and omission.

2. Discuss each of these parts by explaining:

i. the areas of agreement/consensus, or similarity

ii. the issues or controversies: in dispute or debate, areas of difference

iii. the omissions, gaps, or areas that are under-researched

3. Discuss the relationship between these parts

4. Examine how each contributes to the whole topic

5. Make conclusions about their significance or importance in the topic

What does ‘critical’ mean?

A critical analysis does not mean writing angry, rude or disrespectful comments, or expressing your views in judgmental terms of black and white, good and bad, or right and wrong.

To be critical, or to critique, means to evaluate. Therefore, to write critically in an academic analysis means to:

- judge the quality, significance or worth of the theories, concepts, viewpoints, methodologies, and research results

- evaluate in a fair and balanced manner

- avoid extreme or emotional language

- identify strengths: advantages, benefits, gains, or improvements

- identify weaknesses: disadvantages, shortcomings, limitations, or drawbacks

How to critically analyse a theory, model or framework

The evaluative words most often applied to a theory, model or framework are "sound" and "strong", as in a sound theory or a strong theory.

The table below summarizes the criteria for judging the strengths and weaknesses of a theory:

- comprehensive

- empirically supported

- parsimonious

Evaluating a Theory, Model or Framework

The table below lists the criteria for the strengths and their corresponding weaknesses that are usually considered in a theory.

| Strengths | Weaknesses |
| --- | --- |
| Comprehensively accounts for main phenomena | overlooks or omits important features or concepts |
| Clear, detailed | vague, unexplained, ill-defined, misconceived |
| Main tenets or concepts are logical and consistent | concepts or tenets are inconsistent or contradictory |
| Practical, useful | impractical, unuseful |
| Applicable across a range of settings, contexts, groups and conditions | limited or narrow applicability |
| Empirically supported by a large body of evidence; propositions and predictions are supported by evidence | supported by small or no body of evidence; insufficient empirical support for the propositions and predictions |
| Up-to-date, accounts for new developments | outdated |
| Parsimonious (not excessive): simple, clear, with few variables | excessive, overly complex or complicated |

Critical analysis examples of theories

The following sentences are examples of the phrases used to explain strengths and weaknesses.

Smith’s (2005) theory appears up to date, practical and applicable across many divergent settings.

Brown’s (2010) theory, although parsimonious and logical, lacks a sufficient body of evidence to support its propositions and predictions.

Little scientific evidence has been presented to support the premises of this theory.

One of the limitations with this theory is that it does not explain why…

A significant strength of this model is that it takes into account …

The propositions of this model appear unambiguous and logical.

A key problem with this framework is the conceptual inconsistency between …

How to critically analyse a concept

The table below summarizes the criteria for judging the strengths and weaknesses of a concept:

- key variables identified

- clear and well-defined

Evaluating Concepts

| Strengths | Weaknesses |
| --- | --- |
| Key variables or constructs identified | key variables or constructs omitted or missed |
| Clear, well-defined, specific, precise | ambiguous, vague, ill-defined, overly general, imprecise, not sufficiently distinctive, overinclusive, too broad, or narrowly defined |
| Meaningful, useful | conceptually flawed |
| Logical | contradictory |
| Relevant | questionable relevance |
| Up-to-date | out of date |

Critical analysis examples of concepts

Many researchers have used the concept of control in different ways.

There is little consensus about what constitutes automaticity.

Putting forth a very general definition of motivation means that it is possible that any behaviour could be included.

The concept of global education lacks clarity, is imprecisely defined and is overly complex.

Some have questioned the usefulness of resilience as a concept because it has been used so often and in so many contexts.

Research suggests that the concept of preoperative fasting is an outdated clinical approach.

How to critically analyse arguments, viewpoints or ideas

The table below summarizes the criteria for judging the strengths and weaknesses of an argument, viewpoint or idea:

- reasons support the argument

- argument is substantiated by evidence

- evidence for the argument is relevant

- evidence for the argument is unbiased, sufficient and important

- evidence is reputable

Evaluating Arguments, Views or Ideas

| Strengths | Weaknesses |
| --- | --- |
| Reasons and evidence provided support the argument | the reasons or evidence do not support the argument - overgeneralization |
| Substantiated (supported) by factual evidence | insufficient substantiation (support) |
| Evidence is relevant and believable | based on peripheral or irrelevant evidence |
| Unbiased: sufficient or important evidence or ideas included and considered. | biased: overlooks, omits, disregards, or is selective with important or relevant evidence or ideas. |
| Evidence from reputable or authoritative sources | evidence relies on non-reputable or unrecognized sources |
| Balanced: considers opposing views | unbalanced: does not consider opposing views |
| Clear, not confused, unambiguous | confused, ambiguous |
| Logical, consistent | the reasons do not follow logically from and support the arguments; arguments or ideas are inconsistent |
| Convincing | unconvincing |

Critical analysis examples of arguments, viewpoints or ideas

The validity of this argument is questionable as there is insufficient evidence to support it.

Many writers have challenged Jones’ claim on the grounds that …

This argument fails to draw on the evidence of others in the field.

This explanation is incomplete because it does not explain why…

The key problem with this explanation is that …

The existing accounts fail to resolve the contradiction between …

However, there is an inconsistency with this argument. The inconsistency lies in…

Although this argument has been proposed by some, it lacks justification.

However, the body of evidence showing that… contradicts this argument.

How to critically analyse a methodology

The table below provides the criteria for judging the strengths and weaknesses of methodology.

An evaluation of a methodology usually involves a critical analysis of its main sections:

design; sampling (participants); measurement tools and materials; procedure

- design tests the hypotheses or research questions

- method valid and reliable

- potential bias or measurement error, and confounding variables addressed

- method allows results to be generalized

- representative sampling of cohort and phenomena; sufficient response rate

- valid and reliable measurement tools

- valid and reliable procedure

- method clear and detailed to allow replication

Evaluating a Methodology

| Strengths | Weaknesses |
| --- | --- |
| Research design tests the hypotheses or research questions | research design is inappropriate for the hypotheses or research questions |
| Valid and reliable method | dubious, questionable validity |
| The method addresses potential sources of bias or measurement error; confounding variables were identified | insufficiently rigorous; measurement error produces questionable or unreliable results; confounding variables not identified or addressed |
| The method (sample, measurement tools, procedure) allows results to be generalized or transferred; sampling was representative to enable generalization | generalizability of the results is limited due to an unrepresentative sample: small sample size or limited sample range |
| Sampling of cohort was representative to enable generalization; sampling of phenomena under investigation sufficiently wide and representative; sampling response rate was sufficiently high | limited generalizability of results due to unrepresentative sample: small sample size or limited sample range of cohort or phenomena under investigation; sampling response rate was too low |
| Measurement tool(s) / instrument(s) appropriate, reliable and valid; measurements were accurate | inappropriate measurement tools; incomplete or ambiguous scale items; inaccurate measurement; reliability statistics from previous research for measurement tool not reported; measurement instrument items are ambiguous, unclear, contradictory |
| Procedure reliable and valid | measurement error from administration of the measurement tool(s) |
| Method was clearly explained and sufficiently detailed to allow replication | explanation of the methodology (or parts of it, for example the Procedure) is unclear, confused, imprecise, ambiguous, inconsistent or contradictory |

Critical analysis examples of a methodology

The unrepresentativeness of the sample makes these results misleading.

The presence of unmeasured variables in this study limits the interpretation of the results.

Other, unmeasured confounding variables may be influencing this association.

The interpretation of the data requires caution because the effect of confounding variables was not taken into account.

The insufficient control of several response biases in this study means the results are likely to be unreliable.

Although this correlational study shows association between the variables, it does not establish a causal relationship.

Taken together, the methodological shortcomings of this study suggest the need for serious caution in the meaningful interpretation of the study’s results.
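To see why an unmeasured confounding variable calls for caution, it can help to simulate one. The sketch below is an illustration only, written in Python with made-up data: a hidden variable `z` drives both `x` and `y`, which have no direct effect on each other. The function names (`pearson`, `partial_corr`, `simulate`), the noise level and the sample size are all assumptions chosen for this example, and partial correlation is just one of several ways a study might adjust for a measured confounder.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def partial_corr(xs, ys, zs):
    """Correlation between xs and ys after removing the linear effect of zs."""
    r_xy = pearson(xs, ys)
    r_xz = pearson(xs, zs)
    r_yz = pearson(ys, zs)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def simulate(n=5000, seed=0):
    """Generate x and y that both depend on a confounder z, not on each other."""
    rng = random.Random(seed)
    zs = [rng.gauss(0, 1) for _ in range(n)]
    xs = [z + rng.gauss(0, 0.5) for z in zs]
    ys = [z + rng.gauss(0, 0.5) for z in zs]
    return xs, ys, zs


xs, ys, zs = simulate()
print("raw correlation of x and y:", round(pearson(xs, ys), 3))
print("correlation after controlling for z:", round(partial_corr(xs, ys, zs), 3))
```

By construction the raw correlation between `x` and `y` is strong, yet it largely disappears once `z` is accounted for. A study that never measured `z` would report only the first number, which is why a critique should ask which confounders were identified and addressed.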

How to critically analyse research results and conclusions

The table below provides the criteria for judging the strengths and weaknesses of research results and conclusions:

- appropriate choice and use of statistics

- correct interpretation of results

- all results explained

- alternative explanations considered

- significance of all results discussed

- consistency of results with previous research discussed

- results add to existing understanding or knowledge

- limitations discussed

- results clearly explained

- conclusions consistent with results

Evaluating the Results and Conclusions

| Strengths | Weaknesses |
| --- | --- |
| Chose and used appropriate statistics | inappropriate choice or use of statistics |
| Results interpreted correctly or accurately | incorrect interpretation of results; the results have been over-interpreted. For example: correlation measures have been incorrectly interpreted to suggest causation rather than association |
| All results were explained, including inconsistent or misleading results | inconsistent or misleading results not explained |
| Alternative explanations for results were considered | unbalanced explanations: alternative explanations for results not explored |
| Significance of all results was considered | incomplete consideration of results |
| Results considered according to consistency with other research or viewpoints; results are conclusive because they have been replicated by other studies | consistency of results with other research not considered; results are suggestive rather than conclusive because they have not been replicated by other studies |
| Results add significantly to existing understanding or knowledge | results do not significantly add to existing understanding or knowledge |
| Limitations of the research design or method are acknowledged | limitations of the research design or method not considered |
| Results were clearly explained, sufficiently detailed, consistent | results were unclear, insufficiently detailed, inconsistent, confusing, ambiguous, contradictory |
| Conclusions were consistent with and supported by the results | conclusions were not consistent with or not supported by the results |

How to read a paper, critical review

Reading a scientific article is a complex task. The worst way to approach this task is to treat it like the reading of a textbook—reading from title to literature cited, digesting every word along the way without any reflection or criticism.

A critical review (sometimes called a critique, critical commentary, critical appraisal, critical analysis) is a detailed commentary on and critical evaluation of a text. You might carry out a critical review as a stand-alone exercise, or as part of your research and preparation for writing a literature review. The following guidelines are designed to help you critically evaluate a research article.

How to Read a Scientific Article

You should begin by skimming the article to identify its structure and features. As you read, look for the author’s main points.

- Generate questions before, during, and after reading.

- Draw inferences based on your own experiences and knowledge.

- To really improve understanding and recall, take notes as you read.

What is meant by critical and evaluation?

- To be critical does not mean to criticise in an exclusively negative manner. To be critical of a text means you question the information and opinions in the text, in an attempt to evaluate or judge its worth overall.

- An evaluation is an assessment of the strengths and weaknesses of a text. This should relate to specific criteria, in the case of a research article. You have to understand the purpose of each section, and be aware of the type of information and evidence that are needed to make it convincing, before you can judge its overall value to the research article as a whole.

Useful Downloads

- How to read a scientific paper

- How to conduct a critical review

- The Open University

1 Important points to consider when critically evaluating published research papers

Simple review articles (also referred to as ‘narrative’ or ‘selective’ reviews), systematic reviews and meta-analyses provide rapid overviews and ‘snapshots’ of progress made within a field, summarising a given topic or research area. They can serve as useful guides, or as current and comprehensive ‘sources’ of information, and can act as a point of reference to relevant primary research studies within a given scientific area. Narrative or systematic reviews are often used as a first step towards a more detailed investigation of a topic or a specific enquiry (a hypothesis or research question), or to establish critical awareness of a rapidly-moving field (you will be required to demonstrate this as part of an assignment, an essay or a dissertation at postgraduate level).

The majority of primary ‘empirical’ research papers essentially follow the same structure (abbreviated here as IMRAD). There is a section on Introduction, followed by the Methods, then the Results, which includes figures and tables showing data described in the paper, and a Discussion. The paper typically ends with a Conclusion, and References and Acknowledgements sections.

The Title of the paper provides a concise first impression. The Abstract follows the basic structure of the extended article and provides an ‘accessible’ and concise summary of the aims, methods, results and conclusions, presenting the purpose, scope and major findings. However, reading the abstract alone is not a substitute for critically reading the whole article. To really get a good understanding and to be able to critically evaluate a research study, it is necessary to read on. The Introduction then provides useful background information and context, and typically outlines the aims and objectives of the study.

While most research papers follow the above format, variations do exist. For example, the results and discussion sections may be combined. In some journals the materials and methods may follow the discussion, and in two of the most widely read journals, Science and Nature, the format does vary from the above due to restrictions on the length of articles. In addition, there may be supporting documents that accompany a paper, including supplementary materials such as supporting data, tables, figures, videos and so on. There may also be commentaries or editorials associated with a topical research paper, which provide an overview or critique of the study being presented.

Box 1 Key questions to ask when appraising a research paper

- Is the study’s research question relevant?

- Does the study add anything new to current knowledge and understanding?

- Does the study test a stated hypothesis?

- Is the design of the study appropriate to the research question?

- Do the study methods address key potential sources of bias?

- Were suitable ‘controls’ included in the study?

- Were the statistical analyses appropriate and applied correctly?

- Is there a clear statement of findings?

- Does the data support the authors’ conclusions?

- Are there any conflicts of interest or ethical concerns?

There are various strategies used in reading a scientific research paper, and one of these is to start with the title and the abstract, then look at the figures and tables, and move on to the introduction, before turning to the results and discussion, and finally, interrogating the methods.

Another strategy (outlined below) is to begin with the abstract and then the discussion, take a look at the methods, and then the results section (including any relevant tables and figures), before moving on to look more closely at the discussion and, finally, the conclusion. You should choose a strategy that works best for you. However, asking the ‘right’ questions is a central feature of critical appraisal, as with any enquiry, so where should you begin? Here are some critical questions to consider when evaluating a research paper.

Look at the Abstract and then the Discussion: Are these accessible and of general relevance or are they detailed, with far-reaching conclusions? Is it clear why the study was undertaken? Why are the conclusions important? Does the study add anything new to current knowledge and understanding? The reasons why a particular study design or statistical method was chosen should also be clear from reading a research paper. What is the research question being asked? Does the study test a stated hypothesis? Is the design of the study appropriate to the research question? Have the authors considered the limitations of their study and have they discussed these in context?

Take a look at the Methods: Were there any practical difficulties that could have compromised the study or its implementation? Were these considered in the protocol? Were there any missing values and, if so, was the number of missing values too large to permit meaningful analysis? Was the number of samples (cases or participants) too small to establish meaningful significance? Do the study methods address key potential sources of bias? Were suitable ‘controls’ included in the study? If controls are missing or not appropriate to the study design, we cannot be confident that the results really show what is happening in an experiment. Were the statistical analyses appropriate and applied correctly? Do the authors point out the limitations of methods or tests used? Were the methods referenced and described in sufficient detail for others to repeat or extend the study?
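As a rough illustration of two of the Methods questions (missing values and sample size), the Python sketch below computes the share of missing values in a variable and an approximate number of participants per group for comparing two means. The function names, the 5% significance level, the 80% power and the example numbers are assumptions made for this sketch and do not come from any particular study. The sample-size calculation uses the common normal-approximation formula for a two-sided comparison of two groups, which is only one of several possible approaches.

```python
import math
from statistics import NormalDist


def missing_fraction(values):
    """Return the fraction of entries that are missing (None)."""
    if not values:
        raise ValueError("values must not be empty")
    return sum(v is None for v in values) / len(values)


def n_per_group(effect, sd, alpha=0.05, power=0.80):
    """Approximate participants needed per group to detect a difference
    in means of size `effect`, given a common standard deviation `sd`
    (two-sided test, normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) * sd / effect) ** 2)


# Hypothetical data: 2 of 8 measurements were not recorded.
readings = [5.1, None, 4.8, 5.3, None, 5.0, 4.9, 5.2]
print(missing_fraction(readings))    # 0.25
print(n_per_group(effect=5, sd=10))  # 63
```

If a paper reports far fewer participants than a calculation of this kind suggests, or a large share of missing values, that is a prompt to look for the authors' own justification rather than proof of a flaw.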

Take a look at the Results section and relevant tables and figures: Is there a clear statement of findings? Were the results expected? Do they make sense? What data supports them? Do the tables and figures clearly describe the data (highlighting trends etc.)? Try to distinguish between what the data show and what the authors say they show (i.e. their interpretation).

Moving on to look in greater depth at the Discussion and Conclusion: Are the results discussed in relation to similar (previous) studies? Do the authors indulge in excessive speculation? Are limitations of the study adequately addressed? Were the objectives of the study met and the hypothesis supported or refuted (and is a clear explanation provided)? Does the data support the authors’ conclusions? Maybe there is only one experiment to support a point. More often, several different experiments or approaches combine to support a particular conclusion. A rule of thumb here is that if multiple approaches and multiple lines of evidence from different directions are presented, and all point to the same conclusion, then the conclusions are more credible. But do question all assumptions. Identify any implicit or hidden assumptions that the authors may have used when interpreting their data. Be wary of data that is mixed up with interpretation and speculation! Remember, just because it is published, does not mean that it is right.

Other points you should consider when evaluating a research paper: Are there any financial, ethical or other conflicts of interest associated with the study, its authors and sponsors? Are there ethical concerns with the study itself? Looking at the references, consider if the authors have preferentially cited their own previous publications (i.e. needlessly), and whether the list of references is recent (ensuring that the analysis is up-to-date). Finally, from a practical perspective, you should move beyond the text of a research paper: talk to your peers about it, and consult available commentaries, online links to references and other external sources to help clarify any aspects you don’t understand.

The above can be taken as a general guide to help you begin to critically evaluate a scientific research paper, but only in the broadest sense. Do bear in mind that the way that research evidence is critiqued will also differ slightly according to the type of study being appraised, whether observational or experimental, and each study will have additional aspects that would need to be evaluated separately. For criteria recommended for the evaluation of qualitative research papers, see the article by Mildred Blaxter (1996), available online. Details are in the References.

Activity 1 Critical appraisal of a scientific research paper

A critical appraisal checklist, which you can download via the link below, can act as a useful tool to help you to interrogate research papers. The checklist is divided into four sections, broadly covering:

- some general aspects

- research design and methodology

- the results

- discussion, conclusion and references.

Science perspective – critical appraisal checklist

- Identify and obtain a research article based on a topic of your own choosing, using a search engine such as Google Scholar or PubMed (for example).

- The selection criteria for your target paper are as follows: the article must be an open access primary research paper (not a review) containing empirical data, published in the last 2–3 years, and preferably no more than 5–6 pages in length.

- Critically evaluate the research paper using the checklist provided, making notes on the key points and your overall impression.

Critical appraisal checklists are useful tools to help assess the quality of a study. Assessment of various factors, including the importance of the research question, the design and methodology of a study, the validity of the results and their usefulness (application or relevance), the legitimacy of the conclusions, and any potential conflicts of interest, are an important part of the critical appraisal process. Limitations and further improvements can then be considered.
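A checklist of this kind can also be kept as a small data structure while making notes. The Python sketch below groups the Box 1 questions under the four checklist sections and tallies the answers recorded for one paper. The grouping of questions into sections, the answer labels ('yes', 'no', 'unclear') and the example notes are assumptions made for this sketch and are not the content of the downloadable checklist.

```python
# Box 1 questions grouped under the four checklist sections.
# The grouping is an assumption made for this sketch.
CHECKLIST = {
    "general aspects": [
        "Is the study's research question relevant?",
        "Does the study add anything new to current knowledge and understanding?",
    ],
    "research design and methodology": [
        "Does the study test a stated hypothesis?",
        "Is the design of the study appropriate to the research question?",
        "Do the study methods address key potential sources of bias?",
        "Were suitable controls included in the study?",
        "Were the statistical analyses appropriate and applied correctly?",
    ],
    "the results": [
        "Is there a clear statement of findings?",
    ],
    "discussion, conclusion and references": [
        "Does the data support the authors' conclusions?",
        "Are there any conflicts of interest or ethical concerns?",
    ],
}


def summarise(answers):
    """Count 'yes', 'no' and 'unclear' answers per section.

    `answers` maps a question to 'yes', 'no' or 'unclear'; questions
    without an answer are counted as 'unclear'.
    """
    summary = {}
    for section, questions in CHECKLIST.items():
        counts = {"yes": 0, "no": 0, "unclear": 0}
        for question in questions:
            counts[answers.get(question, "unclear")] += 1
        summary[section] = counts
    return summary


# Hypothetical notes on one paper.
notes = {
    "Is the study's research question relevant?": "yes",
    "Were suitable controls included in the study?": "no",
}
for section, counts in summarise(notes).items():
    print(section, counts)
```

Counting unanswered questions as 'unclear' keeps gaps in the appraisal visible instead of letting them pass silently.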

Critical Appraisal of Studies

Critical appraisal is the process of carefully and systematically examining research to judge its trustworthiness, and its value/relevance in a particular context by providing a framework to evaluate the research. During the critical appraisal process, researchers can:

- Decide whether studies have been undertaken in a way that makes their findings reliable as well as valid and unbiased

- Make sense of the results

- Know what these results mean in the context of the decision they are making

- Determine if the results are relevant to their patients/schoolwork/research

Burls, A. (2009). What is critical appraisal? In What Is This Series: Evidence-based medicine. Available online at What is Critical Appraisal?

Critical appraisal is part of the process of writing high-quality reviews, such as systematic and integrative reviews, and of evaluating evidence from RCTs and other study designs. For more information on systematic reviews, check out our Systematic Review guide.

- Last Updated: Nov 16, 2023 1:27 PM

- URL: https://guides.library.duq.edu/critappraise

- Review Article

- Published: 20 January 2009

How to critically appraise an article

- Jane M Young 1 &

- Michael J Solomon 2

Nature Clinical Practice Gastroenterology & Hepatology volume 6, pages 82–91 (2009)

Critical appraisal is a systematic process used to identify the strengths and weaknesses of a research article in order to assess the usefulness and validity of research findings. The most important components of a critical appraisal are an evaluation of the appropriateness of the study design for the research question and a careful assessment of the key methodological features of this design. Other factors that also should be considered include the suitability of the statistical methods used and their subsequent interpretation, potential conflicts of interest and the relevance of the research to one's own practice. This Review presents a 10-step guide to critical appraisal that aims to assist clinicians to identify the most relevant high-quality studies available to guide their clinical practice.

Critical appraisal is a systematic process used to identify the strengths and weaknesses of a research article

Critical appraisal provides a basis for decisions on whether to use the results of a study in clinical practice

Different study designs are prone to various sources of systematic bias

Design-specific, critical-appraisal checklists are useful tools to help assess study quality

Assessments of other factors, including the importance of the research question, the appropriateness of statistical analysis, the legitimacy of conclusions and potential conflicts of interest are an important part of the critical appraisal process

Author information

Authors and affiliations.

JM Young is an Associate Professor of Public Health and the Executive Director of the Surgical Outcomes Research Centre at the University of Sydney and Sydney South-West Area Health Service, Sydney.

MJ Solomon is Head of the Surgical Outcomes Research Centre and Director of Colorectal Research at the University of Sydney and Sydney South-West Area Health Service, Sydney, Australia.

Corresponding author

Correspondence to Jane M Young.

Ethics declarations

Competing interests.

The authors declare no competing financial interests.

About this article

Cite this article.

Young, J., Solomon, M. How to critically appraise an article. Nat Rev Gastroenterol Hepatol 6, 82–91 (2009). https://doi.org/10.1038/ncpgasthep1331

Received: 10 August 2008

Accepted: 03 November 2008

Published: 20 January 2009

Issue Date: February 2009

DOI: https://doi.org/10.1038/ncpgasthep1331

Authority: Critical Evaluation

Critical Evaluation of Information Sources

After initial evaluation of a source, the next step is to go deeper. This includes a wide variety of techniques and may depend on the type of source. In the case of research, it will include evaluating the methodology used in the study and requires you to have knowledge of those discipline-specific methods. If you are just beginning your academic career or just entered a new field, you will likely need to learn more about the methodologies used in order to fully understand and evaluate this part of a study.

Lateral reading is a technique that can, and should, be applied to any source type. In the case of a research study, looking for the older articles that influenced the one you selected can give you a better understanding of the issues and context. Reading articles that were published after can give you an idea of how scholars are pushing that research to the next step. This can also help with understanding how scholars engage with each other in conversation through research and even how the academic system privileges certain voices and established authorities in the conversation. You might find articles that respond directly to studies that provide insight into evaluation and critique within that discipline.

Evaluation at this level is central to developing a better understanding of your own research question by learning from these scholarly conversations and how authority is tested.

Check out the resources below to help you with this stage of evaluation.

Scientific Method/Methodologies

Here is a general overview of how the scientific method works and how scholars evaluate their work using critical thinking. This same process is used when scholars write up their scholarly work.

The Steps of the Scientific Method

- Question something that was observed

- Do background research to better understand

- Formulate a hypothesis (research question)

- Create an experiment or method for studying the question

- Run the experiment and record the results

- Think critically about what the results mean

- Suggest conclusions and report back

Critical Thinking

Thinking critically about the information you encounter is central to how you develop your own conclusions, judgement, and position. This analysis is what will allow you to make a valuable contribution of your own to the scholarly conversation.

- TEDEd: Dig Deeper on the 5 Tips to Improve Your Critical Thinking

- The Foundation for Critical Thinking: College and University Students

- Stanford Encyclopedia of Philosophy: Critical Thinking

Scholarship as Conversation

It sounds pretty bad if you say an article was retracted, but is it always? As with most things, it depends on the context. Someone retracting a statement made based on false information or misinformation is one thing. It happens fairly often in the case of social media--removed tweets or Instagram posts for example.

In scholarship, there are a number of reasons an article might be retracted. These range from errors in the methods used, experiment structure, data, etc. to issues of fraud or misrepresentation. Central to scholarship is the community of scholars actively participating in the scholarly conversation even after the peer review process. Careful analysis of published research by other scholars is vital to course correction.

In scientific research, it's a central part of the process! An inherent part of discovery is basing conclusions on the information at hand and repeating the process to gather more information. If further research is done that provides new information and insight, that might mean an older conclusion gets corrected. Uncertainty is unsettling, but trust in the process means understanding the important role of retraction.

A guide to critical appraisal of evidence

Fineout-Overholt, Ellen PhD, RN, FNAP, FAAN

Ellen Fineout-Overholt is the Mary Coulter Dowdy Distinguished Professor of Nursing at the University of Texas at Tyler School of Nursing, Tyler, Tex.

The author has disclosed no financial relationships related to this article.

Critical appraisal is the assessment of research studies' worth to clinical practice. Critical appraisal—the heart of evidence-based practice—involves four phases: rapid critical appraisal, evaluation, synthesis, and recommendation. This article reviews each phase and provides examples, tips, and caveats to help evidence appraisers successfully determine what is known about a clinical issue. Patient outcomes are improved when clinicians apply a body of evidence to daily practice.

How do nurses assess the quality of clinical research? This article outlines a stepwise approach to critical appraisal of research studies' worth to clinical practice: rapid critical appraisal, evaluation, synthesis, and recommendation. When critical care nurses apply a body of valid, reliable, and applicable evidence to daily practice, patient outcomes are improved.

Critical care nurses can best explain the reasoning for their clinical actions when they understand the worth of the research supporting their practices. In critical appraisal, clinicians assess the worth of research studies to clinical practice. Given that achieving improved patient outcomes is the reason patients enter the healthcare system, nurses must be confident their care techniques will reliably achieve best outcomes.

Nurses must verify that the information supporting their clinical care is valid, reliable, and applicable. Validity of research refers to the quality of research methods used, or how good of a job researchers did conducting a study. Reliability of research means similar outcomes can be achieved when the care techniques of a study are replicated by clinicians. Applicability of research means it was conducted in a similar sample to the patients for whom the findings will be applied. These three criteria determine a study's worth in clinical practice.

Appraising the worth of research requires a standardized approach. This approach applies to both quantitative research (research that deals with counting things and comparing those counts) and qualitative research (research that describes experiences and perceptions). The word critique has a negative connotation. In the past, some clinicians were taught that studies with flaws should be discarded. Today, it is important to consider all valid and reliable research informative to what we understand as best practice. Therefore, the author developed the critical appraisal methodology that enables clinicians to determine quickly which evidence is worth keeping and which must be discarded because of poor validity, reliability, or applicability.

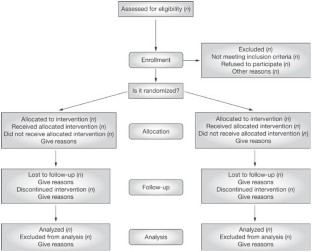

Evidence-based practice process

The evidence-based practice (EBP) process is a seven-step problem-solving approach that begins with data gathering (see Seven steps to EBP). During daily practice, clinicians gather data supporting inquiry into a particular clinical issue (Step 0). The description is then framed as an answerable question (Step 1) using the PICOT question format (Population of interest; Issue of interest or intervention; Comparison to the intervention; desired Outcome; and Time for the outcome to be achieved). 1 Consistently using the PICOT format helps ensure that all elements of the clinical issue are covered. Next, clinicians conduct a systematic search to gather data answering the PICOT question (Step 2). Using the PICOT framework, clinicians can systematically search multiple databases to find available studies to help determine the best practice to achieve the desired outcome for their patients. When the systematic search is completed, the work of critical appraisal begins (Step 3). The known group of valid and reliable studies that answers the PICOT question is called the body of evidence and is the foundation for the best practice implementation (Step 4). Next, clinicians evaluate integration of best evidence with clinical expertise and patient preferences and values to determine if the outcomes in the studies are realized in practice (Step 5). Because healthcare is a community of practice, it is important that experiences with evidence implementation be shared, whether the outcome is what was expected or not. This enables critical care nurses concerned with similar care issues to better understand what has been successful and what has not (Step 6).
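The five PICOT elements can be written down as a simple record, which makes it easy to spot an element that has been left out. The Python sketch below is one possible way to do this. The class name, the method names, the sentence wording and the example clinical issue are all hypothetical and are not part of the PICOT format itself.

```python
from dataclasses import dataclass


@dataclass
class PicotQuestion:
    population: str    # P: population of interest
    intervention: str  # I: issue of interest or intervention
    comparison: str    # C: comparison to the intervention
    outcome: str       # O: desired outcome
    time: str          # T: time for the outcome to be achieved

    def missing_elements(self):
        """Return the names of elements that were left blank."""
        return [name for name, value in vars(self).items() if not value.strip()]

    def as_sentence(self):
        """Phrase the elements as a single question (wording is illustrative)."""
        return (
            f"In {self.population}, how does {self.intervention} "
            f"compared with {self.comparison} affect {self.outcome} "
            f"within {self.time}?"
        )


# Hypothetical clinical issue, invented for this example.
question = PicotQuestion(
    population="adult ICU patients on mechanical ventilation",
    intervention="daily sedation interruption",
    comparison="continuous sedation",
    outcome="duration of ventilation",
    time="the ICU stay",
)
print(question.missing_elements())  # []
print(question.as_sentence())
```

The individual fields can then double as search terms when working through the databases in Step 2.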

Critical appraisal of evidence

The first phase of critical appraisal, rapid critical appraisal, begins with determining which studies will be kept in the body of evidence. All valid, reliable, and applicable studies on the topic should be included. This is accomplished using design-specific checklists with key markers of good research. When clinicians determine a study is one they want to keep (a “keeper” study) and that it belongs in the body of evidence, they move on to phase 2, evaluation. 2

In the evaluation phase, the keeper studies are put together in a table so that they can be compared as a body of evidence, rather than individual studies. This phase of critical appraisal helps clinicians identify what is already known about a clinical issue. In the third phase, synthesis, certain data that provide a snapshot of a particular aspect of the clinical issue are pulled out of the evaluation table to showcase what is known. These snapshots of information underpin clinicians' decision-making and lead to phase 4, recommendation. A recommendation is a specific statement based on the body of evidence indicating what should be done—best practice. Critical appraisal is not complete without a specific recommendation. Each of the phases is explained in more detail below.
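The first three phases can be pictured as a small pipeline: filter the studies, tabulate the keepers, then pull out one column as a snapshot. The Python sketch below illustrates that flow under stated assumptions. The study records, field names and function names are hypothetical, and the yes/no judgements of validity, reliability and applicability stand in for the outcome of a design-specific checklist that a clinician would complete by hand.

```python
# Each study is a plain dict; the fields and values are hypothetical.
studies = [
    {"id": "Study A", "design": "RCT", "n": 120,
     "valid": True, "reliable": True, "applicable": True,
     "finding": "outcome improved"},
    {"id": "Study B", "design": "cohort", "n": 45,
     "valid": True, "reliable": False, "applicable": True,
     "finding": "no difference"},
    {"id": "Study C", "design": "RCT", "n": 300,
     "valid": True, "reliable": True, "applicable": True,
     "finding": "outcome improved"},
]


def keepers(candidates):
    """Phase 1: keep studies judged valid, reliable and applicable."""
    return [s for s in candidates
            if s["valid"] and s["reliable"] and s["applicable"]]


def evaluation_table(kept, columns=("id", "design", "n", "finding")):
    """Phase 2: put the keeper studies side by side, one row per study."""
    return [tuple(s[c] for c in columns) for s in kept]


def synthesis(table, column_index):
    """Phase 3: pull one column out of the table as a snapshot."""
    snapshot = {}
    for row in table:
        snapshot[row[column_index]] = snapshot.get(row[column_index], 0) + 1
    return snapshot


table = evaluation_table(keepers(studies))
for row in table:
    print(row)
print(synthesis(table, column_index=3))  # {'outcome improved': 2}
```

Phase 4, the recommendation, is deliberately left out of the sketch because it is a judgement made from the body of evidence and not a calculation.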

Phase 1: Rapid critical appraisal. Rapid critical appraisal involves using two tools that help clinicians determine if a research study is worthy of keeping in the body of evidence. The first tool, General Appraisal Overview for All Studies (GAO), covers the basics of all research studies (see Elements of the General Appraisal Overview for All Studies). Sometimes, clinicians find gaps in knowledge about certain elements of research studies (for example, sampling or statistics) and need to review some content. Conducting an internet search for resources that explain how to read a research paper, such as an instructional video or step-by-step guide, can be helpful. Finding basic definitions of research methods often helps resolve identified gaps.

To accomplish the GAO, it is best to begin with finding out why the study was conducted and how it answers the PICOT question (for example, does it provide information critical care nurses want to know from the literature). If the study purpose helps answer the PICOT question, then the type of study design is evaluated. The study design is compared with the hierarchy of evidence for the type of PICOT question. The higher the design falls within the hierarchy or levels of evidence, the more confidence nurses can have in its findings, if the study was conducted well. 3,4 Next, find out what the researchers wanted to learn from their study. These are called the research questions or hypotheses. Research questions are just what the name implies: when insufficient information is available from theories or the literature to guide an educated guess, a question is asked. Hypotheses are reasonable expectations, guided by understanding from theory and other research, that predict what will be found when the research is conducted. The research questions or hypotheses provide the purpose of the study.

Next, the sample size is evaluated. Expectations of sample size are present for every study design. As an example, consider as a rule that quantitative study designs operate best when there is a sample size large enough to establish that relationships do not exist by chance. In general, the more participants in a study, the more confidence in the findings. Qualitative designs operate best with fewer people in the sample because these designs represent a deeper dive into the understanding or experience of each person in the study. 5 It is always important to describe the sample, as clinicians need to know if the study sample resembles their patients. It is equally important to identify the major variables in the study and how they are defined because this helps clinicians best understand what the study is about.

The final step in the GAO is to consider the analyses that answer the study research questions or confirm the study hypothesis. This is another opportunity for clinicians to learn, as learning about statistics in healthcare education has traditionally focused on conducting statistical tests as opposed to interpreting statistical tests. Understanding what the statistics indicate about the study findings is an imperative of critical appraisal of quantitative evidence.

The second tool is one of a variety of rapid critical appraisal checklists that speak to validity, reliability, and applicability of specific study designs, which are available at various locations (see Critical appraisal resources). When choosing a checklist to implement with a group of critical care nurses, it is important to verify that the checklist is complete and simple to use. Be sure to check that the checklist addresses three key questions. The first question is: Are the results of the study valid? Related subquestions should help nurses discern if certain markers of good research design are present within the study. For example, identifying that study participants were randomly assigned to study groups is an essential marker of good research for a randomized controlled trial. Checking these essential markers helps clinicians quickly review a study to check off these important requirements. Clinical judgment is required when the study lacks any of the identified quality markers. Clinicians must discern whether the absence of any of the essential markers negates the usefulness of the study findings. 6-9

The second question is: What are the study results? This is answered by reviewing whether the study found what it expected to find and whether those findings were meaningful to clinical practice. Basic knowledge of how to interpret statistics is important for understanding quantitative studies, and basic knowledge of qualitative analysis greatly facilitates understanding those results. 6-9

The third question is: Are the results applicable to my patients? Answering this question involves consideration of the feasibility of implementing the study findings into the clinicians' environment as well as any contraindication within the clinicians' patient populations. Consider issues such as organizational politics, financial feasibility, and patient preferences. 6-9

When these questions have been answered, clinicians must decide whether to keep the particular study in the body of evidence. Once the final group of keeper studies is identified, clinicians are ready to move into the next phase of critical appraisal. 6-9
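
The keeper decision can be recorded alongside the answers to the three key questions. The sketch below is a hypothetical Python record, not a published checklist: each field stores the clinician's own judgment after working through a design-specific checklist, and the study names and notes are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class RapidAppraisal:
    """A clinician's recorded answers to the three key questions for one study."""
    study: str
    results_valid: bool       # Question 1: Are the results of the study valid?
    results_meaningful: bool  # Question 2: Are the results meaningful to practice?
    applicable: bool          # Question 3: Are the results applicable to my patients?
    notes: str = ""           # clinical judgment, e.g. about a missing quality marker

    def is_keeper(self) -> bool:
        # Assumption of this sketch: a study is kept only when the clinician
        # has answered "yes" to all three questions. The answers themselves
        # remain judgment calls; the code only records them.
        return self.results_valid and self.results_meaningful and self.applicable


# Hypothetical appraisals, for illustration only.
appraisals = [
    RapidAppraisal("Study A", True, True, True),
    RapidAppraisal("Study B", False, True, True,
                   notes="No random assignment; judged to negate the findings."),
    RapidAppraisal("Study C", True, True, False,
                   notes="Population differs from our patients."),
]

body_of_evidence = [a.study for a in appraisals if a.is_keeper()]
print(body_of_evidence)  # prints ['Study A']
```

Keeping a notes field matters because a study that lacks a quality marker is not excluded automatically; the clinician decides whether the absence negates the usefulness of the findings.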

Phase 2: Evaluation. The goal of evaluation is to determine how studies within the body of evidence agree or disagree by identifying common patterns of information across studies. For example, an evaluator may compare whether the same intervention is used or if the outcomes are measured in the same way across all studies. A useful tool to help clinicians accomplish this is an evaluation table. This table serves two purposes: first, it enables clinicians to extract data from the studies and place the information in one table for easy comparison with other studies; and second, it eliminates the need for further searching through piles of periodicals for the information. (See Bonus Content: Evaluation table headings.) Although the information for each of the columns may not be what clinicians consider as part of their daily work, the information is important for them to understand about the body of evidence so that they can explain the patterns of agreement or disagreement they identify across studies. Further, the in-depth understanding of the body of evidence from the evaluation table helps with discussing the relevant clinical issue to facilitate best practice. Their discussion comes from a place of knowledge and experience, which affords the most confidence. The patterns and in-depth understanding are what lead to the synthesis phase of critical appraisal.

The key to a successful evaluation table is simplicity. Entering data into the table in a simple, consistent manner offers more opportunity for comparing studies. 6-9 For example, using abbreviations rather than complete sentences in all columns except the final one allows for ease of comparison. Consistency also applies to how variables are named. An example might be the dependent variable of depression defined as “feelings of severe despondency and dejection” in one study and as “feeling sad and lonely” in another study. 10 Because these are two different definitions, they need to be treated as different dependent variables. Clinicians must use their clinical judgment to discern that these different dependent variables require different names and abbreviations and how these further their comparison across studies.

Sample and theoretical or conceptual underpinnings are important to understanding how studies compare. Similar samples and settings across studies increase agreement. Several studies with the same conceptual framework increase the likelihood of common independent variables and dependent variables. The findings of a study are dependent on the analyses conducted. That is why an analysis column is dedicated to recording the kind of analysis used (for example, the name of the statistical analyses for quantitative studies). Only statistics that help answer the clinical question belong in this column. The findings column must have a result for each of the analyses listed; however, these should be the actual results (numbers), not words. For example, if a clinician lists a t-test as a statistic in the analysis column, the findings column should contain the t-value, which reflects whether the groups are different, as well as the probability (P-value or confidence interval) that reflects statistical significance. The explanation for these results would go in the last column that describes worth of the research to practice. This column is much more flexible and contains other information such as the level of evidence, the studies' strengths and limitations, any caveats about the methodology, or other aspects of the study that would be helpful to its use in practice. The final piece of information in this column is a recommendation for how this study would be used in practice. Each of the studies in the body of evidence that addresses the clinical question is placed in one evaluation table to facilitate the ease of comparing across the studies. This comparison sets the stage for synthesis.
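
The rule that every listed analysis needs a matching numeric finding can be checked mechanically when the evaluation table is kept electronically. The Python sketch below assumes a simplified row with only three of the table's columns; the field names, citations, and statistics are hypothetical and invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class EvaluationRow:
    """A simplified row of an evaluation table (only three columns shown)."""
    citation: str
    analyses: list[str]                       # analysis column: names of tests used
    findings: dict[str, str] = field(default_factory=dict)  # analysis -> result
    worth_to_practice: str = ""               # final column: explanation in words

    def analyses_without_findings(self) -> list[str]:
        """Analyses listed in the analysis column with no entry in findings."""
        return [a for a in self.analyses if a not in self.findings]

    def findings_without_numbers(self) -> list[str]:
        """Findings written in words only, with no numeric result at all."""
        return [a for a, result in self.findings.items()
                if not any(ch.isdigit() for ch in result)]


# Hypothetical rows; the statistics are invented for illustration.
good_row = EvaluationRow(
    citation="Study A",
    analyses=["t-test"],
    findings={"t-test": "t = 2.31, P = .03"},
    worth_to_practice="Groups differed; small single-site sample.",
)
weak_row = EvaluationRow(
    citation="Study B",
    analyses=["t-test", "chi-square"],
    findings={"t-test": "groups differed significantly"},
)

print(good_row.analyses_without_findings())  # prints []
print(weak_row.analyses_without_findings())  # prints ['chi-square']
print(weak_row.findings_without_numbers())   # prints ['t-test']
```

The digit check is deliberately crude: it only catches findings recorded entirely in words, which belong in the final column rather than the findings column.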

Phase 3: Synthesis. In the synthesis phase, clinicians pull out key information from the evaluation table to produce a snapshot of the body of evidence. A table also is used here to feature what is known and help all those viewing the synthesis table to come to the same conclusion. A hypothetical example table included here demonstrates that a music therapy intervention is effective in improving the outcome of oxygen saturation (SaO2) in six of the eight studies in the body of evidence that evaluated that outcome (see Sample synthesis table: Impact on outcomes). Simply using arrows to indicate effect offers readers a collective view of the agreement across studies that prompts action. Action may be to change practice, affirm current practice, or conduct research to strengthen the body of evidence by collaborating with nurse scientists.

When synthesizing evidence, there are at least two recommended synthesis tables: the level-of-evidence table and either the impact-on-outcomes table for quantitative questions, such as therapy, or the relevant themes table for “meaning” questions about human experience. (See Bonus Content: Level of evidence for intervention studies: Synthesis of type.) The sample synthesis table also demonstrates that a final column labeled synthesis indicates agreement across the studies. Of the three outcomes, the most reliable for clinicians to see with music therapy is SaO2, with positive results in six out of eight studies. The second most reliable outcome would be reducing increased respiratory rate (RR). Parental engagement has the least support as a reliable outcome, with only two of five studies showing positive results. Synthesis tables make the recommendation clear to all those who are involved in caring for that patient population. Although the two synthesis tables mentioned are a great start, the evidence may require more synthesis tables to adequately explain what is known. These tables are the foundation that supports clinically meaningful recommendations.
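
The synthesis column of an impact-on-outcomes table is essentially a tally of agreement across studies. The Python sketch below reproduces the two counts stated above (six of eight studies for SaO2 and two of five for parental engagement). The per-study layout is an assumption of this sketch, as is the coding of every non-positive study as "no effect"; respiratory rate is left out because its counts are not given in the text.

```python
# Hypothetical per-study effects consistent with the counts in the text.
# "+" = outcome improved, "0" = no effect (an assumption of this sketch).
effects = {
    "Parental engagement": ["+", "+", "0", "0", "0"],
    "SaO2": ["+", "+", "+", "+", "+", "+", "0", "0"],
}


def summarize(effects_by_outcome):
    """Return (outcome, studies improved, studies total), strongest agreement first."""
    rows = []
    for outcome, results in effects_by_outcome.items():
        improved = results.count("+")
        rows.append((outcome, improved, len(results)))
    # Rank outcomes by the share of studies showing improvement.
    return sorted(rows, key=lambda row: row[1] / row[2], reverse=True)


for outcome, improved, total in summarize(effects):
    print(f"{outcome}: improved in {improved} of {total} studies")
# prints:
# SaO2: improved in 6 of 8 studies
# Parental engagement: improved in 2 of 5 studies
```

Ranking by the share of studies rather than the raw count keeps outcomes comparable when they were measured in different numbers of studies.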

Phase 4: Recommendation. Recommendations are definitive statements based on what is known from the body of evidence. For example, with an intervention question, clinicians should be able to discern from the evidence if they will reliably get the desired outcome when they deliver the intervention as it was in the studies. In the sample synthesis table, the recommendation would be to implement the music therapy intervention across all settings with the population, and measure SaO2 and RR, with the expectation that both would be optimally improved with the intervention. When the synthesis demonstrates that studies consistently verify an outcome occurs as a result of an intervention, but that intervention is not currently practiced, care is not best practice. Therefore, a firm recommendation to deliver the intervention and measure the appropriate outcomes must be made, which concludes critical appraisal of the evidence.