Research Papers / Publications

Statistics articles from across Nature Portfolio

Statistics is the application of mathematical concepts to understanding and analysing large collections of data. A central tenet of statistics is to describe the variations in a data set or population using probability distributions. This analysis aids understanding of what underlies these variations and enables predictions of future changes.

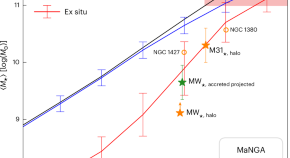

Machine learning reveals the merging history of nearby galaxies

A probabilistic machine learning method trained on cosmological simulations is used to determine whether stars in 10,000 nearby galaxies formed internally or were accreted from other galaxies during merging events. The model predicts that only 20% of the stellar mass in present day galaxies is the result of past mergers.

Latest Research and Reviews

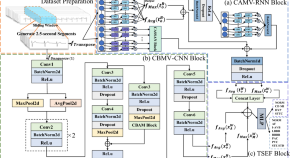

A coordinated adaptive multiscale enhanced spatio-temporal fusion network for multi-lead electrocardiogram arrhythmia detection

- Zicong Yang

Modelling the age distribution of longevity leaders

- László Németh

- Bálint Vető

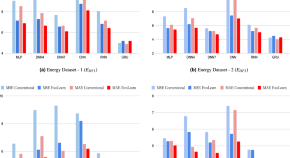

Effective weight optimization strategy for precise deep learning forecasting models using EvoLearn approach

- Ashima Anand

- Rajender Parsad

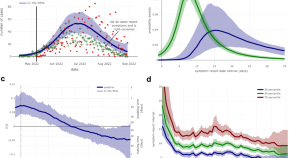

Quantification of the time-varying epidemic growth rate and of the delays between symptom onset and presenting to healthcare for the mpox epidemic in the UK in 2022

- Robert Hinch

- Jasmina Panovska-Griffiths

- Christophe Fraser

Investigating the causal relationship between wealth index and ICT skills: a mediation analysis approach

- Tarikul Islam

- Nabil Ahmed Uthso

Statistical analysis of the effect of socio-political factors on individual life satisfaction

- Ayman Alzaatreh

News and Comment

Efficient learning of many-body systems

The Hamiltonian describing a quantum many-body system can be learned using measurements in thermal equilibrium. Now, a learning algorithm applicable to many natural systems has been found that requires exponentially fewer measurements than existing methods.

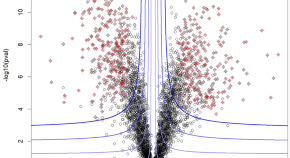

Fudging the volcano-plot without dredging the data

Selecting omic biomarkers using both their effect size and their differential status significance (i.e., selecting the “volcano-plot outer spray”) has long been equally biologically relevant and statistically troublesome. However, recent proposals are paving the way to resolving this dilemma.

- Thomas Burger

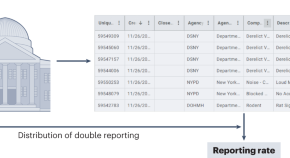

Disentangling truth from bias in naturally occurring data

A technique that leverages duplicate records in crowdsourcing data could help to mitigate the effects of biases in research and services that are dependent on government records.

- Daniel T. O’Brien

Sciama’s argument on life in a random universe and distinguishing apples from oranges

Dennis Sciama has argued that the existence of life depends on many quantities—the fundamental constants—so in a random universe life should be highly unlikely. However, without full knowledge of these constants, his argument implies a universe that could appear to be ‘intelligently designed’.

- Zhi-Wei Wang

- Samuel L. Braunstein

A method for generating constrained surrogate power laws

A paper in Physical Review X presents a method for numerically generating data sequences that are as likely to be observed under a power law as a given observed dataset.

- Zoe Budrikis

A generalization to the log-inverse Weibull distribution and its applications in cancer research

In this paper we consider a generalization of a log-transformed version of the inverse Weibull distribution. Several theoretical properties of the distribution are studied in detail including expressions for i...

Approximations of conditional probability density functions in Lebesgue spaces via mixture of experts models

Mixture of experts (MoE) models are widely applied for conditional probability density estimation problems. We demonstrate the richness of the class of MoE models by proving denseness results in Lebesgue space...

Structural properties of generalised Planck distributions

A family of generalised Planck (GP) laws is defined and its structural properties explored. Sometimes subject to parameter restrictions, a GP law is a randomly scaled gamma law; it arises as the equilibrium la...

New class of Lindley distributions: properties and applications

A new generalized class of Lindley distribution is introduced in this paper. This new class is called the T-Lindley{Y} class of distributions, and it is generated by using the quantile functions of uniform, expon...

Tolerance intervals in statistical software and robustness under model misspecification

A tolerance interval is a statistical interval that covers at least 100ρ% of the population of interest with 100(1−α)% confidence, where ρ and α are pre-specified values in (0, 1). In many scientific fields, su...

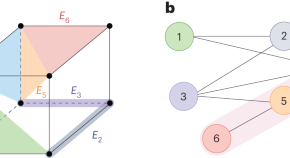

Combining assumptions and graphical network into gene expression data analysis

Analyzing gene expression data rigorously requires taking assumptions into consideration but also relies on using information about network relations that exist among genes. Combining these different elements ...

A comparison of zero-inflated and hurdle models for modeling zero-inflated count data

Counts data with excessive zeros are frequently encountered in practice. For example, the number of health services visits often includes many zeros representing the patients with no utilization during a follo...

A general stochastic model for bivariate episodes driven by a gamma sequence

We propose a new stochastic model describing the joint distribution of (X, N), where N is a counting variable while X is the sum of N independent gamma random variables. We present the main properties of this gene...

A flexible multivariate model for high-dimensional correlated count data

We propose a flexible multivariate stochastic model for over-dispersed count data. Our methodology is built upon mixed Poisson random vectors $(Y_1, \dots, Y_d)$, where the $\{Y_i\}$ are conditionally independent Poisson random...

Generalized fiducial inference on the mean of zero-inflated Poisson and Poisson hurdle models

Zero-inflated and hurdle models are widely applied to count data possessing excess zeros, where they can simultaneously model the process from how the zeros were generated and potentially help mitigate the eff...

Multivariate distributions of correlated binary variables generated by pair-copulas

Correlated binary data are prevalent in a wide range of scientific disciplines, including healthcare and medicine. The generalized estimating equations (GEEs) and the multivariate probit (MP) model are two of ...

On two extensions of the canonical Feller–Spitzer distribution

We introduce two extensions of the canonical Feller–Spitzer distribution from the class of Bessel densities, which comprise two distinct stochastically decreasing one-parameter families of positive absolutely ...

A new trivariate model for stochastic episodes

We study the joint distribution of stochastic events described by (X, Y, N), where N has a 1-inflated (or deflated) geometric distribution and X, Y are the sum and the maximum of N exponential random variables. Mod...

A flexible univariate moving average time-series model for dispersed count data

Al-Osh and Alzaid (1988) consider a Poisson moving average (PMA) model to describe the relation among integer-valued time series data; this model, however, is constrained by the underlying equi-dispersion assumpt...

Spatio-temporal analysis of flood data from South Carolina

To investigate the relationship between flood gage height and precipitation in South Carolina from 2012 to 2016, we built a conditional autoregressive (CAR) model using a Bayesian hierarchical framework. This ...

Affine-transformation invariant clustering models

We develop a cluster process which is invariant with respect to unknown affine transformations of the feature space without knowing the number of clusters in advance. Specifically, our proposed method can iden...

Distributions associated with simultaneous multiple hypothesis testing

We develop the distribution for the number of hypotheses found to be statistically significant using the rule from Simes (Biometrika 73: 751–754, 1986) for controlling the family-wise error rate (FWER). We fin...

New families of bivariate copulas via unit weibull distortion

This paper introduces a new family of bivariate copulas constructed using a unit Weibull distortion. Existing copulas play the role of the base or initial copulas that are transformed or distorted into a new f...

Generalized logistic distribution and its regression model

A new generalized asymmetric logistic distribution is defined. In some cases, existing three parameter distributions provide poor fit to heavy tailed data sets. The proposed new distribution consists of only t...

The spherical-Dirichlet distribution

Today, data mining and gene expressions are at the forefront of modern data analysis. Here we introduce a novel probability distribution that is applicable in these fields. This paper develops the proposed sph...

Item fit statistics for Rasch analysis: can we trust them?

To compare fit statistics for the Rasch model based on estimates of unconditional or conditional response probabilities.

Exact distributions of statistics for making inferences on mixed models under the default covariance structure

At this juncture when mixed models are heavily employed in applications ranging from clinical research to business analytics, the purpose of this article is to extend the exact distributional result of Wald (A...

A new discrete pareto type (IV) model: theory, properties and applications

The discrete analogue of a continuous distribution (especially in the univariate domain) is not new in the literature. The work of discretizing continuous distributions began with the paper by Nakagawa and Osaki (197...

Density deconvolution for generalized skew-symmetric distributions

The density deconvolution problem is considered for random variables assumed to belong to the generalized skew-symmetric (GSS) family of distributions. The approach is semiparametric in that the symmetric comp...

The unifed distribution

We introduce a new distribution with support on (0,1) called unifed. It can be used as the response distribution for a GLM and it is suitable for data aggregation. We make a comparison to the beta regression. ...

On Burr III Marshal Olkin family: development, properties, characterizations and applications

In this paper, a flexible family of distributions with unimodal, bimodal, increasing, increasing and decreasing, inverted bathtub and modified bathtub hazard rate called Burr III-Marshal Olkin-G (BIIIMO-G) fam...

The linearly decreasing stress Weibull (LDSWeibull): a new Weibull-like distribution

Motivated by an engineering pullout test applied to a steel strip embedded in earth, we show how the resulting linearly decreasing force leads naturally to a new distribution, if the force under constant stress i...

Meta analysis of binary data with excessive zeros in two-arm trials

We present a novel Bayesian approach to random effects meta analysis of binary data with excessive zeros in two-arm trials. We discuss the development of likelihood accounting for excessive zeros, the prior, a...

On $(p_1, \dots, p_k)$-spherical distributions

The class of $(p_1, \dots, p_k)$-spherical probability laws and a method of simulating random vectors following such distributions are introduced using a new stochastic vector representation. A dynamic geometric disintegra...

A new class of survival distribution for degradation processes subject to shocks

Many systems experience gradual degradation while simultaneously being exposed to a stream of random shocks of varying magnitudes that eventually cause failure when a shock exceeds the residual strength of the...

A new extended normal regression model: simulations and applications

Various applications in natural science require models more accurate than well-known distributions. In this context, several generators of distributions have been recently proposed. We introduce a new four-par...

Multiclass analysis and prediction with network structured covariates

Technological advances associated with data acquisition are leading to the production of complex structured data sets. The recent development on classification with multiclass responses makes it possible to in...

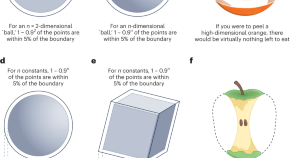

High-dimensional star-shaped distributions

Stochastic representations of star-shaped distributed random vectors having heavy or light tail density generating function g are studied for increasing dimensions along with corresponding geometric measure repre...

A unified complex noncentral Wishart type distribution inspired by massive MIMO systems

The eigenvalue distributions from a complex noncentral Wishart matrix $S = X^H X$ have been the subject of interest in various real world applications, where X is assumed to be complex matrix variate normally distribute...

Particle swarm based algorithms for finding locally and Bayesian D-optimal designs

When a model-based approach is appropriate, an optimal design can guide how to collect data judiciously for making reliable inference at minimal cost. However, finding optimal designs for a statistical model w...

Admissible Bernoulli correlations

A multivariate symmetric Bernoulli distribution has marginals that are uniform over the pair {0,1}. Consider the problem of sampling from this distribution given a prescribed correlation between each pair of v...

On p-generalized elliptical random processes

We introduce rank-k-continuous axis-aligned p-generalized elliptically contoured distributions and study their properties such as stochastic representations, moments, and density-like representations. Applying th...

Parameters of stochastic models for electroencephalogram data as biomarkers for child’s neurodevelopment after cerebral malaria

The objective of this study was to test statistical features from the electroencephalogram (EEG) recordings as predictors of neurodevelopment and cognition of Ugandan children after coma due to cerebral malari...

A new generalization of generalized half-normal distribution: properties and regression models

In this paper, a new extension of the generalized half-normal distribution is introduced and studied. We assess the performance of the maximum likelihood estimators of the parameters of the new distribution vi...

Analytical properties of generalized Gaussian distributions

The family of Generalized Gaussian (GG) distributions has received considerable attention from the engineering community, due to the flexible parametric form of its probability density function, in modeling ma...

A new Weibull-X family of distributions: properties, characterizations and applications

We propose a new family of univariate distributions generated from the Weibull random variable, called a new Weibull-X family of distributions. Two special sub-models of the proposed family are presented and t...

The transmuted geometric-quadratic hazard rate distribution: development, properties, characterizations and applications

We propose a five parameter transmuted geometric quadratic hazard rate (TG-QHR) distribution derived from mixture of quadratic hazard rate (QHR), geometric and transmuted distributions via the application of t...

A nonparametric approach for quantile regression

Quantile regression estimates conditional quantiles and has wide applications in the real world. Estimating high conditional quantiles is an important problem. The regular quantile regression (QR) method often...

Mean and variance of ratios of proportions from categories of a multinomial distribution

Ratio distribution is a probability distribution representing the ratio of two random variables, each usually having a known distribution. Currently, there are results when the random variables in the ratio fo...

The power-Cauchy negative-binomial: properties and regression

We propose and study a new compounded model to extend the half-Cauchy and power-Cauchy distributions, which offers more flexibility in modeling lifetime data. The proposed model is analytically tractable and c...

Families of distributions arising from the quantile of generalized lambda distribution

In this paper, the class of T-R{generalized lambda} families of distributions based on the quantile of generalized lambda distribution has been proposed using the T-R{Y} framework. In the development of the T-R{

Risk ratios and Scanlan’s HRX

Risk ratios are distribution function tail ratios and are widely used in health disparities research. Let A and D denote advantaged and disadvantaged populations with cdfs F ...

Joint distribution of k-tuple statistics in zero-one sequences of Markov-dependent trials

We consider a sequence of n, n ≥ 3, zero (0)-one (1) Markov-dependent trials. We focus on k-tuples of 1s; i.e. runs of 1s of length at least equal to a fixed integer number k, 1 ≤ k ≤ n. The statistics denoting the n...

Quantile regression for overdispersed count data: a hierarchical method

Generalized Poisson regression is commonly applied to overdispersed count data, and focused on modelling the conditional mean of the response. However, conditional mean regression models may be sensitive to re...

Describing the Flexibility of the Generalized Gamma and Related Distributions

The generalized gamma (GG) distribution is a widely used, flexible tool for parametric survival analysis. Many alternatives and extensions to this family have been proposed. This paper characterizes the flexib...

- ISSN: 2195-5832 (electronic)

ASA Journals Online

- Journal of the American Statistical Association

- The American Statistician

- Journal of Agricultural, Biological, and Environmental Statistics

- Journal of Business & Economic Statistics

- Journal of Computational and Graphical Statistics

- Journal of Nonparametric Statistics

- Statistical Analysis and Data Mining: The ASA Data Science Journal

- Statistics in Biopharmaceutical Research

- Technometrics

ASA Open-Access Journals

- Data Science in Science

- Journal of Statistics and Data Science Education

- Statistics and Public Policy

- Statistics Surveys

ASA Co-Published Journals

- Journal of Educational and Behavioral Statistics

- Journal of Quantitative Analysis in Sports

- SIAM/ASA Journal on Uncertainty Quantification

- Journal of Survey Statistics and Methodology

Indian J Anaesth. 2016 Sep; 60(9).

Basic statistical tools in research and data analysis

Zulfiqar Ali

Department of Anaesthesiology, Division of Neuroanaesthesiology, Sheri Kashmir Institute of Medical Sciences, Soura, Srinagar, Jammu and Kashmir, India

S Bala Bhaskar

1 Department of Anaesthesiology and Critical Care, Vijayanagar Institute of Medical Sciences, Bellary, Karnataka, India

Statistical methods involved in carrying out a study include planning, designing, collecting data, analysing, drawing meaningful interpretations and reporting the research findings. Statistical analysis gives meaning to otherwise meaningless numbers, thereby breathing life into lifeless data. The results and inferences are precise only if proper statistical tests are used. This article aims to acquaint the reader with the basic research tools that are utilised while conducting various studies. It covers a brief outline of variables, an understanding of quantitative and qualitative variables and the measures of central tendency. An idea of sample size estimation, power analysis and statistical errors is given. Finally, there is a summary of parametric and non-parametric tests used for data analysis.

INTRODUCTION

Statistics is a branch of science that deals with the collection, organisation and analysis of data and the drawing of inferences from samples to the whole population.[1] This requires a proper design of the study, an appropriate selection of the study sample and the choice of a suitable statistical test. An adequate knowledge of statistics is necessary for the proper design of an epidemiological study or a clinical trial. Improper statistical methods may result in erroneous conclusions which may lead to unethical practice.[2]

A variable is a characteristic that varies from one individual member of a population to another.[3] Variables such as height and weight are measured by some type of scale, convey quantitative information and are called quantitative variables. Sex and eye colour give qualitative information and are called qualitative variables[3] [Figure 1].

Figure 1: Classification of variables

Quantitative variables

Quantitative or numerical data are subdivided into discrete and continuous measurements. Discrete numerical data are recorded as a whole number such as 0, 1, 2, 3,… (integer), whereas continuous data can assume any value. Observations that can be counted constitute the discrete data and observations that can be measured constitute the continuous data. Examples of discrete data are number of episodes of respiratory arrests or the number of re-intubations in an intensive care unit. Similarly, examples of continuous data are the serial serum glucose levels, partial pressure of oxygen in arterial blood and the oesophageal temperature.

Data can be observed and recorded on a hierarchy of scales of increasing precision: categorical, ordinal, interval and ratio [Figure 1].

Categorical or nominal variables are unordered. The data are merely classified into categories and cannot be arranged in any particular order. If only two categories exist (as in gender: male and female), the data are called dichotomous (or binary). The various causes of re-intubation in an intensive care unit, such as upper airway obstruction, impaired clearance of secretions, hypoxemia, hypercapnia, pulmonary oedema and neurological impairment, are examples of categorical variables.

Ordinal variables have a clear ordering between the categories. However, the ordered data may not have equal intervals. Examples are the American Society of Anesthesiologists physical status or the Richmond Agitation-Sedation Scale.

Interval variables are similar to ordinal variables, except that the intervals between the values of an interval variable are equally spaced. A good example of an interval scale is the Fahrenheit degree scale used to measure temperature. With the Fahrenheit scale, the difference between 70° and 75° is equal to the difference between 80° and 85°: the units of measurement are equal throughout the full range of the scale.

Ratio scales are similar to interval scales, in that equal differences between scale values have equal quantitative meaning. However, ratio scales also have a true zero point, which makes ratios between values meaningful. The centimetre scale is an example of a ratio scale: there is a true zero point, and the value of 0 cm means a complete absence of length. A thyromental distance of 6 cm in an adult may be twice that of a child in whom it may be 3 cm.

STATISTICS: DESCRIPTIVE AND INFERENTIAL STATISTICS

Descriptive statistics[4] describe the relationship between variables in a sample or population. They provide a summary of data in the form of mean, median and mode. Inferential statistics[4] use a random sample of data taken from a population to describe and make inferences about the whole population. They are valuable when it is not possible to examine each member of an entire population. Examples of descriptive and inferential statistics are illustrated in Table 1.

Table 1: Example of descriptive and inferential statistics

Descriptive statistics

The extent to which the observations cluster around a central location is described by the central tendency and the spread towards the extremes is described by the degree of dispersion.

Measures of central tendency

The measures of central tendency are mean, median and mode.[6] Mean (or the arithmetic average) is the sum of all the scores divided by the number of scores. The mean may be influenced profoundly by extreme values. For example, the average stay of organophosphorus poisoning patients in ICU may be influenced by a single patient who stays in ICU for around 5 months because of septicaemia. The extreme values are called outliers. The formula for the mean is

$$\bar{x} = \frac{\sum x}{n}$$

where $x$ = each observation and $n$ = number of observations.

Median[6] is defined as the middle of a distribution in ranked data (with half of the values in the sample above and half below the median value), while mode is the most frequently occurring value in a distribution.

Range defines the spread, or variability, of a sample.[7] It is described by the minimum and maximum values of the variable. If we rank the data and then group the observations into percentiles, we get better information about the pattern of spread. Percentiles divide the ranked observations into 100 equal parts, so we can describe the 25th, 50th, 75th or any other percentile. The median is the 50th percentile. The interquartile range covers the middle 50% of the observations about the median (25th-75th percentile).

Variance[7] is a measure of how spread out the distribution is. It indicates how closely the individual observations cluster about the mean value. The variance of a population is defined by the following formula:

$$\sigma^2 = \frac{\sum (X_i - \bar{X})^2}{N}$$

where $\sigma^2$ is the population variance, $\bar{X}$ is the population mean, $X_i$ is the $i$th element from the population and $N$ is the number of elements in the population. The variance of a sample is defined by a slightly different formula:

$$s^2 = \frac{\sum (x_i - \bar{x})^2}{n - 1}$$

where $s^2$ is the sample variance, $\bar{x}$ is the sample mean, $x_i$ is the $i$th element from the sample and $n$ is the number of elements in the sample. The formula for the variance of a population has $N$ as the denominator, whereas the formula for the variance of a sample has $n - 1$. The expression $n - 1$ is known as the degrees of freedom and is one less than the number of observations: once the sample mean is fixed, each observation is free to vary except the last one, which must take a defined value. The variance is measured in squared units. To make the interpretation of the data simple and to retain the basic unit of observation, the square root of the variance is used. The square root of the variance is the standard deviation (SD).[8] The SD of a population is defined by the following formula:

$$\sigma = \sqrt{\frac{\sum (X_i - \bar{X})^2}{N}}$$

where $\sigma$ is the population SD, $\bar{X}$ is the population mean, $X_i$ is the $i$th element from the population and $N$ is the number of elements in the population. The SD of a sample is defined by a slightly different formula:

$$s = \sqrt{\frac{\sum (x_i - \bar{x})^2}{n - 1}}$$

where $s$ is the sample SD, $\bar{x}$ is the sample mean, $x_i$ is the $i$th element from the sample and $n$ is the number of elements in the sample. An example of the calculation of variance and SD is illustrated in Table 2.

Table 2: Example of mean, variance, standard deviation
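The descriptive measures discussed in this section can be sketched with Python's standard `statistics` module. The data below are hypothetical lengths of ICU stay in days (they are not the values from Table 2), with one long stay included to show how an outlier pulls the mean away from the median. Quartiles depend on the interpolation convention; the module's default ("exclusive") method is assumed here.

```python
import math
import statistics

# Hypothetical ICU lengths of stay in days; the 150-day stay is an outlier.
stays = [2, 3, 3, 4, 5, 6, 150]
n = len(stays)

mean_stay = statistics.mean(stays)       # sum of observations divided by n
median_stay = statistics.median(stays)   # middle value of the ranked data
mode_stay = statistics.mode(stays)       # most frequently occurring value
data_range = (min(stays), max(stays))    # minimum and maximum values

# Quartiles are the 25th, 50th and 75th percentiles (default "exclusive" method).
q1, q2, q3 = statistics.quantiles(stays, n=4)
iqr = q3 - q1

pop_var = statistics.pvariance(stays)    # population variance: divides by N
sample_var = statistics.variance(stays)  # sample variance: divides by n - 1
pop_sd = statistics.pstdev(stays)        # square root of population variance
sample_sd = statistics.stdev(stays)      # square root of sample variance

print(f"mean={mean_stay:.2f} median={median_stay} mode={mode_stay}")
print(f"range={data_range} quartiles={(q1, q2, q3)} IQR={iqr}")
print(f"sample variance={sample_var:.2f} sample SD={sample_sd:.2f}")
```

With these numbers the mean is far above the median because of the single long stay, which is why the median and interquartile range are often preferred when outliers are present.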

Normal distribution or Gaussian distribution

Most biological variables cluster around a central value, with symmetrical positive and negative deviations about this point.[1] The standard normal distribution curve is a symmetrical bell-shaped curve. In a normal distribution, about 68% of the scores are within 1 SD of the mean, around 95% are within 2 SDs of the mean and about 99.7% are within 3 SDs of the mean [Figure 2].

Figure 2: Normal distribution curve
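The proportions of a normal distribution lying within 1, 2 and 3 SDs of the mean can be checked numerically. The following is a minimal sketch using `statistics.NormalDist` from the Python standard library; the helper name `coverage_within` is introduced here only for illustration.

```python
from statistics import NormalDist


def coverage_within(k_sd: float) -> float:
    """Fraction of a normal distribution lying within k_sd SDs of the mean."""
    standard = NormalDist(mu=0.0, sigma=1.0)
    # Area under the curve between -k_sd and +k_sd.
    return standard.cdf(k_sd) - standard.cdf(-k_sd)


for k in (1, 2, 3):
    print(f"within {k} SD: {coverage_within(k):.4f}")
```

The three values come out at roughly 0.683, 0.954 and 0.997, which are the figures usually rounded to 68%, 95% and 99.7%.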

Skewed distribution

It is a distribution with an asymmetry of the values about its mean. In a negatively skewed distribution [Figure 3], the mass of the distribution is concentrated on the right of the figure, leading to a longer left tail. In a positively skewed distribution [Figure 3], the mass of the distribution is concentrated on the left of the figure, leading to a longer right tail.

Figure 3: Curves showing negatively skewed and positively skewed distribution
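The direction of skew can be illustrated numerically. The sketch below uses the moment-based skewness coefficient (third central moment divided by the 1.5 power of the second), which the article itself does not define and which is assumed here as one common choice; the data are hypothetical.

```python
import statistics


def skewness(data):
    """Moment-based skewness: positive for a longer right tail, negative for a longer left tail."""
    n = len(data)
    mean = sum(data) / n
    m2 = sum((x - mean) ** 2 for x in data) / n  # second central moment
    m3 = sum((x - mean) ** 3 for x in data) / n  # third central moment
    return m3 / m2 ** 1.5


# Hypothetical data: most values are small, with one large value (longer right tail).
right_tailed = [1, 2, 2, 3, 3, 4, 12]
# Mirror image of the same data (longer left tail).
left_tailed = [13 - x for x in right_tailed]

print(f"positively skewed: {skewness(right_tailed):+.2f}")
print(f"negatively skewed: {skewness(left_tailed):+.2f}")
print("mean vs median (right-tailed):",
      statistics.mean(right_tailed), statistics.median(right_tailed))
```

In the right-tailed sample the mean lies above the median, and in the mirrored sample it lies below, which is a quick practical check for skew.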

Inferential statistics

In inferential statistics, data from a sample are analysed to make inferences about the larger population. The purpose is to answer or test hypotheses. A hypothesis (plural hypotheses) is a proposed explanation for a phenomenon. Hypothesis tests are thus procedures for making rational decisions about the reality of observed effects.

Probability is the measure of the likelihood that an event will occur. Probability is quantified as a number between 0 and 1 (where 0 indicates impossibility and 1 indicates certainty).

In inferential statistics, the term ‘null hypothesis’ ( H 0 ‘ H-naught ,’ ‘ H-null ’) denotes that there is no relationship (difference) between the population variables in question.[ 9 ]

Alternative hypothesis ( H 1 and H a ) denotes that a statement between the variables is expected to be true.[ 9 ]

The P value (or the calculated probability) is the probability of the event occurring by chance if the null hypothesis is true. The P value is a numerical between 0 and 1 and is interpreted by researchers in deciding whether to reject or retain the null hypothesis [ Table 3 ].

P values with interpretation

If the P value is less than the arbitrarily chosen value (known as α or the significance level), the null hypothesis (H0) is rejected [ Table 4 ]. However, if the null hypothesis (H0) is incorrectly rejected, this is known as a Type I error.[ 11 ] Further details regarding alpha error, beta error and sample size calculation and factors influencing them are dealt with in another section of this issue by Das S et al .[ 12 ]
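The decision rule can be sketched in a few lines. The example below assumes a test statistic that follows the standard normal distribution (a z statistic) and a significance level of α = 0.05; both are illustrative assumptions, as are the function names.

```python
from statistics import NormalDist


def two_sided_p_from_z(z: float) -> float:
    """Two-sided P value for a z statistic under the null hypothesis."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def decide(p_value: float, alpha: float = 0.05) -> str:
    """Reject H0 when the P value falls below the chosen significance level."""
    return "reject H0" if p_value < alpha else "retain H0"


p = two_sided_p_from_z(2.5)  # hypothetical observed z statistic
print(round(p, 4), decide(p))
```

Rejecting H0 at α = 0.05 still leaves up to a 5% chance of a Type I error when H0 is in fact true.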

Illustration for null hypothesis

PARAMETRIC AND NON-PARAMETRIC TESTS

Numerical data (quantitative variables) that are normally distributed are analysed with parametric tests.[ 13 ]

Two most basic prerequisites for parametric statistical analysis are:

- The assumption of normality which specifies that the means of the sample group are normally distributed

- The assumption of equal variance which specifies that the variances of the samples and of their corresponding population are equal.

However, if the distribution of the sample is skewed towards one side or the distribution is unknown due to the small sample size, non-parametric[ 14 ] statistical techniques are used. Non-parametric tests are used to analyse ordinal and categorical data.

Parametric tests

The parametric tests assume that the data are on a quantitative (numerical) scale, with a normal distribution of the underlying population. The samples have the same variance (homogeneity of variances). The samples are randomly drawn from the population, and the observations within a group are independent of each other. The commonly used parametric tests are the Student's t -test, analysis of variance (ANOVA) and repeated measures ANOVA.

Student's t -test

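Student's t -test is used to test the null hypothesis that there is no difference between the means of the two groups. It is used in three circumstances:

- To test if a sample mean (as an estimate of a population mean) differs significantly from a given population mean (the one-sample t -test).

The formula for one-sample t -test is:

\( t = \dfrac{X - u}{SE} \)

where X = sample mean, u = population mean and SE = standard error of mean

- To test if the population means estimated by two independent samples differ significantly (the unpaired t -test).

The formula for unpaired t -test is:

\( t = \dfrac{X_1 - X_2}{SE} \)

where X 1 − X 2 is the difference between the means of the two groups and SE denotes the standard error of the difference.

- To test if the population means estimated by two dependent samples differ significantly (the paired t -test). A usual setting for paired t -test is when measurements are made on the same subjects before and after a treatment.

The formula for paired t -test is:

\( t = \dfrac{d}{SE} \)

where d is the mean difference and SE denotes the standard error of this difference.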
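The three t statistics described above can be sketched as follows. This is an illustration under stated assumptions, not the article's own procedure: the text does not say how the standard error of the difference is computed for two independent groups, so the unpooled (Welch-style) form is assumed here, and all function names are hypothetical.

```python
from math import sqrt
from statistics import fmean, stdev, variance


def one_sample_t(sample, population_mean):
    """t = (sample mean - population mean) / standard error of the mean."""
    se = stdev(sample) / sqrt(len(sample))
    return (fmean(sample) - population_mean) / se


def unpaired_t(group1, group2):
    """t = difference of group means / standard error of the difference.

    Assumption: unpooled (Welch-style) standard error.
    """
    se = sqrt(variance(group1) / len(group1) + variance(group2) / len(group2))
    return (fmean(group1) - fmean(group2)) / se


def paired_t(before, after):
    """t = mean within-subject difference / standard error of that difference."""
    diffs = [a - b for b, a in zip(before, after)]
    se = stdev(diffs) / sqrt(len(diffs))
    return fmean(diffs) / se


# Hypothetical measurements on the same four subjects before and after treatment.
print(paired_t([10, 12, 9, 11], [12, 14, 10, 13]))
```

Each statistic is then compared with the t distribution for the appropriate degrees of freedom to obtain a P value.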

The group variances can be compared using the F -test. The F -test is the ratio of variances (var 1/var 2). If F differs significantly from 1.0, then it is concluded that the group variances differ significantly.

Analysis of variance

The Student's t -test cannot be used for comparison of three or more groups. The purpose of ANOVA is to test if there is any significant difference between the means of two or more groups.

In ANOVA, we study two variances – (a) between-group variability and (b) within-group variability. The within-group variability (error variance) is the variation that cannot be accounted for in the study design. It is based on random differences present in our samples.

However, the between-group (or effect variance) is the result of our treatment. These two estimates of variances are compared using the F-test.

A simplified formula for the F statistic is:

\( F = \dfrac{MS_b}{MS_w} \)

where MS b is the mean squares between the groups and MS w is the mean squares within groups.
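As a worked illustration of the ratio of mean squares, the sketch below computes F for a one-way design. The group values and the function name `one_way_f` are hypothetical.

```python
from statistics import fmean


def one_way_f(groups):
    """F = mean squares between groups / mean squares within groups."""
    all_values = [v for g in groups for v in g]
    grand_mean = fmean(all_values)
    k, n_total = len(groups), len(all_values)

    # Between-group variability: spread of group means around the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-group variability: spread of values around their own group mean.
    ss_within = sum((v - fmean(g)) ** 2 for g in groups for v in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within


# Hypothetical data: three groups of three observations each.
print(one_way_f([[1, 2, 3], [2, 3, 4], [3, 4, 5]]))
```

An F value close to 1 suggests that the between-group variability is no larger than the random within-group variability.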

Repeated measures analysis of variance

As with ANOVA, repeated measures ANOVA analyses the equality of means of three or more groups. However, repeated measures ANOVA is used when all members of a sample are measured under different conditions or at different points in time.

As the variables are measured from a sample at different points of time, the measurement of the dependent variable is repeated. Using a standard ANOVA in this case is not appropriate because it fails to model the correlation between the repeated measures: The data violate the ANOVA assumption of independence. Hence, in the measurement of repeated dependent variables, repeated measures ANOVA should be used.

Non-parametric tests

When the assumptions of normality are not met, and the sample means are not normally distributed, parametric tests can lead to erroneous results. Non-parametric tests (distribution-free tests) are used in such situations as they do not require the normality assumption.[ 15 ] Non-parametric tests may fail to detect a significant difference when compared with a parametric test. That is, they usually have less power.

As is done for the parametric tests, the test statistic is compared with known values for the sampling distribution of that statistic and the null hypothesis is accepted or rejected. The types of non-parametric analysis techniques and the corresponding parametric analysis techniques are delineated in Table 5 .

Analogue of parametric and non-parametric tests

Median test for one sample: The sign test and Wilcoxon's signed rank test

The sign test and Wilcoxon's signed rank test are used for median tests of one sample. These tests examine whether one instance of sample data is greater or smaller than the median reference value.

The sign test examines the hypothesis about the median θ0 of a population. It tests the null hypothesis H0: θ = θ0. When the observed value (Xi) is greater than the reference value (θ0), it is marked with a + sign. If the observed value is smaller than the reference value, it is marked with a − sign. If the observed value is equal to the reference value (θ0), it is eliminated from the sample.

If the null hypothesis is true, there will be an equal number of + signs and − signs.

The sign test ignores the actual values of the data and only uses + or − signs. Therefore, it is useful when it is difficult to measure the values.
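The counting procedure for the sign test can be sketched as below. The two-sided P value is taken from the binomial distribution with probability 1/2, which is the usual choice but is not spelled out in the text, so treat it as an assumption; the data and function name are hypothetical.

```python
from math import comb


def sign_test(values, theta0):
    """Count + and - signs around theta0 and return a two-sided binomial P value."""
    plus = sum(1 for v in values if v > theta0)
    minus = sum(1 for v in values if v < theta0)
    n = plus + minus  # values equal to theta0 are eliminated
    k = min(plus, minus)
    # Probability of k or fewer of the rarer sign when + and - are equally likely.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    p_value = min(1.0, 2.0 * tail)
    return plus, minus, p_value


# Hypothetical observations tested against a reference median of 4.
print(sign_test([5, 7, 8, 9, 10, 3, 4], 4))
```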

Wilcoxon's signed rank test

There is a major limitation of sign test as we lose the quantitative information of the given data and merely use the + or – signs. Wilcoxon's signed rank test not only examines the observed values in comparison with θ0 but also takes into consideration the relative sizes, adding more statistical power to the test. As in the sign test, if there is an observed value that is equal to the reference value θ0, this observed value is eliminated from the sample.
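To show how the relative sizes enter the signed rank test, the sketch below ranks the absolute differences from θ0 and sums the ranks separately for positive and negative differences. Giving tied values the average of their ranks is a common convention assumed here; the data and function names are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average
        i = j + 1
    return ranks


def signed_rank_sums(values, theta0):
    """Return (W+, W-): rank sums of positive and negative differences."""
    diffs = [v - theta0 for v in values if v != theta0]  # drop values equal to theta0
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(diffs, ranks) if d < 0)
    return w_plus, w_minus


print(signed_rank_sums([5, 7, 1, 10, 4], 4))
```

Under the null hypothesis the two rank sums are expected to be similar; a large imbalance is evidence against H0.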

Wilcoxon's rank sum test ranks all data points in order, calculates the rank sum of each sample and compares the difference in the rank sums.

Mann-Whitney test

It is used to test the null hypothesis that two samples have the same median or, alternatively, whether observations in one sample tend to be larger than observations in the other.

Mann–Whitney test compares all data (xi) belonging to the X group and all data (yi) belonging to the Y group and calculates the probability of xi being greater than yi: P (xi > yi). The null hypothesis states that P (xi > yi) = P (xi < yi) =1/2 while the alternative hypothesis states that P (xi > yi) ≠1/2.
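The pairwise comparison behind the Mann–Whitney test can be sketched directly: every xi is compared with every yi and the proportion of pairs with xi > yi estimates P (xi > yi). Counting ties as half a win is a common convention assumed here; the data and function name are hypothetical.

```python
def prob_x_greater(x_group, y_group):
    """Estimate P(x > y) from all pairwise comparisons (ties count as 0.5)."""
    wins = 0.0
    for x in x_group:
        for y in y_group:
            if x > y:
                wins += 1.0
            elif x == y:
                wins += 0.5
    return wins / (len(x_group) * len(y_group))


# Hypothetical samples; a result near 0.5 is what the null hypothesis predicts.
print(prob_x_greater([3, 5, 7], [1, 4, 6]))
```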

Kolmogorov-Smirnov test

The two-sample Kolmogorov-Smirnov (KS) test was designed as a generic method to test whether two random samples are drawn from the same distribution. The null hypothesis of the KS test is that both distributions are identical. The statistic of the KS test is a distance between the two empirical distributions, computed as the maximum absolute difference between their cumulative curves.
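The KS statistic described above, the maximum absolute difference between two empirical cumulative curves, can be sketched in plain Python. The sample values and the function name `ks_statistic` are hypothetical.

```python
def ks_statistic(sample_a, sample_b):
    """Maximum absolute difference between the two empirical CDFs."""

    def ecdf(sample, x):
        # Proportion of observations less than or equal to x.
        return sum(1 for v in sample if v <= x) / len(sample)

    # The empirical CDFs only change at observed values, so checking those suffices.
    points = sorted(set(sample_a) | set(sample_b))
    return max(abs(ecdf(sample_a, x) - ecdf(sample_b, x)) for x in points)


print(ks_statistic([1, 2, 3], [2, 3, 4]))
```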

Kruskal-Wallis test

The Kruskal–Wallis test is a non-parametric test to analyse the variance.[ 14 ] It analyses if there is any difference in the median values of three or more independent samples. The data values are ranked in an increasing order, and the rank sums calculated followed by calculation of the test statistic.
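The ranking and rank-sum steps can be sketched as follows. The article does not give the formula for the test statistic, so the standard form H = 12 / (N(N + 1)) · Σ(Rᵢ² / nᵢ) − 3(N + 1), without a correction for ties, is assumed here; the data and function name are hypothetical.

```python
def kruskal_wallis_h(groups):
    """Kruskal-Wallis H from pooled ranks (no tie correction applied)."""
    pooled = sorted(v for g in groups for v in g)
    n_total = len(pooled)

    # Average rank for each distinct value, so tied values share a rank.
    positions = {}
    for rank, value in enumerate(pooled, start=1):
        positions.setdefault(value, []).append(rank)
    rank_of = {value: sum(r) / len(r) for value, r in positions.items()}

    # Sum over groups of (rank sum squared / group size).
    total = sum(sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups)
    return 12.0 / (n_total * (n_total + 1)) * total - 3.0 * (n_total + 1)


# Hypothetical data: three independent samples of three observations each.
print(kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))
```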

Jonckheere test

In contrast to the Kruskal–Wallis test, in the Jonckheere test there is an a priori ordering of the groups, which gives it more statistical power than the Kruskal–Wallis test.[ 14 ]

Friedman test

The Friedman test is a non-parametric test for testing the difference between several related samples. The Friedman test is an alternative for repeated measures ANOVAs which is used when the same parameter has been measured under different conditions on the same subjects.[ 13 ]

Tests to analyse the categorical data

Chi-square test, Fisher's exact test and McNemar's test are used to analyse the categorical or nominal variables. The Chi-square test compares the frequencies and tests whether the observed data differ significantly from that of the expected data if there were no differences between groups (i.e., the null hypothesis). It is calculated by the sum of the squared difference between observed ( O ) and the expected ( E ) data (or the deviation, d ) divided by the expected data by the following formula:

\( \chi^2 = \sum \dfrac{(O - E)^2}{E} \)
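The sum of squared differences between observed and expected counts, each divided by the expected count, can be sketched for a contingency table as below. Expected counts are derived from the row and column totals, which is the usual approach under the null hypothesis of no association; the table values and function name are hypothetical.

```python
def chi_square_statistic(observed):
    """Sum of (O - E)^2 / E over all cells of a contingency table."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(observed):
        for j, o in enumerate(row):
            # Expected count if rows and columns were independent.
            e = row_totals[i] * col_totals[j] / grand_total
            statistic += (o - e) ** 2 / e
    return statistic


# Hypothetical 2 x 2 table of observed frequencies.
print(chi_square_statistic([[10, 20], [20, 10]]))
```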

A Yates correction factor is used when the sample size is small. Fisher's exact test is used to determine if there are non-random associations between two categorical variables. It does not assume random sampling, and instead of referring a calculated statistic to a sampling distribution, it calculates an exact probability. McNemar's test is used for paired nominal data. It is applied to a 2 × 2 table with paired-dependent samples. It is used to determine whether the row and column frequencies are equal (that is, whether there is ‘marginal homogeneity’). The null hypothesis is that the paired proportions are equal. The Mantel-Haenszel Chi-square test is a multivariate test as it analyses multiple grouping variables. It stratifies according to the nominated confounding variables and identifies any that affect the primary outcome variable. If the outcome variable is dichotomous, then logistic regression is used.

SOFTWARES AVAILABLE FOR STATISTICS, SAMPLE SIZE CALCULATION AND POWER ANALYSIS

Numerous statistical software systems are available currently. The commonly used software systems are Statistical Package for the Social Sciences (SPSS – manufactured by IBM corporation), Statistical Analysis System (SAS – developed by SAS Institute, North Carolina, United States of America), R (designed by Ross Ihaka and Robert Gentleman from R core team), Minitab (developed by Minitab Inc), Stata (developed by StataCorp) and MS Excel (developed by Microsoft).

There are a number of web resources which are related to statistical power analyses. A few are:

- StatPages.net – provides links to a number of online power calculators

- G-Power – provides a downloadable power analysis program that runs under DOS

- Power analysis for ANOVA designs – an interactive site that calculates power or sample size needed to attain a given power for one effect in a factorial ANOVA design

- SPSS makes a program called SamplePower. It gives an output of a complete report on the computer screen which can be cut and pasted into another document.

It is important that a researcher knows the concepts of the basic statistical methods used for conduct of a research study. This will help to conduct an appropriately well-designed study leading to valid and reliable results. Inappropriate use of statistical techniques may lead to faulty conclusions, inducing errors and undermining the significance of the article. Bad statistics may lead to bad research, and bad research may lead to unethical practice. Hence, an adequate knowledge of statistics and the appropriate use of statistical tests are important. An appropriate knowledge about the basic statistical methods will go a long way in improving the research designs and producing quality medical research which can be utilised for formulating the evidence-based guidelines.

Financial support and sponsorship

Conflicts of interest.

There are no conflicts of interest.

Volume 64, Issue 1

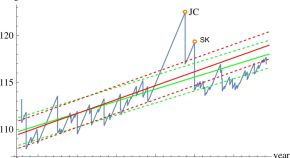

Replacement model with random replacement time.

- Kanchan Jain

- Sudheesh K. Kattumannil

- Anjana Rajagopal

Epidemic changepoint detection in the presence of nuisance changes

- Julius Juodakis

- Stephen Marsland

Squared error-based shrinkage estimators of discrete probabilities and their application to variable selection

- Małgorzata Łazȩcka

- Jan Mielniczuk

Admissible linear estimators in the general Gauss–Markov model under generalized extended balanced loss function

- Buatikan Mirezi

- Selahattin Kaçıranlar

Degree of isomorphism: a novel criterion for identifying and classifying orthogonal designs

- Lin-Chen Weng

- Kai-Tai Fang

- A. M. Elsawah

Convergence of estimative density: criterion for model complexity and sample size

Predictive inference of dual generalized order statistics from the inverse weibull distribution.

- Amany E. Aly

A ranked-based estimator of the mean past lifetime with an application

- Elham Zamanzade

- Majid Asadi

- Ehsan Zamanzade

Inference about the arithmetic average of log transformed data

- José Dias Curto

Constructing K-optimal designs for regression models

- Zongzhi Yue

- Xiaoqing Zhang

Group linear algorithm with sparse principal decomposition: a variable selection and clustering method for generalized linear models

- Juan C. Laria

- M. Carmen Aguilera-Morillo

- Rosa E. Lillo

Testing for diagonal symmetry based on center-outward ranking

- Sakineh Dehghan

- Mohammad Reza Faridrohani

- Zahra Barzegar

Forecasting highly persistent time series with bounded spectrum processes

- Federico Maddanu

Robust estimation in beta regression via maximum L \(_q\) -likelihood

- Terezinha K. A. Ribeiro

- Silvia L. P. Ferrari

Applying generalized funnel plots to help design statistical analyses

- Janet Aisbett

- Eric J. Drinkwater

- Stephen Woodcock

Correction to: On efficiency of some restricted estimators in a multivariate regression model

- Sévérien Nkurunziza


How to Write Statistics Research Paper | Easy Guide

A statistics research paper is an academic document presenting original findings or analyses derived from the data’s collection, organization, analysis, and interpretation. It addresses research questions or hypotheses within the field of statistics.

As a rule, college students get such papers assigned during a semester to assess their knowledge of statistics. However, any statistician specialist can also write research papers and publish them in academic journals, thus developing and promoting this field.

Want to master the art of statistics research paper writing?

We’ve got expert tips from a professional research paper writing service on crafting such studies. In this article, you’ll find a step-by-step guide on writing a statistics research paper that your educators, colleagues, or clients will approve.

How to write a statistics research paper: Steps

Table of Contents

- State the problem

- Collect the data

- Write an introductory paragraph

- Craft an abstract

- Describe your methodology

- Present your findings: evaluate and illustrate

- Revise and proofread

Research papers aren’t about describing the existing knowledge on the topic. You should state your intellectual concern with it, indicating why it’s worth studying. When choosing the problem you’ll research in the paper, emphasize its ongoing nature:

What have other researchers already studied about it? Cite at least one previous publication related to your research and provide your statistical motivation to continue researching the topic. (You’ll refer to those studies in footnotes or within the text of your paper.)

Once you have the topic (problem) to research in your paper, it’s time to collect sources you’ll use as evidence and references. For statistics papers, consider the following:

- Published research from experts in Statistics (academic journals, newspapers, books, online publications, etc.)

- Statistical data from reliable sources (Google’s Public Data and Scholar, FedStats, and others)

- Your personal hypothesis, experiments, and info-gathering activities

The last one is a must-have! Your statistics research paper requires new information gathered by you as a researcher and not previously published anywhere. The bulk of your research paper will be about the data collection methods you used to investigate the problem and reach the conclusions you’ll provide.

Some underestimate the introductory paragraphs of research papers, but they are wrong. The introduction is the first thing a reader sees to understand if your research is worth their attention and time. With that in mind, ensure your introductory paragraph is intriguing yet informative enough for the audience to continue reading.

Start with a writing hook, a sentence grabbing a reader’s attention. Also, an intro needs background information: your topic and the scientific motivation for the new research methods. (What’s wrong with existing ones? Or, what do they miss?) Finally, move on to your thesis statement: 1-2 sentences summarizing the primary idea behind your research paper.

An abstract is an overview of your statistics research paper where you establish notation and outline the methods and the results. Abstracts are integral for all academic studies and research, giving readers enough details to decide whether your paper is relevant to them.

What do you include in an abstract?

Introduce your topic and explain why it’s significant in your field. State the gap present in the research at the moment and reveal the aim of your paper. Then, briefly describe your research methods and approach, summarize your findings, and explain their contribution to the field.

The methods section is the most extensive one in your research paper. Here, you provide sufficient information about how you collected data for your research, what methodologies you used, and how you evaluated the results.

Be specific; describe everything so the audience can repeat your research (experiments) and reconstruct your results. It’s the value your paper brings to the academic community.

Further paragraphs of your research paper present your findings. Try to stick to one idea per paragraph to make it clear and easy for readers to consume.

Prepare and add supporting materials that will help you illustrate findings: graphs, diagrams, charts, tables, etc. You can use them in a paper’s body or add them as an appendix; these elements will support your points and serve as extra proof for your findings.

Last but not least:

Write a concluding paragraph for your statistics research paper. Repeat your thesis, summarize your findings, and conclude whether they have proved or contradicted your initial theory (hypothesis). Also, you can make suggestions for further research in the same area.

Re-read your paper several times before publishing or submitting it for review. Ensure all the information is logical and coherent, all the terms are correct, and all the elements are present and accurately placed.

Also, proofread your final draft: Spelling, grammar, and punctuation mistakes are a no-no here! Re-check the list of references again; ensure you follow the required citation style and use the proper format.

So, now you know seven easy steps for writing a statistical research paper. Whether you’re a college student or a statistician willing to make a scientific contribution to a niche, follow them to craft a professionally structured academic document:

State a problem, choose methods of analyzing it, evaluate your findings, and illustrate them to engage the audience in discussion.

If you still need clarification or have questions about writing a statistics paper, don’t hesitate to ask for assistance. Professional writers with experience in statistics are ready to help you improve your writing skills.


Fatal Peril: Unheard Stories from the IPV-to-Prison Pipeline

- Debbie Mukamal

- Andrea N. Cimino

- Blyss Cleveland

- Emma Dougherty

- Jacqueline Lewittes

- Becca Zimmerman

- Debbie Mukamal, Andrea N. Cimino, Blyss Cleveland, Emma Dougherty, Jacqueline Lewittes, and Becca Zimmerman, Fatal Peril: Unheard Stories from the IPV-to-Prison Pipeline , Stanford Criminal Justice Center (Summer 2024).

- Stanford Criminal Justice Center (SCJC)


Descriptive Statistics | Definitions, Types, Examples

Published on July 9, 2020 by Pritha Bhandari . Revised on June 21, 2023.

Descriptive statistics summarize and organize characteristics of a data set. A data set is a collection of responses or observations from a sample or entire population.

In quantitative research, after collecting data, the first step of statistical analysis is to describe characteristics of the responses, such as the average of one variable (e.g., age), or the relation between two variables (e.g., age and creativity).

The next step is inferential statistics, which help you decide whether your data confirms or refutes your hypothesis and whether it is generalizable to a larger population.

Table of contents

- Types of descriptive statistics

- Frequency distribution

- Measures of central tendency

- Measures of variability

- Univariate descriptive statistics

- Bivariate descriptive statistics

- Other interesting articles

- Frequently asked questions about descriptive statistics

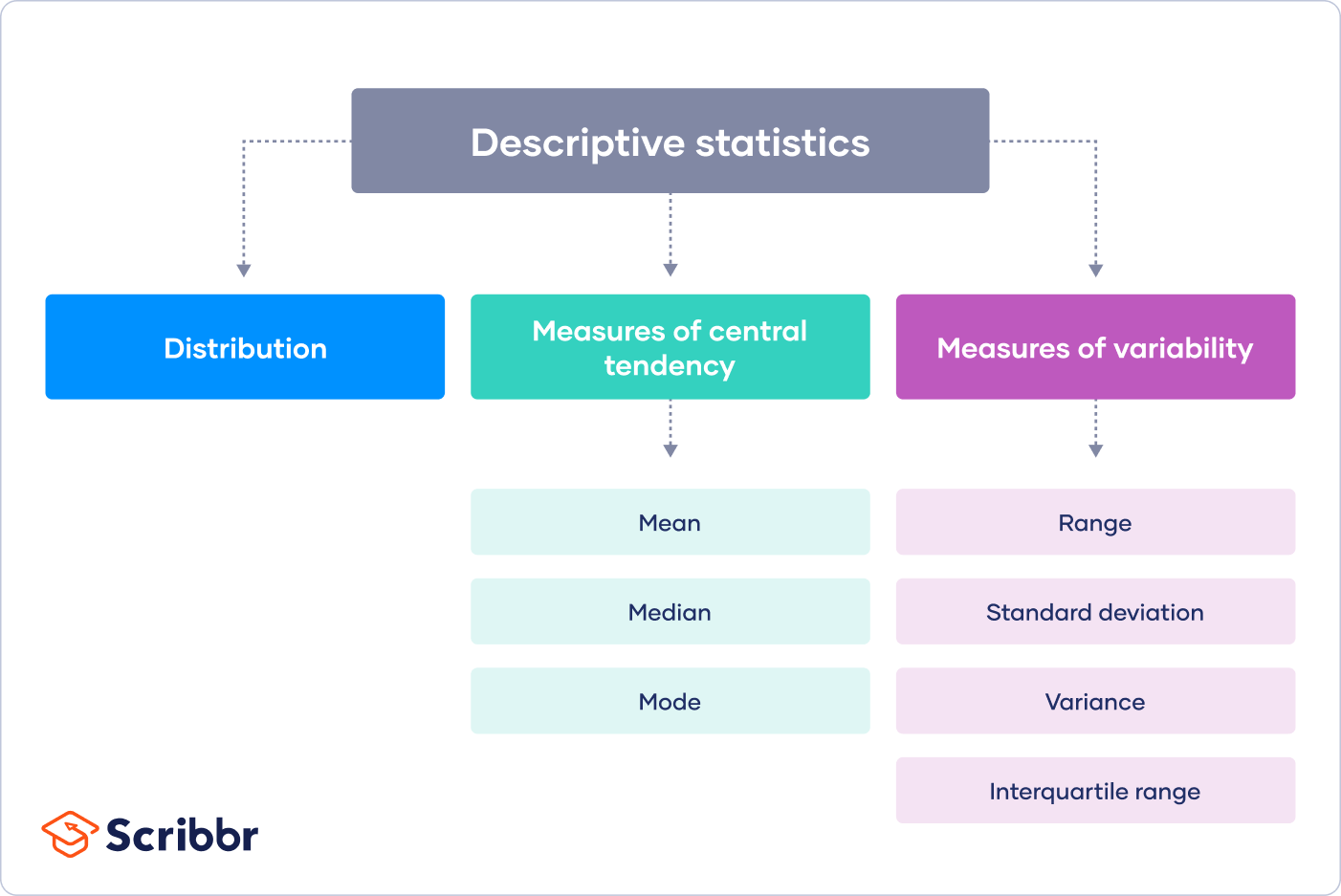

There are 3 main types of descriptive statistics:

- The distribution concerns the frequency of each value.

- The central tendency concerns the averages of the values.

- The variability or dispersion concerns how spread out the values are.

You can apply these to assess only one variable at a time, in univariate analysis, or to compare two or more, in bivariate and multivariate analysis.

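The examples in this article use a survey that asks participants how many times in the past year they did each of the following:

- Go to a library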

- Watch a movie at a theater

- Visit a national park


A data set is made up of a distribution of values, or scores. In tables or graphs, you can summarize the frequency of every possible value of a variable in numbers or percentages. This is called a frequency distribution.

- Simple frequency distribution table

- Grouped frequency distribution table

| Gender | Number |
|---|---|
| Male | 182 |
| Female | 235 |
| Other | 27 |

From this table, you can see that more women than men or people with another gender identity took part in the study. In a grouped frequency distribution, you can group numerical response values and add up the number of responses for each group. You can also convert each of these numbers to percentages.

| Library visits in the past year | Percent |
|---|---|
| 0–4 | 6% |
| 5–8 | 20% |
| 9–12 | 42% |
| 13–16 | 24% |
| 17+ | 8% |
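Grouping numerical responses and converting the counts to percentages can be sketched as below. For illustration, the sketch uses the six library-visit responses from this article's worked examples rather than the full survey, so its percentages differ from the table above; the bin boundaries mirror the table and the function name is hypothetical.

```python
responses = [15, 3, 12, 0, 24, 3]  # six library-visit counts

# (label, lowest value, highest value); None marks an open-ended top group.
bins = [("0–4", 0, 4), ("5–8", 5, 8), ("9–12", 9, 12), ("13–16", 13, 16), ("17+", 17, None)]


def grouped_frequencies(values, bins):
    """Return {label: (count, percent)} for each group of values."""
    table = {}
    for label, low, high in bins:
        count = sum(1 for v in values if v >= low and (high is None or v <= high))
        table[label] = (count, round(100 * count / len(values)))
    return table


for label, (count, percent) in grouped_frequencies(responses, bins).items():
    print(f"{label}: {count} ({percent}%)")
```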

Measures of central tendency estimate the center, or average, of a data set. The mean, median and mode are 3 ways of finding the average.

Here we will demonstrate how to calculate the mean, median, and mode using the first 6 responses of our survey.

The mean, or M, is the most commonly used method for finding the average.

To find the mean, simply add up all response values and divide the sum by the total number of responses. The total number of responses or observations is called N.

| Data set | 15, 3, 12, 0, 24, 3 |
|---|---|
| Sum of all values | 15 + 3 + 12 + 0 + 24 + 3 = 57 |
| Total number of responses | N = 6 |
| Mean | Divide the sum of values by N to find M: 57/6 = 9.5 |

The median is the value that’s exactly in the middle of a data set.

To find the median, order each response value from the smallest to the biggest. Then, the median is the number in the middle. If there are two numbers in the middle, find their mean.

| Ordered data set | 0, 3, 3, 12, 15, 24 |
|---|---|
| Middle numbers | 3, 12 |
| Median | Find the mean of the two middle numbers: (3 + 12)/2 = 7.5 |

The mode is simply the most popular or most frequent response value. A data set can have no mode, one mode, or more than one mode.

To find the mode, order your data set from lowest to highest and find the response that occurs most frequently.

| Ordered data set | 0, 3, 3, 12, 15, 24 |
|---|---|
| Mode | Find the most frequently occurring response: 3 |
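The three measures of central tendency for the six responses can be reproduced with Python's standard `statistics` module. This is a minimal sketch; `multimode` is used because a data set can have more than one mode.

```python
from statistics import mean, median, multimode

responses = [15, 3, 12, 0, 24, 3]  # first 6 survey responses

m = mean(responses)          # sum of values divided by N: 57 / 6
mid = median(responses)      # mean of the two middle values, 3 and 12
modes = multimode(responses) # list of the most frequent value(s)

print(m, mid, modes)
```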

Measures of variability give you a sense of how spread out the response values are. The range, standard deviation and variance each reflect different aspects of spread.

The range gives you an idea of how far apart the most extreme response scores are. To find the range, simply subtract the lowest value from the highest value.

Standard deviation

The standard deviation ( s or SD ) is the average amount of variability in your dataset. It tells you, on average, how far each score lies from the mean. The larger the standard deviation, the more variable the data set is.

There are six steps for finding the standard deviation:

- List each score and find their mean.

- Subtract the mean from each score to get the deviation from the mean.

- Square each of these deviations.

- Add up all of the squared deviations.

- Divide the sum of the squared deviations by N – 1.

- Find the square root of the number you found.

| Raw data | Deviation from mean | Squared deviation |
|---|---|---|
| 15 | 15 – 9.5 = 5.5 | 30.25 |
| 3 | 3 – 9.5 = -6.5 | 42.25 |
| 12 | 12 – 9.5 = 2.5 | 6.25 |
| 0 | 0 – 9.5 = -9.5 | 90.25 |
| 24 | 24 – 9.5 = 14.5 | 210.25 |
| 3 | 3 – 9.5 = -6.5 | 42.25 |
| M = 9.5 | Sum = 0 | Sum of squares = 421.5 |

Step 5: 421.5/5 = 84.3

Step 6: √84.3 = 9.18
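The six steps can be followed line by line in code. The sketch below uses the same six scores; the function name `spread_measures` is introduced here for illustration, and the range is included alongside for comparison.

```python
from math import sqrt


def spread_measures(scores):
    """Return (range, sample variance, sample standard deviation)."""
    mean = sum(scores) / len(scores)                  # step 1: find the mean
    deviations = [s - mean for s in scores]           # step 2: deviation from the mean
    squared = [d ** 2 for d in deviations]            # step 3: square each deviation
    sum_of_squares = sum(squared)                     # step 4: add up squared deviations
    variance = sum_of_squares / (len(scores) - 1)     # step 5: divide by N - 1
    sd = sqrt(variance)                               # step 6: take the square root
    return max(scores) - min(scores), variance, sd


value_range, variance, sd = spread_measures([15, 3, 12, 0, 24, 3])
print(value_range, round(variance, 1), round(sd, 2))
```

Dividing by N − 1 rather than N gives the sample (rather than population) variance, which matches step 5 above.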

The variance is the average of squared deviations from the mean. Variance reflects the degree of spread in the data set. The more spread out the data, the larger the variance is in relation to the mean.

To find the variance, simply square the standard deviation. The symbol for variance is s².


Univariate descriptive statistics focus on only one variable at a time. It’s important to examine data from each variable separately using multiple measures of distribution, central tendency and spread. Programs like SPSS and Excel can be used to easily calculate these.

| Visits to the library | |
|---|---|
| N | 6 |
| Mean | 9.5 |
| Median | 7.5 |
| Mode | 3 |
| Standard deviation | 9.18 |
| Variance | 84.3 |
| Range | 24 |

If you were to only consider the mean as a measure of central tendency, your impression of the “middle” of the data set can be skewed by outliers, unlike the median or mode.

Likewise, because the range is sensitive to outliers, you should also consider the standard deviation and variance to get easily comparable measures of spread.

If you’ve collected data on more than one variable, you can use bivariate or multivariate descriptive statistics to explore whether there are relationships between them.

In bivariate analysis, you simultaneously study the frequency and variability of two variables to see if they vary together. You can also compare the central tendency of the two variables before performing further statistical tests.

Multivariate analysis is the same as bivariate analysis but with more than two variables.

Contingency table

In a contingency table, each cell represents the intersection of two variables. Usually, an independent variable (e.g., gender) appears along the vertical axis and a dependent one appears along the horizontal axis (e.g., activities). You read “across” the table to see how the independent and dependent variables relate to each other.

Number of visits to the library in the past year

| Group | 0–4 | 5–8 | 9–12 | 13–16 | 17+ |
|---|---|---|---|---|---|
| Children | 32 | 68 | 37 | 23 | 22 |
| Adults | 36 | 48 | 43 | 83 | 25 |

Interpreting a contingency table is easier when the raw data is converted to percentages. Percentages make each row comparable to the other by making it seem as if each group had only 100 observations or participants. When creating a percentage-based contingency table, you add the N for each independent variable on the end.

Visits to the library in the past year (Percentages)

| Group | 0–4 | 5–8 | 9–12 | 13–16 | 17+ | N |
|---|---|---|---|---|---|---|
| Children | 18% | 37% | 20% | 13% | 12% | 182 |
| Adults | 15% | 20% | 18% | 35% | 11% | 235 |

From this table, it is clearer that similar proportions of children and adults go to the library over 17 times a year. Additionally, children most commonly went to the library between 5 and 8 times, while for adults, this number was between 13 and 16.
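The conversion from raw counts to row percentages can be sketched as follows, using the counts from the contingency table above. The function name `row_percentages` is introduced here for illustration.

```python
counts = {
    "Children": [32, 68, 37, 23, 22],
    "Adults": [36, 48, 43, 83, 25],
}


def row_percentages(row):
    """Return (percentages rounded to whole numbers, row total N)."""
    total = sum(row)
    return [round(100 * c / total) for c in row], total


for group, row in counts.items():
    percentages, n = row_percentages(row)
    print(group, percentages, n)
```

Because of rounding, the percentages in a row may not add up to exactly 100.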

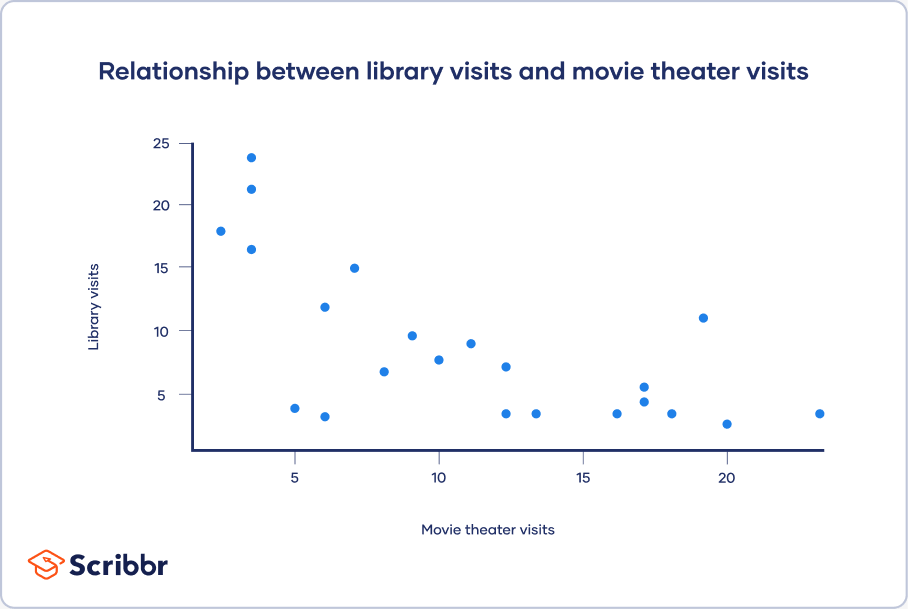

Scatter plots

A scatter plot is a chart that shows you the relationship between two or three variables. It’s a visual representation of the strength of a relationship.

In a scatter plot, you plot one variable along the x-axis and another one along the y-axis. Each data point is represented by a point in the chart.

From your scatter plot, you see that as the number of movies seen at movie theaters increases, the number of visits to the library decreases. Based on your visual assessment of a possible linear relationship, you perform further tests of correlation and regression.
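A follow-up test of correlation could start from Pearson's correlation coefficient. The sketch below is an illustration only: the paired values are hypothetical, not the survey data behind the scatter plot, and the function name `pearson_r` is introduced here.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation: covariance divided by the product of the spreads."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cross / (spread_x * spread_y)


# Hypothetical paired responses: movies seen at theaters and library visits.
movies = [0, 2, 4, 6, 8, 10]
library_visits = [20, 17, 15, 10, 8, 3]

print(round(pearson_r(movies, library_visits), 2))
```

A coefficient close to −1 indicates a strong negative linear relationship, which is the pattern described for the scatter plot.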

If you want to know more about statistics, methodology, or research bias, make sure to check out some of our other articles with explanations and examples.

- Statistical power

- Pearson correlation

- Degrees of freedom

- Statistical significance

Methodology

- Cluster sampling

- Stratified sampling

- Focus group

- Systematic review

- Ethnography

- Double-Barreled Question

Research bias

- Implicit bias

- Publication bias

- Cognitive bias

- Placebo effect

- Pygmalion effect

- Hindsight bias

- Overconfidence bias

Descriptive statistics summarize the characteristics of a data set. Inferential statistics allow you to test a hypothesis or assess whether your data is generalizable to the broader population.

The 3 main types of descriptive statistics concern the frequency distribution, central tendency, and variability of a dataset.

- Distribution refers to the frequencies of different responses.

- Measures of central tendency give you the average for each response.

- Measures of variability show you the spread or dispersion of your dataset.

- Univariate statistics summarize only one variable at a time.

- Bivariate statistics compare two variables.

- Multivariate statistics compare more than two variables.

Cite this Scribbr article


Bhandari, P. (2023, June 21). Descriptive Statistics | Definitions, Types, Examples. Scribbr. Retrieved September 3, 2024, from https://www.scribbr.com/statistics/descriptive-statistics/


Overview. Statistical Papers is a forum for presentation and critical assessment of statistical methods encouraging the discussion of methodological foundations and potential applications. The Journal stresses statistical methods that have broad applications, giving special attention to those relevant to the economic and social sciences.

Research Papers / Publications. Search Publication Type Publication Year Yan Sun, Pratik Chaudhari, Ian J. Barnett, Edgar Dobriban A ... Annals of Statistics (Accepted). Behrad Moniri, Seyed Hamed Hassani, Edgar Dobriban, Evaluating the Performance of Large Language Models via Debates.

Read the latest Research articles in Statistics from Scientific Reports

A paper in Physical Review X presents a method for numerically generating data sequences that are as likely to be observed under a power law as a given observed dataset. Zoe Budrikis Research ...

Introduction. Statistical analysis is necessary for any research project seeking to make quantitative conclusions. The following is a primer for research-based statistical analysis. It is intended to be a high-level overview of appropriate statistical testing, while not diving too deep into any specific methodology.

Journal overview. Established in 1888 and published quarterly in March, June, September, and December, the Journal of the American Statistical Association (JASA) has long been considered the premier journal of statistical science. Articles focus on statistical applications, theory, and methods throughout all disciplines that make use of data ...

Print ISSN: 1687-952X. Journal of Probability and Statistics is an open access journal publishing papers on the theory and application of probability and statistics that consider new methods and approaches to their implementation, or report significant results for the field. As part of Wiley's Forward Series, this journal offers a streamlined ...

A critical note on the exponentiated EWMA chart. Abdul Haq. William H. Woodall. Short Communication 23 August 2024. Hadamard matrices, quaternions, and the Pearson chi-square statistic. Abbas Alhakim. Regular Article 21 August 2024. Coherent indexes for shifted count and semicontinuous models. Marcelo Bourguignon.

Journal of Applied Statistics is a world-leading journal which provides a forum for communication among statisticians and practitioners for judicious application of statistical principles and innovations of statistical methodology motivated by current and important real-world examples ... The Journal publishes original research papers, review ...

The Annals of Statistics publishes research papers of the highest quality reflecting the many facets of contemporary statistics. Primary emphasis is placed on importance and originality, not on formalism. The discipline of statistics has deep roots in both mathematics and in substantive ...

Volume 59 March - December 2018. Issue 4 December 2018. Part 1: Special Issue on Mathematical and Simulation Methods in Statistics and Experimental Design (first 19 articles) Part 2: Regular papers (last 3 articles) Issue 3 September 2018. Issue 2 June 2018.

Several theoretical properties of the distribution are studied in detail including expressions for i... C. Satheesh Kumar and Subha R. Nair. Journal of Statistical Distributions and Applications 2021 8:14. Research Published on: 12 December 2021. Full Text. PDF.

About the journal. Statistics & Probability Letters adopts a novel and highly innovative approach to the publication of research findings in statistics and probability. It features concise articles, rapid publication and broad coverage of the statistics and probability literature. Statistics & Probability Letters is a refereed journal.

Journal of Educational and Behavioral Statistics. Co-sponsored by the ASA and American Educational Research Association, JEBS includes papers that present new methods of analysis, critical reviews of current practice, tutorial presentations of less well-known methods, and novel applications of already-known methods.

The Beginner's Guide to Statistical Analysis | 5 Steps & ...

Using the data from these three rows, we can draw the following descriptive picture. Mentabil scores spanned a range of 50 (from a minimum score of 85 to a maximum score of 135). Speed scores had a range of 16.05 s (from 1.05 s, the fastest quality decision, to 17.10 s, the slowest quality decision).
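The ranges quoted above are simply the maximum minus the minimum. A minimal sketch of that arithmetic, using only the minimum and maximum values stated in the passage (the function and variable names are illustrative):

```python
def value_range(minimum, maximum):
    """Range of a variable: the maximum value minus the minimum value."""
    return maximum - minimum


# Minimum and maximum values as reported in the passage above.
mentabil_range = value_range(85, 135)

# Rounded to two decimals to avoid floating-point noise in the display.
speed_range = round(value_range(1.05, 17.10), 2)

print(mentabil_range)  # Mentabil score range
print(speed_range)     # Speed range in seconds
```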

Bad statistics may lead to bad research, and bad research may lead to unethical practice. Hence, an adequate knowledge of statistics and the appropriate use of statistical tests are important. An appropriate knowledge about the basic statistical methods will go a long way in improving the research designs and producing quality medical research ...

Only a few of the most influential papers in the field of statistics are included on our list. Four of our most cited papers, Duncan (1955), Kramer (1956), and ...

In this paper, we substantiate our premise that statistics is one of the most important disciplines to provide tools and methods to find structure in and to give deeper insight into data, and the most important discipline to analyze and quantify uncertainty. We give an overview of different proposed structures of Data Science and address the impact of statistics on such steps as data ...

Correction to: On efficiency of some restricted estimators in a multivariate regression model. Sévérien Nkurunziza. Correction 23 July 2022 Pages: 365 - 365. Volume 64, issue 1 articles listing for Statistical Papers.

A statistics research paper is an academic document presenting original findings or analyses derived from the data's collection, organization, analysis, and interpretation. It addresses research questions or hypotheses within the field of statistics.

The "Statistical Analyses and Methods in the Published Literature" (SAMPL) guidelines cover basic statistical reporting for research in biomedical journals. While specific to PLOS ONE, these guidelines should be applicable to most research contexts since the journal serves many research disciplines.

Publish Date: September 4, 2024 Format: Report Citation(s): Debbie Mukamal, Andrea N. Cimino, Blyss Cleveland, Emma Dougherty, Jacqueline Lewittes, and Becca Zimmerman, Fatal Peril: Unheard Stories from the IPV-to-Prison Pipeline, Stanford Criminal Justice Center (Summer 2024). Related Organization(s):

Descriptive Statistics | Definitions, Types, Examples
