Center for Teaching

Teaching problem solving.

Tips and Techniques

Communicate

- Have students identify specific problems, difficulties, or confusions. Don’t waste time working through problems that students already understand.

- If students are unable to articulate their concerns, determine where they are having trouble by asking them to identify the specific concepts or principles associated with the problem.

- In a one-on-one tutoring session, ask the student to work his/her problem out loud. This slows down the thinking process, making it more accurate and allowing you to assess the student's understanding.

- When working with larger groups you can ask students to provide a written “two-column solution.” Have students write up their solution to a problem by putting all their calculations in one column and all of their reasoning (in complete sentences) in the other column. This helps them to think critically about their own problem solving and helps you to more easily identify where they may be having problems. Examples: Two-Column Solution (Math); Two-Column Solution (Physics).

Encourage Independence

- Model the problem solving process rather than just giving students the answer. As you work through the problem, consider how a novice might struggle with the concepts and make your thinking clear.

- Have students work through problems on their own. Ask directing questions or give helpful suggestions, but provide only minimal assistance and only when needed to overcome obstacles.

- Don’t fear group work! Students can frequently help each other, and talking about a problem helps them think more critically about the steps needed to solve the problem. Additionally, group work helps students realize that problems often have multiple solution strategies, some of which might be more effective than others.

Be sensitive

- Frequently, when working problems, students are unsure of themselves. This lack of confidence may hamper their learning. It is important to recognize this when students come to us for help, and to give each student some feeling of mastery. Do this by providing positive reinforcement to let students know when they have mastered a new concept or skill.

Encourage Thoroughness and Patience

- Try to communicate that the process is more important than the answer so that the student learns that it is OK to not have an instant solution. This is learned through your acceptance of his/her pace of doing things, through your refusal to let anxiety pressure you into giving the right answer, and through your example of problem solving through a step-by-step process.

Expert vs. Novice Problem Solvers

Experts (teachers) in a particular field are often so fluent in solving problems from that field that they can find it difficult to articulate the problem solving principles and strategies they use to novices (students) in their field because these principles and strategies are second nature to the expert. To teach students problem solving skills, a teacher should be aware of principles and strategies of good problem solving in his or her discipline.

The mathematician George Polya captured the problem solving principles and strategies he used in his discipline in the book How to Solve It: A New Aspect of Mathematical Method (Princeton University Press, 1957). The book includes a summary of Polya’s problem solving heuristic as well as advice on the teaching of problem solving.

Teaching problem solving

Strategies for teaching problem solving apply across disciplines and instructional contexts. First, introduce the problem and explain how people in your discipline generally make sense of the given information. Then, explain how to apply these approaches to solve the problem.

Introducing the problem

Explaining how people in your discipline understand and interpret these types of problems can help students develop the skills they need to understand the problem (and find a solution). After introducing how you would go about solving a problem, you could then ask students to:

- frame the problem in their own words

- define key terms and concepts

- determine statements that accurately represent the givens of a problem

- identify analogous problems

- determine what information is needed to solve the problem

Working on solutions

In the solution phase, one develops and then implements a coherent plan for solving the problem. As you help students with this phase, you might ask them to:

- identify the general model or procedure they have in mind for solving the problem

- set sub-goals for solving the problem

- identify necessary operations and steps

- draw conclusions

- carry out necessary operations

You can help students tackle a problem effectively by asking them to:

- systematically explain each step and its rationale

- explain how they would approach solving the problem

- help you solve the problem by posing questions at key points in the process

- work together in small groups (3 to 5 students) to solve the problem and then have the solution presented to the rest of the class (either by you or by a student in the group)

In all cases, the more you get the students to articulate their own understandings of the problem and potential solutions, the more you can help them develop their expertise in approaching problems in your discipline.

Instruction followed by problem solving.

- In the Peer-Led Team Learning (PLTL) approach, the instruction phase takes place in the traditional classroom, often in the form of lecture, and the problem-solving phase takes place as students work in collaborative groups (typically 6 to 10 students) facilitated by a trained undergraduate peer leader for 90 to 120 minutes each week.

- This approach provides facilitated help to students in their courses, improves students’ problem-solving skills, enhances students’ communication abilities, and provides an active-learning experience for students.

- The peer leader does not help solve the problems with the students in the group but guides them to discuss their reasoning by asking probing questions and to participate equally by using different collaborative learning strategies (such as round robin, scribe, and pairs). Students decide as a group whether the answer is correct, which encourages them to consider the problem more deeply.

- PLTL is used in many STEM undergraduate disciplines including biology, chemistry, mathematics, physics, psychology, and computer science, and in all types of institutions. It has been used at all levels of undergraduate courses. If done well, PLTL can improve course grades, course and series retention, and standardized and course exam performance, and it can lower DWF rates (the share of students who earn a D, withdraw, or fail).

- PLTL can also benefit peer leaders, who self-report greater content learning, improved study skills, improved interpersonal skills, increased leadership skills, and greater confidence.

- In the constructivist framework, teaching is not the transmission of knowledge from the instructor to the student. The instructor is a facilitator or guide, giving structure to the learning process.

- Students construct meaning (e.g., develop concepts and models) through active involvement with the material and by making sense of their experiences.

- Social constructivism assumes that students’ understanding and sense-making are developed jointly in collaboration with other students.

- The peer leader is considered to be an effective guide because they are in the Zone of Proximal Development (ZPD) of the students in their PLTL group.

- The PLTL program is integral to the course and integrated with other course components.

Peer leaders are trained in:

- how to effectively create a community of practice within their group such that students will make joint decisions while solving the problems, discuss multiple approaches to solve the problems, and practice professional social and communication skills;

- questioning strategies that support students in deepening their discussion to include explanations for their ideas and problem-solving processes;

- how students learn, based on psychology and education research, and how to apply this information while facilitating their group.

The problems used in PLTL sessions should:

- require students to work collaboratively to solve problems;

- encourage students to engage deeply with the content (i.e., include prompts asking them to explain their reasoning or process), disciplinary vocabulary (i.e., include prompts asking them to define terms in their own words), and essential skills;

- become more complex throughout the problem set while ensuring that the students are always within their Zone of Proximal Development.

- Organizational arrangements promote active learning via focus on group size, room space, length of session, and low noise level.

- The institution and department encourage and support innovative teaching.

- In worked examples, the instruction takes the form of example problems. These problems include a problem statement and a step-by-step procedure for solving the problem, intended to show how an expert might solve this type of problem. After this explicit instruction, students complete practice problems like the worked examples.

- Worked examples plus practice problems have been found to be beneficial when compared to instruction followed by problem solving alone. This benefit is observed for novices learning to solve complex problems but is lost as learners become more expert in the domain and is not observed for simple problems.

- Worked examples provide guidance that can help students learn to do analogous problems (near transfer) and may have similar benefits to productive failure and scaffolded guided inquiry for near transfer.

- Worked examples help students in early-to-intermediate stages of cognitive skill development as they are learning to abstract general principles for solving a given type of problem.

- Comparing worked examples that focus on different types of problems can also help students identify deep features and abstract general principles that help them know when to use a given problem solving approach.

- Problem-solving practice that incorporates strategies like retrieval and interleaving becomes more effective as students seek to become faster and more accurate.

- Integrating sources of information (e.g., images integrated with explanatory text or auditory explanations),

- Including visual cues to help students readily follow the explanation,

- Fostering identification of subgoals within a problem, either by labeling or visually separating chunks of a problem solution corresponding to a subgoal.

- Lessons should include at least two worked examples for a type of problem.

- Worked examples should be accompanied by practice problems and should be interspersed throughout a lesson rather than combined in one section of the lesson.

- Different problem types should use similar cover stories to emphasize deep problem features.

- Relate solutions to abstract principles

- Compare different examples and self-explain key similarities and differences.

- If multiple worked examples for a given type of problem are used, it can be beneficial to remove guidance in stages (also known as fading). Backwards fading (leaving blanks later in the problems first, then earlier and earlier) has been found to be more beneficial than forward fading.

Classroom Q&A

With Larry Ferlazzo

In this EdWeek blog, an experiment in knowledge-gathering, Ferlazzo will address readers’ questions on classroom management, ELL instruction, lesson planning, and other issues facing teachers. Send your questions to [email protected]. Read more from this blog.

Eight Instructional Strategies for Promoting Critical Thinking

(This is the first post in a three-part series.)

The new question-of-the-week is:

What is critical thinking and how can we integrate it into the classroom?

This three-part series will explore what critical thinking is, whether it can be specifically taught, and, if so, how teachers can do so in their classrooms.

Today’s guests are Dara Laws Savage, Patrick Brown, Meg Riordan, Ph.D., and Dr. PJ Caposey. Dara, Patrick, and Meg were also guests on my 10-minute BAM! Radio Show. You can also find a list of, and links to, previous shows here.

You might also be interested in The Best Resources On Teaching & Learning Critical Thinking In The Classroom.

Current Events

Dara Laws Savage is an English teacher at the Early College High School at Delaware State University, where she serves as a teacher and instructional coach and lead mentor. Dara has been teaching for 25 years (career preparation, English, photography, yearbook, newspaper, and graphic design) and has presented nationally on project-based learning and technology integration:

There is so much going on right now and there is an overload of information for us to process. Did you ever stop to think how our students are processing current events? They see news feeds, hear news reports, and scan photos and posts, but are they truly thinking about what they are hearing and seeing?

I tell my students that my job is not to give them answers but to teach them how to think about what they read and hear. So what is critical thinking and how can we integrate it into the classroom? There are just as many definitions of critical thinking as there are people trying to define it. However, the Critical Thinking Consortium focuses on the tools to create a thinking-based classroom rather than a definition: “Shape the climate to support thinking, create opportunities for thinking, build capacity to think, provide guidance to inform thinking.” Using these four criteria and pairing them with current events, teachers can easily create learning spaces that thrive on thinking and keep students engaged.

One successful technique I use is the FIRE Write. Students are given a quote, a paragraph, an excerpt, or a photo from the headlines. Students are asked to Focus and respond to the selection for three minutes. Next, students are asked to Identify a phrase or section of the photo and write for two minutes. Third, students are asked to Reframe their response around a specific word, phrase, or section within their previous selection. Finally, students Exchange their thoughts with a classmate. Within the exchange, students also talk about how the selection connects to what we are covering in class.

There was a controversial Pepsi ad in 2017 involving Kendall Jenner and a protest with a police presence. The imagery in the ad was strikingly similar to a photo that went viral of a young woman standing opposite a police line. Using that image from a current event engaged my students and gave them the opportunity to think critically about events of the time.

Here are the two photos and a student response:

F - Focus on both photos and respond for three minutes

In the first picture, you see a strong and courageous black female, bravely standing in front of two officers in protest. She is risking her life to do so. Iesha Evans is simply proving to the world she does NOT mean less because she is black … and yet officers are there to stop her. She did not step down. In the picture below, you see Kendall Jenner handing a police officer a Pepsi. Maybe this wouldn’t be a big deal, except this was Pepsi’s weak, pathetic, and outrageous excuse of a commercial that belittles the whole movement of people fighting for their lives.

I - Identify a word or phrase, underline it, then write about it for two minutes

A white, privileged female in place of a fighting black woman was asking for trouble. A struggle we are continuously fighting every day, and they make a mockery of it. “I know what will work! Here Mr. Police Officer! Drink some Pepsi!” As if. Pepsi made a fool of themselves, and now their already dwindling fan base continues to ever shrink smaller.

R - Reframe your thoughts by choosing a different word, then write about that for one minute

You don’t know privilege until it’s gone. You don’t know privilege while it’s there—but you can and will be made accountable and aware. Don’t use it for evil. You are not stupid. Use it to do something. Kendall could’ve NOT done the commercial. Kendall could’ve released another commercial standing behind a black woman. Anything!

E - Exchange. Remember to discuss how this connects to our school song project and our previous discussions.

This connects two ways - 1) We want to convey a strong message. Be powerful. Show who we are. And Pepsi definitely tried. … Which leads to the second connection. 2) Not mess up and offend anyone, as had the one alma mater had been linked to black minstrels. We want to be amazing, but we have to be smart and careful and make sure we include everyone who goes to our school and everyone who may go to our school.

As a final step, students read and annotate the full article and compare it to their initial response.

Using current events and critical-thinking strategies like FIRE writing helps create a learning space where thinking is the goal rather than a score on a multiple-choice assessment. Critical-thinking skills can cross over to any of students’ other courses and into life outside the classroom. After all, we as teachers want to help the whole student be successful, and critical thinking is an important part of navigating life after they leave our classrooms.

‘Explore-Before-Explain’

Patrick Brown is the executive director of STEM and CTE for the Fort Zumwalt school district in Missouri and an experienced educator and author:

Planning for critical thinking focuses on teaching the most crucial science concepts, practices, and logical-thinking skills as well as the best use of instructional time. One way to ensure that lessons maintain a focus on critical thinking is to focus on the instructional sequence used to teach.

Explore-before-explain teaching is all about promoting critical thinking for learners to better prepare students for the reality of their world. What having an explore-before-explain mindset means is that in our planning, we prioritize giving students firsthand experiences with data, allow students to construct evidence-based claims that focus on conceptual understanding, and challenge students to discuss and think about the why behind phenomena.

Just think of the critical thinking that has to occur for students to construct a scientific claim. 1) They need the opportunity to collect data, analyze it, and determine how to make sense of what the data may mean. 2) With data in hand, students can begin thinking about the validity and reliability of their experience and information collected. 3) They can consider what differences, if any, they might have if they completed the investigation again. 4) They can scrutinize outlying data points, for they may be an artifact of a true difference that merits further exploration or of a misstep in the procedure, measuring device, or measurement. All of these intellectual activities help them form more robust understanding and are evidence of their critical thinking.

In explore-before-explain teaching, all of these hard critical-thinking tasks come before teacher explanations of content. Whether we use discovery experiences, problem-based learning, or inquiry-based activities, strategies that are geared toward helping students construct understanding promote critical thinking because students learn content by doing the practices valued in the field to generate knowledge.

An Issue of Equity

Meg Riordan, Ph.D., is the chief learning officer at The Possible Project, an out-of-school program that collaborates with youth to build entrepreneurial skills and mindsets and provides pathways to careers and long-term economic prosperity. She has been in the field of education for over 25 years as a middle and high school teacher, school coach, college professor, regional director of N.Y.C. Outward Bound Schools, and director of external research with EL Education:

Although critical thinking often defies straightforward definition, most in the education field agree it consists of several components: reasoning, problem-solving, and decisionmaking, plus analysis and evaluation of information, such that multiple sides of an issue can be explored. It also includes dispositions and “the willingness to apply critical-thinking principles, rather than fall back on existing unexamined beliefs, or simply believe what you’re told by authority figures.”

Despite variation in definitions, critical thinking is nonetheless promoted as an essential outcome of students’ learning—we want to see students and adults demonstrate it across all fields, professions, and in their personal lives. Yet there is simultaneously a rationing of opportunities in schools for students of color, students from under-resourced communities, and other historically marginalized groups to deeply learn and practice critical thinking.

For example, many of our most underserved students often spend class time filling out worksheets, promoting high compliance but low engagement, inquiry, critical thinking, or creation of new ideas. At a time in our world when college and careers are critical for participation in society and the global, knowledge-based economy, far too many students struggle within classrooms and schools that reinforce low expectations and inequity.

If educators aim to prepare all students for an ever-evolving marketplace and develop skills that will be valued no matter what tomorrow’s jobs are, then we must move critical thinking to the forefront of classroom experiences. And educators must design learning to cultivate it.

So, what does that really look like?

Unpack and define critical thinking

To understand critical thinking, educators need to first unpack and define its components. What exactly are we looking for when we speak about reasoning or exploring multiple perspectives on an issue? How does problem-solving show up in English, math, science, art, or other disciplines—and how is it assessed? At Two Rivers, an EL Education school, the faculty identified five constructs of critical thinking, defined each, and created rubrics to generate a shared picture of quality for teachers and students. The rubrics were then adapted across grade levels to indicate students’ learning progressions.

At Avenues World School, critical thinking is one of the Avenues World Elements and is an enduring outcome embedded in students’ early experiences through 12th grade. For instance, a kindergarten student may be expected to “identify cause and effect in familiar contexts,” while an 8th grader should demonstrate the ability to “seek out sufficient evidence before accepting a claim as true,” “identify bias in claims and evidence,” and “reconsider strongly held points of view in light of new evidence.”

When faculty and students embrace a common vision of what critical thinking looks and sounds like and how it is assessed, educators can then explicitly design learning experiences that call for students to employ critical-thinking skills. This kind of work must occur across all schools and programs, especially those serving large numbers of students of color. As Linda Darling-Hammond asserts, “Schools that serve large numbers of students of color are least likely to offer the kind of curriculum needed to ... help students attain the [critical-thinking] skills needed in a knowledge work economy.”

So, what can it look like to create those kinds of learning experiences?

Designing experiences for critical thinking

After defining a shared understanding of “what” critical thinking is and “how” it shows up across multiple disciplines and grade levels, it is essential to create learning experiences that impel students to cultivate, practice, and apply these skills. There are several levers that offer pathways for teachers to promote critical thinking in lessons:

1. Choose Compelling Topics: Keep it relevant

A key Common Core State Standard asks for students to “write arguments to support claims in an analysis of substantive topics or texts using valid reasoning and relevant and sufficient evidence.” That might not sound exciting or culturally relevant. But a learning experience designed for a 12th grade humanities class engaged learners in a compelling topic, policing in America, to analyze and evaluate multiple texts (including primary sources) and share the reasoning for their perspectives through discussion and writing. Students grappled with ideas and their beliefs and employed deep critical-thinking skills to develop arguments for their claims. Embedding critical-thinking skills in curriculum that students care about and connect with can ignite powerful learning experiences.

2. Make Local Connections: Keep it real

At The Possible Project, an out-of-school-time program designed to promote entrepreneurial skills and mindsets, students in a recent summer online program (modified from in-person due to COVID-19) explored the impact of COVID-19 on their communities and local BIPOC-owned businesses. They learned interviewing skills through a partnership with Everyday Boston, conducted virtual interviews with entrepreneurs, evaluated information from their interviews and local data, and examined their previously held beliefs. They created blog posts and videos to reflect on their learning and consider how their mindsets had changed as a result of the experience. In this way, we can design powerful community-based learning and invite students into productive struggle with multiple perspectives.

3. Create Authentic Projects: Keep it rigorous

At Big Picture Learning schools, students engage in internship-based learning experiences as a central part of their schooling. Their school-based adviser and internship-based mentor support them in developing real-world projects that promote deeper learning and critical-thinking skills. Such authentic experiences teach “young people to be thinkers, to be curious, to get from curiosity to creation … and it helps students design a learning experience that answers their questions, [providing an] opportunity to communicate it to a larger audience—a major indicator of postsecondary success.” Even in a remote environment, we can design projects that ask more of students than rote memorization and that spark critical thinking.

Our call to action is this: As educators, we need to make opportunities for critical thinking available not only to the affluent or those fortunate enough to be placed in advanced courses. The tools are available; let’s use them. Let’s interrogate our current curriculum and design learning experiences that engage all students in real, relevant, and rigorous experiences that require critical thinking and prepare them for promising postsecondary pathways.

Critical Thinking & Student Engagement

Dr. PJ Caposey is an award-winning educator, keynote speaker, consultant, and author of seven books who currently serves as the superintendent of schools for the award-winning Meridian CUSD 223 in northwest Illinois. You can find PJ on most social-media platforms as MCUSDSupe:

When I start my keynote on student engagement, I invite two people up on stage and give them each five paper balls to shoot at a garbage can also conveniently placed on stage. Contestant One shoots their shot, and the audience gives approval. Four out of 5 is a heckuva score. Then just before Contestant Two shoots, I blindfold them and start moving the garbage can back and forth. I usually try to ensure that they can at least make one of their shots. Nobody is successful in this unfair environment.

I thank them and send them back to their seats and then explain that this little activity was akin to student engagement. While we all know we want student engagement, we are shooting at different targets. More importantly, for teachers, it is near impossible for them to hit a target that is moving and that they cannot see.

Within the world of education and particularly as educational leaders, we have failed to simplify what student engagement looks like, and it is impossible to define or articulate what student engagement looks like if we cannot clearly articulate what critical thinking is and looks like in a classroom. Because, simply, without critical thought, there is no engagement.

The good news here is that critical thought has been defined and placed into taxonomies for decades already. This is not something new and not something that needs to be redefined. I am a Bloom’s person, but there is nothing wrong with DOK or some of the other taxonomies, either. To be precise, I am a huge fan of Daggett’s Rigor and Relevance Framework. I have used that as a core element of my practice for years, and it has shaped who I am as an instructional leader.

So, in order to explain critical thought, a teacher or a leader must familiarize themselves with these tried and true taxonomies. Easy, right? Yes, sort of. The issue is not understanding what critical thought is; it is the ability to integrate it into the classrooms. In order to do so, there are four key steps every educator must take.

PLANNING

- Integrating critical thought/rigor into a lesson does not happen by chance; it happens by design. Planning for critical thought and engagement is much different from planning for a traditional lesson. In order to plan for kids to think critically, you have to provide a base of knowledge and excellent prompts to allow them to explore their own thinking in order to analyze, evaluate, or synthesize information.

- SIDE NOTE – Bloom’s verbs are a great way to start when writing objectives, but true planning will take you deeper than this.

QUESTIONING

- If the questions and prompts given in a classroom have correct answers or if the teacher ends up answering their own questions, the lesson will lack critical thought and rigor.

- Script five questions forcing higher-order thought prior to every lesson. Experienced teachers may not feel they need this, but it helps to create an effective habit.

ASSESSMENT

- If lessons are rigorous and assessments are not, students will do well on their assessments, and that may not be an accurate representation of the knowledge and skills they have mastered. If lessons are easy and assessments are rigorous, the exact opposite will happen. When deciding to increase critical thought, it must happen in all three phases of the game: planning, instruction, and assessment.

TALK TIME / CONTROL

- To increase rigor, the teacher must DO LESS. This feels counterintuitive but is accurate. Rigorous lessons involving tons of critical thought must allow for students to work on their own, collaborate with peers, and connect their ideas. This cannot happen in a silent room except for the teacher talking. In order to increase rigor, decrease talk time and become comfortable with less control. Asking questions and giving prompts that lead to no true correct answer also means less control. This is a tough ask for some teachers. Explained differently, if you assign one assignment and get 30 very similar products, you have most likely assigned a low-rigor recipe. If you assign one assignment and get multiple varied products, then the students have had a chance to think deeply, and you have successfully integrated critical thought into your classroom.

Thanks to Dara, Patrick, Meg, and PJ for their contributions!



The opinions expressed in Classroom Q&A With Larry Ferlazzo are strictly those of the author(s) and do not reflect the opinions or endorsement of Editorial Projects in Education, or any of its publications.


Overview of the Problem-Solving Mental Process

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Rachel Goldman, PhD FTOS, is a licensed psychologist, clinical assistant professor, speaker, wellness expert specializing in eating behaviors, stress management, and health behavior change.


- Identify the Problem

- Define the Problem

- Form a Strategy

- Organize Information

- Allocate Resources

- Monitor Progress

- Evaluate the Results

- Frequently Asked Questions

Problem-solving is a mental process that involves discovering, analyzing, and solving problems. The ultimate goal of problem-solving is to overcome obstacles and find a solution that best resolves the issue.

The best strategy for solving a problem depends largely on the unique situation. In some cases, people are better off learning everything they can about the issue and then using factual knowledge to come up with a solution. In other instances, creativity and insight are the best options.

It is not necessary to follow problem-solving steps sequentially. It is common to skip steps or even go back through steps multiple times until the desired solution is reached.

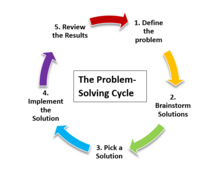

In order to correctly solve a problem, it is often important to follow a series of steps. Researchers sometimes refer to this as the problem-solving cycle. While this cycle is portrayed sequentially, people rarely follow a rigid series of steps to find a solution.

The following steps include developing strategies and organizing knowledge.

1. Identifying the Problem

While it may seem like an obvious step, identifying the problem is not always as simple as it sounds. In some cases, people might mistakenly identify the wrong source of a problem, which will make attempts to solve it inefficient or even useless.

Some strategies that you might use to figure out the source of a problem include:

- Asking questions about the problem

- Breaking the problem down into smaller pieces

- Looking at the problem from different perspectives

- Conducting research to figure out what relationships exist between different variables

2. Defining the Problem

After the problem has been identified, it is important to fully define the problem so that it can be solved. You can define a problem by operationally defining each aspect of the problem and setting goals for what aspects of the problem you will address.

At this point, you should focus on figuring out which aspects of the problem are facts and which are opinions. State the problem clearly and identify the scope of the solution.

3. Forming a Strategy

After the problem has been identified, it is time to start brainstorming potential solutions. This step usually involves generating as many ideas as possible without judging their quality. Once several possibilities have been generated, they can be evaluated and narrowed down.

The next step is to develop a strategy to solve the problem. The approach used will vary depending upon the situation and the individual's unique preferences. Common problem-solving strategies include heuristics and algorithms.

- Heuristics are mental shortcuts that are often based on solutions that have worked in the past. They can work well if the problem is similar to something you have encountered before and are often the best choice if you need a fast solution.

- Algorithms are step-by-step strategies that are guaranteed to produce a correct result. While this approach is great for accuracy, it can also consume time and resources.

Heuristics are often best used when time is of the essence, while algorithms are a better choice when a decision needs to be as accurate as possible.
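To make the contrast concrete, here is a minimal sketch built around a coin-counting task. The task and the function names `greedy_coins` and `exhaustive_coins` are illustrative assumptions, not something taken from the article. The greedy rule stands in for a heuristic (a fast shortcut that usually works), while the exhaustive search stands in for an algorithm in the sense used above (it always finds the fewest coins, at the cost of more work).

```python
from functools import lru_cache


def greedy_coins(amount, coins):
    """Heuristic: always grab the largest coin that still fits."""
    used = []
    for coin in sorted(coins, reverse=True):
        while amount >= coin:
            amount -= coin
            used.append(coin)
    # If anything is left over, the shortcut failed to make exact change.
    return used if amount == 0 else None


def exhaustive_coins(amount, coins):
    """Algorithm: consider every way to reach the amount and keep the shortest."""
    @lru_cache(maxsize=None)
    def best(remaining):
        if remaining == 0:
            return ()
        options = []
        for coin in coins:
            if coin <= remaining:
                rest = best(remaining - coin)
                if rest is not None:
                    options.append((coin,) + rest)
        return min(options, key=len) if options else None

    result = best(amount)
    return list(result) if result is not None else None


# Hypothetical coin set chosen so the shortcut and the full search disagree.
quick_answer = greedy_coins(6, [1, 3, 4])
careful_answer = exhaustive_coins(6, [1, 3, 4])
```

With this made-up coin set, the shortcut settles for three coins while the full search finds a two-coin answer, which mirrors the speed-versus-accuracy trade-off described above.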

4. Organizing Information

Before coming up with a solution, you need to first organize the available information. What do you know about the problem? What do you not know? The more information that is available the better prepared you will be to come up with an accurate solution.

When approaching a problem, it is important to make sure that you have all the data you need. Making a decision without adequate information can lead to biased or inaccurate results.

5. Allocating Resources

Of course, we don't always have unlimited money, time, and other resources to solve a problem. Before you begin to solve a problem, you need to determine how high priority it is.

If it is an important problem, it is probably worth allocating more resources to solving it. If, however, it is a fairly unimportant problem, then you do not want to spend too much of your available resources on coming up with a solution.

At this stage, it is important to consider all of the factors that might affect the problem at hand. This includes looking at the available resources, deadlines that need to be met, and any possible risks involved in each solution. After careful evaluation, a decision can be made about which solution to pursue.

6. Monitoring Progress

After selecting a problem-solving strategy, it is time to put the plan into action and see if it works. This step might involve trying out different solutions to see which one is the most effective.

It is also important to monitor the situation after implementing a solution to ensure that the problem has been solved and that no new problems have arisen as a result of the proposed solution.

Effective problem-solvers tend to monitor their progress as they work towards a solution. If they are not making good progress toward reaching their goal, they will reevaluate their approach or look for new strategies.

7. Evaluating the Results

After a solution has been reached, it is important to evaluate the results to determine if it is the best possible solution to the problem. This evaluation might be immediate, such as checking the results of a math problem to ensure the answer is correct, or it can be delayed, such as evaluating the success of a therapy program after several months of treatment.

Once a problem has been solved, it is important to take some time to reflect on the process that was used and evaluate the results. This will help you to improve your problem-solving skills and become more efficient at solving future problems.

A Word From Verywell

It is important to remember that there are many different problem-solving processes with different steps, and this is just one example. Problem-solving in real-world situations requires a great deal of resourcefulness, flexibility, resilience, and continuous interaction with the environment.


You can become a better problem solver by:

- Practicing brainstorming and coming up with multiple potential solutions to problems

- Being open-minded and considering all possible options before making a decision

- Breaking down problems into smaller, more manageable pieces

- Asking for help when needed

- Researching different problem-solving techniques and trying out new ones

- Learning from mistakes and using them as opportunities to grow

It's important to communicate openly and honestly with your partner about what's going on. Try to see things from their perspective as well as your own. Work together to find a resolution that works for both of you. Be willing to compromise and accept that there may not be a perfect solution.

Take breaks if things are getting too heated, and come back to the problem when you feel calm and collected. Don't try to fix every problem on your own—consider asking a therapist or counselor for help and insight.

If you've tried everything and there doesn't seem to be a way to fix the problem, you may have to learn to accept it. This can be difficult, but try to focus on the positive aspects of your life and remember that every situation is temporary. Don't dwell on what's going wrong—instead, think about what's going right. Find support by talking to friends or family. Seek professional help if you're having trouble coping.



3 Simple Strategies to Improve Students’ Problem-Solving Skills

These strategies are designed to make sure students have a good understanding of problems before attempting to solve them.

Research provides a striking revelation about problem solvers. The best problem solvers approach problems much differently than novices. For instance, one meta-study showed that when experts evaluate graphs, they tend to spend less time on tasks and answer choices and more time on evaluating the axes’ labels and the relationships of variables within the graphs. In other words, they spend more time up front making sense of the data before moving to addressing the task.

While slower in solving problems, experts use this additional up-front time to more efficiently and effectively solve the problem. In one study, researchers found that experts were much better than novices at “information extraction,” or pulling out the information they would need later to solve the problem. This was because they started the problem-solving process by evaluating specific assumptions within problems, asking predictive questions, and then comparing and contrasting their predictions with results. For example, expert problem solvers look at the problem context and ask a number of questions:

- What do we know about the context of the problem?

- What assumptions are underlying the problem? What’s the story here?

- What qualitative and quantitative information is pertinent?

- What might the problem context be telling us? What questions arise from the information we are reading or reviewing?

- What are important trends and patterns?

As such, expert problem solvers don’t jump to the presented problem or rush to solutions. They invest the time necessary to make sense of the problem.

Now, think about your own students: Do they immediately jump to the question, or do they take time to understand the problem context? Do they identify the relevant variables, look for patterns, and then focus on the specific tasks?

If your students are struggling to develop the habit of sense-making in a problem-solving context, this is a perfect time to incorporate a few short and sharp strategies to support them.

3 Ways to Improve Student Problem-Solving

1. Slow reveal graphs: The brilliant strategy crafted by K–8 math specialist Jenna Laib and her colleagues provides teachers with an opportunity to gradually display complex graphical information and build students’ questioning, sense-making, and evaluating predictions.

For instance, in one third-grade class, students are given a bar graph without any labels or identifying information except for bars emerging from a horizontal line on the bottom of the slide. Over time, students learn about the categories on the x-axis (types of animals) and the quantities specified on the y-axis (number of baby teeth).

The graphs and the topics range in complexity from studying the standard deviation of temperatures in Antarctica to the use of scatterplots to compare working hours across OECD (Organization for Economic Cooperation and Development) countries. The website offers a number of graphs on Google Slides and suggests questions that teachers may ask students. Furthermore, this site allows teachers to search by type of graph (e.g., scatterplot) or topic (e.g., social justice).

2. Three reads: The three-reads strategy tasks students with evaluating a word problem in three different ways. First, students encounter a problem without having access to the question—for instance, “There are 20 kangaroos on the grassland. Three hop away.” Students are expected to discuss the context of the problem without emphasizing the quantities. For instance, a student may say, “We know that there are a total amount of kangaroos, and the total shrinks because some kangaroos hop away.”

Next, students discuss the important quantities and what questions may be generated. Finally, students receive and address the actual problem. Here they can both evaluate how close their predicted questions were from the actual questions and solve the actual problem.

To get started, consider using the numberless word problems on educator Brian Bushart’s site. For those teaching high school, consider using your own textbook word problems for this activity. Simply create three slides to present to students. The first slide would include context (e.g., “A salesman sold twice as many pears in the afternoon as in the morning”). The second slide would include quantities (e.g., “He sold 360 kilograms of pears”), and the third slide would include the actual question (e.g., “How many kilograms did he sell in the morning and how many in the afternoon?”). One additional suggestion for teams to consider is to have students solve the questions they generated before revealing the actual question.
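For reference, the pear problem on the three sample slides can be worked as follows. This is a sketch that assumes the 360 kilograms on the second slide is the total for the whole day; the symbol $m$ (kilograms sold in the morning) is introduced here for illustration only.

$$
\begin{aligned}
m + 2m &= 360 \\
3m &= 360 \\
m &= 120 \quad \text{(morning)} \\
2m &= 240 \quad \text{(afternoon)}
\end{aligned}
$$

Under that assumption, the context slide supplies the relationship ($2m$), the quantity slide supplies the total, and the question slide asks for both parts.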

3. Three-Act Tasks: Originally created by Dan Meyer, three-act tasks follow the three acts of a story. The first act is typically called the “setup,” followed by the “confrontation” and then the “resolution.”

This storyline process can be used in mathematics in which students encounter a contextual problem (e.g., a pool is being filled with soda). Here students work to identify the important aspects of the problem. During the second act, students build knowledge and skill to solve the problem (e.g., they learn how to calculate the volume of particular spaces). Finally, students solve the problem and evaluate their answers (e.g., how close were their calculations to the actual specifications of the pool and the amount of liquid that filled it).

Often, teachers add a fourth act (i.e., “the sequel”), in which students encounter a similar problem but in a different context (e.g., they have to estimate the volume of a lava lamp). There are also a number of elementary examples that have been developed by math teachers including GFletchy, which offers pre-kindergarten to middle school activities including counting squares, peas in a pod, and shark bait.

Students need to learn how to slow down and think through a problem context. The aforementioned strategies are quick ways teachers can begin to support students in developing the habits needed to effectively and efficiently tackle complex problem-solving.


Page 6: Schema Instruction


How does this practice align?

High-Leverage Practices

- HLP14: Teach cognitive and metacognitive strategies to support learning and independence

CCSSM: Standards for Mathematical Practice

- MP7: Look for and make use of structure.

Another effective strategy for helping students improve their mathematics performance is related to solving word problems. More specifically, it involves teaching students how to identify word problem types based on a given problem’s underlying structure, or schema. Before learning about this strategy, however, it is helpful to understand why many students struggle with word problems in the first place.

Difficulty with Word Problems

Most students, especially those with mathematics difficulties and disabilities, have trouble solving word problems. This is in large part because word problems require students to:

- Read and understand the text, including mathematics vocabulary

- Be able to identify and separate relevant information from irrelevant information

- Represent the problem correctly

- Choose an appropriate strategy for solving the problem

- Perform the computational procedures

- Check the answer to ensure that it makes sense (Adapted from Stevens & Powell, 2016; Jitendra et al., 2015; Jitendra et al., 2013)

Students who experience difficulty with any of the steps listed above, such as students who struggle with mathematics, will likely arrive at an incorrect answer.

Research Shows

- Students with mathematical difficulties and disabilities struggle more than their peers when solving word problems. (Stevens & Powell, 2016; Jitendra et al., 2015; Fuchs et al., 2010)

- Schema instruction—explicit instruction in identifying word problem types, representing them correctly, and using an effective method for solving them—has been found to be effective among students with mathematical difficulties and disabilities. (Jitendra et al., 2016; Jitendra et al., 2015; Jitendra et al., 2009; Montague & Dietz, 2009; Fuchs et al., 2010)

- Teaching students how to solve word problems by identifying word problem types is more effective than teaching them only to identify key words (e.g., “altogether,” “difference”). (Jitendra, Griffin, Deatline-Buchman, & Sczesniak, 2007)

Word Problem Structures

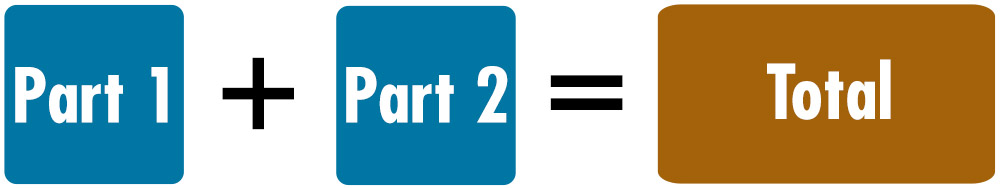

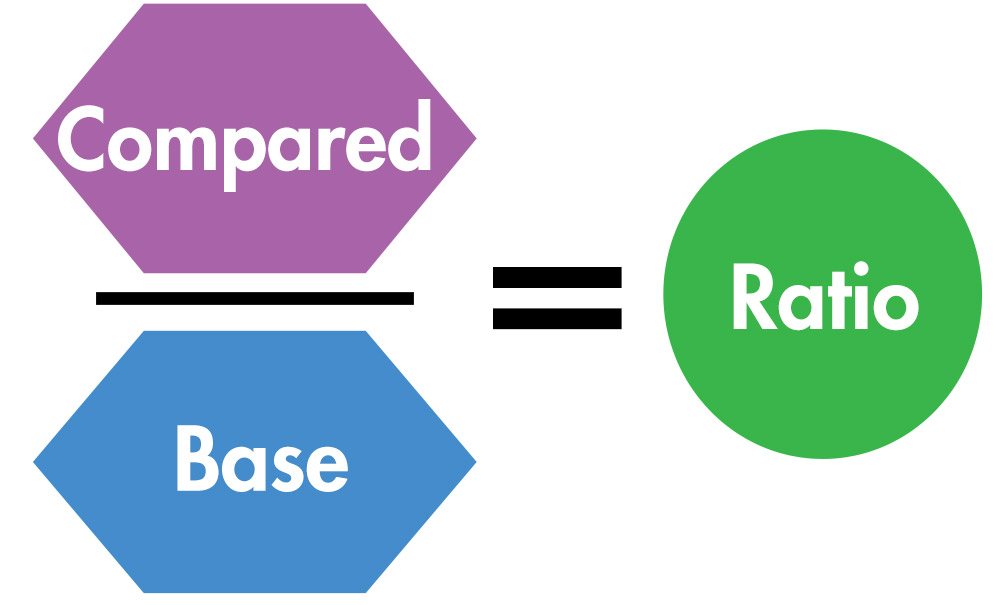

To help students become more proficient at solving word problems, teachers can help students recognize the problem schema, which refers to the underlying structure of the problem or the problem type (e.g., adding or combining two or more sets, finding the difference between two sets). This, in turn, leads to an associated strategy for solving that problem type. There are two main types of schemas: additive and multiplicative. Below, we will introduce you to additive schemas before moving on to descriptions and examples of multiplicative schemas.

Additive Schemas

Additive schemas can be used for addition and subtraction problems. These schemas are effective for students in early elementary school through middle school. Below are a few examples of additive schemas used to solve word problems: total, difference, and change.

Total

Description

- Involves adding or combining two or more distinct sets (each set representing a part) that are put together to form a total.

- Also known as part-part-whole or combine.

- Students might solve for any unknown in the equation.

- Can be used with a variety of types of numbers (e.g., whole, fractions, decimals).

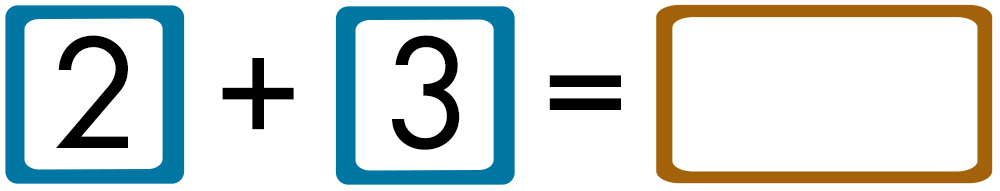

Sam has 2 cookies. Ali has 3 cookies. How many cookies do they have altogether?

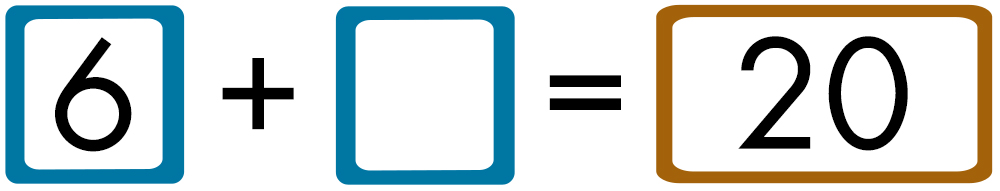

There are 6 students in the classroom and some more students in the hallway. There are 20 students in all. How many students are in the hallway?

Difference

- Involves comparing and finding the difference between two sets.

- Also known as compare.

The small dog has 3 spots. The large dog has 7 spots. How many more spots does the large dog have than the small dog?

Cy has 3 more pencils than Brody. Cy has 7 pencils. How many pencils does Brody have?

Ava has 9 fewer points than Giovani. Ava has 2 points. How many points does Giovani have?

Change

- Involves finding the increase or decrease in the quantity of the same set (i.e., there is one set and something happens to that set).

- Can involve multiple changes to the same set.

- Change schemas differ from total and difference schemas in that they involve a change in the set over time.

- Students might solve for any number in the equation.

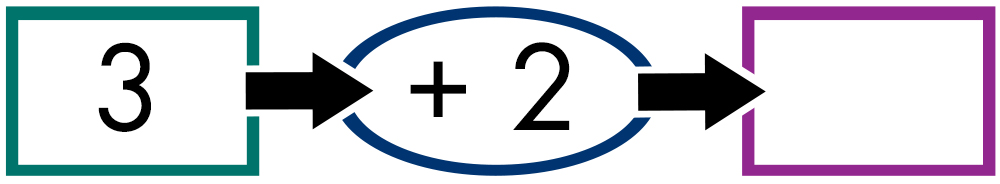

Carly has 3 ribbons. Shay gives her 2 ribbons. How many ribbons does Carly have now?

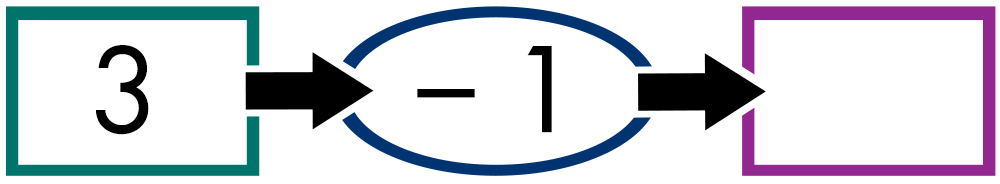

Carly has 3 ribbons. She gave Shay 1 ribbon. How many ribbons does Carly have now?

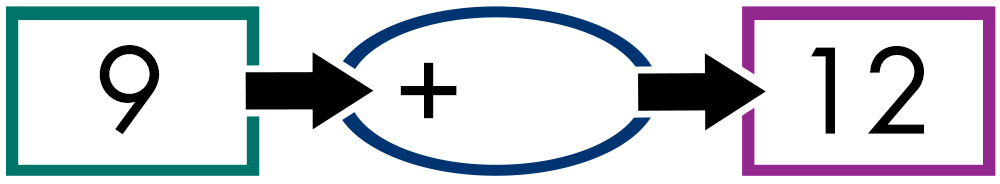

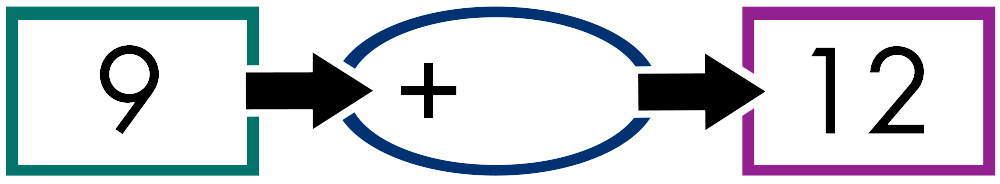

Misha has 9 suckers. Kaheen gave her some more suckers. Now she has 12 suckers. How many did Kaheen give her?

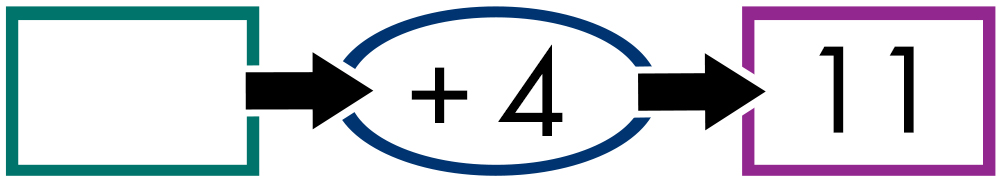

Misha has some suckers. Kaheen gave her 4 suckers. Now Misha has 11 suckers. How many suckers did Misha have to begin with?

(Adapted from Stevens & Powell, 2016; Morales, Shute & Pellegrino, 1985)
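Each of the additive examples above can be written as a single equation with one unknown. The sketch below is illustrative only: the helper `solve_additive` and its argument names are hypothetical, and it assumes every additive schema can be reduced to the form `a + b = c`, with `None` marking the unknown.

```python
def solve_additive(a=None, b=None, c=None):
    """Solve a + b = c for whichever value is None (exactly one must be)."""
    if [a, b, c].count(None) != 1:
        raise ValueError("exactly one of a, b, c must be unknown")
    if c is None:
        return a + b
    if a is None:
        return c - b
    return c - a


# Total: part + part = total
cookies = solve_additive(a=2, b=3)        # Sam's 2 cookies + Ali's 3 cookies
hallway = solve_additive(a=6, c=20)       # 6 in the classroom, 20 in all

# Difference: smaller amount + difference = larger amount
more_spots = solve_additive(a=3, c=7)     # small dog 3 spots, large dog 7
brody = solve_additive(b=3, c=7)          # Brody's pencils + 3 = Cy's 7
giovani = solve_additive(a=2, b=9)        # Ava's 2 points + 9 = Giovani's points

# Change: start + change = end (a decrease is a negative change)
ribbons_now = solve_additive(a=3, b=-1)   # Carly gives 1 ribbon away
given = solve_additive(a=9, c=12)         # Misha goes from 9 to 12 suckers
start = solve_additive(b=4, c=11)         # unknown start + 4 = 11
```

The same helper serves all three schemas because only the position of the unknown changes, which is one way to see why students "might solve for any unknown in the equation."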

For Your Information

Even when they apply the same schema to solve a word problem, students will likely approach its solution in a variety of ways. An example of this can be found below.

Problem: Emma had nine dollars. Then she earned some more money doing her chores. Now Emma has $12. How much money did she earn?

Two students, A and B, set up the problem using the change schema.

However, Student A solves the problem by subtracting 12 – 9. Student B solves the problem by counting on from 9. Although one student adds and the other subtracts, both students arrive at the correct solution. This example illustrates that the operation is secondary to the structure of the word problem.
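The two approaches can be sketched side by side. The function names `subtract` and `count_on` are hypothetical labels for what Student A and Student B do; the numbers come from the Emma problem above.

```python
def subtract(start, end):
    """Student A: find the change by computing end minus start."""
    return end - start


def count_on(start, end):
    """Student B: count up from start, one dollar at a time, until reaching end."""
    steps = 0
    current = start
    while current < end:
        current += 1
        steps += 1
    return steps


earned_a = subtract(9, 12)   # 12 - 9
earned_b = count_on(9, 12)   # 10, 11, 12
```

Both functions fill the same blank in the change structure (9 + ? = 12); only the procedure differs.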

Multiplicative Schemas

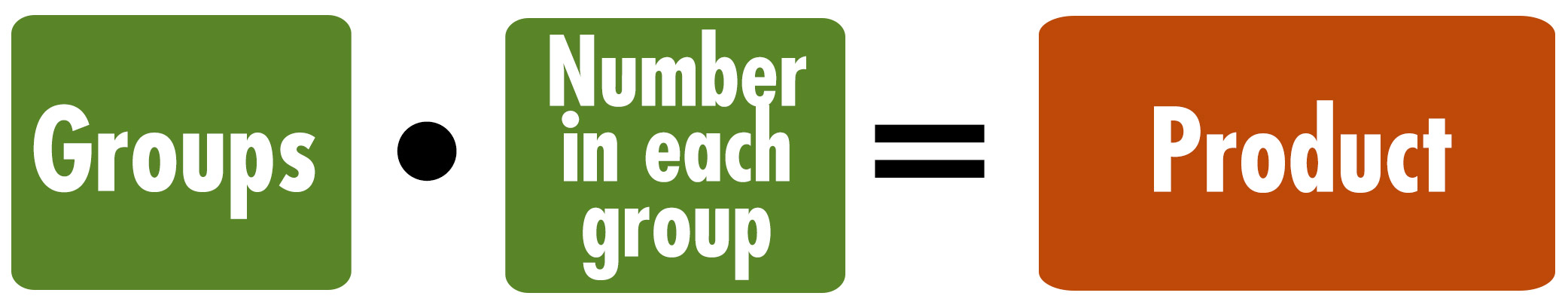

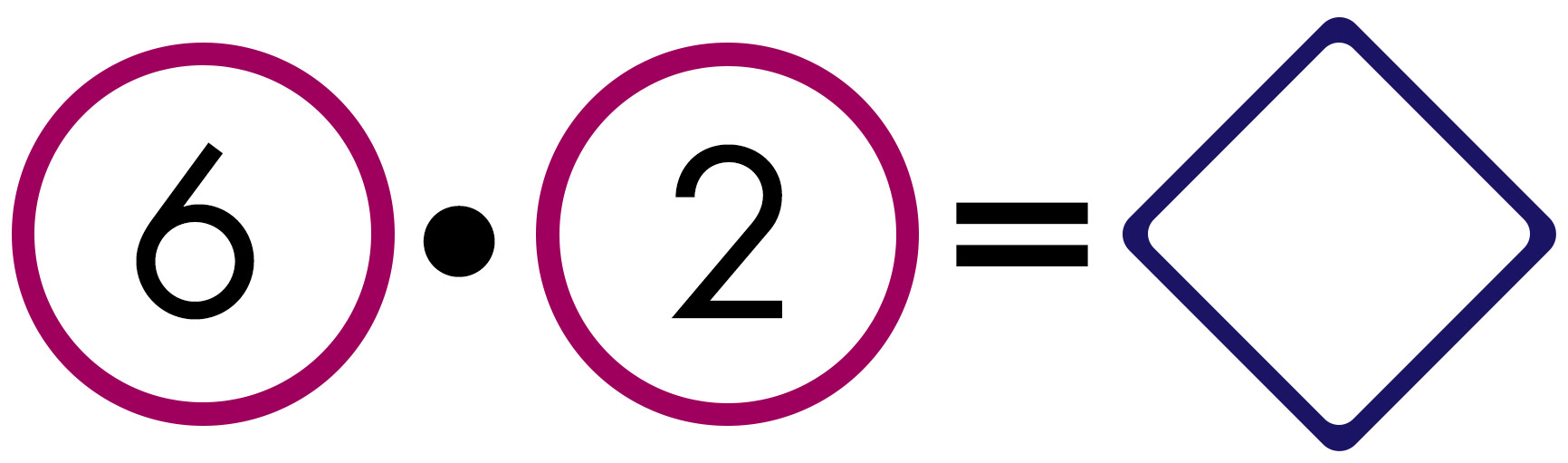

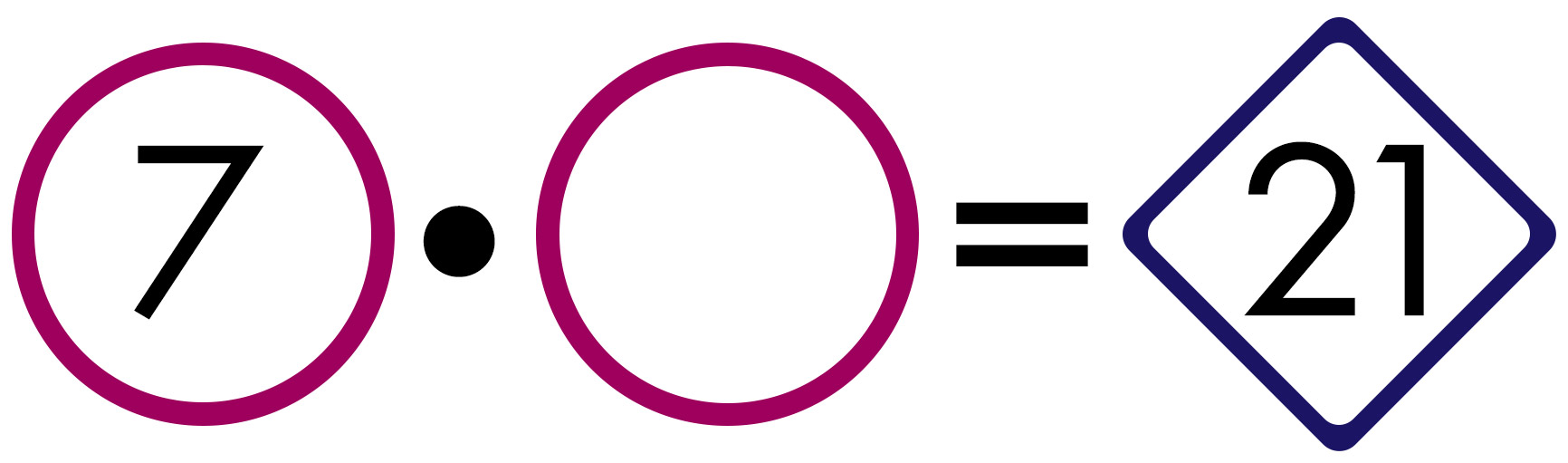

Multiplicative schemas can be used to solve multiplication and division problems. There are three main types of multiplicative schemas: equal groups, comparison, and ratio/proportion.

Equal Groups

- Involves multiplying or dividing groups where there is an equal number in each group.

- Students often encounter these types of word problems on standardized tests during 3rd and 4th grades and on into middle school.

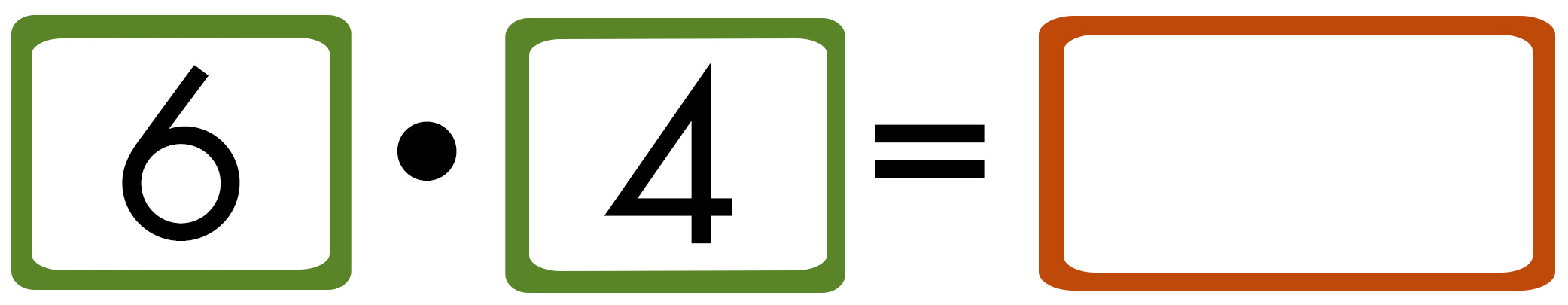

Tara has 6 bags of oranges. There are 4 oranges in each bag. How many oranges does Tara have?

Matthew has 20 comic books. His bookshelf has 5 shelves. He wants to put an equal number of comic books on each shelf. How many comic books will he put on each shelf?

Comparison

- Involves multiplying a set a given number of times.

- Students often encounter these types of word problems on standardized tests during 4th and 5th grades and into middle school.

Mai has 6 pieces of candy. Kyla has 2 times as many pieces of candy. How many pieces of candy does Kyla have?

Pedro has 7 video games. Bronwynn has 21 video games. How many times as many video games does Bronwynn have as Pedro?
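Written as equations, the equal groups and comparison examples above look like this. This is an illustrative working, not a reproduction of the original figures, which are not available here.

$$
\begin{aligned}
\text{Tara's oranges:} \quad & 6 \times 4 = 24 \\
\text{Matthew's shelves:} \quad & 5 \times {?} = 20 \;\Rightarrow\; {?} = 20 \div 5 = 4 \\
\text{Kyla's candy:} \quad & 6 \times 2 = 12 \\
\text{Bronwynn and Pedro:} \quad & 7 \times {?} = 21 \;\Rightarrow\; {?} = 21 \div 7 = 3
\end{aligned}
$$

In each pair, one problem is solved by multiplying and the other by dividing, even though the underlying structure is the same.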

Ratios/Proportions

- Involves finding the relationship between two numbers.

- Students often encounter these types of word problems on standardized tests during upper elementary through middle school.

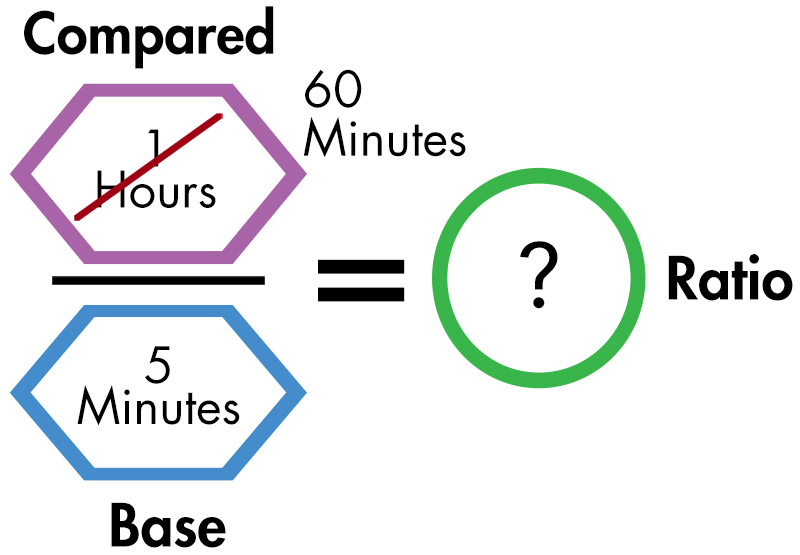

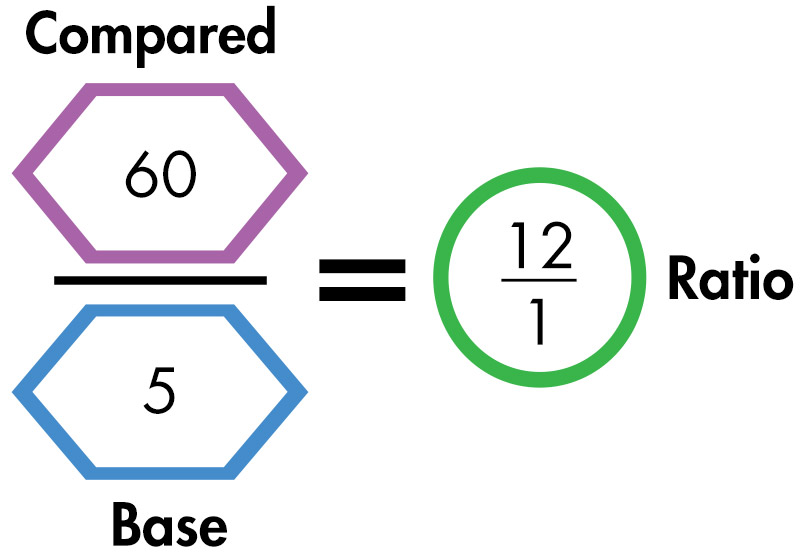

Example: On Saturday, Naoki worked in the hot sun for 10 hours, helping to clean up and revitalize a neighborhood park. To prevent dehydration, she took a 5-minute water break every hour. What proportion of time did Naoki spend working compared to taking breaks?

Note: To solve this problem, the student first converted hours to minutes so that he could work with the same unit.

Note: The student determines that the ratio for working to taking breaks is 12 minutes of work to 1 minute on break.

Source: Jitendra, Star, Dupuis, & Rodriguez, 2013
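One way to reach the stated ratio is sketched below. It assumes the full 10 hours are compared against the total break time; this reading reproduces the 12-to-1 result in the note above, but the original worked figure is not available here, so the intermediate steps are an assumption.

$$
\begin{aligned}
10 \text{ hours} \times 60 \tfrac{\text{minutes}}{\text{hour}} &= 600 \text{ minutes} \\
10 \text{ breaks} \times 5 \text{ minutes} &= 50 \text{ minutes} \\
600 : 50 &= 12 : 1
\end{aligned}
$$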

Combined Schemas

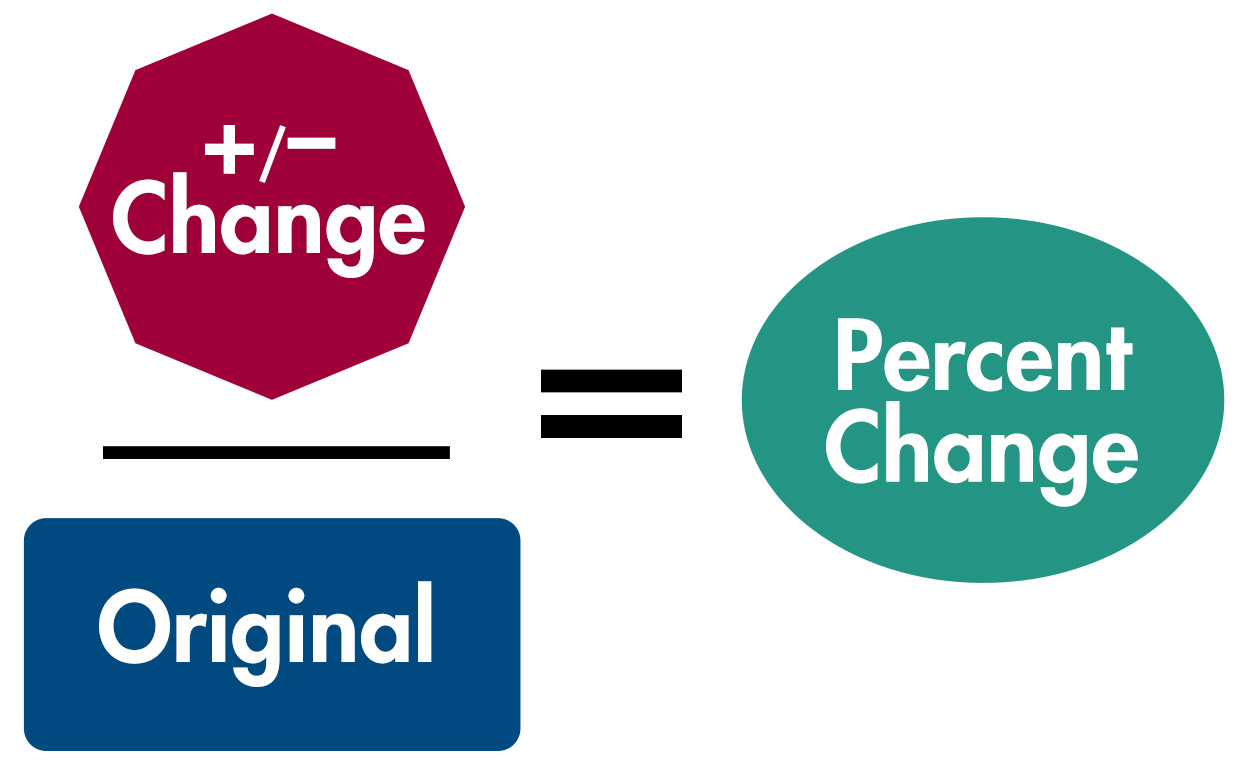

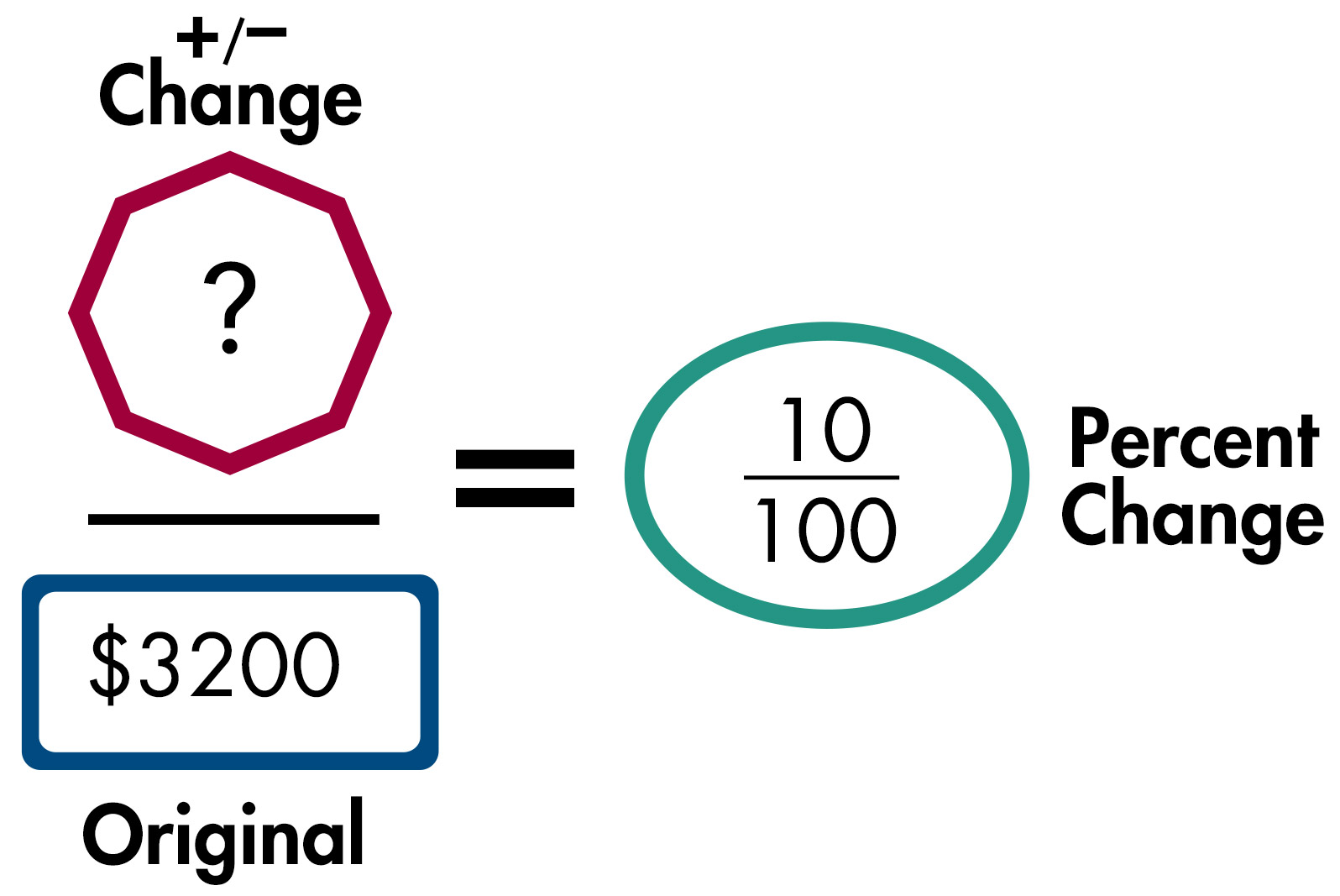

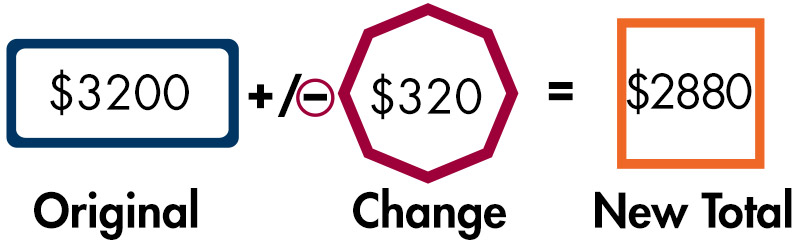

As students advance in school, they will encounter new kinds of mathematical problems with new underlying structures, or schemas. They will also encounter multi-step mathematics problems. The example below illustrates a percent change problem, which involves the combination of two schemas: a multiplicative schema and an additive schema.

Note: After the student solves for the missing value in the equation above, he enters it along with the provided information in the equation below to solve the problem.

Example: Mark is interested in buying a car. The car costs $3,200. He will receive a 10% discount if he buys the car this weekend. How much will he pay for the car?

Solution equation (to determine the amount of change):

After the student solves for the “change,” which is $320, he will then create another solution equation to find the “new total.”

Solution equation (to determine the “new total”):

The student determines that with the 10% discount Mark will pay $2,880.
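The two solution equations for the car example can be reconstructed from the numbers given in the text. The layout below is a sketch; the original equation figures are not reproduced here.

$$
\begin{aligned}
\text{Change:} \quad & \$3{,}200 \times 0.10 = \$320 \\
\text{New total:} \quad & \$3{,}200 - \$320 = \$2{,}880
\end{aligned}
$$

The first line uses a multiplicative schema to find the discount, and the second uses an additive (change) schema to find the price Mark pays.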

Sarah Powell, who has conducted extensive research on schema instruction, discusses the underlying focus of this strategy (time: 2:40).

Sarah Powell, PhD Assistant Professor, Special Education University of Texas at Austin

View Transcript

Transcript: Sarah Powell, PhD

The thing with schemas is that you cannot define word problems by their operation. So you cannot describe a word problem as being a subtraction problem or being a division problem. Instead, you have to describe the word problem at a deeper level and that is describing the word problem by its schema. And sometimes I like to use the word structure . It’s really important to use schemas or structures so that students have consistency with problem solving. If we teach the structure of combined problems in 1st grade and 2nd grade, students continue to see that schema in 3rd grade, 4th grade, and 5th grade. Now the numbers may get greater. So instead of adding three plus nine, they might be adding 133 plus 239. But the structure is the same. And so one of the things that we are trying to do with our math standards that guide most instruction in the United States is to provide consistency in math learning across grade levels, and the schemas really help do that, so you see that combined structure again and again.

Now in middle school grades, you see it in a slightly different way. It might be part of a multi-step problem, but it’s still there, so that every year we don’t have to reteach problem solving. We just help students say, “Oh, now we’re looking at a total schema but with fractions. Now here’s a total schema but with decimals.” And so there’s a lot of consistency that’s provided with the schemas. And right now problem solving is really taught grade-by-grade. So how do I solve 2nd-grade word problems, or how do I solve 5th-grade word problems? And that’s not a good way of thinking about it. It’s better if we focus on the schema and think about this grade-level continuum of problem solving. And they would just make problem solving so much easier for students and also easier for teachers, because then they’re not going back to square one every year and starting about how do I teach problem solving in 5th grade?

I would argue that problem solving is the most important thing you have to teach, because when we look at high-stakes assessments—and that’s where students show their mathematics competency—for word problems, students have to take the numbers and manipulate the numbers. It’s very difficult. Problem solving should be the primary focus of the mathematics curriculum, and instead of teaching problem solving as supplementary to math instruction, problem solving should really be taught as the way that we learn mathematics. And we need to get students to be thinkers of mathematics, not just doers of mathematics.

Teaching Word Problem Structures

As when teaching any strategy, teachers should use explicit, systematic instruction when introducing schema instruction, sometimes referred to as schema-based instruction (SBI). Although the same process is used to teach any schema, for illustrative purposes, the steps for how to teach the combine schema are outlined in the box below.

(Adapted from Stevens & Powell, 2016)

Teachers should make sure that students have mastered one schema (e.g., combine) before introducing a different problem type (e.g., compare). This reduces the possibility of students confusing one schema type with another during the learning process.


College of Education - UT Austin


Instruction Then Problem-Solving, or Vice Versa?

by Kristen Mosley

The need and desire to actively involve students in learning experiences is evergreen, yet the timing or ways in which an instructor can do so vary widely. While some instructors prefer instruction-first approaches, others take an inverted route with problem-solving first approaches. Yet the question remains: which design is more impactful for student learning?

Let’s first break down the two approaches:

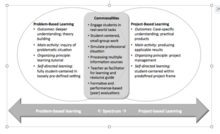

1. Instruction-first approach: this instructional design is sequenced as it is named. First, students receive instruction on a topic. Next, students apply the topic or solve problems with it. The thinking behind this traditional approach is that if students lack prior knowledge on the topic, then receiving direct instruction before problem-solving should cue them to the key features of the problems to be solved. Equipping students with this knowledge before asking them to solve novel problems should therefore lessen the burden on their working memory capacity.¹

2. Problem-solving first instruction: this instructional design reverses the sequence of the instruction-first approach. First, students engage in a novel problem involving a yet-to-be-learned topic. Then, students receive instruction on the topic that they just explored. Proponents of this design believe it better prepares students for future learning and application, as they will inevitably find themselves in novel situations with inadequate prior knowledge.² In essence, this approach emphasizes learner agency over attempts to control working memory capacity, as this hurdle is inherent to most problem-solving situations.

One more term is needed to understand these two approaches and how they have been found to differentially impact student outcomes. Productive failure is a unique component of problem-solving first instruction in which students are engaged in problem-solving that is intentionally designed to result in failure.³ However, this instructional aspect is not inherent to problem-solving first approaches. That is, while productive failure necessitates the use of problem-solving first instruction, the use of problem-solving first instruction does not require the use of productive failure. Instead, productive failure is a subfeature that can, and perhaps should,⁴ be used within problem-solving first instructional designs.

A recent meta-analysis examined 53 studies that compared the impact of instruction-first and problem-solving first approaches on student learning. Roughly one-third of the comparisons in this study (36.7%) involved undergraduates, and roughly half of all comparisons examined differences in student outcomes from quasi-experimental designs within real classroom settings.⁴ The findings therefore hold critical implications for undergraduate teaching and learning.

Among the many findings of this study, a significant effect size in favor of problem-solving first approaches (Hedges' g = .36) showed that a problem-solving first approach was more effective than an instruction-first approach for students' conceptual knowledge and transfer outcomes.⁴ It is also important to note where effects were not statistically significant.
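For readers unfamiliar with the statistic, Hedges' g is a standardized mean difference: the gap between two group means divided by their pooled standard deviation, then multiplied by a small-sample correction. The sketch below computes it using the common approximate correction factor. The function name and the scores in the example are invented for illustration and are not taken from the meta-analysis.

```python
import math

def hedges_g(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Standardized mean difference (group A minus group B)
    with the approximate small-sample correction."""
    df = n_a + n_b - 2
    # Pooled standard deviation across the two groups
    pooled_sd = math.sqrt(((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / df)
    # Cohen's d: raw difference in pooled-SD units
    d = (mean_a - mean_b) / pooled_sd
    # Approximate correction for small-sample bias
    correction = 1 - 3 / (4 * df - 1)
    return d * correction

# Hypothetical post-test scores (made up for this example):
# group A = problem-solving first, group B = instruction-first
g = hedges_g(10.0, 2.0, 20, 9.0, 2.0, 20)
print(round(g, 2))  # about 0.49
```

By the usual rough convention for standardized mean differences, values near 0.2 are read as small and values near 0.5 as medium, so the reported g = .36 sits between the two.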

The benefits of problem-solving first instruction were not significantly greater than those of instruction-first approaches for procedural learning, nor were they as strong when the instruction was conducted without fidelity to productive failure design. That is, results suggest that problem-solving first instruction that includes productive failure design outperforms problem-solving first instruction alone.⁴

In sum, evidence suggests problem-solving first instruction, as compared to instruction-first approaches, is a stronger facilitator of students’ long-term, conceptual learning as well as their ability to later transfer this learning to other domains. The benefits of problem-solving first instruction were also strongest when conducted in tandem with productive failure…which is the topic of our next post! Come back next week to learn more about productive failure design and how this approach can be incorporated into your instruction.

1. Sweller, J. (2020). Cognitive load theory and educational technology. Educational Technology Research and Development, 68(1), 1–16.

2. Loibl, K., Roll, I., & Rummel, N. (2017). Towards a theory of when and how problem solving followed by instruction supports learning. Educational Psychology Review, 29(4), 693–715. https://doi.org/10.1007/s10648-016-9379-x

3. Kapur, M. (2008). Productive failure. Cognition and Instruction, 26(3), 379–424. https://doi.org/10.1080/07370000802212669

4. Sinha, T., & Kapur, M. (2021). When problem solving followed by instruction works: Evidence for productive failure. Review of Educational Research, 91(5), 761–798. https://doi.org/10.3102/00346543211019105

Cognition and Instruction/Problem Solving, Critical Thinking and Argumentation

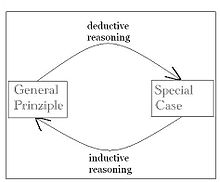

We are constantly surrounded by ambiguities, falsehoods, challenges, or situations in our daily lives that require our Critical Thinking, Problem Solving, and Argumentation skills. While these three terms are often used interchangeably, they are notably different. Critical thinking enables us to actively engage with information that we are presented with through all of our senses, and to think deeply about such information. This empowers us to analyse, critique, and apply knowledge, as well as create new ideas. Critical thinking can be considered the overarching cognitive skill of problem solving and argumentation. With critical thinking, although there are logical conclusions we can arrive at, there is not necessarily a 'right' idea. What may seem 'right' is often very subjective.

Problem solving is a form of critical thinking that confronts learners with decisions to be made about the best possible solutions, with no specific right answer for well-defined and ill-defined problems. One method of engaging with Problem Solving is with tutor systems such as Cognitive Tutor, which can modify problems for individual students as well as track their progress in learning. Particular to Problem Solving is Project Based Learning, which focuses the learner on solving a driving question, placing the student in the centre of the learning experience by conducting an extensive investigation. Problem Based Learning focuses on real-life problems that motivate the student with experiential learning. Further, Design Thinking uses a specific scaffold system to encourage learners to develop a prototype to solve a real-world problem through a series of steps. Empathy, practical design principles, and refinement of prototyping demonstrate critical thought throughout this process.

Likewise, argumentation is a critical thinking process that does not necessarily involve singular answers, hence the requirement for negotiation in argumentative thought. More specifically, argumentation involves using reasoning to support or refute a claim or idea. In comparison, problem solving may lead to one solution that could be considered to be empirical.

This chapter provides a theoretical overview of these three key topics: the qualities of each, their relationship to each other, as well as practical classroom applications.

Learning Outcomes:

- Defining Critical Thought and its interaction with knowledge

- Defining Problem Solving and how it uses Critical Thought to develop solutions to problems

- Introduce a Cognitive Tutor as a cognitive learning tool that employs problem solving to enhance learning

- Explore Project Based Learning as a specific method of Problem Solving

- Examine Design Thinking as a sub-set of Project Based Learning and its scaffold process for learning

- Define Argumentation and how it employs a Critical Thought process

- Examine specific methodologies and instruments of application for argumentation

Critical thinking

Critical thinking is an extremely valuable aspect of education. The ability to think critically often increases over the lifespan as knowledge and experience are acquired, but it is crucial to begin developing it as early as possible. Research has indicated that critical thinking skills are correlated with better transfer of knowledge, while a lack of critical thinking skills has been associated with biased reasoning [1]. Before children even begin formal schooling, they develop critical thinking skills at home through interactions with parents and caregivers [2]. Critical thinking also appears to improve with explicit instruction [3]. Being able to engage in critical thought is what allows us to make informed decisions in situations like elections, in which candidates present skewed views of themselves and other candidates. Without critical thinking, people would fall prey to fallacious information and biased reasoning. It is therefore important that students are introduced to critical thought and are encouraged to use critical thinking skills as they face problems.

Defining critical thinking

In general, critical thinking can be defined as the process of evaluating arguments and evidence to reach a conclusion that is the most appropriate and valid among other possible conclusions. Critical thinking is a dynamic and reflective process, and it is primarily evidence-based [4]. Thinking critically involves being able to criticize information objectively and explore opposing views, eventually leading to a conclusion based on evidence and careful thought. Critical thinkers are skeptical of information given to them, actively seek out evidence, and are not hesitant to take on decision-making and complex problem solving tasks [5]. Asking questions, debating topics, and critiquing the credibility of sources are all activities that involve thinking critically. As outlined by Glaser (1941), critical thinking involves three main components: a disposition for critical thought, knowledge of critical thinking strategies, and some ability to apply the strategies [6]. Having a disposition for critical thought is necessary for applying known strategies.

Critical thinking, which includes cognitive processes such as weighing and evaluating information, leads to more thorough understanding of an issue or problem. As a type of reflection, critical thinking also promotes an awareness of one's own perceptions, intentions, feelings, and actions [7].

Critical thinking as a western construct

In modern education, critical thinking is taken for granted as something that people universally need and should acquire, especially at a higher educational level [8][9]. However, critical thinking is a human construct [10], not a scientific fact, that is tied to Ancient Greek philosophy and beliefs [11].