
25.2 - Power Functions

Example 25-2

Let's take a look at another example that involves calculating the power of a hypothesis test.

Let \(X\) denote the IQ of a randomly selected adult American. Assume, a bit unrealistically, that \(X\) is normally distributed with unknown mean \(\mu\) and standard deviation 16. Take a random sample of \(n=16\) students, so that, after setting the probability of committing a Type I error at \(\alpha=0.05\), we can test the null hypothesis \(H_0:\mu=100\) against the alternative hypothesis that \(H_A:\mu>100\).

What is the power of the hypothesis test if the true population mean were \(\mu=108\)?

Setting \(\alpha\), the probability of committing a Type I error, to 0.05, implies that we should reject the null hypothesis when the test statistic \(Z\ge 1.645\), or equivalently, when the observed sample mean is 106.58 or greater:

because we transform the test statistic \(Z\) to the sample mean by way of:

\(Z=\dfrac{\bar{X}-\mu}{\frac{\sigma}{\sqrt{n}}}\qquad \Rightarrow \bar{X}=\mu+Z\dfrac{\sigma}{\sqrt{n}} \qquad \bar{X}=100+1.645\left(\dfrac{16}{\sqrt{16}}\right)=106.58\)

Now, that implies that the power, that is, the probability of rejecting the null hypothesis, when \(\mu=108\) is 0.6406, as calculated here (recalling that \(\Phi(z)\) is standard notation for the cumulative distribution function of the standard normal random variable):

\( \text{Power}=P(\bar{X}\ge 106.58\text{ when } \mu=108) = P\left(Z\ge \dfrac{106.58-108}{\frac{16}{\sqrt{16}}}\right) \\ = P(Z\ge -0.36)=1-P(Z<-0.36)=1-\Phi(-0.36)=1-0.3594=0.6406 \)

and illustrated here:

In summary, we have determined that we have (only) a 64.06% chance of rejecting the null hypothesis \(H_0:\mu=100\) in favor of the alternative hypothesis \(H_A:\mu>100\) if the true unknown population mean is in reality \(\mu=108\).
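The calculation above can be checked numerically. The following is a minimal sketch using only Python's standard library; the variable names are illustrative and not part of the original example. It recomputes the critical sample mean and the power at \(\mu=108\) without rounding the intermediate z-value.

```python
from math import sqrt
from statistics import NormalDist

std_normal = NormalDist()  # standard normal: mean 0, SD 1

mu_0, sigma, n, alpha = 100, 16, 16, 0.05
standard_error = sigma / sqrt(n)            # 16 / 4 = 4

# Upper-tail critical value on the Z scale and on the sample-mean scale
z_crit = std_normal.inv_cdf(1 - alpha)      # about 1.645
xbar_crit = mu_0 + z_crit * standard_error  # about 106.58

# Power when the true mean is 108: P(X-bar >= xbar_crit | mu = 108)
mu_true = 108
power = 1 - std_normal.cdf((xbar_crit - mu_true) / standard_error)

print(f"critical sample mean: {xbar_crit:.2f}")
print(f"power at mu = {mu_true}: {power:.4f}")
```

The unrounded power comes out at about 0.639. The table-based value of 0.6406 in the text is slightly higher because z is rounded to -0.36 before the lookup.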

What is the power of the hypothesis test if the true population mean were \(\mu=112\)?

Because we are setting \(\alpha\), the probability of committing a Type I error, to 0.05, we again reject the null hypothesis when the test statistic \(Z\ge 1.645\), or equivalently, when the observed sample mean is 106.58 or greater. That means that the probability of rejecting the null hypothesis, when \(\mu=112\) is 0.9131 as calculated here:

\( \text{Power}=P(\bar{X}\ge 106.58\text{ when }\mu=112)=P\left(Z\ge \frac{106.58-112}{\frac{16}{\sqrt{16}}}\right) \\ = P(Z\ge -1.36)=1-P(Z<-1.36)=1-\Phi(-1.36)=1-0.0869=0.9131 \)

In summary, we have determined that we now have a 91.31% chance of rejecting the null hypothesis \(H_0:\mu=100\) in favor of the alternative hypothesis \(H_A:\mu>100\) if the true unknown population mean is in reality \(\mu=112\). Hmm.... it should make sense that the probability of rejecting the null hypothesis is larger for values of the mean, such as 112, that are far away from the assumed mean under the null hypothesis.

What is the power of the hypothesis test if the true population mean were \(\mu=116\)?

Again, because we are setting \(\alpha\), the probability of committing a Type I error, to 0.05, we reject the null hypothesis when the test statistic \(Z\ge 1.645\), or equivalently, when the observed sample mean is 106.58 or greater. That means that the probability of rejecting the null hypothesis, when \(\mu=116\) is 0.9909 as calculated here:

\(\text{Power}=P(\bar{X}\ge 106.58\text{ when }\mu=116) =P\left(Z\ge \dfrac{106.58-116}{\frac{16}{\sqrt{16}}}\right) = P(Z\ge -2.36)=1-P(Z<-2.36)= 1-\Phi(-2.36)=1-0.0091=0.9909 \)

In summary, we have determined that, in this case, we have a 99.09% chance of rejecting the null hypothesis \(H_0:\mu=100\) in favor of the alternative hypothesis \(H_A:\mu>100\) if the true unknown population mean is in reality \(\mu=116\). The probability of rejecting the null hypothesis is the largest yet of those we calculated, because the mean, 116, is the farthest away from the assumed mean under the null hypothesis.

Are you growing weary of this? Let's summarize a few things we've learned from engaging in this exercise:

- First and foremost, my instructor can be tedious at times..... errrr, I mean, first and foremost, the power of a hypothesis test depends on the value of the parameter being investigated. In the above example, the power of the hypothesis test depends on the value of the mean \(\mu\).

- As the actual mean \(\mu\) moves further away from the value of the mean \(\mu=100\) under the null hypothesis, the power of the hypothesis test increases.

It's that first point that leads us to what is called the power function of the hypothesis test. If you go back and take a look, you'll see that in each case our calculation of the power involved a step that looks like this:

\(\text{Power } =1 - \Phi (z) \) where \(z = \frac{106.58 - \mu}{16 / \sqrt{16}} \)

That is, if we use the standard notation \(K(\mu)\) to denote the power function, as it depends on \(\mu\), we have:

\(K(\mu) = 1- \Phi \left( \frac{106.58 - \mu}{16 / \sqrt{16}} \right) \)
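As a rough illustration, the power function \(K(\mu)\) can be written as an ordinary function and evaluated on a grid of means. This sketch assumes the same setup as the example (\(\mu_0=100\), \(\sigma=16\), \(n=16\), \(\alpha=0.05\)); the function name `power_function` is made up for illustration.

```python
from math import sqrt
from statistics import NormalDist

std_normal = NormalDist()

def power_function(mu, mu_0=100, sigma=16, n=16, alpha=0.05):
    """K(mu) for the upper-tailed Z test of H0: mu = mu_0."""
    standard_error = sigma / sqrt(n)
    # Reject H0 when the sample mean is at least xbar_crit
    xbar_crit = mu_0 + std_normal.inv_cdf(1 - alpha) * standard_error
    return 1 - std_normal.cdf((xbar_crit - mu) / standard_error)

# Tabulate the power function over a few candidate true means
for mu in (100, 104, 108, 112, 116):
    print(f"K({mu}) = {power_function(mu):.4f}")
```

At \(\mu=100\) the function returns \(\alpha\) itself, and the values at 108, 112 and 116 should agree with the hand calculations up to the rounding of z.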

So, the reality is your instructor could have been a whole lot more tedious by calculating the power for every possible value of \(\mu\) under the alternative hypothesis! What we can do instead is create a plot of the power function, with the mean \(\mu\) on the horizontal axis and the power \(K(\mu)\) on the vertical axis. Doing so, we get a plot in this case that looks like this:

Now, what can we learn from this plot? Well:

We can see that \(\alpha\) (the probability of a Type I error), \(\beta\) (the probability of a Type II error), and \(K(\mu)\) are all represented on a power function plot, as illustrated here:

We can see that the probability of a Type I error is \(\alpha=K(100)=0.05\), that is, the probability of rejecting the null hypothesis when the null hypothesis is true is 0.05.

We can see the power of a test \(K(\mu)\), as well as the probability of a Type II error \(\beta(\mu)\), for each possible value of \(\mu\).

We can see that \(\beta(\mu)=1-K(\mu)\) and vice versa, that is, \(K(\mu)=1-\beta(\mu)\).

And we can see graphically that, indeed, as the actual mean \(\mu\) moves further away from the null mean \(\mu=100\), the power of the hypothesis test increases.

Now, what do you suppose would happen to the power of our hypothesis test if we were to change our willingness to commit a Type I error? Would the power for a given value of \(\mu\) increase, decrease, or remain unchanged? Suppose, for example, that we wanted to set \(\alpha=0.01\) instead of \(\alpha=0.05\). Let's return to our example to explore this question.

Example 25-2 (continued)

Let \(X\) denote the IQ of a randomly selected adult American. Assume, a bit unrealistically, that \(X\) is normally distributed with unknown mean \(\mu\) and standard deviation 16. Take a random sample of \(n=16\) students, so that, after setting the probability of committing a Type I error at \(\alpha=0.01\), we can test the null hypothesis \(H_0:\mu=100\) against the alternative hypothesis that \(H_A:\mu>100\).

Setting \(\alpha\), the probability of committing a Type I error, to 0.01, implies that we should reject the null hypothesis when the test statistic \(Z\ge 2.326\), or equivalently, when the observed sample mean is 109.304 or greater:

\(\bar{x} = \mu + z \left( \frac{\sigma}{\sqrt{n}} \right) =100 + 2.326\left( \frac{16}{\sqrt{16}} \right)=109.304 \)

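That means that the probability of rejecting the null hypothesis when \(\mu=108\) is 0.3722, as calculated here:

\(\text{Power}=P(\bar{X}\ge 109.304\text{ when }\mu=108)=P\left(Z\ge \dfrac{109.304-108}{\frac{16}{\sqrt{16}}}\right) = P(Z\ge 0.326)=1-\Phi(0.326)=1-0.6278=0.3722 \)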

So, the power when \(\mu=108\) and \(\alpha=0.01\) is smaller (0.3722) than the power when \(\mu=108\) and \(\alpha=0.05\) (0.6406)! Perhaps we can see this graphically:

By the way, we could again alternatively look at the glass as being half-empty. In that case, the probability of a Type II error when \(\mu=108\) and \(\alpha=0.01\) is \(1-0.3722=0.6278\). In this case, the probability of a Type II error is greater than the probability of a Type II error when \(\mu=108\) and \(\alpha=0.05\).

All of this can be seen graphically by plotting the two power functions, one where \(\alpha=0.01\) and the other where \(\alpha=0.05\), simultaneously. Doing so, we get a plot that looks like this:

This last example illustrates that, provided the sample size \(n\) remains unchanged, a decrease in \(\alpha\) causes an increase in \(\beta\), and at least theoretically, if not practically, a decrease in \(\beta\) causes an increase in \(\alpha\). It turns out that the only way that \(\alpha\) and \(\beta\) can be decreased simultaneously is by increasing the sample size \(n\).
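The trade-off can be made concrete with a short sketch. It compares the power at \(\mu=108\) for \(\alpha=0.05\) and \(\alpha=0.01\) with \(n=16\), and then for \(\alpha=0.01\) with a larger sample. The sample size \(n=64\) is a hypothetical value chosen for illustration and does not appear in the original example.

```python
from math import sqrt
from statistics import NormalDist

std_normal = NormalDist()

def power_at(mu, alpha, n, mu_0=100, sigma=16):
    """Power of the upper-tailed Z test of H0: mu = mu_0 at a given true mean."""
    standard_error = sigma / sqrt(n)
    xbar_crit = mu_0 + std_normal.inv_cdf(1 - alpha) * standard_error
    return 1 - std_normal.cdf((xbar_crit - mu) / standard_error)

# (alpha, n) pairs; n = 64 is an assumed, illustrative sample size
for alpha, n in [(0.05, 16), (0.01, 16), (0.01, 64)]:
    power = power_at(108, alpha, n)
    print(f"alpha={alpha:.2f}, n={n}: power={power:.4f}, beta={1 - power:.4f}")
```

With \(n=16\), lowering \(\alpha\) raises \(\beta\). With the larger sample, both \(\alpha\) and \(\beta\) end up smaller than in the original \(\alpha=0.05\), \(n=16\) setup.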

Hypothesis Testing Calculator


The first step in hypothesis testing is to calculate the test statistic. The formula for the test statistic depends on whether the population standard deviation (σ) is known or unknown. If σ is known, our hypothesis test is known as a z test and we use the z distribution. If σ is unknown, our hypothesis test is known as a t test and we use the t distribution. Use of the t distribution relies on the degrees of freedom, which is equal to the sample size minus one. Furthermore, if the population standard deviation σ is unknown, the sample standard deviation s is used instead. To switch from σ known to σ unknown, click on $\boxed{\sigma}$ and select $\boxed{s}$ in the Hypothesis Testing Calculator.

Next, the test statistic is used to conduct the test using either the p-value approach or critical value approach. The particular steps taken in each approach largely depend on the form of the hypothesis test: lower tail, upper tail or two-tailed. The form can easily be identified by looking at the alternative hypothesis ($H_a$). If the alternative hypothesis contains a less than sign, it is a lower tail test; a greater than sign indicates an upper tail test; and a not-equal sign indicates a two-tailed test. To switch from a lower tail test to an upper tail or two-tailed test, click on $\boxed{\geq}$ and select $\boxed{\leq}$ or $\boxed{=}$, respectively.

In the p-value approach, the test statistic is used to calculate a p-value. If the test is a lower tail test, the p-value is the probability of getting a value for the test statistic at least as small as the value from the sample. If the test is an upper tail test, the p-value is the probability of getting a value for the test statistic at least as large as the value from the sample. In a two-tailed test, the p-value is the probability of getting a value for the test statistic at least as unlikely as the value from the sample.

To test the hypothesis in the p-value approach, compare the p-value to the level of significance. If the p-value is less than or equal to the level of significance, reject the null hypothesis. If the p-value is greater than the level of significance, do not reject the null hypothesis. This method remains unchanged regardless of whether it's a lower tail, upper tail or two-tailed test. To change the level of significance, click on $\boxed{.05}$. Note that if the test statistic is given, you can calculate the p-value from the test statistic by clicking on the switch symbol twice.

In the critical value approach, the level of significance ($\alpha$) is used to calculate the critical value. In a lower tail test, the critical value is the value of the test statistic providing an area of $\alpha$ in the lower tail of the sampling distribution of the test statistic. In an upper tail test, the critical value is the value of the test statistic providing an area of $\alpha$ in the upper tail of the sampling distribution of the test statistic. In a two-tailed test, the critical values are the values of the test statistic providing areas of $\alpha / 2$ in the lower and upper tail of the sampling distribution of the test statistic.

To test the hypothesis in the critical value approach, compare the critical value to the test statistic. Unlike the p-value approach, the method we use to decide whether to reject the null hypothesis depends on the form of the hypothesis test. In a lower tail test, if the test statistic is less than or equal to the critical value, reject the null hypothesis. In an upper tail test, if the test statistic is greater than or equal to the critical value, reject the null hypothesis. In a two-tailed test, if the test statistic is less than or equal to the lower critical value or greater than or equal to the upper critical value, reject the null hypothesis.
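The two approaches can be sketched side by side for the z test (σ known). This is a minimal illustration and not the calculator's own code; the function name `z_test` and the sample values used below are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

std_normal = NormalDist()

def z_test(sample_mean, mu_0, sigma, n, alpha=0.05, tail="upper"):
    """Return (z, p_value, reject_by_p_value, reject_by_critical_value)."""
    z = (sample_mean - mu_0) / (sigma / sqrt(n))
    if tail == "lower":
        p_value = std_normal.cdf(z)
        reject_by_critical = z <= std_normal.inv_cdf(alpha)
    elif tail == "upper":
        p_value = 1 - std_normal.cdf(z)
        reject_by_critical = z >= std_normal.inv_cdf(1 - alpha)
    elif tail == "two":
        p_value = 2 * (1 - std_normal.cdf(abs(z)))
        reject_by_critical = abs(z) >= std_normal.inv_cdf(1 - alpha / 2)
    else:
        raise ValueError("tail must be 'lower', 'upper' or 'two'")
    return z, p_value, p_value <= alpha, reject_by_critical

# Hypothetical data: sample mean 107, H0 mean 100, sigma 16, n 16
for tail in ("upper", "two"):
    z, p_value, by_p, by_crit = z_test(107, 100, 16, 16, tail=tail)
    print(f"{tail}: z={z:.2f}, p={p_value:.4f}, reject (p-value)={by_p}, reject (critical)={by_crit}")
```

For any given tail form, the two approaches reach the same decision. With these made-up numbers, the upper tail test rejects the null hypothesis while the two-tailed test does not.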

When conducting a hypothesis test, there is always a chance that you come to the wrong conclusion. There are two types of errors you can make: Type I Error and Type II Error. A Type I Error is committed if you reject the null hypothesis when the null hypothesis is true. Ideally, we'd like to not reject the null hypothesis when it is true. A Type II Error is committed if you fail to reject the null hypothesis when the alternative hypothesis is true. Ideally, we'd like to reject the null hypothesis when the alternative hypothesis is true.

Hypothesis testing is closely related to the statistical area of confidence intervals. If the hypothesized value of the population mean is outside of the confidence interval, we can reject the null hypothesis. Confidence intervals can be found using the Confidence Interval Calculator. The calculator on this page does hypothesis tests for one population mean. Sometimes we're interested in hypothesis tests about two population means. These can be solved using the Two Population Calculator. The probability of a Type II Error can be calculated by clicking on the link at the bottom of the page.

Power – A Quick Introduction

In statistics, power is the probability of rejecting a false null hypothesis.

Power Calculation Example

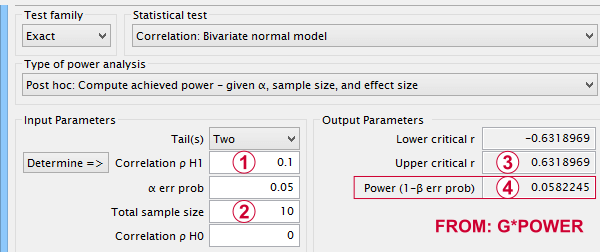

Power & alpha level, power & effect size, power & sample size, 3 main reasons for power calculations, software for power calculations - g*power, power - minimal example.

- In some country, IQ and salary have a population correlation ρ = 0.10.

- A scientist examines a sample of N = 10 people and finds a sample correlation r = 0.15.

- He tests the (false) null hypothesis H 0 that ρ = 0. The significance (p-value) for this test is p = 0.68.

- Since p > 0.05, his chosen alpha level, he does not reject his (false) null hypothesis that ρ = 0.

Now, given a sample size of N = 10 and a population correlation ρ = 0.10, what's the probability of correctly rejecting the null hypothesis that ρ = 0? This probability is known as power and denoted as (1 - β) in statistics. For the aforementioned example, (1 - β) is only 0.058 (roughly 6%) as shown below.

So even though H 0 is false, we've little power to actually reject it. Not rejecting a false H 0 is known as committing a type II error.
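The figure of roughly 6% can be approximated in a few lines. The sketch below uses the Fisher z transformation, which is an assumption on our part: the tutorial does not say how its value was computed, and exact methods may differ slightly. The function name `correlation_power` is illustrative.

```python
from math import atanh, sqrt
from statistics import NormalDist

std_normal = NormalDist()

def correlation_power(rho, n, alpha=0.05):
    """Approximate two-tailed power for testing H0: rho = 0 (Fisher z approximation)."""
    # Expected value of the test statistic under the alternative hypothesis
    shift = atanh(rho) * sqrt(n - 3)
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    upper = 1 - std_normal.cdf(z_crit - shift)  # P(reject in the upper tail)
    lower = std_normal.cdf(-z_crit - shift)     # P(reject in the lower tail)
    return upper + lower

print(f"approximate power: {correlation_power(0.10, 10):.3f}")
```

Under this approximation the power for ρ = 0.10 and N = 10 comes out close to the 0.058 reported above.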

Type I and Type II Errors

Any null hypothesis may be true or false and we may or may not reject it. This results in the 4 scenarios outlined below.

As you can probably guess, we usually want the power for our tests to be as high as possible. But before taking a look at factors affecting power, let's first try and understand how a power calculation actually works.

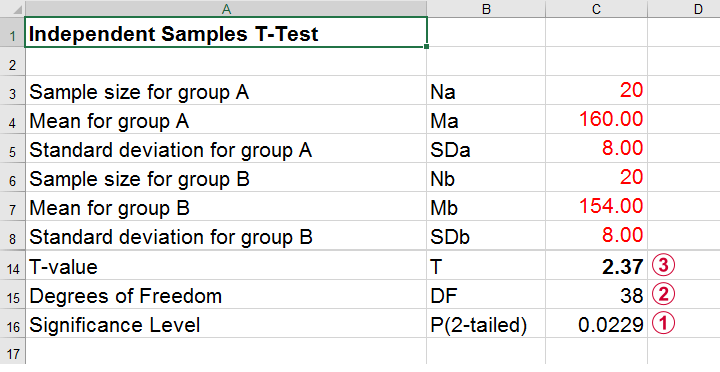

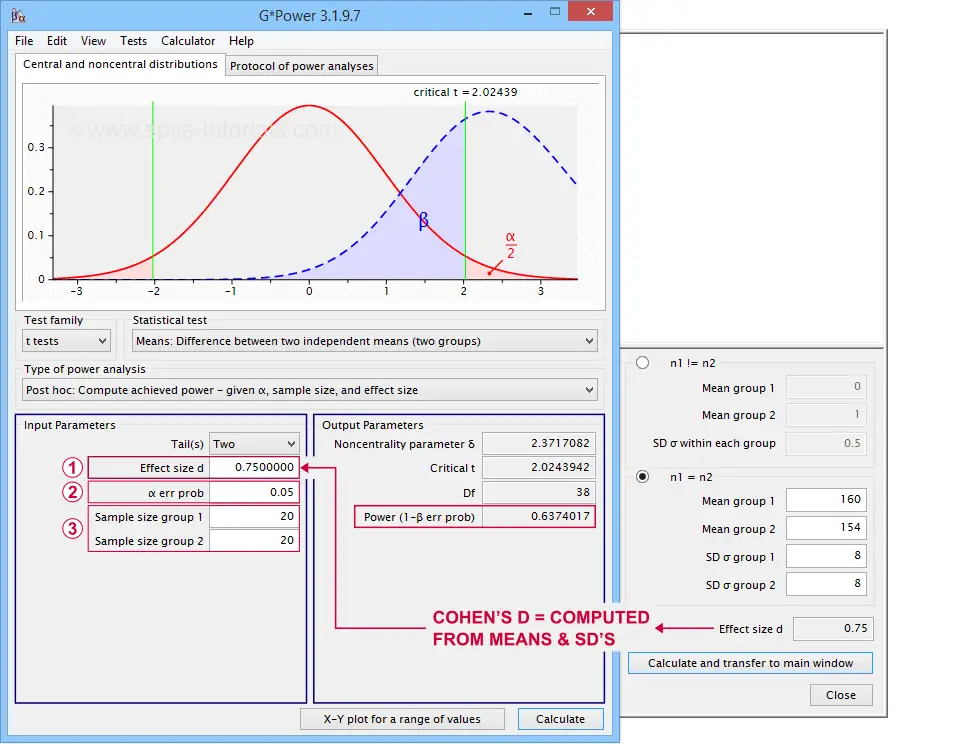

A pharmaceutical company wants to demonstrate that their medicine against high blood pressure actually works. They expect the following:

- the average blood pressure in some untreated population is 160 mmHg;

- they expect their medicine to lower this to roughly 154 mmHg;

- the standard deviation should be around 8 mmHg (both populations);

- they plan to use an independent samples t-test at α = 0.05 with N = 20 for either subsample.

Given these considerations, what's the power for this study? Or -alternatively- what's the probability of rejecting H 0 that the mean blood pressure is equal between treated and untreated populations?

Obviously, nobody knows the outcomes for this study until it's finished. However, we do know the most likely outcomes: they're our population estimates. So let's for a moment pretend that we'll find exactly these and enter them into a t-test calculator.

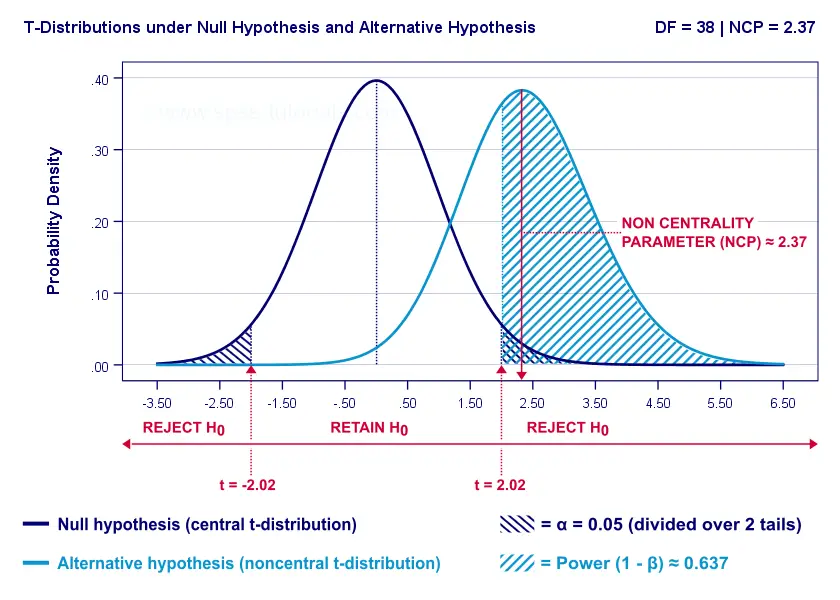

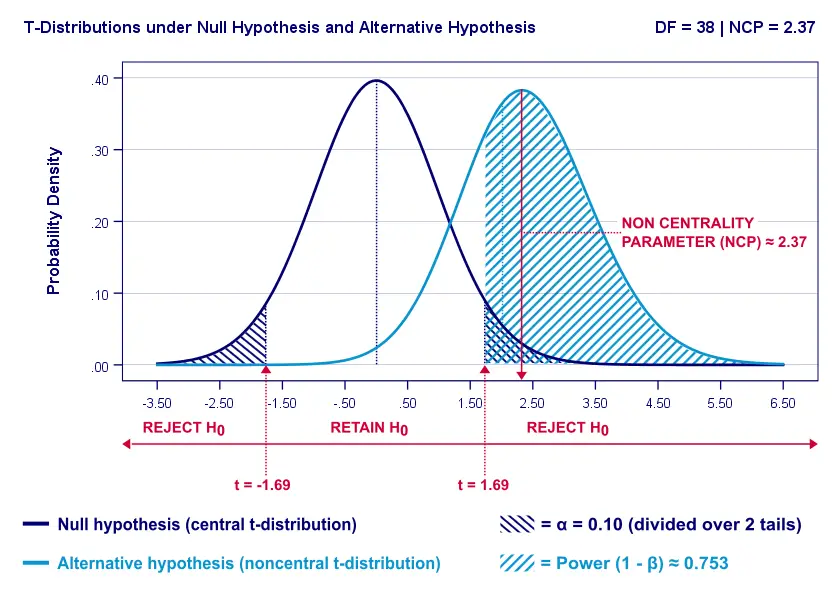

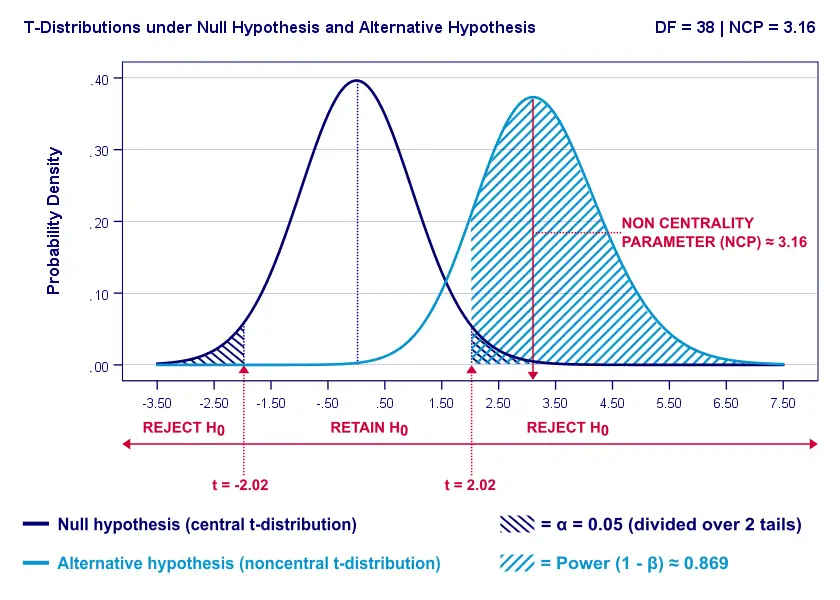

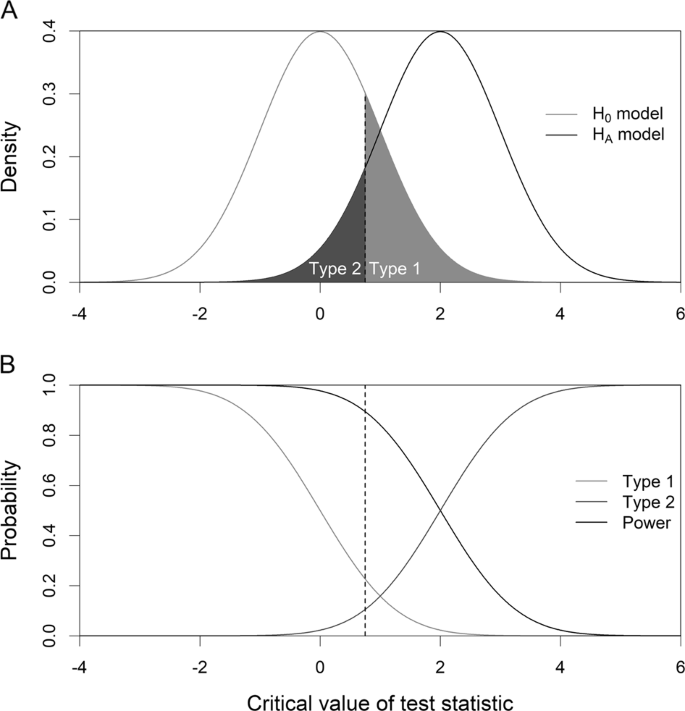

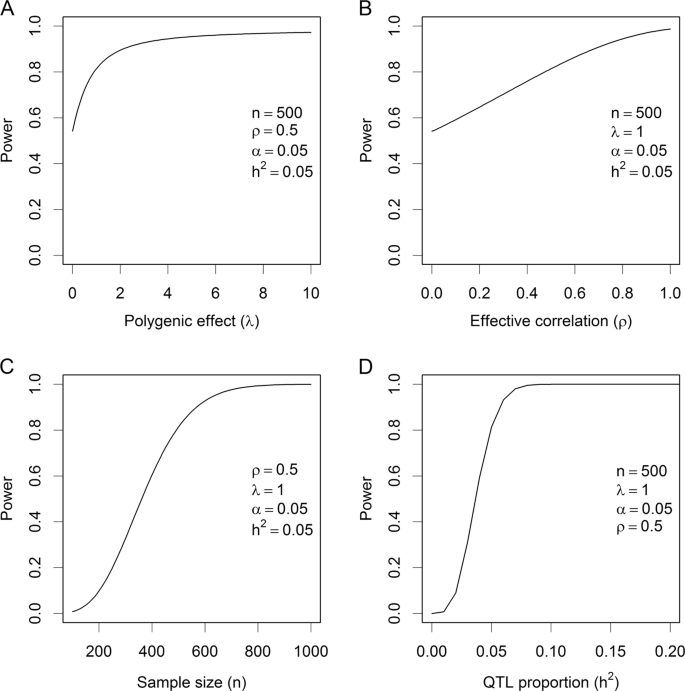
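The t-value that a calculator returns for these expected outcomes can be reproduced by hand. A minimal sketch, assuming equal standard deviations of 8 mmHg in both groups as stated above (variable names are illustrative):

```python
from math import sqrt

mean_untreated, mean_treated = 160.0, 154.0  # expected means (mmHg)
sd = 8.0                                     # expected SD in both groups
n1 = n2 = 20

# With equal SDs in both groups, the pooled SD is simply sd
standard_error = sd * sqrt(1 / n1 + 1 / n2)
t_expected = (mean_untreated - mean_treated) / standard_error
df = n1 + n2 - 2

print(f"SE = {standard_error:.3f}, t = {t_expected:.2f}, df = {df}")
```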

Now, this expected (or average) t = 2.37 under the alternative hypothesis H a is known as a noncentrality parameter or NCP. The NCP tells us how t is distributed under some exact alternative hypothesis and thus allows us to estimate the power for some test. The figure below illustrates how this works.

- First off, our H 0 is tested using a central t-distribution with df = 38;

- If we test at α = 0.05 (2-tailed), we'll reject H 0 if t < -2.02 (left critical value) or if t > 2.02 (right critical value);

- If our alternative hypothesis H A is exactly true, t follows a noncentral t-distribution with df = 38 and NCP = 2.37;

- Under this noncentral t-distribution, the probability of finding t > 2.02 ≈ 0.637. So this is roughly the probability of rejecting H 0 -or the power (1 - β) - for our first scenario.

A minor note here is that we'd also reject H 0 if t < -2.02 but this probability is almost zero for our first scenario. The exact calculation can be replicated from the SPSS syntax below.
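For readers without SPSS, the same calculation can be sketched in plain Python. This is not the tutorial's SPSS syntax. It is an illustrative reimplementation that integrates numerically over the definition of the noncentral t-distribution, T = (Z + NCP) / sqrt(V / df), where V is chi-square distributed. All function names are made up, and the integration grid assumes df > 2.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()

def chi2_pdf(v, df):
    """Density of a chi-square variable with df degrees of freedom."""
    if v <= 0:
        return 0.0
    log_pdf = ((df / 2 - 1) * math.log(v) - v / 2
               - (df / 2) * math.log(2) - math.lgamma(df / 2))
    return math.exp(log_pdf)

def nct_upper_tail(c, df, ncp, steps=2000):
    """P(T > c) for noncentral t, by Simpson integration over the chi-square part."""
    upper = df + 15 * math.sqrt(2 * df)  # far into the chi-square tail
    h = upper / steps
    total = 0.0
    for i in range(steps + 1):
        v = i * h
        weight = 1 if i in (0, steps) else 4 if i % 2 else 2
        # Given V = v, T > c exactly when Z > c * sqrt(v / df) - ncp
        total += weight * (1 - STD_NORMAL.cdf(c * math.sqrt(v / df) - ncp)) * chi2_pdf(v, df)
    return total * h / 3

def t_critical(df, alpha):
    """Two-tailed critical value: solve P(T > c) = alpha / 2 under H0 by bisection."""
    low, high = 0.0, 10.0
    for _ in range(40):
        mid = (low + high) / 2
        if nct_upper_tail(mid, df, 0.0) > alpha / 2:
            low = mid
        else:
            high = mid
    return (low + high) / 2

def t_test_power(ncp, df, alpha=0.05):
    """Return (critical t, two-tailed power) for a given noncentrality parameter."""
    crit = t_critical(df, alpha)
    upper = nct_upper_tail(crit, df, ncp)       # P(t > crit) under HA
    lower = 1 - nct_upper_tail(-crit, df, ncp)  # P(t < -crit) under HA
    return crit, upper + lower

ncp = 6 / (8 * math.sqrt(1 / 20 + 1 / 20))  # expected t under HA, about 2.37
crit, power = t_test_power(ncp, df=38)
print(f"critical t = {crit:.3f}, power = {power:.3f}")
```

The result should land close to the critical value of 2.02 and the power of 0.637 quoted above. A statistics library with a noncentral t-distribution would be the more practical choice in real work.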

Power and Effect Size

Like we just saw, estimating power requires specifying

- an exact null hypothesis and

- an exact alternative hypothesis.

In the previous example, our scientists had an exact alternative hypothesis because they had very specific ideas regarding population means and standard deviations. In most applied studies, however, we're pretty clueless about such population parameters. This raises the question how do we get an exact alternative hypothesis?

For most tests, the alternative hypothesis can be specified as an effect size measure : a single number combining several means, variances and/or frequencies. Like so, we proceed from requiring a bunch of unknown parameters to a single unknown parameter.
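For the blood pressure example, one such measure is Cohen's d, which the tutorial refers to further on. The sketch below shows how the expected means and standard deviation collapse into a single number, and how that number maps onto the noncentrality parameter of an independent samples t-test. The function names are illustrative.

```python
from math import sqrt

def cohens_d(mean_1, mean_2, pooled_sd):
    """Standardized mean difference between two groups."""
    return (mean_1 - mean_2) / pooled_sd

def ncp_independent_t(d, n1, n2):
    """Noncentrality parameter of an independent samples t-test for effect size d."""
    return d * sqrt(n1 * n2 / (n1 + n2))

d = cohens_d(160, 154, 8)
ncp = ncp_independent_t(d, 20, 20)
print(f"d = {d}, NCP = {ncp:.2f}")
```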

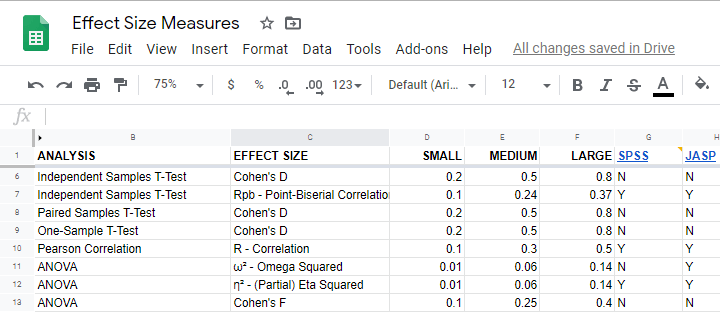

What's even better: widely agreed upon rules of thumb are available for effect size measures. An overview is presented in this Googlesheet, partly shown below.

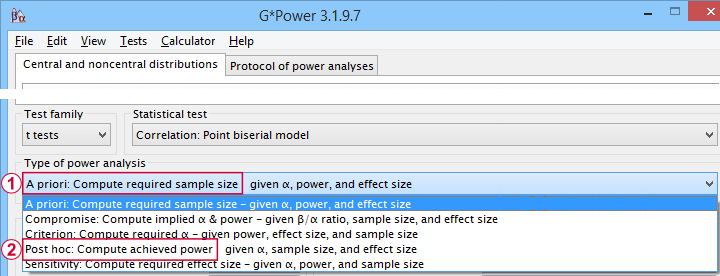

In applied studies, we often use G*Power for estimating power. The screenshot below replicates our power calculation example for the blood pressure medicine study.

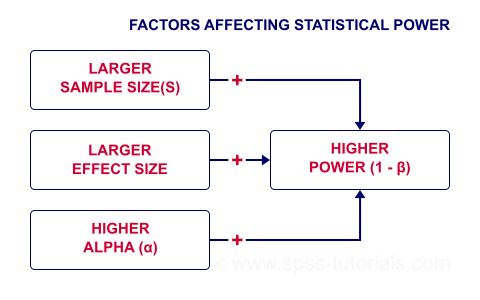

Factors Affecting Power

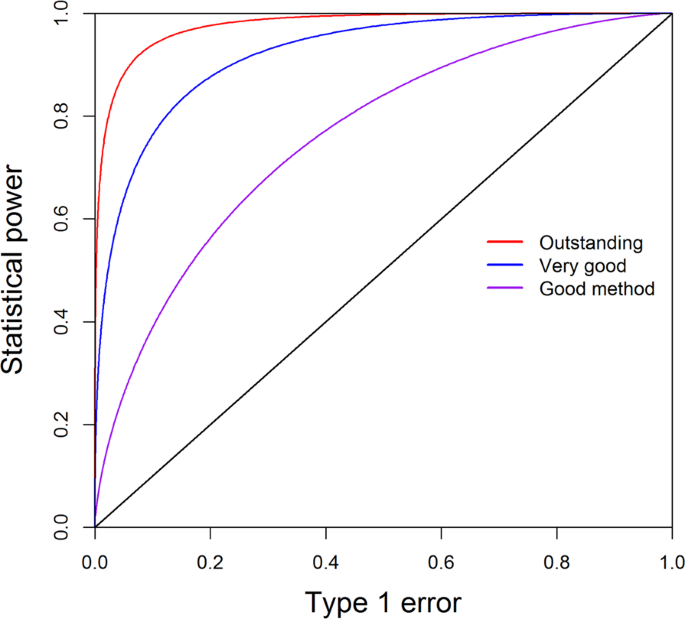

The figure below gives a quick overview of how 3 factors relate to power.

Let's now take a closer look at each of them.

Before taking a closer look at each, we need to point out that increasing your sample size(s) is the only sound way to increase power. This is because

- increasing alpha increases power but also increases the risk of committing a type I error. Testing at α > 0.05 is unacceptable under common statistical conventions.

- you can't choose a population effect size: you can only estimate it.

Power & Alpha Level

Everything else equal, increasing alpha increases power. For our example calculation, power increases from 0.637 to 0.753 if we test at α = 0.10 instead of 0.05.

A higher alpha level results in smaller (absolute) critical values: we already reject H 0 if t > 1.69 instead of t > 2.02. Finding t > 1.69 has a higher probability under H A than finding t > 2.02. So the light blue area, indicating (1 - β), increases. We basically require a smaller deviation from H 0 for statistical significance.

However, increasing alpha comes at a cost: it increases the probability of committing a type I error (rejecting H 0 when it's actually true). Therefore, testing at α > 0.05 is generally frowned upon. In short, increasing alpha basically just decreases one problem by increasing another one.

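Power & Effect Size

Everything else equal, a larger effect size results in higher power. For our example, power increases from 0.637 to 0.869 if we believe that Cohen’s d = 1.0 rather than 0.75 (the value implied by expected means of 160 and 154 mmHg and a standard deviation of 8 mmHg).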

A larger effect size results in a larger noncentrality parameter (NCP). Therefore, the distributions under H 0 and H A lie further apart. This increases the light blue area, indicating the power for this test.

Keep in mind, though, that we can estimate but not choose some population effect size. If we overestimate this effect size, we'll overestimate the power for our test accordingly. Therefore, we can't usually increase power by increasing an effect size.

An arguable exception is increasing an effect size by modifying a research design or analysis. For example, (partial) eta squared for a treatment effect in ANOVA may increase by adding a covariate to the analysis.

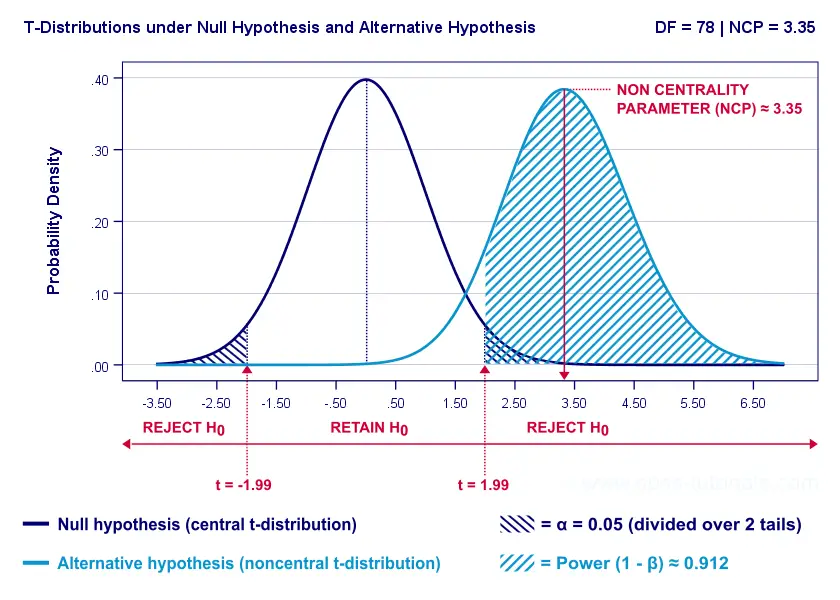

Power & Sample Size

Everything else equal, larger sample size(s) result in higher power. For our example, increasing the total sample size from N = 40 to N = 80 increases power from 0.637 to 0.912.

The increase in power stems from our distributions lying further apart. This reflects an increased noncentrality parameter (NCP). But why does the NCP increase with larger sample sizes?

Well, recall that for a t-distribution, the NCP is the expected t-value under H A . Now, t is computed as

$$t = \frac{\overline{X_1} - \overline{X_2}}{SE}$$

where \(SE\) denotes the standard error of the mean difference. In turn, \(SE\) is computed as

$$SE = S_w\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}$$

where \(S_w\) denotes the estimated population SD of the outcome variable. This formula shows that as sample sizes increase, \(SE\) decreases and therefore t (and hence the NCP) increases.

On top of this, degrees of freedom increase (from df = 38 to df = 78 for our example). This results in slightly smaller (absolute) critical t-values but this effect is very modest.

In short, increasing sample size(s) is a sound way to increase the power for some test.
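The effect of all three factors on the blood pressure example can be explored with one self-contained sketch. It integrates numerically over the definition of the noncentral t-distribution instead of calling G*Power or SPSS. The function names are illustrative, equal group sizes are assumed, and the integration grid assumes df > 2.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()

def chi2_pdf(v, df):
    """Density of a chi-square variable with df degrees of freedom."""
    if v <= 0:
        return 0.0
    return math.exp((df / 2 - 1) * math.log(v) - v / 2
                    - (df / 2) * math.log(2) - math.lgamma(df / 2))

def nct_upper_tail(c, df, ncp, steps=2000):
    """P(T > c) for noncentral t, T = (Z + ncp) / sqrt(V / df), via Simpson's rule."""
    h = (df + 15 * math.sqrt(2 * df)) / steps
    total = 0.0
    for i in range(steps + 1):
        v = i * h
        weight = 1 if i in (0, steps) else 4 if i % 2 else 2
        total += weight * (1 - STD_NORMAL.cdf(c * math.sqrt(v / df) - ncp)) * chi2_pdf(v, df)
    return total * h / 3

def power_two_sample(d, n_per_group, alpha=0.05):
    """Two-tailed power of an independent samples t-test with equal group sizes."""
    df = 2 * n_per_group - 2
    ncp = d * math.sqrt(n_per_group / 2)
    # Critical value: solve P(T > c) = alpha / 2 under H0 by bisection
    low, high = 0.0, 10.0
    for _ in range(40):
        mid = (low + high) / 2
        if nct_upper_tail(mid, df, 0.0) > alpha / 2:
            low = mid
        else:
            high = mid
    crit = (low + high) / 2
    return nct_upper_tail(crit, df, ncp) + (1 - nct_upper_tail(-crit, df, ncp))

scenarios = {
    "baseline (d=0.75, n=20 per group, alpha=0.05)": power_two_sample(0.75, 20),
    "higher alpha (alpha=0.10)": power_two_sample(0.75, 20, alpha=0.10),
    "larger effect size (d=1.0)": power_two_sample(1.0, 20),
    "larger samples (n=40 per group)": power_two_sample(0.75, 40),
}
for label, power in scenarios.items():
    print(f"{label}: power = {power:.3f}")
```

The four results should land close to the values quoted in the text: 0.637, 0.753, 0.869 and 0.912.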

Power & Research Design

Apart from sample size, effect size & α, research design may also affect power. Although there are no exact formulas, some general guidelines are that

- everything else equal, within-subjects designs tend to have more power than between-subjects designs;

- for ANCOVA, including one or two covariates tends to increase power for demonstrating a treatment effect;

- for multiple regression, power for each separate predictor tends to decrease as more predictors are added to the model.

3 Main Reasons for Power Calculations

Power calculations in applied research serve 3 main purposes:

- compute the required sample size prior to data collection. This involves estimating an effect size and choosing α (usually 0.05) and the desired power (1 - β), often 0.80;

- estimate power before collecting data for some planned analyses. This requires specifying the intended sample size, choosing an α and estimating which effect sizes are expected. If the estimated power is low, the planned study may be cancelled or proceed with a larger sample size;

- estimate power after data have been collected and analyzed. This calculation is based on the actual sample size, α used for testing and observed effect size.
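The first purpose, computing a required sample size, can be sketched with a well-known normal approximation for comparing two independent means. This is a rough planning formula. It is not what G*Power computes, and exact t-based calculations typically ask for one or two more cases per group. The function name is illustrative.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate per-group n for a two-tailed test of two independent means."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = std_normal.inv_cdf(power)          # quantile for the desired power
    return ceil(2 * ((z_alpha + z_power) / d) ** 2)

# Effect size from the blood pressure example: d = (160 - 154) / 8 = 0.75
print(n_per_group(0.75))
```

For d = 0.75 this approximation suggests 28 participants per group for 80% power at α = 0.05.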

Software for Power Calculations - G*Power

G*Power is freely downloadable software for running the aforementioned and many other power calculations. Among its features are

- computing effect sizes from descriptive statistics (mostly sample means and standard deviations);

- computing power, required sample sizes, required effect sizes and more;

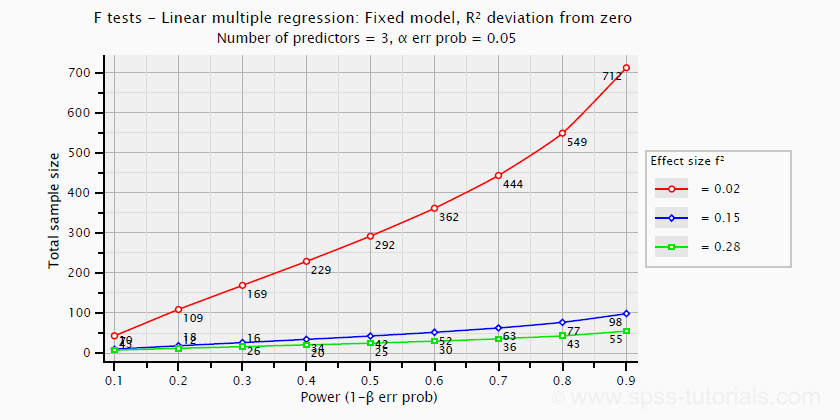

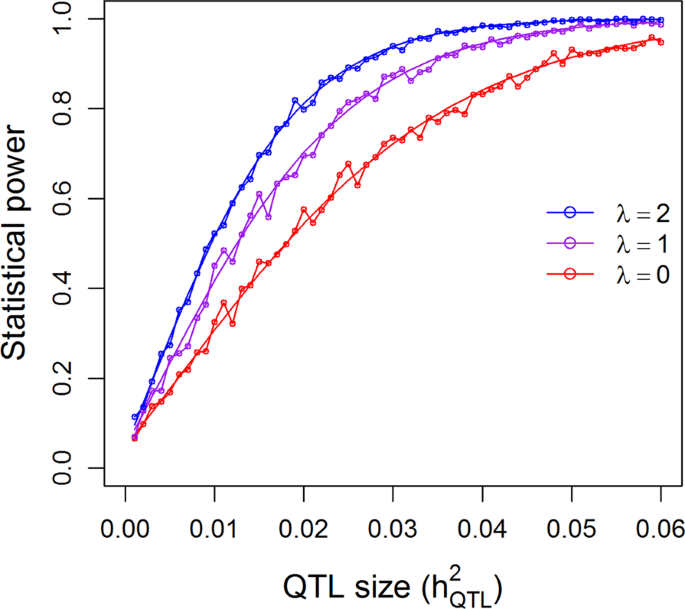

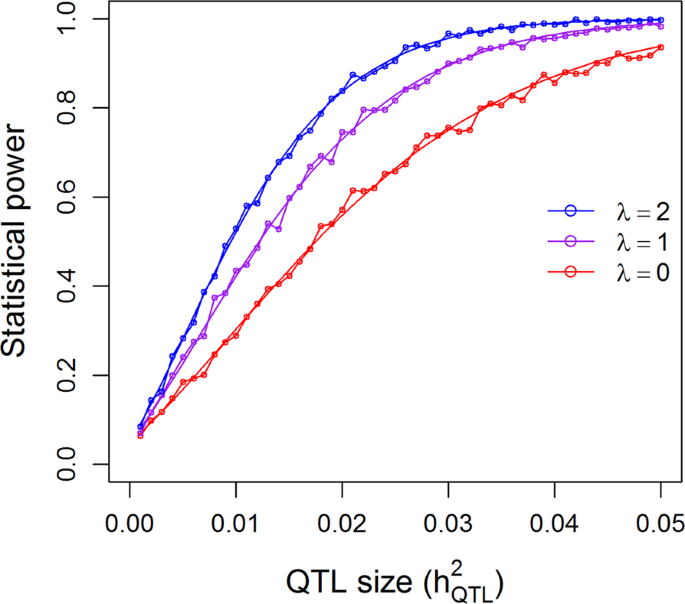

- creating plots that visualize how power, effect size and sample size relate for many different statistical procedures. The figure below shows an example for multiple linear regression.

Altogether, we think G*Power is amazing software and we highly recommend using it. The only disadvantage we can think of is that it requires rather unusual effect size measures. Some examples are

- Cohen’s f for ANOVA and

- Cohen’s W for a chi-square test.

This is awkward because the APA and (perhaps therefore) most journal articles typically recommend reporting

- (partial) eta-squared for ANOVA and

- the contingency coefficient or (better) Cramér’s V for a chi-square test.

These are also the measures we typically obtain from statistical packages such as SPSS or JASP. Fortunately, G*Power converts some measures and/or computes them from descriptive statistics like we saw in this screenshot.
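If a conversion has to be done by hand, the standard textbook relations between these measures are short enough to sketch. The function names are illustrative, and the formulas are the usual ones (f² = η² / (1 - η²) and w = V · sqrt(min(rows, columns) - 1)). This is not a description of G*Power's internals.

```python
from math import sqrt

def eta_squared_to_cohens_f(eta_squared):
    """Convert (partial) eta squared to Cohen's f."""
    return sqrt(eta_squared / (1 - eta_squared))

def cramers_v_to_cohens_w(v, n_rows, n_cols):
    """Convert Cramér's V for an n_rows x n_cols table to Cohen's w."""
    return v * sqrt(min(n_rows, n_cols) - 1)

print(f"f = {eta_squared_to_cohens_f(0.06):.3f}")       # eta squared of 0.06
print(f"w = {cramers_v_to_cohens_w(0.30, 3, 3):.3f}")   # V = 0.30 in a 3 x 3 table
```

Note that for any table with 2 rows or 2 columns, Cramér’s V and Cohen’s w coincide.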

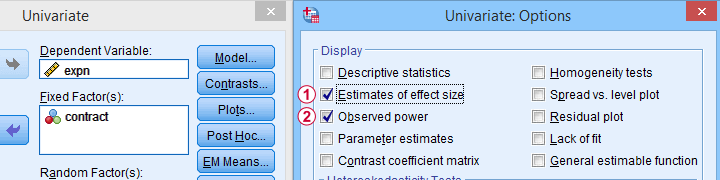

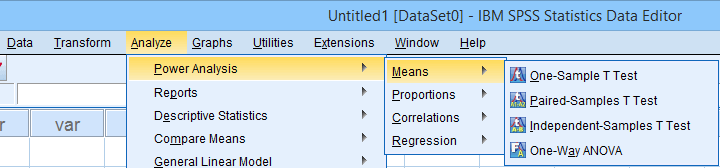

Software for Power Calculations - SPSS

In SPSS, observed power can be obtained from the GLM, UNIANOVA and (deprecated) MANOVA procedures. Keep in mind that GLM (short for General Linear Model) is very general indeed: it can be used for a wide variety of analyses including

- (multiple) linear regression;

- ANCOVA (analysis of covariance);

- repeated measures ANOVA.

Other power calculations (required sample sizes or estimating power prior to data collection) were added to SPSS version 27, released in 2020.

In my opinion, SPSS power analysis is a pathetic attempt to compete with G*Power. If you don't believe me, just try running a couple of power analyses in both programs simultaneously. If you do believe me, ignore SPSS power analysis and just go for G*Power.

Thanks for reading.


This tutorial has 5 comments:

By Bogdan on November 17th, 2022

Thanks for the very clear and detailed explanations, they really helped a lot!

By john on November 18th, 2022

At last I've found a very clear description of statistical power. Great explanation. Thanks for this.

By Sabby Grg on May 6th, 2023

Thank you for the clear explanation. I had a few questions about the a priori gpower analysis for the sample size and sensitivity analysis after the data collection. For my project, I initially wanted to run a correlation matrix and then run a multiple linear regression analysis. But the correlation matrix showed that none of the predictors correlated with the outcome variable, so I had to conduct a Spearman’s ranked correlation (data was non-normally distributed). Firstly, should I mention in my report that initially a sample size was determined through f-test power analysis with multiple regression as the statistical test (N=85). But also mention that as there was a change in statistical test, another power analysis was done with t test family and correlation: point biserial model as the statistical test (N=82). As a priori analysis is done before the study, it seemed silly to mention another analysis was done after the study but also doesn’t make sense to just mention the power analysis for multiple regression when I used a correlation test instead.

Secondly, it was recommended to conduct a sensitivity analysis to see what effect size I was powered to detect with my sample size of 81, as I was very close to the required sample size. I did it on G*power with t tests, correlation and sensitivity and the effect size calculation showed a medium effect size, which was what I was aiming for. Even though I didn’t add meet the required sample size, my sample achieved a medium effect size. How should I explain this in my results?

Would the best method be to mention that a power analysis was done with multiple regression as the statistical test, resulting in a sample size of 85. But during data analysis, the correlation matrix showed non-significant relationship between outcome and predictor variables so the appropriate test was Spearman’s rho correlation. A sensitivity analysis showed that even with the sample size of 81, a medium effect size for a correlation test was still achieved (+ justification).

Apologies for the lengthy query. Thank you in advance for your help.

By Ruben Geert van den Berg on May 7th, 2023

Honestly, I'm not buying any of this.

My basic conclusion is that you're just not willing to accept that the effects you're looking for probably aren't there.

For sample sizes of, say, N > 25, Pearson correlations don't require normality. Failing to detect them at N = 81 is pretty clear evidence that some variables just aren't linearly related.

They could still be non linearly related but you should model that via CURVEFIT or non linear transformations rather than going for Spearman correlations.

I'd simply report that the relations you're looking for are probably weak at best. And perhaps use a larger sample size next time.

But blaming lack of power for "non significant" results doesn't strike me as very convincing.

By Sabby Grg on May 7th, 2023

Thank you for your reply. Oh dear, in hindsight, I have worded my query utterly horribly. I think there may have been a misunderstanding, mainly from my lack of knowledge. Firstly, I completely understand your point on the Pearson’s r. I already let my supervisor know that for pre-analysis, I did the Pearson’s correlation before the regression based on the central limit theorem. As there were no significant results from the pre-analysis correlation matrix, it was recommended to do a Spearman’s correlation and justify why (non-normality), instead of regression. Upon reflection, it might be better to use Pearson’s r for the main test since it was already justified through central limit theorem.

Regardless, it was already established that my results are non significant and I had already accepted it. But one of the feedback was to do a sensitivity analysis to check the effect size of my sample of 81. Now, I can see where my initial query seems very misleading because I used the term, justify, when it should have been explain. I thought the recommendation of sensitivity analysis was to explain that my sample size was still powered enough to detect a result but that said result was non-significant. Initially, I wanted to understand how my sample was less than the a priori sample size calculation yet, still was powered to show a medium effect size (indicated by sensitivity analysis). Then after, I was going to explain how the study/sample was powered enough to show a medium effect size but the correlation test showed non-significant results so, it means that there just isn't any relationship between the variables <— This is the part I should’ve added in the initial query and this was my intention. In all honesty, I believe it might be best to leave my sensitivity analysis out if there is such misunderstanding when trying to explain it.

I do apologise for the misunderstanding as trying to justify the non-significant result with power, was not my intention. I was simply trying to understand what explanation there could be for my sample size (81) showing the same effect size as the a priori calculation (85). I know this may all sound amateur from an expert’s point of view but unfortunately, I am at that phase. Even this whole explanation might be flawed but I ask for your consideration.

I will just report inferential statistics using Pearsons’s and leave out the sensitivity analysis. But I do want to thank you for your reply as it has helped me review and reflect.


Power of a Hypothesis Test

The probability of not committing a Type II error is called the power of a hypothesis test.

Effect Size

To compute the power of the test, one offers an alternative view about the "true" value of the population parameter, assuming that the null hypothesis is false. The effect size is the difference between the true value and the value specified in the null hypothesis.

Effect size = True value - Hypothesized value

For example, suppose the null hypothesis states that a population mean is equal to 100. A researcher might ask: What is the probability of rejecting the null hypothesis if the true population mean is equal to 90? In this example, the effect size would be 90 - 100, which equals -10.

Factors That Affect Power

The power of a hypothesis test is affected by three factors.

- Sample size ( n ). Other things being equal, the greater the sample size, the greater the power of the test.

- Significance level (α). The lower the significance level, the lower the power of the test. If you reduce the significance level (e.g., from 0.05 to 0.01), the region of acceptance gets bigger. As a result, you are less likely to reject the null hypothesis. This means you are less likely to reject the null hypothesis when it is false, so you are more likely to make a Type II error. In short, the power of the test is reduced when you reduce the significance level; and vice versa.

- The "true" value of the parameter being tested. The greater the difference between the "true" value of a parameter and the value specified in the null hypothesis, the greater the power of the test. That is, the greater the effect size, the greater the power of the test.
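The three factors above can be checked numerically. The following is a minimal sketch, assuming an upper-tailed one-sample z-test with a known standard deviation; the function name `power_one_sided_z` and the baseline numbers (effect size 5, standard deviation 20, n = 50) are illustrative assumptions and do not come from the text.

```python
from math import sqrt
from statistics import NormalDist


def power_one_sided_z(effect_size, sigma, n, alpha=0.05):
    """Power of an upper-tailed one-sample z-test (sigma assumed known)."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)   # rejection cutoff under H0
    shift = effect_size * sqrt(n) / sigma    # shift of the test statistic under H1
    return 1 - std_normal.cdf(z_crit - shift)


# Hypothetical baseline, then one factor changed at a time.
baseline = power_one_sided_z(effect_size=5, sigma=20, n=50, alpha=0.05)
larger_sample = power_one_sided_z(effect_size=5, sigma=20, n=100, alpha=0.05)
lower_alpha = power_one_sided_z(effect_size=5, sigma=20, n=50, alpha=0.01)
larger_effect = power_one_sided_z(effect_size=10, sigma=20, n=50, alpha=0.05)

print(f"baseline:           {baseline:.3f}")
print(f"larger sample:      {larger_sample:.3f}")
print(f"lower alpha:        {lower_alpha:.3f}")
print(f"larger effect size: {larger_effect:.3f}")
```

Under these assumptions, a larger sample and a larger effect size should each raise the power relative to the baseline, while a lower significance level should reduce it.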

Test Your Understanding

Other things being equal, which of the following actions will reduce the power of a hypothesis test?

I. Increasing sample size. II. Changing the significance level from 0.01 to 0.05. III. Increasing beta, the probability of a Type II error.

(A) I only (B) II only (C) III only (D) All of the above (E) None of the above

The correct answer is (C). Increasing sample size makes the hypothesis test more sensitive - more likely to reject the null hypothesis when it is, in fact, false. Changing the significance level from 0.01 to 0.05 makes the region of acceptance smaller, which makes the hypothesis test more likely to reject the null hypothesis, thus increasing the power of the test. Since, by definition, power is equal to one minus beta, the power of a test will get smaller as beta gets bigger.

Suppose a researcher conducts an experiment to test a hypothesis. If she doubles her sample size, which of the following will increase?

I. The power of the hypothesis test. II. The effect size of the hypothesis test. III. The probability of making a Type II error.

The correct answer is (A). Increasing sample size makes the hypothesis test more sensitive - more likely to reject the null hypothesis when it is, in fact, false. Thus, it increases the power of the test. The effect size is not affected by sample size. And the probability of making a Type II error gets smaller, not bigger, as sample size increases.

Statistical Power Calculator

Statistical power is the probability that a binary hypothesis test correctly rejects the null hypothesis (H 0 ) when the alternative hypothesis (H 1 ) is true. This calculator computes the statistical power of a test (p = 1 - β) from the beta value.


Power and Sample Size Determination

Lisa Sullivan, PhD

Professor of Biostatistics

Boston University School of Public Health

Introduction

A critically important aspect of any study is determining the appropriate sample size to answer the research question. This module will focus on formulas that can be used to estimate the sample size needed to produce a confidence interval estimate with a specified margin of error (precision) or to ensure that a test of hypothesis has a high probability of detecting a meaningful difference in the parameter.

Studies should be designed to include a sufficient number of participants to adequately address the research question. Studies with too few participants and studies with far more participants than needed are both wasteful of participant and investigator time, of the resources needed to conduct the assessments, and of analytic effort. A study that is too small can also be viewed as unethical, because participants may have been put at risk in a study that was unable to answer an important question.

The formulas presented here generate estimates of the necessary sample size(s) required based on statistical criteria. However, in many studies, the sample size is determined by financial or logistical constraints. For example, suppose a study is proposed to evaluate a new screening test for Down Syndrome. Suppose that the screening test is based on analysis of a blood sample taken from women early in pregnancy. In order to evaluate the properties of the screening test (e.g., the sensitivity and specificity), each pregnant woman will be asked to provide a blood sample and in addition to undergo an amniocentesis. The amniocentesis is included as the gold standard and the plan is to compare the results of the screening test to the results of the amniocentesis. Suppose that the collection and processing of the blood sample costs $250 per participant and that the amniocentesis costs $900 per participant. These financial constraints alone might substantially limit the number of women that can be enrolled. Just as it is important to consider both statistical and clinical significance when interpreting results of a statistical analysis, it is also important to weigh both statistical and logistical issues in determining the sample size for a study.

Learning Objectives

After completing this module, the student will be able to:

- Provide examples demonstrating how the margin of error, effect size and variability of the outcome affect sample size computations.

- Compute the sample size required to estimate population parameters with precision.

- Interpret statistical power in tests of hypothesis.

- Compute the sample size required to ensure high power when hypothesis testing.

Issues in Estimating Sample Size for Confidence Interval Estimates

The module on confidence intervals provided methods for estimating confidence intervals for various parameters (e.g., μ , p, ( μ 1 - μ 2 ), μ d , (p 1 -p 2 )). Confidence intervals for every parameter take the following general form:

Point Estimate ± Margin of Error

In the module on confidence intervals we derived the formula for the confidence interval for μ as

\(\bar{X} \pm Z\dfrac{\sigma}{\sqrt{n}}\)

In practice we use the sample standard deviation to estimate the population standard deviation. Note that there is an alternative formula for estimating the mean of a continuous outcome in a single population, and it is used when the sample size is small (n<30). It involves a value from the t distribution, as opposed to one from the standard normal distribution, to reflect the desired level of confidence. When performing sample size computations, we use the large sample formula shown here. [Note: The resultant sample size might be small, and in the analysis stage, the appropriate confidence interval formula must be used.]

The point estimate for the population mean is the sample mean and the margin of error is

\(Z\dfrac{\sigma}{\sqrt{n}}\)

In planning studies, we want to determine the sample size needed to ensure that the margin of error is sufficiently small to be informative. For example, suppose we want to estimate the mean weight of female college students. We conduct a study and generate a 95% confidence interval as follows: 125 ± 40 pounds, or 85 to 165 pounds. The margin of error is so wide that the confidence interval is uninformative. To be informative, an investigator might want the margin of error to be no more than 5 or 10 pounds (meaning that the 95% confidence interval would have a width (lower limit to upper limit) of 10 or 20 pounds). In order to determine the sample size needed, the investigator must specify the desired margin of error. It is important to note that this is not a statistical issue, but a clinical or a practical one. For example, suppose we want to estimate the mean birth weight of infants born to mothers who smoke cigarettes during pregnancy. Birth weights in infants clearly have a much more restricted range than weights of female college students. Therefore, we would probably want to generate a confidence interval for the mean birth weight that has a margin of error not exceeding 1 or 2 pounds.

The margin of error in the one sample confidence interval for μ can be written as follows:

\(E = Z\dfrac{\sigma}{\sqrt{n}}\)

Our goal is to determine the sample size, n, that ensures that the margin of error, " E ," does not exceed a specified value. We can take the formula above and, with some algebra, solve for n :

First, multiply both sides of the equation by the square root of n . Then cancel out the square root of n from the numerator and denominator on the right side of the equation (since any number divided by itself is equal to 1). This leaves:

\(\sqrt{n}\,E = Z\sigma\)

Now divide both sides by "E" and cancel out "E" from the numerator and denominator on the left side. This leaves:

\(\sqrt{n} = \dfrac{Z\sigma}{E}\)

Finally, square both sides of the equation to get:

\(n = \left(\dfrac{Z\sigma}{E}\right)^2\)

This formula generates the sample size, n , required to ensure that the margin of error, E , does not exceed a specified value. To solve for n , we must input " Z ," " σ ," and " E ."

- Z is the value from the table of probabilities of the standard normal distribution for the desired confidence level (e.g., Z = 1.96 for 95% confidence)

- E is the margin of error that the investigator specifies as important from a clinical or practical standpoint.

- σ is the standard deviation of the outcome of interest.

Sometimes it is difficult to estimate σ . When we use the sample size formula above (or one of the other formulas that we will present in the sections that follow), we are planning a study to estimate the unknown mean of a particular outcome variable in a population. It is unlikely that we would know the standard deviation of that variable. In sample size computations, investigators often use a value for the standard deviation from a previous study or a study done in a different, but comparable, population. The sample size computation is not an application of statistical inference and therefore it is reasonable to use an appropriate estimate for the standard deviation. The estimate can be derived from a different study that was reported in the literature; some investigators perform a small pilot study to estimate the standard deviation. A pilot study usually involves a small number of participants (e.g., n=10) who are selected by convenience, as opposed to by random sampling. Data from the participants in the pilot study can be used to compute a sample standard deviation, which serves as a good estimate for σ in the sample size formula. Regardless of how the estimate of the variability of the outcome is derived, it should always be conservative (i.e., as large as is reasonable), so that the resultant sample size is not too small.

Sample Size for One Sample, Continuous Outcome

In studies where the plan is to estimate the mean of a continuous outcome variable in a single population, the formula for determining sample size is given below:

\(n = \left(\dfrac{Z\sigma}{E}\right)^2\)

where Z is the value from the standard normal distribution reflecting the confidence level that will be used (e.g., Z = 1.96 for 95%), σ is the standard deviation of the outcome variable and E is the desired margin of error. The formula above generates the minimum number of subjects required to ensure that the margin of error in the confidence interval for μ does not exceed E .

An investigator wants to estimate the mean systolic blood pressure in children with congenital heart disease who are between the ages of 3 and 5. How many children should be enrolled in the study? The investigator plans on using a 95% confidence interval (so Z=1.96) and wants a margin of error of 5 units. The standard deviation of systolic blood pressure is unknown, but the investigators conduct a literature search and find that the standard deviation of systolic blood pressures in children with other cardiac defects is between 15 and 20. To estimate the sample size, we consider the larger standard deviation in order to obtain the most conservative (largest) sample size.

\(n = \left(\dfrac{Z\sigma}{E}\right)^2 = \left(\dfrac{1.96 \times 20}{5}\right)^2 = 61.5\)

In order to ensure that the 95% confidence interval estimate of the mean systolic blood pressure in children between the ages of 3 and 5 with congenital heart disease is within 5 units of the true mean, a sample of size 62 is needed. [ Note : We always round up; the sample size formulas always generate the minimum number of subjects needed to ensure the specified precision.] Had we assumed a standard deviation of 15, the sample size would have been n=35. Because the estimates of the standard deviation were derived from studies of children with other cardiac defects, it would be advisable to use the larger standard deviation and plan for a study with 62 children. Selecting the smaller sample size could potentially produce a confidence interval estimate with a larger margin of error.
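The calculation can also be sketched in a few lines of Python. This is only an illustration of the one-sample formula; the function name `sample_size_mean` is a hypothetical helper, not something defined in this module.

```python
from math import ceil


def sample_size_mean(z, sigma, margin_of_error):
    """Minimum n so that Z * sigma / sqrt(n) does not exceed the margin of error."""
    # Always round up: the formula gives the minimum number of subjects.
    return ceil((z * sigma / margin_of_error) ** 2)


n_conservative = sample_size_mean(z=1.96, sigma=20, margin_of_error=5)
n_smaller_sd = sample_size_mean(z=1.96, sigma=15, margin_of_error=5)

print(n_conservative)  # larger standard deviation (20)
print(n_smaller_sd)    # smaller standard deviation (15)
```

With the inputs from the example, the two calls reproduce the sample sizes of 62 and 35 discussed above.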

An investigator wants to estimate the mean birth weight of infants born full term (approximately 40 weeks gestation) to mothers who are 19 years of age and under. The mean birth weight of infants born full-term to mothers 20 years of age and older is 3,510 grams with a standard deviation of 385 grams. How many women 19 years of age and under must be enrolled in the study to ensure that a 95% confidence interval estimate of the mean birth weight of their infants has a margin of error not exceeding 100 grams? Try to work through the calculation before you look at the answer.

Sample Size for One Sample, Dichotomous Outcome

In studies where the plan is to estimate the proportion of successes in a dichotomous outcome variable (yes/no) in a single population, the formula for determining sample size is:

\(n = p(1-p)\left(\dfrac{Z}{E}\right)^2\)

where Z is the value from the standard normal distribution reflecting the confidence level that will be used (e.g., Z = 1.96 for 95%) and E is the desired margin of error. p is the proportion of successes in the population. Here we are planning a study to generate a 95% confidence interval for the unknown population proportion, p . The equation to determine the sample size for estimating p seems to require knowledge of p, which is obviously circular: if we knew the proportion of successes in the population, then a study would not be necessary! What we really need is an approximate value of p or an anticipated value. The range of p is 0 to 1, and therefore the range of p(1-p) is 0 to 0.25. The value of p that maximizes p(1-p) is p=0.5. Consequently, if there is no information available to approximate p, then p=0.5 can be used to generate the most conservative, or largest, sample size.

Example 2:

An investigator wants to estimate the proportion of freshmen at his University who currently smoke cigarettes (i.e., the prevalence of smoking). How many freshmen should be involved in the study to ensure that a 95% confidence interval estimate of the proportion of freshmen who smoke is within 5% of the true proportion?

Because we have no information on the proportion of freshmen who smoke, we use 0.5 to estimate the sample size as follows:

\(n = p(1-p)\left(\dfrac{Z}{E}\right)^2 = 0.5(1-0.5)\left(\dfrac{1.96}{0.05}\right)^2 = 384.2\)

In order to ensure that the 95% confidence interval estimate of the proportion of freshmen who smoke is within 5% of the true proportion, a sample of size 385 is needed.
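As a rough check on the arithmetic, the formula for a single proportion can be written as a short Python function. The name `sample_size_proportion` is a hypothetical helper used only for this sketch.

```python
from math import ceil


def sample_size_proportion(z, p, margin_of_error):
    """Minimum n for a confidence interval on one proportion."""
    # p * (1 - p) is largest at p = 0.5, which gives the most conservative n.
    return ceil(p * (1 - p) * (z / margin_of_error) ** 2)


n_freshmen = sample_size_proportion(z=1.96, p=0.5, margin_of_error=0.05)
print(n_freshmen)
```

With p = 0.5 and a margin of error of 0.05, the function returns the 385 freshmen found above. Passing a different anticipated proportion in place of 0.5 shows how much the conservative choice inflates the sample size.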

Suppose that a similar study was conducted 2 years ago and found that the prevalence of smoking was 27% among freshmen. If the investigator believes that this is a reasonable estimate of prevalence 2 years later, it can be used to plan the next study. Using this estimate of p, what sample size is needed (assuming that again a 95% confidence interval will be used and we want the same level of precision)?

An investigator wants to estimate the prevalence of breast cancer among women who are between 40 and 45 years of age living in Boston. How many women must be involved in the study to ensure that the estimate is precise? National data suggest that 1 in 235 women are diagnosed with breast cancer by age 40. This translates to a proportion of 0.0043 (0.43%) or a prevalence of 43 per 10,000 women. Suppose the investigator wants the estimate to be within 10 per 10,000 women with 95% confidence. The sample size is computed as follows:

\(n = p(1-p)\left(\dfrac{Z}{E}\right)^2 = 0.0043(1-0.0043)\left(\dfrac{1.96}{0.0010}\right)^2 = 16{,}447.8\)

A sample of size n=16,448 will ensure that a 95% confidence interval estimate of the prevalence of breast cancer is within 0.0010 (that is, 0.10%, or 10 women per 10,000) of its true value. This is a situation where investigators might decide that a sample of this size is not feasible. Suppose that the investigators thought a sample of size 5,000 would be reasonable from a practical point of view. How precisely can we estimate the prevalence with a sample of size n=5,000? Recall that the confidence interval formula to estimate prevalence is:

\(\hat{p} \pm Z\sqrt{\dfrac{\hat{p}(1-\hat{p})}{n}}\)

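Assuming that the prevalence of breast cancer in the sample will be close to that based on national data, we would expect the margin of error to be approximately equal to the following:

\(E = Z\sqrt{\dfrac{\hat{p}(1-\hat{p})}{n}} = 1.96\sqrt{\dfrac{0.0043(1-0.0043)}{5{,}000}} = 0.0018\)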

Thus, with n=5,000 women, a 95% confidence interval would be expected to have a margin of error of 0.0018 (or 18 per 10,000). The investigators must decide if this would be sufficiently precise to answer the research question. Note that the above is based on the assumption that the prevalence of breast cancer in Boston is similar to that reported nationally. This may or may not be a reasonable assumption. In fact, it is the objective of the current study to estimate the prevalence in Boston. The research team, with input from clinical investigators and biostatisticians, must carefully evaluate the implications of selecting a sample of size n = 5,000, n = 16,448 or any size in between.
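The trade-off between sample size and precision can be explored with a small sketch. It assumes, as the text does, that the prevalence in the sample is close to the national figure of 0.0043; the function name `margin_of_error_proportion` is a hypothetical helper.

```python
from math import sqrt


def margin_of_error_proportion(z, p, n):
    """Expected margin of error for a confidence interval on one proportion."""
    return z * sqrt(p * (1 - p) / n)


p_national = 0.0043  # anticipated prevalence, taken from the national data

moe_5000 = margin_of_error_proportion(z=1.96, p=p_national, n=5_000)
moe_16448 = margin_of_error_proportion(z=1.96, p=p_national, n=16_448)

print(f"n = 5,000:  {moe_5000:.4f}")
print(f"n = 16,448: {moe_16448:.4f}")
```

Evaluating the function at intermediate sample sizes gives the research team a quick view of the precision that each candidate n would be expected to deliver.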

Sample Sizes for Two Independent Samples, Continuous Outcome

In studies where the plan is to estimate the difference in means between two independent populations, the formula for determining the sample sizes required in each comparison group is given below:

\(n_i = 2\left(\dfrac{Z\sigma}{E}\right)^2\)

where n i is the sample size required in each group (i=1,2), Z is the value from the standard normal distribution reflecting the confidence level that will be used and E is the desired margin of error. σ again reflects the standard deviation of the outcome variable. Recall from the module on confidence intervals that, when we generated a confidence interval estimate for the difference in means, we used Sp, the pooled estimate of the common standard deviation, as a measure of variability in the outcome (based on pooling the data), where Sp is computed as follows:

\(S_p = \sqrt{\dfrac{(n_1-1)s_1^2 + (n_2-1)s_2^2}{n_1+n_2-2}}\)

If data are available on variability of the outcome in each comparison group, then Sp can be computed and used in the sample size formula. However, it is more often the case that data on the variability of the outcome are available from only one group, often the untreated (e.g., placebo control) or unexposed group. When planning a clinical trial to investigate a new drug or procedure, data are often available from other trials that involved a placebo or an active control group (i.e., a standard medication or treatment given for the condition under study). The standard deviation of the outcome variable measured in patients assigned to the placebo, control or unexposed group can be used to plan a future trial, as illustrated below.

Note that the formula for the sample size generates sample size estimates for samples of equal size. If a study is planned where different numbers of patients will be assigned or different numbers of patients will comprise the comparison groups, then alternative formulas can be used.

An investigator wants to plan a clinical trial to evaluate the efficacy of a new drug designed to increase HDL cholesterol (the "good" cholesterol). The plan is to enroll participants and to randomly assign them to receive either the new drug or a placebo. HDL cholesterol will be measured in each participant after 12 weeks on the assigned treatment. Based on prior experience with similar trials, the investigator expects that 10% of all participants will be lost to follow up or will drop out of the study over 12 weeks. A 95% confidence interval will be estimated to quantify the difference in mean HDL levels between patients taking the new drug as compared to placebo. The investigator would like the margin of error to be no more than 3 units. How many patients should be recruited into the study?

The sample sizes are computed as follows:

\(n_i = 2\left(\dfrac{Z\sigma}{E}\right)^2\)

A major issue is determining the variability in the outcome of interest (σ), here the standard deviation of HDL cholesterol. To plan this study, we can use data from the Framingham Heart Study. In participants who attended the seventh examination of the Offspring Study and were not on treatment for high cholesterol, the standard deviation of HDL cholesterol is 17.1. We will use this value and the other inputs to compute the sample sizes as follows:

\(n_i = 2\left(\dfrac{1.96 \times 17.1}{3}\right)^2 = 249.6\)

Samples of size n 1 =250 and n 2 =250 will ensure that the 95% confidence interval for the difference in mean HDL levels will have a margin of error of no more than 3 units. Again, these sample sizes refer to the numbers of participants with complete data. The investigators hypothesized a 10% attrition (or drop-out) rate (in both groups). In order to ensure that the total sample size of 500 is available at 12 weeks, the investigator needs to recruit more participants to allow for attrition.

N (number to enroll) * (% retained) = desired sample size

Therefore N (number to enroll) = desired sample size/(% retained)

N = 500/0.90 = 556

If they anticipate a 10% attrition rate, the investigators should enroll 556 participants. This will ensure N=500 with complete data at the end of the trial.
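The two steps in this example (the per-group sample size, then the adjustment for attrition) can be sketched as follows. The function names `sample_size_two_means` and `inflate_for_attrition` are hypothetical helpers introduced only for illustration.

```python
from math import ceil


def sample_size_two_means(z, sigma, margin_of_error):
    """Per-group n for a CI on a difference in means (equal group sizes)."""
    return ceil(2 * (z * sigma / margin_of_error) ** 2)


def inflate_for_attrition(n_complete, attrition_rate):
    """Number to enroll so that n_complete participants remain after drop-out."""
    return ceil(n_complete / (1 - attrition_rate))


n_per_group = sample_size_two_means(z=1.96, sigma=17.1, margin_of_error=3)
n_total = 2 * n_per_group
n_enroll = inflate_for_attrition(n_total, attrition_rate=0.10)

print(n_per_group, n_total, n_enroll)
```

With the HDL inputs, this gives 250 per group, 500 in total with complete data, and 556 participants to enroll, matching the figures above.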

An investigator wants to compare two diet programs in children who are obese. One diet is a low fat diet, and the other is a low carbohydrate diet. The plan is to enroll children and weigh them at the start of the study. Each child will then be randomly assigned to either the low fat or the low carbohydrate diet. Each child will follow the assigned diet for 8 weeks, at which time they will again be weighed. The number of pounds lost will be computed for each child. Based on data reported from diet trials in adults, the investigator expects that 20% of all children will not complete the study. A 95% confidence interval will be estimated to quantify the difference in weight lost between the two diets and the investigator would like the margin of error to be no more than 3 pounds. How many children should be recruited into the study?

Again the issue is determining the variability in the outcome of interest (σ), here the standard deviation in pounds lost over 8 weeks. To plan this study, investigators use data from a published study in adults. Suppose one such study compared the same diets in adults and involved 100 participants in each diet group. The study reported a standard deviation in weight lost over 8 weeks on a low fat diet of 8.4 pounds and a standard deviation in weight lost over 8 weeks on a low carbohydrate diet of 7.7 pounds. These data can be used to estimate the common standard deviation in weight lost as follows:

\(S_p = \sqrt{\dfrac{(100-1)(8.4)^2 + (100-1)(7.7)^2}{100+100-2}} = \sqrt{64.9} \approx 8.1\)

We now use this value and the other inputs to compute the sample sizes:

\(n_i = 2\left(\dfrac{1.96 \times 8.1}{3}\right)^2 \approx 56.0\)

Samples of size n 1 =56 and n 2 =56 will ensure that the 95% confidence interval for the difference in weight lost between diets will have a margin of error of no more than 3 pounds. Again, these sample sizes refer to the numbers of children with complete data. The investigators anticipate a 20% attrition rate. In order to ensure that the total sample size of 112 is available at 8 weeks, the investigator needs to recruit more participants to allow for attrition.

N = 112/0.80 = 140
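The diet example chains three calculations: the pooled standard deviation, the per-group sample size, and the adjustment for attrition. A minimal sketch follows; `pooled_sd` is a hypothetical helper name, and the sketch keeps Sp unrounded (about 8.06). Rounding Sp to 8.1 before plugging it in gives a value just above 56, so the result can be sensitive to intermediate rounding.

```python
from math import ceil, sqrt


def pooled_sd(n1, s1, n2, s2):
    """Pooled estimate of the common standard deviation from two groups."""
    return sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))


# Standard deviations reported in the adult study (100 participants per diet).
sp = pooled_sd(n1=100, s1=8.4, n2=100, s2=7.7)

# Per-group n for a 95% CI with a margin of error of 3 pounds.
n_per_group = ceil(2 * (1.96 * sp / 3) ** 2)

# Inflate the total for the anticipated 20% attrition.
n_enroll = ceil(2 * n_per_group / (1 - 0.20))

print(round(sp, 2), n_per_group, n_enroll)
```

Under these inputs the sketch reproduces 56 children per group and 140 children to enroll.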

Sample Size for Matched Samples, Continuous Outcome

In studies where the plan is to estimate the mean difference of a continuous outcome based on matched data, the formula for determining sample size is given below:

\(n = \left(\dfrac{Z\sigma_d}{E}\right)^2\)

where Z is the value from the standard normal distribution reflecting the confidence level that will be used (e.g., Z = 1.96 for 95%), E is the desired margin of error, and σ d is the standard deviation of the difference scores. It is extremely important that the standard deviation of the difference scores (e.g., the difference based on measurements over time or the difference between matched pairs) is used here to appropriately estimate the sample size.

Sample Sizes for Two Independent Samples, Dichotomous Outcome

In studies where the plan is to estimate the difference in proportions between two independent populations (i.e., to estimate the risk difference), the formula for determining the sample sizes required in each comparison group is:

\(n_i = \left[p_1(1-p_1) + p_2(1-p_2)\right]\left(\dfrac{Z}{E}\right)^2\)

where n i is the sample size required in each group (i=1,2), Z is the value from the standard normal distribution reflecting the confidence level that will be used (e.g., Z = 1.96 for 95%), and E is the desired margin of error. p 1 and p 2 are the proportions of successes in each comparison group. Again, here we are planning a study to generate a 95% confidence interval for the difference in unknown proportions, and the formula to estimate the sample sizes needed requires p 1 and p 2 . In order to estimate the sample size, we need approximate values of p 1 and p 2 . The values of p 1 and p 2 that maximize the sample size are p 1 =p 2 =0.5. Thus, if there is no information available to approximate p 1 and p 2 , then 0.5 can be used to generate the most conservative, or largest, sample sizes.

Similar to the situation for two independent samples and a continuous outcome at the top of this page, it may be the case that data are available on the proportion of successes in one group, usually the untreated (e.g., placebo control) or unexposed group. If so, the known proportion can be used for both p 1 and p 2 in the formula shown above. The formula shown above generates sample size estimates for samples of equal size. If a study is planned where different numbers of patients will be assigned or different numbers of patients will comprise the comparison groups, then alternative formulas can be used. Interested readers can see Fleiss for more details. 4

An investigator wants to estimate the impact of smoking during pregnancy on premature delivery. Normal pregnancies last approximately 40 weeks and premature deliveries are those that occur before 37 weeks. The 2005 National Vital Statistics report indicates that approximately 12% of infants are born prematurely in the United States. 5 The investigator plans to collect data through medical record review and to generate a 95% confidence interval for the difference in proportions of infants born prematurely to women who smoked during pregnancy as compared to those who did not. How many women should be enrolled in the study to ensure that the 95% confidence interval for the difference in proportions has a margin of error of no more than 4%?

The sample sizes (i.e., numbers of women who smoked and did not smoke during pregnancy) can be computed using the formula shown above. National data suggest that 12% of infants are born prematurely. We will use that estimate for both groups in the sample size computation.

\(n_i = \left[0.12(1-0.12) + 0.12(1-0.12)\right]\left(\dfrac{1.96}{0.04}\right)^2 = 507.1\)

Samples of size n 1 =508 women who smoked during pregnancy and n 2 =508 women who did not smoke during pregnancy will ensure that the 95% confidence interval for the difference in proportions who deliver prematurely will have a margin of error of no more than 4%.
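The same computation can be sketched in Python. The function name `sample_size_two_proportions` is a hypothetical helper, and using 0.12 for both groups follows the choice made in the example.

```python
from math import ceil


def sample_size_two_proportions(z, p1, p2, margin_of_error):
    """Per-group n for a CI on a difference in proportions (equal group sizes)."""
    variability = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil(variability * (z / margin_of_error) ** 2)


n_per_group = sample_size_two_proportions(z=1.96, p1=0.12, p2=0.12, margin_of_error=0.04)
n_most_conservative = sample_size_two_proportions(z=1.96, p1=0.5, p2=0.5, margin_of_error=0.04)

print(n_per_group)          # using the national estimate in both groups
print(n_most_conservative)  # using 0.5 when nothing is known about p1 and p2
```

The first call returns the 508 women per group found above; the second shows how much larger the sample would need to be if 0.5 were used for both proportions.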

Is attrition an issue here?

Issues in Estimating Sample Size for Hypothesis Testing

In the module on hypothesis testing for means and proportions, we introduced techniques for means, proportions, differences in means, and differences in proportions. While each test involved details that were specific to the outcome of interest (e.g., continuous or dichotomous) and to the number of comparison groups (one, two, more than two), there were common elements to each test. For example, in each test of hypothesis, there are two errors that can be committed. The first is called a Type I error and refers to the situation where we incorrectly reject H 0 when in fact it is true. In the first step of any test of hypothesis, we select a level of significance, α , and α = P(Type I error) = P(Reject H 0 | H 0 is true). Because we purposely select a small value for α , we control the probability of committing a Type I error. The second type of error is called a Type II error and refers to the situation where we do not reject H 0 when it is false. The probability of a Type II error is denoted β , and β = P(Type II error) = P(Do not Reject H 0 | H 0 is false). In hypothesis testing, we usually focus on power, which is defined as the probability that we reject H 0 when it is false, i.e., power = 1- β = P(Reject H 0 | H 0 is false). Power is the probability that a test correctly rejects a false null hypothesis. A good test is one with low probability of committing a Type I error (i.e., small α ) and high power (i.e., small β ).

Here we present formulas to determine the sample size required to ensure that a test has high power. The sample size computations depend on the level of significance, α, the desired power of the test (equivalent to 1-β), the variability of the outcome, and the effect size. The effect size is the difference in the parameter of interest that represents a clinically meaningful difference. Similar to the margin of error in confidence interval applications, the effect size is determined based on clinical or practical criteria and not statistical criteria.

The concept of statistical power can be difficult to grasp. Before presenting the formulas to determine the sample sizes required to ensure high power in a test, we will first discuss power from a conceptual point of view.

Suppose we want to test the following hypotheses at α=0.05: H 0 : μ = 90 versus H 1 : μ ≠ 90. To test the hypotheses, suppose we select a sample of size n=100. For this example, assume that the standard deviation of the outcome is σ=20. We compute the sample mean and then must decide whether the sample mean provides evidence to support the alternative hypothesis or not. This is done by computing a test statistic and comparing the test statistic to an appropriate critical value. If the null hypothesis is true (μ=90), then we are likely to select a sample whose mean is close in value to 90. However, it is also possible to select a sample whose mean is much larger or much smaller than 90. Recall from the Central Limit Theorem (see page 11 in the module on Probability), that for large n (here n=100 is sufficiently large), the distribution of the sample means is approximately normal with a mean of \(\mu_{\bar{X}}=\mu\) and a standard deviation (standard error) of \(\sigma_{\bar{X}}=\sigma/\sqrt{n}=20/\sqrt{100}=2\).

If the null hypothesis is true, it is possible to observe any sample mean shown in the figure below; all are possible under H 0 : μ = 90.

Rejection Region for Test H 0 : μ = 90 versus H 1 : μ ≠ 90 at α =0.05

The areas in the two tails of the curve represent the probability of a Type I Error, α= 0.05. This concept was discussed in the module on Hypothesis Testing.

Now, suppose that the alternative hypothesis, H 1 , is true (i.e., μ ≠ 90) and that the true mean is actually 94. The figure below shows the distributions of the sample mean under the null and alternative hypotheses. The values of the sample mean are shown along the horizontal axis.

If the true mean is 94, then the alternative hypothesis is true. In our test, we selected α = 0.05 and reject H 0 if the observed sample mean exceeds 93.92 (focusing on the upper tail of the rejection region for now). The critical value (93.92) is indicated by the vertical line. The probability of a Type II error is denoted β, and β = P(Do not Reject H 0 | H 0 is false), i.e., the probability of not rejecting the null hypothesis when the null hypothesis is false. β is shown in the figure above as the area under the rightmost curve (H 1 ) to the left of the vertical line (where we do not reject H 0 ). Power is defined as 1- β = P(Reject H 0 | H 0 is false) and is shown in the figure as the area under the rightmost curve (H 1 ) to the right of the vertical line (where we reject H 0 ).

Note that β and power are related to α, the variability of the outcome and the effect size. From the figure above we can see what happens to β and power if we increase α. Suppose, for example, we increase α to α=0.10. For the same two-sided test, the upper critical value would be 93.29 (90 + 1.645 × 2) instead of 93.92. The vertical line would shift to the left, increasing α, decreasing β and increasing power. While a better test is one with higher power, it is not advisable to increase α as a means to increase power. Nonetheless, there is a direct relationship between α and power (as α increases, so does power).

β and power are also related to the variability of the outcome and to the effect size. The effect size is the difference in the parameter of interest (e.g., μ) that represents a clinically meaningful difference. The figure above graphically displays α, β, and power when the difference in the mean under the null as compared to the alternative hypothesis is 4 units (i.e., 90 versus 94). The figure below shows the same components for the situation where the mean under the alternative hypothesis is 98.

Notice that there is much higher power when there is a larger difference between the mean under H 0 as compared to H 1 (i.e., 90 versus 98). A statistical test is much more likely to reject the null hypothesis in favor of the alternative if the true mean is 98 than if the true mean is 94. Notice also in this case that there is little overlap in the distributions under the null and alternative hypotheses. If a sample mean of 97 or higher is observed it is very unlikely that it came from a distribution whose mean is 90. In the previous figure for H 0 : μ = 90 and H 1 : μ = 94, if we observed a sample mean of 93, for example, it would not be as clear as to whether it came from a distribution whose mean is 90 or one whose mean is 94.
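The power values behind the two figures can be approximated numerically. The following is a minimal sketch under stated assumptions: the standard error of the sample mean is taken to be 2 (the value implied by the upper cutoff, since (93.92 - 90)/1.96 = 2), and the lower cutoff is taken to be the mirror image of the upper one, 86.08. Neither number is stated in this passage, and the function name is illustrative.

```python
from statistics import NormalDist

def two_sided_power(true_mean, null_mean=90.0, upper_cutoff=93.92, z_crit=1.96):
    """Probability of rejecting H0 when the sample mean is normal around true_mean."""
    # Assumption: the standard error is inferred from the stated upper cutoff.
    se = (upper_cutoff - null_mean) / z_crit
    # Assumption: the lower cutoff mirrors the upper cutoff around the null mean.
    lower_cutoff = 2 * null_mean - upper_cutoff
    sampling_dist = NormalDist(mu=true_mean, sigma=se)
    # H0 is rejected when the sample mean falls in either tail.
    return sampling_dist.cdf(lower_cutoff) + (1 - sampling_dist.cdf(upper_cutoff))

for mu in (94, 98):
    power = two_sided_power(mu)
    print(f"true mean {mu}: power = {power:.3f}, beta = {1 - power:.3f}")
```

Under these assumptions the power is roughly 0.52 when the true mean is 94 and roughly 0.98 when it is 98, which matches the visual impression that the larger effect is far easier to detect.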

Ensuring That a Test Has High Power

In designing studies, most investigators aim for power of 80% or 90% (just as we generally use 95% as the confidence level for confidence interval estimates). The inputs for the sample size formulas include the desired power, the level of significance and the effect size. The effect size is selected to represent a clinically meaningful or practically important difference in the parameter of interest, as we will illustrate.

The formulas we present below produce the minimum sample size to ensure that the test of hypothesis will have a specified probability of rejecting the null hypothesis when it is false (i.e., a specified power). In planning studies, investigators again must account for attrition or loss to follow-up. The formulas shown below produce the number of participants needed with complete data, and we will illustrate how attrition is addressed in planning studies.

Sample Size for One Sample, Continuous Outcome

In studies where the plan is to perform a test of hypothesis comparing the mean of a continuous outcome variable in a single population to a known mean, the hypotheses of interest are:

H 0 : μ = μ 0 and H 1 : μ ≠ μ 0 where μ 0 is the known mean (e.g., a historical control). The formula for determining sample size to ensure that the test has a specified power is given below:

\(n=\left(\dfrac{Z_{1-\alpha/2}+Z_{1-\beta}}{ES}\right)^{2}\)

where α is the selected level of significance and Z 1-α /2 is the value from the standard normal distribution holding 1- α/2 below it. For example, if α=0.05, then 1- α/2 = 0.975 and Z=1.960. 1- β is the selected power, and Z 1-β is the value from the standard normal distribution holding 1- β below it. Sample size estimates for hypothesis testing are often based on achieving 80% or 90% power. The Z 1-β values for these popular scenarios are given below:

- For 80% power Z 0.80 = 0.84

- For 90% power Z 0.90 =1.282

ES is the effect size, defined as follows:

\(ES=\dfrac{|\mu_1-\mu_0|}{\sigma}\)

where μ 0 is the mean under H 0 , μ 1 is the mean under H 1 and σ is the standard deviation of the outcome of interest. The numerator of the effect size, the absolute value of the difference in means | μ 1 - μ 0 |, represents what is considered a clinically meaningful or practically important difference in means. Similar to the issue we faced when planning studies to estimate confidence intervals, it can sometimes be difficult to estimate the standard deviation. In sample size computations, investigators often use a value for the standard deviation from a previous study or a study performed in a different but comparable population. Regardless of how the estimate of the variability of the outcome is derived, it should always be conservative (i.e., as large as is reasonable), so that the resultant sample size will not be too small.

Example 7:

An investigator hypothesizes that in people free of diabetes, fasting blood glucose, a risk factor for coronary heart disease, is higher in those who drink at least 2 cups of coffee per day. A cross-sectional study is planned to assess the mean fasting blood glucose levels in people who drink at least two cups of coffee per day. The mean fasting blood glucose level in people free of diabetes is reported as 95.0 mg/dL with a standard deviation of 9.8 mg/dL. 7 If the mean blood glucose level in people who drink at least 2 cups of coffee per day is 100 mg/dL, this would be important clinically. How many patients should be enrolled in the study to ensure that the power of the test is 80% to detect this difference? A two sided test will be used with a 5% level of significance.

The effect size is computed as:

\(ES=\dfrac{|\mu_1-\mu_0|}{\sigma}=\dfrac{|100-95|}{9.8}=0.51\)

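The effect size represents the meaningful difference in the population mean: here 95 versus 100, a difference of 0.51 standard deviation units. We now substitute the effect size and the appropriate Z values for the selected α and power to compute the sample size.

\(n=\left(\dfrac{Z_{1-\alpha/2}+Z_{1-\beta}}{ES}\right)^{2}=\left(\dfrac{1.96+0.84}{0.51}\right)^{2}=30.1\)

Sample sizes are always rounded up to the next whole number.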

Therefore, a sample of size n=31 will ensure that a two-sided test with α =0.05 has 80% power to detect a 5 mg/dL difference in mean fasting blood glucose levels.
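The Example 7 arithmetic can be reproduced in a few lines of Python. This is a minimal sketch rather than the module's own software: the function name `sample_size_one_mean` and its parameters are illustrative, the Z values are the tabulated ones quoted in the text (1.96 and 0.84), and the effect size is rounded to two decimals to mirror the hand calculation.

```python
import math

def sample_size_one_mean(mu0, mu1, sigma, z_alpha=1.96, z_beta=0.84):
    """Minimum n for a two-sided one-sample test of a mean.

    z_alpha is Z_{1-alpha/2} and z_beta is Z_{1-beta}; the defaults are the
    tabulated values for alpha = 0.05 and 80% power.
    """
    # Effect size: difference in means in standard deviation units,
    # rounded to two decimals as in the hand calculation (an assumption).
    es = round(abs(mu1 - mu0) / sigma, 2)
    n_exact = ((z_alpha + z_beta) / es) ** 2
    # Always round up so the achieved power is at least the target.
    return es, math.ceil(n_exact)

es, n = sample_size_one_mean(mu0=95.0, mu1=100.0, sigma=9.8)
print(f"ES = {es}, n = {n}")
```

Passing `z_beta=1.282` instead would give the sample size for 90% power with the same effect size.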

In the planned study, participants will be asked to fast overnight and to provide a blood sample for analysis of glucose levels. Based on prior experience, the investigators hypothesize that 10% of the participants will fail to fast or will refuse to follow the study protocol. Therefore, a total of 35 participants will be enrolled in the study to ensure that 31 are available for analysis (see below).

N (number to enroll) * (% following protocol) = desired sample size

N = 31/0.90 = 34.4, which is rounded up to 35.
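The attrition adjustment is a single division followed by rounding up. A minimal sketch, with a hypothetical helper name and the 90% protocol-adherence figure taken from the example:

```python
import math

def number_to_enroll(n_complete, proportion_following_protocol):
    """Participants to enroll so that n_complete are expected to provide complete data."""
    raw = n_complete / proportion_following_protocol
    # Round to 9 decimals first so floating-point noise cannot push an
    # exact whole-number result up to the next integer.
    return math.ceil(round(raw, 9))

print(number_to_enroll(31, 0.90))
```

The same helper applies to any of the sample size formulas in this module, since each one returns the number of participants needed with complete data.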

Sample Size for One Sample, Dichotomous Outcome

In studies where the plan is to perform a test of hypothesis comparing the proportion of successes in a dichotomous outcome variable in a single population to a known proportion, the hypotheses of interest are:

H 0 : p = p 0 versus H 1 : p ≠ p 0

where p 0 is the known proportion (e.g., a historical control). The formula for determining the sample size to ensure that the test has a specified power is given below:

\(n=\left(\dfrac{Z_{1-\alpha/2}+Z_{1-\beta}}{ES}\right)^{2}\)

where α is the selected level of significance and Z 1-α/2 is the value from the standard normal distribution holding 1- α/2 below it, 1- β is the selected power and Z 1-β is the value from the standard normal distribution holding 1- β below it, and ES is the effect size, defined as follows:

\(ES=\dfrac{|p_1-p_0|}{\sqrt{p_0(1-p_0)}}\)

where p 0 is the proportion under H 0 and p 1 is the proportion under H 1 . The numerator of the effect size, the absolute value of the difference in proportions |p 1 -p 0 |, again represents what is considered a clinically meaningful or practically important difference in proportions.

Example 8:

A recent report from the Framingham Heart Study indicated that 26% of people free of cardiovascular disease had elevated LDL cholesterol levels, defined as LDL > 159 mg/dL. 9 An investigator hypothesizes that a higher proportion of patients with a history of cardiovascular disease will have elevated LDL cholesterol. How many patients should be studied to ensure that the power of the test is 90% to detect a 5% difference in the proportion with elevated LDL cholesterol? A two sided test will be used with a 5% level of significance.

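We first compute the effect size, taking p 0 = 0.26 and p 1 = 0.26 + 0.05 = 0.31:

\(ES=\dfrac{|p_1-p_0|}{\sqrt{p_0(1-p_0)}}=\dfrac{|0.31-0.26|}{\sqrt{0.26(1-0.26)}}=0.11\)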

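We now substitute the effect size and the appropriate Z values for the selected α and power to compute the sample size.

\(n=\left(\dfrac{Z_{1-\alpha/2}+Z_{1-\beta}}{ES}\right)^{2}=\left(\dfrac{1.96+1.282}{0.11}\right)^{2}=868.6\)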

A sample of size n=869 will ensure that a two-sided test with α =0.05 has 90% power to detect a 5% difference in the proportion of patients with a history of cardiovascular disease who have an elevated LDL cholesterol level.
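The Example 8 calculation can be checked with a short script. This is a sketch under stated assumptions: the function name and the `es_decimals` parameter are illustrative, the Z values are the tabulated 1.96 and 1.282, and the alternative proportion is taken as 0.26 + 0.05 = 0.31.

```python
import math

def sample_size_one_proportion(p0, p1, z_alpha=1.96, z_beta=1.282, es_decimals=2):
    """Minimum n for a two-sided one-sample test of a proportion.

    es_decimals controls rounding of the effect size; None keeps it unrounded.
    """
    # Effect size: difference in proportions scaled by the SD under H0.
    es = abs(p1 - p0) / math.sqrt(p0 * (1 - p0))
    if es_decimals is not None:
        es = round(es, es_decimals)
    n_exact = ((z_alpha + z_beta) / es) ** 2
    return es, math.ceil(n_exact)

es, n = sample_size_one_proportion(p0=0.26, p1=0.31)
print(f"rounded ES = {es}, n = {n}")

es_raw, n_raw = sample_size_one_proportion(p0=0.26, p1=0.31, es_decimals=None)
print(f"unrounded ES = {es_raw:.4f}, n = {n_raw}")
```

With the effect size rounded to 0.11 the script reproduces n = 869. Keeping the unrounded effect size (about 0.114) gives a smaller n of 809, so rounding the effect size down, as the hand calculation does, errs on the conservative side.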

A medical device manufacturer produces implantable stents. During the manufacturing process, approximately 10% of the stents are deemed to be defective. The manufacturer wants to test whether the proportion of defective stents is more than 10%. If the process produces more than 15% defective stents, then corrective action must be taken. Therefore, the manufacturer wants the test to have 90% power to detect a difference in proportions of this magnitude. How many stents must be evaluated? For your computations, use a two-sided test with a 5% level of significance. (Do the computation yourself, before looking at the answer.)

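Sample Sizes for Two Independent Samples, Continuous Outcome

In studies where the plan is to perform a test of hypothesis comparing the means of a continuous outcome variable in two independent populations, the hypotheses of interest are:

H 0 : μ 1 = μ 2 versus H 1 : μ 1 ≠ μ 2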

where μ 1 and μ 2 are the means in the two comparison populations. The formula for determining the sample sizes to ensure that the test has a specified power is:

\(n_i=2\left(\dfrac{Z_{1-\alpha/2}+Z_{1-\beta}}{ES}\right)^{2}\)

where n i is the sample size required in each group (i=1,2), α is the selected level of significance and Z 1-α/2 is the value from the standard normal distribution holding 1- α/2 below it, and 1- β is the selected power and Z 1-β is the value from the standard normal distribution holding 1- β below it. ES is the effect size, defined as:

\(ES=\dfrac{|\mu_1-\mu_2|}{\sigma}\)

where | μ 1 - μ 2 | is the absolute value of the difference in means between the two groups expected under the alternative hypothesis, H 1 . σ is the standard deviation of the outcome of interest. Recall from the module on Hypothesis Testing that, when we performed tests of hypothesis comparing the means of two independent groups, we used Sp, the pooled estimate of the common standard deviation, as a measure of variability in the outcome.

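Sp is computed as follows, where s 1 and s 2 are the sample standard deviations and n 1 and n 2 the sample sizes in the two groups:

\(S_p=\sqrt{\dfrac{(n_1-1)s_1^{2}+(n_2-1)s_2^{2}}{n_1+n_2-2}}\)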

If data are available on variability of the outcome in each comparison group, then Sp can be computed and used to generate the sample sizes. However, it is more often the case that data on the variability of the outcome are available from only one group, usually the untreated (e.g., placebo control) or unexposed group. When planning a clinical trial to investigate a new drug or procedure, data are often available from other trials that may have involved a placebo or an active control group (i.e., a standard medication or treatment given for the condition under study). The standard deviation of the outcome variable measured in patients assigned to the placebo, control or unexposed group can be used to plan a future trial, as illustrated.

Note also that the formula shown above generates sample size estimates for samples of equal size. If a study is planned where different numbers of patients will be assigned or different numbers of patients will comprise the comparison groups, then alternative formulas can be used (see Howell 3 for more details).

Example 9:

An investigator is planning a clinical trial to evaluate the efficacy of a new drug designed to reduce systolic blood pressure. The plan is to enroll participants and to randomly assign them to receive either the new drug or a placebo. Systolic blood pressures will be measured in each participant after 12 weeks on the assigned treatment. Based on prior experience with similar trials, the investigator expects that 10% of all participants will be lost to follow-up or will drop out of the study. If the new drug shows a 5 unit reduction in mean systolic blood pressure, this would represent a clinically meaningful reduction. How many patients should be enrolled in the trial to ensure that the power of the test is 80% to detect this difference? A two-sided test will be used with a 5% level of significance.

In order to compute the effect size, an estimate of the variability in systolic blood pressures is needed. Analysis of data from the Framingham Heart Study showed that the standard deviation of systolic blood pressure was 19.0. This value can be used to plan the trial.

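The effect size is:

\(ES=\dfrac{|\mu_1-\mu_2|}{\sigma}=\dfrac{5}{19.0}=0.26\)

Substituting the effect size and the Z values for α = 0.05 and 80% power gives the sample size in each group:

\(n_i=2\left(\dfrac{Z_{1-\alpha/2}+Z_{1-\beta}}{ES}\right)^{2}=2\left(\dfrac{1.96+0.84}{0.26}\right)^{2}=232\)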

Samples of size n 1 = 232 and n 2 = 232 will ensure that the test of hypothesis will have 80% power to detect a 5 unit difference in mean systolic blood pressures in patients receiving the new drug as compared to patients receiving the placebo. However, the investigators hypothesized a 10% attrition rate (in both groups), so to ensure that 232 participants in each group have complete data they need to allow for attrition.

N = 232/0.90 = 257.8, which is rounded up to 258 per group.

The investigator must enroll 516 participants, to be randomly assigned so that 258 receive the new drug and 258 receive the placebo.
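The blood pressure trial calculation, including the attrition adjustment, can be sketched as follows. The function name is illustrative, the Z values are the tabulated 1.96 and 0.84 quoted in the text, and the effect size is rounded to two decimals as in the hand calculation. These choices matter here: using more decimal places for the Z values or the effect size shifts the result by a few participants.

```python
import math

def sample_size_two_means(difference, sigma, z_alpha=1.96, z_beta=0.84):
    """Minimum n per group for a two-sided test comparing two independent means."""
    # Effect size: expected difference in means in standard deviation units,
    # rounded to two decimals to mirror the hand calculation (an assumption).
    es = round(abs(difference) / sigma, 2)
    n_per_group = math.ceil(2 * ((z_alpha + z_beta) / es) ** 2)
    return es, n_per_group

es, n_per_group = sample_size_two_means(difference=5, sigma=19.0)

# Inflate each group for the anticipated 10% attrition.
retention = 0.90
enroll_per_group = math.ceil(n_per_group / retention)

print(f"ES = {es}")
print(f"complete data needed per group = {n_per_group}")
print(f"enroll per group = {enroll_per_group}, total = {2 * enroll_per_group}")
```

The script reports 232 participants with complete data per group and 258 to enroll per group, which corresponds to 516 participants across both arms.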

An investigator is planning a study to assess the association between alcohol consumption and grade point average among college seniors. The plan is to categorize students as heavy drinkers or not using 5 or more drinks on a typical drinking day as the criterion for heavy drinking. Mean grade point averages will be compared between students classified as heavy drinkers versus not using a two independent samples test of means. The standard deviation in grade point averages is assumed to be 0.42 and a meaningful difference in grade point averages (relative to drinking status) is 0.25 units. How many college seniors should be enrolled in the study to ensure that the power of the test is 80% to detect a 0.25 unit difference in mean grade point averages? Use a two-sided test with a 5% level of significance.


Sample Size for Matched Samples, Continuous Outcome

In studies where the plan is to perform a test of hypothesis on the mean difference in a continuous outcome variable based on matched data, the hypotheses of interest are:

H 0 : μ d = 0 versus H 1 : μ d ≠ 0

where μ d is the mean difference in the population. The formula for determining the sample size to ensure that the test has a specified power is given below:

\(n=\left(\dfrac{Z_{1-\alpha/2}+Z_{1-\beta}}{ES}\right)^{2}\)

where α is the selected level of significance and Z 1-α/2 is the value from the standard normal distribution holding 1- α/2 below it, 1- β is the selected power and Z 1-β is the value from the standard normal distribution holding 1- β below it, and ES is the effect size, defined as follows:

\(ES=\dfrac{\mu_d}{\sigma_d}\)

where μ d is the mean difference expected under the alternative hypothesis, H 1 , and σ d is the standard deviation of the difference in the outcome (e.g., the difference based on measurements over time or the difference between matched pairs).

Example 10: