Essay on History of Computer

Students are often asked to write an essay on History of Computer in their schools and colleges. And if you’re also looking for the same, we have created 100-word, 250-word, and 500-word essays on the topic.

Let’s take a look…

100 Words Essay on History of Computer

Early Beginnings

Computers didn’t always look like the laptops or smartphones we use today. One of the earliest calculating tools was the abacus, which is often dated to around 2400 BC. It used beads to help people calculate.

First Mechanical Computer

In 1822, Charles Babbage, a British mathematician, designed a mechanical computer called the “Difference Engine.” It was supposed to perform mathematical calculations.

The Birth of Modern Computers

The first modern electronic computers were built in the late 1930s and 1940s. They were huge, and a single machine could fill an entire room. These computers used vacuum tubes to process information.

Personal Computers

In the 1970s and early 1980s, companies like Apple and IBM started making personal computers. This made it possible for people to have computers at home.

Remember, computers have come a long way and continue to evolve!


250 Words Essay on History of Computer

Introduction

The history of computers is a fascinating journey, tracing back several centuries. It illustrates human ingenuity and evolution from primitive calculators to complex computing systems.

Early Computers

The concept of computing dates back to antiquity. The abacus, often dated to around 2400 BC, is frequently described as the earliest computing device. In the 19th century, Charles Babbage designed the Analytical Engine, a general-purpose mechanical computer that was to take its instructions from punched cards.

Birth of Modern Computers

The 20th century heralded the era of modern computing. The first programmable computer, the Z3, was built by Konrad Zuse in 1941. However, it was the Electronic Numerical Integrator and Computer (ENIAC), completed in 1945 and publicly unveiled in 1946, that truly revolutionized computing with its electronic technology.

Personal Computers and the Internet

The 1970s and 1980s saw the advent of personal computers (PCs). The Apple II, introduced in 1977, and IBM’s PC, launched in 1981, brought computers to the masses. In the 1990s, the World Wide Web opened the internet to the general public, transforming computers into communication devices and information gateways.

Present and Future

Today, computers have become an integral part of our lives, from smartphones to supercomputers. They are now moving towards quantum computing, promising unprecedented computational power.

In summary, the history of computers is a testament to human innovation, evolving from simple counting devices to powerful tools that shape our lives. As we look forward to the future, the potential for further advancements in computing technology is limitless.

500 Words Essay on History of Computer

The Dawn of Computing

The history of computers dates back to antiquity with devices like the abacus, used for calculations. However, the concept of a programmable computer was first worked out in the 19th century by Charles Babbage, an English mathematician. His design, known as the Analytical Engine, is considered the first design for a general-purpose computer, although it was never built.

The first half of the 20th century saw the development of electro-mechanical computers. The most notable was the Mark I, developed by Howard Aiken at Harvard University in 1944. It was the first machine to automatically execute long computations.

During the same period, the ENIAC (Electronic Numerical Integrator and Computer) was developed by John Mauchly and J. Presper Eckert at the University of Pennsylvania. Completed in 1945, it was the first general-purpose electronic computer. However, it was not a stored-program machine: operators programmed it by setting switches and rewiring cables rather than by loading instructions into memory.

The Era of Transistors

The late 1940s marked the invention of the transistor, which revolutionized the computer industry. Transistors were faster, smaller, and more reliable than their vacuum tube counterparts. The first transistorized computer was built at the University of Manchester in 1953.

The 1950s and 1960s saw the development of mainframe computers, like IBM’s 700 series, which dominated the computing world for the next two decades. These machines were large and expensive, but they allowed multiple users to access the computer simultaneously through terminals.

Microprocessors and Personal Computers

The invention of the microprocessor in the 1970s marked the beginning of the personal computer era. The Intel 4004, released in 1971, was the first commercially available microprocessor. This development led to the creation of small, relatively inexpensive machines like the Apple II and the IBM PC, which made computing accessible to individuals and small businesses.

The Internet and Beyond

The 1980s and 1990s brought about the rise of the internet and the World Wide Web, expanding the use of computers into every aspect of modern life. The advent of graphical user interfaces, such as Microsoft’s Windows and Apple’s Mac OS, made computers even more user-friendly.

Today, computers have become ubiquitous in our society. They are embedded in everything from our phones to our cars, and they play a critical role in fields ranging from science to entertainment. The history of computers is a story of continuous innovation and progress, and it is clear that this trend will continue into the foreseeable future.


History of computers: A brief timeline

The history of computers began with primitive designs in the early 19th century and went on to change the world during the 20th century.


The history of computers goes back over 200 years. First theorized by mathematicians and entrepreneurs, mechanical calculating machines were designed and built during the 19th century to solve increasingly complex number-crunching challenges. Advances in technology enabled ever more complex computers by the early 20th century, and computers became larger and more powerful.

Today, computers are almost unrecognizable from designs of the 19th century, such as Charles Babbage's Analytical Engine, or even from the huge computers of the 20th century that occupied whole rooms, such as the Electronic Numerical Integrator and Computer (ENIAC).

Here's a brief history of computers, from their primitive number-crunching origins to the powerful modern-day machines that surf the Internet, run games and stream multimedia.

19th century

1801: Joseph Marie Jacquard, a French merchant and inventor, invents a loom that uses punched wooden cards to automatically weave fabric designs. Early computers would use similar punch cards.

1821: English mathematician Charles Babbage conceives of a steam-driven calculating machine that would be able to compute tables of numbers. Funded by the British government, the project, called the "Difference Engine," fails due to the lack of technology at the time, according to the University of Minnesota.

1843: Ada Lovelace, an English mathematician and the daughter of poet Lord Byron, writes the world's first computer program. According to Anna Siffert, a professor of theoretical mathematics at the University of Münster in Germany, Lovelace writes the first program while translating a paper on Babbage's Analytical Engine from French into English. "She also provides her own comments on the text. Her annotations, simply called 'notes,' turn out to be three times as long as the actual transcript," Siffert wrote in an article for The Max Planck Society. "Lovelace also adds a step-by-step description for computation of Bernoulli numbers with Babbage's machine, basically an algorithm, which, in effect, makes her the world's first computer programmer." Bernoulli numbers are a sequence of rational numbers often used in computation.

1853: Swedish inventor Per Georg Scheutz and his son Edvard design the world's first printing calculator. The machine is significant for being the first to "compute tabular differences and print the results," according to Uta C. Merzbach's book, "Georg Scheutz and the First Printing Calculator" (Smithsonian Institution Press, 1977).

1890: Herman Hollerith designs a punch-card system to help calculate the 1890 U.S. Census. The machine saves the government several years of calculations, and the U.S. taxpayer approximately $5 million, according to Columbia University. Hollerith later establishes a company that will eventually become International Business Machines Corporation (IBM).
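
The Lovelace entry above mentions a step-by-step computation of Bernoulli numbers. As a rough modern illustration, and not a reconstruction of the procedure in her notes, the sketch below computes the first few Bernoulli numbers in Python with a standard textbook recurrence. The function name `bernoulli_numbers` and the sign convention B_1 = -1/2 are choices made here for the example.

```python
from fractions import Fraction
from math import comb  # available in Python 3.8 and later


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions (convention: B_1 = -1/2).

    Uses the recurrence B_m = -1/(m+1) * sum_{k<m} C(m+1, k) * B_k,
    starting from B_0 = 1.
    """
    b = [Fraction(1)]
    for m in range(1, n + 1):
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))
    return b


if __name__ == "__main__":
    for index, value in enumerate(bernoulli_numbers(8)):
        print(f"B_{index} = {value}")
```

Exact fractions are used instead of floating-point numbers because Bernoulli numbers are rational, and rounding errors would otherwise build up as the recurrence proceeds.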

Early 20th century

1931: At the Massachusetts Institute of Technology (MIT), Vannevar Bush invents and builds the Differential Analyzer, the first large-scale automatic general-purpose mechanical analog computer, according to Stanford University.

1936: Alan Turing, a British scientist and mathematician, presents the principle of a universal machine, later called the Turing machine, in a paper called "On Computable Numbers…" according to Chris Bernhardt's book "Turing's Vision" (The MIT Press, 2017). Turing machines are capable of computing anything that is computable. The central concept of the modern computer is based on his ideas. Turing is later involved in the development of the Turing-Welchman Bombe, an electro-mechanical device designed to decipher Nazi codes during World War II, according to the UK's National Museum of Computing.

1937: John Vincent Atanasoff, a professor of physics and mathematics at Iowa State University, submits a grant proposal to build the first electric-only computer, without using gears, cams, belts or shafts.

1939: David Packard and Bill Hewlett found the Hewlett-Packard Company in Palo Alto, California. The pair decide the name of their new company by the toss of a coin, and Hewlett-Packard's first headquarters are in Packard's garage, according to MIT.

1941: German inventor and engineer Konrad Zuse completes his Z3 machine, the world's earliest digital computer, according to Gerard O'Regan's book "A Brief History of Computing" (Springer, 2021). The machine is destroyed during a bombing raid on Berlin during World War II. Zuse flees the German capital after the defeat of Nazi Germany and later releases the world's first commercial digital computer, the Z4, in 1950, according to O'Regan.

1941: Atanasoff and his graduate student, Clifford Berry, design the first digital electronic computer in the U.S., called the Atanasoff-Berry Computer (ABC). This marks the first time a computer is able to store information in its main memory, and the machine is capable of performing one operation every 15 seconds, according to the book "Birthing the Computer" (Cambridge Scholars Publishing, 2016).

1945: Two professors at the University of Pennsylvania, John Mauchly and J. Presper Eckert, design and build the Electronic Numerical Integrator and Computer (ENIAC). The machine is the first "automatic, general-purpose, electronic, decimal, digital computer," according to Edwin D. Reilly's book "Milestones in Computer Science and Information Technology" (Greenwood Press, 2003).

1946: Mauchly and Eckert leave the University of Pennsylvania and receive funding from the Census Bureau to build the UNIVAC, the first commercial computer for business and government applications.

1947: William Shockley, John Bardeen and Walter Brattain of Bell Laboratories invent the transistor. They discover how to make an electric switch with solid materials and without the need for a vacuum.

1949: A team at the University of Cambridge develops the Electronic Delay Storage Automatic Calculator (EDSAC), "the first practical stored-program computer," according to O'Regan. "EDSAC ran its first program in May 1949 when it calculated a table of squares and a list of prime numbers," O'Regan wrote. In November 1949, scientists with the Council for Scientific and Industrial Research (CSIR), now called CSIRO, build Australia's first digital computer, called the Council for Scientific and Industrial Research Automatic Computer (CSIRAC). CSIRAC is the first digital computer in the world to play music, according to O'Regan.

Late 20th century

1953: Grace Hopper develops the first computer language, which eventually becomes known as COBOL (COmmon Business-Oriented Language), according to the National Museum of American History. Hopper is later dubbed the "First Lady of Software" in her posthumous Presidential Medal of Freedom citation. Thomas Johnson Watson Jr., son of IBM CEO Thomas Johnson Watson Sr., conceives the IBM 701 EDPM to help the United Nations keep tabs on Korea during the war.

1954: John Backus and his team of programmers at IBM publish a paper describing their newly created FORTRAN programming language, an acronym for FORmula TRANslation, according to MIT.

1958: Jack Kilby and Robert Noyce unveil the integrated circuit, known as the computer chip. Kilby is later awarded the Nobel Prize in Physics for his work.

1968: Douglas Engelbart reveals a prototype of the modern computer at the Fall Joint Computer Conference, San Francisco. His presentation, called "A Research Center for Augmenting Human Intellect," includes a live demonstration of his computer, including a mouse and a graphical user interface (GUI), according to the Doug Engelbart Institute. This marks the development of the computer from a specialized machine for academics to a technology that is more accessible to the general public.

1969: Ken Thompson, Dennis Ritchie and a group of other developers at Bell Labs produce UNIX, an operating system that made "large-scale networking of diverse computing systems, and the internet, practical," according to Bell Labs. The team behind UNIX continues to develop the operating system using the C programming language, which they also optimize.

1970: The newly formed Intel unveils the Intel 1103, the first Dynamic Random-Access Memory (DRAM) chip.

1971: A team of IBM engineers led by Alan Shugart invents the "floppy disk," enabling data to be shared among different computers.

1972: Ralph Baer, a German-American engineer, releases Magnavox Odyssey, the world's first home game console, in September 1972, according to the Computer Museum of America. Months later, entrepreneur Nolan Bushnell and engineer Al Alcorn with Atari release Pong, the world's first commercially successful video game.

1973: Robert Metcalfe, a member of the research staff for Xerox, develops Ethernet for connecting multiple computers and other hardware.

1975: The cover of the January issue of "Popular Electronics" highlights the Altair 8800 as the "world's first minicomputer kit to rival commercial models." After seeing the magazine issue, two "computer geeks," Paul Allen and Bill Gates, offer to write software for the Altair, using the new BASIC language. On April 4, after the success of this first endeavor, the two childhood friends form their own software company, Microsoft.

1976: Steve Jobs and Steve Wozniak co-found Apple Computer on April Fool's Day. They unveil Apple I, the first computer with a single-circuit board and ROM (Read Only Memory), according to MIT.

1977: The Commodore Personal Electronic Transactor (PET) is released onto the home computer market, featuring an MOS Technology 8-bit 6502 microprocessor, which controls the screen, keyboard and cassette player. The PET is especially successful in the education market, according to O'Regan.

1977: Radio Shack begins its initial production run of 3,000 TRS-80 Model 1 computers, disparagingly known as the "Trash 80," priced at $599, according to the National Museum of American History. Within a year, the company takes 250,000 orders for the computer, according to the book "How TRS-80 Enthusiasts Helped Spark the PC Revolution" (The Seeker Books, 2007).

1977: The first West Coast Computer Faire is held in San Francisco. Jobs and Wozniak present the Apple II computer at the Faire, which includes color graphics and features an audio cassette drive for storage.

1979: VisiCalc, the first computerized spreadsheet program, is introduced.

1979: MicroPro International, founded by software engineer Seymour Rubenstein, releases WordStar, the world's first commercially successful word processor. WordStar is programmed by Rob Barnaby, and includes 137,000 lines of code, according to Matthew G. Kirschenbaum's book "Track Changes: A Literary History of Word Processing" (Harvard University Press, 2016).

1981: "Acorn," IBM's first personal computer, is released onto the market at a price point of $1,565, according to IBM. Acorn uses the MS-DOS operating system from Microsoft. Optional features include a display, printer, two diskette drives, extra memory, a game adapter and more.

1983: The Apple Lisa, standing for "Local Integrated Software Architecture" but also the name of Steve Jobs' daughter, according to the National Museum of American History (NMAH), is the first personal computer to feature a GUI. The machine also includes a drop-down menu and icons. Also this year, the Gavilan SC is released and is the first portable computer with a flip-form design and the very first to be sold as a "laptop."

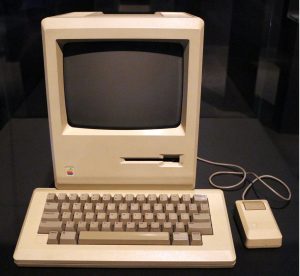

1984: The Apple Macintosh is announced to the world during a Super Bowl advertisement. The Macintosh is launched with a retail price of $2,500, according to the NMAH.

1985: As a response to the Apple Lisa's GUI, Microsoft releases Windows in November 1985, the Guardian reported. Meanwhile, Commodore announces the Amiga 1000.

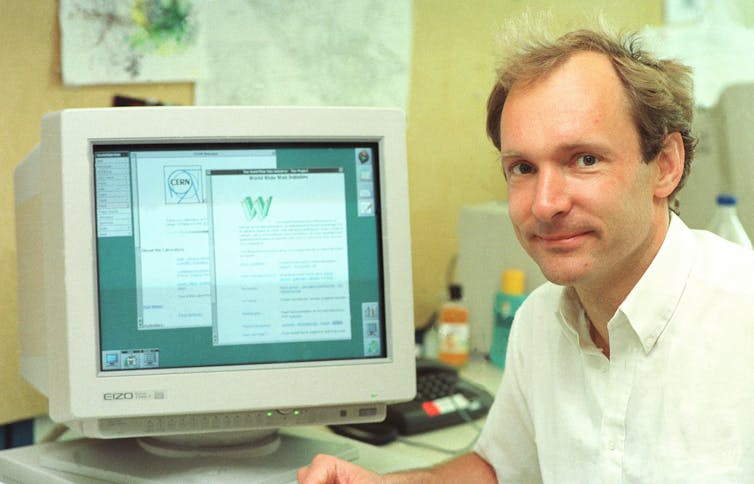

1989: Tim Berners-Lee, a British researcher at the European Organization for Nuclear Research (CERN), submits his proposal for what would become the World Wide Web. His paper details his ideas for HyperText Markup Language (HTML), the building block of the Web.

1993: The Pentium microprocessor advances the use of graphics and music on PCs.

1996: Sergey Brin and Larry Page develop the Google search engine at Stanford University.

1997: Microsoft invests $150 million in Apple, which at the time is struggling financially. This investment ends an ongoing court case in which Apple accused Microsoft of copying its operating system.

1999: Wi-Fi, the abbreviated term for "wireless fidelity," is developed, initially covering a distance of up to 300 feet (91 meters), Wired reported.

21st century

2001: Mac OS X, later renamed OS X then simply macOS, is released by Apple as the successor to its standard Mac Operating System. OS X goes through 16 different versions, each with "10" as its title, and the first nine iterations are nicknamed after big cats, with the first being codenamed "Cheetah," TechRadar reported.

2003: AMD's Athlon 64, the first 64-bit processor for personal computers, is released to customers.

2004: The Mozilla Corporation launches Mozilla Firefox 1.0. The Web browser is one of the first major challenges to Internet Explorer, owned by Microsoft. During its first five years, Firefox exceeds a billion downloads by users, according to the Web Design Museum.

2005: Google buys Android, a Linux-based mobile phone operating system.

2006: The MacBook Pro from Apple hits the shelves. The Pro is the company's first Intel-based, dual-core mobile computer.

2009: Microsoft launches Windows 7 on July 22. The new operating system features the ability to pin applications to the taskbar, scatter windows away by shaking another window, easy-to-access jumplists, easier previews of tiles and more, TechRadar reported.

2010: The iPad, Apple's flagship handheld tablet, is unveiled.

2011: Google releases the Chromebook, which runs on Google Chrome OS.

2015: Apple releases the Apple Watch. Microsoft releases Windows 10.

2016: The first reprogrammable quantum computer was created. "Until now, there hasn't been any quantum-computing platform that had the capability to program new algorithms into their system. They're usually each tailored to attack a particular algorithm," said study lead author Shantanu Debnath, a quantum physicist and optical engineer at the University of Maryland, College Park.

2017: The Defense Advanced Research Projects Agency (DARPA) is developing a new "Molecular Informatics" program that uses molecules as computers. "Chemistry offers a rich set of properties that we may be able to harness for rapid, scalable information storage and processing," Anne Fischer, program manager in DARPA's Defense Sciences Office, said in a statement. "Millions of molecules exist, and each molecule has a unique three-dimensional atomic structure as well as variables such as shape, size, or even color. This richness provides a vast design space for exploring novel and multi-value ways to encode and process data beyond the 0s and 1s of current logic-based, digital architectures."

2019: A team at Google became the first to demonstrate quantum supremacy, creating a quantum computer that could feasibly outperform the most powerful classical computer, albeit for a very specific problem with no practical real-world application. They described the computer, dubbed "Sycamore," in a paper that same year in the journal Nature. Achieving quantum advantage, in which a quantum computer solves a problem with real-world applications faster than the most powerful classical computer, is still a ways off.

2022: The first exascale supercomputer, and the world's fastest, Frontier, went online at the Oak Ridge Leadership Computing Facility (OLCF) in Tennessee. Built by Hewlett Packard Enterprise (HPE) at the cost of $600 million, Frontier uses nearly 10,000 AMD EPYC 7A53 64-core CPUs alongside nearly 40,000 AMD Radeon Instinct MI250X GPUs. This machine ushered in the era of exascale computing, which refers to systems that can reach more than one exaFLOPS, a measure of performance equal to a quintillion floating-point operations per second. At the time of writing, only one machine, Frontier, is capable of reaching such levels of performance. It is currently being used as a tool to aid scientific discovery.

What is the first computer in history?

Charles Babbage's Difference Engine, designed in the 1820s, is considered the first "mechanical" computer in history, according to the Science Museum in the U.K. Powered by steam with a hand crank, the machine calculated a series of values and printed the results in a table.

What are the five generations of computing?

The "five generations of computing" is a framework for assessing the entire history of computing and the key technological advancements throughout it.

The first generation, spanning the 1940s to the 1950s, covered vacuum tube-based machines. The second then progressed to incorporate transistor-based computing between the 50s and the 60s. In the 60s and 70s, the third generation gave rise to integrated circuit-based computing. We are now in between the fourth and fifth generations of computing, which are microprocessor-based and AI-based computing.

What is the most powerful computer in the world?

As of November 2023, the most powerful computer in the world is the Frontier supercomputer. The machine, which can reach a performance level of up to 1.102 exaFLOPS, ushered in the age of exascale computing in 2022 when it went online at Tennessee's Oak Ridge Leadership Computing Facility (OLCF).

There is, however, a potentially more powerful supercomputer waiting in the wings in the form of the Aurora supercomputer, which is housed at the Argonne National Laboratory (ANL) outside of Chicago. Aurora went online in November 2023. Right now, it lags far behind Frontier, with performance levels of just 585.34 petaFLOPS (roughly half the performance of Frontier), although it's still not finished. When work is completed, the supercomputer is expected to reach performance levels higher than 2 exaFLOPS.
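
The "roughly half" comparison above mixes two units, exaFLOPS and petaFLOPS. A minimal sketch of the conversion in Python, using only the two figures quoted in this answer (the variable names are illustrative):

```python
PETA_PER_EXA = 1000  # 1 exaFLOPS = 1,000 petaFLOPS

frontier_exaflops = 1.102   # Frontier figure quoted above
aurora_petaflops = 585.34   # Aurora figure quoted above (November 2023)

# Put both machines on the same scale before comparing them.
frontier_petaflops = frontier_exaflops * PETA_PER_EXA
ratio = aurora_petaflops / frontier_petaflops

print(f"Frontier: {frontier_petaflops:.0f} petaFLOPS")
print(f"Aurora reaches {ratio:.0%} of Frontier's performance")
```

With these inputs the ratio comes out at a little over one half, which matches the description in the text.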

What was the first killer app?

Killer apps are widely understood to be those so essential that they are core to the technology they run on. There have been many through the years, from Word for Windows in 1989 to iTunes in 2001 to social media apps like WhatsApp in more recent years.

Several pieces of software may stake a claim to be the first killer app, but there is a broad consensus that VisiCalc, a spreadsheet program published by the company that became VisiCorp and originally released for the Apple II in 1979, holds that title. Steve Jobs even credited this app with propelling the Apple II to the success it became, according to co-creator Dan Bricklin.

Additional resources

- Fortune: A Look Back At 40 Years of Apple

- The New Yorker: The First Windows

- "A Brief History of Computing" by Gerard O'Regan (Springer, 2021)


Timothy is Editor in Chief of print and digital magazines All About History and History of War. He has previously worked on sister magazine All About Space, as well as photography and creative brands including Digital Photographer and 3D Artist. He has also written for How It Works magazine and several history bookazines, and has a degree in English Literature from Bath Spa University.



Modern (1940’s-present)

66 History of Computers

Chandler Little and Ben Greene

Introduction

Modern technology first started evolving when electricity started to be used more often in everyday life. One of the biggest inventions of the 20th century was the computer, and it has gone through many changes and improvements since its creation. The last two decades have seen more advancement in computing than in almost any other technology. Computers have changed almost every level of learning in our lives, and it looks like their impact will only keep growing through the coming decades. Computers have become a focal point of everyday life in today’s society and will remain so for the foreseeable future. One important company that has shaped the computer industry is Apple, Inc., which was founded in the final quarter of the 20th century. Apple is one of the primary computer providers in American society, so a history of the company is included in this chapter to give specific information about a business that has propelled the growth of computer popularity.

The Evolution of Computers

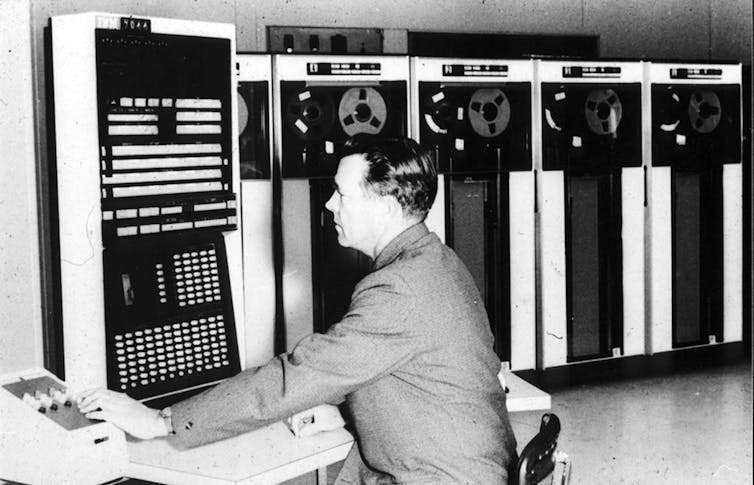

Computers have come a long way since their creation. Charles Babbage designed the first mechanical computer in 1822. The first electronic computers, which followed in the 1940s, were built with vacuum tubes and could weigh 700 pounds or more, far more than computers today. Computers have vastly decreased in size thanks to the invention of the transistor in 1947, which revolutionized computing by replacing bulky vacuum tubes with smaller components that made computers more compact and more reliable. This started an era of rapid technological advancement, leading to the development of integrated circuits, microprocessors, and eventually the smaller, lighter personal computers that have become indispensable in modern society. For example, most laptops today weigh between two and eight pounds. A picture of one of the first computers can be seen in Figure 1.

Data storage has changed just as dramatically. The very first hard drive was created in 1956; it had a capacity of 5MB and weighed 550 pounds. Today hard drives are much smaller, weighing from a couple of ounces to a couple of pounds. As files have become more complex, the need for storage space has increased drastically, and a single game can now take up to 100GB. For reference, 5MB is 0.005GB, so one such game is 20,000 times the size of that first drive's entire capacity. Hard drives today come in sizes of 10TB and larger, where a TB is 1,000GB. The evolution of the hard drive can be seen in Figure 2.

As the world of computers keeps progressing, the general trend is to make machines smaller while still delivering a generational step in performance. These improvements shorten the daily tasks of many users (like teachers, researchers, and doctors), making their work quicker and easier to accomplish. New software is also constantly being developed, and as a result we are seeing strides in staying connected to others through social media, messaging platforms, and other means of communication. The downside of this growing dependence on computers is that technological failures or damage can create major setbacks on any given day.
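
The storage comparison above (5MB in 1956 versus 100GB games and 10TB drives) can be checked with a few lines of arithmetic. The sketch below is in Python and assumes the decimal convention used in the text, where 1GB is 1,000MB and 1TB is 1,000GB; the variable names are illustrative.

```python
MB_PER_GB = 1000  # decimal convention, matching "a TB is 1000GB" in the text
GB_PER_TB = 1000

first_drive_mb = 5                           # the 1956 hard drive
game_mb = 100 * MB_PER_GB                    # a 100GB game
modern_drive_mb = 10 * GB_PER_TB * MB_PER_GB  # a 10TB drive

# Express the 1956 drive in gigabytes, as the text does.
first_drive_gb = first_drive_mb / MB_PER_GB

# How many 1956 drives would it take to hold each modern item?
game_vs_first = game_mb // first_drive_mb
drive_vs_first = modern_drive_mb // first_drive_mb

print(f"5MB = {first_drive_gb}GB")
print(f"One 100GB game = {game_vs_first:,} first-generation drives")
print(f"One 10TB drive = {drive_vs_first:,} first-generation drives")
```

Working in whole megabytes keeps the ratios as exact integers, so the comparison does not depend on floating-point rounding.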

Relation to STS

The development of computers as a prevalent form of technology has had a profound impact on society as a whole in the United States. Computers are now ubiquitous, playing a crucial role in various aspects of everyday life. They are utilized in most classrooms across the country, facilitating learning and enhancing educational experiences for students of all ages. In the workplace, computers have revolutionized business operations, streamlining processes, increasing efficiency, and enabling remote work capabilities. Additionally, computers have become indispensable tools in households across America. According to the U.S. Census Bureau’s American Community Survey, a staggering 92% of households reported having at least one type of computer (2021). This statistic underscores the widespread integration of computers into the fabric of American life. The impact of computers extends beyond mere accessibility. They have transformed communication, allowing people to connect instantaneously through email, social media, and video conferencing platforms. Additionally, computers have revolutionized entertainment, providing access to a vast array of digital content, from streaming services to video games.

Overall, the pervasive presence of computers underscores their monumental impact on Americans’ lives, shaping how we learn, work, communicate, and entertain ourselves. As technology continues to evolve, the influence of computers on society is expected to grow even further, driving transformative changes across various domains.

Missing Voices in Computer History

The evolution of computers has been happening at a fast rate, and when this happens people’s contributions are left out. The main demographic in computers that are left out are women. Grace Hopper is one of the most influential people in the computer spectrum, but her work is not shown in the classroom. In the 1950s, Grace Hopper was a senior mathematician on her team for UNIVAC (UNIVersal Automatic Computing INC). Here she created the very first compiler (Cassel, 2016). This was a massive accomplishment for anyone in the field of computing because it allowed the idea that programming languages are not tied to a specific computer, but can be used on any computer. This single feature in computers was one of the main driving forces for computing to become so robust and powerful that it is today. Grace Hopper’s work needs to be talked about in classrooms not only just in engineering courses, but as well as general classes. Students need to hear that a woman was the driving force behind the evolution of computing. By talking about this, it may urge more women to join the computing field because right now only 25% of jobs in the computing sector are held by women (Cassel, 2016). With a more diverse workforce in computing, we can see the creation of new ideas and features that were never thought of before.

Throughout the evolution of computers, many people have been left out of the record of the hardware and algorithms they helped create. With the push toward gender equality in the coming years, the gap between the recognition given to women’s contributions and the recognition given to men’s should shrink to a negligible amount. As computers continue to evolve, the field of STS will need to evolve with them to adapt to changes in technology. If it does not, some of the great creations in the computer sector will be neglected. The most notable example is VR (virtual reality), with its high entry-level price and the motion sickness that can accompany it.

History of Apple, Inc.

In American society today, two primary operating systems dominate: Windows and macOS. macOS is the operating system for Apple computers, which held an estimated 16.1% of the U.S. personal computer market in the fourth quarter of 2023, according to Gartner (2024). Apple Inc. was founded on April 1, 1976, by Steve Jobs and Steve Wozniak, who wanted to make computers more user-friendly and accessible to individuals. Their vision was to revolutionize the computer industry. They started by building the Apple I in Jobs’ garage, which led to the introduction of the Apple II, a machine that featured color graphics and propelled the company’s growth. However, internal conflicts and the departure of key figures like Jobs and Wozniak led to a period of struggle in the 1980s and early 1990s. Jobs returned to Apple in 1997 and initiated transformative changes, including an alliance with Microsoft and the launch of groundbreaking products like the iBook and iPod. Apple continued to expand its product line, and the introduction of the iPhone in 2007 marked a new era of success, propelling Apple to become the second most valuable company in the world. Apple has maintained a strong position in the technology market throughout this period by continuously improving its long-standing product, the Macintosh computer, and by adapting to new technological changes.

Apple’s Macintosh computers have changed considerably throughout the company’s history. The Macintosh 128K (figure 3), released on January 24, 1984, was the very first Macintosh. It had a 9-inch black-and-white display and 128KB of RAM (computer memory), and it ran the first version of the Macintosh system software. The next important release came in April 1995 with several variations of the Macintosh Performa, which had 500MB to 1GB of hard-drive storage. Interestingly, the multiple models of this computer ended up competing with each other and were discontinued. This led to the iMac G3 in August 1998, which sported a futuristic design with multiple color options for the back of the computer as well as USB ports, 4GB of hard-drive storage, and built-in speakers. The iMac G3 shipped with Mac OS 8. In 2007, the iMac went through a major redesign, with melded glass and aluminum as the materials and a widescreen display. Newer Macs continue to be built slimmer, with faster processors, better displays, and more storage (Mingis and Moreau, 2021).

Missing Voices within Apple

In a male-dominated field, women’s contributions to technological change can easily be drowned out. Within Apple specifically, several women have made a large difference in the company’s progress. Susan Kare, for example, was the first designer of Apple’s icons, such as the stopwatch and paintbrush, which helped Apple establish the Mac. Another woman who made a large contribution to Apple was Joanna Hoffman. She was the fifth person to join the Macintosh team in 1980 and “wrote the first draft of the User Interface Guidelines for the Mac and figured out how to pitch the computer at the education markets” early in Apple’s existence (Evans, 2016).

Throughout this chapter, the importance of computers as a catalyst for advancement in our society is evident. Computers have clearly evolved in many ways since their inception, and people from many different backgrounds have played an important part in that evolution.

How has the advancement in technology improved your life?

A brief history of computers – unipi.it. (n.d.). Retrieved November 7, 2022, from https://digitaltools.labcd.unipi.it/wp-content/uploads/2021/05/A-brief-history-of-computers.pdf

Cassel, L. (2016, December 15). Op-Ed: 25 Years After Computing Pioneer Grace Hopper’s Death, We Still Have Work to Do. USNEWS.com. Accessed via Nexis Uni database from Clemson University.

Evans, J. (2016, March 8). 10 Women Who Made Apple Great. Computerworld. https://www.computerworld.com/article/3041874/10-women-who-made-apple-great.html

Gartner. (2024, January 10). Gartner Says Worldwide PC Shipments Increased 0.3% in Fourth Quarter of 2023 but Declined 14.8% for the Year. Gartner. https://www.gartner.com/en/newsroom/press-releases/01-10-2024-gartner-says-worldwide-pc-shipments-increased-zero-point-three-percent-in-fourth-quarter-of-2023-but-declined-fourteen-point-eight-percent-for-the-year#:~:text=HP%20maintained%20the%20top%20spot,share%20(see%20Table%202).&text=HP%20Inc.,-4%2C665

Kleiman, K. & Saklayen, N. (2018, April 19). These 6 pioneering women helped create modern computers. ideas.ted.com. Retrieved September 26, 2021, from https://ideas.ted.com/how-i-discovered-six-pioneering-women-who-helped-create-modern-computers-and-why-we-should-never-forget-them/

Mingis, K. and Moreau, S. (2021, April 28). The Evolution of the Macintosh – and the Imac. Computerworld. https://www.computerworld.com/article/1617841/evolution-of-macintosh-and-imac.html

Richardson, A. (2023, April). The Founding of Apple Computer, Inc. Library of Congress. https://guides.loc.gov/this-month-in-business-history/april/apple-computer-founded

Thompson, C. (2019, June 1). The gendered history of human computers. Smithsonian.com. Retrieved September 26, 2021, from https://www.smithsonianmag.com/science-nature/history-human-computers-180972202/

Women in Computing and Women in Engineering honored for promoting girls in STEM. (2017, May 26). US Official News. Accessed via Nexis Uni database from Clemson University.

Zimmermann, K. A. (2017, September 7). History of computers: A brief timeline. LiveScience. Retrieved September 26, 2021, from https://www.livescience.com/20718-computer-history.html

“Gene Amdahl’s first computer.” by Erik Pitti is licensed under CC BY 2.0

“First hard drives” by gabrielsaldana is licensed under CC BY 2.0

Sailko. (2017). Neo Preistoria Exhibition (Milan 2016). Wikipedia. https://en.wikipedia.org/wiki/Macintosh_128K#/media/File:Computer_macintosh_128k,_1984_(all_about_Apple_onlus).jpg

AI ACKNOWLEDGMENT

I acknowledge the use of ChatGPT to generate additional content for this chapter.

Prompts and uses:

I entered the following prompt: Summarize the history of Apple in 7 sentences based on that prompt [the Library of Congress article].

Use: I modified the output to add more information from the article that I found relevant. I also adjusted the wording to make it fit the style of the rest of the chapter.

I entered the following prompt: The development of computers as a new, prevalent form of technology has majorly impacted society as a whole in the United States, as they are involved in several aspects of everyday life. Computers are used in some form in most classrooms in the country, in most workplaces, and in most households. Specifically, 92% of households in the U.S. Census Bureau’s American Community Survey reported having at least one type of computer. This is a simple statistic that shows the monumental impact of computers in Americans’ lives.

Use: I used the output to expand upon this paragraph; after entering the prompt, ChatGPT added several sentences and reworded some of the previously written content. I then removed some of the added information from ChatGPT and made the output more concise.

I entered the following prompt: Give me 3 more sentences to add to the following prompt. I am trying to talk about the history of computers and how they were invented. The prompt begins now- Computers have come a long way from their creation. This first computer was created in 1822 by Charles Babbage. This computer was created with a series of vacuum tubes and weighed a total of 700 pounds, which is much larger than the computers we see today. For example, most laptops weigh in a range of two to eight pounds.

After getting this output, I wrote this prompt: Give me two more sentences to add to that.

Use: I used the 5 sentences of the output to select the information that I wanted and add it to the content that was already in the book in order to provide more detail to the reduction in sizes of computers over time.

To the extent possible under law, Chandler Little and Ben Greene have waived all copyright and related or neighboring rights to Science Technology and Society a Student Led Exploration, except where otherwise noted.

The Modern History of Computing

Historically, computers were human clerks who calculated in accordance with effective methods. These human computers did the sorts of calculation nowadays carried out by electronic computers, and many thousands of them were employed in commerce, government, and research establishments. The term computing machine , used increasingly from the 1920s, refers to any machine that does the work of a human computer, i.e., any machine that calculates in accordance with effective methods. During the late 1940s and early 1950s, with the advent of electronic computing machines, the phrase ‘computing machine’ gradually gave way simply to ‘computer’, initially usually with the prefix ‘electronic’ or ‘digital’. This entry surveys the history of these machines.

- Analog Computers
- The Universal Turing Machine
- Electromechanical versus Electronic Computation
- Turing's Automatic Computing Engine
- The Manchester Machine
- ENIAC and EDVAC
- Other Notable Early Computers
- High-Speed Memory

Charles Babbage was Lucasian Professor of Mathematics at Cambridge University from 1828 to 1839 (a post formerly held by Isaac Newton). Babbage's proposed Difference Engine was a special-purpose digital computing machine for the automatic production of mathematical tables (such as logarithm tables, tide tables, and astronomical tables). The Difference Engine consisted entirely of mechanical components — brass gear wheels, rods, ratchets, pinions, etc. Numbers were represented in the decimal system by the positions of 10-toothed metal wheels mounted in columns. Babbage exhibited a small working model in 1822. He never completed the full-scale machine that he had designed but did complete several fragments. The largest — one ninth of the complete calculator — is on display in the London Science Museum. Babbage used it to perform serious computational work, calculating various mathematical tables. In 1990, Babbage's Difference Engine No. 2 was finally built from Babbage's designs and is also on display at the London Science Museum.
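The Difference Engine takes its name from the method of finite differences, which lets a table of polynomial values be produced using nothing but repeated addition. The following is a minimal sketch of that method in Python, not a model of Babbage's mechanism; the function name `tabulate_by_differences` and the example polynomial x² + x + 41 are illustrative choices, not taken from the text above.

```python
def tabulate_by_differences(initial_differences, count):
    """Tabulate a polynomial using additions only.

    initial_differences holds [f(0), first difference at 0, second difference at 0, ...].
    Returns the list [f(0), f(1), ..., f(count - 1)].
    """
    columns = list(initial_differences)
    table = []
    for _ in range(count):
        table.append(columns[0])
        # Add each higher-order difference into the column before it,
        # working from the value column outward so old values are used.
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return table


# For f(x) = x*x + x + 41: f(0) = 41, first difference 2, second difference 2.
print(tabulate_by_differences([41, 2, 2], 5))  # [41, 43, 47, 53, 61]
```

Each column in the sketch plays the role of one column of number wheels: a full table is generated without any multiplication once the starting differences have been set.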

The Swedes Georg and Edvard Scheutz (father and son) constructed a modified version of Babbage's Difference Engine. Three were made, a prototype and two commercial models, one of these being sold to an observatory in Albany, New York, and the other to the Registrar-General's office in London, where it calculated and printed actuarial tables.

Babbage's proposed Analytical Engine, considerably more ambitious than the Difference Engine, was to have been a general-purpose mechanical digital computer. The Analytical Engine was to have had a memory store and a central processing unit (or ‘mill’) and would have been able to select from among alternative actions consequent upon the outcome of its previous actions (a facility nowadays known as conditional branching). The behaviour of the Analytical Engine would have been controlled by a program of instructions contained on punched cards connected together with ribbons (an idea that Babbage had adopted from the Jacquard weaving loom). Babbage emphasised the generality of the Analytical Engine, saying ‘the conditions which enable a finite machine to make calculations of unlimited extent are fulfilled in the Analytical Engine’ (Babbage [1994], p. 97).

Babbage worked closely with Ada Lovelace, daughter of the poet Byron, after whom the modern programming language ADA is named. Lovelace foresaw the possibility of using the Analytical Engine for non-numeric computation, suggesting that the Engine might even be capable of composing elaborate pieces of music.

A large model of the Analytical Engine was under construction at the time of Babbage's death in 1871 but a full-scale version was never built. Babbage's idea of a general-purpose calculating engine was never forgotten, especially at Cambridge, and was on occasion a lively topic of mealtime discussion at the war-time headquarters of the Government Code and Cypher School, Bletchley Park, Buckinghamshire, birthplace of the electronic digital computer.

Analog computers

The earliest computing machines in wide use were not digital but analog. In analog representation, properties of the representational medium ape (or reflect or model) properties of the represented state-of-affairs. (In obvious contrast, the strings of binary digits employed in digital representation do not represent by means of possessing some physical property — such as length — whose magnitude varies in proportion to the magnitude of the property that is being represented.) Analog representations form a diverse class. Some examples: the longer a line on a road map, the longer the road that the line represents; the greater the number of clear plastic squares in an architect's model, the greater the number of windows in the building represented; the higher the pitch of an acoustic depth meter, the shallower the water. In analog computers, numerical quantities are represented by, for example, the angle of rotation of a shaft or a difference in electrical potential. Thus the output voltage of the machine at a time might represent the momentary speed of the object being modelled.

As the case of the architect's model makes plain, analog representation may be discrete in nature (there is no such thing as a fractional number of windows). Among computer scientists, the term ‘analog’ is sometimes used narrowly, to indicate representation of one continuously-valued quantity by another (e.g., speed by voltage). As Brian Cantwell Smith has remarked:

‘Analog’ should … be a predicate on a representation whose structure corresponds to that of which it represents … That continuous representations should historically have come to be called analog presumably betrays the recognition that, at the levels at which it matters to us, the world is more foundationally continuous than it is discrete. (Smith [1991], p. 271)

James Thomson, brother of Lord Kelvin, invented the mechanical wheel-and-disc integrator that became the foundation of analog computation (Thomson [1876]). The two brothers constructed a device for computing the integral of the product of two given functions, and Kelvin described (although did not construct) general-purpose analog machines for integrating linear differential equations of any order and for solving simultaneous linear equations. Kelvin's most successful analog computer was his tide predicting machine, which remained in use at the port of Liverpool until the 1960s. Mechanical analog devices based on the wheel-and-disc integrator were in use during World War I for gunnery calculations. Following the war, the design of the integrator was considerably improved by Hannibal Ford (Ford [1919]).

Stanley Fifer reports that the first semi-automatic mechanical analog computer was built in England by the Manchester firm of Metropolitan Vickers prior to 1930 (Fifer [1961], p. 29); however, I have so far been unable to verify this claim. In 1931, Vannevar Bush, working at MIT, built the differential analyser, the first large-scale automatic general-purpose mechanical analog computer. Bush's design was based on the wheel and disc integrator. Soon copies of his machine were in use around the world (including, at Cambridge and Manchester Universities in England, differential analysers built out of kit-set Meccano, the once popular engineering toy).

It required a skilled mechanic equipped with a lead hammer to set up Bush's mechanical differential analyser for each new job. Subsequently, Bush and his colleagues replaced the wheel-and-disc integrators and other mechanical components by electromechanical, and finally by electronic, devices.

A differential analyser may be conceptualised as a collection of ‘black boxes’ connected together in such a way as to allow considerable feedback. Each box performs a fundamental process, for example addition, multiplication of a variable by a constant, and integration. In setting up the machine for a given task, boxes are connected together so that the desired set of fundamental processes is executed. In the case of electrical machines, this was done typically by plugging wires into sockets on a patch panel (computing machines whose function is determined in this way are referred to as ‘program-controlled’).
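The 'black box' picture can be illustrated with a small simulation. The sketch below wires two integrator boxes and one constant-multiplier box into a feedback loop that solves y'' = -y, whose exact solution with y(0) = 1 and y'(0) = 0 is cos t. It is only an illustration under stated assumptions: a real analyser works continuously, whereas this Python version advances in small discrete time steps, and the function names and the choice of equation are hypothetical.

```python
import math


def multiply_by_constant(constant, signal):
    """Box that scales its input by a fixed constant."""
    return constant * signal


def integrate(accumulated, signal, dt):
    """Box that adds one small time-slice of its input to its running total."""
    return accumulated + signal * dt


def solve_oscillator(t_end, dt=1e-4):
    """Connect two integrators and one multiplier in a loop for y'' = -y."""
    y, dy = 1.0, 0.0  # initial conditions: y(0) = 1, y'(0) = 0
    for _ in range(round(t_end / dt)):
        ddy = multiply_by_constant(-1.0, y)  # feedback: y'' is derived from y
        dy = integrate(dy, ddy, dt)          # first integrator turns y'' into y'
        y = integrate(y, dy, dt)             # second integrator turns y' into y
    return y


print(solve_oscillator(math.pi))  # close to cos(pi) = -1
```

Changing the equation to be solved means changing how the boxes feed one another, which is the software counterpart of re-plugging wires on a patch panel.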

Since all the boxes work in parallel, an electronic differential analyser solves sets of equations very quickly. Against this has to be set the cost of massaging the problem to be solved into the form demanded by the analog machine, and of setting up the hardware to perform the desired computation. A major drawback of analog computation is the higher cost, relative to digital machines, of an increase in precision. During the 1960s and 1970s, there was considerable interest in ‘hybrid’ machines, where an analog section is controlled by and programmed via a digital section. However, such machines are now a rarity.

In 1936, at Cambridge University, Turing invented the principle of the modern computer. He described an abstract digital computing machine consisting of a limitless memory and a scanner that moves back and forth through the memory, symbol by symbol, reading what it finds and writing further symbols (Turing [1936]). The actions of the scanner are dictated by a program of instructions that is stored in the memory in the form of symbols. This is Turing's stored-program concept, and implicit in it is the possibility of the machine operating on and modifying its own program. (In London in 1947, in the course of what was, so far as is known, the earliest public lecture to mention computer intelligence, Turing said, ‘What we want is a machine that can learn from experience’, adding that the ‘possibility of letting the machine alter its own instructions provides the mechanism for this’ (Turing [1947] p. 393).) Turing's computing machine of 1936 is now known simply as the universal Turing machine. Cambridge mathematician Max Newman remarked that right from the start Turing was interested in the possibility of actually building a computing machine of the sort that he had described (Newman in interview with Christopher Evans in Evans [197?]).
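The scanner-and-memory description can be made concrete with a short simulation. The Python sketch below is a simplification and not Turing's own formulation: the instruction table is held outside the tape, so it models an ordinary Turing machine rather than the universal machine, in which the program itself is stored in memory as symbols. The names `run_turing_machine` and `INCREMENT`, and the binary-increment example, are hypothetical.

```python
from collections import defaultdict

BLANK = "_"


def run_turing_machine(program, tape, state="start", halt_state="halt", max_steps=10_000):
    """Run a one-tape machine and return the final tape contents.

    program maps (state, symbol) to (symbol_to_write, move, next_state),
    where move is -1 for one cell left and +1 for one cell right.
    """
    cells = defaultdict(lambda: BLANK, enumerate(tape))  # unbounded memory
    position = 0
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached before halting")
        write, move, state = program[(state, cells[position])]
        cells[position] = write   # the scanner writes a symbol
        position += move          # then moves one cell along the memory
        steps += 1
    if not cells:
        return ""
    return "".join(cells[i] for i in range(min(cells), max(cells) + 1)).strip(BLANK)


# Instruction table that adds 1 to a binary number written on the tape.
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),   # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),   # 1 plus carry gives 0, carry continues
    ("carry", "0"): ("1", -1, "halt"),    # 0 plus carry gives 1, done
    ("carry", BLANK): ("1", -1, "halt"),  # overflow into a new leading digit
}

print(run_turing_machine(INCREMENT, "1011"))  # 1100
```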

From the start of the Second World War Turing was a leading cryptanalyst at the Government Code and Cypher School, Bletchley Park. Here he became familiar with Thomas Flowers' work involving large-scale high-speed electronic switching (described below). However, Turing could not turn to the project of building an electronic stored-program computing machine until the cessation of hostilities in Europe in 1945.

During the wartime years Turing did give considerable thought to the question of machine intelligence. Colleagues at Bletchley Park recall numerous off-duty discussions with him on the topic, and at one point Turing circulated a typewritten report (now lost) setting out some of his ideas. One of these colleagues, Donald Michie (who later founded the Department of Machine Intelligence and Perception at the University of Edinburgh), remembers Turing talking often about the possibility of computing machines (1) learning from experience and (2) solving problems by means of searching through the space of possible solutions, guided by rule-of-thumb principles (Michie in interview with Copeland, 1995). The modern term for the latter idea is ‘heuristic search’, a heuristic being any rule-of-thumb principle that cuts down the amount of searching required in order to find a solution to a problem. At Bletchley Park Turing illustrated his ideas on machine intelligence by reference to chess. Michie recalls Turing experimenting with heuristics that later became common in chess programming (in particular minimax and best-first).
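As an illustration of the minimax idea mentioned above, the following Python sketch searches a tiny hand-made game tree. It is a generic textbook formulation, not a reconstruction of Turing's experiments: the tree, its leaf scores (standing in for rule-of-thumb evaluations of positions), and the names `minimax` and `GAME_TREE` are all hypothetical.

```python
def minimax(node, maximizing=True):
    """Return the best score the player to move can guarantee from this node.

    A node is either a number (a leaf holding a heuristic score)
    or a list of child nodes reachable in one move.
    """
    if isinstance(node, (int, float)):
        return node
    scores = [minimax(child, not maximizing) for child in node]
    # The maximizing player picks the highest score; the opponent picks the lowest.
    return max(scores) if maximizing else min(scores)


# Three possible moves, each answered by one of two opponent replies.
GAME_TREE = [[3, 5], [2, 9], [0, 7]]
print(minimax(GAME_TREE))  # 3
```

The first move is best here because the opponent's strongest reply still leaves a score of 3, whereas the other moves allow replies worth only 2 and 0.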

Further information about Turing and the computer, including his wartime work on codebreaking and his thinking about artificial intelligence and artificial life, can be found in Copeland 2004.

With some exceptions, including Babbage's purely mechanical engines and the finger-powered National Accounting Machine, early digital computing machines were electromechanical. That is to say, their basic components were small, electrically-driven, mechanical switches called ‘relays’. These operate relatively slowly, whereas the basic components of an electronic computer, originally vacuum tubes (valves), have no moving parts save electrons and so operate extremely fast. Electromechanical digital computing machines were built before and during the Second World War by (among others) Howard Aiken at Harvard University, George Stibitz at Bell Telephone Laboratories, Turing at Princeton University and Bletchley Park, and Konrad Zuse in Berlin. To Zuse belongs the honour of having built the first working general-purpose program-controlled digital computer. This machine, later called the Z3, was functioning in 1941. (A program-controlled computer, as opposed to a stored-program computer, is set up for a new task by re-routing wires, by means of plugs etc.)

Relays were too slow and unreliable a medium for large-scale general-purpose digital computation (although Aiken made a valiant effort). It was the development of high-speed digital techniques using vacuum tubes that made the modern computer possible.

The earliest extensive use of vacuum tubes for digital data-processing appears to have been by the engineer Thomas Flowers, working in London at the British Post Office Research Station at Dollis Hill. Electronic equipment designed by Flowers in 1934, for controlling the connections between telephone exchanges, went into operation in 1939, and involved between three and four thousand vacuum tubes running continuously. In 1938–1939 Flowers worked on an experimental electronic digital data-processing system, involving a high-speed data store. Flowers' aim, achieved after the war, was that electronic equipment should replace existing, less reliable, systems built from relays and used in telephone exchanges. Flowers did not investigate the idea of using electronic equipment for numerical calculation, but has remarked that at the outbreak of war with Germany in 1939 he was possibly the only person in Britain who realized that vacuum tubes could be used on a large scale for high-speed digital computation. (See Copeland 2006 for more information on Flowers' work.)

The earliest comparable use of vacuum tubes in the U.S. seems to have been by John Atanasoff at what was then Iowa State College (now University). During the period 1937–1942 Atanasoff developed techniques for using vacuum tubes to perform numerical calculations digitally. In 1939, with the assistance of his student Clifford Berry, Atanasoff began building what is sometimes called the Atanasoff-Berry Computer, or ABC, a small-scale special-purpose electronic digital machine for the solution of systems of linear algebraic equations. The machine contained approximately 300 vacuum tubes. Although the electronic part of the machine functioned successfully, the computer as a whole never worked reliably, errors being introduced by the unsatisfactory binary card-reader. Work was discontinued in 1942 when Atanasoff left Iowa State.

The first fully functioning electronic digital computer was Colossus, used by the Bletchley Park cryptanalysts from February 1944.

From very early in the war the Government Code and Cypher School (GC&CS) was successfully deciphering German radio communications encoded by means of the Enigma system, and by early 1942 about 39,000 intercepted messages were being decoded each month, thanks to electromechanical machines known as ‘bombes’. These were designed by Turing and Gordon Welchman (building on earlier work by Polish cryptanalysts).

During the second half of 1941, messages encoded by means of a totally different method began to be intercepted. This new cipher machine, code-named ‘Tunny’ by Bletchley Park, was broken in April 1942 and current traffic was read for the first time in July of that year. Based on binary teleprinter code, Tunny was used in preference to Morse-based Enigma for the encryption of high-level signals, for example messages from Hitler and members of the German High Command.

The need to decipher this vital intelligence as rapidly as possible led Max Newman to propose in November 1942 (shortly after his recruitment to GC&CS from Cambridge University) that key parts of the decryption process be automated, by means of high-speed electronic counting devices. The first machine designed and built to Newman's specification, known as the Heath Robinson, was relay-based with electronic circuits for counting. (The electronic counters were designed by C.E. Wynn-Williams, who had been using thyratron tubes in counting circuits at the Cavendish Laboratory, Cambridge, since 1932 [Wynn-Williams 1932].) Installed in June 1943, Heath Robinson was unreliable and slow, and its high-speed paper tapes were continually breaking, but it proved the worth of Newman's idea. Flowers recommended that an all-electronic machine be built instead, but he received no official encouragement from GC&CS. Working independently at the Post Office Research Station at Dollis Hill, Flowers quietly got on with constructing the world's first large-scale programmable electronic digital computer. Colossus I was delivered to Bletchley Park in January 1944.

By the end of the war there were ten Colossi working round the clock at Bletchley Park. From a cryptanalytic viewpoint, a major difference between the prototype Colossus I and the later machines was the addition of the so-called Special Attachment, following a key discovery by cryptanalysts Donald Michie and Jack Good. This broadened the function of Colossus from ‘wheel setting’ (i.e., determining the settings of the encoding wheels of the Tunny machine for a particular message, given the ‘patterns’ of the wheels) to ‘wheel breaking’ (i.e., determining the wheel patterns themselves). The wheel patterns were eventually changed daily by the Germans on each of the numerous links between the German Army High Command and Army Group commanders in the field. By 1945 there were as many as 30 links in total. About ten of these were broken and read regularly.

Colossus I contained approximately 1600 vacuum tubes and each of the subsequent machines approximately 2400 vacuum tubes. Like the smaller ABC, Colossus lacked two important features of modern computers. First, it had no internally stored programs. To set it up for a new task, the operator had to alter the machine's physical wiring, using plugs and switches. Second, Colossus was not a general-purpose machine, being designed for a specific cryptanalytic task involving counting and Boolean operations.

F.H. Hinsley, official historian of GC&CS, has estimated that the war in Europe was shortened by at least two years as a result of the signals intelligence operation carried out at Bletchley Park, in which Colossus played a major role. Most of the Colossi were destroyed once hostilities ceased. Some of the electronic panels ended up at Newman's Computing Machine Laboratory in Manchester (see below), all trace of their original use having been removed. Two Colossi were retained by GC&CS (renamed GCHQ following the end of the war). The last Colossus is believed to have stopped running in 1960.

Those who knew of Colossus were prohibited by the Official Secrets Act from sharing their knowledge. Until the 1970s, few had any idea that electronic computation had been used successfully during the second world war. In 1970 and 1975, respectively, Good and Michie published notes giving the barest outlines of Colossus. By 1983, Flowers had received clearance from the British Government to publish a partial account of the hardware of Colossus I. Details of the later machines and of the Special Attachment, the uses to which the Colossi were put, and the cryptanalytic algorithms that they ran, have only recently been declassified. (For the full account of Colossus and the attack on Tunny see Copeland 2006.)

To those acquainted with the universal Turing machine of 1936, and the associated stored-program concept, Flowers' racks of digital electronic equipment were proof of the feasibility of using large numbers of vacuum tubes to implement a high-speed general-purpose stored-program computer. The war over, Newman lost no time in establishing the Royal Society Computing Machine Laboratory at Manchester University for precisely that purpose. A few months after his arrival at Manchester, Newman wrote as follows to the Princeton mathematician John von Neumann (February 1946):

I am … hoping to embark on a computing machine section here, having got very interested in electronic devices of this kind during the last two or three years. By about eighteen months ago I had decided to try my hand at starting up a machine unit when I got out. … I am of course in close touch with Turing.

Turing and Newman were thinking along similar lines. In 1945 Turing joined the National Physical Laboratory (NPL) in London, his brief to design and develop an electronic stored-program digital computer for scientific work. (Artificial Intelligence was not far from Turing's thoughts: he described himself as ‘building a brain’ and remarked in a letter that he was ‘more interested in the possibility of producing models of the action of the brain than in the practical applications to computing’.) John Womersley, Turing's immediate superior at NPL, christened Turing's proposed machine the Automatic Computing Engine, or ACE, in homage to Babbage's Difference Engine and Analytical Engine.

Turing's 1945 report ‘Proposed Electronic Calculator’ gave the first relatively complete specification of an electronic stored-program general-purpose digital computer. The report is reprinted in full in Copeland 2005.

The first electronic stored-program digital computer to be proposed in the U.S. was the EDVAC (see below). The ‘First Draft of a Report on the EDVAC’ (May 1945), composed by von Neumann, contained little engineering detail, in particular concerning electronic hardware (owing to restrictions in the U.S.). Turing's ‘Proposed Electronic Calculator’, on the other hand, supplied detailed circuit designs and specifications of hardware units, specimen programs in machine code, and even an estimate of the cost of building the machine (£11,200). ACE and EDVAC differed fundamentally from one another; for example, ACE employed distributed processing, while EDVAC had a centralised structure.

Turing saw that speed and memory were the keys to computing. Turing's colleague at NPL, Jim Wilkinson, observed that Turing ‘was obsessed with the idea of speed on the machine’ [Copeland 2005, p. 2]. Turing's design had much in common with today's RISC architectures and it called for a high-speed memory of roughly the same capacity as an early Macintosh computer (enormous by the standards of his day). Had Turing's ACE been built as planned it would have been in a different league from the other early computers. However, progress on Turing's Automatic Computing Engine ran slowly, due to organisational difficulties at NPL, and in 1948 a ‘very fed up’ Turing (Robin Gandy's description, in interview with Copeland, 1995) left NPL for Newman's Computing Machine Laboratory at Manchester University. It was not until May 1950 that a small pilot model of the Automatic Computing Engine, built by Wilkinson, Edward Newman, Mike Woodger, and others, first executed a program. With an operating speed of 1 MHz, the Pilot Model ACE was for some time the fastest computer in the world.

Sales of DEUCE, the production version of the Pilot Model ACE, were buoyant — confounding the suggestion, made in 1946 by the Director of the NPL, Sir Charles Darwin, that ‘it is very possible that … one machine would suffice to solve all the problems that are demanded of it from the whole country’ [Copeland 2005, p. 4]. The fundamentals of Turing's ACE design were employed by Harry Huskey (at Wayne State University, Detroit) in the Bendix G15 computer (Huskey in interview with Copeland, 1998). The G15 was arguably the first personal computer; over 400 were sold worldwide. DEUCE and the G15 remained in use until about 1970. Another computer deriving from Turing's ACE design, the MOSAIC, played a role in Britain's air defences during the Cold War period; other derivatives include the Packard-Bell PB250 (1961). (More information about these early computers is given in [Copeland 2005].)

The earliest general-purpose stored-program electronic digital computer to work was built in Newman's Computing Machine Laboratory at Manchester University. The Manchester ‘Baby’, as it became known, was constructed by the engineers F.C. Williams and Tom Kilburn, and performed its first calculation on 21 June 1948. The tiny program, stored on the face of a cathode ray tube, was just seventeen instructions long. A much enlarged version of the machine, with a programming system designed by Turing, became the world's first commercially available computer, the Ferranti Mark I. The first to be completed was installed at Manchester University in February 1951; in all about ten were sold, in Britain, Canada, Holland and Italy.

The fundamental logico-mathematical contributions by Turing and Newman to the triumph at Manchester have been neglected, and the Manchester machine is nowadays remembered as the work of Williams and Kilburn. Indeed, Newman's role in the development of computers has never been sufficiently emphasised (due perhaps to his thoroughly self-effacing way of relating the relevant events).

It was Newman who, in a lecture in Cambridge in 1935, introduced Turing to the concept that led directly to the Turing machine: Newman defined a constructive process as one that a machine can carry out (Newman in interview with Evans, op. cit.). As a result of his knowledge of Turing's work, Newman became interested in the possibilities of computing machinery in, as he put it, ‘a rather theoretical way’. It was not until Newman joined GC&CS in 1942 that his interest in computing machinery suddenly became practical, with his realisation that the attack on Tunny could be mechanised. During the building of Colossus, Newman tried to interest Flowers in Turing's 1936 paper (birthplace of the stored-program concept), but Flowers did not make much of Turing's arcane notation. There is no doubt that by 1943, Newman had firmly in mind the idea of using electronic technology in order to construct a stored-program general-purpose digital computing machine.

In July of 1946 (the month in which the Royal Society approved Newman's application for funds to found the Computing Machine Laboratory), Freddie Williams, working at the Telecommunications Research Establishment, Malvern, began the series of experiments on cathode ray tube storage that was to lead to the Williams tube memory. Williams, until then a radar engineer, explains how it was that he came to be working on the problem of computer memory:

[O]nce [the German Armies] collapsed … nobody was going to care a toss about radar, and people like me … were going to be in the soup unless we found something else to do. And computers were in the air. Knowing absolutely nothing about them I latched onto the problem of storage and tackled that. (Quoted in Bennett 1976.)

Newman learned of Williams' work, and with the able help of Patrick Blackett, Langworthy Professor of Physics at Manchester and one of the most powerful figures in the University, was instrumental in the appointment of the 35-year-old Williams to the recently vacated Chair of Electro-Technics at Manchester. (Newman and Blackett were both members of the appointing committee (Kilburn in interview with Copeland, 1997).) Williams immediately had Kilburn, his assistant at Malvern, seconded to Manchester. To take up the story in Williams' own words:

[N]either Tom Kilburn nor I knew the first thing about computers when we arrived in Manchester University. We'd had enough explained to us to understand what the problem of storage was and what we wanted to store, and that we'd achieved, so the point now had been reached when we'd got to find out about computers … Newman explained the whole business of how a computer works to us. (F.C. Williams in interview with Evans [1976])

Elsewhere Williams is explicit concerning Turing's role and gives something of the flavour of the explanation that he and Kilburn received:

Tom Kilburn and I knew nothing about computers, but a lot about circuits. Professor Newman and Mr A.M. Turing … knew a lot about computers and substantially nothing about electronics. They took us by the hand and explained how numbers could live in houses with addresses and how if they did they could be kept track of during a calculation. (Williams [1975], p. 328)

It seems that Newman must have used much the same words with Williams and Kilburn as he did in an address to the Royal Society on 4th March 1948:

Professor Hartree … has recalled that all the essential ideas of the general-purpose calculating machines now being made are to be found in Babbage's plans for his analytical engine. In modern times the idea of a universal calculating machine was independently introduced by Turing … [T]he machines now being made in America and in this country … [are] in certain general respects … all similar. There is provision for storing numbers, say in the scale of 2, so that each number appears as a row of, say, forty 0's and 1's in certain places or "houses" in the machine. … Certain of these numbers, or "words" are read, one after another, as orders. In one possible type of machine an order consists of four numbers, for example 11, 13, 27, 4. The number 4 signifies "add", and when control shifts to this word the "houses" H11 and H13 will be connected to the adder as inputs, and H27 as output. The numbers stored in H11 and H13 pass through the adder, are added, and the sum is passed on to H27. The control then shifts to the next order. In most real machines the process just described would be done by three separate orders, the first bringing [H11] (=content of H11) to a central accumulator, the second adding [H13] into the accumulator, and the third sending the result to H27; thus only one address would be required in each order. … A machine with storage, with this automatic-telephone-exchange arrangement and with the necessary adders, subtractors and so on, is, in a sense, already a universal machine. (Newman [1948], pp. 271–272)

Following this explanation of Turing's three-address concept (source 1, source 2, destination, function) Newman went on to describe program storage (‘the orders shall be in a series of houses X1, X2, …’) and conditional branching. He then summed up:

From this highly simplified account it emerges that the essential internal parts of the machine are, first, a storage for numbers (which may also be orders). … Secondly, adders, multipliers, etc. Thirdly, an "automatic telephone exchange" for selecting "houses", connecting them to the arithmetic organ, and writing the answers in other prescribed houses. Finally, means of moving control at any stage to any chosen order, if a certain condition is satisfied, otherwise passing to the next order in the normal sequence. Besides these there must be ways of setting up the machine at the outset, and extracting the final answer in useable form. (Newman [1948], pp. 273–4)
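Newman's example order 11, 13, 27, 4 can be made concrete with a short sketch. The Python below is only an illustration under stated assumptions: the dictionary of "houses", the function names, the mnemonics "load", "add" and "store", and the sample values 5 and 7 are all hypothetical and are not taken from any historical machine. It shows the single three-address order and the equivalent sequence of three one-address orders working through an accumulator, as Newman describes.

```python
# Hypothetical model of Newman's "houses": a dict mapping addresses to numbers.
# Only the order (11, 13, 27, 4) and the meaning "4 = add" come from the quoted text.

ADD = 4  # function code for "add" in Newman's example


def run_three_address(houses, order):
    """Obey one order of the form (source 1, source 2, destination, function)."""
    src1, src2, dest, function = order
    if function != ADD:
        raise ValueError(f"unsupported function code: {function}")
    # Both source houses feed the adder; the sum is passed on to the destination.
    houses[dest] = houses[src1] + houses[src2]


def run_one_address(houses, orders):
    """Obey a list of one-address orders that work through a central accumulator."""
    accumulator = 0
    for op, address in orders:
        if op == "load":      # bring [address] to the accumulator
            accumulator = houses[address]
        elif op == "add":     # add [address] into the accumulator
            accumulator += houses[address]
        elif op == "store":   # send the accumulator's content to the house
            houses[address] = accumulator
        else:
            raise ValueError(f"unsupported order: {op}")
    return accumulator


# Sample contents for H11 and H13 (illustrative values only).
houses_a = {11: 5, 13: 7, 27: 0}
run_three_address(houses_a, (11, 13, 27, ADD))

houses_b = {11: 5, 13: 7, 27: 0}
run_one_address(houses_b, [("load", 11), ("add", 13), ("store", 27)])

print(houses_a[27], houses_b[27])  # prints "12 12"
```

Both forms leave the same sum in H27; the one-address form trades a shorter order for a longer sequence of orders, which is the trade-off Newman notes for 'most real machines'.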

In a letter written in 1972 Williams described in some detail what he and Kilburn were told by Newman:

About the middle of the year [1946] the possibility of an appointment at Manchester University arose and I had a talk with Professor Newman who was already interested in the possibility of developing computers and had acquired a grant from the Royal Society of £30,000 for this purpose. Since he understood computers and I understood electronics the possibilities of fruitful collaboration were obvious. I remember Newman giving us a few lectures in which he outlined the organisation of a computer in terms of numbers being identified by the address of the house in which they were placed and in terms of numbers being transferred from this address, one at a time, to an accumulator where each entering number was added to what was already there. At any time the number in the accumulator could be transferred back to an assigned address in the store and the accumulator cleared for further use. The transfers were to be effected by a stored program in which a list of instructions was obeyed sequentially. Ordered progress through the list could be interrupted by a test instruction which examined the sign of the number in the accumulator. Thereafter operation started from a new point in the list of instructions. This was the first information I received about the organisation of computers. … Our first computer was the simplest embodiment of these principles, with the sole difference that it used a subtracting rather than an adding accumulator. (Letter from Williams to Randell, 1972; in Randell [1972], p. 9)
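The organisation Williams recalls (a store of addressed numbers, an accumulator, a list of instructions obeyed in sequence, and a test on the accumulator's sign) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reconstruction of the Manchester machine's actual order code: the mnemonics SUB, STORE, TEST and HALT, the rule that TEST jumps when the accumulator is negative, and the memory layout are all hypothetical. Following Williams' remark, the accumulator here can only subtract, and storing clears it.

```python
# Hypothetical stored-program machine: instructions and data share one store.
# Mnemonics and the jump-if-negative rule are assumptions made for illustration.


def run(store, start=0, max_steps=1000):
    """Obey instructions sequentially from `start` until HALT is reached."""
    accumulator = 0
    position = start
    for _ in range(max_steps):
        op, operand = store[position]
        position += 1  # by default, pass to the next instruction in the list
        if op == "SUB":        # subtracting accumulator: acc = acc - [operand]
            accumulator -= store[operand]
        elif op == "STORE":    # transfer back to the store, then clear
            store[operand] = accumulator
            accumulator = 0
        elif op == "TEST":     # examine the sign; resume from a new point if negative
            if accumulator < 0:
                position = operand
        elif op == "HALT":
            return store
        else:
            raise ValueError(f"unknown instruction: {op}")
    raise RuntimeError("step limit exceeded")


def abs_via_subtraction(x):
    """Compute |x| using only subtraction, storing, and a sign test."""
    store = {
        0: ("SUB", 10),    # acc = -x
        1: ("TEST", 4),    # if -x < 0 (x is positive), continue from 4
        2: ("STORE", 12),  # x <= 0, so -x is already |x|
        3: ("HALT", None),
        4: ("STORE", 11),  # scratch = -x, accumulator cleared
        5: ("SUB", 11),    # acc = 0 - (-x) = x
        6: ("STORE", 12),  # result = x
        7: ("HALT", None),
        10: x,             # input
        11: 0,             # scratch
        12: 0,             # result
    }
    return run(store)[12]


print(abs_via_subtraction(7), abs_via_subtraction(-3))  # prints "7 3"
```

The double negation in instructions 4 and 5 shows why a subtracting accumulator loses nothing in principle: a positive value can always be recovered by subtracting a stored negative from a cleared accumulator.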

Turing's early input to the developments at Manchester, hinted at by Williams in his above-quoted reference to Turing, may have been via the lectures on computer design that Turing and Wilkinson gave in London during the period December 1946 to February 1947 (Turing and Wilkinson [1946–7]). The lectures were attended by representatives of various organisations planning to use or build an electronic computer. Kilburn was in the audience (Bowker and Giordano [1993]). (Kilburn usually said, when asked from where he obtained his basic knowledge of the computer, that he could not remember (letter from Brian Napper to Copeland, 2002); for example, in a 1992 interview he said: ‘Between early 1945 and early 1947, in that period, somehow or other I knew what a digital computer was … Where I got this knowledge from I've no idea’ (Bowker and Giordano [1993], p. 19).)

Whatever role Turing's lectures may have played in informing Kilburn, there is little doubt that credit for the Manchester computer — called the ‘Newman-Williams machine’ in a contemporary document (Huskey 1947) — belongs not only to Williams and Kilburn but also to Newman, and that the influence on Newman of Turing's 1936 paper was crucial, as was the influence of Flowers' Colossus.

The first working AI program, a draughts (checkers) player written by Christopher Strachey, ran on the Ferranti Mark I in the Manchester Computing Machine Laboratory. Strachey (at the time a teacher at Harrow School and an amateur programmer) wrote the program with Turing's encouragement and utilising the latter's recently completed Programmers' Handbook for the Ferranti. (Strachey later became Director of the Programming Research Group at Oxford University.) By the summer of 1952, the program could, Strachey reported, ‘play a complete game of draughts at a reasonable speed’. (Strachey's program formed the basis for Arthur Samuel's well-known checkers program.) The first chess-playing program, also, was written for the Manchester Ferranti, by Dietrich Prinz; the program first ran in November 1951. Designed for solving simple problems of the mate-in-two variety, the program would examine every possible move until a solution was found. Turing started to program his ‘Turochamp’ chess-player on the Ferranti Mark I, but never completed the task. Unlike Prinz's program, the Turochamp could play a complete game (when hand-simulated) and operated not by exhaustive search but under the guidance of heuristics.

The first fully functioning electronic digital computer to be built in the U.S. was ENIAC, constructed at the Moore School of Electrical Engineering, University of Pennsylvania, for the Army Ordnance Department, by J. Presper Eckert and John Mauchly. Completed in 1945, ENIAC was somewhat similar to the earlier Colossus, but considerably larger and more flexible (although far from general-purpose). The primary function for which ENIAC was designed was the calculation of tables used in aiming artillery. ENIAC was not a stored-program computer, and setting it up for a new job involved reconfiguring the machine by means of plugs and switches. For many years, ENIAC was believed to have been the first functioning electronic digital computer, Colossus being unknown to all but a few.