cloud computing Recently Published Documents


Simulation and performance assessment of a modified throttled load balancing algorithm in cloud computing environment

Load balancing is crucial to ensure scalability and reliability, minimize response time and processing time, and maximize resource utilization in cloud computing. However, the load fluctuation that accompanies the distribution of a huge number of requests among a set of virtual machines (VMs) is challenging and requires effective and practical load balancers. In this work, a two listed throttled load balancer (TLT-LB) algorithm is proposed and simulated using the CloudAnalyst simulator. The TLT-LB algorithm modifies the conventional TLB algorithm to improve the distribution of tasks between different VMs. The performance of the TLT-LB algorithm has been compared with that of the TLB, round robin (RR), and active monitoring load balancer (AMLB) algorithms using two different configurations. Interestingly, the TLT-LB significantly balances the load between the VMs, reducing the loading gap between the heaviest loaded and the lightest loaded VMs to 6.45%, compared to 68.55% for the TLB and AMLB algorithms. Furthermore, the TLT-LB algorithm considerably reduces the average response time and processing time compared to the TLB, RR, and AMLB algorithms.

An improved forensic-by-design framework for cloud computing with systems engineering standard compliance

Reliability of trust management systems in cloud computing.

Cloud computing is an innovation that delivers services such as software, platform, and infrastructure over the web. This computing structure is widespread and dynamic, works on the pay-per-use model, and supports virtualization. Cloud computing is expanding quickly among consumers, and many organizations offer services through the web. It provides a flexible, on-demand service but still faces various security threats. Its dynamic nature allows it to be customized to the client’s and provider’s needs, which is an outstanding benefit of cloud computing. On the other hand, this also creates trust issues and problems such as security, privacy, identity, and authenticity. The major challenge in the cloud environment is therefore selecting a suitable provider. For this, the trust mechanism plays a critical part, based on the evaluation of QoS and feedback rating. Nonetheless, various challenges are still present in the trust management system for monitoring and evaluating the QoS. This paper discusses the current obstacles in the trust system. The objective of this paper is to review the available trust models. Issues such as insufficient trust between the provider and the client, which have caused problems in data sharing, are also addressed here. In addition, the paper sets out the limitations of these models and possible improvements to help researchers who intend to explore this topic.

Cloud Computing Adoption in the Construction Industry of Singapore: Drivers, Challenges, and Strategies

An extensive review of web-based multi granularity service composition.

The paper reviews the efforts to compose SOAP, non-SOAP and non-web services. Traditionally, efforts were made to compose SOAP services; however, these efforts did not include RESTful and non-web services. A SOAP service uses a structured exchange methodology for dealing with web services, while a non-SOAP service follows a different approach. The paper reviews invoking and composing a combination of SOAP, non-SOAP, and non-web services into a composite process to execute complex tasks on various devices. It also shows the systematic integration of SOAP, non-SOAP and non-web services, describing the composition of more heterogeneous services than those conventionally used, from the perspective of resource consumption. The paper further compares and reviews different layout models for the discovery, selection and composition of services in cloud computing. Recent research trends in service composition are identified, and research on microservices is evaluated and presented in tables and graphs.

Integrated Blockchain and Cloud Computing Systems: A Systematic Survey, Solutions, and Challenges

Cloud computing is a network model of on-demand access for sharing configurable computing resource pools. Compared with conventional service architectures, cloud computing introduces new security challenges in secure service management and control, privacy protection, data integrity protection in distributed databases, data backup, and synchronization. Blockchain can be leveraged to address these challenges, partly due to the underlying characteristics such as transparency, traceability, decentralization, security, immutability, and automation. We present a comprehensive survey of how blockchain is applied to provide security services in the cloud computing model and we analyze the research trends of blockchain-related techniques in current cloud computing models. During the reviewing, we also briefly investigate how cloud computing can affect blockchain, especially about the performance improvements that cloud computing can provide for the blockchain. Our contributions include the following: (i) summarizing the possible architectures and models of the integration of blockchain and cloud computing and the roles of cloud computing in blockchain; (ii) classifying and discussing recent, relevant works based on different blockchain-based security services in the cloud computing model; (iii) simply investigating what improvements cloud computing can provide for the blockchain; (iv) introducing the current development status of the industry/major cloud providers in the direction of combining cloud and blockchain; (v) analyzing the main barriers and challenges of integrated blockchain and cloud computing systems; and (vi) providing recommendations for future research and improvement on the integration of blockchain and cloud systems.

Cloud Computing and Undergraduate Researches in Universities in Enugu State: Implication for Skills Demand

Cloud building block chip for creating FPGA and ASIC clouds.

Hardware-accelerated cloud computing systems based on FPGA chips (FPGA cloud) or ASIC chips (ASIC cloud) have emerged as a new technology trend for power-efficient acceleration of various software applications. However, the operating systems and hypervisors currently used in cloud computing will lead to power, performance, and scalability problems in an exascale cloud computing environment. Consequently, the present study proposes a parallel hardware hypervisor system that is implemented entirely in special-purpose hardware, and that virtualizes application-specific multi-chip supercomputers, to enable virtual supercomputers to share available FPGA and ASIC resources in a cloud system. In addition to the virtualization of multi-chip supercomputers, the system’s other unique features include simultaneous migration of multiple communicating hardware tasks, and on-demand increase or decrease of hardware resources allocated to a virtual supercomputer. By partitioning the flat hardware design of the proposed hypervisor system into multiple partitions and applying the chip unioning technique to these partitions, the present study also introduces a cloud building block chip that can be used to create FPGA or ASIC clouds. Single-chip and multi-chip verification studies have been done to verify the functional correctness of the hypervisor system, which consumes only a fraction (10%) of hardware resources.

Study On Social Network Recommendation Service Method Based On Mobile Cloud Computing

Cloud-based network virtualization in IoT with OpenStack.

In Cloud computing deployments, specifically in the Infrastructure-as-a-Service (IaaS) model, networking is one of the core enabling facilities provided for the users. The IaaS approach ensures significant flexibility and manageability, since the networking resources and topologies are entirely under users’ control. In this context, considerable efforts have been devoted to promoting the Cloud paradigm as a suitable solution for managing IoT environments. Deep and genuine integration between the two ecosystems, Cloud and IoT, may only be attainable at the IaaS level. With a view to extending the IoT domain’s capabilities with Cloud-based mechanisms akin to the IaaS Cloud model, network virtualization is a fundamental enabler of infrastructure-oriented IoT deployments. Indeed, an IoT deployment without networking resilience and adaptability is unsuitable for meeting user-level demands and services’ requirements. Such a limitation confines IoT-based services to very specific and statically defined scenarios, thus leading to limited plurality and diversity of use cases. This article presents a Cloud-based approach for network virtualization in an IoT context using the de-facto standard IaaS middleware, OpenStack, and its networking subsystem, Neutron. OpenStack is being extended to enable the instantiation of virtual/overlay networks between Cloud-based instances (e.g., virtual machines, containers, and bare metal servers) and/or geographically distributed IoT nodes deployed at the network edge.


- Open access

- Published: 06 July 2018

Reliability and high availability in cloud computing environments: a reference roadmap

- Mohammad Reza Mesbahi 1

- Amir Masoud Rahmani 1, 2 (ORCID: orcid.org/0000-0001-8641-6119)

- Mehdi Hosseinzadeh 3

Human-centric Computing and Information Sciences, volume 8, Article number: 20 (2018)


Reliability and high availability have always been a major concern in distributed systems. Providing highly available and reliable services in cloud computing is essential for maintaining customer confidence and satisfaction and preventing revenue losses. Although various solutions have been proposed for cloud availability and reliability, there are no comprehensive studies that cover all aspects of the problem. This paper presents a ‘Reference Roadmap’ of reliability and high availability in cloud computing environments. A big picture is proposed, divided into four steps specified through four pivotal questions starting with the keywords ‘Where?’, ‘Which?’, ‘When?’ and ‘How?’. A highly available and reliable cloud system can be achieved by answering these questions. Each step of this reference roadmap addresses a specific concern of a particular portion of the issue. Two main research gaps are identified by this reference roadmap.

Introduction

It was not so long ago that applications were entirely developed by organizations for their own use, possibly exploiting components/platforms developed by third parties. However, with service-oriented architecture (SOA), we moved into a new world in which applications could delegate some of their functionalities to already existing services developed by third parties [ 1 ].

To meet ever-changing business requirements, organizations have to invest more time and budget in scaling up IT infrastructures. However, achieving this aim with their own premises and investments is not cost-effective, and organizations will not be able to achieve optimal resource utilization [ 2 ]. Therefore, these challenges have forced companies to seek new alternative technology solutions. One of these modern technologies is cloud computing, which focuses on increasing computing power to execute millions of instructions per second.

Nowadays, cloud computing and its services are at the top of the list of buzzwords in the IT world. It is a recent trend in IT that can be considered as a paradigm shift for providing IT & computing resources through the network. One of the best and most popular definitions of cloud computing is the NIST definition proposed in 2009 and updated in 2011. According to this definition, “Cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction” [ 3 ].

Recent advances in cloud computing are pushing virtualization more than ever. In other words, cloud computing services can be considered as a significant step towards realizing the utility computing concept [ 4 ]. In such a computing model, services can be accessed by users regardless of where they are hosted or how they are delivered.

Over the years, computing trends such as cluster computing, grid computing, service-oriented computing and virtualization have matured, but cloud computing is still in its infancy and lacks complete standards and solutions [ 5 ]. Therefore, cloud business models and technologies introduce several critical issues, including load balancing [ 6 , 7 ], security [ 8 , 9 ], energy-efficiency [ 10 , 11 , 12 ], workflow scheduling [ 13 , 14 , 15 , 16 , 17 ], data/service availability, license management, data lock-in and API design [ 1 , 2 , 5 , 18 , 19 , 20 ].

High availability (HA) and reliability in cloud computing services are among the most pressing challenges. The reliability of a system is the probability that it is operational over a time interval without any failures, whereas the availability of a system at time ‘t’ is the probability that the system is up and functioning correctly at that instant [ 21 , 22 ]. HA for cloud services is essential for maintaining customers’ confidence and preventing revenue losses due to service level agreement (SLA) violation penalties [ 23 , 24 ]. In recent years, cloud computing environments have received significant attention from global business and government agencies for supporting mission-critical systems [ 25 ]. However, the lack of reliability and high availability of cloud services is quickly becoming a major issue [ 25 , 26 ]. Research reports indicate that about $285 million is lost yearly due to cloud service failures, with services offering availability of about 99.91% [ 26 ].
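To make the availability figure quoted above more concrete, the following minimal sketch converts an availability percentage into expected downtime per year. It assumes a 365-day year and treats availability as the long-run fraction of time a service is up; the function name `annual_downtime_hours` is illustrative and does not come from the paper.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: non-leap year


def annual_downtime_hours(availability_percent: float) -> float:
    """Expected hours of downtime per year for a given availability (in percent)."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be between 0 and 100")
    unavailable_fraction = 1.0 - availability_percent / 100.0
    return unavailable_fraction * HOURS_PER_YEAR


if __name__ == "__main__":
    # Compare a few common availability levels with the 99.91% figure.
    for level in (99.0, 99.9, 99.91, 99.99):
        print(f"{level}% -> {annual_downtime_hours(level):.2f} h/year")
```

Under these assumptions, an availability of 99.91% corresponds to roughly 7.9 hours of downtime per year.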

Cloud computing service outages can seriously impact workloads of enterprise systems and consumer data and applications. Amazon’s EC2 outage in April 2011 is an example of one of the largest cloud disasters. Several days of Amazon cloud service unavailability resulted in data loss for several high-profile sites and serious business issues for hundreds of IT managers [ 27 ]. Furthermore, according to the CRN report [ 28 ], the 10 biggest cloud service failures of 2017, including IBM’s cloud infrastructure failure on January 26, GitLab’s popular online code repository service outage on January 31, Facebook on February 24, Amazon Web Services on February 28, Microsoft Azure on March 16 and Microsoft Office 365 on March 21, caused production data loss and prevented customers from accessing their accounts, services, projects and critical data for very long and painful hours. In addition, the credibility of cloud providers took a hit in these service failures.

Although many studies have been conducted in recent years, such as those in [ 26 , 29 , 30 , 31 , 32 , 33 , 34 ], each of these previous works focused on a particular aspect of HA in cloud computing, and there are no comprehensive studies that cover all aspects of the HA problem in cloud computing environments based on all cloud actors’ requirements. In this paper, the results of a comprehensive study of cloud computing reliability and HA problems are proposed as a ‘Reference Roadmap’ for future researchers.

This research aims to offer a comprehensive strategy for providing high availability and reliability while keeping performance degradation low in cloud computing datacenters. By satisfying the users’ requirements, providing services according to the SLA and preventing SLA violation penalties, providers can make a huge profit [ 35 ]. To achieve this goal, all possible aspects of this issue will be studied by proposing a big picture of the problem (for clarifying the possible future approaches). In this research, the proposed research gaps will be worked out by completing the pieces of our big picture through answering four questions starting with the question words ‘Where?’, ‘Which?’, ‘When?’ and ‘How?’. In the rest of this paper, the proposed reference roadmap will be introduced and its different aspects will be described. Then possible primary solutions for these questions will be introduced. The main contributions of this study are as follows:

- A reference roadmap for cloud high availability and reliability is proposed.

- A big picture is proposed through dividing the problem space into four major parts.

- A comprehensive cloud task taxonomy and failure classification are presented.

- Research gaps which were neglected in the literature review are identified.

The rest of this paper is organized as follows. The research background and literature review are presented in “Research background”. The proposed reference roadmap, in terms of the research “Big Picture”, is presented in “Proposed reference roadmap”. Discussion and open issues are presented in “Discussion and open issues”. The paper is concluded in “Conclusion”.

Research background

Cloud computing provides the context for offering virtualized computing resources and services in a shared and scalable environment through the network on a pay-as-you-go model. With the rapid adoption of cloud computing, a large proportion of worldwide IT companies and government organizations have adopted cloud services for various purposes, including hosting mission-critical applications and thus critical data [ 34 ]. In order to support these mission-critical applications and data, there is a need to provide dependable cloud computing environments.

In order to study dependability of cloud computing, the major cloud computing system (CCS) dependability attributes should be identified which can quantify the dependability of cloud in different aspects. Some important attributes for dependable cloud environments have been mentioned in [ 36 ] and include availability, reliability, performability, security, and recoverability.

Five major actors and related roles in cloud environments are described in the NIST Cloud Computing Standards Roadmap document [ 37 ]. These five participating actors are cloud provider, cloud consumer, cloud broker, cloud carrier and cloud auditor [ 37 ]. Table 1 presents the definitions of these actors. Consumers and providers are the two main roles among these actors and are significant in most cloud computing scenarios. Therefore, this research paper focuses on these two actors.

Pan and Hu [ 36 ] proposed a summary of the relative strengths of dependency on different dependability attributes for each class of actors shown in Table 1 . This empirical analysis shows that ‘Availability’, ‘Reliability’, and ‘Performability’ are the three critical requirements for cloud consumers and providers. Thus, from the consumers’ viewpoint, there is a great requirement for highly available and reliable cloud services. On the other hand, while cloud providers understand the necessity of providing highly available and reliable services to meet the quality of service (QoS) required by the SLA, they also prefer to have highly utilized systems to achieve more profits [ 35 ]. Under these considerations, providing dependable cloud environments which can meet the desires of both cloud consumers and providers is a new challenge.

There are many different design principles such as ‘Eliminating Single Point of Failure’ [ 27 , 38 ], ‘Disaster Recovery’ [ 39 , 40 ] and ‘Real-Time and Fast Failure Detection’ [ 41 , 42 , 43 ] that can help achieve high availability and reliability in cloud computing environments. A single point of failure (SPOF) in cloud computing datacenters can occur at both the software and hardware levels. A single point of failure can be interpreted as a probable risk which can cause the failure of the entire system. Applying redundancy is an important factor for avoiding SPOFs [ 27 ]. In simple words, every vital component should exist in more than one instance. When a disaster occurs at a main component in a cloud datacenter, system operations can be switched to backup services, and efficient techniques are required for data backup and recovery [ 40 ]. The time taken to detect a failure is one of the key factors in cloud computing environments. So, fast and real-time failure detection to identify or predict a failure in the early stages is one of the most important principles for achieving high availability and reliability in cloud systems [ 42 , 43 ]. Moreover, there are some new trends in cloud computing, such as SDN-based technologies like Espresso, that make cloud infrastructures more reliable and available at the network level [ 44 ].
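The effect of redundancy on availability can be illustrated with a short calculation. The sketch below assumes that replicas fail independently and that the service stays up as long as at least one replica is up; both are simplifying assumptions made here for illustration, and the function names are hypothetical rather than taken from the cited works.

```python
def parallel_availability(component_availability: float, replicas: int) -> float:
    """Availability of `replicas` independent copies where one working copy suffices."""
    if not 0.0 < component_availability < 1.0:
        raise ValueError("component availability must be strictly between 0 and 1")
    if replicas < 1:
        raise ValueError("at least one replica is required")
    # The service is down only when every replica is down at the same time.
    return 1.0 - (1.0 - component_availability) ** replicas


def replicas_needed(component_availability: float, target: float) -> int:
    """Smallest number of replicas whose combined availability reaches `target`."""
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    count = 1
    while parallel_availability(component_availability, count) < target:
        count += 1
    return count


if __name__ == "__main__":
    for n in range(1, 5):
        print(n, round(parallel_availability(0.9, n), 6))
    print("replicas for 99.95% from 90% components:", replicas_needed(0.9, 0.9995))
```

In practice failures are often correlated (shared power, network or software faults), so this calculation should be read as an optimistic bound rather than a prediction.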

A systematic review of high availability in cloud computing was undertaken by Endo et al. [ 45 ]. This study aimed to discuss high availability mechanisms and important related research questions in cloud computing systems. According to the results, the three most useful HA solutions are ‘Failure Detection’, ‘Replication’, and ‘Monitoring’. In addition, the review results show that the ‘Experiment’ approach is used more than other approaches for evaluating HA solutions. Although the paper proposed some research questions and tried to answer them, its results are closer to those of a mapping study. Furthermore, it did not consider the different cloud actors’ requirements in terms of high availability.

Liu et al. [ 46 ] applied analytical modeling and sensitivity analysis to investigate the factors that affect cloud infrastructure availability, such as repair policy and system parameters. In this study, the replication method was used to provide physical machine availability. Two different repair policies were considered, and a Stochastic Reward Nets availability model was developed for each policy. The numerical results showed that both policies provide the same level of availability but at different cost levels. In this paper, the system availability was assessed through modeling without considering the failure types. In addition, the limited number of hot spares and repair policies may not be sufficient to evaluate large-scale cloud environments.

The availability and performance of storage services in private cloud environments were evaluated in [ 47 ]. In this study, a hierarchical model was applied for evaluation which consists of Markov chain, stochastic Petri Nets and reliability block diagrams. The result of this study showed that the adoption of redundancy could reduce the probability that timeouts occurred and users were attended to due to failures.
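As a rough illustration of the kind of building blocks such hierarchical models combine, the sketch below computes the steady-state availability of a single repairable component (the two-state up/down Markov model, where availability equals MTTF / (MTTF + MTTR)) and composes components in series as a reliability block diagram would. It is a generic textbook calculation under an independence assumption, not the model used in [ 47 ]; the function names and the numeric values are hypothetical.

```python
import math


def steady_state_availability(mttf_hours: float, mttr_hours: float) -> float:
    """Long-run availability of a two-state (up/down) repairable component."""
    if mttf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTTF must be positive and MTTR non-negative")
    return mttf_hours / (mttf_hours + mttr_hours)


def series_availability(*availabilities: float) -> float:
    """Series block: the system is up only if every component is up."""
    return math.prod(availabilities)


if __name__ == "__main__":
    # Hypothetical values chosen only to show the calculation.
    disk = steady_state_availability(mttf_hours=9_990, mttr_hours=10)
    controller = steady_state_availability(mttf_hours=4_995, mttr_hours=5)
    print("disk:", disk)
    print("controller:", controller)
    print("storage path (series):", series_availability(disk, controller))
```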

An et al. [ 32 ] presented a system architecture and framework of fault tolerance middleware to provide high availability in cloud computing infrastructures. This middleware uses virtual machine replicas according to a user-defined replication algorithm. Finding an optimal replica placement in the cloud infrastructure is an important issue in this study, so the authors developed an algorithm for online VM replica placement.

Snyder et al. [ 48 ] presented an algorithm for evaluating the reliability and performance of cloud computing datacenters. In this study, a non-sequential Monte Carlo simulation was used to analyze the system reliability. This paper demonstrated that using this approach can be more efficient and flexible for cloud reliability evaluation when there is a set of discrete resources.
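The general idea of non-sequential (state-sampling) Monte Carlo can be sketched as follows. The example assumes a k-out-of-n system of identical, independent servers and estimates the probability that enough servers are up; this toy system, the parameter values and the function names are assumptions made here for illustration and are not the algorithm of [ 48 ]. An exact binomial calculation is included so the estimate can be checked.

```python
import math
import random


def estimate_k_of_n_reliability(up_probability: float, n: int, k: int,
                                samples: int = 100_000, seed: int = 0) -> float:
    """Estimate P(at least k of n servers are up) by sampling system states."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    successes = 0
    for _ in range(samples):
        # Non-sequential sampling: each state is drawn independently of time.
        servers_up = sum(1 for _ in range(n) if rng.random() < up_probability)
        if servers_up >= k:
            successes += 1
    return successes / samples


def exact_k_of_n_reliability(up_probability: float, n: int, k: int) -> float:
    """Closed-form binomial result for the same system, used as a cross-check."""
    p = up_probability
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    print("estimate:", estimate_k_of_n_reliability(0.9, n=5, k=4))
    print("exact:   ", exact_k_of_n_reliability(0.9, n=5, k=4))
```

Sampling becomes useful when the system structure is too irregular for a closed form; the toy system here has one only so that the estimate can be validated.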

A cloud scoring system was developed in [ 49 ] that can integrate with a Stochastic Petri Net model. In this study, while an analytical model was used for evaluating the application deployment availability, the scoring system can suggest the optimal HA-aware option according to the energy efficiency and operational expenditure. The proposed model in this study can consider different types of failures, repair policies, redundancy, and interdependencies of application’s components. One of the main contributions of this study is proposing an extensible integrated scoring system for offering the suitable deployment based on the users’ requirements.

A comparative evaluation of two redundancy and proactive fault tolerance techniques was proposed in [ 50 ], based on the cloud providers’ and consumers’ requirements. This evaluation was proposed in terms of cloud environment’s availability based on the consumers’ viewpoint and cost of energy from the providers’ viewpoint. The result of this study showed that the proactive fault tolerance methods can be better than traditional redundancy technique in terms of cloud consumers’ costs and execution success rate.

A framework for amending GreenCloud simulator was proposed in [ 51 ] to support the high availability features in simulation processes. In this study, the necessity of a simulator that can provide the HA features was addressed. Then, the phased communication application (PCA) scenario was implemented to evaluate the HA features, workload modeling and scheduling.

Table 2 presents a comparative analysis of some availability and reliability solutions. The comparative factors in this table are main idea, advantages, challenges and evaluation metrics.

The lack of solutions for quantitatively assessing the availability of provided cloud services is one of the main issues and gaps. In addition, according to the literature review, it seems that VM performance overhead [ 52 ] can affect the system availability. Furthermore, common high availability techniques such as VM migration can also affect the VM performance overhead. So, considering the mutual impact of VM performance overhead and high availability solutions in cloud environments is another important research gap in the current CCS study area.

Proposed reference roadmap

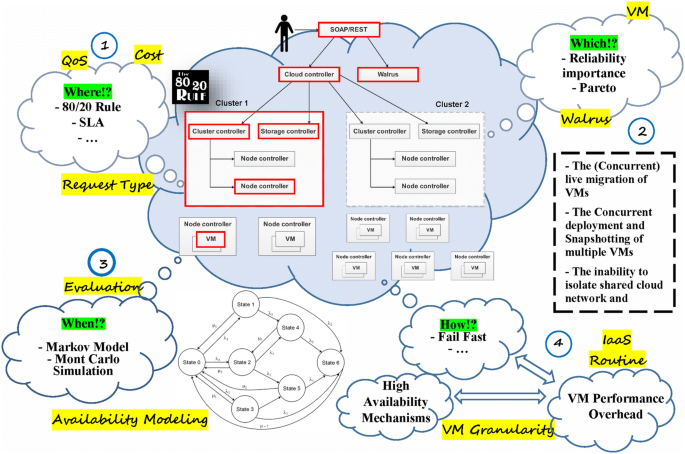

This section presents the proposed reference roadmap in terms of the big picture shown in Fig. 1 . The goal of this section is to cover all the different aspects of the reliability and availability issues in the cloud computing research area. So, the area in the big picture is divided into four major steps. Figure 1 illustrates the general scheme of the proposed big picture of our reference roadmap. This big picture represents all aspects and factors of high availability and reliability issues in cloud computing environments. In this big picture, a Eucalyptus-based architecture illustrates the major and key components of a cloud computing environment. By determining the key cloud components in this architecture, we will be able to identify the most important factors that can affect system high availability and reliability. The main components include VM, node controller, cluster controller, storage controller, cloud controller and Walrus [ 23 ]. Four major steps to achieve a highly available and reliable cloud system are posed in terms of four pivotal questions. In addition, other related issues such as cloud computing nodes’ performance have been considered in this big picture.

Fig. 1 Proposed big picture of high availability and reliability issue in cloud computing environments

By proposing this big picture, we aim to take a comprehensive look at the problem area and to have a reference roadmap for taking each step to solve the problem in cloud computing environments. It is believed that by taking these four steps, that is, by answering the four questions, a highly available and reliable cloud computing system will be obtained, and while satisfying the cloud consumers’ requirements, the providers’ concerns could also be considered.

In the rest of this section, the different parts of our proposed big picture will be explained in detail. Each question will be discussed, and currently available approaches and solutions for each part will be proposed. In future work, we aim to study each part in more detail as independent research and use this big picture as a reference roadmap, as mentioned earlier.

“ Where are the vital parts for providing HA in the body of cloud computing datacenters? ”

There are many different geographically distributed cloud clusters in a cloud environment. Cloud computing datacenters comprise a stack of different components, such as physical servers with heterogeneous hardware characteristics like different processor speed, disk and memory size [ 53 ]. In addition, because workloads are heterogeneous and dynamic, datacenters run a vast number of applications with diverse characteristics. In particular, an application can be divided into one or more processes running on dedicated virtual machines (VMs), and the resource requirement differs from VM to VM [ 53 ]. Therefore, by posing the ‘Where?’ question at the first step, we are trying to find out where the vital parts are, that is, the parts for which high availability is a basic requirement with high priority. Figure 2 illustrates the proposed roadmap of the “Where” step. The following possible approaches are available for offering primary solutions for the ‘Where?’ question.

Fig. 2 Proposed roadmap of the “Where” step

• SLA-based approach

A service level agreement is simply defined as a part of a standardized service contract where a service is formally defined. It is an agreement about the quality of a provided service. The agreement describes terms regarding service usage rate and delivery which are agreed between the service providers and the consumers. SLAs contain the following parts [ 54 ]:

- The agreement context: signatory parties, generally the consumer and the provider, and possibly third parties entrusted to enforce the agreement, an expiration date, and any other relevant information.

- A description of the offered services, including both functional and non-functional aspects such as QoS.

- Obligations of each party, which are mainly domain-specific.

- Policies: penalties incurred if an SLA term is not respected and an SLA violation occurs.

Service level agreements can also be discussed at these three different levels:

- Customer-based SLA: It is a type of agreement with a single customer that covers all the necessary services. This is similar to the SLA between an IT service provider and the IT department of an organization for all required IT services.

- Service-based SLA: It is defined as a general agreement for all customers who are using the services delivered by the service provider.

- Multi-level SLA: This kind of agreement can be split into different levels, with each level addressing a different set of customers for the same services.

By using this approach, we can focus on the SLAs at the ‘Multi-level SLA’ level to find the clusters that serve users whose basic requirement and first priority for running their tasks is high availability across the entire cloud system. Once these clusters are determined, the vital parts of the system are known; if high availability can be provided for these clusters, a highly available system is obtained and more benefits are gained by preventing SLA violation penalties.
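One possible way to operationalize the SLA-based approach is sketched below. It assumes that each SLA records an availability target and a violation penalty, and that a cluster is "vital" when it serves at least one SLA whose target meets a chosen threshold; the data model, the threshold value and all names are hypothetical and only illustrate the selection step described above.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Sla:
    customer: str
    availability_target: float  # e.g. 0.999 for "three nines"
    violation_penalty: float    # penalty per violation, in an arbitrary currency unit


@dataclass
class Cluster:
    name: str
    slas: List[Sla] = field(default_factory=list)


def vital_clusters(clusters: List[Cluster], min_target: float = 0.999) -> List[str]:
    """Names of clusters serving strict SLAs, ordered by total penalty exposure."""
    exposure: Dict[str, float] = {}
    for cluster in clusters:
        strict = [s for s in cluster.slas if s.availability_target >= min_target]
        if strict:
            exposure[cluster.name] = sum(s.violation_penalty for s in strict)
    # Highest penalty exposure first: these clusters benefit most from HA measures.
    return sorted(exposure, key=lambda name: exposure[name], reverse=True)


if __name__ == "__main__":
    clusters = [
        Cluster("east", [Sla("bank", 0.9999, 500.0), Sla("blog", 0.99, 5.0)]),
        Cluster("west", [Sla("shop", 0.999, 900.0)]),
        Cluster("lab", [Sla("batch", 0.95, 1.0)]),
    ]
    print(vital_clusters(clusters))
```

Ranking by penalty exposure is one design choice among several; a provider could equally rank by revenue or by the number of strict SLAs served.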

• Using 80/20 Rule

The Pareto principle, or 80/20 rule, is a useful rule in the computer science world [ 55 , 56 , 57 ]. In simple words, it says that in anything, a few (about 20%) are vital and many (about 80%) are trivial. With regard to cost in cloud computing environments, the 80/20 rule says that 80% of the outcomes will come from 20% of the effort [ 58 ]. In addition, the 80/20 rule was leveraged in our previous study to provide a highly reliable architecture in cloud environments [ 57 ]. According to our research results, there are a reliable sub-cluster and a highly reliable zone in cloud computing datacenters which can be used to serve the most profitable requests. This rule can be applied from two different perspectives:

80% of cloud service providers’ profits may come from 20% of customers.

80% of requested services consist of just 20% of the entire cloud providers’ services.

So in this step, we can limit the domain in which high availability must be provided in cloud computing clusters, based on the assumption that the majority of cloud providers’ profits is earned by offering 20% of the services to 20% of the customers. Therefore, if high availability can be guaranteed in the clusters that belong to that 20% of customers, it can be claimed that cloud providers will make the maximum profit while offering a system with high availability.
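The selection of the "vital few" customers can be sketched as follows. This is only an illustration under stated assumptions: the function name `vital_few`, the `share` parameter and the profit figures are hypothetical and do not come from measured data.

```python
def vital_few(profit_by_customer, share=0.8):
    """Return the smallest top-ranked group of customers whose combined
    profit reaches `share` of the total profit."""
    total = sum(profit_by_customer.values())
    # Rank customers from most to least profitable.
    ranked = sorted(profit_by_customer.items(), key=lambda kv: kv[1], reverse=True)
    selected, running = [], 0.0
    for customer, profit in ranked:
        if running >= share * total:
            break
        selected.append(customer)
        running += profit
    return selected


# Hypothetical profit figures, for illustration only.
profits = {
    "c1": 520, "c2": 300, "c3": 50, "c4": 40, "c5": 30,
    "c6": 25, "c7": 15, "c8": 10, "c9": 5, "c10": 5,
}
print(vital_few(profits))  # ['c1', 'c2']
```

In this made-up example, two of ten customers (20%) account for more than 80% of the profit, so the clusters serving them would be the first candidates for high availability guarantees.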

• Task-based approach

Another approach for this step is to provide high availability for incoming requests and workloads according to a classification of the requested tasks, using a suitable mechanism for each task class. If cloud computing tasks can be classified according to their resource requirements (CPU, memory, etc.), then high availability of cloud services and tasks can be provided by hosting each task in a suitable cluster, which avoids task failures caused by resource limitations. For instance, a memory-intensive task is forwarded to a cluster with sufficient memory resources, so that it can complete without execution interruptions caused by out-of-memory errors.
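A minimal sketch of such resource-aware placement is given below. The `Cluster` and `Task` types, their fields and the tie-breaking policy (prefer the cluster that keeps the most spare memory) are assumptions made for illustration, not part of the proposed architecture.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str
    free_cpu_cores: int
    free_memory_gb: float


@dataclass
class Task:
    name: str
    cpu_cores: int
    memory_gb: float


def place(task, clusters):
    """Return a cluster that can host the task, or None if none fits.

    Among the clusters with enough CPU and memory, the one that keeps the
    most spare memory after placement is preferred (hypothetical policy).
    """
    candidates = [
        c for c in clusters
        if c.free_cpu_cores >= task.cpu_cores and c.free_memory_gb >= task.memory_gb
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda c: c.free_memory_gb - task.memory_gb)


clusters = [Cluster("cpu-heavy", 64, 32.0), Cluster("mem-heavy", 16, 512.0)]
chosen = place(Task("in-memory-index", 8, 128.0), clusters)
print(chosen.name)  # mem-heavy
```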

Many different types of application software and tasks can be executed in a distributed environment, such as high-performance computing (HPC) and high-throughput computing (HTC) applications [ 59 ]. Running HPC applications, which use multi-threaded and multi-process programming models, requires a massively parallel infrastructure. HPC applications can efficiently execute tightly coupled parallel jobs within a single machine, and they commonly use the message passing interface (MPI) for the required inter-process communication. Distributed computing environments, on the other hand, have been very successful at executing loosely coupled applications using workflow systems. Loosely coupled applications may involve numerous tasks that can be scheduled separately on different heterogeneous resources to achieve the goals of the main application. Tasks can be large or small, compute-intensive or data-intensive, and uniprocessor or multiprocessor. Moreover, they may be loosely coupled or tightly coupled, heterogeneous or homogeneous, and static or dynamic in nature. The aggregate number of tasks, the quantity of required computing resources and the volume of data to be processed can be small but also extremely large [ 59 ].

As mentioned earlier, cloud computing promises highly available and efficient services for running applications such as web applications, social networks and messaging apps. Keeping this promise requires a scalable infrastructure made up of many computing clusters, which are shared by tasks with different requirements and quality of service in terms of availability, reliability, latency and throughput [ 60 ]. Therefore, to provide the required service level (e.g. highly available services), a good understanding of task resource consumption (e.g. memory usage, CPU cycles and storage) is essential.

Because cloud computing has been in its infancy in recent years, the applications that will run on clouds are not well defined [ 59 ]. Li and Qiu [ 61 ] argued that the amount of cloud infrastructure resources required for current and future tasks can be predicted from the major trends of the last decade in large-scale and grid computing environments. First, individual jobs are mainly split into two task categories, data-intensive and compute-intensive, and it can be said that there are no tightly coupled parallel jobs. Second, the duration of individual tasks is diminishing every year; few tasks still run for longer than 1 h, and the majority require only a few minutes to complete. Third, compute-intensive jobs are divided into DAG-based workflows and bags-of-tasks (BoTs), whereas data-intensive jobs may use several different programming models.

A task classification based on the resource consumption of tasks running in the Google Cloud Backend has been carried out in [ 60 ]. The results of this study are presented in Table 3 . In this study, cloud computing tasks are classified by their execution duration, number of required CPU cores and amount of required memory. The amount of resources needed by each class of cloud tasks is expressed with three levels, ‘Small’, ‘Med’ (Medium) and ‘Large’, which are abbreviated as ‘s’, ‘m’ and ‘l’, respectively, in the ‘Final Class’ column of Table 3 . The three characters in this column represent the duration, cores and memory, in that order. In addition, ‘*’ means that all three levels are possible for that factor. For instance, the Final Class ‘sm*’ refers to a class of tasks with a ‘small’ execution duration (short-running tasks) that require a ‘Med’ number of CPU cores and consume a ‘small’, ‘Med’ or ‘large’ amount of memory.
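The ‘Final Class’ notation can be decoded mechanically. The helper below is a small sketch of that reading; the function name `decode_final_class` and the dictionary layout are illustrative choices, not part of [ 60 ].

```python
LEVELS = {"s": "small", "m": "med", "l": "large"}
FACTORS = ("duration", "cores", "memory")  # order of the three characters


def decode_final_class(code):
    """Expand a three-character class code such as 'sm*' into the
    possible levels of each factor."""
    if len(code) != len(FACTORS):
        raise ValueError("a final class code has exactly three characters")
    decoded = {}
    for factor, letter in zip(FACTORS, code):
        if letter == "*":
            decoded[factor] = list(LEVELS.values())  # any level is possible
        else:
            decoded[factor] = [LEVELS[letter]]
    return decoded


print(decode_final_class("sm*"))
# {'duration': ['small'], 'cores': ['med'], 'memory': ['small', 'med', 'large']}
```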

In addition, Foster et al. [ 59 ] characterize the clouds’ application to be loosely coupled, transaction oriented (small tasks in the order of milliseconds to seconds) and likely to be interactive (as opposed to batch-scheduled).

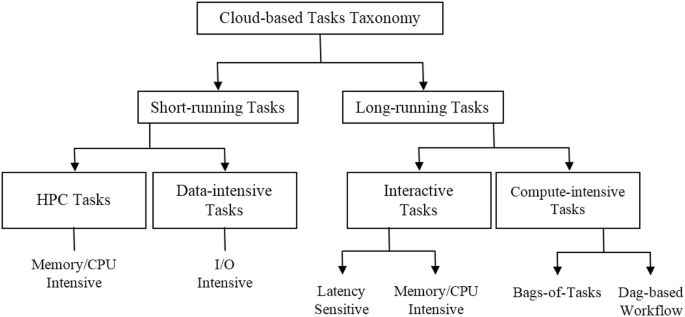

The proposed taxonomy of cloud computing tasks is presented in Fig. 3 . According to our studies, cloud tasks can be classified by duration into long-running and short-running tasks. Based on Table 3 , task durations are bimodal: either somewhat less than 30 min or longer than 18 h [ 60 ]. This behavior results from the characteristics and types of application tasks running on cloud infrastructures. There are two types of long-running tasks. The first are interactive or user-facing tasks, which run continuously so that they can respond quickly to user requests. The second are compute-intensive tasks, such as processing web logs [ 60 ]. Interactive tasks consume a large amount of CPU and memory during periods of high user request rate, which means that they are both CPU-intensive and memory-intensive. In addition, since they handle end-user interactions, they are likely to be latency-sensitive. Short-running tasks come in several types and dominate the task population. Some are highly parallel operations such as index lookups and searches [ 60 ]; HPC tasks can also be mentioned here. Some are short memory-intensive tasks, which include memory-intensive operations such as MapReduce workers that compute an inverted index; these are specified as class 2 in Table 3 . Others are short CPU-intensive tasks, such as MapReduce workers that compute an aggregation of a log. Finally, typical data-intensive workloads consist of short-running, data-parallel tasks. For data-intensive applications, data must be moved across the network, which represents a potential bottleneck [ 62 ].

Cloud tasks taxonomy

The proposed cloud task taxonomy and the preceding discussion give a better understanding of cloud tasks’ requirements and suggest the best approach for providing HA for each task type. As the conclusion of this section, interactive tasks require the most HA from the customer’s perspective. Because they interact with end users and consume a large amount of CPU and memory during periods of high user request rate, their availability can be improved by detecting these periods and providing more CPU and memory resources to this group of tasks. In addition, because they are latency-sensitive, these resources should be provided within a specific time threshold. Likewise, for other types of tasks, the requirements and resources needed for high availability can be provided.

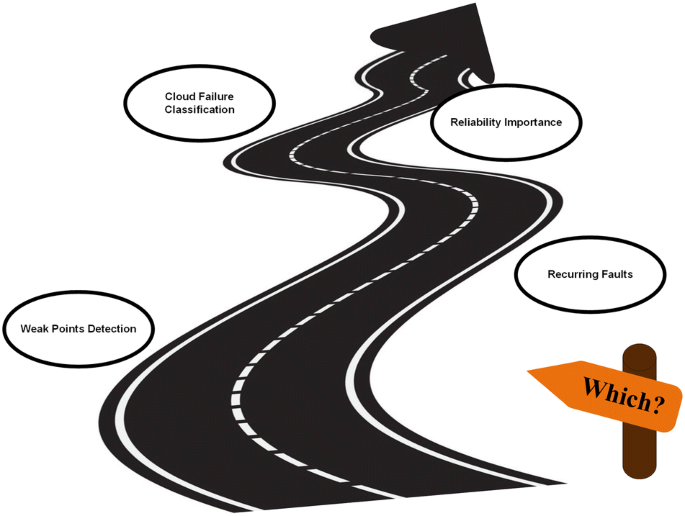

“Which components play key roles to affect cloud computing HA and reliability?”

As mentioned earlier, we identified the main constituent components of a cloud computing architecture, inspired by the Eucalyptus architecture. By answering the “Which” question in this step, we try to find the system’s weak points in terms of HA and reliability, as illustrated in Fig. 4 . Once these weak points are known, we can think about how to improve them and find suitable solutions in the next steps. To reach this goal, all cloud failures and their causes should be classified first. Next, the important reliability and HA measurement tools and metrics for evaluating the importance of each cloud component in the proposed architecture will be specified. Some main failure modes of CCSs have been proposed in [ 36 , 63 , 64 ], but different viewpoints exist, and proposing a comprehensive classification of cloud failures is one of the future works of this research. Table 4 presents a summary of these studies. The six main failure modes are software failures, hardware failures, cloud management system failures, security failures, environment failures and human faults.

Proposed roadmap of the “Which” step

One important aspect of software failures is database failure and missing data resources [ 36 ]. Therefore, one of the HA and reliability issues in cloud computing systems arises from user requests to unavailable or removed data resources. To provide HA and reliability for data-related services, the 80/20 rule can be used, based on the fact that most data requests access only a small part of the data [ 55 ]. Concentrating on hotspot data, which under the 80/20 rule normally make up less than 20% of the whole data resource, could therefore be one of the future approaches of this research for improving the system’s availability and reliability.

Hardware failure is another important failure mode in cloud computing datacenters. In [ 65 ], hardware failures in multiple datacenters were examined to determine explicit failure rates for different components, including disks, CPUs, memory and RAID controllers. One of the most important results of this study is that disk failure is the major source of failures in such datacenters [ 65 ]. Therefore, cloud storage devices or storage controllers, as identified in the proposed architecture, can be among the main components that affect system reliability. Based on the conclusion of [ 65 ], we propose the hypothesis that component failures in cloud computing datacenters follow the Pareto principle. Under this hypothesis, about 80% of cloud system failures would be related to storage failures. Testing this hypothesis is left as another future work of this paper.

Studies of the networks of CCSs show that most datacenter networks and switches are highly reliable, and that load balancers are the components that most often experience faults, due to software failures [ 26 , 70 ].

Cloud management system (CMS) failure is the last important failure class of Table 4 considered in this section. A CMS can be considered the manager of cloud computing services. It uses a combination of software and technologies to handle cloud environments. In other words, a CMS can be seen as a response to the management challenges of cloud computing. At a minimum, a CMS should be able to:

Manage a pool of heterogeneous resources.

Provide remote access for end users.

Monitor system security.

Manage resource allocation policies.

Manage tracking of resource usage.

As noted in [ 71 ], three different types of failures have been identified for CMSs: (1) technological failures that result from hardware problems, (2) management errors caused by hardware limitations, i.e. limited capability and capacity for processing information, and (3) middleware limitations in exchanging information between systems, which arise because different technologies use different information and data models. Currently, CMSs can detect such failures and follow pre-defined procedures to re-establish the communications infrastructure [ 71 ]. However, the most important type of failure is related to content and semantic issues. These failures occur when management systems operate with incorrect information, when data is changed erroneously during a translation or conversion process, or when data is misinterpreted. This kind of problem is still largely unsolved and remains serious.

Security is one of the fundamental reasons why the adoption and use of cloud services is avoided. It is often considered the main requirement for hosting critical applications in public clouds. Security failure, which is one of the most important cloud computing failure modes, can be divided into three general modes: customer faults, software security breaches and security policy failures.

According to the Gartner predictions for IT organizations and users for 2016 and beyond, through 2020, 95% of cloud computing security failures will occur because of customers’ faults [ 68 ]. Customers’ faults will therefore be the most important cause of cloud security failures. Cloud-based software security breaches are another serious issue. When cloud services are unavailable because of cloud system failures, users who depend on them for their daily activities face serious problems, and businesses can lose revenue, clients and reputation. Software security breaches reported in recent years, such as Adobe’s security breaches, resulted in service unavailability and cloud system failures [ 69 ].

Design faults and human-made interaction faults are currently among the dominant sources of failures in cloud computing environments [ 67 ]. An external fault can be viewed as an improper interaction of an operator with the system during operational time. Because environmental failures and human operational faults are external faults from the system’s viewpoint, they are beyond the scope of this paper. Based on the previous discussion, hardware component failures and database failures are the two most important hotspots for further study in this step. Likewise, the most influential factors among all cloud components can be specified as a new failure classification in future work on this research step. In addition, reliability and availability measures will be used to identify the components that most affect the availability and reliability of cloud systems. The second part of this step, in answering the “Which” question, therefore involves identifying and classifying these measures. The rest of this section introduces some of them.

• Recurring faults

As discussed earlier, another goal of this part is to classify the important reliability and HA evaluation measures. The study in [ 72 ] observes that once a PC component has failed, it is much more likely to fail again. Such failures are called recurring faults, and this observation can be helpful once the faulty components have been identified in this step.

• Reliability importance (RI)

Identifying the weak components of a complex system is not as easy as in a simple system such as a series system. For complex systems, the analyst needs a mathematical approach that provides the means of identifying the importance of each system component. Reliability importance (RI) is a suitable measure of the relative importance of each component with respect to the total reliability of the system. The reliability importance, $I_{R_i}$, of component $i$ in a system of $n$ components is given as [ 73 ]:

$$I_{R_i}(t) = \frac{\partial R_s(t)}{\partial R_i(t)} \tag{1}$$

where $R_s(t)$ is the system reliability at a certain time $t$, and $R_i(t)$ is the component reliability at a certain time $t$.

The RI measures the rate of change of the system reliability at a certain time t with respect to a change in the component’s reliability. The RI can also measure the probability that a component causes system failure at time t. Both the reliability of a component and its position in the system affect the reliability importance calculated in Eq. 1 .
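To illustrate the RI measure, consider a hypothetical system in which component 1 is in series with a parallel pair of components 2 and 3. Assuming independent components, the system reliability is linear in each component reliability, so the partial derivative equals the difference between the system reliability with component i perfect and with component i failed. The system structure and the numeric values below are assumptions made for illustration.

```python
def system_reliability(r):
    """Component 1 in series with the parallel pair (2, 3)."""
    r1, r2, r3 = r
    return r1 * (1 - (1 - r2) * (1 - r3))


def reliability_importance(system, r, i):
    """Partial derivative of the system reliability with respect to
    component i, valid when the system reliability is linear in r[i]."""
    up = list(r)
    up[i] = 1.0      # component i never fails
    down = list(r)
    down[i] = 0.0    # component i has failed
    return system(up) - system(down)


r = [0.9, 0.8, 0.7]  # hypothetical component reliabilities at time t
for i in range(3):
    print(i + 1, round(reliability_importance(system_reliability, r, i), 4))
```

In this sketch the series component has the largest importance (0.94, against 0.27 and 0.18 for the redundant pair), which reflects the remark that both the reliability and the position of a component influence its RI.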

So, in this step, the most important components which can have a high impact on cloud computing reliability will be identified. Therefore this step focuses on reliability issues as the first concern.

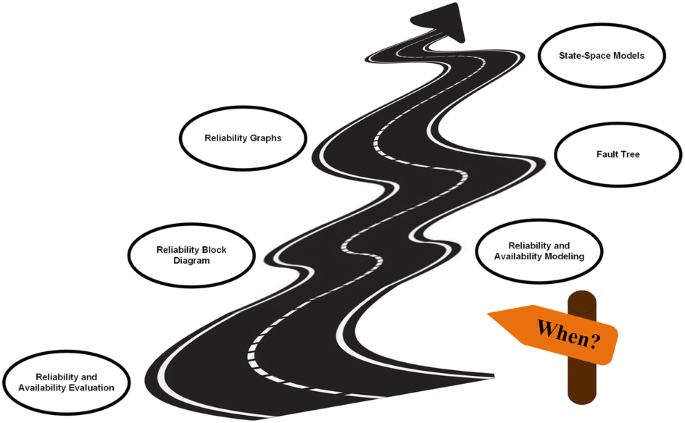

“When will reliability and HA decrease in cloud computing environments?”

By answering the “When” question, this step concentrates on evaluating the different system states from the HA and reliability points of view, in order to identify the causes and times of availability and reliability degradation. Figure 5 shows the proposed roadmap of this step.

Proposed roadmap of the “When” step

Availability and reliability models capture the failure and repair behavior of systems and their components. Model-based evaluation methods can be discrete-event simulation models, analytic models, or hybrid models that combine simulation and analytic parts. An analytic model is made up of a set of equations describing the system behavior, and the evaluation measures are obtained by solving these equations. In other words, analytic models are mathematical models that abstract the real-world system down to the system behaviors and characteristics of interest.

This section introduces the model types used for evaluating the availability and reliability of a system. These types can be classified into these three classes:

Combinatorial model types

The three most common combinatorial model types which can be used for availability and reliability modeling under certain assumptions are “Fault Tree”, “Reliability Block Diagram” and “Reliability Graph”. These three models consider the structural relationships amongst the system components for analytical/numerical validations.

• Reliability block diagram

The reliability block diagram (RBD) is an inductive method that can be used for the reliability and availability analysis of large and complex systems. The series/parallel configuration of an RBD helps to model the logical interactions between failures of complex system components [ 74 ]. RBDs can also be used to evaluate the dependability of complex and large-scale systems. In other words, an RBD represents the logical structure of a system with respect to how the reliability of each component affects the reliability of the entire system. In the RBD model, components can be organized into three configurations: “series”, “parallel” or “k-out-of-n”. These configurations can also be combined in a single block diagram. Each component of the same type that appears more than once in the RBD is assumed to be a copy with an independent and identical failure distribution. Every component has a failure probability, a failure rate, a failure distribution function or an unavailability attached to it. A survey of existing RBD-based reliability analysis techniques is given in [ 74 ].
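The three RBD configurations can be evaluated with the standard formulas for independent components, as in the sketch below. The function names and the numeric values are illustrative; the k-out-of-n formula additionally assumes identical components.

```python
from math import comb, prod


def series(reliabilities):
    """All blocks must work."""
    return prod(reliabilities)


def parallel(reliabilities):
    """At least one block must work."""
    return 1 - prod(1 - r for r in reliabilities)


def k_out_of_n(k, n, r):
    """At least k of n identical, independent blocks must work."""
    return sum(comb(n, j) * r**j * (1 - r) ** (n - j) for j in range(k, n + 1))


# Hypothetical example: two storage controllers in parallel, in series with
# a 2-out-of-3 group of compute nodes.
controllers = parallel([0.95, 0.95])
nodes = k_out_of_n(2, 3, 0.9)
print(round(series([controllers, nodes]), 4))
```

Combining the helper functions mirrors how configurations are combined in a single block diagram.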

• Reliability graphs

The reliability graph is a schematic way of evaluating availability and reliability. A reliability graph model consists of a set of nodes and edges, where the edges (arcs) represent components that have failure distributions. The graph has one node without any incoming edges, called the source, and one node without any outgoing edges, called the sink (or terminal or destination node). A system failure occurs when there is no path from the source to the sink. As in RBDs, the edges can have failure probabilities, failure rates or distribution functions.
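For small graphs, the source-to-sink reliability can be computed by brute force, as sketched below: every combination of working and failed edges is enumerated, and the probabilities of the combinations that still connect the source to the sink are summed. Independent edges are assumed, the example graph is hypothetical, and the enumeration is exponential in the number of edges, so this is only suitable for illustration.

```python
from itertools import product


def graph_reliability(edges, source, sink):
    """edges: list of directed (u, v, reliability) tuples."""
    total = 0.0
    for states in product([True, False], repeat=len(edges)):
        prob = 1.0
        adjacency = {}
        for (u, v, r), up in zip(edges, states):
            prob *= r if up else 1 - r
            if up:
                adjacency.setdefault(u, []).append(v)
        # Depth-first search over the edges that are working.
        stack, seen = [source], {source}
        while stack:
            node = stack.pop()
            for nxt in adjacency.get(node, []):
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        if sink in seen:
            total += prob
    return total


# Two disjoint two-hop paths from s to t, each edge with reliability 0.9.
edges = [("s", "a", 0.9), ("a", "t", 0.9), ("s", "b", 0.9), ("b", "t", 0.9)]
print(round(graph_reliability(edges, "s", "t"), 4))  # 0.9639
```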

• Fault tree

Fault trees are another type of combinatorial model widely used for assessing the reliability of complex systems through qualitative or quantitative analysis [ 75 ]. In a tree-like structure, a fault tree can represent all the sequences of component failures that cause the entire system to fail and stop functioning. The method visualizes the causes of failure that lead to the top event at different levels of detail, down to basic events. Fault Tree Analysis (FTA) is a common probabilistic risk assessment technique that enables investigation of the safety and reliability of systems [ 76 ].

The root of a fault tree is a single, well-defined undesirable event. In reliability and availability modeling, this undesirable event is the system failure. When the safety of the system is evaluated, the undesirable event is a potentially hazardous or unsafe condition. The fault tree is a schematic representation of a combination of events that can lead to the occurrence of a fault in the system.
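A quantitative evaluation of a small fault tree can be sketched as follows, assuming independent basic events and only AND and OR gates. The nested-tuple representation and the example probabilities are hypothetical.

```python
from math import prod


def failure_probability(node):
    """node is either a basic-event probability (float) or a tuple
    (gate, children) where gate is 'AND' or 'OR'."""
    if isinstance(node, (int, float)):
        return float(node)
    gate, children = node
    probs = [failure_probability(child) for child in children]
    if gate == "AND":   # output fails only if all inputs fail
        return prod(probs)
    if gate == "OR":    # output fails if any input fails
        return 1 - prod(1 - p for p in probs)
    raise ValueError(f"unknown gate: {gate}")


# Top event: system failure = power failure OR (disk A fails AND disk B fails).
tree = ("OR", [0.01, ("AND", [0.1, 0.1])])
print(round(failure_probability(tree), 4))  # 0.0199
```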

State-space models

The models discussed in the previous section are solved with algorithms that assume stochastic independence between the different system components. For availability or reliability modeling, it is therefore assumed that the failure or repair of a component is not affected by other failures in the system. To model more complicated system interactions, other types of availability and reliability models, such as state-space models, should be used.

State-space models constitute a powerful method for capturing dependencies amongst system components. This general approach to availability and reliability modeling uses a collection of stochastic variables that describe the state of the system at any arbitrary time. The Markov chain is an example of this model type. A Markov model is a stochastic model used for modeling complex systems, in which future states are assumed to depend only on the current state of the system and not on previous events. There are usually four known Markov models, which can be used in different situations; the Markov chain is the simplest type.

A Markov chain is a simple type of stochastic process that has the Markov property. The term “Markov chain” refers to the sequence of stochastic variables in this definition.
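A two-state chain (up/down) gives a minimal sketch of how a Markov chain yields an availability figure. The per-step failure probability `f` and repair probability `r` are hypothetical values; the long-run distribution is obtained by repeatedly applying the transition matrix.

```python
def step(dist, P):
    """One transition: new_dist[j] = sum_i dist[i] * P[i][j]."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def stationary(P, iterations=5000):
    """Approximate the long-run state distribution by power iteration."""
    dist = [1.0] + [0.0] * (len(P) - 1)  # start in state 0 (up)
    for _ in range(iterations):
        dist = step(dist, P)
    return dist


f, r = 0.01, 0.2            # hypothetical failure and repair probabilities
P = [[1 - f, f],            # from "up":   stay up, or fail
     [r, 1 - r]]            # from "down": get repaired, or stay down
pi = stationary(P)
print(pi[0])                # long-run probability of being up
```

For this chain the long-run probability of the up state equals r / (f + r), about 0.952 with the values above, which the iteration reproduces.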

Reliability/availability modeling of any system may produce less precise results. Graceful degradation makes it possible for a system to provide its services at a reduced level in the presence of failures. The Markov reward model (MRM) is the usual method of modeling gracefully degradable systems [ 77 , 78 , 79 , 80 ]. In addition, the other common model types are: (1) Markov reward model or irreducible semi-Markov reward model and (2) stochastic reward nets.
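The idea behind a Markov reward model can be sketched by attaching a reward (here, the delivered service level) to each state of a chain and computing the long-run expected reward. The three states, the transition probabilities and the reward values are assumptions made for illustration only.

```python
def stationary(P, iterations=5000):
    """Approximate the long-run state distribution by power iteration."""
    n = len(P)
    dist = [1.0] + [0.0] * (n - 1)
    for _ in range(iterations):
        dist = [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]
    return dist


# States: 0 = full service, 1 = degraded service, 2 = failed.
P = [
    [0.9, 0.1, 0.0],
    [0.4, 0.5, 0.1],
    [0.0, 0.5, 0.5],
]
rewards = [1.0, 0.5, 0.0]   # fraction of nominal service delivered per state

pi = stationary(P)
expected_reward = sum(p * w for p, w in zip(pi, rewards))
print(round(expected_reward, 4))
```

With these numbers the system spends most of its time in the full-service state, and the degraded state still contributes half of the nominal service to the long-run average, which is what distinguishes a gracefully degradable system from a simple up/down model.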

Non-state-space models help to achieve efficiency in specifying and solving the reliability evaluation, but they assume that components are completely independent. For example, in RBDs, fault trees or reliability graphs, the components are treated as completely independent entities in terms of failure and repair behavior; that is, a component failure cannot affect the function of another component. Markov models, however, can model systems that violate the assumptions made by non-state-space models, but at the cost of state space explosion.

Hierarchical models

Hierarchical models can assist in solving the state space explosion problem. They can allow the combination of random variables that show different system parameters to have a general distribution [ 81 ]. Therefore, by this approach, we can obtain more realistic reliability/availability models to analyze the complex systems. In other words, large-scale models can be avoided using hierarchical model composition. If the storage issue can be solved, the specification problem will be solved by using briefer model specifications which are transformable to the Markov models.

In summary, in the context of availability and reliability modeling, many of the initial works focus on the use of Markov chains [ 82 , 83 , 84 , 85 , 86 ], because a cloud computing system is effectively treated as a complex availability problem. Other studies concentrate on conceptual problems, priority and hierarchical graphs, or the development of performance indices [ 87 ]. As stated earlier, combinatorial model types such as RBDs consider the structural relationships amongst the system components and represent the logical structure of a system with respect to how the reliability of its components affects the system’s reliability. State-space models such as Markov chains can model more complicated interactions between components. Therefore, hierarchical hybrid models [ 88 ], for example combinations of RBDs and Generalized Stochastic Petri Nets (GSPNs) [ 89 ] or of RBDs and an MRM [ 90 ], appear more suitable for evaluating the availability and reliability of cloud-based data centers.

To better understand reliability/availability models, the relationship between availability and reliability, and their relationship with maintainability, need to be considered in detail in order to determine the degradation states, as in the study in [ 91 ]. Table 5 summarizes these relationships.

Availability is defined as the probability that the system is operating properly at a given time t and when it is accessed for use. In other words, availability is defined as the probability that a system is functional and up at a specified time. At first, it would seem that if a system has a high availability, then it is also expected to have a high reliability. However, this is not necessarily true [ 91 ], as shown in Table 5 .

Reliability shows the probability of a system/component for performing the required functions in a period of time without failure. It does not contain any repair process in its definition. It also accounts for the period of time that it will take the system/component to fail during its operating time. Therefore, according to the definition of availability, it can be said that availability is not only a function of reliability, but it is also a function of maintainability. Table 5 shows the reliability, maintainability, and availability relationships. From this table, it should be noted that an increase in maintainability means a decrease in the repair time and maintenance actions period. As shown in Table 5 , if the reliability can be held constant, even at a high value, this does not directly mean providing high availability, because as the time to repair increases, the availability will decrease. Therefore, even a system with a low reliability could have a high availability provided that the repair time does not take too long.
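The interplay described above can be illustrated numerically with the commonly used steady-state relation A = MTBF / (MTBF + MTTR). This relation and the numbers below are assumptions introduced for illustration; they are not taken from Table 5.

```python
def steady_state_availability(mtbf_hours, mttr_hours):
    """Long-run availability from mean time between failures and
    mean time to repair."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Same (high) reliability, increasing repair time: availability drops.
for mttr in (1, 10, 100):
    print("MTBF=1000 h, MTTR=%d h -> %.4f" % (mttr, steady_state_availability(1000, mttr)))

# Low reliability but very fast repair: availability is still high.
print("MTBF=100 h, MTTR=0.1 h -> %.4f" % steady_state_availability(100, 0.1))
```

The last case shows a system that fails ten times more often than the others but is still more available than the slow-repair cases, because its repair time is short.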

Finally, answering the ‘When’ question requires an appropriate definition of availability. The definition of availability can be flexible, depending on the types of downtime and the actors’ points of view considered in the availability analysis. Therefore, different definitions and classifications of availability can be offered. A classification of availability types has been proposed in [ 91 , 92 ]. In the following, four common types of availability are introduced briefly:

• Instantaneous availability

Instantaneous availability is defined as the probability that a system is operating at any given time. This definition is similar to that of the reliability function, which gives the probability that a system will function at the given time t. Unlike reliability, however, instantaneous availability contains information on maintainability. According to this definition, the system is operational at time t if either of the following conditions is met:

The required function has been properly provided during the whole time t, with probability R(t);

The required function has been properly provided since the last repair at time u, 0 < u < t, with the following probability:

$$\int_0^t R(t-u)\, m(u)\, du$$

where m(u) is the system renewal density function.

The instantaneous availability is calculated as:

$$A(t) = R(t) + \int_0^t R(t-u)\, m(u)\, du$$

• Mean availability

The average uptime availability, or mean availability, is the proportion of the mission time or time period during which a system is available for use. It is the mean value of the instantaneous availability over a specific period of time, such as (0, T).
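Writing A(t) for the instantaneous availability, this definition can be expressed as the time average below. The symbol $\overline{A}(T)$ is a notation introduced here for the mean availability over (0, T).

$$\overline{A}(T) = \frac{1}{T} \int_0^T A(t)\, dt$$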

• Steady-state availability

The steady-state availability is the limit of the instantaneous availability as time goes to infinity. In practice, the instantaneous availability function approaches the steady-state value once the time reaches about four times the MTBF. The steady-state availability can therefore be calculated using the following equation:

$$A(\infty) = \lim_{t \to \infty} A(t)$$
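As a worked case, assume a single repairable component with constant failure rate $\lambda$ and constant repair rate $\mu$ that starts in the working state. These exponential assumptions are introduced here for illustration and are not required by the general definition. Writing A(t) for the instantaneous availability, the standard two-state result is:

$$A(t) = \frac{\mu}{\lambda + \mu} + \frac{\lambda}{\lambda + \mu}\, e^{-(\lambda + \mu) t}, \qquad \lim_{t \to \infty} A(t) = \frac{\mu}{\lambda + \mu}$$

With mean time to failure $1/\lambda$ and mean time to repair $1/\mu$, the limit is the familiar ratio of mean up time to the sum of mean up time and mean repair time. At $t = 4/\lambda$ the exponential factor is at most $e^{-4} \approx 0.018$, so the transient term has practically vanished, which is consistent with the rule of thumb of about four times the MTBF.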

• Operational availability

Operational availability is an important measure for evaluating system effectiveness and performance. It evaluates the system availability including all sources of downtime, such as diagnostic downtime, administrative downtime and logistic downtime. The operational availability is calculated as:

$$A_o = \frac{\text{Uptime}}{\text{Operating cycle}}$$

where the operating cycle is the overall time of the investigated operation period, whereas Uptime is the system’s total functional time during the operating cycle. It is important to note that the availability a customer actually experiences is the operational availability, that is, the availability according to the events that actually happened to the system.
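The calculation can be sketched as follows, taking the uptime as the operating cycle minus the sum of all recorded downtime. The downtime categories and figures are hypothetical.

```python
def operational_availability(operating_cycle_hours, downtimes_hours):
    """Uptime divided by operating cycle, where uptime is the operating
    cycle minus every recorded source of downtime."""
    downtime = sum(downtimes_hours.values())
    if downtime > operating_cycle_hours:
        raise ValueError("downtime cannot exceed the operating cycle")
    uptime = operating_cycle_hours - downtime
    return uptime / operating_cycle_hours


# Hypothetical 30-day operating cycle (720 h) with three downtime sources.
downtimes = {"diagnostic": 2.0, "administrative": 1.5, "logistic": 3.7}
print(round(operational_availability(720, downtimes), 4))  # 0.99
```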

“How to provide high availability and reliability while preventing performance degradation or supporting graceful degradation?”

As the last important step that should be taken, suitable solutions will be chosen to provide HA and reliability, while supporting graceful degradation based on the previous results as shown in Fig. 6 . Table 6 presents fault tolerance (FT) methods classification in cloud computing environments.

Proposed roadmap of the “How” step

By identifying the states that have higher failure rates and specifying their causes, based on the results of the “Where?”, “Which?” and “When?” steps, we will be able to provide highly available and reliable services in our cloud computing systems. Appropriate action can then be taken according to the specific occurrence and failure type, because there is no one-size-fits-all solution to HA and reliability issues.

Fault tolerance mechanisms deal with the quick repair and replacement of faulty components to keep the system running. FT in cloud computing systems is the ability to withstand the abrupt changes caused by different types of failures. The recovery point objective (RPO) and the recovery time objective (RTO) are two major parameters in fault management. The RPO is the maximum amount of data that may be lost as a result of a fault or disaster, whereas the RTO is the maximum acceptable downtime for recovering from faults [ 93 ].
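A simple check of an incident against these two parameters is sketched below. It treats the RPO as a bound on the time window of lost data (time since the last backup) and the RTO as a bound on the downtime, which is an interpretation assumed here; the function name and the timestamps are hypothetical.

```python
from datetime import datetime, timedelta


def meets_objectives(last_backup, failure, restored, rpo, rto):
    """Return (rpo_met, rto_met) for a single incident."""
    data_loss_window = failure - last_backup   # work done since last backup
    downtime = restored - failure              # time needed to recover
    return data_loss_window <= rpo, downtime <= rto


rpo_ok, rto_ok = meets_objectives(
    last_backup=datetime(2024, 1, 1, 12, 0),
    failure=datetime(2024, 1, 1, 12, 40),
    restored=datetime(2024, 1, 1, 14, 30),
    rpo=timedelta(hours=1),
    rto=timedelta(hours=1),
)
print(rpo_ok, rto_ok)  # True False
```

In this example only 40 minutes of data are at risk, which is within the one-hour RPO, but recovery takes 1 h 50 min and therefore misses the one-hour RTO.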

Ganesh et al. [ 93 ] presented a study of fault tolerance mechanisms in cloud computing environments. As noted in that paper, two standard FT policies are mainly available for running real-time applications in the cloud: the “Proactive Fault Tolerance Policy” and the “Reactive Fault Tolerance Policy”. These FT policies are mainly used to provide fault tolerance mechanisms in the cloud.

The proactive fault tolerance policy avoids failures by taking preventive measures in advance [ 95 , 96 , 97 ]. These measures are derived by studying pre-fault indicators and predicting the underlying faults. The next step is to apply proactive fault tolerance measures, by refactoring the code or replacing failure-prone components at development time. Using proactive fault tolerance mechanisms can guarantee that a job execution will be completed without any further reconfiguration [ 98 ].

The reactive fault tolerance policy, in contrast, applies measures that reduce the effects of faults that have already occurred in the cloud system. Table 6 summarizes the FT policies and techniques in cloud computing systems covered by this study.

Although cloud computing systems have improved significantly over the past few years, improvements in computing power depend on system scale and complexity. Modern processing elements are extremely reliable, but they are not perfect [ 99 ]. The overall failure rate of high-performance computing (HPC) systems, such as cloud systems, increases with their size [ 99 ]. Therefore, fault tolerance methods are important for systems with a high failure rate.
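A back-of-the-envelope sketch shows why size matters. It assumes, purely for illustration, that components fail independently at a constant rate and that any single component failure counts as a system failure; neither assumption is stated in the source, and the function names are hypothetical.

```python
def system_failure_rate(n_components, failure_rate_per_hour):
    """Failure rate of a system that fails when any one component fails.

    Assumes independent components with the same constant failure rate,
    so the individual rates simply add up.
    """
    return n_components * failure_rate_per_hour


def system_mtbf_hours(n_components, failure_rate_per_hour):
    """Mean time between failures is the reciprocal of the failure rate."""
    return 1.0 / system_failure_rate(n_components, failure_rate_per_hour)


# One node with a rate of 1e-5 failures/hour fails about once in 100,000 hours;
# 1,000 such nodes together see a failure about every 100 hours.
single_node = system_mtbf_hours(1, 1e-5)
thousand_nodes = system_mtbf_hours(1000, 1e-5)
```

Under these assumptions, even very reliable components yield frequent failures once enough of them are combined.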

Proactive FT policies, in cooperation with failure prediction mechanisms, can avoid failures by taking action in advance, which can significantly reduce the overhead of the recovery process [ 99 ]. Task migration is one of the most widely used proactive FT techniques. While task migration can avoid failures and achieve much lower overhead than reactive FT techniques such as checkpoint/restart, it has two shortcomings. First, migration cannot be performed if there is no spare node to accommodate the processes of the suspect node. Second, task migration is not an appropriate method for software errors [ 100 ]. Thus, a proactive fault tolerance method that relies only on migration cannot gain all the advantages of failure prediction.
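The two shortcomings can be made concrete with a small decision sketch. The function `choose_action`, the fault labels, and the fallback to taking a checkpoint are assumptions made for illustration; the source does not prescribe what to do when migration is not possible.

```python
def choose_action(predicted_fault, spare_nodes):
    """Pick a response to a predicted failure on a suspect node.

    predicted_fault: "hardware" or "software" (hypothetical labels).
    spare_nodes: names of nodes that could host the migrated tasks.
    """
    if predicted_fault == "hardware" and spare_nodes:
        # Migration only helps if the fault stays behind on the old node
        # and there is somewhere to move the tasks.
        return ("migrate", spare_nodes[0])
    # No spare node, or a software error that would travel with the task:
    # fall back to another measure (a checkpoint, in this sketch).
    return ("checkpoint", None)
```

A prediction-driven policy that can only migrate would have nothing to do in the second branch, which is the limitation described above.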

Another technique for providing fault tolerance in cloud environments is VM migration. Jung et al. [ 101 ] propose a VM migration scheme that uses checkpointing to solve the task waiting time problem. A fault tolerance mechanism usually triggers the VM migration before a failure event, and the VM is moved back to the same physical server after system maintenance ends [ 102 ].

Redundancy is a common technique for providing highly available and reliable systems [ 103 ], but it may not be as effective as expected against particular types of failures, such as transient failures and software aging. The most common way to combat software aging is software rejuvenation, which can be done by restarting the software application. Restarting restores the application to its normal level of performance and so counteracts the aging problem. In other words, software rejuvenation is a preventive, proactive technique that cleans up the internal state of the system to prevent future failure events. Without rejuvenation, both the OS and the hosted application software degrade in performance over time as system resources such as free memory are exhausted. This can eventually lead to a system crash, which is highly undesirable for HA systems and fatal for mission-critical applications. The disadvantage of rejuvenation is the temporary outage of service. Virtualization technology is one possible approach to eliminating the service outage during the rejuvenation process [ 104 ].

The software rejuvenation process can be triggered by different parameters of software aging or by the time elapsed since the last rejuvenation event. It can be applied at different scopes, such as the system, application, process, or thread [ 105 ].
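The two kinds of trigger can be combined in a simple policy, sketched below. Free memory is used as the aging indicator because the text mentions it as an exhausted resource; the function name and both default thresholds are hypothetical values chosen only for the example.

```python
def should_rejuvenate(seconds_since_last, free_memory_fraction,
                      max_interval_s=24 * 3600, min_free_fraction=0.10):
    """Decide whether to restart a component to counter software aging.

    Rejuvenation is triggered either by elapsed time or by an aging
    indicator (here, the fraction of memory that is still free).
    """
    time_based = seconds_since_last >= max_interval_s
    aging_based = free_memory_fraction < min_free_fraction
    return time_based or aging_based
```

The same check could be evaluated per system, application, process, or thread, depending on the scope at which rejuvenation is applied.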

A classification proposed in [ 106 ] distinguishes between two classes of failure handling techniques: task-level failure handling and workflow-level failure handling. Task-level techniques are recovery techniques that are performed at the task level to mask the effects of faults. They have been widely studied in distributed and parallel environments and are classified into four types: retry, alternate resource, checkpoint/restart, and replication. The two simplest task-level techniques are retry and alternate resource, which simply execute the same task again after a failure, on the same resource or on an alternate one.
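The retry and alternate resource techniques can be sketched together in a few lines. This is a minimal illustration, not an implementation from [ 106 ]; `run_task`, its parameters, and the resource names are hypothetical.

```python
def run_task(task, resources, retries_per_resource=2):
    """Run task(resource), retrying on the same resource before moving on.

    Retry: repeat the task on the same resource after a failure.
    Alternate resource: once the retries are used up, try the next resource.
    """
    last_error = None
    for resource in resources:
        for _ in range(retries_per_resource):
            try:
                return task(resource)
            except Exception as exc:  # any failure counts in this sketch
                last_error = exc
    raise RuntimeError("task failed on all resources") from last_error


# Usage with a hypothetical task that always fails on "vm-1".
attempts = []


def sample_task(resource):
    attempts.append(resource)
    if resource == "vm-1":
        raise TimeoutError("vm-1 unresponsive")
    return "ok"


result = run_task(sample_task, ["vm-1", "vm-2"])
```

Here the task is tried twice on the first resource and then succeeds on the second one.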

Checkpointing is most efficient for long-running applications. It has attracted significant attention in recent fault tolerance research, for example in [ 107 , 108 , 109 , 110 ]. Generally, the checkpoint technique periodically saves the state of an application. After a failure, the failed task can restart from the most recent checkpoint instead of from the beginning, possibly after being moved to another resource. There are several different checkpointing solutions for large-scale distributed computing systems. A classification of checkpointing mechanisms has been proposed in [ 111 ], based on four viewpoints. First, mechanisms are either incremental or full, depending on whether only the newly modified page states or the entire running state of the system is saved. Second, they are either local or global, according to where the checkpointing data is saved. Third, they are either coordinated or uncoordinated, depending on whether the checkpointing data is saved in a coordinated way. Finally, they are either disk or diskless, depending on whether the checkpointing data is saved on disk.
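A minimal sketch of checkpoint/restart for a single process is given below. In the terms of the classification above it is a full, local, disk-based checkpoint. The job (a running sum), the file layout, and the `fail_at` parameter used to simulate a crash are all assumptions made for the example.

```python
import os
import pickle
import tempfile


def save_checkpoint(path, state):
    """Write the state to a temporary file, then atomically rename it."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "wb") as f:
        pickle.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the last saved state, or a fresh initial state if none exists."""
    if os.path.exists(path):
        with open(path, "rb") as f:
            return pickle.load(f)
    return {"next_step": 0, "total": 0}


def run_job(path, n_steps, checkpoint_every, fail_at=None):
    """Sum the integers 0..n_steps-1, saving the state periodically.

    fail_at is a hypothetical knob that simulates a crash before that step.
    """
    state = load_checkpoint(path)  # resume from the last checkpoint, if any
    for step in range(state["next_step"], n_steps):
        if fail_at is not None and step == fail_at:
            raise RuntimeError(f"simulated failure at step {step}")
        state["total"] += step
        state["next_step"] = step + 1
        if state["next_step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    return state["total"]


if __name__ == "__main__":
    ckpt = os.path.join(tempfile.mkdtemp(), "job.ckpt")
    try:
        run_job(ckpt, 100, 10, fail_at=57)
    except RuntimeError:
        pass  # the work done after step 49 is lost
    print(run_job(ckpt, 100, 10))  # resumes at step 50
```

The interval between checkpoints is the trade-off: a shorter interval loses less work after a failure but spends more time saving state.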

The last task-level technique is replication, which runs the same task simultaneously on different computing resources, so that the task succeeds as long as at least one replica completes [ 112 , 113 ].
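Replication can be sketched with threads standing in for separate computing resources. This is an illustrative simplification rather than a method from [ 112 , 113 ]; `run_replicated` and the resource names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_replicated(task, resources):
    """Run task(resource) on every resource at once; return the first success."""
    if not resources:
        raise ValueError("at least one resource is required")
    errors = []
    with ThreadPoolExecutor(max_workers=len(resources)) as pool:
        futures = [pool.submit(task, r) for r in resources]
        for future in as_completed(futures):
            try:
                # Leaving the 'with' block still waits for the other replicas.
                return future.result()
            except Exception as exc:
                errors.append(exc)
    raise RuntimeError(f"all {len(resources)} replicas failed: {errors}")


# Usage with a hypothetical task that fails on "vm-a" only.
def flaky(resource):
    if resource == "vm-a":
        raise ConnectionError("vm-a is down")
    return f"done on {resource}"


outcome = run_replicated(flaky, ["vm-a", "vm-b"])
```

The cost of this technique is the extra resources consumed by replicas whose results are discarded.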

As previously mentioned, workflow-level techniques are the second class of failure handling techniques in distributed systems. Manipulating the workflow structure to deal with erroneous conditions is referred to as a workflow-level technique. In other words, workflow-level fault tolerance techniques (FTTs) change the flow of execution on failure, based on knowledge of the task execution context. They can also be classified into four types: alternate task, redundancy, user-defined exception handling, and rescue workflow [ 106 ]. The only difference between the alternate task and retry techniques is that, when a task fails, the alternate task technique replaces it with a different implementation of the same task that has different execution characteristics. The rescue workflow technique allows the workflow to continue even when a task failure occurs. In the user-defined exception handling technique, the user specifies the particular treatment of a task failure in the workflow.
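Three of these workflow-level techniques can be shown in one small runner. It is a deliberately simplified sketch: steps are run in sequence with no dependencies between them, and the rescue behaviour is reduced to recording failed steps for later resubmission. The function `run_workflow` and its arguments are hypothetical.

```python
def run_workflow(steps, on_error=None):
    """Run steps in order; each step is (name, primary, alternate_or_None).

    Returns (results, failed): the outputs of completed steps and the names
    of steps that could not be completed.
    """
    results, failed = {}, []
    for name, primary, alternate in steps:
        try:
            results[name] = primary()
            continue
        except Exception as exc:
            if on_error is not None:
                on_error(name, exc)  # user-defined exception handling
        if alternate is not None:
            try:
                results[name] = alternate()  # alternate task
                continue
            except Exception as exc:
                if on_error is not None:
                    on_error(name, exc)
        failed.append(name)  # rescue-workflow style: record it and keep going
    return results, failed


# Usage with hypothetical steps; "b" has an alternate, "c" does not.
def broken():
    raise ValueError("boom")


log = []
results, failed = run_workflow(
    [("a", lambda: 1, None),
     ("b", broken, lambda: 2),
     ("c", broken, None),
     ("d", lambda: 4, None)],
    on_error=lambda name, exc: log.append(name),
)
```

Step "b" completes through its alternate implementation, step "c" is recorded as failed, and step "d" still runs.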

In conclusion, both proactive and reactive fault tolerance policies have advantages and disadvantages. Experimental results show that migration techniques (proactive FT policy) are more efficient than checkpoint/restart techniques (reactive FT policy) [ 98 ]. Even though proactive techniques are more efficient, they are not frequently used. This is because reactive methods do not depend on failure prediction and are therefore not affected by incorrect predictions, and because they are relatively simple to implement, as they are not applied at development time.

There is a serious gap in cloud computing fault tolerance research: the mutual impact of cloud performance overhead [ 52 , 114 , 115 , 116 , 117 ] and HA solutions.

Hot topics in cloud performance overhead research include VM Granularity, IaaS Routing Operations (such as live VM migration, concurrent deployment and snapshotting of multiple VMs …), and the inability to isolate shared cloud network and storage resources. All these factors can affect VM performance, total system performance, and therefore VM availability and reliability. On the other hand, the additional mechanisms used to provide HA and reliability in cloud computing systems are themselves a system overhead that can have negative effects on system performance.

Studying these mutual impacts, with performability concerns in mind, is the expected contribution of this research step toward highly available and highly utilized cloud computing systems.

Discussion and open issues

Many research works have been carried out on cloud computing HA and reliability, but there is no comprehensive overview of the entire problem domain. An attempt was made to propose a reference roadmap that covers all aspects of the problem from the viewpoints of different cloud actors, especially cloud consumers and providers. This study proposed a big picture that divides the problem space into four main steps to cover the various requirements in this research area. A specific question was posed for each step, and answering these questions will enable cloud providers to offer highly available and reliable services. Cloud providers can thus satisfy cloud consumers’ requirements while also keeping their resources highly utilized to achieve more business profit.

The four major questions, starting with the keywords “Where?”, “Which?”, “When?” and “How”, form the main steps of the proposed roadmap. Taking each step means understanding the main concern raised by its question and proposing suitable solutions for it. Some suggestions were proposed as initial answers to each of these questions, which can be helpful for future work in this research area. Making further research suggestions and proposing efficient solutions for each of these steps is one of the important open issues in this study. Therefore, the proposed big picture can guide future research on high availability and reliability in cloud computing.

Two main research gaps, which have been neglected in the literature, were identified through the proposed big picture. The first is that HA and reliability solutions are provided without considering the requirements and viewpoints of all cloud actors. By posing the “Where” question, an attempt was made to consider both cloud consumers and providers, which are the most important actors in cloud computing environments. Therefore, attention was given not only to having a highly available and reliable system, but also to the provider’s demand for highly utilized systems. The second research gap was presented in the “How” question section. As pointed out there, adding a mechanism to the system can have negative effects on total system performance. In addition, some performance overhead issues can degrade system availability and reliability. Therefore, the hypothesis was proposed that system performance overhead and HA solutions have a mutual impact on one another.

This paper presented a reference roadmap of the HA and reliability problem in cloud computing systems that can guide future research. By proposing this roadmap, the different aspects of the HA and reliability problem in cloud computing systems were specified. Furthermore, the effects of the main cloud computing components on the total system failure rate were studied according to the Eucalyptus-based architecture proposed in our big picture. This study focused on a comprehensive roadmap that covers the different aspects of HA and reliability in cloud environments. In addition, an attempt was made to consider not only the techniques (‘When’ and ‘How’ steps) that improve availability and reliability, but also the characteristics (‘Where’ and ‘Which’ steps) of cloud computing systems. The details of specific technologies for each step are left as future work, which will allow each step of the proposed solution to be assessed more specifically. Evaluating the mutual impact of system performance overhead and HA solutions using the OpenStack platform is one of the main items of future work. SDN-based technology, such as Espresso in Google cloud, is one of the latest technology trends in cloud computing and can make cloud computing environments faster and more available. The application of this approach will be considered as a solution to the ‘How’ step in future work. Furthermore, the contributions of this paper raise other directions for future work on reliability and HA in cloud computing. Some other significant items of future work are as follows:

A comprehensive study of cloud failure modes, causes and failure rate, and reliability/availability measuring tools;

A highly utilized and more profitable cloud economy that can guarantee the provision of highly available and reliable services;

Evaluating availability and reliability of cloud computing system and components based on the proposed architecture;

Studying the mutual impact of HA mechanisms and VM performance overhead.

Ardagna D (2015) Cloud and multi-cloud computing: current challenges and future applications. In: 7th international workshop on principles of engineering service-oriented and cloud systems (PESOS) 2015. IEEE/ACM, Piscataway, pp 1–2

Rastogi G, Sushil R (2015) Cloud computing implementation: key issues and solutions. In: 2nd international conference on computing for sustainable global development (INDIACom). IEEE, Piscataway, pp 320–324

Mell P, Grance T (2011) The NIST definition of cloud computing. Commun ACM 53(6):50

Buyya R et al (2009) Cloud computing and emerging IT platforms: vision, hype, and reality for delivering computing as the 5th utility. Future Gener Comput Syst 25(6):599–616
