Introduction to Research Statistical Analysis: An Overview of the Basics

Christian Vandever

HCA Healthcare Graduate Medical Education

HCA Healthc J Med, v.1(2), 2020 (PMC10324782)

Description

This article covers many statistical ideas essential to research statistical analysis. Sample size is explained through the concepts of statistical significance level and power. Variable types and definitions are included to clarify how the choice of variables affects the way an analysis is interpreted. Categorical and quantitative variable types are defined, as well as response and predictor variables. Statistical tests described include t-tests, ANOVA and chi-square tests. Multiple regression is also explored for both logistic and linear regression. Finally, the most common statistics produced by these methods are explored.

Introduction

Statistical analysis is necessary for any research project seeking to draw quantitative conclusions. The following is a primer for research-based statistical analysis. It is intended to be a high-level overview of appropriate statistical testing, without diving too deep into any specific methodology. Some of the information is more applicable to retrospective projects, where analysis is performed on data that has already been collected, but most of it will be suitable for any type of research. This primer is meant to help the reader understand research results in coordination with a statistician, not to perform the actual analysis. Analysis is commonly performed using statistical programming software such as R, SAS or SPSS, which allows an analysis to be replicated while minimizing the risk of error. Resources are listed later for those working on analysis without a statistician.

After coming up with a hypothesis for a study, including any variables to be used, one of the first steps is to think about the patient population to which the question applies. Results are only relevant to the population that the underlying data represents. Since it is impractical to include everyone with a certain condition, a subset (sample) of the population of interest should be taken. This sample should be large enough to have adequate power, which means there is enough data to detect a true effect if one exists and to accurately reflect the study’s population.

The first statistics of interest are related to significance level and power: alpha and beta. Alpha (α) is the significance level and the probability of a type I error, which is rejecting the null hypothesis when it is true. The null hypothesis is generally that there is no difference between the groups compared. A type I error is also known as a false positive. An example would be an analysis that finds one medication statistically better than another when in reality there is no difference in efficacy between the two. Beta (β) is the probability of a type II error, which is failing to reject the null hypothesis when it is actually false. A type II error is also known as a false negative. This occurs when the analysis finds no difference between two medications when in reality one works better than the other.

Power is defined as 1 - β and should be calculated before any statistical testing is run. Ideally, alpha should be as small as possible while power should be as large as possible. Power generally increases with a larger sample size, but so do cost and the effect of any bias in the study design. Additionally, as the sample size grows, the chance of a statistically significant result goes up, even when the differences detected are too small to matter practically. Power calculators therefore require the magnitude of the effect as an input. The study is then sized to detect differences that have a practical impact rather than trivially small ones. The calculators take inputs such as the expected means (or effect size), the significance level and the desired power, and output the minimum sample size required for the analysis. Effect size is calculated using statistical information on the variables of interest. If that information is not available, most tests have commonly used values for small, medium or large effect sizes.
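To make the inputs to a power calculator concrete, here is a minimal sketch of the common normal-approximation sample-size formula for comparing two group means: n per group = 2 (z(1 - α/2) + z(1 - β))² / d². Here d is the standardized effect size (Cohen's d). The function name `n_per_group` and its defaults are illustrative assumptions, not part of the article. Dedicated calculators based on the t distribution typically return a slightly larger number, for example 64 rather than 63 per group for d = 0.5.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size per group for a two-sided, two-sample comparison of means.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{1-beta})^2 / d^2,
    where d is the standardized effect size and power = 1 - beta.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the two-sided test
    z_beta = z.inv_cdf(power)           # quantile corresponding to the desired power
    n = 2 * ((z_alpha + z_beta) / effect_size) ** 2
    return math.ceil(n)  # round up: a fractional patient is not possible


# Conventional "small", "medium" and "large" standardized effect sizes (Cohen's d)
for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: {n_per_group(d)} patients per group")
```

With α = 0.05 and 80% power this gives 393, 63 and 25 patients per group, respectively. The required sample grows quickly as the effect to be detected shrinks.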

When the desired patient population is decided, the next step is to define the variables previously chosen to be included. Variables come in different types that determine which statistical methods are appropriate and useful. One way variables can be split is into categorical and quantitative variables (Table 1). Categorical variables place patients into groups, such as gender, race and smoking status. Quantitative variables measure or count some quantity of interest. Common quantitative variables in research include age and weight. An important note is that there is often a choice of whether to treat a variable as quantitative or categorical. For example, in a study looking at body mass index (BMI), BMI could be defined as a quantitative variable or as a categorical variable, with each patient’s BMI listed as a category (underweight, normal, overweight or obese) rather than as its numeric value. The decision whether a variable is quantitative or categorical will affect what conclusions can be made when interpreting results from statistical tests. Keep in mind that, since quantitative variables are treated on a continuous scale, it would be inappropriate to transform a variable like which medication was given into a quantitative variable with values 1, 2 and 3.
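This paragraph makes two coding points. A quantitative variable such as BMI can be recoded into categories, and a multi-level categorical variable such as medication should not be coded as 1, 2, 3. Both can be sketched as below. The BMI cut-offs are the commonly used adult categories, which the article itself does not list: underweight below 18.5, normal 18.5 to under 25, overweight 25 to under 30, obese 30 and above. `bmi_category` and `dummy_code` are hypothetical helper names. Dummy (indicator) coding gives each non-reference category its own 0/1 column, so no artificial ordering or spacing between medications is implied.

```python
def bmi_category(bmi: float) -> str:
    """Map a numeric adult BMI (kg/m^2) to a commonly used category."""
    if bmi < 18.5:
        return "underweight"
    if bmi < 25:
        return "normal"
    if bmi < 30:
        return "overweight"
    return "obese"


def dummy_code(values: list[str], reference: str) -> list[dict[str, int]]:
    """Indicator (dummy) coding: one 0/1 column per non-reference category."""
    levels = sorted(set(values) - {reference})
    return [{f"is_{level}": int(value == level) for level in levels} for value in values]


print([bmi_category(b) for b in (17.9, 22.0, 27.5, 31.2)])
print(dummy_code(["apixaban", "rivaroxaban", "dabigatran"], reference="apixaban"))
```

The reference category (here apixaban) is the row with all zeros. Each indicator is then interpreted as a comparison with that reference.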

Table 1. Categorical vs. Quantitative Variables

Both of these types of variables can also be split into response and predictor variables (Table 2). Predictor variables are explanatory, or independent, variables that help explain changes in a response variable. Conversely, response variables are outcome, or dependent, variables whose changes can be partially explained by the predictor variables.

Table 2. Response vs. Predictor Variables

Choosing the correct statistical test depends on the types of variables defined and the question being answered. Some common statistical tests include t-tests, ANOVA and chi-square tests.
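The test-selection logic described in this section can be summarized as a rough decision rule. The sketch below is a simplification built only from the descriptions in this primer, and `choose_test` is a hypothetical name. It assumes that a categorical response is dichotomous whenever logistic regression is suggested. It does not check test assumptions such as normality, so it is no substitute for discussing the design with a statistician.

```python
def choose_test(response: str, predictor: str, n_groups: int = 2, n_predictors: int = 1) -> str:
    """Suggest a test from the variable types described in this primer.

    response, predictor: "quantitative" or "categorical".
    n_groups: number of values of a categorical predictor.
    n_predictors: number of predictor variables in the question.
    """
    valid = {"quantitative", "categorical"}
    if response not in valid or predictor not in valid:
        raise ValueError("variable types must be 'quantitative' or 'categorical'")
    # Several predictors, or a quantitative predictor: regression chosen by response type
    # (logistic regression assumes a dichotomous categorical response)
    if n_predictors > 1 or predictor == "quantitative":
        return "linear regression" if response == "quantitative" else "logistic regression"
    if response == "quantitative":
        # Compare a quantitative variable between the groups of one categorical predictor
        return "t-test" if n_groups == 2 else "ANOVA"
    # Both variables categorical: test their association
    return "chi-square test"


print(choose_test("quantitative", "categorical", n_groups=3))  # length of stay vs. 3 drugs
```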

T-tests compare whether there are differences in a quantitative variable between two values of a categorical variable. For example, a t-test could be used to compare the length of stay for knee replacement surgery patients between those who took apixaban and those who took rivaroxaban. It would examine whether there is a statistically significant difference in length of stay between the two groups. The t-test outputs a p-value, a number between zero and one. It represents the probability that the two groups would be at least as different as they are in the data if there were actually no difference between them. A value closer to zero indicates stronger evidence of a difference (in this case, in length of stay) than a value closer to one. Before collecting the data, set a significance level, the previously defined alpha. Alpha is typically set at 0.05, but it is commonly set lower in order to limit the chance of a type I error, or false positive. Going back to the example above, if alpha is set at 0.05 and the analysis gives a p-value of 0.039, then a statistically significant difference in length of stay is observed between apixaban and rivaroxaban patients. If the analysis gives a p-value of 0.91, then there is no statistical evidence of a difference in length of stay between the two medications. Other statistical summaries or methods examine how large the difference might be. These other summaries are known as post-hoc analyses, since they are performed after the original test to provide additional context to the results.
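To show where the p-value in this example comes from, the sketch below computes a pooled-variance (Student's) two-sample t-test using only the Python standard library. The length-of-stay values are invented for illustration. The regularized incomplete beta function is a port of the standard continued-fraction algorithm (modified Lentz's method). In practice R, SAS or SPSS (or `scipy.stats.ttest_ind` in Python) would be used instead. Student's t-test assumes roughly normal data with equal variances in the two groups; Welch's t-test relaxes the equal-variance assumption.

```python
import math
import statistics


def _beta_continued_fraction(a: float, b: float, x: float,
                             max_iter: int = 300, eps: float = 3e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz's method)."""
    tiny = 1e-300
    c = 1.0
    d = 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction
        aa = m * (b - m) * x / ((a - 1.0 + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction
        aa = -(a + m) * (a + b + m) * x / ((a + m2) * (a + 1.0 + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def regularized_incomplete_beta(a: float, b: float, x: float) -> float:
    """I_x(a, b), using the continued fraction on the side where it converges quickly."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log(1.0 - x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_continued_fraction(a, b, x) / a
    return 1.0 - front * _beta_continued_fraction(b, a, 1.0 - x) / b


def student_t_test(x: list[float], y: list[float]) -> tuple[float, int, float]:
    """Two-sided, pooled-variance two-sample t-test. Returns (t, df, p)."""
    n1, n2 = len(x), len(y)
    df = n1 + n2 - 2
    # Pooled estimate of the common variance (sample variances use n - 1)
    pooled_var = ((n1 - 1) * statistics.variance(x) + (n2 - 1) * statistics.variance(y)) / df
    t = (statistics.fmean(x) - statistics.fmean(y)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    # Two-sided p-value P(|T| >= |t|) for a t distribution with df degrees of freedom
    p = regularized_incomplete_beta(df / 2, 0.5, df / (df + t * t))
    return t, df, p


# Hypothetical lengths of stay (days) after knee replacement
apixaban = [2, 3, 3, 4, 2, 3]
rivaroxaban = [3, 4, 4, 5, 3, 4]
t, df, p = student_t_test(apixaban, rivaroxaban)
print(f"t({df}) = {t:.2f}, p = {p:.3f}")
```

For these made-up data t is about -2.30 with 10 degrees of freedom, and p falls between 0.04 and 0.05. With α = 0.05 the difference would therefore be called statistically significant.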

Analysis of variance, or ANOVA, tests for mean differences in a quantitative variable between values of a categorical variable. It is typically used when the categorical variable has three or more values; with only two values, a t-test is used. ANOVA could add patients given dabigatran to the previous population and evaluate whether length of stay was significantly different across the three medications. If the p-value is lower than the designated significance level, then the hypothesis that length of stay was the same across the three medications is rejected. Summaries and post-hoc tests could also be performed to describe the differences in length of stay. They could also identify which individual medications had statistically significant differences in length of stay from the others.

A chi-square test examines the association between two categorical variables. An example would be to consider whether the rate of post-operative bleeding is the same across patients provided with apixaban, rivaroxaban and dabigatran. A chi-square test can compute a p-value determining whether the bleeding rates were significantly different or not. Post-hoc tests could then give the bleeding rate for each medication, as well as a breakdown of which specific medications may have a significantly different bleeding rate from each other.
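Both tests in this section reduce to arithmetic on group summaries. The sketch below computes the one-way ANOVA F statistic with its degrees of freedom, and a Pearson chi-square test of independence with its p-value, using invented data for the three anticoagulants. The p-value for F is left to statistical software, for example R's `pf(F, df1, df2, lower.tail = FALSE)`. The chi-square p-value uses a power-series form of the regularized incomplete gamma function, an implementation choice made here only to avoid third-party libraries. Function names are hypothetical. The chi-square approximation is usually considered reliable only when expected counts are not too small; a common rule of thumb is at least 5 per cell.

```python
import math
from statistics import fmean


def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    values = [v for g in groups for v in g]
    grand_mean = fmean(values)
    group_means = [fmean(g) for g in groups]
    # Variation of the group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of observations around their own group mean
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


def upper_regularized_gamma(a: float, x: float, max_terms: int = 1000) -> float:
    """Q(a, x) = 1 - P(a, x), with P from its power series (fine for illustration,
    but loses relative precision for very small p-values)."""
    if x <= 0:
        return 1.0
    term = total = 1.0 / a
    for n in range(1, max_terms):
        term *= x / (a + n)
        total += term
        if term < total * 1e-16:
            break
    lower = total * math.exp(-x + a * math.log(x) - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def chi_square_independence(table: list[list[int]]) -> tuple[float, int, float]:
    """Pearson chi-square test of independence for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            statistic += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    p = upper_regularized_gamma(df / 2, statistic / 2)
    return statistic, df, p


# Hypothetical length of stay (days) for apixaban, rivaroxaban and dabigatran patients
print(one_way_anova([[2, 3, 4], [3, 4, 5], [4, 5, 6]]))
# Hypothetical counts: rows = apixaban, rivaroxaban, dabigatran; columns = bleed, no bleed
print(chi_square_independence([[10, 90], [20, 80], [30, 70]]))
```

For these invented data, F = 3.0 on 2 and 6 degrees of freedom. The chi-square statistic is 12.5 on 2 degrees of freedom with p ≈ 0.0019.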

A slightly more advanced way of examining a question is multiple regression. Regression allows several predictor variables to be analyzed at once and can control for other variables when looking at associations between variables. Common control variables are age, sex and any comorbidities that are likely to affect the outcome variable but are not closely related to the other explanatory variables. Control variables can be especially important in reducing the effect of bias in a retrospective population. Since retrospective data were not collected with the research question in mind, it is important to address threats to the validity of the analysis. Testing that controls for confounding variables, such as regression, is often more valuable with retrospective data because it can ease these concerns. The two main types of regression are linear and logistic. Linear regression is used to predict differences in a quantitative, continuous response variable, such as length of stay. Logistic regression predicts differences in a dichotomous, categorical response variable, such as 90-day readmission. So whether the outcome variable is categorical or quantitative, regression can be appropriate. Two similar cases illustrate each type. For both, define the predictor variables as age, gender and anticoagulant usage. In the first, use the predictor variables in a linear regression to evaluate their individual effects on length of stay, a quantitative variable. In the second, use the same predictor variables in a logistic regression to evaluate their individual effects on whether the patient had a 90-day readmission, a dichotomous categorical variable. The analysis can compute a p-value for each included predictor variable to determine whether it is significantly associated with the outcome.
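As a rough illustration of what the software does in these two cases, the sketch below fits two models in plain Python. The linear regression uses ordinary least squares (solving the normal equations). The logistic regression uses iteratively reweighted least squares (Newton's method for the maximum-likelihood estimate). All names and data are hypothetical, and categorical predictors such as anticoagulant usage are entered as 0/1 indicator columns. The sketch estimates coefficients only; the per-predictor p-values mentioned above also require standard errors, which R, SAS or SPSS report automatically. Solving the normal equations is the simplest approach to show, but it is less numerically stable than the QR-based methods statistical packages typically use.

```python
import math


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def with_intercept(rows):
    """Design matrix: a leading column of 1s for the intercept, then the predictors."""
    return [[1.0, *map(float, r)] for r in rows]


def linear_regression(rows, y):
    """OLS coefficients [intercept, b1, b2, ...] from the normal equations X'X b = X'y."""
    X = with_intercept(rows)
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(k)]
    return solve(xtx, xty)


def logistic_regression(rows, y, iterations: int = 25):
    """Maximum-likelihood logistic coefficients (log-odds scale) via Newton/IRLS."""
    X = with_intercept(rows)
    k = len(X[0])
    beta = [0.0] * k
    for _ in range(iterations):
        prob = [1.0 / (1.0 + math.exp(-sum(b * v for b, v in zip(beta, r)))) for r in X]
        weights = [p * (1.0 - p) for p in prob]
        # Information matrix X'WX and score vector X'(y - p)
        info = [[sum(w * r[i] * r[j] for r, w in zip(X, weights)) for j in range(k)] for i in range(k)]
        score = [sum(r[i] * (yi - p) for r, yi, p in zip(X, y, prob)) for i in range(k)]
        step = solve(info, score)
        beta = [b + s for b, s in zip(beta, step)]
    return beta


# Hypothetical data: columns are (age in decades, anticoagulant given: 0/1)
rows = [(5, 0), (6, 1), (7, 1), (6, 0), (8, 1), (7, 0)]
length_of_stay = [2.5, 3.0, 4.5, 3.0, 5.0, 3.5]
readmitted = [0, 1, 0, 0, 1, 1]
print(linear_regression(rows, length_of_stay))
print(logistic_regression(rows, readmitted))
```

Each linear coefficient is the expected change in length of stay per unit of its predictor, holding the others fixed. Each logistic coefficient is a change in the log-odds of readmission; exponentiating it gives an odds ratio.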

Each statistical test in this article generates a test statistic, from which the p-value is derived. The p-value is the probability of obtaining results at least as extreme as those observed if there were no association between the compared variables. These results often come with coefficients, which describe the strength of the association and how much one variable changes with another. Most tests, including all listed in this article, also produce confidence intervals, which give a range of plausible values for the estimated effect at a specified level of confidence. Even if these tests do not give statistically significant results, the results are still important. Not reporting statistically nonsignificant findings creates a bias in research. If an idea is tested enough times, statistically significant results will eventually appear by chance alone, even though there is no true effect. With very large sample sizes, p-values will almost always be significant. In this case the effect size is critical, as even the smallest, meaningless differences can be found to be statistically significant.
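The point about very large samples can be demonstrated numerically. The sketch below holds a hypothetical, practically negligible difference of 0.02 standard deviations fixed. It then computes the two-sided p-value of a two-sample z-test as the sample size grows; the z-test is a normal approximation to the t-test, assuming a known standard deviation of 1. The effect size never changes; only the p-value does.

```python
import math
from statistics import NormalDist


def two_sided_p(mean_difference: float, sd: float, n_per_group: int) -> float:
    """Two-sided p-value of a two-sample z-test with a known, common standard deviation."""
    standard_error = sd * math.sqrt(2 / n_per_group)
    z = mean_difference / standard_error
    return 2 * NormalDist().cdf(-abs(z))  # lower tail avoids rounding 1 - cdf to zero


effect_size = 0.02  # difference in means, in standard-deviation units (hypothetical)
for n in (100, 10_000, 100_000):
    print(f"n per group = {n:>7}: Cohen's d = {effect_size}, p = {two_sided_p(effect_size, 1.0, n):.2g}")
```

The p-value falls from roughly 0.89 to 0.16 to below 0.0001, although the effect size stays at d = 0.02 throughout.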

These variables and tests are just some things to keep in mind before, during and after the analysis process in order to make sure that the statistical reports are supporting the questions being answered. The patient population, types of variables and statistical tests are all important things to consider in the process of statistical analysis. Any results are only as useful as the process used to obtain them. This primer can be used as a reference to help ensure appropriate statistical analysis.

Funding Statement

This research was supported (in whole or in part) by HCA Healthcare and/or an HCA Healthcare affiliated entity.

Conflicts of Interest

The author declares he has no conflicts of interest.

Christian Vandever is an employee of HCA Healthcare Graduate Medical Education, an organization affiliated with the journal’s publisher.

The views expressed in this publication represent those of the author(s) and do not necessarily represent the official views of HCA Healthcare or any of its affiliated entities.


Statistics articles within Scientific Reports

Article 23 May 2024 | Open Access
Analysis of player speed and angle toward the ball in soccer
Álvaro Novillo, Antonio Cordón-Carmona & Javier M. Buldú

Article 15 May 2024 | Open Access
Modified correlated measurement errors model for estimation of population mean utilizing auxiliary information
Housila P. Singh & Neha Garg

Article 14 May 2024 | Open Access
Employing machine learning for enhanced abdominal fat prediction in cavitation post-treatment
Doaa A. Abdel Hady, Omar M. Mabrouk & Tarek Abd El-Hafeez

Article 09 May 2024 | Open Access
Towards optimal model evaluation: enhancing active testing with actively improved estimators
JooChul Lee, Likhitha Kolla & Jinbo Chen

Article 08 May 2024 | Open Access
Research on proactive defense and dynamic repair of complex networks considering cascading effects
Zhuoying Shi, Ying Wang & Chaoqi Fu

Article 07 May 2024 | Open Access
A Markov network approach for reproducing purchase behaviours observed in convenience stores
Dan Johansson, Hideki Takayasu & Misako Takayasu

Article 06 May 2024 | Open Access
A Bayesian spatio-temporal model of COVID-19 spread in England
Xueqing Yin, John M. Aiken & Jonathan L. Bamber

Max-mixed EWMA control chart for joint monitoring of mean and variance: an application to yogurt packing process
Seher Malik, Muhammad Hanif & Jumanah Ahmed Darwish

Causal impact evaluation of occupational safety policies on firms’ default using machine learning uplift modelling
Berardino Barile, Marco Forti & Angelo Castaldo

Article 04 May 2024 | Open Access
Estimating neutrosophic finite median employing robust measures of the auxiliary variable
Saadia Masood, Bareera Ibrar & Zabihullah Movaheedi

Article 01 May 2024 | Open Access
Zika emergence, persistence, and transmission rate in Colombia: a nationwide application of a space-time Markov switching model
Laís Picinini Freitas, Dirk Douwes-Schultz & Kate Zinszer

Article 29 April 2024 | Open Access
Exploring drivers of overnight stays and same-day visits in the tourism sector
Francesco Scotti, Andrea Flori & Giovanni Azzone

A support vector machine based drought index for regional drought analysis
Mohammed A Alshahrani, Muhammad Laiq & Muhammad Nabi

Article 25 April 2024 | Open Access
Joint Bayesian estimation of cell dependence and gene associations in spatially resolved transcriptomic data
Arhit Chakrabarti & Bani K. Mallick

Estimating SARS-CoV-2 infection probabilities with serological data and a Bayesian mixture model
Benjamin Glemain, Xavier de Lamballerie & Fabrice Carrat

Article 24 April 2024 | Open Access
Applications of nature-inspired metaheuristic algorithms for tackling optimization problems across disciplines
Elvis Han Cui, Zizhao Zhang & Weng Kee Wong

Article 23 April 2024 | Open Access
Variable parameters memory-type control charts for simultaneous monitoring of the mean and variability of multivariate multiple linear regression profiles
Hamed Sabahno & Marie Eriksson

Article 22 April 2024 | Open Access
Modeling health and well-being measures using ZIP code spatial neighborhood patterns
…, Michael LaValley & Shariq Mohammed

Article 20 April 2024 | Open Access
Sequence based model using deep neural network and hybrid features for identification of 5-hydroxymethylcytosine modification
Salman Khan, Islam Uddin & Dost Muhammad Khan

Article 19 April 2024 | Open Access
Identification of CT radiomic features robust to acquisition and segmentation variations for improved prediction of radiotherapy-treated lung cancer patient recurrence
Thomas Louis, François Lucia & Roland Hustinx

Explainable prediction of node labels in multilayer networks: a case study of turnover prediction in organizations
László Gadár & János Abonyi

Article 18 April 2024 | Open Access
The quasi-xgamma frailty model with survival analysis under heterogeneity problem, validation testing, and risk analysis for emergency care data
Hamami Loubna, Hafida Goual & Haitham M. Yousof

Memory type Bayesian adaptive max-EWMA control chart for weibull processes
Abdullah A. Zaagan, Imad Khan & Bakhtiyar Ahmad

Article 17 April 2024 | Open Access
Improved data quality and statistical power of trial-level event-related potentials with Bayesian random-shift Gaussian processes
Dustin Pluta, Beniamino Hadj-Amar & Marina Vannucci

Article 16 April 2024 | Open Access
Comparison and evaluation of overcoring and hydraulic fracturing stress measurements
…, Meifeng Cai & Mostafa Gorjian

Predictors of divorce and duration of marriage among first marriage women in Dejne administrative town
Nigusie Gashaye Shita & Liknaw Bewket Zeleke

Article 12 April 2024 | Open Access
Determinants of multimodal fake review generation in China’s E-commerce platforms
Chunnian Liu & Lan Yi

Article 11 April 2024 | Open Access
New ridge parameter estimators for the quasi-Poisson ridge regression model
Aamir Shahzad, Muhammad Amin & Muhammad Faisal

A bicoherence approach to analyze multi-dimensional cross-frequency coupling in EEG/MEG data
Alessio Basti, Guido Nolte & Laura Marzetti

Article 10 April 2024 | Open Access
Response times are affected by mispredictions in a stochastic game
Paulo Roberto Cabral-Passos, Antonio Galves & Claudia D. Vargas

The effect of city reputation on Chinese corporate risk-taking
… & Haifeng Jiang

Article 06 April 2024 | Open Access
Improvement in variance estimation using transformed auxiliary variable under simple random sampling
…, Syed Muhammad Asim & Soofia Iftikhar

Article 28 March 2024 | Open Access
Fatty liver classification via risk controlled neural networks trained on grouped ultrasound image data
Tso-Jung Yen, Chih-Ting Yang & Hsin-Chou Yang

Article 27 March 2024 | Open Access
A new unit distribution: properties, estimation, and regression analysis
Kadir Karakaya, C. S. Rajitha & Ahmed M. Gemeay

Article 26 March 2024 | Open Access
GeneAI 3.0: powerful, novel, generalized hybrid and ensemble deep learning frameworks for miRNA species classification of stationary patterns from nucleotides
Jaskaran Singh, Narendra N. Khanna & Jasjit S. Suri

On topological indices and entropy measures of beryllonitrene network via logarithmic regression model
…, Muhammad Kamran Siddiqui & Fikre Bogale Petros

Article 22 March 2024 | Open Access
Measuring the similarity of charts in graphical statistics
Krzysztof Górnisiewicz, Zbigniew Palka & Waldemar Ratajczak

Article 21 March 2024 | Open Access
Risk prediction and interaction analysis using polygenic risk score of type 2 diabetes in a Korean population
Minsun Song, Soo Heon Kwak & Jihyun Kim

A longitudinal causal graph analysis investigating modifiable risk factors and obesity in a European cohort of children and adolescents
Ronja Foraita, Janine Witte & Vanessa Didelez

Article 19 March 2024 | Open Access
A novel group decision making method based on CoCoSo and interval-valued Q-rung orthopair fuzzy sets
…, Hongwu Qin & Xiuqin Ma

Impact of using virtual avatars in educational videos on user experience
Ruyuan Zhang & Qun Wu

A generalisation of the method of regression calibration and comparison with Bayesian and frequentist model averaging methods
Mark P. Little, Nobuyuki Hamada & Lydia B. Zablotska

Article 18 March 2024 | Open Access
Monitoring gamma type-I censored data using an exponentially weighted moving average control chart based on deep learning networks
Pei-Hsi Lee & Shih-Lung Liao

Article 15 March 2024 | Open Access
Statistical detection of selfish mining in proof-of-work blockchain systems
Sheng-Nan Li, Carlo Campajola & Claudio J. Tessone

Article 13 March 2024 | Open Access
Evaluation metrics and statistical tests for machine learning
Oona Rainio, Jarmo Teuho & Riku Klén

PARSEG: a computationally efficient approach for statistical validation of botanical seeds’ images
Luca Frigau, Claudio Conversano & Jaromír Antoch

Application of analysis of variance to determine important features of signals for diagnostic classifiers of displacement pumps
Jarosław Konieczny, Waldemar Łatas & Jerzy Stojek

Article 12 March 2024 | Open Access
Prediction and detection of side effects severity following COVID-19 and influenza vaccinations: utilizing smartwatches and smartphones
…, Margaret L. Brandeau & Dan Yamin

Article 08 March 2024 | Open Access
Evaluating the lifetime performance index of omega distribution based on progressive type-II censored samples
N. M. Kilany & Lobna H. El-Refai

Article 07 March 2024 | Open Access
Development of risk models of incident hypertension using machine learning on the HUNT study data
Filip Emil Schjerven, Emma Maria Lovisa Ingeström & Frank Lindseth


Reporting statistical methods and outcome of statistical analyses in research articles

Mariusz Cichoń

Published: 15 June 2020. Volume 72, pages 481–485 (2020).


Introduction

Statistical methods constitute a powerful tool in modern life sciences. This tool is primarily used to determine whether observed differences, relationships or congruencies are meaningful or may simply have occurred by chance. Thus, statistical inference is an unavoidable part of scientific work. Knowledge of statistics is usually quite limited among researchers in the life sciences, particularly when it comes to the constraints on the use of statistical tools and their possible interpretations. A common mistake is that researchers take for granted that they will be able to perform a valid statistical analysis. However, at the stage of data analysis, it may turn out that the gathered data cannot be analysed with any known statistical tools. Alternatively, there may be critical flaws in the interpretation of the results due to violations of the basic assumptions of statistical methods. Another common mistake is to thoughtlessly copy the choice of statistical tests from other authors analysing similar data. This strategy, although sometimes correct, may lead to an incorrect choice of statistical tools and incorrect interpretations. Here, I aim to give some advice on how to choose suitable statistical methods and how to present the results of statistical analyses.

Important limits in the use of statistics

Statistical tools face a number of constraints. These constraints should be considered at the stage of planning the research, as mistakes made at this stage may make statistical analyses impossible. Therefore, careful planning of sampling is critical for future success in data analyses. Most important is ensuring that the general population is sampled randomly and independently, and that the experimental design corresponds to the aims of the research. Planning one or more control groups is of particular importance: without a suitable control group, any further inference may not be possible. Parametric tests are more powerful (it is easier to reject a false null hypothesis), so they should always be preferred. However, such methods can be used only when the data are drawn from a general population with a normal distribution. For methods based on analysis of variance (ANOVA), the residuals should come from a normally distributed general population, and there is the additional important assumption of homogeneity of variance. Inferences made from analyses that violate these assumptions may be incorrect.

Statistical inference

Statistical inference is asymmetrical. Scientific discovery is based on rejecting null hypotheses, so non-significant results should be interpreted with special care. We never know for sure why we fail to reject the null hypothesis. It may indeed be true, but it is also possible that our sample size was too small or the variance too large to capture the differences or relationships. We may also fail just by chance. Conversely, assuming a significance level of 0.05 means that we run the risk of rejecting a true null hypothesis in 5% of such analyses. Thus, interpretation of non-significant results should always be accompanied by a so-called power analysis, which shows the strength of our inference.
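What the 5% figure means can be checked by simulation. In the sketch below, both groups are always drawn from the same normal distribution, so the null hypothesis is true by construction. The proportion of significant results at a significance level of 0.05 should therefore come out close to 0.05. The z-test with a known standard deviation, the group size and the number of simulations are illustrative assumptions. The seed only makes the run reproducible.

```python
import math
import random
from statistics import NormalDist, fmean


def simulate_type_i_error(n: int = 20, alpha: float = 0.05,
                          n_sims: int = 4000, seed: int = 1) -> float:
    """Fraction of simulated studies that reject a null hypothesis which is actually true."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    rejections = 0
    for _ in range(n_sims):
        # Both groups come from the same N(0, 1) population: no real difference exists
        a = [rng.gauss(0, 1) for _ in range(n)]
        b = [rng.gauss(0, 1) for _ in range(n)]
        z = (fmean(a) - fmean(b)) / math.sqrt(2 / n)  # known sigma = 1
        if abs(z) > z_crit:
            rejections += 1
    return rejections / n_sims


print(simulate_type_i_error())
```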

Experimental design and data analyses

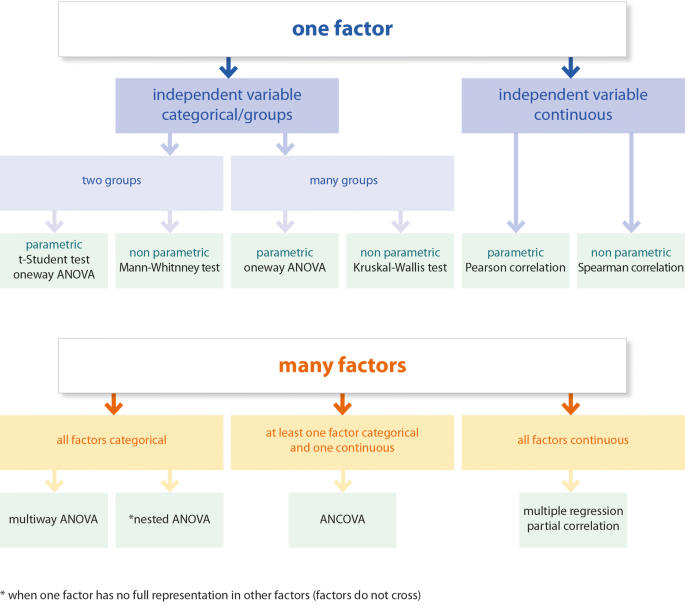

The experimental design is a critical part of study planning. The design must correspond to the aims of the study presented in the Introduction section. In turn, the statistical methods must be suited to the experimental design so that the data analyses enable the questions stated in the Introduction to be answered. In general, simple experimental designs allow the use of simple methods like t-tests, simple correlations, etc., while more complicated designs (multifactor designs) require more advanced methods (see Fig. 1). Data coming from more advanced designs usually cannot be analysed with simple methods. Therefore, data from multifactor designs cannot be analysed with a simple t-test or even with one-way ANOVA. Factors may not act independently, and in such a case the interpretation of the results of one-way ANOVA may be incorrect. Here, it is particularly important to consider whether one is interested in the concerted action of factors (interaction) or in the action of a given factor while controlling for other factors (independent action of a factor). But even with a one-factor design with more than two levels, one cannot simply use t-tests for multiple comparisons between groups. In such a case, one-way ANOVA should be performed, followed by a post hoc test. The post hoc test can be done only if ANOVA rejects the null hypothesis. There is no point in using a post hoc test if the factors have only two levels (groups); in this case, the differences are already clear after ANOVA.

Test selection chart
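The warning in the paragraph above against replacing ANOVA with multiple t-tests can be shown with a short calculation. If k independent comparisons are each run at significance level α, the chance of at least one false positive is 1 - (1 - α)^k. The sketch assumes independent comparisons. Pairwise comparisons that share groups are not exactly independent, so this is an approximation. It also shows the Bonferroni correction, which is only one of several post hoc adjustments; the article does not prescribe a particular one.

```python
from math import comb


def familywise_error(alpha: float, k: int) -> float:
    """Probability of at least one type I error across k independent tests at level alpha."""
    return 1 - (1 - alpha) ** k


def bonferroni_alpha(alpha: float, k: int) -> float:
    """Per-comparison significance level that keeps the familywise error at or below alpha."""
    return alpha / k


for groups in (3, 4, 5):
    k = comb(groups, 2)  # number of pairwise comparisons between groups
    print(f"{groups} groups: {k} t-tests, familywise error = {familywise_error(0.05, k):.3f}, "
          f"Bonferroni alpha = {bonferroni_alpha(0.05, k):.4f}")
```

With three groups, three pairwise t-tests at 0.05 already give about a 14% chance of at least one false positive.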

Description of statistical methods in the Materials and methods section

It is in the author’s interest to provide the reader with all necessary information to judge whether the statistical tools used in the paper are the most suitable to answer the scientific question and are suited to the data structure. In the Materials and methods section, the experimental design must be described in detail, so that the reader may easily understand how the study was performed and later why such specific statistical methods were chosen. It must be clear whether the study is planned to test the relationships or differences between groups. Here, the reader should already understand the data structure, what the dependent variable is, what the factors are, and should be able to determine, even without being directly informed, whether the factors are categorical or continuous, and whether they are fixed or random. The sample size used in the analysis should be clearly stated. Sometimes sample sizes used in analyses are smaller than the original. This can happen for various reasons, for example if one fails to perform some measurements, and in such a case, the authors must clearly explain why the original sample size differs from the one used in the analyses. There must be a very good reason to omit existing data points from the analyses. Removing the so-called outliers should be an exception rather than the rule.

A description of the statistical methods should come at the end of the Materials and methods section. Here, we start by introducing the statistical techniques used to test the predictions formulated in the Introduction. We describe in detail the structure of the statistical model. This includes the dependent variable, the independent variables (factors), interactions if present, and the character of the factors (fixed or random). The variables should be defined as categorical or continuous. In the case of more advanced models, information on the methods of effect estimation or degrees of freedom should be provided. Unless there are good reasons not to, interactions should always be tested, even if the study is not aimed at testing an interaction. If the interaction is not the main aim of the study, non-significant interactions should be dropped from the model. New analyses without interactions should then be carried out and their results reported. If the interaction appears to be significant, it cannot be removed from the model, even if the interaction is not the main aim of the study. In such a case, only the interaction can be interpreted, while interpretation of the main effects is not allowed. The author should clearly describe how the interactions will be dealt with. One may also consider using a model selection procedure, which should also be clearly described.

The authors should reassure the reader that the assumptions of the selected statistical technique are fully met. It must be described how the normality of the data distribution and the homogeneity of variance were checked and whether these assumptions were met. When performing a data transformation, one needs to explain how it was done and whether the transformation helped to fulfil the assumptions of the parametric tests. If these assumptions are not fulfilled, one may apply non-parametric tests, and it must be clearly stated why non-parametric tests were performed. Post hoc tests can be performed only when the ANOVA or Kruskal–Wallis test shows significant effects. These tests are valid for the main effects only when the interaction is not included in the model; they are also applicable to significant interactions. There are a number of different post hoc tests, so the selected test must be introduced in the Materials and methods section.
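As an illustration of a non-parametric alternative, a common replacement for the two-sample t-test when normality cannot be assumed is the Mann–Whitney U test, the two-group counterpart of the Kruskal–Wallis test mentioned above. The sketch below computes U from pooled ranks, giving tied values their average rank. Its p-value comes from the large-sample normal approximation without a tie correction; for small samples, the exact p-values reported by statistical software are preferable. The function names and data are illustrative.

```python
import math
from statistics import NormalDist


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend the run of tied values
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_u(x: list[float], y: list[float]) -> tuple[float, float, float]:
    """Return (U for x, U for y, two-sided p from the normal approximation)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)  # no tie correction
    z = (u1 - mean_u) / sd_u
    return u1, u2, 2 * NormalDist().cdf(-abs(z))


x = [3, 4, 2, 6, 2, 5]   # hypothetical skewed outcomes, group A
y = [9, 7, 5, 10, 6, 8]  # group B
u1, u2, p = mann_whitney_u(x, y)
print(f"U = {min(u1, u2)}, N = {len(x) + len(y)}, p = {p:.3f}")
```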

The significance level is often mentioned in the Materials and methods section. There is a common consensus among researchers in the life sciences for a significance level of 0.05, so it is not strictly necessary to report this conventional level unless the authors give exact p-values throughout the paper. If the author sets the significance level at a lower value, which could be the case, for example, in medical sciences, the reader must be informed about the use of a more conservative level. If the significance level is not reported, the reader will assume 0.05. In general, it does not matter which statistical software was used for the analyses. However, the outcome may differ slightly between software packages, even if exactly the same model is specified. Thus, it may be good practice to report the name of the software at the end of the subsection describing the statistical methods. If the original code of the analysed model is provided, it would be sensible to inform the reader of the specific software and version that were used.

Presentation of the outcome in the Results section

Only the data and analyses needed to test the hypotheses and predictions stated in the Introduction, and those important for the Discussion, should be placed in the Results section. All other outcomes can be provided as supplementary material. Some descriptive statistics are often reported in the Results section, such as means, standard errors (SE), standard deviations (SD) and confidence intervals (CI). It is critically important that these estimates are provided only if the described data are drawn from a normally distributed population; otherwise, median values with quartiles should be provided. A common mistake is to present the results of non-parametric tests together with parametric estimates. If one cannot assume a normal distribution, providing the arithmetic mean with the standard deviation is misleading, as these are parameters of the normal distribution. I recommend using confidence intervals instead of SE or SD, as confidence intervals are more informative (non-overlapping intervals suggest the existence of potential differences).
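The Python sketch below computes both kinds of summary using only the standard `statistics` module, which makes the distinction concrete. The function `describe` and the sample values are hypothetical. Quartiles come from `statistics.quantiles` with its default method. Other software may define quartiles slightly differently, so values can differ a little between packages.

```python
import math
import statistics


def describe(values):
    """Return parametric and non-parametric summaries of one sample.

    Mean, SD and SE suit data from a normally distributed population;
    otherwise the median with quartiles is the summary to report.
    """
    n = len(values)
    sd = statistics.stdev(values)  # sample SD, n - 1 denominator
    q1, median, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    return {
        "n": n,
        "mean": statistics.mean(values),
        "sd": sd,
        "se": sd / math.sqrt(n),  # standard error of the mean
        "median": median,
        "q1": q1,
        "q3": q3,
    }


# Hypothetical measurements for one group.
summary = describe([1, 2, 3, 4, 5, 6, 7, 8, 9])
print(summary)
```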

Descriptive statistics can be calculated from the raw data (measured values) or presented as estimates from the fitted models (values corrected for the independent effects of other factors in the model). The Materials and methods section should clearly state whether the descriptive statistics provided throughout the paper are model estimates or were calculated from the raw data. It is not necessary to report descriptive statistics in the text if they are already reported in the tables or can easily be read from the graphs.

The Results section is a narrative text that tells the reader about all the findings and guides them to the tables and figures, if present. Each table and figure should be referenced in the text at least once. It is in the author’s interest to provide the reader with the outcome of the statistical tests in such a way that the correctness of the reported values can be assessed. The value of the appropriate statistic (e.g. F, t, H, U, z, r) must always be provided, along with the sample size (N; non-parametric tests) or degrees of freedom (df; parametric tests) and the p-value. The p-value is important information, as it tells the reader how much confidence can be placed in rejecting the null hypothesis. Thus, one needs to provide the exact p-value. A common mistake is to report it only as an inequality (p < 0.05). There is an important difference in interpretation between p = 0.049 and p = 0.001.
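The degrees of freedom in these reports follow directly from the design. The sketch below uses only the Python standard library. It computes Student’s t for independent and for paired samples, and a one-way ANOVA F, each with its df. It then formats the result in the subscript notation used in this article (for example t₂₃ = 3.45). The function names are hypothetical. The standard library has no t or F distribution, so the exact p-value is assumed to come from statistical software and is passed in by hand.

```python
import math
from statistics import mean, stdev, variance

# Translation table that turns digits into subscript digits.
SUBSCRIPT = str.maketrans("0123456789", "₀₁₂₃₄₅₆₇₈₉")


def independent_t(a, b):
    """Student's t for two independent groups (pooled variance)."""
    n1, n2 = len(a), len(b)
    df = (n1 - 1) + (n2 - 1)
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df


def paired_t(first, second):
    """Paired t for matched measurements; df = number of pairs - 1."""
    diffs = [y - x for x, y in zip(first, second)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def one_way_anova(*groups):
    """One-way ANOVA F with df = (groups - 1, total N - groups)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    df_between, df_within = k - 1, n_total - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


def report(symbol, value, dfs, p):
    """Format a statistic with subscript df and an exact p-value."""
    sub = ",".join(str(d) for d in dfs).translate(SUBSCRIPT)
    return f"{symbol}{sub} = {value:.2f}, p = {p:.2g}"


t, df = independent_t([1, 2, 3], [4, 5, 6])
f, df_between, df_within = one_way_anova([1, 2, 3], [4, 5, 6])
# The p-value must come from software with a t/F distribution; 0.03 is a placeholder.
print(report("t", abs(t), (df,), 0.03))
print(report("F", f, (df_between, df_within), 0.03))
```

With only two groups, the one-way ANOVA F equals the square of the independent-samples t. This gives a quick sanity check on both calculations.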

The outcome of simple tests (comparing two groups, testing the relationship between two variables) can easily be reported in the text. For multivariate models, however, it is better to report the outcome as a table in which all factors and their possible interactions are listed with their estimates, statistics and p-values. The results of post hoc tests, if performed, may be reported in the main text. If one reports differences between many groups or an interaction, presenting such results as a table or graph can be more informative.

The main results are often presented graphically, particularly when the effects appear to be significant. The graphs should be constructed so that they correspond to the analyses. If the main interest of the study is in an interaction, then it should be depicted in the graph. One should not present an interaction in the graph if it turned out to be non-significant. When presenting differences, the mean or median value should be visualised as a dot, circle or some other symbol. A measure of variability should be shown as whiskers below and above this midpoint: quartiles if a non-parametric test was performed, and SD, SE or, preferably, confidence intervals in the case of parametric tests. The midpoints should not be linked with a line unless an interaction is presented or, more generally, unless the line has some biological/logical meaning in the experimental design. Some authors present differences as bar graphs. When using bar graphs, the Y-axis must start from zero. If a bar graph is used to show differences between groups, some measure of variability (SD, SE, CI) must also be provided, for example as whiskers. Graphs may present the outcome of post hoc tests as letters placed above the midpoint or whiskers. The same letter indicates a lack of differences, and different letters signal pairwise differences. Significant differences can also be denoted with asterisks or, preferably, with p-values placed above a horizontal line linking the groups. All this must be explained in the figure caption. Relationships should be presented in the form of a scatterplot. This could be accompanied by a regression line, but only if the relationship is statistically significant. The regression line is necessary if one is interested in describing a functional relationship between two variables. If one is interested in the correlation between variables, the regression line is not necessary, but it could be added to visualise the relationship; in this case, this must be explained in the figure caption. If regression is of interest, then the regression equation must be given in the figure caption. Remember that graphs serve to represent the analyses performed, so if the analyses were carried out on transformed data, the graphs should also present transformed data. In general, tables and figure captions must be self-explanatory, so that the reader is able to understand the table/figure content without reading the main text. The table caption should be written in such a way that it is possible to understand the statistical analysis from which the results are presented.
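When regression is of interest, the caption needs the fitted equation. As a sketch, the ordinary least-squares line for one predictor can be computed and formatted as below. `regression_equation` is a hypothetical helper. It does not test whether the relationship is significant, which the text requires before any line is drawn.

```python
from statistics import mean


def regression_equation(x, y):
    """Ordinary least-squares line y = a + b*x, formatted for a caption."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = my - slope * mx
    sign = "+" if slope >= 0 else "-"
    return slope, intercept, f"y = {intercept:.2f} {sign} {abs(slope):.2f}x"


# Hypothetical data points lying on a straight line.
print(regression_equation([1, 2, 3, 4], [3, 5, 7, 9])[2])
```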

Guidelines for the Materials and methods section:

- Provide a detailed description of the experimental design so that the statistical techniques will be understandable to the reader.

- Make sure that factors and groups within factors are clearly introduced.

- Describe all statistical techniques applied in the study and provide a justification for each test (both parametric and non-parametric methods).

- If parametric tests are used, describe how the normality of the data distribution and the homogeneity of variance (in the case of analysis of variance) were checked, and state clearly that these important assumptions for parametric tests are met.

- Give a rationale for using non-parametric tests.

- If data transformation was applied, provide details of how the transformation was performed and state clearly that it helped to achieve normal distribution/homogeneity of variance.

- In the case of multivariate analyses, describe the statistical model in detail and explain how you dealt with interactions.

- If post hoc tests are used, clearly state which tests you used.

- Specify the software and its version if you think it is important.

Guidelines for presentation of the outcome of statistical analyses in the Results section:

- Make sure you report appropriate descriptive statistics: means, standard errors (SE), standard deviations (SD), confidence intervals (CI), etc. in the case of parametric tests, or median values with quartiles in the case of non-parametric tests.

- Provide the appropriate statistic for your test (t for the t-test, F for ANOVA, H for the Kruskal–Wallis test, U for the Mann–Whitney test, χ² for the chi-square test, or r for correlation), along with the sample size (non-parametric tests) or degrees of freedom (df; parametric tests), for example:

  - t₂₃ = 3.45. The subscript denotes the degrees of freedom. For the test with independent groups, this is the sample size of the first group minus 1 plus the sample size of the second group minus 1; for the paired t-test, it is the number of pairs minus 1.

  - F₁,₂₃ = 6.04. The first subscript denotes the degrees of freedom for the explained variance (the number of groups within the factor minus 1); the second denotes the degrees of freedom for the unexplained, residual variance. F-statistics should be provided separately for all factors and interactions (the latter only if interactions are included in the model).

  - H = 13.8, N₁ = 15, N₂ = 18, N₃ = 12 for the Kruskal–Wallis test (N₁, N₂, N₃ are the sample sizes of the groups compared).

  - U = 50, N₁ = 20, N₂ = 19 for the Mann–Whitney test (N₁ and N₂ are the sample sizes of the groups).

  - χ² = 3.14, df = 1 (here, for example, for a 2 × 2 contingency table).

  - r = 0.78, N = 32 or df = 30 (df = N − 2).

- Provide exact p-values (e.g. p = 0.03) rather than the standard inequality (p ≤ 0.05).

- If the results of statistical analysis are presented in the form of a table, make sure the statistical model is accurately described so that the reader will understand the context of the table without referring to the text. Please ensure that the table is cited in the text.

- The figure caption should include all information necessary to understand what is seen in the figure. Describe what is denoted by bars, symbols and whiskers (mean/median, SD, SE, CI/quartiles). If you present transformed data, inform the reader about the transformation you applied. If you present the results of a post hoc test on the graph, state which test was used and how you denote significant differences. If you present a regression line on the scatter plot, state whether you provide the line only to visualise the relationship or because you are interested in regression. In the latter case, give the equation of the regression line.
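The H, U and χ² statistics from the guidelines above can be computed with a few lines of standard-library Python. This sketch makes some simplifications. Ties receive average ranks, but the tie correction for H and the continuity (Yates) correction for χ² are omitted. Statistical packages may apply either correction by default, so their output can differ slightly. The p-value for χ² with 1 df uses the identity P(χ²₁ > x) = erfc(√(x/2)). All function names are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of 1-based positions i..j
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """H = 12/(N(N+1)) * sum(R_i^2 / n_i) - 3(N+1), without tie correction."""
    pooled = [x for g in groups for x in g]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    return 12 / (n_total * (n_total + 1)) * total - 3 * (n_total + 1)


def mann_whitney_u(a, b):
    """Smaller of U1 and U2; report it together with N1 and N2."""
    ranks = average_ranks(list(a) + list(b))
    u1 = sum(ranks[:len(a)]) - len(a) * (len(a) + 1) / 2
    u2 = len(a) * len(b) - u1
    return min(u1, u2)


def chi_square_2x2(table):
    """Pearson chi-square for a 2 x 2 table (no continuity correction), df = 1."""
    (a, b), (c, d) = table
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    return chi2, 1, p


# Hypothetical data for each test.
print(kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9]))
print(mann_whitney_u([1, 2, 3], [4, 5, 6]))
print(chi_square_2x2([[20, 10], [10, 20]]))
```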

Further reading in statistics:

Sokal and Rohlf. 2011. Biometry. Freeman.

Zar. 2010. Biostatistical analysis. Prentice Hall.

McDonald, J.H. 2014. Handbook of biological statistics. Sparky House Publishing, Baltimore, Maryland.

Quinn and Keough. 2002. Experimental design and data analysis for biologists. Cambridge University Press.

Author information

Authors and affiliations.

Institute of Environmental Sciences, Jagiellonian University, Gronostajowa 7, 30-376, Kraków, Poland

Mariusz Cichoń


Corresponding author

Correspondence to Mariusz Cichoń.


About this article

Cichoń, M. Reporting statistical methods and outcome of statistical analyses in research articles. Pharmacol. Rep. 72, 481–485 (2020). https://doi.org/10.1007/s43440-020-00110-5


Published: 15 June 2020

Issue Date: June 2020

DOI: https://doi.org/10.1007/s43440-020-00110-5


The Beginner's Guide to Statistical Analysis | 5 Steps & Examples

Statistical analysis means investigating trends, patterns, and relationships using quantitative data. It is an important research tool used by scientists, governments, businesses, and other organisations.

To draw valid conclusions, statistical analysis requires careful planning from the very start of the research process. You need to specify your hypotheses and make decisions about your research design, sample size, and sampling procedure.

After collecting data from your sample, you can organise and summarise the data using descriptive statistics. Then, you can use inferential statistics to formally test hypotheses and make estimates about the population. Finally, you can interpret and generalise your findings.

This article is a practical introduction to statistical analysis for students and researchers. We’ll walk you through the steps using two research examples. The first investigates a potential cause-and-effect relationship, while the second investigates a potential correlation between variables.

Table of contents

- Step 1: Write your hypotheses and plan your research design

- Step 2: Collect data from a sample

- Step 3: Summarise your data with descriptive statistics

- Step 4: Test hypotheses or make estimates with inferential statistics

- Step 5: Interpret your results

- Frequently asked questions about statistics

Step 1: Write your hypotheses and plan your research design

To collect valid data for statistical analysis, you first need to specify your hypotheses and plan out your research design.

Writing statistical hypotheses

The goal of research is often to investigate a relationship between variables within a population. You start with a prediction, and use statistical analysis to test that prediction.

A statistical hypothesis is a formal way of writing a prediction about a population. Every research prediction is rephrased into null and alternative hypotheses that can be tested using sample data.

While the null hypothesis always predicts no effect or no relationship between variables, the alternative hypothesis states your research prediction of an effect or relationship.

- Null hypothesis: A 5-minute meditation exercise will have no effect on math test scores in teenagers.

- Alternative hypothesis: A 5-minute meditation exercise will improve math test scores in teenagers.

- Null hypothesis: Parental income and GPA have no relationship with each other in college students.

- Alternative hypothesis: Parental income and GPA are positively correlated in college students.

Planning your research design

A research design is your overall strategy for data collection and analysis. It determines the statistical tests you can use to test your hypothesis later on.

First, decide whether your research will use a descriptive, correlational, or experimental design. Experiments directly influence variables, whereas descriptive and correlational studies only measure variables.

- In an experimental design, you can assess a cause-and-effect relationship (e.g., the effect of meditation on test scores) using statistical tests of comparison or regression.

- In a correlational design, you can explore relationships between variables (e.g., parental income and GPA) without any assumption of causality using correlation coefficients and significance tests.

- In a descriptive design, you can study the characteristics of a population or phenomenon (e.g., the prevalence of anxiety in U.S. college students) using statistical tests to draw inferences from sample data.

Your research design also concerns whether you’ll compare participants at the group level or individual level, or both.

- In a between-subjects design, you compare the group-level outcomes of participants who have been exposed to different treatments (e.g., those who performed a meditation exercise vs those who didn’t).

- In a within-subjects design, you compare repeated measures from participants who have participated in all treatments of a study (e.g., scores from before and after performing a meditation exercise).

- In a mixed (factorial) design, one variable is altered between subjects and another is altered within subjects (e.g., pretest and posttest scores from participants who either did or didn’t do a meditation exercise).

Example: Experimental research design

First, you’ll take baseline test scores from participants. Then, your participants will undergo a 5-minute meditation exercise. Finally, you’ll record participants’ scores from a second math test.

In this experiment, the independent variable is the 5-minute meditation exercise, and the dependent variable is the math test score from before and after the intervention.

Example: Correlational research design

In a correlational study, you test whether there is a relationship between parental income and GPA in graduating college students. To collect your data, you will ask participants to fill in a survey and self-report their parents’ incomes and their own GPA.

Measuring variables

When planning a research design, you should operationalise your variables and decide exactly how you will measure them.

For statistical analysis, it’s important to consider the level of measurement of your variables, which tells you what kind of data they contain:

- Categorical data represents groupings. These may be nominal (e.g., gender) or ordinal (e.g. level of language ability).

- Quantitative data represents amounts. These may be on an interval scale (e.g. test score) or a ratio scale (e.g. age).

Many variables can be measured at different levels of precision. For example, age data can be quantitative (8 years old) or categorical (young). If a variable is coded numerically (e.g., level of agreement from 1–5), it doesn’t automatically mean that it’s quantitative instead of categorical.

Identifying the measurement level is important for choosing appropriate statistics and hypothesis tests. For example, you can calculate a mean score with quantitative data, but not with categorical data.

In a research study, along with measures of your variables of interest, you’ll often collect data on relevant participant characteristics.

Step 2: Collect data from a sample

In most cases, it’s too difficult or expensive to collect data from every member of the population you’re interested in studying. Instead, you’ll collect data from a sample.

Statistical analysis allows you to apply your findings beyond your own sample as long as you use appropriate sampling procedures. You should aim for a sample that is representative of the population.

Sampling for statistical analysis

There are two main approaches to selecting a sample.

- Probability sampling: every member of the population has a chance of being selected for the study through random selection.

- Non-probability sampling: some members of the population are more likely than others to be selected for the study because of criteria such as convenience or voluntary self-selection.

In theory, for highly generalisable findings, you should use a probability sampling method. Random selection reduces sampling bias and makes it more likely that data from your sample are typical of the population. Parametric tests can be used to make strong statistical inferences when data are collected using probability sampling.

But in practice, it’s rarely possible to gather the ideal sample. While non-probability samples are more likely to be biased, they are much easier to recruit and collect data from. Non-parametric tests are more appropriate for non-probability samples, but they result in weaker inferences about the population.

If you want to use parametric tests for non-probability samples, you have to make the case that:

- your sample is representative of the population you’re generalising your findings to.

- your sample lacks systematic bias.

Keep in mind that external validity means that you can only generalise your conclusions to others who share the characteristics of your sample. For instance, results from Western, Educated, Industrialised, Rich and Democratic samples (e.g., college students in the US) aren’t automatically applicable to all non-WEIRD populations.

If you apply parametric tests to data from non-probability samples, be sure to elaborate on the limitations of how far your results can be generalised in your discussion section.

Create an appropriate sampling procedure

Based on the resources available for your research, decide on how you’ll recruit participants.

- Will you have resources to advertise your study widely, including outside of your university setting?

- Will you have the means to recruit a diverse sample that represents a broad population?

- Do you have time to contact and follow up with members of hard-to-reach groups?

Your participants are self-selected by their schools. Although you’re using a non-probability sample, you aim for a diverse and representative sample.

Example: Sampling (correlational study)

Your main population of interest is male college students in the US. Using social media advertising, you recruit senior-year male college students from a smaller subpopulation: seven universities in the Boston area.

Calculate sufficient sample size

Before recruiting participants, decide on your sample size either by looking at other studies in your field or by using statistics. A sample that’s too small may be unrepresentative of the population, while a sample that’s too large will be more costly than necessary.

There are many sample size calculators online. Different formulas are used depending on whether you have subgroups or how rigorous your study should be (e.g., in clinical research). As a rule of thumb, a minimum of 30 units per subgroup is necessary.

To use these calculators, you have to understand and input these key components:

- Significance level (alpha): the risk of rejecting a true null hypothesis that you are willing to take, usually set at 5%.

- Statistical power: the probability of your study detecting an effect of a certain size if there is one, usually 80% or higher.

- Expected effect size: a standardised indication of how large the expected result of your study will be, usually based on other similar studies.

- Population standard deviation: an estimate of the population parameter based on a previous study or a pilot study of your own.
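For comparing two independent group means, these components combine in a standard approximation: n per group = 2 × (z_α + z_β)² / d². Here z_α is the standard normal quantile for 1 − α/2, z_β is the quantile for the desired power, and d is the expected difference divided by the population standard deviation. The Python sketch below assumes a two-sided test and a normal approximation. It therefore gives a slightly smaller n than calculators based on the t distribution. `n_per_group` is a hypothetical name.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate sample size per group for comparing two independent means.

    effect_size is Cohen's d: expected difference / population standard deviation.
    Normal approximation for a two-sided test.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_power = z.inv_cdf(power)          # quantile matching the desired power
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# Hypothetical inputs: expected difference of 5 points, population SD of 10.
print(n_per_group(5 / 10))
```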

Step 3: Summarise your data with descriptive statistics

Once you’ve collected all of your data, you can inspect them and calculate descriptive statistics that summarise them.

Inspect your data

There are various ways to inspect your data, including the following:

- Organising data from each variable in frequency distribution tables.

- Displaying data from a key variable in a bar chart to view the distribution of responses.

- Visualising the relationship between two variables using a scatter plot.

By visualising your data in tables and graphs, you can assess whether your data follow a skewed or normal distribution and whether there are any outliers or missing data.

A normal distribution means that your data are symmetrically distributed around a center where most values lie, with the values tapering off at the tail ends.

In contrast, a skewed distribution is asymmetric and has more values on one end than the other. The shape of the distribution is important to keep in mind because only some descriptive statistics should be used with skewed distributions.

Extreme outliers can also produce misleading statistics, so you may need a systematic approach to dealing with these values.
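The text leaves the choice of systematic approach open. One common convention flags values beyond Tukey’s fences: more than 1.5 × IQR below the first quartile or above the third. This rule is an assumption here, not something the text prescribes. The sketch below pairs it with a simple frequency table. Both helpers are hypothetical.

```python
from collections import Counter
from statistics import quantiles


def frequency_table(values):
    """Count of each distinct value, sorted by value."""
    return sorted(Counter(values).items())


def iqr_outliers(values, k=1.5):
    """Return values outside the fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical data: one entry looks like a data-entry error.
print(frequency_table(["agree", "neutral", "agree", "disagree"]))
print(iqr_outliers([1, 2, 3, 4, 5, 6, 7, 8, 100]))
```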

Calculate measures of central tendency

Measures of central tendency describe where most of the values in a data set lie. Three main measures of central tendency are often reported:

- Mode: the most frequent response or value in the data set.

- Median: the value in the exact middle of the data set when ordered from low to high.

- Mean: the sum of all values divided by the number of values.

However, depending on the shape of the distribution and level of measurement, only one or two of these measures may be appropriate. For example, many demographic characteristics can only be described using the mode or proportions, while a variable like reaction time may not have a mode at all.
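Python’s standard `statistics` module provides all three measures. In this hypothetical reaction-time sample, one slow response pulls the mean (420 ms) well above the median (325 ms). This is why the median is often preferred for skewed data.

```python
from statistics import mean, median, multimode

# Hypothetical reaction times in milliseconds; one slow response skews the data.
reaction_times = [310, 320, 320, 330, 340, 900]

print("mode:", multimode(reaction_times))  # all most-frequent values
print("median:", median(reaction_times))   # average of the two middle values
print("mean:", mean(reaction_times))       # pulled upwards by the 900 ms response
```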

Calculate measures of variability

Measures of variability tell you how spread out the values in a data set are. Four main measures of variability are often reported:

- Range: the highest value minus the lowest value of the data set.

- Interquartile range: the range of the middle half of the data set.

- Standard deviation: roughly, the typical distance between the values in your data set and the mean (formally, the square root of the variance).

- Variance: the mean squared deviation from the mean (divided by n − 1 for a sample), i.e. the square of the standard deviation.

Once again, the shape of the distribution and level of measurement should guide your choice of variability statistics. The interquartile range is the best measure for skewed distributions, while standard deviation and variance provide the best information for normal distributions.
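The same module covers the four measures of variability. The scores below are hypothetical. Note that `stdev` and `variance` use the sample (n − 1) denominator, while `pstdev` and `pvariance` would give the population versions.

```python
from statistics import quantiles, stdev, variance

scores = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical test scores

value_range = max(scores) - min(scores)
q1, _, q3 = quantiles(scores, n=4)  # default quartile method
iqr = q3 - q1
sd = stdev(scores)       # sample standard deviation
var = variance(scores)   # sample variance, equal to sd ** 2

print(value_range, iqr, round(sd, 3), round(var, 3))
```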

Using your table, you should check whether the units of the descriptive statistics are comparable for pretest and posttest scores. For example, are the variance levels similar across the groups? Are there any extreme values? If there are, you may need to identify and remove extreme outliers in your data set or transform your data before performing a statistical test.

From this table, we can see that the mean score increased after the meditation exercise, and the variances of the two scores are comparable. Next, we can perform a statistical test to find out if this improvement in test scores is statistically significant in the population.

Example: Descriptive statistics (correlational study)

After collecting data from 653 students, you tabulate descriptive statistics for annual parental income and GPA.

It’s important to check whether you have a broad range of data points. If you don’t, your data may be skewed towards some groups more than others (e.g., high academic achievers), and only limited inferences can be made about a relationship.

Step 4: Test hypotheses or make estimates with inferential statistics

A number that describes a sample is called a statistic, while a number describing a population is called a parameter. Using inferential statistics, you can make conclusions about population parameters based on sample statistics.

Researchers often use two main methods (simultaneously) to make inferences in statistics.

- Estimation: calculating population parameters based on sample statistics.

- Hypothesis testing: a formal process for testing research predictions about the population using samples.

You can make two types of estimates of population parameters from sample statistics:

- A point estimate: a value that represents your best guess of the exact parameter.

- An interval estimate: a range of values that represent your best guess of where the parameter lies.

If your aim is to infer and report population characteristics from sample data, it’s best to use both point and interval estimates in your paper.

You can consider a sample statistic a point estimate for the population parameter when you have a representative sample (e.g., in a wide public opinion poll, the proportion of a sample that supports the current government is taken as the population proportion of government supporters).

There’s always error involved in estimation, so you should also provide a confidence interval as an interval estimate to show the variability around a point estimate.

A confidence interval uses the standard error and the z score from the standard normal distribution to convey where you’d generally expect to find the population parameter most of the time.
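As a sketch of that calculation, a 95% interval is the mean ± 1.96 × SE. The hypothetical helper below uses `NormalDist` for the z value. For small samples, a t critical value with n − 1 degrees of freedom is usually substituted, and the standard library does not provide one.

```python
import math
from statistics import NormalDist, mean, stdev


def confidence_interval(values, level=0.95):
    """Normal-approximation CI for a population mean: mean +/- z * SE."""
    n = len(values)
    se = stdev(values) / math.sqrt(n)          # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    m = mean(values)
    return m - z * se, m + z * se


scores = [12, 15, 11, 14, 13, 16, 12, 15, 14, 13]  # hypothetical sample
low, high = confidence_interval(scores)
print(f"95% CI: {low:.2f} to {high:.2f}")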

Hypothesis testing

Using data from a sample, you can test hypotheses about relationships between variables in the population. Hypothesis testing starts with the assumption that the null hypothesis is true in the population, and you use statistical tests to assess whether the null hypothesis can be rejected or not.

Statistical tests determine where your sample data would lie on an expected distribution of sample data if the null hypothesis were true. These tests give two main outputs:

- A test statistic tells you how much your data differ from the null hypothesis of the test.

- A p value tells you the probability of obtaining results at least as extreme as yours if the null hypothesis is actually true in the population.

Statistical tests come in three main varieties:

- Comparison tests assess group differences in outcomes.

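- Regression tests assess how changes in predictor variables relate to changes in an outcome, and can support cause-and-effect conclusions when the data come from an experiment.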

- Correlation tests assess relationships between variables without assuming causation.

Your choice of statistical test depends on your research questions, research design, sampling method, and data characteristics.

Parametric tests

Parametric tests make powerful inferences about the population based on sample data. But to use them, some assumptions must be met, and only some types of variables can be used. If your data violate these assumptions, you can perform appropriate data transformations or use alternative non-parametric tests instead.

A regression models the extent to which changes in a predictor variable result in changes in the outcome variable(s).

- A simple linear regression includes one predictor variable and one outcome variable.

- A multiple linear regression includes two or more predictor variables and one outcome variable.
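Both kinds of regression can be fitted with ordinary least squares. The sketch below solves the normal equations (XᵀX)b = Xᵀy by Gauss-Jordan elimination in plain Python. It is an illustration only: statistical software typically uses numerically more stable methods and also reports standard errors and p-values. `fit_linear_regression` is a hypothetical name. The data are constructed to lie exactly on y = 1 + 2x₁ + 3x₂.

```python
def fit_linear_regression(X, y):
    """Least-squares coefficients [intercept, b1, ..., bk].

    X is a list of rows, one per observation, each holding k predictor values.
    k = 1 gives simple linear regression; k >= 2 gives multiple regression.
    """
    rows = [[1.0] + [float(v) for v in row] for row in X]  # add intercept column
    p = len(rows[0])
    # Normal equations (X'X) b = X'y, stored as an augmented matrix.
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(p)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot_row = max(range(col, p), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot_row] = aug[pivot_row], aug[col]
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][p] for i in range(p)]


# Hypothetical data generated exactly from y = 1 + 2*x1 + 3*x2.
X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1]]
y = [1, 3, 4, 6, 8]
print(fit_linear_regression(X, y))
```

A singular XᵀX (for example, one predictor that is an exact copy of another) would make the elimination divide by zero. Real software detects and reports that case.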

Comparison tests usually compare the means of groups. These may be the means of different groups within a sample (e.g., a treatment and control group), the means of one sample group taken at different times (e.g., pretest and posttest scores), or a sample mean and a population mean.

- A t test is for exactly 1 or 2 groups when the sample is small (30 or less).

- A z test is for exactly 1 or 2 groups when the sample is large.

- An ANOVA is for 3 or more groups.

The z and t tests have subtypes based on the number and types of samples and the hypotheses:

- If you have only one sample that you want to compare to a population mean, use a one-sample test.

- If you have paired measurements (within-subjects design), use a dependent (paired) samples test.

- If you have completely separate measurements from two unmatched groups (between-subjects design), use an independent (unpaired) samples test.

- If you expect a difference between groups in a specific direction, use a one-tailed test.

- If you don’t have any expectations for the direction of a difference between groups, use a two-tailed test.
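The difference between one- and two-tailed tests is easiest to see with a one-sample z test, whose p-values the standard library can compute. The sketch assumes the population standard deviation is known, which is the usual condition for a z test. The function name and data are hypothetical. For the same data, the two-tailed p-value is twice the one-tailed p-value in the expected direction.

```python
import math
from statistics import NormalDist, mean


def one_sample_z(values, pop_mean, pop_sd, alternative="two-sided"):
    """One-sample z test assuming the population SD is known.

    alternative: "two-sided", "greater" or "less".
    """
    z = (mean(values) - pop_mean) / (pop_sd / math.sqrt(len(values)))
    lower_tail = NormalDist().cdf(z)
    if alternative == "greater":  # one-tailed: mean expected to be larger
        p = 1 - lower_tail
    elif alternative == "less":   # one-tailed: mean expected to be smaller
        p = lower_tail
    else:                         # two-tailed: no expected direction
        p = 2 * min(lower_tail, 1 - lower_tail)
    return z, p


scores = [98, 106, 101, 103, 102]  # hypothetical sample with mean 102
print(one_sample_z(scores, pop_mean=100, pop_sd=10))
print(one_sample_z(scores, pop_mean=100, pop_sd=10, alternative="greater"))
```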

The only parametric correlation test is Pearson’s r. The correlation coefficient (r) tells you the strength of a linear relationship between two quantitative variables.

However, to test whether the correlation in the sample is strong enough to be important in the population, you also need to perform a significance test of the correlation coefficient, usually a t test, to obtain a p value. This test uses your sample size to calculate how much the correlation coefficient differs from zero in the population.
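The significance test for Pearson’s r converts the coefficient into a t statistic, t = r√(N − 2) / √(1 − r²), with N − 2 degrees of freedom. A standard-library sketch with hypothetical names follows. The p-value itself then comes from the t distribution in statistical software.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length samples."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_t(r, n):
    """t statistic for H0: population correlation = 0, with df = n - 2."""
    df = n - 2
    return r * math.sqrt(df / (1 - r ** 2)), df


# Hypothetical paired observations.
r = pearson_r([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(r, correlation_t(r, 5))
```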

You use a dependent-samples, one-tailed t test to assess whether the meditation exercise significantly improved math test scores. The test gives you:

- a t value (test statistic) of 3.00

- a p value of 0.0028

Although Pearson’s r is a test statistic, it doesn’t tell you anything about how significant the correlation is in the population. You also need to test whether this sample correlation coefficient is large enough to demonstrate a correlation in the population.

A t test can also determine how significantly a correlation coefficient differs from zero based on sample size. Since you expect a positive correlation between parental income and GPA, you use a one-sample, one-tailed t test. The t test gives you:

- a t value of 3.08

- a p value of 0.001

Step 5: Interpret your results

The final step of statistical analysis is interpreting your results.

Statistical significance

In hypothesis testing, statistical significance is the main criterion for forming conclusions. You compare your p value to a set significance level (usually 0.05) to decide whether your results are statistically significant or non-significant.

Statistically significant results are considered unlikely to have arisen solely due to chance. There is only a very low chance of such a result occurring if the null hypothesis is true in the population.

This means that you believe the meditation intervention, rather than random factors, directly caused the increase in test scores.

Example: Interpret your results (correlational study)

You compare your p value of 0.001 to your significance threshold of 0.05. With a p value under this threshold, you can reject the null hypothesis. This indicates a statistically significant correlation between parental income and GPA in male college students.

Note that correlation doesn’t always mean causation, because there are often many underlying factors contributing to a complex variable like GPA. Even if one variable is related to another, this may be because of a third variable influencing both of them, or indirect links between the two variables.

Effect size

A statistically significant result doesn’t necessarily mean that there are important real life applications or clinical outcomes for a finding.

In contrast, the effect size indicates the practical significance of your results. It’s important to report effect sizes along with your inferential statistics for a complete picture of your results. You should also report interval estimates of effect sizes if you’re writing an APA style paper.

For the experimental study, a Cohen’s d of 0.72 indicates medium to high practical significance for the finding that the meditation exercise improved test scores.

Example: Effect size (correlational study)

To determine the effect size of the correlation coefficient, you compare your Pearson’s r value to Cohen’s effect size criteria.
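The following is a minimal sketch of both calculations. It computes Cohen's d for two independent groups using the pooled standard deviation, and it maps d or r onto Cohen's conventional benchmarks (d: 0.2, 0.5, 0.8; r: 0.1, 0.3, 0.5). Treat these benchmarks as rules of thumb, not strict cut-offs. The independent-groups formula is an assumption: paired pretest/posttest designs are often standardized differently. The scores are hypothetical.

```python
import math
import statistics


def cohens_d(group1: list[float], group2: list[float]) -> float:
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n1, n2 = len(group1), len(group2)
    v1, v2 = statistics.variance(group1), statistics.variance(group2)  # sample variances (n - 1)
    pooled_sd = math.sqrt(((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2))
    return (statistics.mean(group1) - statistics.mean(group2)) / pooled_sd


def label_effect(value: float, small: float, medium: float, large: float) -> str:
    """Map the magnitude of an effect size to Cohen's benchmark labels."""
    size = abs(value)
    if size >= large:
        return "large"
    if size >= medium:
        return "medium"
    if size >= small:
        return "small"
    return "negligible"


def label_d(d: float) -> str:
    return label_effect(d, 0.2, 0.5, 0.8)  # Cohen's benchmarks for d


def label_r(r: float) -> str:
    return label_effect(r, 0.1, 0.3, 0.5)  # Cohen's benchmarks for r


# Hypothetical scores, not data from the meditation or GPA examples.
d = cohens_d([2, 4, 6], [1, 3, 5])
print(f"d = {d:.2f} ({label_d(d)})")  # d = 0.50 (medium)
print(label_r(0.25))                  # small
```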

Decision errors

Type I and Type II errors are mistakes made in research conclusions. A Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s false.

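You can aim to minimise the risk of these errors by selecting an optimal significance level and ensuring high power. However, there’s a trade-off between the two errors. For a fixed sample size, lowering the significance level reduces the risk of a Type I error but increases the risk of a Type II error, so a fine balance is necessary.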
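The trade-off can be quantified in the simplest textbook case: a one-sided z test on normally distributed data with a known standard deviation. This case is chosen for illustration and is not a method from the source. In the sketch below, α sets the rejection threshold and is the Type I error rate. Lowering α pushes the threshold up, which increases the Type II error rate β. A larger sample reduces β again. The effect size and sample size are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def type_ii_error(alpha: float, effect: float, sigma: float, n: int) -> float:
    """Type II error rate of a one-sided z test of H0: mu = 0 vs H1: mu = effect > 0.

    Assumes normally distributed data with known standard deviation sigma.
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha)      # rejection threshold implied by alpha
    shift = effect * n ** 0.5 / sigma           # mean of the z statistic when H1 is true
    power = 1 - STD_NORMAL.cdf(z_crit - shift)  # P(reject H0 | H1 true)
    return 1 - power


# Hypothetical design: true effect of 0.5 standard deviations, 20 observations.
for alpha in (0.10, 0.05, 0.01):
    beta = type_ii_error(alpha, effect=0.5, sigma=1.0, n=20)
    print(f"alpha = {alpha:.2f} -> Type II error = {beta:.3f}, power = {1 - beta:.3f}")
```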

Frequentist versus Bayesian statistics

Traditionally, frequentist statistics emphasises null hypothesis significance testing and always starts with the assumption of a true null hypothesis.

However, Bayesian statistics has grown in popularity as an alternative approach in the last few decades. In this approach, you use previous research to continually update your hypotheses based on your expectations and observations.
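One common, concrete form of this updating is the conjugate beta-binomial model for a proportion, in which each posterior becomes the prior for the next study. The sketch below is an illustration chosen here, not a procedure from the source. The study counts are hypothetical.

```python
from fractions import Fraction


def update_beta(prior: tuple[int, int], successes: int, trials: int) -> tuple[int, int]:
    """Conjugate update: a Beta(a, b) prior plus binomial data gives Beta(a + k, b + n - k)."""
    a, b = prior
    return a + successes, b + trials - successes


def beta_mean(params: tuple[int, int]) -> Fraction:
    """Mean of a Beta(a, b) distribution, a / (a + b)."""
    a, b = params
    return Fraction(a, a + b)


prior = (1, 1)  # flat prior on an unknown success rate
after_study_1 = update_beta(prior, successes=3, trials=5)          # hypothetical first study
after_study_2 = update_beta(after_study_1, successes=4, trials=5)  # hypothetical follow-up
print(after_study_2, beta_mean(after_study_2))  # (8, 4) 2/3
```

Updating study by study gives the same posterior as pooling all 10 trials at once. In this sense, evidence accumulates as new research arrives.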

The Bayes factor compares the relative strength of evidence for the null versus the alternative hypothesis, rather than leading to a decision about whether to reject the null hypothesis.
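As a sketch under simple assumptions, consider binomial data with H0: p = 0.5 and H1: p uniformly distributed between 0 and 1. These hypotheses are chosen for illustration and are not taken from the source. Under H1, the marginal likelihood of k successes in n trials is exactly 1/(n + 1). BF01 above 1 favours the null, and its reciprocal BF10 above 1 favours the alternative.

```python
from math import comb


def bayes_factor_01(successes: int, trials: int) -> float:
    """BF01 for H0: p = 0.5 against H1: p ~ Uniform(0, 1), given binomial data."""
    likelihood_h0 = comb(trials, successes) * 0.5 ** trials
    marginal_h1 = 1 / (trials + 1)  # binomial likelihood averaged over the uniform prior
    return likelihood_h0 / marginal_h1


for k in (5, 9):  # hypothetical outcomes of 10 trials
    bf01 = bayes_factor_01(k, 10)
    print(f"{k}/10 successes: BF01 = {bf01:.2f}, BF10 = {1 / bf01:.2f}")
```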

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.
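A permutation test takes the phrase "could have arisen by chance" literally. It reassigns the group labels in every possible way and asks how often a difference at least as large as the observed one appears. The sketch below shows this as one illustrative approach; it is not the only form of hypothesis testing. The measurements are hypothetical.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a: list[float], group_b: list[float]) -> float:
    """Exact one-sided permutation test for mean(group_a) > mean(group_b).

    Under the null hypothesis the group labels are arbitrary, so every way of
    splitting the pooled values into groups of the original sizes is equally likely.
    """
    pooled = group_a + group_b
    observed = mean(group_a) - mean(group_b)
    count = total = 0
    for idx in combinations(range(len(pooled)), len(group_a)):
        chosen = set(idx)
        a = [pooled[i] for i in idx]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        if mean(a) - mean(b) >= observed - 1e-12:  # tolerance guards against float rounding
            count += 1
    return count / total


# Hypothetical measurements for two small groups.
p = permutation_p_value([12, 14, 15, 16], [9, 10, 11, 13])
print(f"p = {p:.4f}")  # 2 of the 70 possible splits are at least as extreme
```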

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts, and meanings, use qualitative methods.

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Statistical analysis is the main method for analyzing quantitative research data. It uses probabilities and models to test predictions about a population from sample data.


Open Access

Peer-reviewed

Research Article

A statistical analysis of the novel coronavirus (COVID-19) in Italy and Spain

Jeffrey Chu

Roles: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Resources, Software, Validation, Visualization, Writing – original draft, Writing – review & editing

Affiliation: School of Statistics, Renmin University of China, Beijing, China

- Published: March 25, 2021

- https://doi.org/10.1371/journal.pone.0249037


The novel coronavirus (COVID-19), first reported at the end of 2019, has impacted almost every aspect of life as we know it. This paper focuses on the incidence of the disease in Italy and Spain, two of the first and most affected European countries. The analysis uses two simple mathematical epidemiological models: the Susceptible-Infectious-Recovered model and the log-linear regression model. With them, we model the daily and cumulative incidence of COVID-19 in the two countries during the early stage of the outbreak. We also compute estimates for basic measures of the infectiousness of the disease, including the basic reproduction number, growth rate, and doubling time. Estimates of the basic reproduction number were found to be larger than 1 in both countries, with values between 2 and 3 for Italy and between 2.5 and 4 for Spain. Estimates were also computed for the more dynamic effective reproduction number. These showed that the severity has generally been decreasing since the first cases were confirmed in the respective countries. The log-linear regression model was found to give a better predictive fit, and simple estimates of the daily incidence were computed for both countries.
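The link between a log-linear fit, the growth rate, and the doubling time can be sketched briefly. The following is a sketch under stated assumptions, and the paper's own estimation procedure may differ. It regresses log incidence on time to get a growth rate r and converts r to a doubling time ln 2 / r. It then approximates the basic reproduction number with R0 = 1 + rD. That relation holds for the SIR model when D is the mean infectious period, which is an assumption here. The counts and the 5-day infectious period are synthetic and are not COVID-19 data.

```python
import math


def fit_log_linear(days: list[float], counts: list[float]) -> tuple[float, float]:
    """Least-squares fit of log(count) = log(c0) + r * day; returns (c0, r)."""
    logs = [math.log(c) for c in counts]  # counts must be positive
    n = len(days)
    md, ml = sum(days) / n, sum(logs) / n
    r = (sum((d - md) * (lg - ml) for d, lg in zip(days, logs))
         / sum((d - md) ** 2 for d in days))
    return math.exp(ml - r * md), r


def doubling_time(r: float) -> float:
    """Days for incidence to double under exponential growth at rate r per day."""
    return math.log(2) / r


def r0_from_growth(r: float, infectious_period: float) -> float:
    """SIR-based approximation R0 = 1 + r * D, with D the mean infectious period."""
    return 1 + r * infectious_period


# Synthetic counts growing at a continuous rate of 0.2 per day (not real data).
days = list(range(10))
counts = [10 * math.exp(0.2 * d) for d in days]
c0, r = fit_log_linear(days, counts)
print(f"growth rate = {r:.3f}/day, doubling time = {doubling_time(r):.2f} days")
print(f"R0 with a hypothetical 5-day infectious period = {r0_from_growth(r, 5):.2f}")
```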

Citation: Chu J (2021) A statistical analysis of the novel coronavirus (COVID-19) in Italy and Spain. PLoS ONE 16(3): e0249037. https://doi.org/10.1371/journal.pone.0249037

Editor: Abdallah M. Samy, Faculty of Science, Ain Shams University (ASU), EGYPT

Received: July 14, 2020; Accepted: March 9, 2021; Published: March 25, 2021

Copyright: © 2021 Jeffrey Chu. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: The raw data files for the incidence of COVID-19 in Italy and Spain are available from the following links:

- https://github.com/pcm-dpc/COVID-19

- https://github.com/datadista/datasets/tree/master/COVID%2019

Funding: The author(s) received no specific funding for this work.

Competing interests: The authors have declared that no competing interests exist.

Introduction

The novel coronavirus (COVID-19) was widely reported to have first been detected in Wuhan (Hubei province, China) in December 2019. After the initial outbreak, COVID-19 continued to spread to all provinces in China and very quickly spread to other countries within and outside of Asia. At present, over 45 million cases of infection have been confirmed in over 180 countries, with in excess of 1 million deaths [1]. The virus behind this disease is very similar to the severe acute respiratory syndrome (SARS) virus that took hold of Asia in 2003. However, it has been shown to spread much more easily, and there is currently no vaccine.

Since the first confirmed cases were reported in China, much of the literature has focused on the outbreak in China, including the transmission of the disease, the risk factors of infection, and the biological properties of the virus (see, for example, key literature such as [2–6]). However, more recent literature has started to cover an increasing number of regions outside of China.

For example, studies covering the wider Asia region include the following: investigations into the outbreak on board the Diamond Princess cruise ship in Japan, using a Bayesian framework with a Hamiltonian Monte Carlo algorithm [7]; estimation of the ascertainment rate in Japan using a Poisson process [8]; modelling the evolution of the basic and effective reproduction numbers in South Korea using Susceptible-Infected-Susceptible models [9] and generalised growth models with varying growth rates [10]; modelling the basic reproduction number in India with a classical Susceptible-Exposed-Infectious-Recovered-type compartmental model [11]; and forecasting numbers of cases in Indian states using deep learning-based models [12].

Analyses of North and South America have also used similar classical methods. For example, [13] model the progression of the outbreak in the United States until the end of 2021 with the simple Susceptible-Infected-Recovered model, and [14] predict epidemic trends in Brazil and Peru using a logistic growth model and machine learning techniques. Other studies have taken different approaches, including the following: analysis of the spatial variability of the incidence in the United States using spatial lag and error models, and geographically weighted regression [15]; estimation of the number of deaths in the United States using a modified logistic fault-dependent detection model [16]; estimation of prevalence and infection rates across different states in the United States using a sample selection model [17]; and investigation of the relationship between social media communication and the incidence in Colombia using non-linear regression models.

Focusing on Africa, [18] simulate and predict the spread of the disease in South Africa, Egypt, Algeria, Nigeria, Senegal, and Kenya using a modified Susceptible-Exposed-Infectious-Recovered model; [19] apply a six-compartmental model to the transmission in South Africa; [20] predict the spread of the disease in West Africa using a deterministic Susceptible-Exposed-Infectious-Recovered model; [21] implement Autoregressive Integrated Moving Average models to forecast the prevalence of COVID-19 in East Africa; and [22] predict the spread of the disease in Nigeria from travel history and personal contact through ordinary least squares regression. Elsewhere, [23] use logistic growth and Susceptible-Infected-Recovered models to generate real-time forecasts of daily confirmed cases in Saudi Arabia.