Distributed Systems Design - Netflix

Feb 16, 2021 / Karim Elatov / netflix, spark, kafka, elasticsearch, cdn

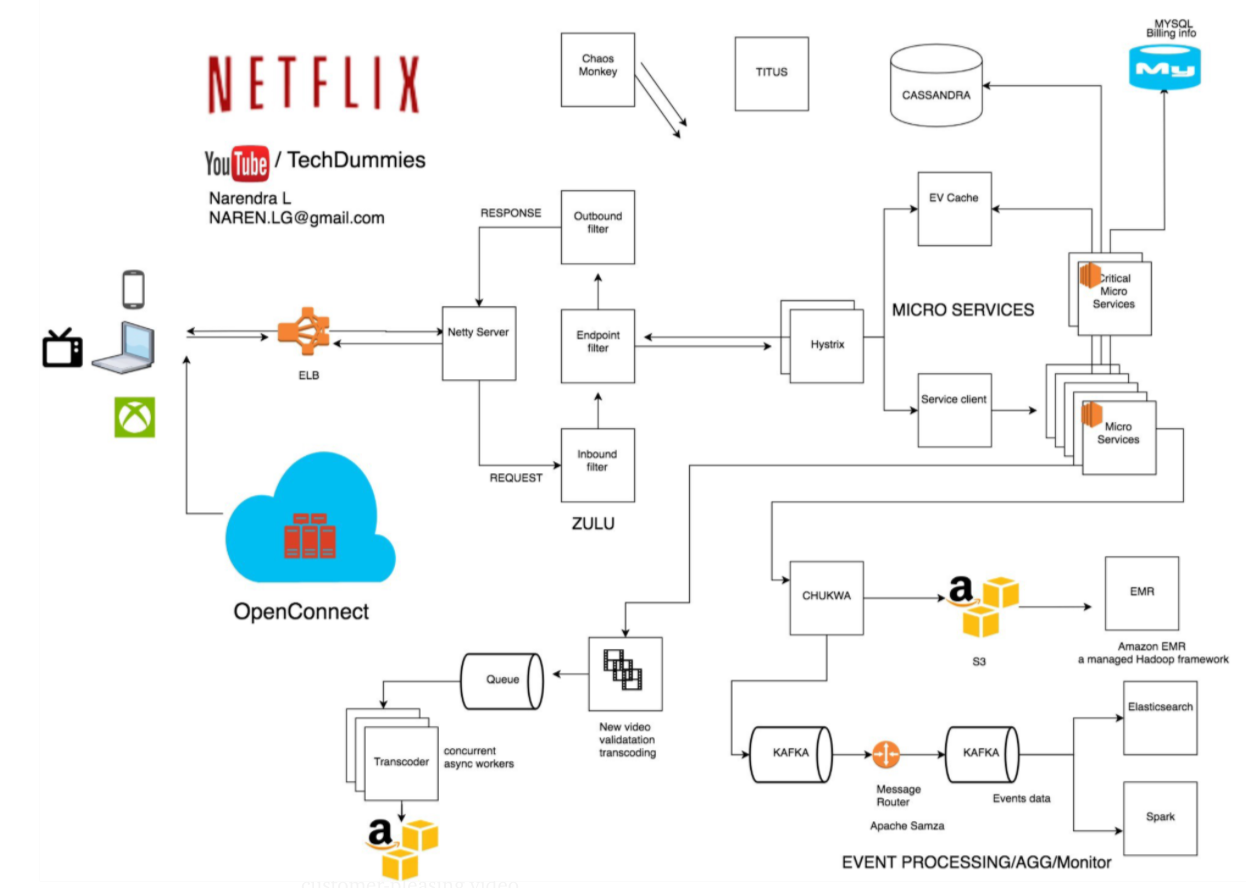

Let’s try to cover some of the components of the Netflix architecture. As with the Twitter post, I ran into a bunch of resources on this topic:

- Designing Youtube or Netflix

- Netflix: What Happens When You Press Play?

- NETFLIX system design

And here are some nice YouTube Videos:

- NETFLIX System design, software architecture for netflix

- Netflix System Design, Media Streaming Platform

- Netflix System Design, YouTube System Design

And here are some nice diagrams I ran into:

I am going to break this down into the parts that stood out to me.

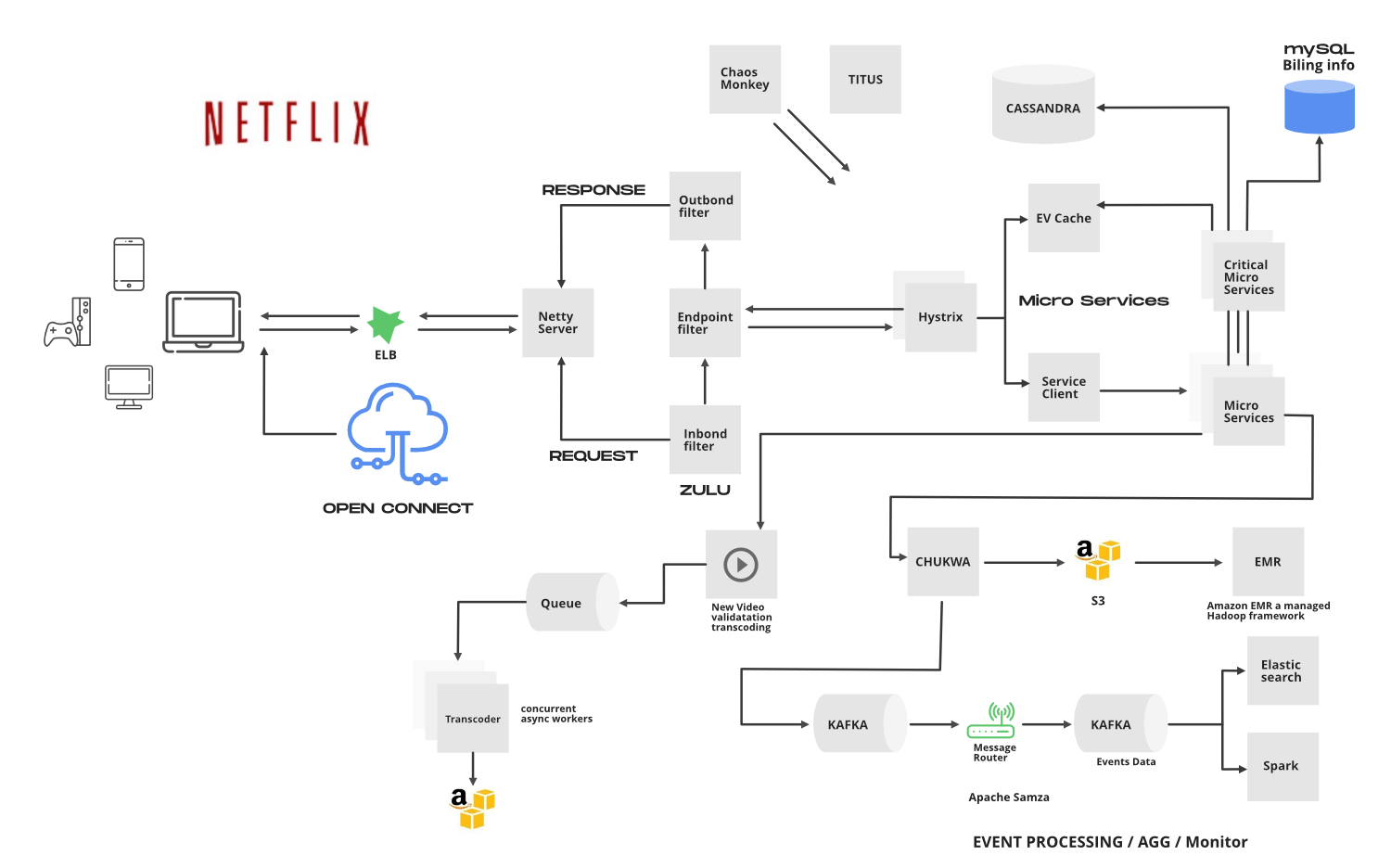

Uploading a Video

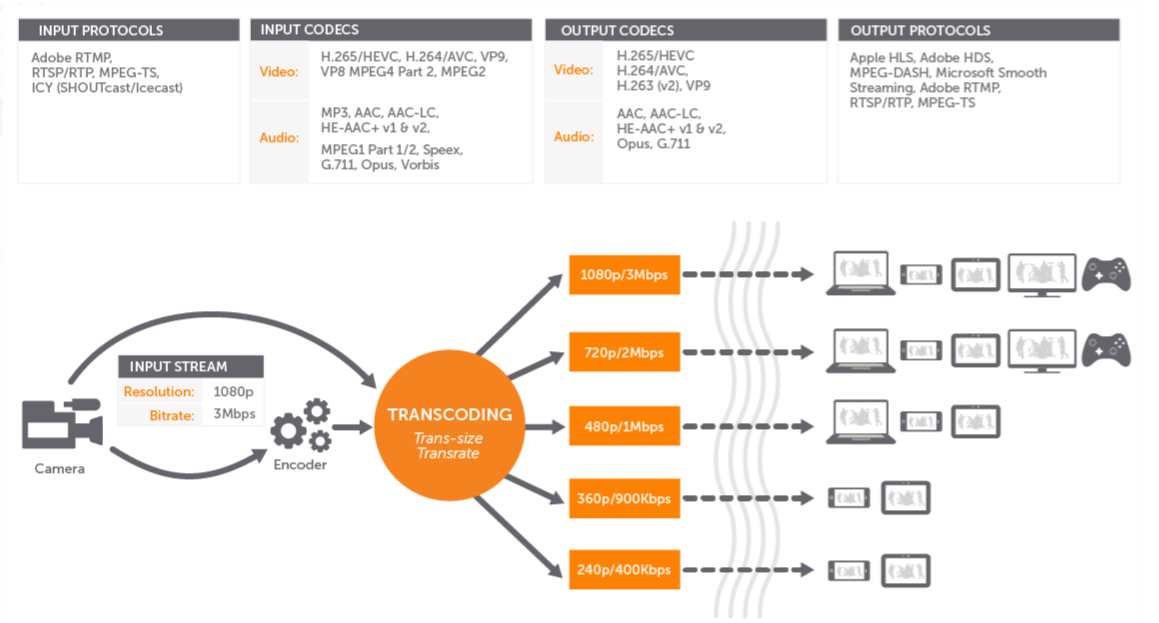

When a user uploads a video, a lot happens in the background. What stood out to me is that the video is first split into many chunks, and each chunk is then transcoded (encoded) into multiple formats. This prepares the content for playback on many different players and at different resolutions, chosen based on the available bandwidth.

At the same time, other processes are kicked off to update the analytics pipeline and the search databases. At the end, the chunk is written to the cache closest to the user, but more on that later.
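To make the chunk-then-transcode idea concrete, here is a minimal sketch in Python. It is not Netflix's pipeline: the rendition names, the chunk size, and the `transcode` stand-in (which only tags bytes instead of encoding video) are all assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

# Hypothetical target renditions; a real encoding ladder would be far richer.
RENDITIONS = ["240p", "720p", "1080p"]


def split_into_chunks(data: bytes, chunk_size: int) -> list[bytes]:
    """Split the raw upload into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def transcode(chunk_index: int, chunk: bytes, rendition: str) -> tuple[int, str, bytes]:
    """Stand-in for a real encoder: it only prefixes the bytes with the rendition name."""
    return chunk_index, rendition, rendition.encode() + b":" + chunk


def process_upload(data: bytes, chunk_size: int = 4) -> dict[tuple[int, str], bytes]:
    """Fan out one transcode job per (chunk, rendition) pair and collect the results."""
    chunks = split_into_chunks(data, chunk_size)
    jobs = list(product(enumerate(chunks), RENDITIONS))
    with ThreadPoolExecutor(max_workers=4) as pool:
        results = pool.map(lambda job: transcode(job[0][0], job[0][1], job[1]), jobs)
        return {(index, rendition): encoded for index, rendition, encoded in results}


outputs = process_upload(b"abcdefghij", chunk_size=4)
print(len(outputs))  # 3 chunks x 3 renditions = 9 encoded pieces
```

Because every (chunk, rendition) pair is an independent job, the work can be spread across as many workers as are available, which is the point of chunking before encoding.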

Spark and Elasticsearch

Spark and Elasticsearch are used for analytics and search capabilities. Spark focuses on processing data in parallel across a cluster of compute nodes. Spark is often compared with Hadoop; one of the differences is that Hadoop MapReduce reads and writes intermediate data to files on HDFS, while Spark keeps data in RAM where it can, using an abstraction known as an RDD (Resilient Distributed Dataset). Both products process large amounts of data in a distributed manner, and the output can feed analytics.

Elasticsearch is a search engine based on Apache Lucene. It is often classed as a NoSQL database: it stores data as schema-flexible JSON documents rather than relational tables, and while you can’t run complex relational queries on it (multi-table joins, for example) like with a typical RDBMS, you can shard the data across multiple nodes a lot more easily. There are many NoSQL databases out there; unlike most of them, Elasticsearch has a strong focus on search capabilities.

Since a couple of processes happen at the same time, how do they all get started? Whenever you are streaming new data and want to communicate across multiple products or processes, a queue comes to mind. Kafka is a distributed streaming platform used to publish and subscribe to streams of records. Kafka replicates topic log partitions to multiple servers. It is used for real-time streams of data, to collect big data, or to do real-time analysis (or both). While Kafka is a great choice for a distributed message queue, it comes with a caveat: ordering. As with any messaging queue, there is a producer and a consumer (subscriber), and they use a topic to communicate. When the topic is split into multiple partitions (sharded) for scalability, Kafka preserves order only within a single partition. Messages that land in different partitions have no guaranteed order relative to each other, so a consumer can’t tell in which order the producer sent them. There are a couple of pretty cool posts on this: “A Visual Understanding to Ensuring Your Kafka Data is (Literally) in Order” and “Does Kafka really guarantee the order of messages?”
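A common way to live with the ordering caveat is to give related messages the same key, so they all land in the same partition. The sketch below simulates that in plain Python without a Kafka client. The partition count, the event names, and the CRC32-based `partition_for` function are assumptions for illustration; Kafka's own default partitioner uses a different hash.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 3  # assumed partition count for the illustration


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition with a stable hash (a stand-in for Kafka's partitioner)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def publish(events: list[tuple[str, str]]) -> dict[int, list[tuple[str, str]]]:
    """Append each (key, value) event to the log of the partition its key maps to."""
    partitions: dict[int, list[tuple[str, str]]] = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key)].append((key, value))
    return dict(partitions)


events = [
    ("video-1", "uploaded"),
    ("video-2", "uploaded"),
    ("video-1", "chunked"),
    ("video-2", "chunked"),
    ("video-1", "transcoded"),
]
logs = publish(events)

# All events for one key share a partition, so their relative order is preserved.
video_1_log = [value for key, value in logs[partition_for("video-1")] if key == "video-1"]
print(video_1_log)  # ['uploaded', 'chunked', 'transcoded']
```

Events for different keys may still interleave differently across partitions, which is exactly the case where no global order can be assumed.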

Whenever you have a microservice architecture (where each service is responsible for a small action), a message queue comes in handy to coordinate between all the services. Since Netflix uses a lot of microservices to scale the processing of the data, Kafka plays a major role in making sure the overall pipeline completes successfully.

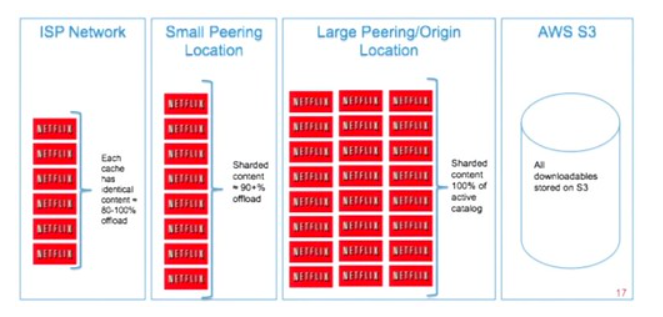

CDN (Content Delivery Network)

After the media file is chunked and transcoded into many pieces, it is placed into Amazon’s blob storage (AWS S3).

Blob storage is a data store for binary large objects (BLOBs). A lot of cloud providers offer one: AWS S3, GCP Cloud Storage, and Azure Blob Storage. They offer the performance and scalability of the cloud along with security and sharing capabilities. You can store large files, which the service stores redundantly across multiple locations to provide durability. Since AWS S3 was available across multiple regions, it was a natural fit for Netflix.

OCA (Open Connect Appliance)

While there are multiple CDNs (Fastly, Akamai, and Cloudflare), Netflix decided to create its own appliance to cache the media files from the S3 buckets. They placed these appliances in ISPs’ datacenters so they can be closer to the users.

CDN Caching Capabilities

A content delivery network (CDN) is a globally distributed network of servers that serves content from locations closer to the user. Generally, static files such as HTML/CSS/JS, photos, and videos are served from a CDN. There are a couple of concepts here: a Cache Hit (the data you requested is already in the cache) and a Cache Miss (the data you requested is not in the cache, so the cache has to go to the backend to retrieve it and then store it). Usually the first request for an item ends up as a Cache Miss. You can also set a TTL (time to live) after which the data expires from the cache, which frees up space for other data to be cached.
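The hit, miss, and TTL behavior can be sketched with a small read-through cache. This is an illustrative toy, not how any particular CDN is implemented; the class name `TTLCache`, the `fetch_from_origin` callback, and the injectable `clock` are hypothetical names chosen for this example.

```python
import time
from typing import Callable


class TTLCache:
    """Read-through cache: on a miss it fetches from the origin and stores the result."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], bytes],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: dict[str, tuple[float, bytes]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1  # cache hit: served without touching the origin
            return entry[1]
        # Cache miss (never cached, or the TTL has expired): go to the origin.
        self.misses += 1
        value = self._fetch(key)
        self._store[key] = (now + self._ttl, value)
        return value


# A fake clock makes the expiry visible without sleeping.
fake_now = [0.0]
cache = TTLCache(lambda key: key.upper().encode(), ttl_seconds=10, clock=lambda: fake_now[0])
cache.get("chunk-1")   # first request: miss
cache.get("chunk-1")   # second request: hit
fake_now[0] = 11.0
cache.get("chunk-1")   # TTL elapsed: miss again
print(cache.hits, cache.misses)  # 1 2
```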

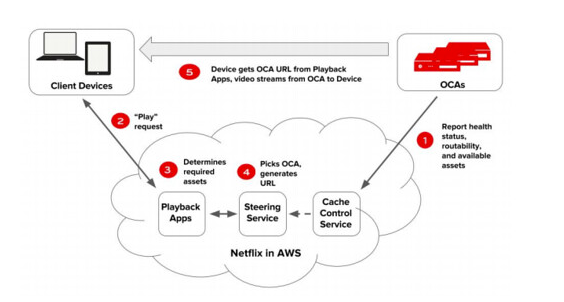

Client playing a video

Now that we have all the components in place, all we have to do is forward the client to the cache location closest to it. This is handled by another microservice called the steering service: it figures out which location is best and then forwards the user there.
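The post does not say how the steering service decides which location is "best", so the following is only a guess at the shape of such a decision. It assumes, purely for illustration, that each candidate location reports a latency estimate, a health flag, and whether it already holds the requested title; `CacheLocation` and `pick_location` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CacheLocation:
    name: str
    latency_ms: float   # assumed latency estimate from the client to this location
    healthy: bool       # assumed health signal
    has_title: bool     # assumed: whether the requested title is cached here


def pick_location(candidates: list[CacheLocation]) -> Optional[CacheLocation]:
    """Return the healthy location holding the title with the lowest latency, if any."""
    usable = [c for c in candidates if c.healthy and c.has_title]
    return min(usable, key=lambda c: c.latency_ms, default=None)


candidates = [
    CacheLocation("isp-local", latency_ms=5, healthy=False, has_title=True),
    CacheLocation("metro-exchange", latency_ms=12, healthy=True, has_title=True),
    CacheLocation("regional", latency_ms=40, healthy=True, has_title=True),
]
best = pick_location(candidates)
print(best.name if best else "fall back to origin")  # metro-exchange
```

Returning `None` when no location qualifies leaves the fallback (for example, going back to the origin storage) to the caller.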

How Netflix Became A Master of DevOps? An Exclusive Case Study

Find out how Netflix excelled at DevOps without even thinking about it and became a gold standard in the DevOps world.

Table of Contents

- Netflix’s move to the cloud

- Netflix’s Chaos Monkey and the Simian Army

- Netflix’s container journey

- Netflix’s “operate what you build” culture

- Lessons we can learn from Netflix’s DevOps strategy

- How Simform can help

Even though Netflix is an entertainment company, it has left many top tech companies behind in terms of tech innovation. With its single video-streaming application, Netflix has significantly influenced the technology world with its world-class engineering efforts, culture, and product development over the years.

One such practice that Netflix is a fantastic example of is DevOps. Their DevOps culture has enabled them to innovate faster, leading to many business benefits. It also helped them achieve near-perfect uptime, push new features faster to the users, and increase their subscribers and streaming hours.

With nearly 214 million subscribers worldwide and streaming in over 190 countries, Netflix is globally the most used streaming service today. Much of this success is owed to its ability to adopt newer technologies and to a DevOps culture that allows it to innovate quickly to meet consumer demands and enhance user experiences. But Netflix doesn’t think in terms of DevOps.

So how did they become the poster child of DevOps? In this case study, you’ll learn about how Netflix organically developed a DevOps culture with out-of-the-box ideas and how it benefited them.

Simform is a leading DevOps consulting and implementation company, helping businesses build innovative products that meet dynamic user demands efficiently. To grow your business with DevOps, contact us today!

Netflix’s move to the cloud

It all began with the worst outage in Netflix’s history when they faced a major database corruption in 2008 and couldn’t ship DVDs to their members for three days. At the time, Netflix had roughly 8.4 million customers and one-third of them were affected by the outage. It prompted Netflix to move to the cloud and give their infrastructure a complete makeover. Netflix chose AWS as its cloud partner and took nearly seven years to complete its cloud migration.

Netflix didn’t just forklift the systems and dump them into AWS. Instead, it chose to rewrite the entire application in the cloud to become truly cloud-native, which fundamentally changed the way the company operated. In the words of Yury Izrailevsky, Vice President, Cloud and Platform Engineering at Netflix:

“We realized that we had to move away from vertically scaled single points of failure, like relational databases in our datacenter, towards highly reliable, horizontally scalable, distributed systems in the cloud.”

As a significant part of their transformation, Netflix converted its monolithic, data center-based Java application into a cloud-based Java microservices architecture. It brought about the following changes:

- Denormalized data model using NoSQL databases

- Enabled teams at Netflix to be loosely coupled

- Allowed teams to build and push changes at the speed that they were comfortable with

- Centralized release coordination and multi-week hardware provisioning cycles gave way to continuous delivery

- Engineering teams made independent decisions using self-service tools

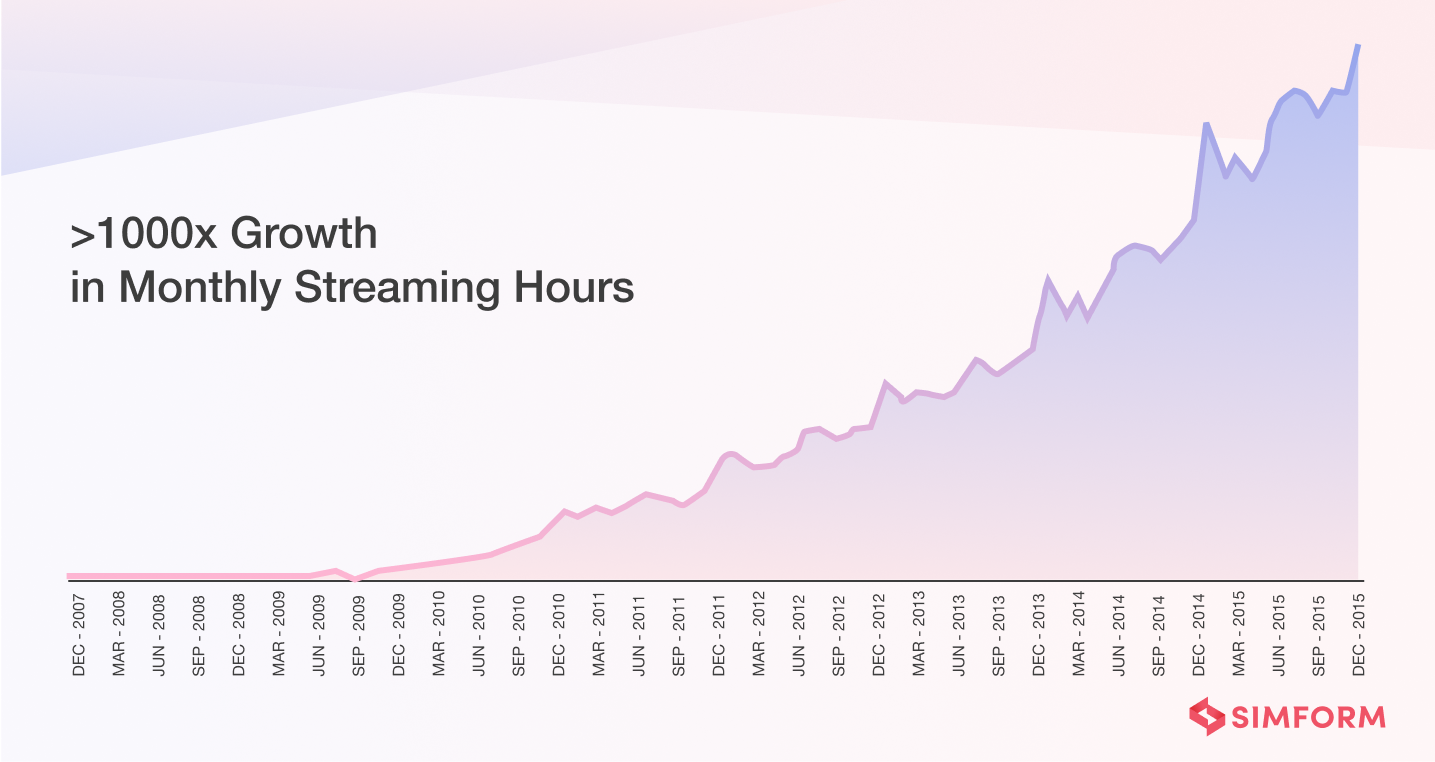

As a result, it helped Netflix accelerate innovation and stumble upon the DevOps culture. Netflix also gained eight times as many subscribers as it had in 2008. And Netflix’s monthly streaming hours also grew a thousand times from Dec 2007 to Dec 2015.

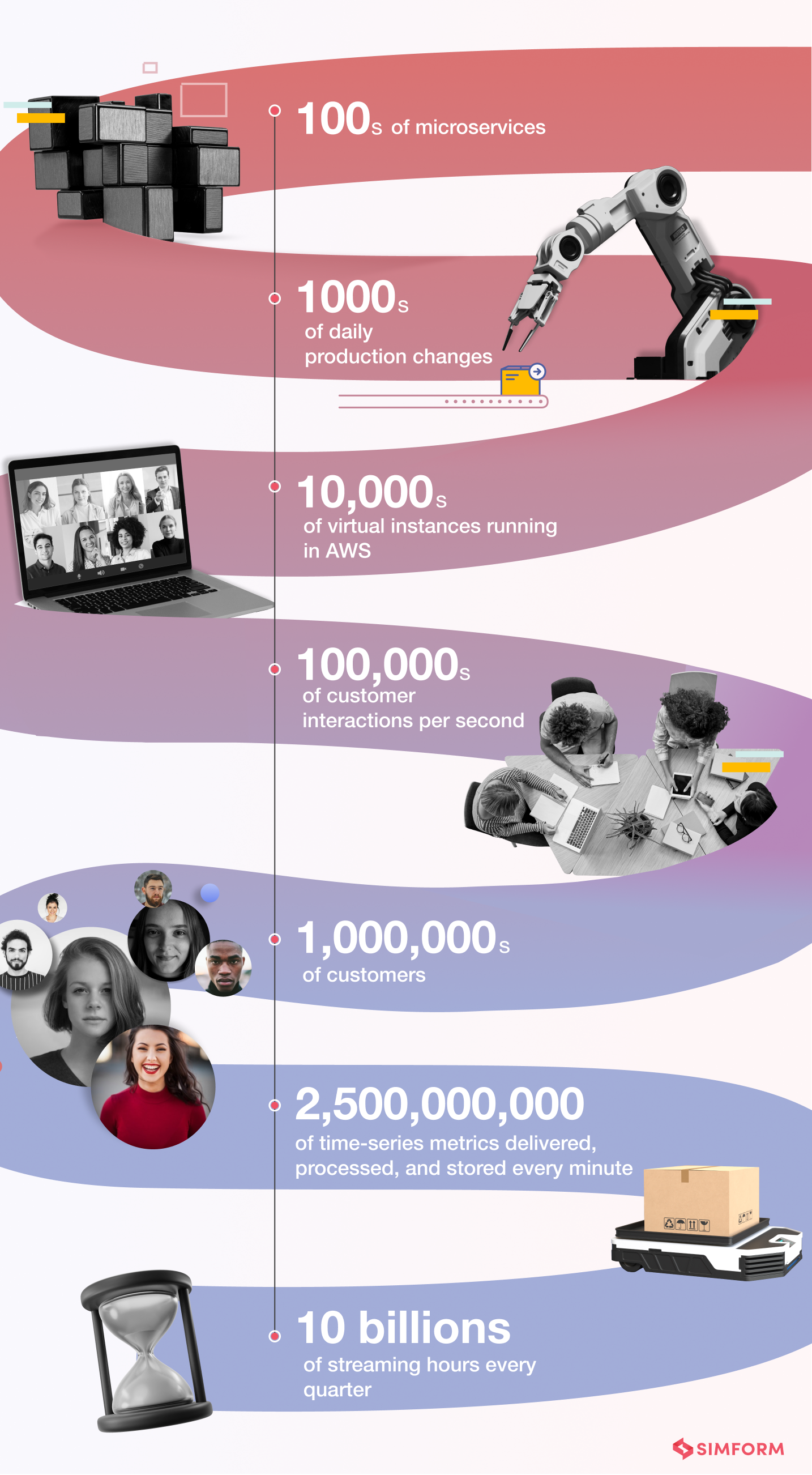

After completing their cloud migration to AWS by 2016, Netflix had:

And it handled all of the above with 0 Network Ops Centers and some 70 operations engineers, who were all software engineers focusing on writing tools that enabled other software developers to focus on things they were good at.

Migrating to the cloud made Netflix resilient to the kind of outages it faced in 2008. But they wanted to be prepared for any unseen errors that could cause them equivalent or worse damage in the future.

Engineers at Netflix perceived that the best way to avoid failure was to fail constantly. And so they set out to make their cloud infrastructure more safe, secure, and available the DevOps way – by automating failure and continuous testing.

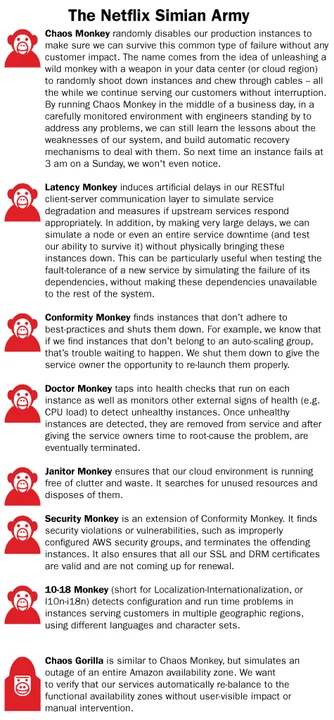

Chaos Monkey

Netflix created Chaos Monkey, a tool to constantly test its ability to survive unexpected outages without impacting the consumers. Chaos Monkey is a script that runs continuously in all Netflix environments, randomly killing production instances and services in the architecture. It helped developers:

- Identify weaknesses in the system

- Build automatic recovery mechanisms to deal with the weaknesses

- Test their code in unexpected failure conditions

- Build fault-tolerant systems on a day-to-day basis
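The idea behind Chaos Monkey can be illustrated with a toy simulation: terminate a random instance, then check that an automatic recovery mechanism restores capacity. This is not the real Chaos Monkey. The `InstanceGroup` class, its `heal` method, and `unleash_monkey` are hypothetical names, and no cloud API is involved.

```python
import random


class InstanceGroup:
    """Toy model of a group of identical service instances with a desired size."""

    def __init__(self, name: str, desired_size: int) -> None:
        self.name = name
        self.desired_size = desired_size
        self.instances = [f"{name}-{i}" for i in range(desired_size)]
        self._next_id = desired_size

    def terminate(self, instance_id: str) -> None:
        self.instances.remove(instance_id)

    def heal(self) -> None:
        """Automatic recovery: launch replacements until the group is back at its desired size."""
        while len(self.instances) < self.desired_size:
            self.instances.append(f"{self.name}-{self._next_id}")
            self._next_id += 1


def unleash_monkey(group: InstanceGroup, rng: random.Random) -> str:
    """Terminate one randomly chosen instance and return its id."""
    victim = rng.choice(group.instances)
    group.terminate(victim)
    return victim


group = InstanceGroup("api", desired_size=3)
victim = unleash_monkey(group, random.Random())
print(f"terminated {victim}, {len(group.instances)} instances still serving")
group.heal()
print(f"after recovery: {len(group.instances)} instances")
```

The useful property to test is that the service keeps running on the surviving instances between the termination and the recovery.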

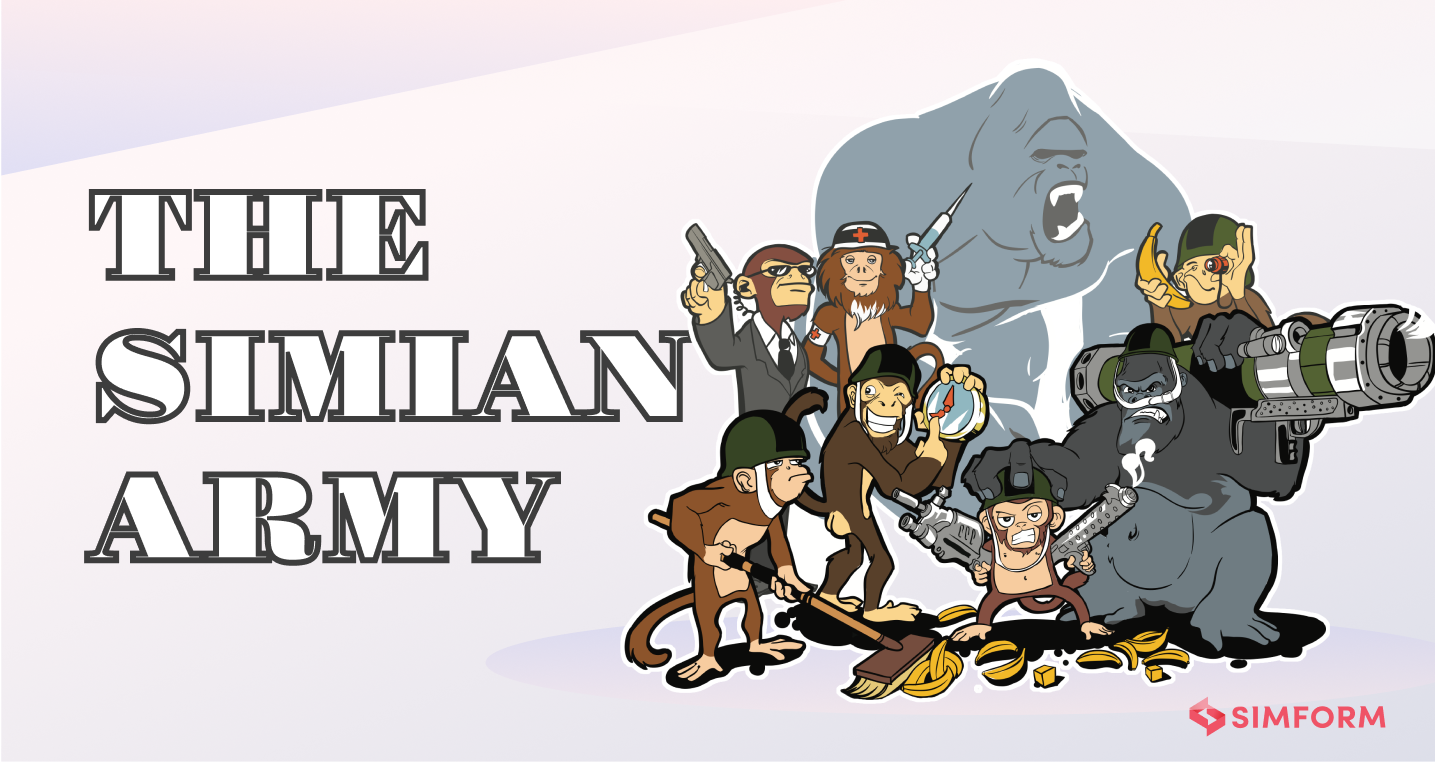

The Simian Army

After their success with Chaos Monkey, Netflix engineers wanted to test their resilience to all sorts of inevitable failures and detect abnormal conditions. So they built the Simian Army, a virtual army of tools discussed below.

- Latency Monkey

It creates false delays in the RESTful client-server communication layers, simulating service degradation and checking if the upstream services respond correctly. Moreover, creating very large delays can simulate an entire service downtime without physically bringing it down and testing the ability to survive. The tool was particularly useful to test new services by simulating the failure of dependencies without affecting the rest of the system.

- Conformity Monkey

It looks for instances that do not adhere to the best practices and shuts them down, giving the service owner a chance to re-launch them properly.

- Doctor Monkey

It detects unhealthy instances by tapping into health checks running on each instance and also monitors other external health signs (such as CPU load). The unhealthy instances are removed from service and terminated after service owners identify the root cause of the problem.

- Janitor Monkey

It ensures the cloud environment runs without clutter and waste. It also searches for unused resources and discards them.

- Security Monkey

An extension of Conformity Monkey, it identifies security violations or vulnerabilities (e.g., improperly configured AWS security groups) and eliminates the offending instances. It also ensures that SSL (Secure Sockets Layer) and DRM (Digital Rights Management) certificates are valid and not due for renewal.

- 10-18 Monkey

Short for Localization-Internationalization, it identifies configuration and runtime issues in instances serving users in multiple geographic locations with different languages and character sets.

- Chaos Gorilla

Like Chaos Monkey, the Gorilla simulates an outage of a whole Amazon availability zone to verify if the services automatically re-balance to the functional availability zones without manual intervention or any visible impact on users.
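Of the tools above, the Latency Monkey idea is the easiest to sketch in a few lines: wrap a dependency call with an artificial delay and check that the caller degrades gracefully instead of hanging. The helper names `with_injected_latency` and `call_with_timeout`, the delays, and the fallback value are assumptions for illustration, not the real tool's interface.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout


def with_injected_latency(func, delay_seconds: float):
    """Wrap a dependency call so that every invocation is artificially delayed."""
    def wrapper(*args, **kwargs):
        time.sleep(delay_seconds)
        return func(*args, **kwargs)
    return wrapper


def call_with_timeout(func, timeout_seconds: float, fallback):
    """Call func in a worker thread; return fallback if it does not finish in time."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(func)
    try:
        return future.result(timeout=timeout_seconds)
    except FutureTimeout:
        return fallback
    finally:
        # Do not block on the slow call; the worker finishes in the background.
        pool.shutdown(wait=False)


def recommendations():
    return ["fresh recommendations"]


slow_dependency = with_injected_latency(recommendations, delay_seconds=0.3)
result = call_with_timeout(slow_dependency, timeout_seconds=0.05, fallback=["cached recommendations"])
print(result)  # ['cached recommendations']
```

Raising the injected delay far above the timeout simulates a full outage of the dependency without actually taking it down.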

Today, Netflix still uses Chaos Engineering and has a dedicated team for chaos experiments called the Resilience Engineering team (earlier called the Chaos team).

In a way, Simian Army incorporated DevOps principles of automation, quality assurance, and business needs prioritization. As a result, it helped Netflix develop the ability to deal with unexpected failures and minimize their impact on users.

On 21st April 2011, AWS experienced a large outage in the US East region, but Netflix’s streaming ran without any interruption. And on 24th December 2012, AWS faced problems in Elastic Load Balancer (ELB) services, but Netflix didn’t experience an immediate blackout. Netflix’s website was up throughout the outage, supporting most of their services and streaming, although with higher latency on some devices.

Netflix had a cloud-native, microservices-driven VM architecture that was amazingly resilient, CI/CD enabled, and elastically scalable. It was more reliable, with no SPoFs (single points of failure) and small manageable software components. So why did they adopt container technology? The major factors that prompted Netflix’s investment in containers are:

- Container images used in local development are very similar to those run in production. This end-to-end packaging allows developers to build and test applications easily in production-like environments, reducing development overhead.

- Container images help build application-specific images easily.

- Containers are lightweight, allowing building and deploying them faster than VM infrastructure.

- Containers only have what a single application needs, are smaller and densely packed, which reduces overall infrastructure cost and footprint.

- Containers improve developer productivity, allowing them to develop, deploy, and innovate faster.

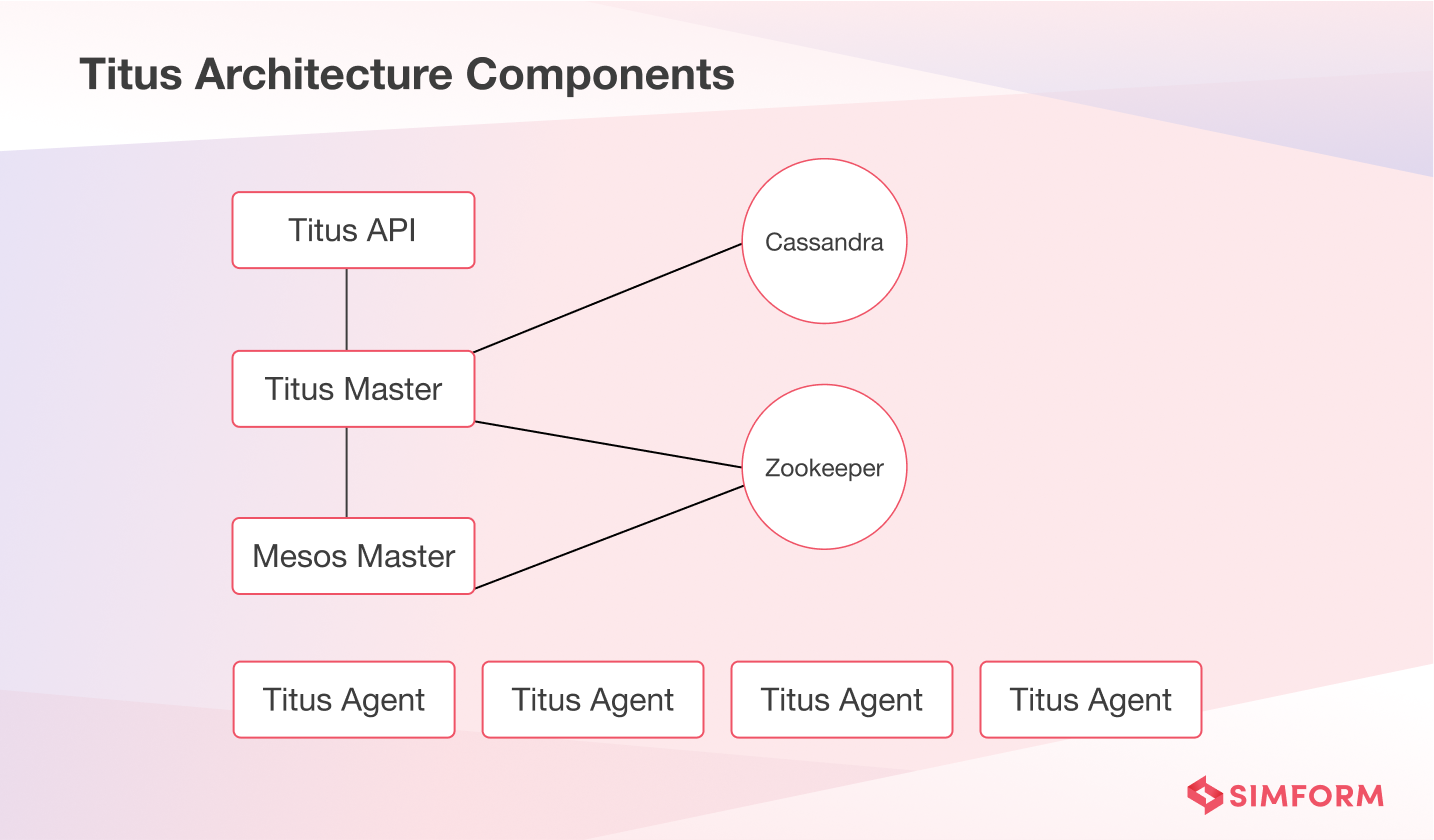

Moreover, Netflix teams had already started using containers and seen tangible benefits. But they faced some challenges such as migrating to containers without refactoring, ensuring seamless connectivity between VMs and containers, and more. As a result, Netflix designed a container management platform called Titus to meet its unique requirements.

Titus provided a scalable and reliable container execution solution to Netflix and seamlessly integrated with AWS. In addition, it enabled easy deployment of containerized batches and service applications.

Titus served as a standard deployment unit and a generic batch job scheduling system. It helped Netflix expand support to growing batch use cases.

- Batch users could also put together sophisticated infrastructure quickly and pack larger instances across many workloads efficiently. Batch users could immediately schedule locally developed code for scaled execution on Titus.

- Beyond batch, service users benefited from Titus with simpler resource management and local test environments consistent with production deployment.

- Developers could also push new versions of applications faster than before.

Overall, Titus deployments took one or two minutes, compared with tens of minutes earlier. As a result, both batch and service users could experiment locally, test quickly, and deploy with greater confidence than before.

“The theme that underlies all these improvements is developer innovation velocity.”

-Netflix tech blog

This velocity enabled Netflix to deliver features to customers quickly, making containers extremely important for their business.

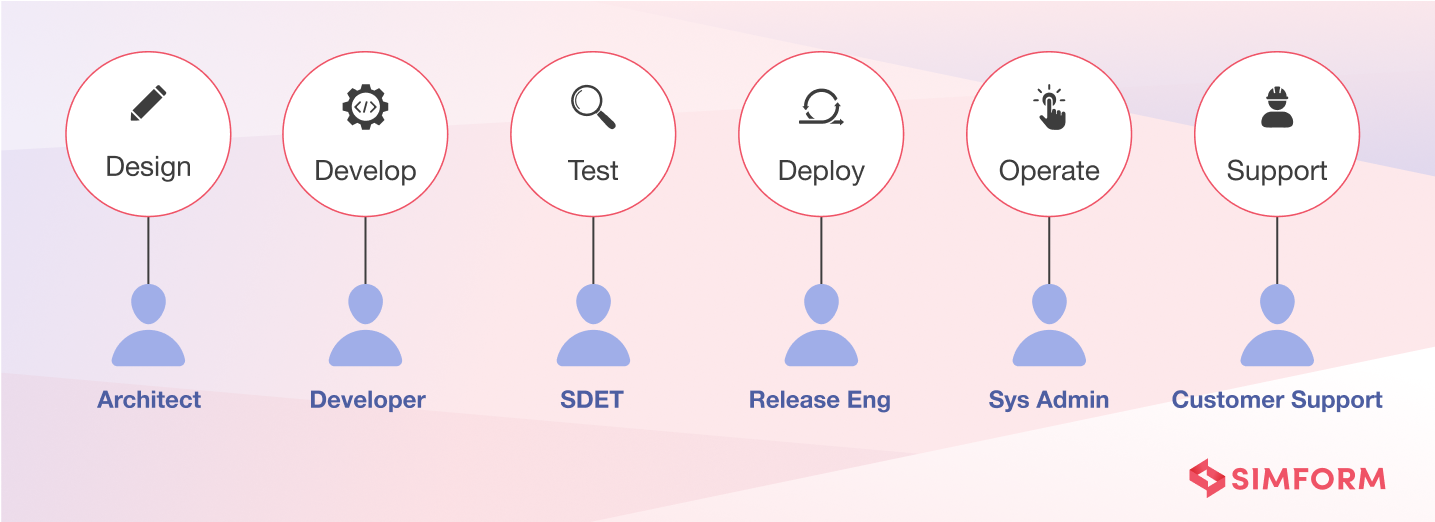

Netflix invests and experiments significantly in improving development and operations for the engineering teams. But before Netflix adopted the “Operate what you build” model, it had siloed teams. The Ops teams focused on the deploy, operate, and support parts of the software life cycle, and developers handed off the code to the Ops team for deployment and operation. So each stage in the SDLC was owned by a different person and looked like this:

The specialized roles created efficiencies within each segment but created inefficiencies across the entire SDLC. The issues that they faced were:

- Individual silos that slowed down end-to-end progress

- Added communication overhead, bottlenecks and hampered effectiveness of feedback loops

- Knowledge transfers between developers and ops/SREs were lossy

- Higher time-to-detect and time-to-resolve for deployment problems

- Longer gaps between code complete and deployment, with releases taking weeks

Operate what you build

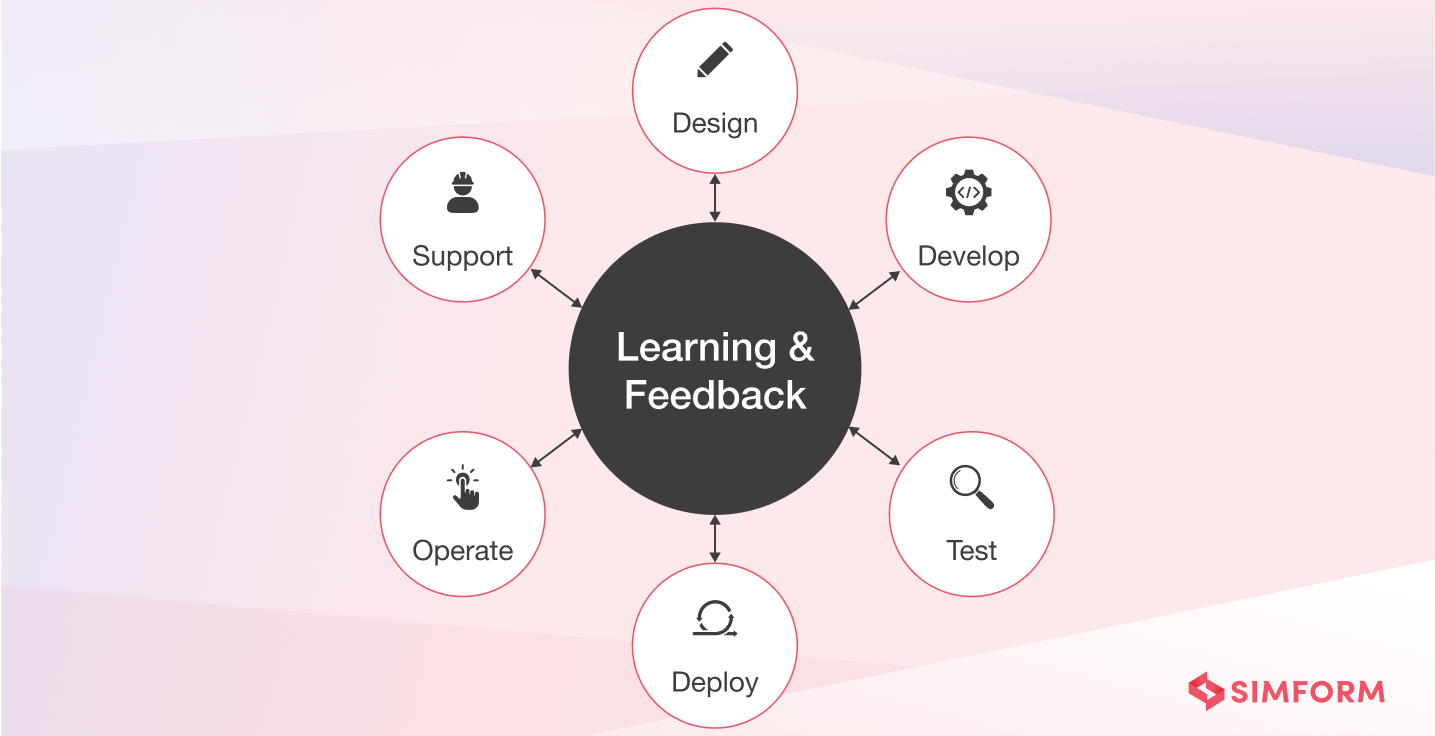

To deal with the above challenges and drawing inspiration from DevOps principles, Netflix encouraged shared ownership of the full SDLC and broke down silos. The teams developing a system were responsible for operating and supporting it. Each team owned its own deployment issues, performance bugs, alerting gaps, capacity planning, partner support, and so on.

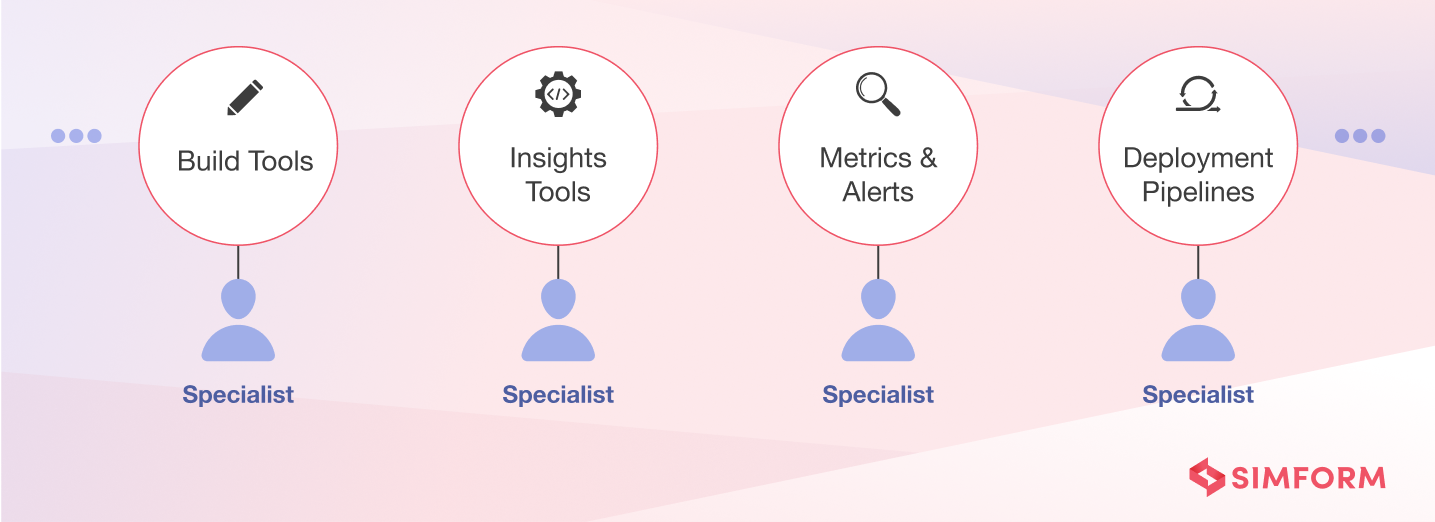

Moreover, they also introduced centralized tooling to simplify and automate common development problems across teams. When additional tooling needs arise, the central team assesses whether the needs are common across multiple development teams and, if so, builds tools for them. For problems that are too team-specific, the development team decides whether the need is important enough to solve on its own.

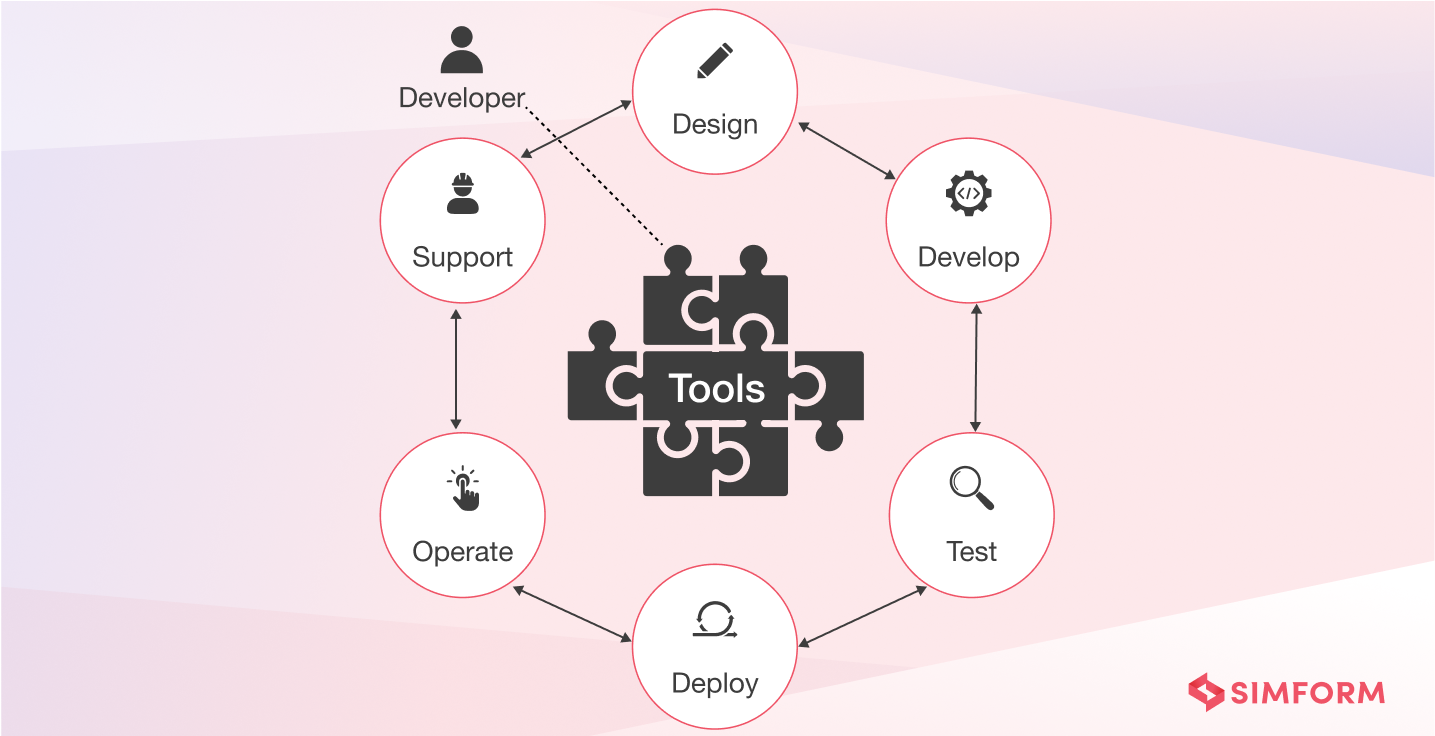

Full Cycle Developers

Combining the above ideas, Netflix built an even better model where dev teams are equipped with amazing productivity tools and are responsible for the entire SDLC, as shown below.

Netflix provided ongoing training and support in different forms (e.g., dev boot camps) to help new developers build up these skills. Easy-to-use tools for deployment pipelines also helped the developers, e.g., Spinnaker. It is a Continuous Delivery platform for releasing software changes with high velocity and confidence.

However, such models require a significant shift in the mindsets of teams/developers. To apply this model outside Netflix, you can start with evaluating what you need, count costs, and be mindful of bringing in the least amount of complexities necessary. And then attempt a mindset shift.

Netflix practices are unique to their work environment and needs and might not suit all organizations. But here are a few lessons to learn from their DevOps strategy and apply:

- Don’t build systems that say no to your developers

Netflix has no push schedules, push windows, or crucibles that developers must go through to push their code into production. Instead, every engineer at Netflix has full access to the production environment. And there are neither strict policies nor procedures that prevent them from accessing the production environment.

- Focus on giving freedom and responsibility to the engineers

Netflix aims to hire intelligent people and give them the freedom to solve problems in the way they see as best. So it doesn’t have to create artificial constraints and guardrails to predict what its developers need to do. Instead, it hires people who can balance freedom and responsibility.

- Don’t think about uptime at all costs

Netflix serves its millions of users with near-perfect uptime. But it didn’t think about uptime when it started chaos testing its environment to deal with unexpected failure.

- Prize the velocity of innovation

Netflix wants its engineers to do fun, exciting things and develop new features to delight its customers with reduced time-to-market.

- Eliminate a lot of processes and procedures

They keep an organization from moving fast. So instead, Netflix focuses on hiring people it can trust to make decisions independently.

- Practice context over control

Netflix doesn’t control and contain too much. What they do focus on is context. Managers at Netflix ensure that their teams have a quality and constant flow of context of the business, rather than controlling them.

- Don’t do a lot of required standards, but focus on enablement

Teams at Netflix can work with their choice of programming languages, libraries, frameworks, or IDEs as they see best. In addition, they don’t have to go through any research or approval processes to rewrite a portion of the system.

- Don’t do silos, walls, and fences

Netflix teams know where they fit in the ecosystem, their workings with other teams, dependents, and dependencies. There are no operational fences over which developers can throw the code for production.

- Adopt “you build it, you run it” culture

Netflix focuses on making ownership easy. So it has the “operate what you build” culture but with the enablement idea that we learned about earlier.

- Focus on data

Netflix is a data-driven, decision-driven company. It doesn’t guess or fall victim to gut instincts and traditional thinking. It invests in algorithms and systems that comb through enormous amounts of data quickly and notify engineers when there’s an issue.

- Always put customer satisfaction first

The end goal of DevOps is to be customer-driven and to focus on enhancing the user experience with every release.

- Don’t do DevOps, but focus on the culture

At Netflix, DevOps emerged as the wonderful result of their healthy culture, thinking and practices.

Get in Touch

Netflix has been a gold standard in the DevOps world for years, but copy-pasting their culture might not work for every organization. DevOps is a mindset that requires molding your processes and organizational structure to continuously improve the software quality and increase your business value. DevOps can be approached through many practices such as automation, continuous integration, delivery, deployment, continuous testing, monitoring, and more.

At Simform, our engineering teams will help you streamline the delivery and deployment pipelines with the right DevOps toolchain and skills. Our DevOps managed services will help accelerate the product life cycle, innovate faster and achieve maximum business efficiency by delivering high-quality software with reduced time-to-market.

Hiren Dhaduk

Hiren is VP of Technology at Simform with extensive experience in helping enterprises and startups streamline their business performance through data-driven innovation.

December 19, 2019

The distributed authorization system: A Netflix case study

Manish Mehta and Torin Sandall lead a deep dive into how Netflix enforces authorization policies (who can do what) at scale in its microservices ecosystem in a public cloud without introducing unreasonable latency in the request path.

Since 2008, Netflix has been on the cutting edge of cloud-based microservice deployments and is now recognized as an industry leader in building and operating cloud-native systems at scale. Like many organizations, Netflix has unique security requirements for many of its workloads. This variety requires a holistic approach to authorization to address “who can do what” across a range of resources, enforcement points, and execution environments. Manish Mehta and Torin Sandall explain how Netflix is solving authorization across the stack in cloud-native environments. You’ll learn how Netflix enforces authorization decisions at scale across various kinds of resources (e.g., HTTP APIs, gRPC methods, and SSH), enforcement points (e.g., microservices, proxies, and host-level daemons), and execution environments (e.g., VMs and containers) without introducing unreasonable latency. They then lead a deep dive into the architecture of Netflix’s distributed authorization system and demonstrate how authorization decisions can be offloaded to an open source, general purpose policy engine (Open Policy Agent).

Software Engineering Institute

DevOps Case Study: Netflix and the Chaos Monkey

C. Aaron Cois

April 30, 2015, published in.

DevOps can be succinctly defined as a mindset of molding your process and organizational structures to promote

- business value

- software quality attributes most important to your organization

- continuous improvement

As I have discussed in previous posts on DevOps at Amazon and software quality in DevOps, while DevOps is often approached through practices such as Agile development, automation, and continuous delivery, the spirit of DevOps can be applied in many ways. In this blog post, I am going to look at another seminal case study of DevOps thinking applied in a somewhat out-of-the-box way: Netflix.

Netflix is a fantastic case study for DevOps because their software-engineering process shows a fundamental understanding of DevOps thinking and a focus on quality attributes through automation-assisted process. Recall, DevOps practitioners espouse a driven focus on quality attributes to meet business needs, leveraging automated processes to achieve consistency and efficiency.

Netflix's streaming service is a large distributed system hosted on Amazon Web Services (AWS). Since there are so many components that have to work together to provide reliable video streams to customers across a wide range of devices, Netflix engineers needed to focus heavily on the quality attributes of reliability and robustness for both server- and client-side components. In short, they concluded that the only way to be comfortable handling failure is to constantly practice failing. To achieve the desired level of confidence and quality, in true DevOps style, Netflix engineers set about automating failure.

If you have ever used Netflix software on your computer, a game console, or a mobile device, you may have noticed that while the software is impressively reliable, occasionally the available streams of videos change. Sometimes, the 'Recommended Picks' stream may not appear, for example. When this happens it is because the service in AWS that serves the 'Recommended Picks' data is down. However, your Netflix application doesn't crash, it doesn't throw any errors, and it doesn't suffer from any degradation in performance. Netflix software merely omits the stream, or displays an alternate stream, with no hindered experience to the user--exhibiting ideal, elegant failure behavior.

To achieve this result, Netflix dramatically altered their engineering process by introducing a tool called Chaos Monkey, the first in a series of tools collectively known as the Netflix Simian Army. Chaos Monkey is basically a script that runs continually in all Netflix environments, causing chaos by randomly shutting down server instances. Thus, while writing code, Netflix developers are constantly operating in an environment of unreliable services and unexpected outages. This chaos not only gives developers a unique opportunity to test their software in unexpected failure conditions, but incentivizes them to build fault-tolerant systems to make their day-to-day job as developers less frustrating. This is DevOps at its finest: altering the development process and using automation to set up a system where the behavioral economics favors producing a desirable level of software quality. In response to creating software in this type of environment, Netflix developers will design their systems to be modular, testable, and highly resilient against back-end service outages from the start.
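The idea of randomly shutting down instances while clients keep working can be sketched in a few lines. This is only an illustration, not the actual Chaos Monkey tool: the `Instance` class, the `recs-N` names, and the `(row omitted)` fallback are all hypothetical.

```python
import random


class Instance:
    """A hypothetical server instance that can be up or down."""

    def __init__(self, name):
        self.name = name
        self.alive = True

    def handle(self, request):
        if not self.alive:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} served {request}"


def chaos_monkey(instances, rng):
    """Randomly shut down one running instance."""
    running = [i for i in instances if i.alive]
    if not running:
        return None
    victim = rng.choice(running)
    victim.alive = False
    return victim


def resilient_call(instances, request, fallback="(row omitted)"):
    """Try each instance in turn; degrade gracefully if all are down."""
    for inst in instances:
        try:
            return inst.handle(request)
        except ConnectionError:
            continue
    return fallback


rng = random.Random(42)  # fixed seed so the run is repeatable
fleet = [Instance(f"recs-{n}") for n in range(3)]
killed = chaos_monkey(fleet, rng)
result = resilient_call(fleet, "recommended-picks")
```

With one of three instances down, `resilient_call` still returns a normal response; only when every instance is down does the caller see the fallback, which mirrors the "omit the stream" behavior described earlier.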

In a DevOps organization, leaders must ask: What can we do to incentivize the organization to achieve the outcomes we want? How can we change our organization to drive ever-closer to our goals? To master DevOps and dramatically improve outcomes in your organization, this is the type of thinking you must encourage.

Then, most importantly, organizations must be willing to make the changes and sacrifices necessary (such as intentionally, continually causing failures) to set themselves up for success. As evidence of the value of their investment, Netflix has credited this 'chaos testing' approach with giving their systems the resiliency to handle the September 25, 2014 reboot of 10 percent of AWS servers without issue. The success of this approach inspired the creation of the Simian Army, a full suite of tools to enable chaos testing, which is now available as open source software.




System Design Netflix | A Complete Architecture


Designing Netflix is a common question in system design interview rounds. In the world of streaming services, Netflix is one of the dominant players, serving millions of viewers worldwide with a vast library of content delivered to screens of all sizes. Behind this seemingly effortless experience lies a carefully crafted system design. In this article, we will study Netflix’s system design.

Important Topics for the Netflix System Design

- Requirements of Netflix System Design

- Microservices Architecture of Netflix

- How Does Netflix Onboard a Movie/Video?

- How Netflix Balances the High Traffic Load

- Data Processing in Netflix Using Kafka And Apache Chukwa

- Elasticsearch

- Apache Spark For Movie Recommendation

- Database Design of Netflix System Design

1. Requirements of Netflix System Design

1.1. Functional Requirements

- Users should be able to create accounts, log in, and log out.

- Subscription management for users.

- Video playback with pause, resume, rewind, and fast-forward controls.

- Ability to download content for offline viewing.

- Personalized content recommendations based on user preferences and viewing history.

1.2. Non-Functional Requirements

- Low latency and high responsiveness during content playback.

- Scalability to handle a large number of concurrent users.

- High availability with minimal downtime.

- Secure user authentication and authorization.

- Intuitive user interface for easy navigation.

2. High-Level Design of Netflix System Design

We are all familiar with Netflix’s service. It offers a large catalog of movies and television content, and users pay a monthly subscription fee to access it. Netflix has 180M+ subscribers in 200+ countries.

Netflix works on two clouds: AWS and Open Connect. These two clouds work together as the backbone of Netflix, and both are responsible for delivering the best video to subscribers.

The application has three main components:

- Client: the device (user interface) used to browse and play Netflix videos, such as a TV, Xbox, laptop, or mobile phone.

- Open Connect (CDN): a CDN (content delivery network) is a network of distributed servers in different geographical locations, and Open Connect is Netflix’s own custom global CDN. It handles everything that involves video streaming. It is distributed across different locations, and once you hit the play button the video is streamed from this component to your device. So if you play a video while sitting in North America, it is served from the nearest Open Connect server instead of the origin server, which gives a faster response.

- Backend: this part handles everything that doesn’t involve video streaming (before you hit the play button), such as onboarding new content, processing videos, distributing them to servers located in different parts of the world, and managing the network traffic. Most of these processes are taken care of by Amazon Web Services.

2.1. Microservices Architecture of Netflix

Netflix’s architecture is built as a collection of services. This is known as a microservices architecture, and it powers all of the APIs needed for applications and web apps. When a request arrives at an endpoint, the endpoint calls other microservices for the required data, and these microservices can in turn request data from further microservices. After that, a complete response for the API request is sent back to the endpoint.

In a microservice architecture, services should be independent of each other. For example, the video storage service would be decoupled from the service responsible for transcoding videos.

How to make microservice architecture reliable?

- Use Hystrix (explained later in this article).

- We can separate out some critical services (or endpoints or APIs) and make them less dependent on, or independent of, other services.

- You can also make some critical services dependent only on other reliable services.

- While choosing the critical microservices you can include all the basic functionalities, like searching for a video, navigating to the videos, hitting and playing the video, etc.

- This way you can make the endpoints highly available, and even in worst-case scenarios a user will at least be able to do the basic things.

- Treat servers as interchangeable. To understand this concept, think of your servers like a herd of cows where you care about how many gallons of milk you get every day.

- If one day you notice that you’re getting less milk from a cow, then you just need to replace that cow with another cow.

- You don’t need to depend on a specific cow to get the required amount of milk. We can relate this example to our application.

- The idea is to design the service in such a way that if one of the endpoints returns an error, or doesn’t serve the request in a timely fashion, you can switch to another server and get your work done.
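The "herd of cows" idea above can be illustrated with a small pool of interchangeable servers, where an unhealthy server is simply replaced rather than repaired. The `ServerPool` class and the `server-N` naming are assumptions made for this sketch.

```python
import itertools


class ServerPool:
    """Pool of interchangeable, stateless servers."""

    def __init__(self, size):
        self._ids = itertools.count(1)
        # Maps server name -> healthy flag.
        self.servers = {self._new_name(): True for _ in range(size)}

    def _new_name(self):
        return f"server-{next(self._ids)}"

    def mark_unhealthy(self, name):
        self.servers[name] = False

    def replace_unhealthy(self):
        """Drop every unhealthy server and start a fresh one in its place."""
        bad = [name for name, healthy in self.servers.items() if not healthy]
        for name in bad:
            del self.servers[name]
            self.servers[self._new_name()] = True
        return bad


pool = ServerPool(3)
pool.mark_unhealthy("server-2")
replaced = pool.replace_unhealthy()
```

Because no server holds state that the others lack, swapping `server-2` for a new `server-4` leaves capacity unchanged.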

3. Low Level Design of Netflix System Design

3.1. How Does Netflix Onboard a Movie/Video?

Netflix receives very high-quality videos and content from the production houses, so before serving the videos to the users it does some preprocessing.

- Netflix supports more than 2200 devices and each one of them requires different resolutions and formats.

- To make the videos viewable on different devices, Netflix performs transcoding or encoding, which involves finding errors and converting the original video into different formats and resolutions.

Netflix also optimizes files for different network speeds, since a faster connection can be served a higher-quality encode. Netflix creates multiple replicas (approximately 1,100-1,200) of the same movie at different resolutions.

These replicas require a lot of transcoding and preprocessing. Netflix breaks the original video into different smaller chunks and using parallel workers in AWS it converts these chunks into different formats (like mp4, 3gp, etc) across different resolutions (like 4k, 1080p, and more). After transcoding, once we have multiple copies of the files for the same movie, these files are transferred to each and every Open Connect server which is placed in different locations across the world.
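A rough sketch of the chunk-then-transcode fan-out follows. The chunk length, the format and resolution lists, and the `transcode` stand-in (which only builds a file name instead of invoking an encoder) are all assumptions for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def split_into_chunks(duration_s, chunk_s):
    """Return (start, end) second offsets covering the whole video."""
    return [(s, min(s + chunk_s, duration_s)) for s in range(0, duration_s, chunk_s)]


def transcode(job):
    """Stand-in for a real encoder call; returns the output file name only."""
    (start, end), fmt, res = job
    return f"movie_{start:05d}-{end:05d}_{res}.{fmt}"


chunks = split_into_chunks(duration_s=95, chunk_s=30)
formats = ["mp4", "3gp"]
resolutions = ["1080p", "4k"]

# One job per (chunk, format, resolution) combination.
jobs = list(product(chunks, formats, resolutions))

# Parallel workers process the jobs independently.
with ThreadPoolExecutor(max_workers=4) as pool:
    outputs = list(pool.map(transcode, jobs))
```

Four chunks times two formats times two resolutions gives 16 independent jobs, which is why the work parallelizes well across many workers.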

Below is the step by step process of how Netflix ensures optimal streaming quality:

- When the user loads the Netflix app on a device, AWS instances first come into the picture and handle tasks such as login, recommendations, search, user history, the home page, billing, customer support, etc.

- After that, when the user hits the play button on a video, Netflix analyzes the network speed and connection stability, and then figures out the best Open Connect server near the user.

- Depending on the device and screen size, the right video format is streamed to the user’s device. While watching a video, you might have noticed that it sometimes appears pixelated and snaps back to HD after a while.

- This happens because the application keeps checking for the best Open Connect server to stream from and switches between formats (for the best viewing experience) when needed.
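The format switching described in the steps above can be approximated by picking the highest rendition that fits the measured bandwidth. The bitrate ladder and the 0.8 safety factor below are illustrative assumptions, not Netflix's actual values.

```python
# Hypothetical bitrate ladder: (label, minimum sustained bandwidth in kbit/s).
LADDER = [("240p", 300), ("480p", 1000), ("720p", 3000), ("1080p", 5000), ("4k", 16000)]


def pick_rendition(measured_kbps, safety=0.8):
    """Pick the highest rendition whose bitrate fits within a safety margin."""
    budget = measured_kbps * safety
    chosen = LADDER[0][0]  # always fall back to the lowest rung
    for label, needed in LADDER:
        if needed <= budget:
            chosen = label
    return chosen


samples = [800, 2000, 7000, 25000]
picks = [pick_rendition(kbps) for kbps in samples]
```

Re-running `pick_rendition` as new bandwidth samples arrive is what produces the visible drop to a lower quality and the later return to HD.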

User data such as searches, viewing activity, location, device, reviews, and likes is saved in AWS. Netflix uses it to build movie recommendations for users with machine learning models or Hadoop.

3.2. How Netflix Balances the High Traffic Load

1. Elastic Load Balancer

ELB in Netflix is responsible for routing the traffic to front-end services. ELB performs a two-tier load-balancing scheme where the load is balanced over zones first and then instances (servers).

- The first tier consists of basic DNS-based round-robin balancing. When a request lands on the first tier, it is balanced across one of the zones (using round-robin) that your ELB is configured to use.

- The second tier is an array of load balancer instances, and it performs the Round Robin Balancing technique to distribute the request across the instances that are behind it in the same zone.
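As a minimal sketch of the two-tier scheme, the class below rotates over zones first and then over the instances inside the chosen zone. The zone and instance names are made up, and real ELB behavior involves DNS and health checks that are not modeled here.

```python
from itertools import cycle


class TwoTierBalancer:
    """Round-robin over zones first, then over instances inside the zone."""

    def __init__(self, zones):
        # zones: mapping of zone name -> list of instance names
        self._zone_cycle = cycle(zones)
        self._instance_cycles = {z: cycle(insts) for z, insts in zones.items()}

    def route(self):
        zone = next(self._zone_cycle)                   # first tier: pick a zone
        return zone, next(self._instance_cycles[zone])  # second tier: pick an instance


lb = TwoTierBalancer({"zone-a": ["a1", "a2"], "zone-b": ["b1"]})
routes = [lb.route() for _ in range(4)]
```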

2. ZUUL

ZUUL is a gateway service that provides dynamic routing, monitoring, resiliency, and security. It provides easy routing based on query parameters, URL, and path. Let’s look at how its different parts work:

- The Netty server handles the network protocol, web server, connection management, and proxying work. When a request hits the Netty server, it is proxied to the inbound filter.

- The inbound filter is responsible for authentication, routing, or decorating the request. It then forwards the request to the endpoint filter.

- The endpoint filter is used to return a static response or to forward the request to the backend service (or origin, as we call it).

- Once it receives the response from the backend service, it sends the response to the outbound filter.

- The outbound filter is used for zipping the content, calculating metrics, or adding/removing custom headers. After that, the response is sent back to the Netty server and then received by the client.
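The inbound, endpoint, and outbound stages above can be sketched as plain functions chained together. This is not Zuul's API (Zuul is a Java project); the token check, the `/health` route, and the `X-Gateway` header are hypothetical placeholders.

```python
def inbound_filter(request):
    """Authenticate and decorate the request before routing."""
    if request.get("token") != "valid-token":  # placeholder auth check
        return {"status": 401, "body": "unauthorized"}
    request["route"] = "static" if request["path"] == "/health" else "origin"
    return None  # None means: continue down the chain


def endpoint_filter(request, origin):
    """Return a static response or forward to the backend (origin)."""
    if request["route"] == "static":
        return {"status": 200, "body": "ok"}
    return origin(request)


def outbound_filter(response):
    """Add a custom header on the way out."""
    response.setdefault("headers", {})["X-Gateway"] = "sketch"
    return response


def handle(request, origin):
    rejected = inbound_filter(request)
    response = rejected if rejected else endpoint_filter(request, origin)
    return outbound_filter(response)


def fake_origin(request):
    """Stand-in for a backend service."""
    return {"status": 200, "body": f"origin handled {request['path']}"}
```

Calling `handle({"path": "/titles", "token": "valid-token"}, fake_origin)` passes through all three stages, while a request without a valid token is rejected at the inbound stage and never reaches the origin.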

Advantages of using ZUUL:

- You can create rules that split the traffic and send different parts of it to different servers.

- Developers can also do load testing on newly deployed clusters. They can route some existing traffic to these clusters and check how much load a specific server can bear.

- You can also test new services. When you upgrade a service and want to check how it behaves with real-time API requests, you can deploy the service on one server and redirect part of the traffic to it.

- We can also filter out bad requests by setting custom rules at the endpoint filter or firewall.

3. Hystrix

In a complex distributed system a server may rely on the response of another server. Dependencies among these servers can add latency, and since any server will inevitably fail at some point, a single failure can stop the entire system from working. To solve this problem we can isolate the host application from these external failures.

The Hystrix library is designed to do this job. It helps you control the interactions between these distributed services by adding latency tolerance and fault tolerance logic. Hystrix does this by isolating points of access between the services, remote systems, and third-party libraries. The library helps to:

- Stop cascading failures in a complex distributed system.

- Control latency and failure from dependencies accessed (typically over the network) via third-party client libraries.

- Fail fast and rapidly recover.

- Fall back and gracefully degrade when possible.

- Enable near real-time monitoring, alerting, and operational control.

- Provide concurrency-aware request caching and automated batching through request collapsing.
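The "fail fast" and "fall back" behaviors in the list above are commonly implemented with a circuit breaker. The sketch below shows the general pattern only; it is not Hystrix's API (Hystrix is a Java library), and the thresholds and class name are assumptions.

```python
import time


class CircuitBreaker:
    """Open the circuit after repeated failures and serve a fallback meanwhile."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()      # open: fail fast without calling func
            self.opened_at = None      # half-open: allow one trial call
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0              # success closes the circuit again
        return result
```

While the circuit is open the failing dependency is not called at all, which is what stops one slow service from tying up its callers and cascading through the system.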

3.3. EVCache

In most applications, some amount of data is frequently used. For faster responses, this data can be cached at many endpoints and fetched from the cache instead of the origin server. This reduces the load on the origin server, but the problem is that if a cache node goes down, everything cached on it is lost, which can hurt the performance of the application.

To solve this problem Netflix has built its own custom caching layer called EVCache. EVCache is based on Memcached; it is essentially a wrapper around Memcached.

Netflix has deployed many clusters on AWS EC2 instances. Each cluster has many Memcached nodes as well as cache clients.

- The data is shared across the cluster within the same zone, and multiple copies of the cache are stored in sharded nodes.

- Every write from a client updates all the nodes in all the clusters, but a read is sent only to the nearest cluster and its nodes, not to every cluster.

- If a node is not available, the read is served from a different available node. This approach increases performance, availability, and reliability.
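The write-everywhere, read-nearest behavior can be sketched with in-memory dictionaries standing in for Memcached clusters. This is not the EVCache client API; the class, the zone names, and the `down` set are assumptions for illustration.

```python
class ReplicatedCache:
    """Writes go to every zone; reads try the nearest zone first."""

    def __init__(self, zones):
        self.replicas = {zone: {} for zone in zones}
        self.down = set()  # zones currently unavailable

    def set(self, key, value):
        for zone, store in self.replicas.items():
            if zone not in self.down:
                store[key] = value

    def get(self, key, local_zone):
        # Nearest zone first, then the remaining zones as fallbacks.
        order = [local_zone] + [z for z in self.replicas if z != local_zone]
        for zone in order:
            if zone in self.down:
                continue
            if key in self.replicas[zone]:
                return self.replicas[zone][key], zone
        return None, None


cache = ReplicatedCache(["us-east-1a", "us-east-1b"])
cache.set("profile:42", "cached-profile")
local_hit = cache.get("profile:42", "us-east-1a")
cache.down.add("us-east-1a")
fallback_hit = cache.get("profile:42", "us-east-1a")
```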

3.4. Data Processing in Netflix Using Kafka And Apache Chukwa

When you click on a video, Netflix starts processing data from various sources almost instantly. Let’s discuss how the data pipeline works at Netflix.

Netflix uses Kafka and Apache Chukwa to ingest the data produced in different parts of the system. Netflix handles roughly 500 billion events amounting to about 1.3 PB per day, and about 8 million events amounting to 24 GB per second at peak. These events include information like:
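To put the daily and peak figures on the same scale, a quick back-of-the-envelope calculation helps. It assumes decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes), which the text does not specify.

```python
SECONDS_PER_DAY = 24 * 60 * 60

events_per_day = 500e9      # ~500 billion events per day (from the text)
bytes_per_day = 1.3e15      # ~1.3 PB per day, assuming 1 PB = 10**15 bytes
peak_events_per_s = 8e6     # peak figures from the text
peak_bytes_per_s = 24e9     # assuming 1 GB = 10**9 bytes

avg_events_per_s = events_per_day / SECONDS_PER_DAY
avg_bytes_per_s = bytes_per_day / SECONDS_PER_DAY
avg_event_size = bytes_per_day / events_per_day
peak_event_size = peak_bytes_per_s / peak_events_per_s

print(f"average: {avg_events_per_s / 1e6:.1f}M events/s, {avg_bytes_per_s / 1e9:.1f} GB/s")
print(f"mean event size: {avg_event_size:.0f} B average, {peak_event_size:.0f} B at peak")
```

Under these assumptions the daily average works out to about 5.8 million events and 15 GB per second, so the quoted peak is roughly 1.4 to 1.6 times the average, with a mean event size of a few kilobytes.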

- UI activities

- Performance events

- Video viewing activities

- Troubleshooting and diagnostic events.

Apache Chukwa is an open-source data collection system for collecting logs or events from a distributed system. It is built on top of HDFS and the MapReduce framework, and it inherits Hadoop’s scalability and robustness.

- It includes a flexible toolkit to display, monitor, and analyze the results.

- Chukwa collects events from different parts of the system, and from Chukwa you can do monitoring and analysis or use the dashboard to view the events.

- Chukwa writes the events in Hadoop sequence file format to S3. After that, the Big Data team processes these S3 files and writes them to Hive in Parquet format.

- This process is called batch processing; it scans the whole data set at an hourly or daily frequency.

To upload online events to EMR/S3, Chukwa also provides traffic to Kafka (the main gate in real-time data processing).

- The routing service is responsible for moving data from the fronting Kafka to various sinks: S3, Elasticsearch, and secondary Kafka.

- Routing of these messages is done using the Apache Samza framework.

- Traffic sent by Chukwa can be full or filtered streams, so sometimes you may have to apply further filtering on the Kafka streams.

- That is why the router also moves data from one Kafka topic to a different Kafka topic.
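The routing step can be pictured as copying events from a fronting topic to sink topics, with an optional filter per sink. The sketch below uses in-memory lists instead of a real Kafka client or Samza job, and the topic names and event fields are invented for illustration.

```python
from collections import defaultdict

# Topic name -> list of events (an in-memory stand-in for Kafka topics).
topics = defaultdict(list)


def route(source, rules):
    """Copy each event from `source` to every sink topic whose predicate matches."""
    for event in topics[source]:
        for sink, predicate in rules.items():
            if predicate(event):
                topics[sink].append(event)


topics["fronting"] = [
    {"type": "ui", "user": 1},
    {"type": "playback_error", "user": 2},
    {"type": "view", "user": 1},
]
rules = {
    "s3-archive": lambda e: True,                          # full stream
    "es-errors": lambda e: e["type"] == "playback_error",  # filtered stream
}
route("fronting", rules)
```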

3.5. Elasticsearch

In recent years we have seen massive growth in the use of Elasticsearch within Netflix. Netflix runs approximately 150 Elasticsearch clusters on about 3,500 hosts. Netflix uses Elasticsearch for data visualization, customer support, and some error detection in the system.

For example:

If a customer is unable to play a video, the customer care executive resolves the issue using Elasticsearch. The playback team goes to Elasticsearch and searches for the user to find out why the video is not playing on the user’s device.

They can see all the information and events for that particular user, including what caused the error in the video stream. Elasticsearch is also used by admins to keep track of certain information, to track resource usage, and to detect signup or login problems.

3.6. Apache Spark For Movie Recommendation

Netflix uses Apache Spark and machine learning for movie recommendations. Let’s understand how it works with an example.

When you load the front page you see multiple rows of different kinds of movies. Netflix personalizes this data and decides what kind of rows or what kind of movies should be displayed to a specific user, based on the user’s historical data and preferences.

Also, for that specific user, Netflix sorts the movies available on the platform and calculates their relevance ranking for the recommendation. At Netflix, Apache Spark is used for content recommendations and personalization.

A majority of the machine learning pipelines run on these large Spark clusters. These pipelines are then used for row selection, sorting, title relevance ranking, and artwork personalization, among others.

Video Recommendation System

If a user wants to discover some content or video on Netflix, the recommendation system of Netflix helps users to find their favorite movies or videos. To build this recommendation system Netflix has to predict the user interest and it gathers different kinds of data from the users such as:

- User interaction with the service (viewing history and how the user rated other titles)

- Other members with similar tastes and preferences.

- Metadata information from the previously watched videos for a user such as titles, genre, categories, actors, release year, etc.

- The device of the user, at what time a user is more active, and for how long a user is active.

Netflix uses two different algorithms to build its recommendation system:

- Collaborative filtering: the idea of this filtering is that if two users have similar rating histories then they will behave similarly in the future.

- For example, consider two people. One person liked a movie and rated it with a good score.

- Now, there is a good chance that the other person will follow a similar pattern and rate the movie the same way.

- Content-based filtering: the idea is to filter those videos which are similar to a video the user has liked before.

- Content-based filtering is highly dependent on information about the products, such as movie title, release year, actors, and genre.

- So to implement this filtering it’s important to know the information describing each item, and some sort of user profile describing what the user likes is also desirable.
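Both ideas above can be shown on toy data. This is a minimal sketch, not Netflix's algorithm: the users, ratings, and genre tags are invented, cosine similarity is one common choice for comparing rating histories, and Jaccard overlap is one simple way to compare item metadata.

```python
from math import sqrt

ratings = {  # hypothetical user -> {title: score}
    "ana": {"Dark": 5, "Ozark": 4, "Narcos": 1},
    "ben": {"Dark": 5, "Ozark": 5, "Mindhunter": 4},
    "cy": {"Narcos": 5, "Dark": 1},
}
genres = {  # hypothetical title metadata
    "Dark": {"sci-fi", "thriller"},
    "Ozark": {"crime", "thriller"},
    "Narcos": {"crime", "drama"},
    "Mindhunter": {"crime", "thriller"},
}


def cosine(a, b):
    """Cosine similarity between two rating dictionaries."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def collaborative(user):
    """Suggest unseen titles from the most similar other user."""
    others = (u for u in ratings if u != user)
    peer = max(others, key=lambda u: cosine(ratings[user], ratings[u]))
    return sorted(t for t in ratings[peer] if t not in ratings[user])


def content_based(liked):
    """Rank other titles by genre overlap (Jaccard) with a liked title."""
    def jaccard(t):
        return len(genres[t] & genres[liked]) / len(genres[t] | genres[liked])
    return sorted((t for t in genres if t != liked), key=jaccard, reverse=True)
```

Here `collaborative("ana")` borrows from the user whose ratings look most like Ana's, while `content_based("Ozark")` ignores other users entirely and ranks titles by how much their metadata overlaps.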

4. Database Design of Netflix System Design

Netflix uses two different databases, MySQL (RDBMS) and Cassandra (NoSQL), for different purposes.

4.1. EC2 Deployed MySQL

Netflix saves data like billing information, user information, and transaction information in MySQL because it needs ACID compliance. Netflix has a master-master setup for MySQL, deployed on large Amazon EC2 instances using InnoDB.

The setup follows a synchronous replication protocol: when a write happens on the primary master node, it is also replicated to the other master node. The acknowledgment is sent only after both the primary and the remote master node have confirmed the write. This ensures the high availability of data. Netflix has also set up read replicas for every node (local as well as cross-region), which provides high availability and scalability.

All read queries are redirected to the read replicas, and only write queries are redirected to the master nodes.

- If the primary master MySQL node fails, the secondary master node takes over the primary role, and the Route 53 (DNS configuration) entry for the database is changed to point to this new primary node.

- This also redirects the write queries to the new primary master node.
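The read/write split and the failover step can be sketched as a small router. The node names are hypothetical, the SELECT check is deliberately naive, and in the setup described above the switch is done through a DNS change rather than in application code.

```python
import itertools


class DatabaseRouter:
    """Send writes to the primary master and spread reads over replicas."""

    def __init__(self, primary, standby, replicas):
        self.primary = primary
        self.standby = standby
        self._replicas = itertools.cycle(replicas)

    def target_for(self, sql):
        # Naive classification: anything starting with SELECT is a read.
        is_read = sql.lstrip().lower().startswith("select")
        return next(self._replicas) if is_read else self.primary

    def fail_over(self):
        """Promote the standby master; a DNS update plays this role in practice."""
        self.primary, self.standby = self.standby, None


router = DatabaseRouter("master-1", "master-2", ["replica-1", "replica-2"])
read_target = router.target_for("SELECT * FROM billing WHERE user_id = 1")
write_target = router.target_for("UPDATE billing SET plan = 'hd' WHERE user_id = 1")
router.fail_over()
write_after_failover = router.target_for("INSERT INTO billing VALUES (2, 'sd')")
```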

4.2. Cassandra

Cassandra is a NoSQL database that can handle large amounts of data as well as heavy write and read loads. As Netflix acquired more users, the viewing history data for each member also grew. This increased the total volume of viewing history data, and it became challenging for Netflix to handle.

Netflix scaled the storage of viewing history data with two main goals in mind:

- Smaller Storage Footprint.

- Consistent Read/Write Performance as viewing per member grows (viewing history data write-to-read ratio is about 9:1 in Cassandra).

Total Denormalized Data Model

- Over 50 Cassandra Clusters

- Over 500 Nodes

- Over 30TB of daily backups

- The biggest cluster has 72 nodes.

- 1 cluster over 250K writes/s

Initially, the viewing history was stored in Cassandra in a single row per member. As the number of users on Netflix grew, the row sizes as well as the overall data size increased. This resulted in high storage use, higher operational cost, and slow application performance. The solution to this problem was to compress the old rows.

Netflix divided the data into two parts:

- Live viewing history (LiveVH): this section holds the small number of recent viewing records, which are updated frequently. The data is frequently used by ETL jobs and is stored in uncompressed form.

- Compressed viewing history (CompressedVH): this section holds the large number of older viewing records, which are rarely updated. The data is stored in a single column per row key, in compressed form, to reduce the storage footprint.
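The split between recent uncompressed records and an older compressed blob can be sketched as follows. This only illustrates the idea with an in-memory object; the roll-up threshold, JSON encoding, and zlib compression are assumptions, and no Cassandra client is involved.

```python
import json
import zlib


class ViewingHistory:
    """Recent records stay uncompressed; older ones are rolled into one blob."""

    def __init__(self, live_limit=3):
        self.live_limit = live_limit
        self.live = []          # recent records, frequently updated
        self.compressed = None  # single compressed blob of older records

    def record(self, title):
        self.live.append(title)
        if len(self.live) > self.live_limit:
            self._roll_up()

    def _roll_up(self):
        """Merge live records into the compressed blob and clear the live list."""
        older = self.read_compressed() + self.live
        self.compressed = zlib.compress(json.dumps(older).encode("utf-8"))
        self.live = []

    def read_compressed(self):
        if self.compressed is None:
            return []
        return json.loads(zlib.decompress(self.compressed).decode("utf-8"))

    def read_all(self):
        return self.read_compressed() + self.live


history = ViewingHistory(live_limit=2)
for title in ["a", "b", "c", "d"]:
    history.record(title)
```

Writes only ever touch the small live list, and the cost of decompression is paid only when the full history is read.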


Featuring Apache Kafka in the Netflix Studio and Finance World


- Nitin Sharma

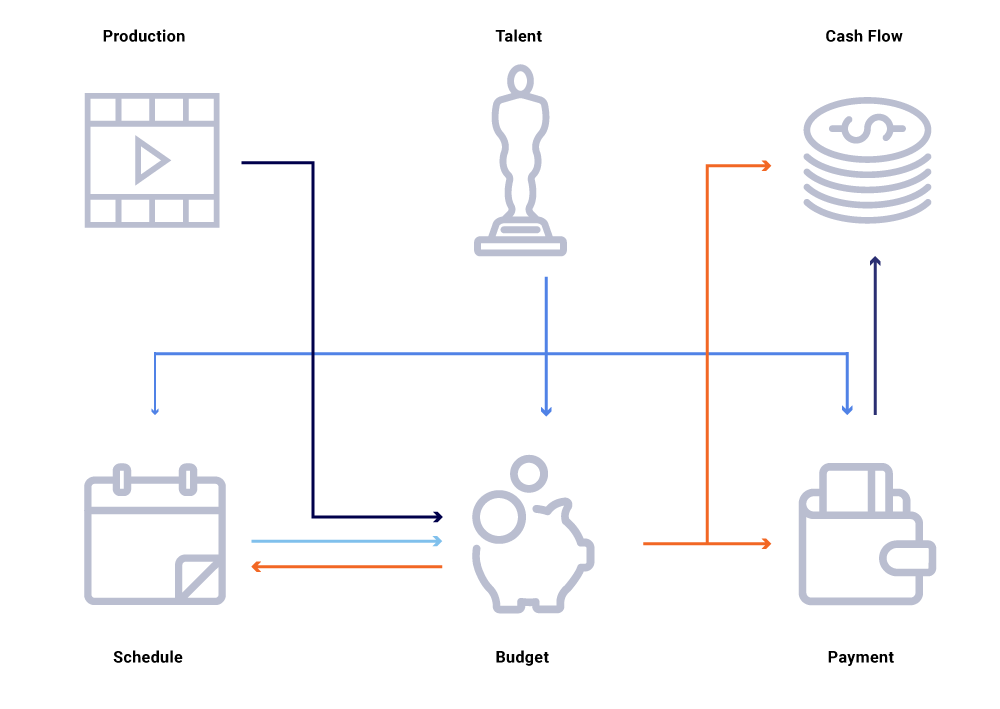

Netflix spent an estimated $15 billion to produce world-class original content in 2019. When stakes are so high, it is paramount to enable our business with critical insights that help plan, determine spending, and account for all Netflix content. These insights can include:

- How much should we spend in the next year on international movies and series?

- Are we trending to go over our production budget and does anyone need to step in to keep things on track?

- How do we program a catalog years in advance with data, intuition, and analytics to help create the best slate possible?

- How do we produce financials for content across the globe and report to Wall Street?

Similar to how VCs rigorously tune their eye for good investments, the Content Finance Engineering Team’s charter is to help Netflix invest, track, and learn from our actions so that we continuously make better investments in the future.

Embrace eventing

From an engineering standpoint, every financial application is modeled and implemented as a microservice. Netflix embraces distributed governance and encourages a microservices-driven approach to applications, which helps achieve the right balance between data abstraction and velocity as the company scales. In a simple world, services can interact through HTTP just fine, but as we scale out, they evolve into a complex graph of synchronous, request-based interactions that can potentially lead to a split-brain/state and disrupt availability.

Consider, in the above graph of related entities, a change in the production date of a show. This impacts our programming slate, which in turn influences cash flow projections, talent payments, budgets for the year, etc. Often in a microservice architecture, some percentage of failure is acceptable. However, a failure in any one of the microservice calls for Content Finance Engineering would lead to a plethora of computations being out of sync and could result in data being off by millions of dollars. It would also lead to availability problems as the call graph spans out, and cause blind spots while trying to effectively track down and answer business questions, such as: why do cash flow projections deviate from our launch schedule? Why is the forecast for the current year not taking into account the shows that are in active development? When can we expect our cost reports to accurately reflect upstream changes?

Rethinking service interactions as streams of event exchanges—as opposed to a sequence of synchronous requests—lets us build infrastructure that is inherently asynchronous. It promotes decoupling and provides traceability as a first-class citizen in a web of distributed transactions. Events are much more than triggers and updates. They become the immutable stream from which we can reconstruct the entire system state.

Moving towards a publish/subscribe model enables every service to publish its changes as events into a message bus, which can then be consumed by another service of interest that needs to adjust its state of the world. Such a model allows us to track whether services are in sync with respect to state changes and, if not, how long before they can be in sync. These insights are extremely powerful when operating a large graph of dependent services. Event-based communication and decentralized consumption help us overcome issues we usually see in large synchronous call graphs (as mentioned above).

Netflix embraces Apache Kafka® as the de-facto standard for its eventing, messaging, and stream processing needs. Kafka acts as a bridge for all point-to-point and Netflix Studio wide communications. It provides us with the high durability and linearly scalable, multi-tenant architecture required for operating systems at Netflix. Our in-house Kafka as a service offering provides fault tolerance, observability, multi-region deployments, and self-service. This makes it easier for our entire ecosystem of microservices to produce and consume meaningful events and unleash the power of asynchronous communication.

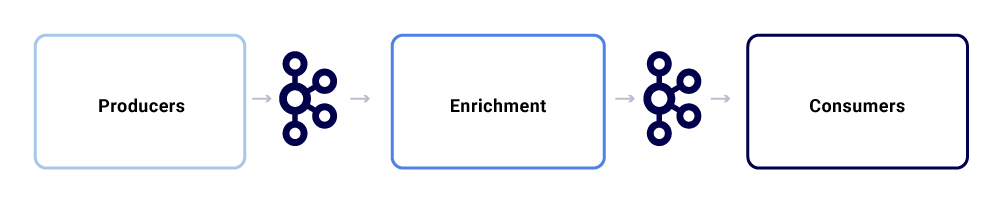

A typical message exchange within the Netflix Studio ecosystem looks like this:

We can break it up into three major sub-components.

A producer can be any system that wants to publish its entire state or hint that a critical piece of its internal state has changed for a particular entity. Apart from the payload, an event needs to adhere to a normalized format, which makes it easier to trace and understand. This format includes:

- UUID: Universally unique identifier

- Type: One of the create, read, update, or delete (CRUD) types

- Ts: Timestamp of the event
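As a rough illustration of the envelope listed above, here is a minimal Python sketch. The class and field names (`Event`, `EventType`, `payload`) are assumptions made for this example and are not taken from Netflix's actual format; only the three fields UUID, Type, and Ts come from the text.

```python
import time
from dataclasses import dataclass, field
from enum import Enum
from typing import Any
from uuid import uuid4


class EventType(Enum):
    """The four CRUD types named in the event format."""
    CREATE = "create"
    READ = "read"
    UPDATE = "update"
    DELETE = "delete"


@dataclass(frozen=True)
class Event:
    """Hypothetical normalized envelope: UUID, Type, and Ts wrap a payload."""
    type: EventType
    payload: dict[str, Any]
    # Generated per event so consumers can trace and deduplicate messages.
    uuid: str = field(default_factory=lambda: str(uuid4()))
    # Seconds since the epoch at creation time (an assumed representation).
    ts: float = field(default_factory=time.time)


first = Event(EventType.UPDATE, {"show_id": "show-1"})
second = Event(EventType.UPDATE, {"show_id": "show-1"})
```

Making the dataclass frozen mirrors the idea that events are immutable once produced; two events with identical payloads still get distinct UUIDs.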

Change data capture (CDC) tools are another category of event producers that derive events out of database changes. This can be useful when you want to make database changes available to multiple consumers. We also use this pattern for replicating the same data across datacenters (for single master databases). An example is when we have data in MySQL that needs to be indexed in Elasticsearch or Apache Solr™. The benefit of using CDC is that it does not impose additional load on the source application.

For CDC events, the TYPE field in the event format makes it easy to adapt and transform events as required by the respective sinks.

Once data exists in Kafka, various consumption patterns can be applied to it. Events are used in many ways, including as triggers for system computations, payload transfer for near-real-time communication, and cues to enrich and materialize in-memory views of data.

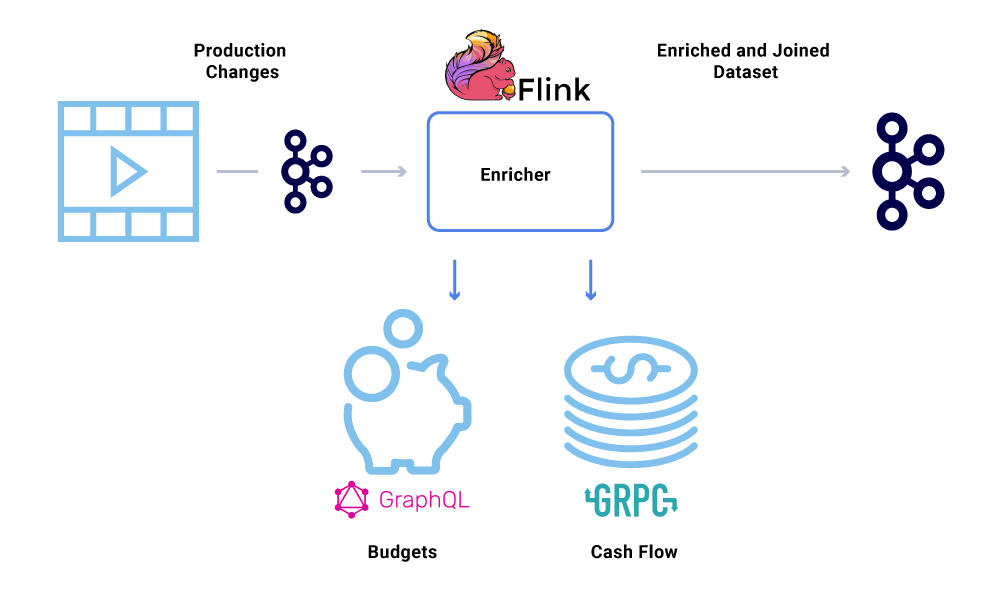

Data enrichment is becoming increasingly common where microservices need the full view of a dataset but part of the data is coming from another service’s dataset. A joined dataset can be useful for improving query performance or providing a near-real-time view of aggregated data. To enrich the event data, consumers read the data from Kafka and call other services (using methods that include gRPC and GraphQL) to construct the joined dataset, which are then later fed to other Kafka topics.

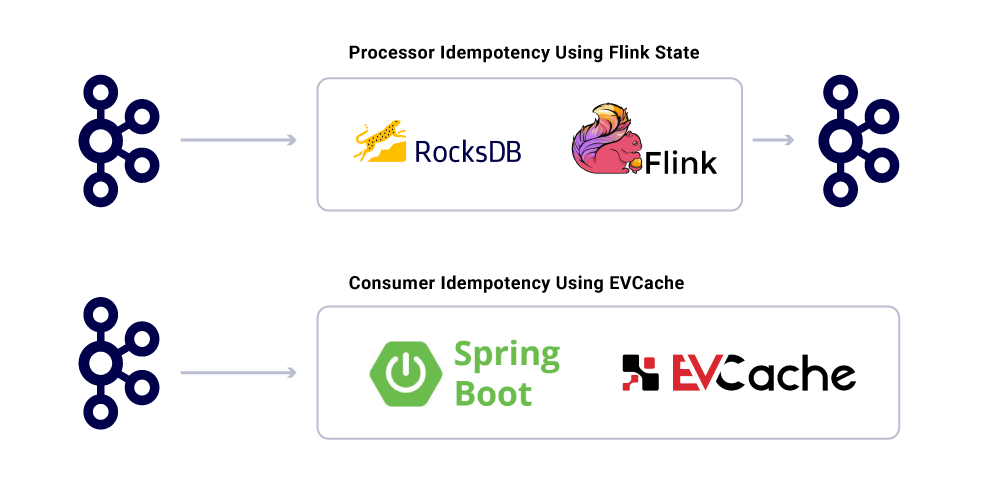

Enrichment can be run as a separate microservice in and of itself that is responsible for doing the fan-out and for materializing datasets. There are cases where we want to do more complex processing like windowing, sessionization, and state management. For such cases, it is recommended to use a mature stream processing engine on top of Kafka to build business logic. At Netflix, we use Apache Flink ® and RocksDB to do stream processing. We’re also considering ksqlDB for similar purposes.

Ordering of events

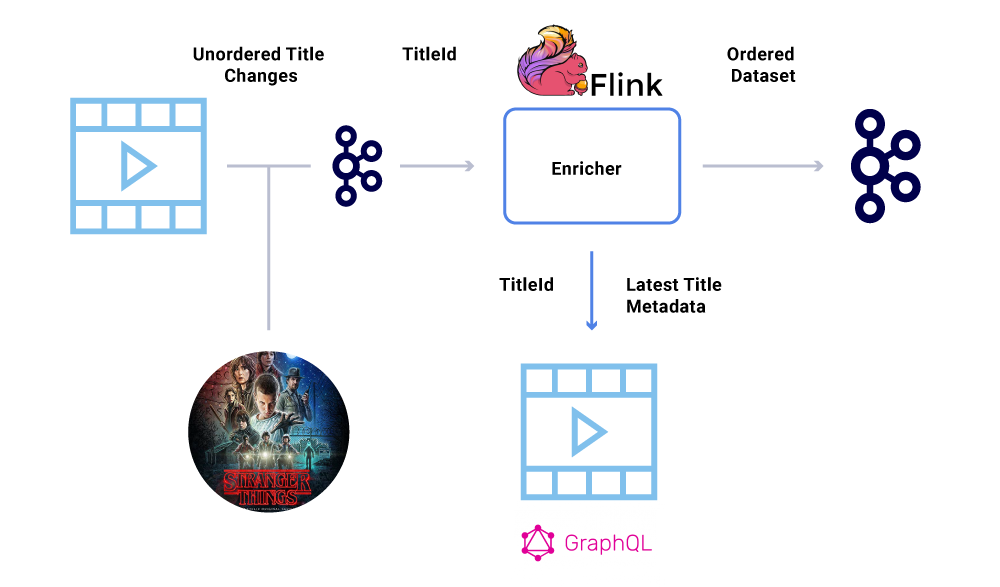

One of the key requirements within a financial dataset is the strict ordering of events. Kafka helps us achieve this by sending keyed messages. Any event or message sent with the same key will have guaranteed ordering since they are sent to the same partition. However, the producers can still mess up the ordering of events.

For example, the launch date of “Stranger Things” was originally moved from July to June but then back from June to July. For a variety of reasons, these events could be written out in the wrong order to Kafka (network timeout when producer tried to reach Kafka, a concurrency bug in producer code, etc). An ordering hiccup could have heavily impacted various financial calculations.
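Keyed partitioning preserves the order in which events are appended, which is why a producer-side mix-up like this one is not corrected by Kafka itself. The following is a minimal in-memory sketch of that behavior, not a Kafka client: `partition_for`, `append_all`, and the partition count are hypothetical, and CRC32 is a stand-in for whatever hash the real partitioner uses.

```python
import zlib

NUM_PARTITIONS = 6  # assumed partition count for this illustration


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition; equal keys always land on the same partition.

    CRC32 is a stand-in hash for this sketch, not Kafka's own partitioner.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def append_all(events: list[tuple[str, str]]) -> dict[int, list[tuple[str, str]]]:
    """Append (key, value) events to per-partition logs in the order received."""
    logs: dict[int, list[tuple[str, str]]] = {p: [] for p in range(NUM_PARTITIONS)}
    for key, value in events:
        logs[partition_for(key)].append((key, value))
    return logs


# The producer meant to send "June" then "July", but emitted them swapped.
swapped = [("show-1", "launch=July"), ("show-2", "budget=10"), ("show-1", "launch=June")]
logs = append_all(swapped)
show_1_log = [v for k, v in logs[partition_for("show-1")] if k == "show-1"]
# show_1_log keeps the producer's (wrong) order: July first, then June.
```

Both `show-1` events end up in one partition and stay in append order, so a consumer that trusts the payloads would finish on the stale June value.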

To circumvent this scenario, producers are encouraged to send only the primary ID of the entity that has changed and not the full payload in the Kafka message. The enrichment process (described in the above section) queries the source service with the ID of the entity to get the most up-to-date state/payload, thus providing an elegant way of circumventing the out-of-order issue. We refer to this as delayed materialization, and it guarantees ordered datasets.
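To see why sending only the ID sidesteps the ordering problem, here is a minimal sketch that contrasts a consumer trusting full payloads with one that looks up current state. Everything in it is hypothetical: `SOURCE_SERVICE` and `fetch_current_state` stand in for the real source service and its gRPC or GraphQL call.

```python
# Hypothetical source-of-truth service; after both changes the launch month is July.
SOURCE_SERVICE = {"show-1": {"launch": "July"}}


def fetch_current_state(entity_id: str) -> dict:
    """Stand-in for a gRPC or GraphQL call to the service that owns the entity."""
    return dict(SOURCE_SERVICE[entity_id])


def apply_full_payloads(events: list[dict]) -> dict[str, dict]:
    """Naive consumer: trusts the payload in each event, so the last one wins."""
    view: dict[str, dict] = {}
    for event in events:
        view[event["id"]] = event["payload"]
    return view


def apply_ids_only(events: list[dict]) -> dict[str, dict]:
    """Delayed materialization: ignore payloads and look up the current state."""
    view: dict[str, dict] = {}
    for event in events:
        view[event["id"]] = fetch_current_state(event["id"])
    return view


# The "back to July" event was written before the "moved to June" event.
out_of_order = [
    {"id": "show-1", "payload": {"launch": "July"}},
    {"id": "show-1", "payload": {"launch": "June"}},
]
naive_view = apply_full_payloads(out_of_order)  # ends on the stale June value
delayed_view = apply_ids_only(out_of_order)     # reflects the source: July
```

The trade-off in this sketch is one extra lookup against the source service per event, in exchange for a view that does not depend on the order in which the notifications arrived.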

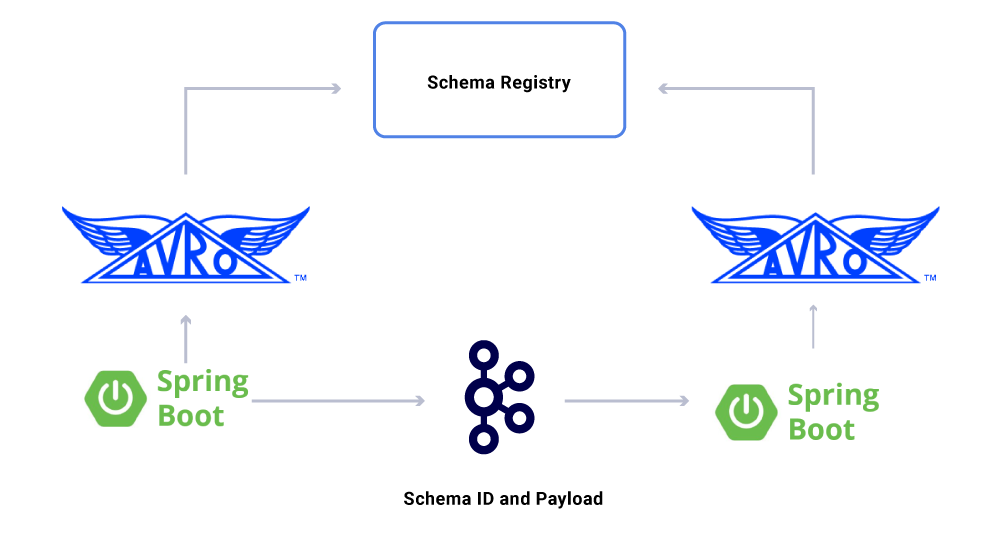

We use Spring Boot to implement many of the consuming microservices that read from the Kafka topics. Spring Boot offers great built-in Kafka consumers called Spring Kafka Connectors, which make consumption seamless, providing easy ways to wire up annotations for consumption and deserialization of data.

One aspect of the data that we haven’t discussed yet is contracts. As we scale out our use of event streams, we end up with a varied group of datasets, some of which are consumed by a large number of applications. In these cases, defining a schema on the output is ideal and helps ensure backward compatibility. To do this, we leverage Confluent Schema Registry and Apache Avro™ to build our schematized streams for versioning data streams.
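One common way a schema stays backward compatible is to add new fields only with defaults, so that a consumer on the newer schema can still read older records. The sketch below imitates that idea with plain dictionaries; it is a simplified stand-in and does not use the Avro or Schema Registry APIs, and the schema, field names, and `resolve` helper are all hypothetical.

```python
# Simplified stand-in for schema resolution; this is not the Avro library API.
READER_SCHEMA_V2 = {
    "show_id": {"type": str},                     # present since v1
    "launch": {"type": str},                      # present since v1
    "currency": {"type": str, "default": "USD"},  # added in v2 with a default
}

_MISSING = object()


def resolve(record: dict, reader_schema: dict) -> dict:
    """Read a record with the reader's schema, filling defaults for new fields."""
    resolved = {}
    for name, spec in reader_schema.items():
        value = record.get(name, spec.get("default", _MISSING))
        if value is _MISSING:
            raise ValueError(f"field {name!r} is missing and has no default")
        if not isinstance(value, spec["type"]):
            raise TypeError(f"field {name!r} should be {spec['type'].__name__}")
        resolved[name] = value
    return resolved


# A record written before "currency" existed is still readable by a v2 consumer.
old_record = {"show_id": "show-1", "launch": "July"}
upgraded = resolve(old_record, READER_SCHEMA_V2)
```

Had `currency` been added without a default, the same old record would fail to resolve, which is the kind of breaking change a schema registry's compatibility checks are meant to catch before it ships.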

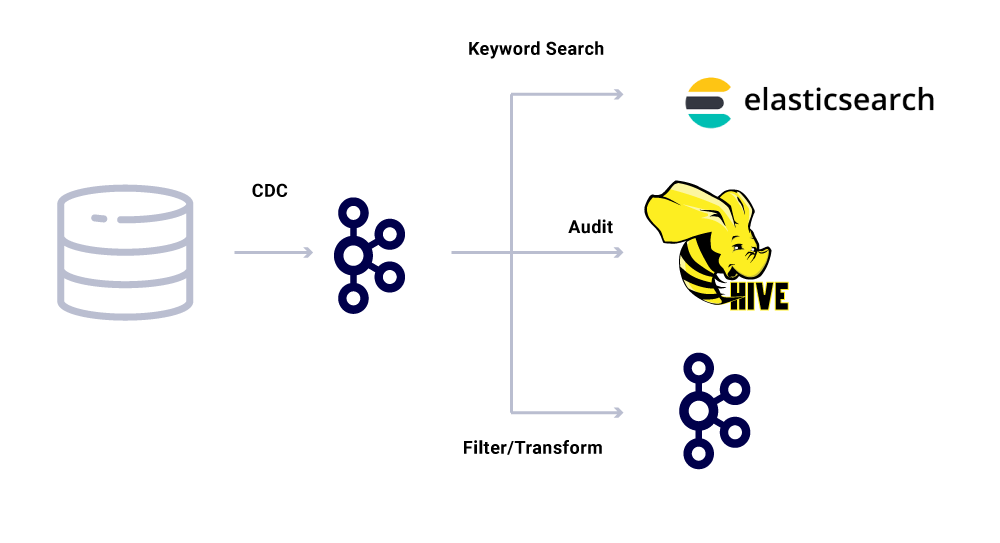

In addition to dedicated microservice consumers, we also have CDC sinks that index the data into a variety of stores for further analysis. These include Elasticsearch for keyword search, Apache Hive™ for auditing, and Kafka itself for further downstream processing. The payload for such sinks is directly derived from the Kafka message by using the ID field as the primary key and TYPE for identifying CRUD operations.
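The way a sink can derive its writes from the ID and TYPE fields might look roughly like the following. This is a sketch under assumptions: a plain dictionary stands in for an index such as Elasticsearch, and the event shape (`id`, `type`, `payload`) and the `apply_to_sink` helper are hypothetical.

```python
def apply_to_sink(index: dict[str, dict], event: dict) -> None:
    """Apply one event to a key-value sink, using ID as the primary key."""
    entity_id, op = event["id"], event["type"]
    if op in ("create", "update"):
        index[entity_id] = event["payload"]  # upsert the latest document
    elif op == "delete":
        index.pop(entity_id, None)           # tolerate deletes of unknown IDs
    elif op != "read":                       # reads leave the sink untouched
        raise ValueError(f"unknown event type: {op!r}")


search_index: dict[str, dict] = {}  # stand-in for an Elasticsearch index
for event in [
    {"id": "show-1", "type": "create", "payload": {"title": "Show A"}},
    {"id": "show-2", "type": "create", "payload": {"title": "Show B"}},
    {"id": "show-1", "type": "update", "payload": {"title": "Show A (renamed)"}},
    {"id": "show-2", "type": "delete", "payload": {}},
]:
    apply_to_sink(search_index, event)
```

Treating create and update as the same upsert keeps the sink idempotent: replaying an event leaves the index in the same state.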

Message delivery guarantees

Guaranteeing exactly once delivery in a distributed system is nontrivial due to the complexities involved and a plethora of moving parts. Consumers should have idempotent behavior to account for any potential infrastructure and producer mishaps.

Despite the fact that applications are idempotent, they should not repeat compute-heavy operations for already-processed messages. A popular way of ensuring this is to keep track of the UUID of messages consumed by a service in a distributed cache with reasonable expiry (defined based on Service Level Agreements (SLA)). Anytime the same UUID is encountered within the expiry interval, the processing is skipped.

Processing in Flink provides this guarantee by using its internal RocksDB-based state management, with the key being the UUID of the message. If you want to do this purely using Kafka, Kafka Streams offers a way to do that as well. Consuming applications based on Spring Boot use EVCache to achieve this.
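The UUID-tracking approach described above can be sketched with an in-memory cache. This is only an illustration: `SeenCache` and `process` are hypothetical names, a local dictionary stands in for a distributed cache such as EVCache, and a real deployment would need the check-and-set to be atomic across consumers.

```python
import time
from typing import Callable


class SeenCache:
    """In-memory stand-in for a distributed cache with per-entry expiry."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._expires_at: dict[str, float] = {}

    def first_time(self, message_uuid: str) -> bool:
        """Return True and remember the UUID unless it was seen within the TTL."""
        now = self._clock()
        expiry = self._expires_at.get(message_uuid)
        if expiry is not None and expiry > now:
            return False
        self._expires_at[message_uuid] = now + self._ttl
        return True


def process(messages: list[dict], cache: SeenCache, handler: Callable[[dict], None]) -> int:
    """Run the handler once per unseen UUID; return how many messages were handled."""
    handled = 0
    for message in messages:
        if cache.first_time(message["uuid"]):
            handler(message)
            handled += 1
    return handled
```

For example, `process([{"uuid": "a"}, {"uuid": "a"}, {"uuid": "b"}], SeenCache(ttl_seconds=60), print)` invokes the handler for the first `a` and for `b`, and skips the repeated `a`.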

Monitoring infrastructure service levels

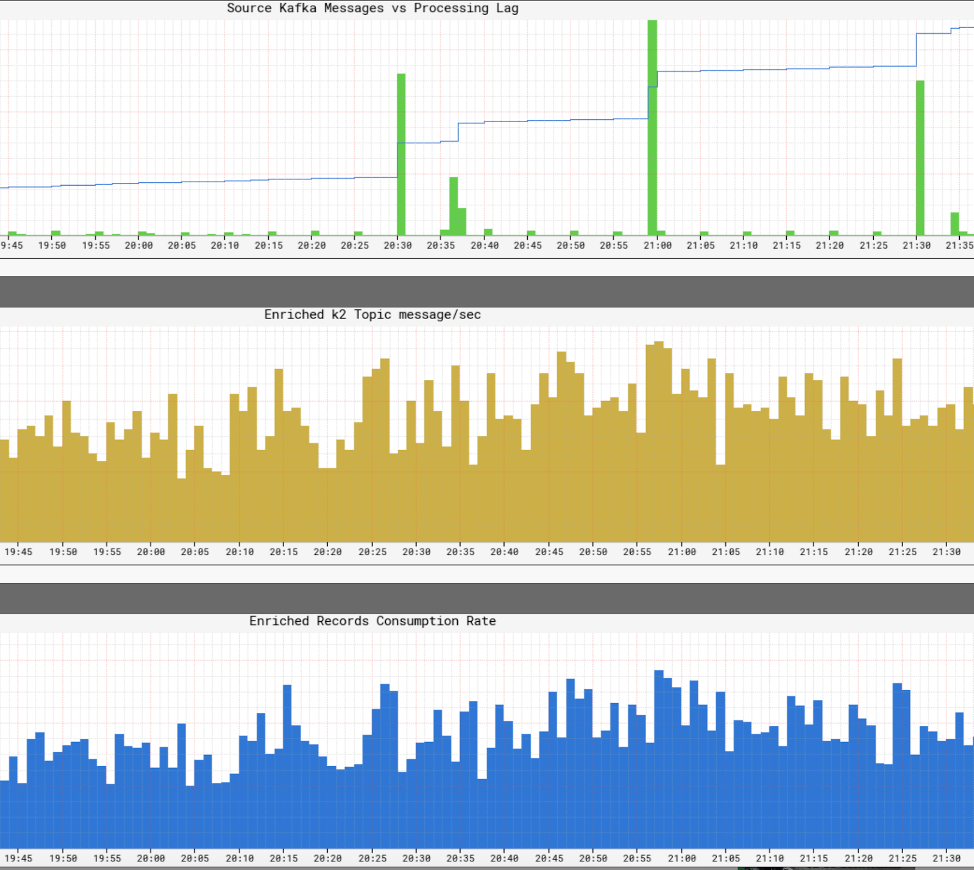

It’s crucial for Netflix to have a real-time view of the service levels within its infrastructure. Netflix wrote Atlas to manage dimensional time series data, from which we publish and visualize metrics. We use a variety of metrics published by producers, processors, and consumers to help us construct a near-real-time picture of the entire infrastructure.

Some of the key aspects we monitor are:

- What is the time end to end from the production of an event until it reaches all sinks?

- What is the processing lag for every consumer?

- How large of a payload are we able to send?

- Should we compress the data?

- Are we efficiently utilizing our resources?

- Can we consume faster?

- Are we able to create a checkpoint for our state and resume in the case of failures?

- If we are not able to keep up with the event firehose, can we apply backpressure to the corresponding sources without crashing our application?

- How do we deal with event bursts?

- Are we sufficiently provisioned to meet the SLA?
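Two of the questions above, end-to-end time and per-consumer processing lag, reduce to simple arithmetic once the offsets and timestamps are available. The sketch below assumes they have already been collected; the function names and input shapes are hypothetical and are not part of Atlas or any Kafka client.

```python
def consumer_lag(log_end_offsets: dict[int, int], committed_offsets: dict[int, int]) -> dict[int, int]:
    """Per-partition lag: messages written but not yet committed by the consumer."""
    return {
        partition: max(end - committed_offsets.get(partition, 0), 0)
        for partition, end in log_end_offsets.items()
    }


def end_to_end_latency(produced_ts: float, sink_arrival_ts: list[float]) -> float:
    """Seconds from production of an event until the slowest sink has it."""
    return max(sink_arrival_ts) - produced_ts


lag = consumer_lag({0: 120, 1: 80}, {0: 100, 1: 80})
total_lag = sum(lag.values())
latency = end_to_end_latency(10.0, [10.5, 11.5, 11.0])
```

Taking the maximum over sinks matches the wording of the first question, which asks when an event has reached all sinks, not just the fastest one.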

The Netflix Studio Productions and Finance Team embraces distributed governance as the way of architecting systems. We use Kafka as our platform of choice for working with events, which are an immutable way to record and derive system state. Kafka has helped us achieve greater levels of visibility and decoupling in our infrastructure while helping us organically scale out operations. It is at the heart of revolutionizing Netflix Studio infrastructure and with it, the film industry.

Interested in more?

If you’d like to know more, you can view the recording and slides of my Kafka Summit San Francisco presentation Eventing Things – A Netflix Original!


Anatomy of testing in production: A Netflix original case study


So you want to test your complex application that involves large-scale distributed systems. But how do you feel about testing it effectively just using your test environment? Today, automated testing of Netflix client and server applications runs at scale in production. Within a few years, the company’s testing has gone from a low-volume manual mode to one where it is continuous, voluminous, and fully automated. Collectively, Netflix teams create hundreds of thousands of tester accounts every day, each being used in thousands of test scenarios, to the point where service providers are more wary of getting paged for causing instability to internal testers than for causing an external outage.

Vasanth Asokan offers a study of the evolution and anatomy of production testing at scale at Netflix, explaining why there was a desire to test in production, what Netflix did to try to keep testing out of production, and where testing belongs, anyway. Along the way, Vasanth shares a few case studies to demonstrate both the benefits and the less tangible diffused impacts of concentrated, uncoordinated testing against customer-facing infrastructure. Vasanth also looks at other forms of testing, such as load, failure, and simulation testing, and explains the role they play in ensuring a fully functioning customer experience.

Join in to learn whether the benefits outweigh the risks of executing untested code in production or whether it’s better to focus on creating a production mirror. If you run large-scale distributed systems, this talk will better inform your overall testing strategy, illustrate specific techniques that work at scale, and provide trade-offs to consider.

Vasanth Asokan

Vasanth Asokan is an Engineering Leader at Netflix, where he heads a developer productivity team for large-scale microservices. Service-oriented architectures, continuous integration and delivery, automation, testing, resiliency, serverless trends, developer experience, and education are favorite topics. In a former phase of his career, he focussed on embedded SoC development and EDA tools, compilers, embedded RTOSes, and Eclipse plug-in development. He likes exploring vague opportunities, building bridges between ideas and solving problems (both human and technical). Curious by nature and people oriented, he has a high regard for products, processes, and engineering that actually reach people in meaningful ways.


Recommender Systems Handbook, pp. 385–419

Recommender Systems in Industry: A Netflix Case Study

- Xavier Amatriain

- Justin Basilico


The Netflix Prize put a spotlight on the importance and use of recommender systems in real-world applications. The competition provided many lessons about how to approach recommendation, and many more have been learned since the Grand Prize was awarded in 2009. The evolution of industrial applications of recommender systems has been driven by the availability of different kinds of user data and the level of interest for the area within the research community. The goal of this chapter is to give an up-to-date overview of recommender systems techniques used in an industrial setting. We will give a high-level description of the practical use of recommendation and personalization techniques. We will highlight some of the main lessons learned from the Netflix Prize. We will then use Netflix personalization as a case study to describe several approaches and techniques used in a real-world recommendation system. Finally, we will pinpoint what we see as some promising current research avenues and unsolved problems that deserve attention in this domain from an industry perspective.


The application of Matrix Factorization to the task of rating prediction closely resembles the technique known as Singular Value Decomposition used, for example, to identify latent factors in Information Retrieval. Therefore, it is common to see people referring to this MF solution as SVD.
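To make the resemblance concrete, the block below puts the two side by side. The notation is the commonly used one for this model and is an assumption here, since the chapter's own symbols are not shown in this excerpt: $R$ is the user-item rating matrix, $p_u$ and $q_i$ are $f$-dimensional user and item factor vectors, $\mathcal{K}$ is the set of observed ratings, and $\lambda$ is a regularization weight.

```latex
% SVD of a fully observed matrix R (users x items)
R = U \Sigma V^{\top}

% MF prediction for user u and item i, with f-dimensional latent factors
\hat{r}_{ui} = q_i^{\top} p_u, \qquad p_u, q_i \in \mathbb{R}^{f}

% Regularized fit over the set K of observed (u, i) ratings only
\min_{p_*, q_*} \sum_{(u,i) \in \mathcal{K}} \left( r_{ui} - q_i^{\top} p_u \right)^2
  + \lambda \left( \lVert q_i \rVert^2 + \lVert p_u \rVert^2 \right)
```

Both express ratings through a low-rank product of latent factors. The practical difference is that a classical SVD needs every entry of $R$, whereas the MF objective sums only over the ratings that were actually observed, which is why calling it "SVD" is a convenient shorthand more than an exact description.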