There are lies, damned lies, and statistics. —Mark Twain

What this handout is about

The purpose of this handout is to help you use statistics to make your argument as effectively as possible.

Introduction

Numbers are power. Apparently freed of all the squishiness and ambiguity of words, numbers and statistics are powerful pieces of evidence that can effectively strengthen any argument. But statistics are not a panacea. As simple and straightforward as these little numbers promise to be, statistics, if not used carefully, can create more problems than they solve.

Many writers lack a firm grasp of the statistics they are using. The average reader does not know how to properly evaluate and interpret the statistics he or she reads. The main reason behind the poor use of statistics is a lack of understanding about what statistics can and cannot do. Many people think that statistics can speak for themselves. But numbers are as ambiguous as words and need just as much explanation.

In many ways, this problem is quite similar to that experienced with direct quotes. Too often, quotes are expected to do all the work and are treated as part of the argument, rather than a piece of evidence requiring interpretation (see our handout on how to quote). But if you leave the interpretation up to the reader, who knows what sort of off-the-wall interpretations may result? The only way to avoid this danger is to supply the interpretation yourself.

But before we start writing statistics, let’s actually read a few.

Reading statistics

As stated before, numbers are powerful. This is one of the reasons why statistics can be such persuasive pieces of evidence. However, this same power can also make numbers and statistics intimidating. That is, we too often accept them as gospel, without ever questioning their veracity or appropriateness. While this may seem like a positive trait when you plug them into your paper and pray for your reader to submit to their power, remember that before we are writers of statistics, we are readers. And to be effective readers means asking the hard questions. Below you will find a useful set of hard questions to ask of the numbers you find.

1. Does your evidence come from reliable sources?

This is an important question not only with statistics, but with any evidence you use in your papers. As we will see in this handout, there are many ways statistics can be played with and misrepresented in order to produce a desired outcome. Therefore, you want to take your statistics from reliable sources (for more information on finding reliable sources, please see our handout on evaluating print sources). This is not to say that reliable sources are infallible, but only that they are probably less likely to use deceptive practices. With a credible source, you may not need to worry as much about the questions that follow. Still, remember that reading statistics is a bit like being in the middle of a war: trust no one; suspect everyone.

2. What is the data’s background?

Data and statistics do not just fall from heaven fully formed. They are always the product of research. Therefore, to understand the statistics, you should also know where they come from. For example, if the statistics come from a survey or poll, some questions to ask include:

- Who asked the questions in the survey/poll?

- What, exactly, were the questions?

- Who interpreted the data?

- What issue prompted the survey/poll?

- What (policy/procedure) potentially hinges on the results of the poll?

- Who stands to gain from particular interpretations of the data?

All these questions help you orient yourself toward possible biases or weaknesses in the data you are reading. The goal of this exercise is not to find “pure, objective” data but to make any biases explicit, in order to more accurately interpret the evidence.

3. Are all data reported?

In most cases, the answer to this question is easy: no, they aren’t. Therefore, a better way to think about this issue is to ask whether all data have been presented in context. But it is much more complicated when you consider the bigger issue, which is whether the text or source presents enough evidence for you to draw your own conclusion. A reliable source should not exclude data that contradicts or weakens the information presented.

An example can be found on the evening news. If you think about ice storms, which make life so difficult in the winter, you will certainly remember the newscasters warning people to stay off the roads because they are so treacherous. To verify this point, they tell you that the Highway Patrol has already reported 25 accidents during the day. Their intention is to scare you into staying home with this number. While this number sounds high, some studies have found that the number of accidents actually goes down on days with severe weather. Why is that? One possible explanation is that with fewer people on the road, even with the dangerous conditions, the number of accidents will be less than on an “average” day. The critical lesson here is that even when the general interpretation is “accurate,” the data may not actually be evidence for the particular interpretation. This means you have no way to verify if the interpretation is in fact correct.

There is generally a comparison implied in the use of statistics. How can you make a valid comparison without having all the facts? Good question. You may have to look to another source or sources to find all the data you need.

4. Have the data been interpreted correctly?

If the author gives you her statistics, it is always wise to interpret them yourself. That is, while it is useful to read and understand the author’s interpretation, it is merely that—an interpretation. It is not the final word on the matter. Furthermore, sometimes authors (including you, so be careful) can use perfectly good statistics and come up with perfectly bad interpretations. Here are two common mistakes to watch out for:

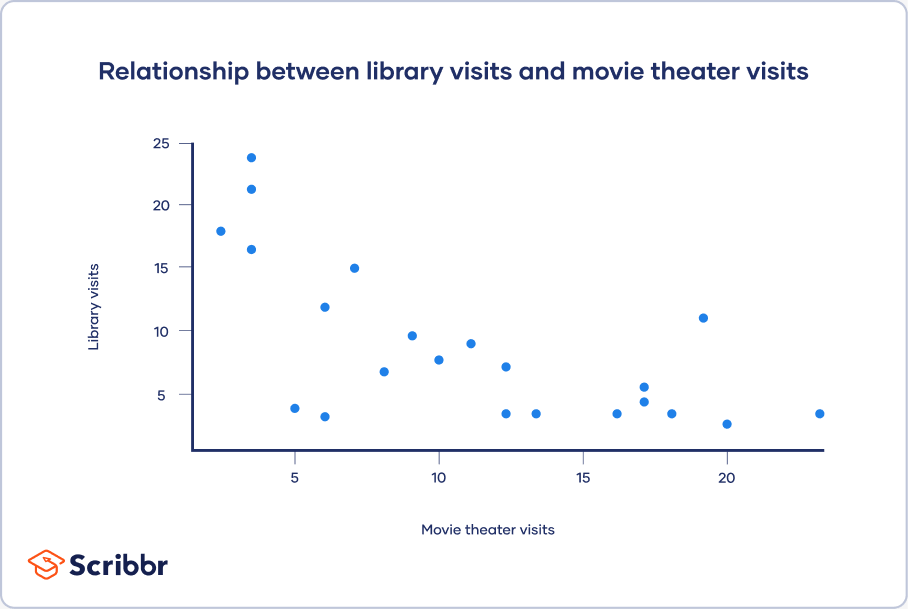

- Confusing correlation with causation. Just because two things vary together does not mean that one of them is causing the other. It could be nothing more than a coincidence, or both could be caused by a third factor. Such a relationship is called spurious. The classic example is a study that found that the more firefighters sent to put out a fire, the more damage the fire did. Yikes! I thought firefighters were supposed to make things better, not worse! But before we start shutting down fire stations, it might be useful to entertain alternative explanations. This seemingly contradictory finding can be easily explained by pointing to a third factor that causes both: the size of the fire. The lesson here? Correlation does not equal causation. So it is important not only to think about showing that two variables co-vary, but also about the causal mechanism.

- Ignoring the margin of error. When survey results are reported, they frequently include a margin of error. You might see this written as “a margin of error of plus or minus 5 percentage points.” What does this mean? The simple story is that surveys are normally generated from samples of a larger population, and thus they are never exact. There is always a confidence interval within which the true value for the general population is expected to fall. Thus, if I say that the number of UNC students who find it difficult to use statistics in their writing is 60%, plus or minus 4 percentage points, that means, assuming the conventional confidence level of 95%, that with 95% certainty we can say that the actual number is between 56% and 64%.

Why does this matter? Because if after introducing this handout to the students of UNC, a new poll finds that only 56%, plus or minus 3%, are having difficulty with statistics, I could go to the Writing Center director and ask for a raise, since I have made a significant contribution to the writing skills of the students on campus. However, she would no doubt point out that a) this may be a spurious relationship (see above) and b) the actual change is not significant because it falls within the margin of error for the original results. The lesson here? Margins of error matter, so you cannot just compare simple percentages.
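The comparison in this example can be sketched in a few lines of Python. This is only a rough illustration using the two hypothetical polls above; the function names `interval` and `intervals_overlap` are invented here, and checking whether two ranges overlap is a quick screen, not a formal test of statistical significance.

```python
def interval(estimate, margin):
    """Return the (low, high) range implied by an estimate and its margin of error."""
    return (estimate - margin, estimate + margin)


def intervals_overlap(a, b):
    """Return True if two (low, high) ranges share at least one value."""
    return a[0] <= b[1] and b[0] <= a[1]


# Figures from the example above, in percentage points.
before = interval(60, 4)  # first poll: 60%, plus or minus 4
after = interval(56, 3)   # second poll: 56%, plus or minus 3

print(before)                            # (56, 64)
print(after)                             # (53, 59)
print(intervals_overlap(before, after))  # True
```

Because the two ranges overlap, the drop from 60% to 56% could be nothing more than sampling noise.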

Finally, you should keep in mind that the source you are actually looking at may not be the original source of your data. That is, if you find an essay that quotes a number of statistics in support of its argument, often the author of the essay is using someone else’s data. Thus, you need to consider not only your source, but the author’s sources as well.

Writing statistics

As you write with statistics, remember your own experience as a reader of statistics. Don’t forget how frustrated you were when you came across unclear statistics and how thankful you were to read well-presented ones. It is a sign of respect to your reader to be as clear and straightforward as you can be with your numbers. Nobody likes to be played for a fool. Thus, even if you think that changing the numbers just a little bit will help your argument, do not give in to the temptation.

As you begin writing, keep the following in mind. First, your reader will want to know the answers to the same questions that we discussed above. Second, you want to present your statistics in a clear, unambiguous manner. Below you will find a list of some common pitfalls in the world of statistics, along with suggestions for avoiding them.

1. The mistake of the “average” writer

Nobody wants to be average. Moreover, nobody wants to just see the word “average” in a piece of writing. Why? Because nobody knows exactly what it means. There are not one, not two, but three different definitions of “average” in statistics, and when you use the word, your reader has only a 33.3% chance of guessing correctly which one you mean.

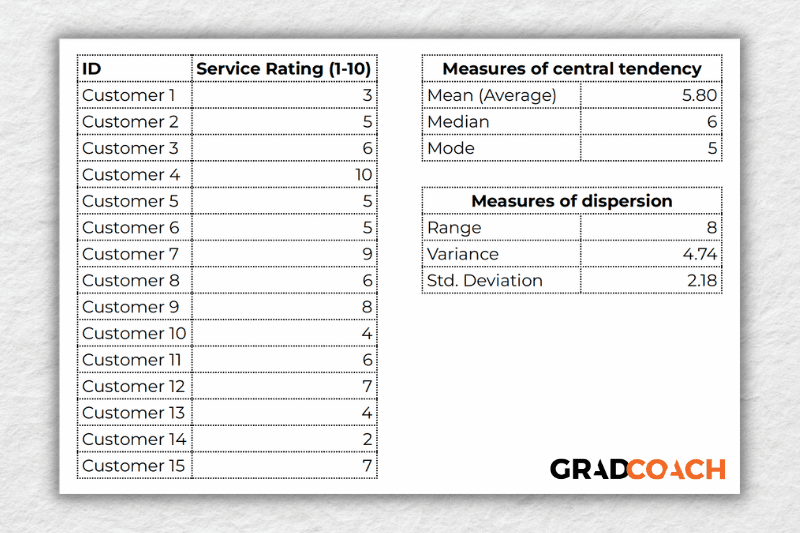

For the following definitions, please refer to this set of numbers: 5, 5, 5, 8, 12, 14, 21, 33, 38

- Mean (arithmetic mean) This may be the most average definition of average (whatever that means). This is the simple, unweighted average: the total of all the numbers divided by how many numbers there are. Thus the mean of the above set of numbers is 5+5+5+8+12+14+21+33+38, all divided by 9, which equals 15.666666666667 (Wow! That is a lot of numbers after the decimal. What do we do about that? Precision is a good thing, but too much of it is over the top; it does not necessarily make your argument any stronger. Consider the reasonable amount of precision based on your input and round accordingly. In this case, 15.7 should do the trick.)

- Median Depending on whether you have an odd or even set of numbers, the median is either a) the number midway through an odd set of numbers or b) a value halfway between the two middle numbers in an even set. For the above set (an odd set of 9 numbers), the median is 12. (5, 5, 5, 8 < 12 < 14, 21, 33, 38)

- Mode The mode is the number or value that occurs most frequently in a series. If, by some cruel twist of fate, two or more values tie for most frequent, then the set has more than one mode, and you should report all of them. For our set, the mode would be 5, since it occurs 3 times, whereas all other numbers occur only once.

As you can see, the numbers can vary considerably, as can their significance. Therefore, the writer should always inform the reader which average he or she is using. Otherwise, confusion will inevitably ensue.
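If you want to check the three averages yourself, one way is with Python's standard `statistics` module. This is a minimal sketch using the set of numbers above; `multimode` (available in Python 3.8 and later) returns every value tied for most frequent, so it also covers sets with more than one mode.

```python
import statistics

numbers = [5, 5, 5, 8, 12, 14, 21, 33, 38]

mean = statistics.mean(numbers)        # sum of the values divided by how many there are
median = statistics.median(numbers)    # middle value of the sorted list
modes = statistics.multimode(numbers)  # every value tied for most frequent

print(round(mean, 1))  # 15.7
print(median)          # 12
print(modes)           # [5]
```

Three "averages," three different numbers: a reader who sees only the word "average" cannot tell which of them you mean.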

2. Match your facts with your questions

Be sure that your statistics actually apply to the point/argument you are making. If we return to our discussion of averages, depending on the question you are interested in answering, you should use the proper statistics.

Perhaps an example would help illustrate this point. Your professor hands back the midterm. The grades are distributed as follows:

The professor felt that the test must have been too easy, because the average (median) grade was a 95.

When a colleague asked her about how the midterm grades came out, she answered, knowing that her classes were gaining a reputation for being “too easy,” that the average (mean) grade was an 80.

When your parents ask you how you can justify doing so poorly on the midterm, you answer, “Don’t worry about my 63. It is not as bad as it sounds. The average (mode) grade was a 58.”

I will leave it up to you to decide whether these choices are appropriate. Selecting the appropriate facts or statistics will help your argument immensely. Not only will they actually support your point, but they will not undermine the legitimacy of your position. Think about how your parents will react when they learn from the professor that the average (median) grade was 95! The best way to maintain precision is to specify which of the three forms of “average” you are using.

3. Show the entire picture

Sometimes, you may misrepresent your evidence by accident and misunderstanding. Other times, however, misrepresentation may be slightly less innocent. This can be seen most readily in visual aids. Do not shape and “massage” the representation so that it “best supports” your argument. This can be achieved by presenting charts/graphs in numerous different ways. Either the range can be shortened (to cut out data points which do not fit, e.g., starting a time series too late or ending it too soon), or the scale can be manipulated so that small changes look big and vice versa. Furthermore, do not fiddle with the proportions, either vertically or horizontally. The fact that USA Today seems to get away with these techniques does not make them OK for an academic argument.

Charts A, B, and C all use the same data points, but the stories they seem to be telling are quite different. Chart A shows a mild increase, followed by a slow decline. Chart B, on the other hand, reveals a steep jump, with a sharp drop-off immediately following. Conversely, Chart C seems to demonstrate that there was virtually no change over time. These variations are a product of changing the scale of the chart. One way to alleviate this problem is to supplement the chart by using the actual numbers in your text, in the spirit of full disclosure.

Another point of concern can be seen in Charts D and E. Both use the same data as charts A, B, and C for the years 1985-2000, but additional time points, using two hypothetical sets of data, have been added back to 1965. Given the different trends leading up to 1985, consider how the significance of recent events can change. In Chart D, the downward trend from 1990 to 2000 is going against a long-term upward trend, whereas in Chart E, it is merely the continuation of a larger downward trend after a brief upward turn.

One of the difficulties with visual aids is that there is no hard and fast rule about how much to include and what to exclude. Judgment is always involved. In general, be sure to present your visual aids so that your readers can draw their own conclusions from the facts and verify your assertions. If what you have cut out could affect the reader’s interpretation of your data, then you might consider keeping it.

4. Give bases of all percentages

Because percentages are always derived from a specific base, they are meaningless until associated with a base. So even if I tell you that after reading this handout, you will be 23% more persuasive as a writer, that is not a very meaningful assertion because you have no idea what it is based on: 23% more persuasive than what?

Let’s look at crime rates to see how this works. Suppose we have two cities, Springfield and Shelbyville. In Springfield, the murder rate has gone up 75%, while in Shelbyville, the rate has only increased by 10%. Which city is having a bigger murder problem? Well, that’s obvious, right? It has to be Springfield. After all, 75% is bigger than 10%.

Hold on a second, because this is actually much less clear than it looks. In order to really know which city has a worse problem, we have to look at the actual numbers. If I told you that Springfield had 4 murders last year and 7 this year, and Shelbyville had 30 murders last year and 33 murders this year, would you change your answer? Maybe, since 33 murders are significantly more than 7. One would certainly feel safer in Springfield, right?

Not so fast, because we still do not have all the facts. We have to make the comparison between the two based on equivalent standards. To do that, we have to look at the per capita rate (often given in rates per 100,000 people per year). If Springfield has 700 residents while Shelbyville has 3.3 million, then Springfield has a murder rate of 1,000 per 100,000 people, and Shelbyville’s rate is merely 1 per 100,000. Gadzooks! The residents of Springfield are dropping like flies. I think I’ll stick with nice, safe Shelbyville, thank you very much.
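The three ways of looking at the same two cities can be laid side by side with a short calculation. This is a sketch using only the hypothetical figures above; the helper names `percent_change` and `rate_per_100k` are made up for the illustration.

```python
def percent_change(old, new):
    """Percentage change from old to new."""
    return (new - old) * 100 / old


def rate_per_100k(count, population):
    """Number of events per 100,000 residents."""
    return count * 100_000 / population


cities = {
    # name: (murders last year, murders this year, residents)
    "Springfield": (4, 7, 700),
    "Shelbyville": (30, 33, 3_300_000),
}

for name, (last_year, this_year, residents) in cities.items():
    change = percent_change(last_year, this_year)
    rate = rate_per_100k(this_year, residents)
    print(f"{name}: {change:+.0f}% change, {this_year} murders, "
          f"{rate:,.0f} per 100,000 residents")
```

Each of the three figures ranks the cities differently, which is why a percentage should never travel without its base.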

Percentages are really no different from any other form of statistics: they gain their meaning only through their context. Consequently, percentages should be presented in context so that readers can draw their own conclusions as you emphasize facts important to your argument. Remember, if your statistics really do support your point, then you should have no fear of revealing the larger context that frames them.

Important questions to ask (and answer) about statistics

- Is the question being asked relevant?

- Do the data come from reliable sources?

- Margin of error/confidence interval—when is a change really a change?

- Are all data reported, or just the best/worst?

- Are the data presented in context?

- Have the data been interpreted correctly?

- Does the author confuse correlation with causation?

Now that you have learned the lessons of statistics, you have two options. Use this knowledge to manipulate your numbers to your advantage, or use this knowledge to better understand and use statistics to make accurate and fair arguments. The choice is yours. Nine out of ten writers, however, prefer the latter, and the other one later regrets his or her decision.

You may reproduce it for non-commercial use if you use the entire handout and attribute the source: The Writing Center, University of North Carolina at Chapel Hill


How To Write a Statistical Analysis Essay


Statistical analysis is a powerful tool used to draw meaningful insights from data. It can be applied to almost any field and has been used in everything from natural sciences, economics, and sociology to sports analytics and business decisions. Writing a statistical analysis essay requires an understanding of the concepts behind it as well as proficiency with data manipulation techniques.

In this guide, we’ll look at the steps involved in writing a statistical analysis essay.

Overview of statistical analysis essays

A statistical analysis essay is an academic paper that involves analyzing quantitative data and interpreting the results. It is often used in social sciences, economics and business to draw meaningful conclusions from the data. The objective of a statistical analysis essay is to analyze a specific dataset or multiple datasets in order to answer a question or prove or disprove a hypothesis. To achieve this effectively, the information must be analyzed using appropriate statistical techniques such as descriptive statistics, inferential statistics, regression analysis and correlation analysis.

Researching the subject matter

Before writing your statistical analysis essay it is important to research the subject matter thoroughly so that you have an understanding of what you are dealing with. This can include collecting and organizing any relevant data sets as well as researching different types of statistical techniques available for analyzing them. Furthermore, it is important to become familiar with the terminology associated with statistical analysis such as mean, median and mode.

Structuring your statistical analysis essay

The structure of your essay will depend on the type of data you are analyzing and the research question or hypothesis that you are attempting to answer. Generally speaking, it should include an introduction which introduces the research question or hypothesis; a body section which includes an overview of relevant literature; a description of how the data was collected and analyzed and any conclusions drawn from it; and finally a conclusion which summarizes all findings.

Analyzing data and drawing conclusions from it

After collecting and organizing your data, you must analyze it in order to draw meaningful conclusions from it. This involves using appropriate statistical techniques such as descriptive statistics, inferential statistics, regression analysis and correlation analysis. It is important to understand the assumptions made when using each technique in order to analyze the data correctly and draw accurate conclusions from it. When choosing a statistical technique for your research, it is important to consult with an expert who can guide you on the most appropriate approach for your study.

Interpreting results and writing a conclusion

Once you have analyzed the data successfully, you must interpret the results carefully in order to answer your research question or prove/disprove your hypothesis. This involves making sure that any conclusions drawn are soundly based on the evidence presented. After interpreting the results, you should write a conclusion which summarizes all of your findings.

Using sources in your analysis

In order to back up your claims and provide support for your arguments, it is important to use credible sources within your analysis. This could include peer-reviewed articles, journals and books which can provide evidence to support your conclusion. It is also important to cite all sources used in order to avoid plagiarism.

Proofreading and finalizing your work

Once you have written your essay it is important to proofread it carefully before submitting it. This involves checking for grammar, spelling and punctuation errors as well as ensuring that the flow of the essay makes sense. Additionally, make sure that any references cited are correct and up-to-date.

Tips for writing a successful statistical analysis essay

Here are some tips for writing a successful statistical analysis essay:

- Research your subject matter thoroughly before writing your essay.

- Structure your paper according to the type of data you are analyzing.

- Analyze your data using appropriate statistical techniques.

- Interpret and draw meaningful conclusions from your results.

- Use credible sources to back up any claims or arguments made.

- Proofread and finalize your work before submitting it.

These tips will help ensure that your essay is well researched, structured correctly and contains accurate information. Following these tips will help you write a successful statistical analysis essay which can be used to answer research questions or prove/disprove hypotheses.


Copyright © 2022 Statistics and Data

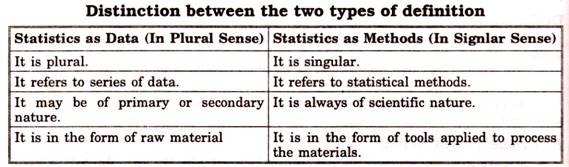

Understanding and Using Statistical Methods

Statistics is a set of tools used to organize and analyze data. Data must either be numeric in origin or transformed by researchers into numbers. For instance, statistics could be used to analyze percentage scores English students receive on a grammar test: the percentage scores ranging from 0 to 100 are already in numeric form. Statistics could also be used to analyze grades on an essay by assigning numeric values to the letter grades, e.g., A=4, B=3, C=2, D=1, and F=0.
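The letter-grade conversion described above might look like this in Python. The point values come from the text; the list of essay grades is hypothetical, invented only to have something to convert.

```python
from statistics import mean

# Point values from the text: A=4, B=3, C=2, D=1, F=0.
GRADE_POINTS = {"A": 4, "B": 3, "C": 2, "D": 1, "F": 0}

# Hypothetical essay grades, invented for illustration only.
essay_grades = ["A", "B", "B", "C", "F"]

# Transform each letter into its numeric value so it can be analyzed.
points = [GRADE_POINTS[grade] for grade in essay_grades]

print(points)        # [4, 3, 3, 2, 0]
print(mean(points))  # 2.4
```

Note that averaging these codes quietly assumes the step from an A to a B is the same size as the step from a D to an F, which is a choice the researcher makes rather than a property of the grades.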

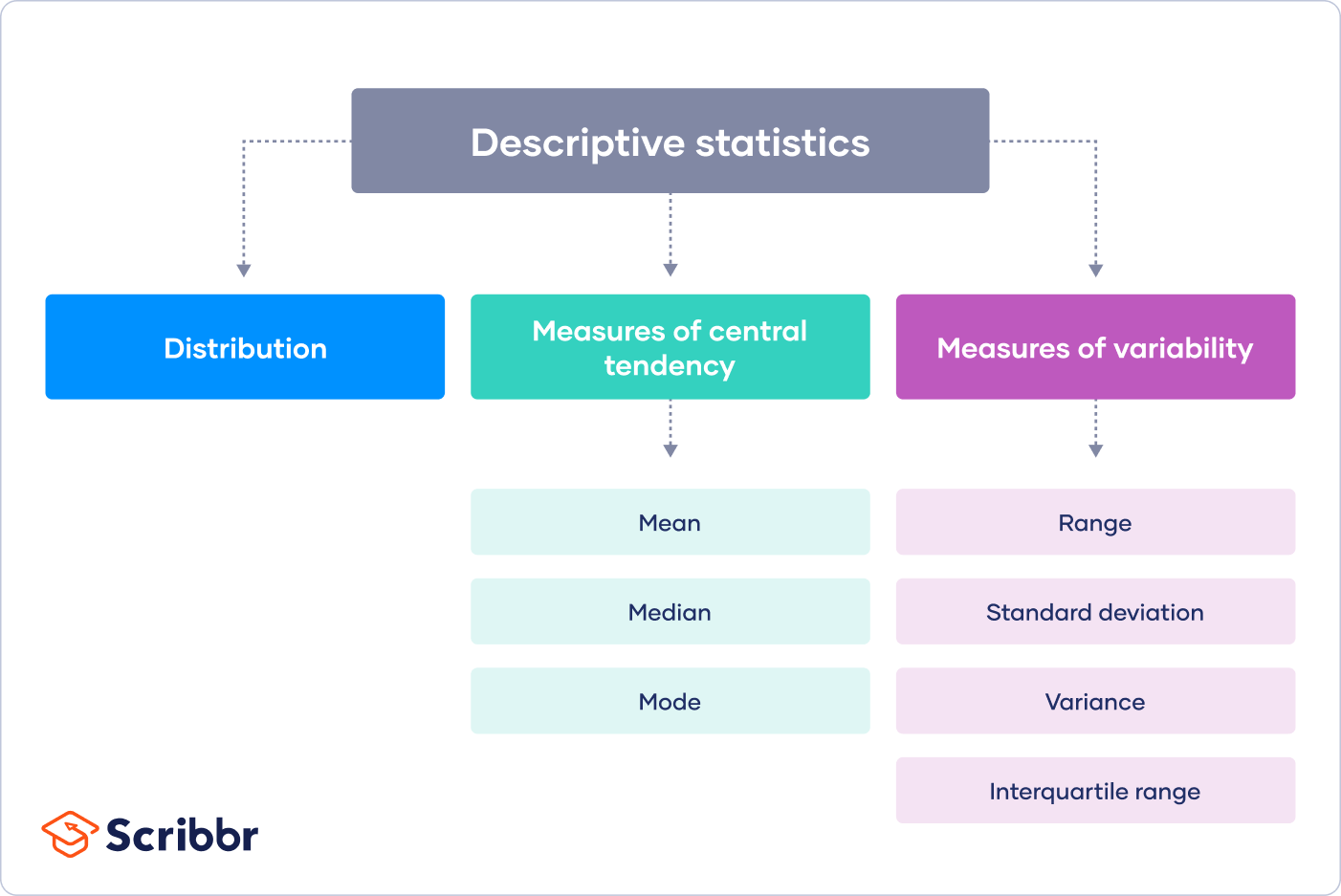

Employing statistics serves two purposes: (1) description and (2) prediction. Statistics are used to describe the characteristics of groups. These characteristics are referred to as variables. Data is gathered and recorded for each variable. Descriptive statistics can then be used to reveal the distribution of the data in each variable.

Statistics is also frequently used for purposes of prediction. Prediction is based on the concept of generalizability: if enough data is compiled about a particular context (e.g., students studying writing in a specific set of classrooms), the patterns revealed through analysis of the data collected about that context can be generalized (or predicted to occur in) similar contexts. The prediction of what will happen in a similar context is probabilistic. That is, the researcher is not certain that the same things will happen in other contexts; instead, the researcher can only reasonably expect that the same things will happen.

Prediction is a method employed by individuals throughout daily life. For instance, if writing students begin class every day for the first half of the semester with a five-minute freewriting exercise, then they will likely come to class the first day of the second half of the semester prepared to again freewrite for the first five minutes of class. The students will have made a prediction about the class content based on their previous experiences in the class: Because they began all previous class sessions with freewriting, it would be probable that their next class session will begin the same way. Statistics is used to perform the same function; the difference is that precise probabilities are determined in terms of the percentage chance that an outcome will occur, complete with a range of error. Prediction is a primary goal of inferential statistics.

Revealing Patterns Using Descriptive Statistics

Descriptive statistics, not surprisingly, "describe" data that have been collected. Commonly used descriptive statistics include frequency counts, ranges (high and low scores or values), means, modes, median scores, and standard deviations. Two concepts are essential to understanding descriptive statistics: variables and distributions.

Statistics are used to explore numerical data (Levin, 1991). Numerical data are observations which are recorded in the form of numbers (Runyon, 1976). Numbers are variable in nature, which means that quantities vary according to certain factors. For example, when analyzing the grades on student essays, scores will vary for reasons such as the writing ability of the student, the student's knowledge of the subject, and so on. In statistics, these reasons are called variables. Variables are divided into three basic categories:

Nominal Variables

Nominal variables classify data into categories. This process involves labeling categories and then counting frequencies of occurrence (Runyon, 1991). A researcher might wish to compare essay grades between male and female students. Tabulations would be compiled using the categories "male" and "female." Sex would be a nominal variable. Note that the categories themselves are not quantified. Maleness and femaleness are not numerical in nature; rather, the frequency of each category yields quantified data, for example 11 males and 9 females.

Ordinal Variables

Ordinal variables order (or rank) data in terms of degree. Ordinal variables do not establish the numeric difference between data points. They indicate only that one data point is ranked higher or lower than another (Runyon, 1991). For instance, a researcher might want to analyze the letter grades given on student essays. An A would be ranked higher than a B, and a B higher than a C. However, the difference between these data points, the precise distance between an A and a B, is not defined. Letter grades are an example of an ordinal variable.

Interval Variables

Interval variables score data. Thus the order of data is known as well as the precise numeric distance between data points (Runyon, 1991). A researcher might analyze the actual percentage scores of the essays, assuming that percentage scores are given by the instructor. A score of 98 (A) ranks higher than a score of 87 (B), which ranks higher than a score of 72 (C). Not only is the order of these three data points known, but so is the exact distance between them -- 11 percentage points between the first two, 15 percentage points between the second two and 26 percentage points between the first and last data points.
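The difference between the three kinds of variables shows up in what you can legitimately compute with each. The sketch below uses three hypothetical essay records, invented for illustration; only the percentage scores (98, 87, 72) are taken from the example above.

```python
from collections import Counter

# Hypothetical records, invented for illustration; the three percentage
# scores (98, 87, 72) are the ones used in the text.
essays = [
    {"sex": "female", "letter": "A", "percent": 98},
    {"sex": "male", "letter": "B", "percent": 87},
    {"sex": "female", "letter": "C", "percent": 72},
]

# Nominal: categories can only be counted.
counts = Counter(essay["sex"] for essay in essays)

# Ordinal: categories can be ranked, but the gaps between ranks are undefined.
LETTER_ORDER = ["F", "D", "C", "B", "A"]  # lowest to highest
ranked = sorted(essays, key=lambda e: LETTER_ORDER.index(e["letter"]), reverse=True)
ranked_letters = [essay["letter"] for essay in ranked]

# Interval: the distance between any two scores is meaningful.
scores = [essay["percent"] for essay in essays]
gaps = [scores[0] - scores[1], scores[1] - scores[2], scores[0] - scores[2]]

print(dict(counts))    # {'female': 2, 'male': 1}
print(ranked_letters)  # ['A', 'B', 'C']
print(gaps)            # [11, 15, 26]
```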

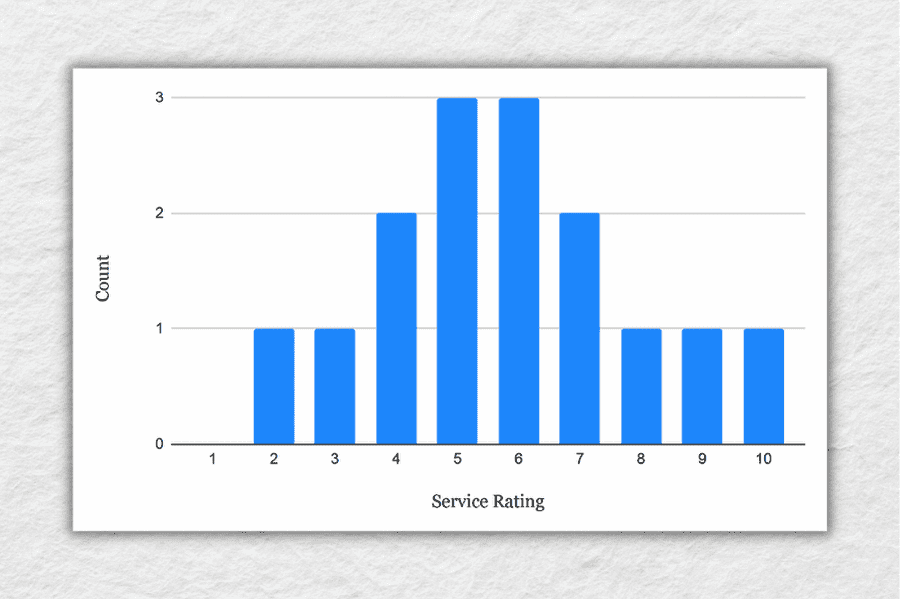

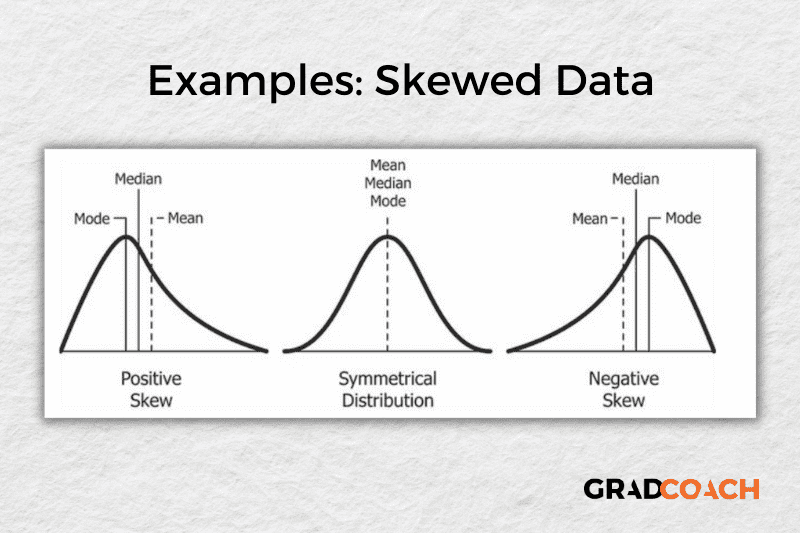

Distributions

A distribution is a graphic representation of data. The line formed by connecting data points is called a frequency distribution. This line may take many shapes. The single most important shape is that of the bell-shaped curve, which characterizes the distribution as "normal." A perfectly normal distribution is only a theoretical ideal. This ideal, however, is an essential ingredient in statistical decision-making (Levin, 1991). A perfectly normal distribution is a mathematical construct which carries with it certain mathematical properties helpful in describing the attributes of the distribution. Although a frequency distribution based on actual data points seldom, if ever, completely matches a perfectly normal distribution, it can often approach such a normal curve.

The more closely a frequency distribution resembles a normal curve, the more probable it is that the distribution shares the mathematical properties of the normal curve. This is an important factor in describing the characteristics of a frequency distribution. As a frequency distribution approaches a normal curve, generalizations about the data set from which the distribution was derived can be made with greater certainty. And it is this notion of generalizability upon which statistics is founded. It is important to remember that not all frequency distributions approach a normal curve. Some are skewed. When a frequency distribution is skewed, the characteristics inherent to a normal curve no longer apply.
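The text does not say which mathematical properties it has in mind. One well-known example, offered here as an illustration rather than as the authors' own choice, is that a fixed share of a normal distribution lies within a given number of standard deviations of the mean. The sketch uses `NormalDist` from Python's standard `statistics` module; the helper name `share_within` is invented for the example.

```python
from statistics import NormalDist

# A standard normal curve: mean 0, standard deviation 1.
standard_normal = NormalDist(mu=0, sigma=1)


def share_within(k):
    """Fraction of a normal distribution within k standard deviations of the mean."""
    return standard_normal.cdf(k) - standard_normal.cdf(-k)


for k in (1, 2, 3):
    print(f"within {k} standard deviation(s): {share_within(k):.1%}")
```

The results are roughly 68%, 95%, and 99.7%. A skewed frequency distribution will not follow these proportions, which is one reason conclusions that lean on the normal curve do not carry over to it.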

Making Predictions Using Inferential Statistics

Inferential statistics are used to draw conclusions and make predictions based on the descriptions of data. In this section, we explore inferential statistics by using an extended example of experimental studies. Key concepts used in our discussion are probability, populations, and sampling.

Experiments

A typical experimental study involves collecting data on the behaviors, attitudes, or actions of two or more groups and attempting to answer a research question (often called a hypothesis). Based on the analysis of the data, a researcher might then attempt to develop a causal model that can be generalized to populations.

A question that might be addressed through experimental research might be "Does grammar-based writing instruction produce better writers than process-based writing instruction?" Because it would be impossible and impractical to observe, interview, survey, etc. all first-year writing students and instructors in classes using one or the other of these instructional approaches, a researcher would study a sample – or a subset – of a population. Sampling – or the creation of this subset of a population – is used by many researchers who desire to make sense of some phenomenon.

To analyze differences in the ability of student writers who are taught in each type of classroom, the researcher would compare the writing performance of the two groups of students.

Dependent Variables

In an experimental study, a variable whose score depends on (or is determined or caused by) another variable is called a dependent variable. For instance, an experiment might explore the extent to which the writing quality of final drafts of student papers is affected by the kind of instruction they received. In this case, the dependent variable would be writing quality of final drafts.

Independent Variables

In an experimental study, a variable that determines (or causes) the score of a dependent variable is called an independent variable. For instance, an experiment might explore the extent to which the writing quality of final drafts of student papers is affected by the kind of instruction they received. In this case, the independent variable would be the kind of instruction students received.

Probability

Beginning researchers most often use the word probability to express a subjective judgment about the likelihood, or degree of certainty, that a particular event will occur. People say such things as: "It will probably rain tomorrow." "It is unlikely that we will win the ball game." It is possible to assign a number to the event being predicted, a number between 0 and 1, which represents degree of confidence that the event will occur. For example, a student might say that the likelihood an instructor will give an exam next week is about 90 percent, or .9. Where 100 percent, or 1.00, represents certainty, .9 would mean the student is almost certain the instructor will give an exam. If the student assigned the number .6, the likelihood of an exam would be just slightly greater than the likelihood of no exam. A rating of 0 would indicate complete certainty that no exam would be given (Schoeninger, 1971).

The probability of a particular outcome or set of outcomes is called a p-value. In our discussion, a p-value will be symbolized by a p followed by parentheses enclosing a symbol of the outcome or set of outcomes. For example, p(X) should be read, "the probability of a given X score" (Schoeninger). Thus p(exam) should be read, "the probability an instructor will give an exam next week."

A population is a group which is studied. In educational research, the population is usually a group of people. Researchers seldom are able to study every member of a population. Usually, they instead study a representative sample – or subset – of a population. Researchers then generalize their findings about the sample to the population as a whole.

Sampling is performed so that a population under study can be reduced to a manageable size. This can be accomplished via random sampling, discussed below, or via matching.

Random sampling is a procedure used by researchers in which all samples of a particular size have an equal chance to be chosen for an observation, experiment, etc. (Runyon and Haber, 1976). There is no predetermination as to which members are chosen for the sample. This type of sampling is done in order to minimize scientific biases, and it offers the greatest likelihood that a sample will indeed be representative of the larger population. Note that the more closely a sample distribution approximates the population distribution, the more generalizable the results of the sample study are to the population. Notions of probability apply here: random sampling provides the greatest probability that the distribution of scores in a sample will closely approximate the distribution of scores in the overall population.
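As a rough illustration of the idea, the following Python sketch draws a simple random sample from a made-up population. The population of 500 student ID numbers, the sample size of 30, and the seed are all assumptions chosen for the example, not figures from any study discussed here.

```python
import random

# Hypothetical population: ID numbers for 500 first-year writing students.
population = list(range(1, 501))

# A fixed seed makes the draw repeatable; leave it out for a fresh sample each run.
rng = random.Random(42)

# Draw 30 students without replacement. Every subset of 30 IDs is equally
# likely, and nothing about a student influences whether he or she is chosen.
sample = rng.sample(population, k=30)

print(sorted(sample))
```

Because the draw is made without replacement, no student can appear in the sample twice.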

Matching is a method used by researchers to gain accurate and precise results of a study so that they may be applicable to a larger population. After a population has been examined and a sample has been chosen, a researcher must then consider variables, or extrinsic factors, that might affect the study. Matching methods apply when researchers are aware of extrinsic variables before conducting a study. Two methods used to match groups are:

Precision Matching

In precision matching, each member of an experimental group is matched one-to-one with a member of a control group, so that both groups, in essence, have the same characteristics. Because the groups are alike, the proposed causal relationship/model being examined allows for the probabilistic assumption that the result is generalizable.

Frequency Distribution

Frequency distribution is more manageable and efficient than precision matching. Instead of the one-to-one matching that must be administered in precision matching, frequency distribution allows the comparison of an experimental and control group through relevant variables. If three Communications majors and four English majors are chosen for the control group, then an equal proportion of three Communications majors and four English majors should be allotted to the experimental group. Of course, beyond their majors, the characteristics of the matched sets of participants may in fact be vastly different.

Although, in theory, matching tends to produce valid conclusions, a rather obvious difficulty arises in finding subjects who are compatible. Researchers may even believe that experimental and control groups are identical when, in fact, a number of variables have been overlooked. For these reasons, researchers tend to reject matching methods in favor of random sampling.

Statistics can be used to analyze individual variables, relationships among variables, and differences between groups. In this section, we explore a range of statistical methods for conducting these analyses.

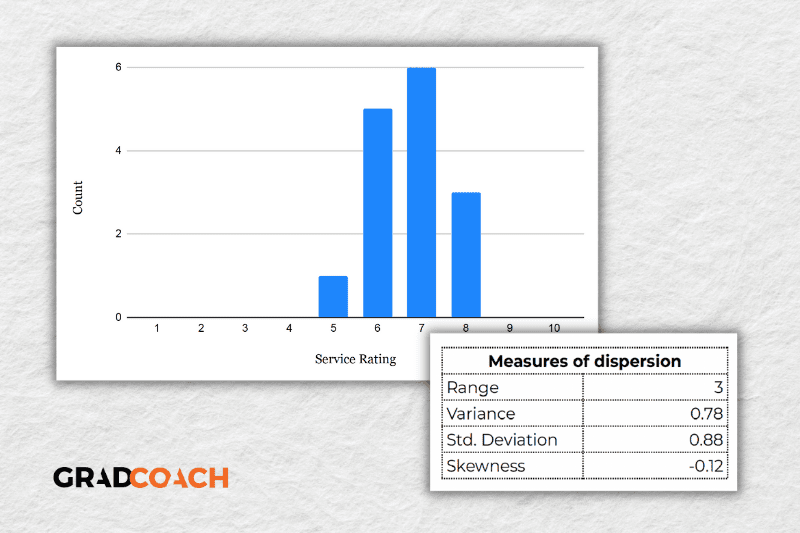

Analyzing Individual Variables

The statistical procedures used to analyze a single variable describing a group (such as a population or representative sample) involve measures of central tendency and measures of variation. To explore these measures, a researcher first needs to consider the distribution, or range of values, of a particular variable in a population or sample. In a normal distribution, the scores are symmetrical around the center and most of them cluster toward the middle; when graphed, this type of distribution looks like a bell curve. A skewed distribution is simply one that is not normal. The scores might cluster toward the right or the left side of the curve, for instance. Or there might be two or more clusters of scores, so that the distribution looks like a series of hills.

Once frequency distributions have been determined, researchers can calculate measures of central tendency and measures of variation. Measures of central tendency indicate averages of the distribution, and measures of variation indicate the spread, or range, of the distribution (Hinkle, Wiersma and Jurs 1988).

Measures of Central Tendency

Central tendency is measured in three ways: mean, median and mode. The mean is simply the average score of a distribution. The median is the center, or middle score within a distribution. The mode is the most frequent score within a distribution. In a normal distribution, the mean, median and mode are identical.
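To make the three measures concrete, here is a minimal Python sketch using the standard library's statistics module. The seven scores are invented for illustration.

```python
import statistics

# Hypothetical exam scores (not from any real data set).
scores = [70, 75, 75, 80, 85, 90, 99]

mean = statistics.mean(scores)      # the arithmetic average of all scores
median = statistics.median(scores)  # the middle score once the list is sorted
mode = statistics.mode(scores)      # the score that occurs most often

print(mean, median, mode)
```

In this small set the three measures differ from one another, which is one sign that the scores are not normally distributed.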

Measures of Variation

Measures of variation determine the range of the distribution, relative to the measures of central tendency. Where the measures of central tendency are specific data points, measures of variation are lengths between various points within the distribution. Variation is measured in terms of range, mean deviation, variance, and standard deviation (Hinkle, Wiersma and Jurs 1988).

The range is the distance between the lowest data point and the highest data point. Deviation scores are the distances between each data point and the mean.

Mean deviation is the average of the absolute values of the deviation scores; that is, mean deviation is the average distance between the mean and the data points. Closely related to the measure of mean deviation is the measure of variance.

Variance also indicates a relationship between the mean of a distribution and the data points; it is determined by averaging the squared deviations, that is, by dividing the sum of the squared deviations by the number of data points. Squaring the differences instead of taking the absolute values allows for greater flexibility in further algebraic manipulations of the data. Another measure of variation is the standard deviation.

Standard deviation is the square root of the variance. This calculation is useful because it allows for the same flexibility as variance regarding further calculations and yet also expresses variation in the same units as the original measurements (Hinkle, Wiersma and Jurs 1988).
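The four measures of variation can be worked through by hand in a few lines of Python. This is only a sketch: the scores are invented, and the variance here divides by the number of data points, following the "averaging" definition given above. Many textbooks and software packages divide by one less than that number when the data are a sample rather than a whole population.

```python
import math

# Hypothetical exam scores (not from any real data set).
scores = [70, 75, 75, 80, 85, 90, 99]
n = len(scores)
mean = sum(scores) / n

# Range: distance between the lowest and highest data points.
data_range = max(scores) - min(scores)

# Deviation scores: distance of each data point from the mean.
deviations = [score - mean for score in scores]

# Mean deviation: average of the absolute deviation scores.
mean_deviation = sum(abs(d) for d in deviations) / n

# Variance: average of the squared deviation scores (divides by n).
variance = sum(d ** 2 for d in deviations) / n

# Standard deviation: square root of the variance, in the original units.
standard_deviation = math.sqrt(variance)

print(data_range, mean_deviation, variance, standard_deviation)
```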

Analyzing Differences Between Groups

Statistical tests can be used to analyze differences in the scores of two or more groups. The following statistical tests are commonly used to analyze differences between groups:

A t-test is used to determine if the scores of two groups differ on a single variable. A t-test is designed to test for the differences in mean scores. For instance, you could use a t-test to determine whether writing ability differs between students in two classrooms.

Note: A t-test is appropriate only when comparing two sets of scores. It is useful in analyzing scores of two groups of participants on a particular variable or in analyzing scores of a single group of participants on two variables.
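The comparison of two group means can be sketched as follows. This example computes Welch's form of the t statistic, which is one common variant and an assumption on our part, since the text does not say which form is meant. The classroom scores are invented. Turning the statistic into a probability requires a t distribution table or a statistics package, which is outside this sketch.

```python
import math
import statistics

def welch_t(group_a, group_b):
    """Return Welch's t statistic for the difference between two group means."""
    mean_a = statistics.mean(group_a)
    mean_b = statistics.mean(group_b)
    # statistics.variance is the sample variance (divides by n - 1).
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    standard_error = math.sqrt(var_a / len(group_a) + var_b / len(group_b))
    return (mean_a - mean_b) / standard_error

# Hypothetical writing scores from two classrooms.
classroom_a = [78, 85, 82, 90, 74, 88]
classroom_b = [72, 80, 69, 75, 77, 71]

t_statistic = welch_t(classroom_a, classroom_b)
print(t_statistic)
```

A t statistic far from zero suggests that the difference between the two means is large relative to the variation within the groups.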

Matched Pairs T-Test

This type of t-test could be used to determine if the scores of the same participants in a study differ under different conditions. For instance, this sort of t-test could be used to determine if people write better essays after taking a writing class than they did before taking the writing class.
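A matched pairs comparison works on the difference between each participant's two scores. The sketch below uses invented before-and-after essay scores for five writers; the function name is hypothetical. As with any t statistic, a table or statistics package is still needed to judge significance (here with n - 1 = 4 degrees of freedom).

```python
import math
import statistics

def paired_t(before, after):
    """Return the matched pairs t statistic for two scores per participant."""
    differences = [a - b for b, a in zip(before, after)]
    n = len(differences)
    mean_difference = statistics.mean(differences)
    # Sample standard deviation of the differences (divides by n - 1).
    sd_difference = statistics.stdev(differences)
    return mean_difference / (sd_difference / math.sqrt(n))

# Hypothetical essay scores for the same five people, before and after a class.
before_class = [70, 65, 80, 75, 60]
after_class = [74, 70, 82, 81, 63]

t_statistic = paired_t(before_class, after_class)
print(t_statistic)
```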

Analysis of Variance (ANOVA)

The ANOVA (analysis of variance) is a statistical test which makes a single, overall decision as to whether a significant difference is present among three or more sample means (Levin 484). An ANOVA is similar to a t-test. However, the ANOVA can also test multiple groups to see if they differ on one or more variables. The ANOVA can be used to test between-groups and within-groups differences. There are two types of ANOVAs:

One-Way ANOVA: This tests a group or groups to determine if there are differences on a single set of scores. For instance, a one-way ANOVA could determine whether freshmen, sophomores, juniors, and seniors differed in their reading ability.

Two-Way ANOVA: This tests whether scores on a single variable differ across two grouping variables. For instance, a two-way ANOVA could determine whether freshmen, sophomores, juniors, and seniors differed in reading ability and whether those differences were reflected by gender. In this case, a researcher could determine (1) whether reading ability differed across class levels, (2) whether reading ability differed across gender, and (3) whether there was an interaction between class level and gender. (The related multivariate analysis of variance, or MANOVA, instead tests whether groups differ on two or more outcome variables at once.)
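The one-way case described above can be sketched by hand. The F statistic compares the variation between group means with the variation inside the groups. The reading scores and the function name below are invented for illustration, and judging significance still requires an F table or a statistics package.

```python
def one_way_anova_f(groups):
    """Return the F statistic for a one-way ANOVA over a list of score lists."""
    all_scores = [score for group in groups for score in group]
    grand_mean = sum(all_scores) / len(all_scores)
    group_means = [sum(group) / len(group) for group in groups]

    # Between-groups sum of squares: how far each group mean is from the grand mean.
    ss_between = sum(len(group) * (mean - grand_mean) ** 2
                     for group, mean in zip(groups, group_means))
    # Within-groups sum of squares: how far each score is from its own group mean.
    ss_within = sum((score - mean) ** 2
                    for group, mean in zip(groups, group_means)
                    for score in group)

    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

# Hypothetical reading scores for four class levels.
reading_scores = [
    [61, 62, 63],  # freshmen
    [62, 63, 64],  # sophomores
    [65, 66, 67],  # juniors
    [66, 67, 68],  # seniors
]

f_statistic = one_way_anova_f(reading_scores)
print(f_statistic)
```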

Analyzing Relationships Among Variables

Statistical relationships between variables rely on notions of correlation and regression. These two concepts aim to describe the ways in which variables relate to one another:

Correlation

Correlation tests are used to determine how strongly the scores of two variables are associated or correlated with each other. A researcher might want to know, for instance, whether a correlation exists between students' writing placement examination scores and their scores on a standardized test such as the ACT or SAT. Correlation is measured using values between +1.0 and -1.0. Correlations close to 0 indicate little or no relationship between two variables, while correlations close to +1.0 (or -1.0) indicate strong positive (or negative) relationships (Hayes et al. 554).

Correlation denotes positive or negative association between variables in a study. Two variables are positively associated when larger values of one tend to be accompanied by larger values of the other. The variables are negatively associated when larger values of one tend to be accompanied by smaller values of the other (Moore 208).

An example of a strong positive correlation would be the correlation between age and job experience. Typically, the longer people are alive, the more job experience they might have.

An example of a strong negative relationship might occur between the strength of people's party affiliations and their willingness to vote for candidates from other parties. In many elections, Democrats are unlikely to vote for Republicans, and vice versa.
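A correlation coefficient of this kind can be computed directly. The sketch below calculates Pearson's r, the most common correlation coefficient, which is an assumption here because the text does not name a specific one. The ages and years of job experience are invented.

```python
import math

def pearson_r(xs, ys):
    """Return Pearson's correlation coefficient, a value between -1.0 and +1.0."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How the two variables vary together, and how each varies on its own.
    co_variation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return co_variation / math.sqrt(ss_x * ss_y)

# Hypothetical data: age and years of job experience for five people.
ages = [25, 30, 35, 40, 45]
experience = [2, 6, 11, 15, 22]

r = pearson_r(ages, experience)
print(r)
```

With these figures r comes out close to +1.0, a strong positive relationship. Feeding in two lists where one rises as the other falls would give a value close to -1.0.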

Regression analysis attempts to determine the best "fit" between two or more variables. The independent variable in a regression analysis is a continuous variable, and thus allows you to determine how one or more independent variables predict the values of a dependent variable.

Simple Linear Regression is the simplest form of regression. Like a correlation, it determines the extent to which one independent variable predicts a dependent variable. You can think of a simple linear regression as a correlation line. Regression analysis provides you with more information than correlation does, however. It tells you how well the line "fits" the data. That is, it tells you how closely the line comes to all of your data points. The line in the figure indicates the regression line drawn to find the best fit among a set of data points. Each dot represents a person and the axes indicate the amount of job experience and the age of that person. The dotted lines indicate the distance from the regression line. A smaller total distance indicates a better fit. Some of the information provided in a regression analysis, as a result, indicates the slope of the regression line, the R value (or correlation), and the strength of the fit (an indication of the extent to which the line can account for variations among the data points).
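As an illustration, the following sketch fits a regression line by ordinary least squares, the usual method for simple linear regression (assumed here, since the text does not name one). It reports the slope, the intercept, and R squared as the strength of the fit. The data and the function name are invented.

```python
def fit_line(xs, ys):
    """Fit y = intercept + slope * x by least squares; also return R squared."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    co_variation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))

    slope = co_variation / ss_x
    intercept = mean_y - slope * mean_x

    # Squared distances from each point to the line, versus distances to the mean.
    residual_ss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    total_ss = sum((y - mean_y) ** 2 for y in ys)
    r_squared = 1 - residual_ss / total_ss
    return slope, intercept, r_squared

# Hypothetical data: age (independent) and years of job experience (dependent).
ages = [25, 30, 35, 40, 45]
experience = [2, 6, 11, 15, 22]

slope, intercept, r_squared = fit_line(ages, experience)
print(slope, intercept, r_squared)
```

An R squared value near 1 means the line accounts for nearly all of the variation among the data points; a value near 0 means it accounts for very little.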

Multiple Linear Regression allows one to determine how well multiple independent variables predict the value of a dependent variable. A researcher might examine, for instance, how well age and experience predict a person's salary. The interesting thing here is that one would no longer be dealing with a regression "line." Instead, since the study deals with three dimensions (age, experience, and salary), one would be dealing with a plane, that is, with a two-dimensional figure. If a fourth variable were added to the equation, one would be dealing with a three-dimensional figure, and so on.

Misuses of Statistics

Statistics consists of tests used to analyze data. These tests provide an analytic framework within which researchers can pursue their research questions. This framework provides one way of working with observable information. Like other analytic frameworks, statistical tests can be misused, resulting in potential misinterpretation and misrepresentation. Researchers decide which research questions to ask, which groups to study, how those groups should be divided, which variables to focus upon, and how best to categorize and measure such variables. The point is that researchers retain the ability to manipulate any study even as they decide what to study and how to study it.

Potential Misuses:

- Manipulating scale to change the appearance of the distribution of data

- Eliminating high/low scores for more coherent presentation

- Inappropriately focusing on certain variables to the exclusion of other variables

- Presenting correlation as causation

Measures Against Potential Misuses:

- Testing for reliability and validity

- Testing for statistical significance

- Critically reading statistics

Annotated Bibliography

Dear, K. (1997, August 28). SurfStat australia. Available: http://surfstat.newcastle.edu.au/surfstat/main/surfstat-main.html

A comprehensive site containing an online textbook, links to other statistics sites, exercises, and a hotlist of Java applets.

de Leeuw, J. (1997, May 13). Statistics: The study of stability in variation. Available: http://www.stat.ucla.edu/textbook/ [1997, December 8].

An online textbook providing discussions specifically regarding variability.

Ewen, R.B. (1988). The workbook for introductory statistics for the behavioral sciences. Orlando, FL: Harcourt Brace Jovanovich.

A workbook providing sample problems typical of the statistical applications in social sciences.

Glass, G. (1996, August 26). COE 502: Introduction to quantitative methods. Available: http://seamonkey.ed.asu.edu/~gene/502/home.html

Outline of a basic statistics course in the college of education at Arizona State University, including a list of statistic resources on the Internet and access to online programs using forms and PERL to analyze data.

Hartwig, F., Dearing, B.E. (1979). Exploratory data analysis. Newbury Park, CA: Sage Publications, Inc.

Hayes, J. R., Young, R.E., Matchett, M.L., McCaffrey, M., Cochran, C., and Hajduk, T., eds. (1992). Reading empirical research studies: The rhetoric of research. Hillsdale, NJ: Lawrence Erlbaum Associates.

A text focusing on the language of research. Topics vary from "Communicating with Low-Literate Adults" to "Reporting on Journalists."

Hinkle, Dennis E., Wiersma, W. and Jurs, S.G. (1988). Applied statistics for the behavioral sciences. Boston: Houghton.

This is an introductory textbook on statistics. Each of 22 chapters includes a summary, sample exercises and highlighted main points. The book also includes an index by subject.

Kleinbaum, David G., Kupper, L.L. and Muller, K.E. Applied regression analysis and other multivariable methods, 2nd ed. Boston: PWS-KENT Publishing Company.

An introductory text with emphasis on statistical analyses. Chapters contain exercises.

Kolstoe, R.H. (1969). Introduction to statistics for the behavioral sciences. Homewood, IL: Dorsey.

Though more than 25 years old, this textbook uses concise chapters to explain many essential statistical concepts. Information is organized in a simple and straightforward manner.

Levin, J., and James, A.F. (1991). Elementary statistics in social research, 5th ed. New York: HarperCollins.

This textbook presents statistics in three major sections: Description, From Description to Decision Making and Decision Making. The first chapter outlines the reasons for using statistics in social research. Subsequent chapters detail the process of conducting and presenting statistics.

Liebetrau, A.M. (1983). Measures of association. Newbury Park, CA: Sage Publications, Inc.

Mendenhall, W. (1975). Introduction to probability and statistics, 4th ed. North Scituate, MA: Duxbury Press.

An introductory textbook. A good overview of statistics. Includes clear definitions and exercises.

Moore, David S. (1979). Statistics: Concepts and controversies, 2nd ed. New York: W. H. Freeman and Company.

Introductory text. Basic overview of statistical concepts. Includes discussions of concrete applications such as opinion polls and Consumer Price Index.

Mosier, C.T. (1997). MG284 Statistics I - notes. Available: http://phoenix.som.clarkson.edu/~cmosier/statistics/main/outline/index.html

Explanations of fundamental statistical concepts.

Newton, H.J., Carrol, J.H., Wang, N., & Whiting, D. (1996, Fall). Statistics 30X class notes. Available: http://stat.tamu.edu/stat30x/trydouble2.html [1997, December 10].

This site contains a hyperlinked list of very comprehensive course notes from an introductory statistics class. A large variety of statistical concepts are covered.

Runyon, R.P., and Haber, A. (1976). Fundamentals of behavioral statistics, 3rd ed. Reading, MA: Addison-Wesley Publishing Company.

This is a textbook that divides statistics into categories of descriptive statistics and inferential statistics. It presents statistical procedures primarily through examples. This book includes sectional reviews, reviews of basic mathematics and also a glossary of symbols common to statistics.

Schoeninger, D.W. and Insko, C.A. (1971). Introductory statistics for the behavioral sciences. Boston: Allyn and Bacon, Inc.

An introductory text including discussions of correlation, probability, distribution, and variance. Includes statistical tables in the appendices.

Stevens, J. (1986). Applied multivariate statistics for the social sciences. Hillsdale, NJ: Lawrence Erlbaum Associates.

Stockberger, D. W. (1996). Introductory statistics: Concepts, models and applications. Available: http://www.psychstat.smsu.edu/ [1997, December 8].

Describes various statistical analyses. Includes statistical tables in the appendix.

Local Resources

If you are a member of the Colorado State University community and seek more in-depth help with analyzing data from your research (e.g., from an undergraduate or graduate research project), please contact CSU's Graybill Statistical Laboratory for statistical consulting assistance at http://www.stat.colostate.edu/statlab.html.

Jackson, Shawna, Karen Marcus, Cara McDonald, Timothy Wehner, & Mike Palmquist. (2005). Statistics: An Introduction. Writing@CSU. Colorado State University. https://writing.colostate.edu/guides/guide.cfm?guideid=67

Writing with Descriptive Statistics

This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

Usually there is no good way to write a statistic. It rarely sounds good, and often interrupts the structure or flow of your writing. Oftentimes the best way to write descriptive statistics is to be direct. If you are citing several statistics about the same topic, it may be best to include them all in the same paragraph or section.

The mean of exam two is 77.7. The median is 75, and the mode is 79. Exam two had a standard deviation of 11.6.

Overall the company had another excellent year. We shipped 14.3 tons of fertilizer for the year, and averaged 1.7 tons of fertilizer during the summer months. This is an increase over last year, when we shipped only 13.1 tons of fertilizer, and averaged only 1.4 tons during the summer months. (Standard deviations were as follows: this summer .3 tons, last summer .4 tons).

Some fields prefer to put means and standard deviations in parentheses, like this: Exam two (M = 77.7, SD = 11.6) had a median of 75 and a mode of 79.

If you have lots of statistics to report, you should strongly consider presenting them in tables or some other visual form. You would then highlight statistics of interest in your text, but would not report all of the statistics. See the section on statistics and visuals for more details.

If you have a data set that you are using (such as all the scores from an exam) it would be unusual to include all of the scores in a paper or article. One of the reasons to use statistics is to condense large amounts of information into more manageable chunks; presenting your entire data set defeats this purpose.

At the bare minimum, if you are presenting statistics on a data set, you should include the mean and probably the standard deviation. This is the minimum information needed to get an idea of what the distribution of your data set might look like. How much additional information you include is entirely up to you. In general, don't include information if it is irrelevant to your argument or purpose. If you include statistics that many of your readers would not understand, consider adding a footnote or appendix that explains them in more detail.
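If you are working from raw scores, the minimum summary described above can be produced in a few lines of Python. This is only a sketch: the function name and the three scores are invented, and the standard deviation here is the sample form (dividing by one less than the number of scores), which is an assumption about your data.

```python
import statistics

def describe(scores):
    """Return the mean and sample standard deviation as '(M = ..., SD = ...)'."""
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # sample standard deviation (divides by n - 1)
    return f"(M = {mean:.1f}, SD = {sd:.1f})"

# Hypothetical scores, kept short so the arithmetic is easy to check by hand.
exam_scores = [70, 80, 90]

summary = describe(exam_scores)
print(summary)
```

Rounding to one decimal place keeps the report readable; use whatever precision your field expects.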

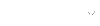

How to present data and statistics in your paper

In most academic fields, presenting stats and data is key. Words like 'values', 'equations', 'numbers', and 'tests' are common in theses and papers. But how do you use these words, and what other words do they usually combine with? In this analysis, we explore what phrases authors use most often when they present data and statistics.

Our analysis

We built a data set of 300 million sentences from published papers. From these sentences, we extracted all three-word combinations following the pattern subject + verb + object (for example, 'data shows difference').

We then collected the 100 most frequent combinations and their frequency, and visualized these (see image below). The 3 most-used triples were 'equation have solution', 'data provide evidence', and 'test show difference'.

Note that all phrases are lemmatized: they reflect the total counts of all forms. For example, the phrase 'test show difference' includes 'tests showing differences', 'tests showed differences', and others. The combined words were also not necessarily adjacent in the original sentence; for instance, an occurrence of 'test show difference' might have been 'test A showed a small difference' in the original paper.
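The counting step can be pictured with a toy Python sketch. Everything here is an assumption made for illustration: the small lemma table stands in for a real lemmatizer, the triples are invented rather than extracted from papers, and this is not the code used in the analysis.

```python
from collections import Counter

# Toy lemma table (hypothetical); a real pipeline would use a proper lemmatizer.
LEMMAS = {
    "tests": "test",
    "shows": "show",
    "showed": "show",
    "showing": "show",
    "differences": "difference",
}

def lemmatize(word):
    """Map a word to its base form, falling back to the lowercased word."""
    lowered = word.lower()
    return LEMMAS.get(lowered, lowered)

# Invented (subject, verb, object) triples, as if already pulled from sentences.
raw_triples = [
    ("tests", "showed", "differences"),
    ("test", "shows", "difference"),
    ("data", "provide", "evidence"),
    ("Tests", "showing", "differences"),
]

# Count triples after lemmatizing, so all word forms are pooled together.
counts = Counter(tuple(lemmatize(word) for word in triple) for triple in raw_triples)

print(counts.most_common(2))
```

Three differently worded triples collapse into the single phrase 'test show difference', which is why the reported frequencies reflect all forms of each word.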

The image below shows the most frequently used word combinations. The subject is shown in bold, the verb in regular script, and the object in italics. The figure uses hierarchical clustering, with the phrases first being grouped by subject and then by verb.

Not surprisingly, ‘data’ is the most frequent subject. It is often combined with the verbs 'provide', 'show', and 'support'. For example, data 'provide' 'evidence', 'information', or 'insights'; data 'show' 'differences', 'increases', and 'correlations'; and data 'support' 'hypotheses', 'notions', and 'ideas'. The subject 'test' is also frequent, and most often followed by 'reveal', 'indicate', or 'show' '(a) difference'.

Next time you’re writing your methods or results section and you’re stuck for words, see if this image helps you! It might give you the words you’re looking for.

About the author

Hilde is Chief Applied Linguist at Writefull.

Introductory essay

Written by the educators who created Visualizing Data, a brief look at the key facts, tough questions and big ideas in their field. Begin this TED Study with a fascinating read that gives context and clarity to the material.

The reality of today

"All of us now are being blasted by information design. It's being poured into our eyes through the Web, and we're all visualizers now; we're all demanding a visual aspect to our information... And if you're navigating a dense information jungle, coming across a beautiful graphic or a lovely data visualization, it's a relief, it's like coming across a clearing in the jungle." (David McCandless)

In today's complex 'information jungle,' David McCandless observes that "Data is the new soil." McCandless, a data journalist and information designer, celebrates data as a ubiquitous resource providing a fertile and creative medium from which new ideas and understanding can grow. McCandless's inspiration, statistician Hans Rosling, builds on this idea in his own TEDTalk with his compelling image of flowers growing out of data/soil. These 'flowers' represent the many insights that can be gleaned from effective visualization of data.

We're just learning how to till this soil and make sense of the mountains of data constantly being generated. As Gary King, Director of Harvard's Institute for Quantitative Social Science, says in the New York Times article "The Age of Big Data":

It's a revolution. We're really just getting under way. But the march of quantification, made possible by enormous new sources of data, will sweep through academia, business and government. There is no area that is going to be untouched.

How do we deal with all this data without getting information overload? How do we use data to gain real insight into the world? Finding ways to pull interesting information out of data can be very rewarding, both personally and professionally. The managing editor of Financial Times observed on CNN's Your Money: "The people who are able to in a sophisticated and practical way analyze that data are going to have terrific jobs." Those who learn how to present data in effective ways will be valuable in every field.

Many people, when they think of data, think of tables filled with numbers. But this long-held notion is eroding. Today, we're generating streams of data that are often too complex to be presented in a simple "table." In his TEDTalk, Blaise Aguera y Arcas explores images as data, while Deb Roy uses audio, video, and the text messages in social media as data.

Some may also think that only a few specialized professionals can draw insights from data. When we look at data in the right way, however, the results can be fun, insightful, even whimsical — and accessible to everyone! Who knew, for example, that there are more relationship break-ups on Monday than on any other day of the week, or that the most break-ups (at least those discussed on Facebook) occur in mid-December? David McCandless discovered this by analyzing thousands of Facebook status updates.

Data, data, everywhere

There is more data available to us now than we can possibly process. Every minute, Internet users add the following to the big data pool (i):

- 204,166,667 email messages sent

- More than 2,000,000 Google searches

- 684,478 pieces of content added on Facebook

- $272,070 spent by consumers via online shopping

- More than 100,000 tweets on Twitter

- 47,000 app downloads from Apple

- 34,722 "likes" on Facebook for different brands and organizations

- 27,778 new posts on Tumblr blogs

- 3,600 new photos on Instagram

- 3,125 new photos on Flickr

- 2,083 check-ins on Foursquare

- 571 new websites created

- 347 new blog posts published on Wordpress

- 217 new mobile web users

- 48 hours of new video on YouTube

These numbers are almost certainly higher now, as you read this. And this just describes a small piece of the data being generated and stored by humanity. We're all leaving data trails — not just on the Internet, but in everything we do. This includes reams of financial data (from credit cards, businesses, and Wall Street), demographic data on the world's populations, meteorological data on weather and the environment, retail sales data that records everything we buy, nutritional data on food and restaurants, sports data of all types, and so on.

Governments are using data to search for terrorist plots, retailers are using it to maximize marketing strategies, and health organizations are using it to track outbreaks of the flu. But did you ever think of collecting data on every minute of your child's life? That's precisely what Deb Roy did. He recorded 90,000 hours of video and 140,000 hours of audio during his son's first years. That's a lot of data! He and his colleagues are using the data to understand how children learn language, and they're now extending this work to analyze publicly available conversations on social media, allowing them to take "the real-time pulse of a nation."

Data can provide us with new and deeper insight into our world. It can help break stereotypes and build understanding. But the sheer quantity of data, even in just any one small area of interest, is overwhelming. How can we make sense of some of this data in an insightful way?

The power of visualizing data

Visualization can help transform these mountains of data into meaningful information. In his TEDTalk, David McCandless comments that the sense of sight has by far the fastest and biggest bandwidth of any of the five senses. Indeed, about 80% of the information we take in is by eye. Data that seems impenetrable can come alive if presented well in a picture, graph, or even a movie. Hans Rosling tells us that "Students get very excited — and policy-makers and the corporate sector — when they can see the data."

It makes sense that, if we can effectively display data visually, we can make it accessible and understandable to more people. Should we worry, however, that by condensing data into a graph, we are simplifying too much and losing some of the important features of the data? Let's look at a fascinating study conducted by researchers Emre Soyer and Robin Hogarth. The study was conducted on economists, who are certainly no strangers to statistical analysis. Three groups of economists were asked the same question concerning a dataset:

- One group was given the data and a standard statistical analysis of the data; 72% of these economists got the answer wrong.

- Another group was given the data, the statistical analysis, and a graph; still 61% of these economists got the answer wrong.

- A third group was given only the graph, and only 3% got the answer wrong.

Visualizing data can sometimes be less misleading than using the raw numbers and statistics!

What about all the rest of us, who may not be professional economists or statisticians? Nathalie Miebach finds that making art out of data allows people an alternative entry into science. She transforms mountains of weather data into tactile physical structures and musical scores, adding both touch and hearing to the sense of sight to build even greater understanding of data.

Another artist, Chris Jordan, is concerned about our ability to comprehend big numbers. As citizens of an ever-more connected global world, we have an increased need to get useable information from big data — big in terms of the volume of numbers as well as their size. Jordan's art is designed to help us process such numbers, especially numbers that relate to issues of addiction and waste. For example, Jordan notes that the United States has the largest percentage of its population in prison of any country on earth: 2.3 million people in prison in the United States in 2005 and the number continues to rise. Jordan uses art, in this case a super-sized image of 2.3 million prison jumpsuits, to help us see that number and to help us begin to process the societal implications of that single data value. Because our brains can't truly process such a large number, his artwork makes it real.

The role of technology in visualizing data

The TEDTalks in this collection depend to varying degrees on sophisticated technology to gather, store, process, and display data. Handling massive amounts of data (e.g., David McCandless tracking 10,000 changes in Facebook status, Blaise Aguera y Arcas synching thousands of online images of the Notre Dame Cathedral, or Deb Roy searching for individual words in 90,000 hours of video tape) requires cutting-edge computing tools that have been developed specifically to address the challenges of big data. The ability to manipulate color, size, location, motion, and sound to discover and display important features of data in a way that makes it readily accessible to ordinary humans is a challenging task that depends heavily on increasingly sophisticated technology.

The importance of good visualization

There are good ways and bad ways of presenting data. Many examples of outstanding presentations of data are shown in the TEDTalks. However, sometimes visualizations of data can be ineffective or downright misleading. For example, an inappropriate scale might make a relatively small difference look much more substantial than it is, or an overly complicated display might obscure the main relationships in the data. Statistician Kaiser Fung's blog Junk Charts offers many examples of poor representations of data (and some good ones) with descriptions to help the reader understand what makes a graph effective or ineffective. For more examples of both good and bad representations of data, see data visualization architect Andy Kirk's blog at visualisingdata.com. Both regularly feature current examples drawn from recent sources and events.
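To see how an inappropriate scale can exaggerate a small difference, it helps to compare how tall two bars are drawn when the axis starts at zero with how tall they are drawn when the axis is truncated. The following is only a rough sketch in Python: the function name `drawn_height_ratio` and the values 50, 52, and 48 are invented for illustration and do not come from any chart discussed here.

```python
def drawn_height_ratio(a, b, axis_start=0.0):
    """Return how many times taller bar b is drawn than bar a
    when the value axis begins at axis_start."""
    if axis_start >= min(a, b):
        raise ValueError("axis_start must be below both values")
    return (b - axis_start) / (a - axis_start)

# Hypothetical values: 50 and 52 differ by only 4 percent.
honest = drawn_height_ratio(50, 52)                    # axis starts at 0
truncated = drawn_height_ratio(50, 52, axis_start=48)  # axis starts at 48

print(f"Axis from 0:  second bar drawn {honest:.2f}x as tall")
print(f"Axis from 48: second bar drawn {truncated:.2f}x as tall")
```

With the axis starting at 48, the second bar is drawn twice as tall as the first even though the underlying values differ by 4 percent.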

Creativity, even artistic ability, helps us see data in new ways. Magic happens when interesting data meets effective design: when statistician meets designer (sometimes within the same person). We are fortunate to live in a time when interactive and animated graphs are becoming commonplace, and these tools can be incredibly powerful. Other times, simpler graphs might be more effective. The key is to present data in a way that is visually appealing while allowing the data to speak for itself.

Changing perceptions through data

While graphs and charts can lead to misunderstandings, there is ultimately "truth in numbers." As Steven Levitt and Stephen Dubner say in Freakonomics, "[T]eachers and criminals and real-estate agents may lie, and politicians, and even C.I.A. analysts. But numbers don't." Indeed, consideration of data can often be the easiest way to glean objective insights. Again from Freakonomics: "There is nothing like the sheer power of numbers to scrub away layers of confusion and contradiction."

Data can help us understand the world as it is, not as we believe it to be. As Hans Rosling demonstrates, it's often not ignorance but our preconceived ideas that get in the way of understanding the world as it is. Publicly available statistics can reshape our world view: Rosling encourages us to "let the dataset change your mindset."

Chris Jordan's powerful images of waste and addiction make us face, rather than deny, the facts. It's easy to hear and then ignore that we use and discard 1 million plastic cups every 6 hours on airline flights alone. When we're confronted with his powerful image, we engage with that fact on an entirely different level (and may never see airline plastic cups in the same way again).
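One modest way to make such a figure easier to grasp, short of building an artwork, is to restate it over a more familiar time window. The sketch below does that arithmetic in Python for the cup figure quoted above. The helper name `scale_rate` is made up for this example, and the 365-day year is an assumption.

```python
def scale_rate(count, per_hours, target_hours):
    """Scale a count observed over per_hours to a window of target_hours."""
    return count * target_hours / per_hours

CUPS = 1_000_000   # figure quoted in the text
WINDOW_HOURS = 6   # "every 6 hours"

per_day = scale_rate(CUPS, WINDOW_HOURS, 24)
per_year = scale_rate(CUPS, WINDOW_HOURS, 24 * 365)  # assumes a 365-day year

print(f"{per_day:,.0f} cups per day")
print(f"{per_year:,.0f} cups per year")
```

This assumes the quoted rate holds constant around the clock and throughout the year, which the source does not claim.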

The ability to see data expands our perceptions of the world in ways that we're just beginning to understand. Computer simulations allow us to see how diseases spread, how forest fires might be contained, how terror networks communicate. We gain understanding of these things in ways that were unimaginable only a few decades ago. When Blaise Aguera y Arcas demonstrates Photosynth, we feel as if we're looking at the future. By linking together user-contributed digital images culled from all over the Internet, he creates navigable "immensely rich virtual models of every interesting part of the earth" created from the collective memory of all of us. Deb Roy does somewhat the same thing with language, pulling in publicly available social media feeds to analyze national and global conversation trends.

Roy sums it up with these powerful words: "What's emerging is an ability to see new social structures and dynamics that have previously not been seen. ...The implications here are profound, whether it's for science, for commerce, for government, or perhaps most of all, for us as individuals."

Let's begin with the TEDTalk from David McCandless, a self-described "data detective" who describes how to highlight hidden patterns in data through its artful representation.

David McCandless

The beauty of data visualization.

i. Data obtained June 2012 from “How Much Data Is Created Every Minute?” on http://mashable.com/2012/06/22/data-created-every-minute/.

Relevant talks

Hans Rosling

The magic washing machine.

Nathalie Miebach

Art made of storms.

Chris Jordan

Turning powerful stats into art.

Blaise Agüera y Arcas

How Photosynth can connect the world's images.

Deb Roy

The birth of a word.

Writing With Statistics: Mistakes to Watch Out For

Numbers are power. Adding relevant statistics to your content can strengthen any argument. But if not used carefully, numbers create more problems than they solve.

I wrote a book for content writers called From Reads To Leads. You should check it out to learn what rules you need to follow to write content that converts readers into leads. One of these rules is about using statistics in writing. Go to my home page to get the book or read the first chapter.

Many writers pick up numbers off the street to make their messages more compelling. They aren’t looking to support their arguments or to make their stories more accurate. They aren’t looking for truth.

Let's look at this example:

If 36 percent of Americans use food delivery services, does this mean that the popularity of these services is growing? To show growth in popularity, we would need to compare the percentage of Americans who used food delivery services in March 2019, for example, with the percentage who used them in March 2021. Growth can only be demonstrated over time. Since there’s nothing to which readers can compare this 36 percent, they might doubt whether the popularity of food delivery services is actually growing.
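To make the comparison concrete, here is a small Python sketch of what a reader needs before talking about growth: two measurements taken at different times. The 36 percent figure is the one from the example above. The earlier figure of 30 percent is purely hypothetical, as is the function name `compare_shares`.

```python
def compare_shares(earlier_pct, later_pct):
    """Return (change in percentage points, relative change in percent)."""
    point_change = later_pct - earlier_pct
    relative_change = point_change * 100 / earlier_pct
    return point_change, relative_change

# 30.0 is an invented baseline for illustration; 36.0 is the figure from the example.
points, relative = compare_shares(earlier_pct=30.0, later_pct=36.0)

print(f"Change: {points:.1f} percentage points")
print(f"Relative growth: {relative:.1f}%")
```

The same pair of numbers produces two different "growth" figures, so it is worth saying which one you are reporting. Without the earlier measurement, neither can be computed at all.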

Let's look at another example:

Any percentage is meaningless to your readers unless you compare it against some base percentage. The stats in the example I've just mentioned look reasonable. The author compares Black Friday sales completed using mobile devices last year with sales completed using mobile devices a few years ago. But let’s think about it for a second. Don’t these statistics raise any questions? First of all, they come from two different sources. They may have been collected using wildly different methodologies and by surveying entirely different demographics. There’s no way for the reader to know whether these percentages can reasonably be compared.

Sometimes a percentage might look high, but without context, it might not be telling the whole story. You need to dig deeper to uncover the truth:

The author did solid research to help her readers arrive at the conclusion that despite a seemingly large number of women-owned businesses, there’s still gender inequality in entrepreneurship.

How to write with statistics

As you use statistics in your writing, here are a few things you need to pay attention to:

1. Numbers can be just as ambiguous as words and need just as much explanation.

For example:

Is 57 percent good or bad? This statement requires an explanation:

Now it’s clear that we’re making progress.

2. Don’t just throw numbers everywhere you can because it’s considered a good SEO practice. Your statistics need to help you make your point. They can make your arguments believable.

For example, let’s say our key message is “eating fat keeps you healthy.” One of the arguments we can use to support this message is that polyunsaturated fats can lower cholesterol levels, which, as a result, can lower the risk of heart disease and stroke. We can use statistics to make this argument believable:

We didn’t use these stats to demonstrate expertise. We added them to support our key message.

3. When trying to prove your argument with statistics, you need to be sure that what you’re saying is true. If you have doubts, look at the numbers first and let them shape your message and ideas. Don’t do it the other way round.

If you’ve already shaped your message about something, you’ll be tempted to look for data to prove that you’re right. But the truth is, you might be wrong. You can find seemingly legitimate evidence to support any claim, but your argument won’t be convincing if it’s built on a shaky foundation.

The next time you find yourself thinking that what you want to say might not be accurate, don’t head to Google to prove you’re right. Instead, look to answer the question with data. Good writing is about telling the truth, not trying to dupe the reader.

4. Numbers without context or specific details are just that—numbers. They don’t help readers arrive at conclusions.

Now let’s see how the author uses details to communicate the gravity of the situation:

The statement “the cost of college increased by more than 25% in the last 10 years” doesn’t give readers a clear idea of the growing cost of higher education. But comparing what students paid (on average) for a college education in 1978, 2008, and today helps the reader realize that the costs of college are increasing at a breakneck pace and that something has to be done about it.
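A quick calculation shows why the bare percentage feels underwhelming. Spread evenly over a decade, a 25% rise corresponds to a fairly small yearly rate. The Python sketch below assumes constant compound growth, which is a simplification, and uses 25% as a lower bound because the statement says "more than 25%". The function name `annualized_rate` is chosen for illustration.

```python
def annualized_rate(total_increase, years):
    """Constant yearly growth rate that compounds to total_increase over years."""
    return (1 + total_increase) ** (1 / years) - 1

# 0.25 is the lower bound implied by "more than 25%"; 10 is "the last 10 years".
rate = annualized_rate(0.25, 10)

print(f"{rate:.2%} per year")
```

A rate of a little over 2% a year sounds modest, which is why readers need the actual amounts paid in different years to see the trend the author is describing.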

You need statistics to prove your arguments. And when citing statistics, you must obey the same rules of clarity you obey with words. Learn more about these rules in my book From Reads To Leads.


1.2: Importance of Statistics

- Rice University

Learning Objectives

- Give examples of statistics encountered in everyday life

- Give examples of how statistics can lend credibility to an argument

Like most people, you probably feel that it is important to "take control of your life." But what does this mean? Partly, it means being able to properly evaluate the data and claims that bombard you every day. If you cannot distinguish good from faulty reasoning, then you are vulnerable to manipulation and to decisions that are not in your best interest. Statistics provides tools that you need in order to react intelligently to information you hear or read. In this sense, statistics is one of the most important things that you can study.

To be more specific, here are some claims that we have heard on several occasions. (We are not saying that each one of these claims is true!)

- \(4\) out of \(5\) dentists recommend Dentyne.

- Almost \(85\%\) of lung cancers in men and \(45\%\) in women are tobacco-related.

- Condoms are effective \(94\%\) of the time.

- Native Americans are significantly more likely to be hit crossing the street than are people of other ethnicities.