Rubric for Evaluating Student Presentations

- Kellie Hayden


Make Assessing Easier with a Rubric

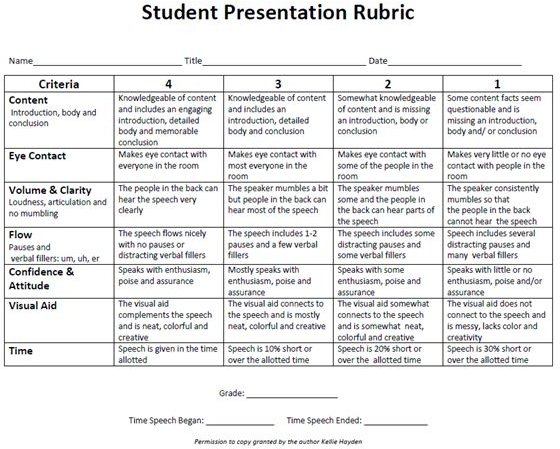

The rubric that you use to assess student presentations needs to be clear and easy for your students to read. A well-thought-out rubric will also make it easier to grade speeches.

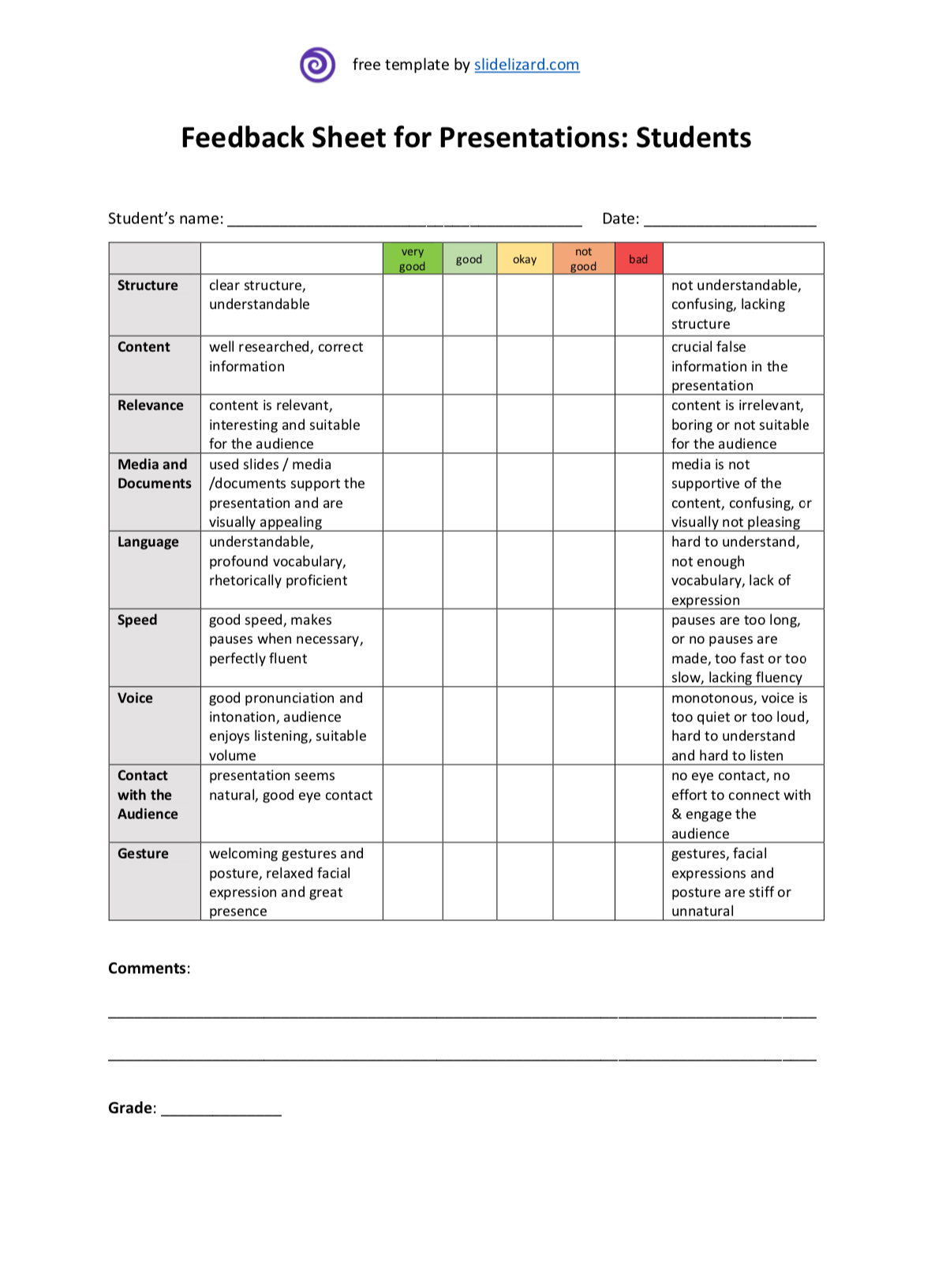

Before directing students to create a presentation, you need to tell them how they will be evaluated with the rubric. For every rubric, there are certain criteria listed or specific areas to be assessed. For the rubric download that is included, the following are the criteria: content, eye contact, volume and clarity, flow, confidence and attitude, visual aids, and time.

Student Speech Presentation Rubric Download

Assessment Tool Explained in Detail

Content

The information in the speech should be organized. It should have an engaging introduction that grabs the audience’s attention. The body of the speech should include details, facts and statistics to support the main idea. The conclusion should wrap up the speech and leave the audience with something to remember.

In addition, the speech should be accurate. Teachers should decide how students should cite their sources if they are used. These should be turned in at the time of the speech. Good speakers will mention their sources during the speech.

Last, the content should be clear. The information should be understandable for the audience and not confusing or ambiguous.

Eye Contact

Students' eyes should not be riveted to the paper or note cards that they prepare for the presentation. It is best if students write talking points on their note cards. These are main points that they want to discuss. If students write their whole speech on the note cards, they will be more likely to read the speech word-for-word, which is boring and usually monotone.

Students should not stare at one person or at the floor. It is best if they can make eye contact with everyone in the room at least once during the presentation. Staring at a spot on the wall is not great, but is better than staring at their shoes or their papers.

Volume and Clarity

Students should be loud enough so that people sitting in the back of the room can hear and understand them. They should not scream or yell. They need to practice using their diaphragm to project their voice.

Clarity means not talking too fast, mumbling, slurring or stuttering. When students are nervous, this tends to happen. Practice will help with this problem.

Flow

When speaking, the speaker should not have distracting pauses during the speech. Sometimes a speaker may pause for effect; this is to tell the audience that what he or she is going to say next is important. However, when students pause because they become confused or forget the speech, this is distracting.

Another problem is verbal fillers. Students may say “um,” “er” or “uh” when they are thinking or between ideas. Some people do it unintentionally when they are nervous.

If students chronically say “um” or use any type of verbal filler, they first need to be made aware of the problem while practicing. To fix this problem, a trusted friend can point out when they are doing it during practice. This will help students notice when they are using verbal fillers.

Confidence and Attitude

When students speak, they should stand tall and exude confidence to show that what they are going to say is important. Even if they are nervous or are not sure about their speech, they should not slouch. They need to give their speech with enthusiasm and poise. If it appears that the student does not care about his or her topic, why should the audience? Confidence can often make a boring speech topic memorable.

Visual Aids

The visual that a student uses should aid the speech. This aid should explain a fact or an important point in more detail with graphics, diagrams, pictures or graphs.

These can be presented as projected diagrams, large photos, posters, electronic slide presentations, short clips of videos, 3-D models, etc. It is important that all visual aids be neat, creative and colorful. A poorly executed visual aid can take away from a strong speech.

One of the biggest mistakes that students make is that they do not mention the visual aid in the speech. Students need to plan when the visual aid will be used in the speech and what they will say about it.

Another problem with slide presentations is that students read word-for-word what is on each slide. The audience can read. Students need to talk about the slide and/or offer additional information that is not on the slide.

Time

The teacher needs to set the time limit. Some teachers like to give a range. For example, the teacher can ask for short speeches to be 1-2 minutes or 2-5 minutes. Longer ones could be 10-15 minutes. Many students will not speak long enough while others will ramble on way beyond the limit. The best way for students to stay within the time limit is to practice.

The key to a good speech is for students to write out an outline, make note cards and practice. The speech presentation rubric allows your students to understand your expectations.



- Am J Pharm Educ

- v.74(9); 2010 Nov 10

A Standardized Rubric to Evaluate Student Presentations

Michael J. Peeters

a University of Toledo College of Pharmacy

Eric G. Sahloff

Gregory E. Stone

b University of Toledo College of Education

To design, implement, and assess a rubric to evaluate student presentations in a capstone doctor of pharmacy (PharmD) course.

A 20-item rubric was designed and used to evaluate student presentations in a capstone fourth-year course in 2007-2008, and then revised and expanded to 25 items and used to evaluate student presentations for the same course in 2008-2009. Two faculty members evaluated each presentation.

The Many-Facets Rasch Model (MFRM) was used to determine the rubric's reliability, quantify the contribution of evaluator harshness/leniency in scoring, and assess grading validity by comparing the current grading method with a criterion-referenced grading scheme. In 2007-2008, rubric reliability was 0.98, with a separation of 7.1 and 4 rating scale categories. In 2008-2009, MFRM analysis suggested 2 of 98 grades be adjusted to eliminate evaluator leniency, while a further criterion-referenced MFRM analysis suggested 10 of 98 grades should be adjusted.

The evaluation rubric was reliable and evaluator leniency appeared minimal. However, a criterion-referenced re-analysis suggested a need for further revisions to the rubric and evaluation process.

INTRODUCTION

Evaluations are important in the process of teaching and learning. In health professions education, performance-based evaluations are identified as having “an emphasis on testing complex, ‘higher-order’ knowledge and skills in the real-world context in which they are actually used.” 1 Objective structured clinical examinations (OSCEs) are a common, notable example. 2 On Miller's pyramid, a framework used in medical education for measuring learner outcomes, “knows” is placed at the base of the pyramid, followed by “knows how,” then “shows how,” and finally, “does” is placed at the top. 3 Based on Miller's pyramid, evaluation formats that use multiple-choice testing focus on “knows” while an OSCE focuses on “shows how.” Just as performance evaluations remain highly valued in medical education, 4 authentic task evaluations in pharmacy education may be better indicators of future pharmacist performance. 5 Much attention in medical education has been focused on reducing the unreliability of high-stakes evaluations. 6 Regardless of educational discipline, high-stakes performance-based evaluations should meet educational standards for reliability and validity. 7

PharmD students at University of Toledo College of Pharmacy (UTCP) were required to complete a course on presentations during their final year of pharmacy school and then give a presentation that served as both a capstone experience and a performance-based evaluation for the course. Pharmacists attending the presentations were given Accreditation Council for Pharmacy Education (ACPE)-approved continuing education credits. An evaluation rubric for grading the presentations was designed to allow multiple faculty evaluators to objectively score student performances in the domains of presentation delivery and content. Given the pass/fail grading procedure used in advanced pharmacy practice experiences, passing this presentation-based course and subsequently graduating from pharmacy school were contingent upon this high-stakes evaluation. As a result, the reliability and validity of the rubric used and the evaluation process needed to be closely scrutinized.

Each year, about 100 students completed presentations and at least 40 faculty members served as evaluators. With the use of multiple evaluators, a question of evaluator leniency often arose (ie, whether evaluators used the same criteria for evaluating performances or whether some evaluators graded easier or more harshly than others). At UTCP, opinions among some faculty evaluators and many PharmD students implied that evaluator leniency in judging the students' presentations significantly affected specific students' grades and ultimately their graduation from pharmacy school. While it was plausible that evaluator leniency was occurring, the magnitude of the effect was unknown. Thus, this study was initiated partly to address this concern over grading consistency and scoring variability among evaluators.

Because both students' presentation style and content were deemed important, each item of the rubric was weighted the same across delivery and content. However, because there were more categories related to delivery than content, an additional faculty concern was that students feasibly could present poor content but have an effective presentation delivery and pass the course.

The objectives for this investigation were: (1) to describe and optimize the reliability of the evaluation rubric used in this high-stakes evaluation; (2) to identify the contribution and significance of evaluator leniency to evaluation reliability; and (3) to assess the validity of this evaluation rubric within a criterion-referenced grading paradigm focused on both presentation delivery and content.

The University of Toledo's Institutional Review Board approved this investigation. This study investigated performance evaluation data for an oral presentation course for final-year PharmD students from 2 consecutive academic years (2007-2008 and 2008-2009). The course was taken during the fourth year (P4) of the PharmD program and was a high-stakes, performance-based evaluation. The goal of the course was to serve as a capstone experience, enabling students to demonstrate advanced drug literature evaluation and verbal presentation skills through the development and delivery of a 1-hour presentation. These presentations were to be on a current pharmacy practice topic and of sufficient quality for ACPE-approved continuing education. This experience allowed students to demonstrate their competencies in literature searching, literature evaluation, and application of evidence-based medicine, as well as their oral presentation skills. Students worked closely with a faculty advisor to develop their presentation. Each class (2007-2008 and 2008-2009) was randomly divided, with half of the students taking the course and completing their presentation and evaluation in the fall semester and the other half in the spring semester. To accommodate such a large number of students presenting for 1 hour each, it was necessary to use multiple rooms with presentations taking place concurrently over 2.5 days for both the fall and spring sessions of the course. Two faculty members independently evaluated each student presentation using the provided evaluation rubric. The 2007-2008 presentations involved 104 PharmD students and 40 faculty evaluators, while the 2008-2009 presentations involved 98 students and 46 faculty evaluators.

After vetting through the pharmacy practice faculty, the initial rubric used in 2007-2008 focused on describing explicit, specific evaluation criteria such as amounts of eye contact, voice pitch/volume, and descriptions of study methods. The evaluation rubric used in 2008-2009 was similar to the initial rubric, but with 5 items added (Figure 1). The evaluators rated each item (eg, eye contact) based on their perception of the student's performance. The 25 rubric items had equal weight (ie, 4 points each), but each item received a rating from the evaluator of 1 to 4 points. Thus, only 4 rating categories were included as has been recommended in the literature. 8 However, some evaluators created an additional 3 rating categories by marking lines in between the 4 ratings to signify half points (ie, 1.5, 2.5, and 3.5). For example, for the “notecards/notes” item in Figure 1, a student looked at her notes sporadically during her presentation, but not distractingly nor enough to warrant a score of 3 in the faculty evaluator's opinion, so a 3.5 was given. Thus, a 7-category rating scale (1, 1.5, 2, 2.5, 3, 3.5, and 4) was analyzed. Each independent evaluator's ratings for the 25 items were summed to form a score (0-100%). The 2 evaluators' scores then were averaged and a letter grade was assigned based on the following scale: >90% = A, 80%-89% = B, 70%-79% = C, <70% = F.
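The scoring arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration rather than the course's actual grading tool: the function names are hypothetical, and the grade boundaries are assumed to be inclusive at 90, 80, and 70, because the published scale leaves scores such as exactly 90% or 89.5% unassigned.

```python
from statistics import mean

def evaluator_percent(item_ratings):
    """Sum one evaluator's ratings for the 25 rubric items (1 to 4 points each)."""
    if len(item_ratings) != 25:
        raise ValueError("expected ratings for 25 rubric items")
    return float(sum(item_ratings))  # 25 items x 4 points = 100 maximum

def letter_grade(percent):
    # Assumption: boundaries are inclusive at 90, 80, and 70.
    if percent >= 90:
        return "A"
    if percent >= 80:
        return "B"
    if percent >= 70:
        return "C"
    return "F"

def course_grade(ratings_a, ratings_b):
    """Average the two evaluators' percentage scores and assign a letter grade."""
    percent = mean([evaluator_percent(ratings_a), evaluator_percent(ratings_b)])
    return percent, letter_grade(percent)
```

For example, one evaluator rating every item 4 and the other rating every item 3 gives scores of 100 and 75, an average of 87.5, and a B.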

Figure 1. Rubric used to evaluate student presentations given in a 2008-2009 capstone PharmD course.

EVALUATION AND ASSESSMENT

Rubric Reliability

To measure rubric reliability, iterative analyses were performed on the evaluations using the Many-Facets Rasch Model (MFRM) following the 2007-2008 data collection period. While Cronbach's alpha is the most commonly reported coefficient of reliability, its single number reporting without supplementary information can provide incomplete information about reliability. 9-11 Due to its formula, Cronbach's alpha can be increased by simply adding more repetitive rubric items or having more rating scale categories, even when no further useful information has been added. The MFRM reports separation, which is calculated differently than Cronbach's alpha and is another source of reliability information. Unlike Cronbach's alpha, separation does not appear enhanced by adding further redundant items. From a measurement perspective, a higher separation value is better than a lower one because students are being divided into meaningful groups after measurement error has been accounted for. Separation can be thought of as the number of units on a ruler where the more units the ruler has, the larger the range of performance levels that can be measured among students. For example, a separation of 4.0 suggests 4 graduations such that a grade of A is distinctly different from a grade of B, which in turn is different from a grade of C or of F. In measuring performances, a separation of 9.0 is better than 5.5, just as a separation of 7.0 is better than a 6.5; a higher separation coefficient suggests that student performance potentially could be divided into a larger number of meaningfully separate groups.
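The point that Cronbach's alpha rises when redundant items are added can be illustrated with a small sketch. The ratings below are invented for illustration and are not data from this study; the function uses the standard formula alpha = k/(k-1) × (1 − sum of item variances / variance of total scores).

```python
from statistics import variance

def cronbach_alpha(scores):
    """scores: one row per student, one column per rubric item."""
    k = len(scores[0])
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical ratings: 5 students x 3 rubric items (illustrative only).
ratings = [[4, 3, 4], [3, 3, 2], [2, 1, 2], [4, 4, 3], [1, 2, 2]]

# Repeat every item once: no new information, but twice as many items.
duplicated = [row + row for row in ratings]

alpha_original = cronbach_alpha(ratings)
alpha_duplicated = cronbach_alpha(duplicated)
```

With these made-up ratings, alpha is about 0.87 for the 3 items and about 0.95 once each item is repeated, even though the duplicated items tell the evaluator nothing new.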

The rating scale can have substantial effects on reliability, 8 and describing how a rating scale functions is a unique aspect of the MFRM. With analysis iterations of the 2007-2008 data, the rating scale categories were collapsed consecutively until improvements in reliability and/or separation were no longer found. The last iteration that improved reliability or separation was deemed the optimal rating scale for this evaluation rubric.

In the 2007-2008 analysis, iterations of the data were run through the MFRM. While only 4 rating scale categories had been included on the rubric, because some faculty members inserted 3 in-between categories, 7 categories had to be included in the analysis. This initial analysis based on a 7-category rubric provided a reliability coefficient (similar to Cronbach's alpha) of 0.98, while the separation coefficient was 6.31. The separation coefficient denoted 6 distinctly separate groups of students based on the items. Rating scale categories were collapsed, with “in-between” categories included in adjacent full-point categories. Table 1 shows the reliability and separation for the iterations as the rating scale was collapsed. As shown, the optimal evaluation rubric maintained a reliability of 0.98, but separation improved to 7.10, or 7 distinctly separate groups of students based on the items. Another distinctly separate group was added through a reduction in the rating scale while no change was seen to Cronbach's alpha, even though the number of rating scale categories was reduced. Table 1 describes the stepwise, sequential pattern across the final 4 rating scale categories analyzed. Informed by the 2007-2008 results, the 2008-2009 evaluation rubric (Figure 1) used 4 rating scale categories and reliability remained high.
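As a rough sketch of what folding the in-between ratings into full-point categories involves, the Python below maps the 7 observed ratings onto the 4 printed categories. The text does not say which adjacent category each half point was merged into at each iteration, so folding downward here is purely an assumption, and the function names are hypothetical.

```python
import math

ALLOWED_RATINGS = {1, 1.5, 2, 2.5, 3, 3.5, 4}

def collapse_rating(rating):
    """Fold a half-point rating into an adjacent full-point category."""
    if rating not in ALLOWED_RATINGS:
        raise ValueError(f"unexpected rating: {rating}")
    # Assumption: half points are merged into the LOWER adjacent category.
    return math.floor(rating)

def collapse_all(ratings):
    """Collapse every rating in a list to the 4-category scale."""
    return [collapse_rating(r) for r in ratings]
```

Under this assumption, `collapse_all([1, 1.5, 2.5, 3.5, 4])` yields `[1, 1, 2, 3, 4]`; an analysis could swap in an upward fold to compare the two choices.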

Table 1. Evaluation Rubric Reliability and Separation with Iterations While Collapsing Rating Scale Categories.

a Reliability coefficient of variance in rater response that is reproducible (ie, Cronbach's alpha).

b Separation is a coefficient of item standard deviation divided by average measurement error and is an additional reliability coefficient.

c Optimal number of rating scale categories based on the highest reliability (0.98) and separation (7.1) values.
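The reliability and separation coefficients defined in these footnotes are linked in Rasch measurement by a standard relation: reliability = G² / (1 + G²), where G is the separation. The sketch below assumes that relation applies to the values reported here; the function names are illustrative.

```python
import math

def separation_to_reliability(separation):
    """Reliability implied by a separation coefficient G: G^2 / (1 + G^2)."""
    return separation ** 2 / (1 + separation ** 2)

def reliability_to_separation(reliability):
    """Inverse relation: G = sqrt(R / (1 - R))."""
    return math.sqrt(reliability / (1 - reliability))
```

Under this relation, separations of 6.31 and 7.10 both round to a reliability of 0.98, which is consistent with reliability appearing unchanged while separation improved. It also shows why separation is the more sensitive of the two coefficients near the top of the reliability range.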

Evaluator Leniency

Harsh raters (ie, hawks) and lenient raters (ie, doves), described by Fleming and colleagues over half a century ago, 6 have also been demonstrated as an issue in more recent studies. 12-14 Shortly after 2008-2009 data were collected, those evaluations by multiple faculty evaluators were collated and analyzed in the MFRM to identify possible inconsistent scoring. While traditional interrater reliability does not deal with this issue, the MFRM had been used previously to illustrate evaluator leniency on licensing examinations for medical students and medical residents in the United Kingdom. 13 Thus, accounting for evaluator leniency may prove important to grading consistency (and reliability) in a course using multiple evaluators. Along with identifying evaluator leniency, the MFRM also corrected for this variability. For comparison, course grades were calculated by summing the evaluators' actual ratings (as discussed in the Design section) and compared with the MFRM-adjusted grades to quantify the degree of evaluator leniency occurring in this evaluation.

Measures created from the data analysis in the MFRM were converted to percentages using a common linear test-equating procedure involving the mean and standard deviation of the dataset. 15 To these percentages, student letter grades were assigned using the same traditional method used in 2007-2008 (ie, 90% = A, 80% - 89% = B, 70% - 79% = C, <70% = F). Letter grades calculated using the revised rubric and the MFRM then were compared to letter grades calculated using the previous rubric and course grading method.
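A linear equating step of this kind can be sketched as follows. The exact procedure is given in the cited reference; this version assumes the common mean-and-standard-deviation form, in which each measure is standardized and then rescaled to a target mean and standard deviation. The function name and the target values in the example are hypothetical.

```python
from statistics import mean, pstdev

def linear_equate(measures, target_mean, target_sd):
    """Rescale measures so they have the target mean and standard deviation."""
    source_mean = mean(measures)
    source_sd = pstdev(measures)
    if source_sd == 0:
        raise ValueError("measures must vary to be rescaled")
    return [
        target_mean + target_sd * (m - source_mean) / source_sd
        for m in measures
    ]

# Hypothetical Rasch measures mapped onto a percentage scale
# with an assumed mean of 80 and standard deviation of 10.
percentages = linear_equate([-1.0, 0.0, 1.0, 2.0], target_mean=80, target_sd=10)
```

The transformation preserves the rank order and relative spacing of the students' measures, so only the reporting scale changes.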

In the analysis of the 2008-2009 data, the interrater reliability for the letter grades when comparing the 2 independent faculty evaluations for each presentation was 0.98 by Cohen's kappa. However, using the 3-facet MFRM revealed significant variation in grading. The interaction of evaluator leniency on student ability and item difficulty was significant (chi-square, p < 0.01). As well, the MFRM showed a reliability of 0.77, with a separation of 1.85 (ie, almost 2 groups of evaluators). The MFRM student ability measures were scaled to letter grades and compared with course letter grades. As a result, 2 B's became A's and so evaluator leniency accounted for a 2% change in letter grades (ie, 2 of 98 grades).
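For readers unfamiliar with Cohen's kappa, the sketch below shows how agreement between 2 evaluators' letter grades is computed: observed agreement is corrected for the agreement expected by chance from each evaluator's grade distribution. The grades in the example are invented and are not from this study.

```python
from collections import Counter

def cohens_kappa(grades_a, grades_b):
    """Chance-corrected agreement between two raters' categorical grades."""
    if len(grades_a) != len(grades_b) or not grades_a:
        raise ValueError("need two equal-length, non-empty grade lists")
    n = len(grades_a)
    observed = sum(a == b for a, b in zip(grades_a, grades_b)) / n
    counts_a, counts_b = Counter(grades_a), Counter(grades_b)
    expected = sum(counts_a[g] * counts_b[g] for g in counts_a) / n ** 2
    if expected == 1:
        return 1.0  # both raters gave every student the same single grade
    return (observed - expected) / (1 - expected)

# Hypothetical letter grades from two evaluators for six presentations.
kappa = cohens_kappa(list("AABBCA"), list("AABCCA"))
```

Here the evaluators agree on 5 of 6 presentations and kappa is 17/23, or about 0.74. Kappa measures only whether the two evaluators land on the same grade, which is why it can be high even when one evaluator is systematically harsher across items.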

Validity and Grading

Explicit criterion-referenced standards for grading are recommended for higher evaluation validity. 3,16-18 The course coordinator completed 3 additional evaluations of a hypothetical student presentation rating the minimal criteria expected to describe each of an A, B, or C letter grade performance. These evaluations were placed with the other 196 evaluations (2 evaluators × 98 students) from 2008-2009 into the MFRM, with the resulting analysis report giving specific cutoff percentage scores for each letter grade. Unlike the traditional scoring method of assigning all items an equal weight, the MFRM ordered evaluation items from those more difficult for students (given more weight) to those less difficult for students (given less weight). These criterion-referenced letter grades were compared with the grades generated using the traditional grading process.

When the MFRM data were rerun with the criterion-referenced evaluations added into the dataset, a 10% change was seen with letter grades (ie, 10 of 98 grades). When the 10 letter grades were lowered, 1 was below a C, the minimum standard, and suggested a failing performance. Qualitative feedback from faculty evaluators agreed with this suggested criterion-referenced performance failure.

Measurement Model

Within modern test theory, the Rasch Measurement Model maps examinee ability with evaluation item difficulty. Items are not arbitrarily given the same value (ie, 1 point) but vary based on how difficult or easy the items were for examinees. The Rasch measurement model has been used frequently in educational research, 19 by numerous high-stakes testing professional bodies such as the National Board of Medical Examiners, 20 and also by various state-level departments of education for standardized secondary education examinations. 21 The Rasch measurement model itself has rigorous construct validity and reliability. 22 A 3-facet MFRM model allows an evaluator variable to be added to the student ability and item difficulty variables that are routine in other Rasch measurement analyses. Just as multiple regression accounts for additional variables in analysis compared to a simple bivariate regression, the MFRM is a multiple variable variant of the Rasch measurement model and was applied in this study using the Facets software (Linacre, Chicago, IL). The MFRM is ideal for performance-based evaluations with the addition of independent evaluator/judges. 8 , 23 From both yearly cohorts in this investigation, evaluation rubric data were collated and placed into the MFRM for separate though subsequent analyses. Within the MFRM output report, a chi-square for a difference in evaluator leniency was reported with an alpha of 0.05.

The presentation rubric was reliable. Results from the 2007-2008 analysis illustrated that the number of rating scale categories impacted the reliability of this rubric and that use of only 4 rating scale categories appeared best for measurement. While a 10-point Likert-like scale may commonly be used in patient care settings, such as in quantifying pain, most people cannot process more than 7 points or categories reliably. 24 Presumably, when more than 7 categories are used, the categories beyond 7 either are not used or are collapsed by respondents into fewer than 7 categories. Five-point scales commonly are encountered, but use of an odd number of categories can be problematic to interpretation and is not recommended. 25 Responses using the middle category could denote a true perceived average or neutral response or responder indecisiveness or even confusion over the question. Therefore, removing the middle category appears advantageous and is supported by our results.

With 2008-2009 data, the MFRM identified evaluator leniency, with some evaluators grading more harshly while others were lenient. However, only a couple of grade changes were suggested by the MFRM correction, so evaluator leniency did not appear to play a substantial role in the evaluation of this course at this time.

Performance evaluation instruments are either holistic or analytic rubrics. 26 The evaluation instrument used in this investigation exemplified an analytic rubric, which elicits specific observations and often demonstrates high reliability. However, Norman and colleagues point out a conundrum where drastically increasing the number of evaluation rubric items (creating something similar to a checklist) could augment a reliability coefficient though it appears to dissociate from that evaluation rubric's validity. 27 Validity may be more than the sum of behaviors on evaluation rubric items. 28 Having numerous, highly specific evaluation items appears to undermine the rubric's function. With this investigation's evaluation rubric and its numerous items for both presentation style and presentation content, equal numeric weighting of items can in fact allow student presentations to receive a passing score while falling short of the course objectives, as was shown in the present investigation. As opposed to analytic rubrics, holistic rubrics often demonstrate lower yet acceptable reliability, while offering a higher degree of explicit connection to course objectives. A summative, holistic evaluation of presentations may improve validity by allowing expert evaluators to provide their “gut feeling” as experts on whether a performance is “outstanding,” “sufficient,” “borderline,” or “subpar” for dimensions of presentation delivery and content. A holistic rubric that integrates with criteria of the analytic rubric (Figure 1) for evaluators to reflect on but maintains a summary, overall evaluation for each dimension (delivery/content) of the performance, may allow for benefits of each type of rubric to be used advantageously. This finding has been demonstrated with OSCEs in medical education where checklists for completed items (ie, yes/no) at an OSCE station have been successfully replaced with a few reliable global impression rating scales. 29-31

Alternatively, and because the MFRM model was used in the current study, an items-weighting approach could be used with the analytic rubric. That is, item weighting based on the difficulty of each rubric item could suggest how many points should be given for that rubric item (eg, some items would be worth 0.25 points, while others would be worth 0.5 points or 1 point) (Table 2). As could be expected, the more complex the rubric scoring becomes, the less feasible the rubric is to use. This was the main reason why this revision approach was not chosen by the course coordinator following this study. As well, it does not address the conundrum that the performance may be more than the summation of behavior items in the Figure 1 rubric. This current study cannot suggest which approach would be better as each would have its merits and pitfalls.

Table 2. Rubric Item Weightings Suggested in the 2008-2009 Data Many-Facet Rasch Measurement Analysis

Regardless of which approach is used, alignment of the evaluation rubric with the course objectives is imperative. Objectivity has been described as a general striving for value-free measurement (ie, free of the evaluator's interests, opinions, preferences, sentiments). 27 This is a laudable goal pursued through educational research. Strategies to reduce measurement error, termed objectification, may not necessarily lead to increased objectivity. 27 The current investigation suggested that a rubric could become too explicit if all the possible areas of an oral presentation that could be assessed (ie, objectification) were included. This appeared to dilute the effect of important items and lose validity. A holistic rubric that is more straightforward and easier to score quickly may be less likely to lose validity (ie, “lose the forest for the trees”), though operationalizing a revised rubric would need to be investigated further. Similarly, weighting items in an analytic rubric based on their importance and difficulty for students may alleviate this issue; however, adding up individual items might prove arduous. While the rubric in Figure 1, which has evolved over the years, is the subject of ongoing revisions, it appears a reliable rubric on which to build.

The major limitation of this study involves the observational method that was employed. Although the 2 cohorts were from a single institution, investigators did use a completely separate class of PharmD students to verify initial instrument revisions. Optimizing the rubric's rating scale involved collapsing data from misuse of a 4-category rating scale (expanded by a few of the evaluators to 7 categories) into 4 independent categories without middle ratings. As a result of the study findings, no actual grading adjustments were made for students in the 2008-2009 presentation course; however, adjustments using the MFRM have been suggested by Roberts and colleagues. 13 Since 2008-2009, the course coordinator has made further small revisions to the rubric based on feedback from evaluators, but these have not yet been re-analyzed with the MFRM.

The evaluation rubric used in this study for student performance evaluations showed high reliability and the data analysis agreed with using 4 rating scale categories to optimize the rubric's reliability. While lenient and harsh faculty evaluators were found, variability in evaluator scoring affected grading in this course only minimally. Aside from reliability, issues of validity were raised using criterion-referenced grading. Future revisions to this evaluation rubric should reflect these criterion-referenced concerns. The rubric analyzed herein appears a suitable starting point for reliable evaluation of PharmD oral presentations, though it has limitations that could be addressed with further attention and revisions.

ACKNOWLEDGEMENT

Author contributions— MJP and EGS conceptualized the study, while MJP and GES designed it. MJP, EGS, and GES gave educational content foci for the rubric. As the study statistician, MJP analyzed and interpreted the study data. MJP reviewed the literature and drafted a manuscript. EGS and GES critically reviewed this manuscript and approved the final version for submission. MJP accepts overall responsibility for the accuracy of the data, its analysis, and this report.


How Can I Evaluate And Assess Students’ Presentations And Public Speaking Skills?

In this article, we will explore effective strategies for evaluating and assessing students’ presentations and public speaking skills. Whether you are a teacher, a mentor, or a supervisor, assessing these skills can be a valuable tool in helping students develop confidence and proficiency in communication. By implementing various criteria and providing constructive feedback, we can create a supportive environment that fosters growth and improvement in public speaking abilities. Let’s discover some practical approaches to evaluating and assessing students’ presentations in order to help them shine in the spotlight.

Preparation

Clear objectives and criteria

When evaluating and assessing students’ presentations and public speaking skills, it is essential to have clear objectives and criteria in mind. This involves determining what specific skills and competencies you want the students to demonstrate during their presentations. Is it effective communication, critical thinking, or the ability to engage with the audience? By establishing clear objectives and criteria, you provide a framework for evaluating and assessing the students’ performance objectively.

Assignment instructions

Providing clear and detailed assignment instructions is crucial in ensuring that students understand what is expected of them. The instructions should outline the topic, format, and any specific requirements for the presentation. By giving students clear guidelines, it becomes easier to assess their ability to follow instructions and meet the requirements of the assignment.

Time management

Time management plays a crucial role in evaluating students’ presentations and public speaking skills. Evaluators should pay attention to how effectively students manage their time during the presentation. Are they able to convey their message within the allocated time limit? Do they allow for questions and interaction with the audience? Time management skills are vital in professional settings, and assessing students’ ability to efficiently use their allotted time can provide valuable insights into their overall competency.

Organization and structure

The organization and structure of a presentation determine its effectiveness in conveying information. Evaluators should consider whether the presentation has a clear introduction, body, and conclusion. Is there a logical flow of ideas? Are transitions between different sections smooth? Assessing the organization and structure of a presentation helps gauge the students’ ability to present information in a coherent and organized manner.

Relevance and accuracy

To determine the relevance and accuracy of a presentation, evaluators should examine whether the content is aligned with the assigned topic and meets the objectives of the presentation. Is the information presented accurate and supported by reliable sources? Evaluating the students’ ability to deliver relevant and accurate content demonstrates their research skills and critical thinking abilities.

Use of supporting evidence

Supporting evidence plays a crucial role in validating the arguments and statements made during a presentation. Evaluators should assess whether students incorporate relevant and reliable supporting evidence into their presentations. This can include citing research studies, statistics, or expert opinions. By evaluating the use of supporting evidence, you can determine the students’ ability to back up their claims and enhance the credibility and persuasiveness of their presentation.

Clarity and coherence

Clarity and coherence in a presentation are essential for effective communication. Evaluators should assess whether students articulate their ideas clearly and concisely. Are the main points easy to understand? Do the students use appropriate language and avoid jargon? Evaluating the clarity and coherence of a presentation helps identify the students’ communication skills and their ability to convey ideas in a manner that is easily comprehensible to the audience.

Creativity and originality

Assessing the creativity and originality of a presentation adds an element of uniqueness to the evaluation process. Evaluators should consider whether the students demonstrate innovative thinking or present ideas from a fresh perspective. Does the presentation incorporate creative visuals or storytelling techniques? By evaluating creativity and originality, it becomes possible to gauge the students’ ability to captivate and engage the audience in a unique and memorable way.

Verbal communication

Verbal communication skills are crucial in delivering an effective presentation. Evaluators should pay attention to factors such as clarity of speech, pronunciation, and appropriate volume. Assessing verbal communication skills involves considering whether the students speak confidently, engage with the audience, and maintain an appropriate pace throughout the presentation.

Non-verbal communication

Non-verbal communication, including body language and facial expressions, can significantly impact the delivery of a presentation. Evaluators should observe whether students use gestures, maintain good posture, and make eye contact with the audience. Assessing non-verbal communication helps determine the students’ ability to convey confidence, engage the audience, and establish a connection with the listeners.

Voice projection and articulation

The ability to project and articulate one’s voice is vital in public speaking. Evaluators should assess whether students speak loud enough to be clearly heard by the audience, and whether they enunciate their words effectively. Voice projection and articulation contribute to the overall clarity and impact of a presentation.

Eye contact and body language

Eye contact is a crucial aspect of engaging with the audience and establishing a connection. Evaluators should assess whether students maintain appropriate eye contact throughout the presentation. Additionally, the observation of body language can give insights into the students’ confidence and comfort level during public speaking. Are they relaxed or tense? Do they use open and welcoming gestures? Evaluating eye contact and body language helps gauge the students’ ability to engage with the audience and create a positive impression.

Engagement with the audience

The ability to engage and interact with the audience is a key aspect of public speaking. Evaluators should consider whether students actively involve the audience through questions, discussions, or activities. Assessing engagement with the audience helps determine the students’ ability to connect with listeners, hold their attention, and make the presentation interactive and memorable.

Visual aids

Relevance and effectiveness

Visual aids, such as slides or props, can enhance the delivery and understanding of a presentation. Evaluators should assess whether students effectively use visual aids to support their message. Are the visual aids relevant to the topic and do they add value to the presentation? Evaluating the relevance and effectiveness of visual aids helps determine the students’ ability to enhance their message through visual representation.

Clarity and visibility

Visual aids should be clear and easily visible to the audience. Evaluators should assess whether students choose appropriate font sizes, colors, and visuals to ensure clarity. Can the audience read the text on slides or see the details of the visual aids? Evaluating the clarity and visibility of visual aids helps determine the students’ attention to detail and their consideration for the audience’s viewing experience.

Integration with the presentation

Visual aids should be seamlessly integrated into the presentation to enhance comprehension and engagement. Evaluators should consider whether students effectively incorporate their visual aids into the flow of the presentation. Are the visual aids introduced and explained clearly? Do they complement the spoken content? Assessing the integration of visual aids helps determine the students’ ability to use these tools as a cohesive part of their presentation.

Technical proficiency

When using digital visual aids, technical proficiency is essential. Evaluators should assess whether students are able to navigate through slides or other digital platforms smoothly. Are they confident in using presentation software or multimedia tools? Assessing students’ technical proficiency ensures that they can effectively utilize the available technology to enhance their presentations.

Language skills

Vocabulary and diction

The choice of vocabulary and diction can significantly impact the clarity and persuasiveness of a presentation. Evaluators should assess whether students use appropriate and varied vocabulary to communicate their ideas effectively. Are they able to articulate their words clearly and pronounce them correctly? Evaluating vocabulary and diction helps determine the students’ command of language and their ability to communicate with fluency and precision.

Grammar and sentence structure

Grammar and sentence structure are essential aspects of effective communication. Evaluators should consider whether students use grammatically correct sentences and employ proper punctuation. Do they construct sentences that convey their intended meaning without confusion? Assessing grammar and sentence structure helps identify the students’ proficiency in written and spoken English.

Use of appropriate language

Different contexts require the use of appropriate language. Evaluators should assess whether students use language that is suitable for the audience and the topic of their presentation. Are they able to strike a balance between using professional language and avoiding jargon? Evaluating the use of appropriate language demonstrates the students’ awareness of audience expectations and their ability to adapt their language style accordingly.

Fluency and coherence

Fluency and coherence in speaking are crucial for maintaining the audience’s attention and understanding. Evaluators should assess whether students speak fluently without unnecessary pauses or stutters. Are they able to link their ideas coherently and present their arguments in a logical manner? Evaluating fluency and coherence helps determine the students’ ability to deliver a smooth and engaging presentation.

Adherence to time limits

Adhering to time limits is an important aspect of effective presentation skills. Evaluators should consider whether students are able to complete their presentation within the allocated time. Do they start and finish on time? Assessing adherence to time limits helps determine the students’ ability to manage their presentation time effectively and respect the given time constraints.

Efficient use of time

In addition to adhering to time limits, it is important to evaluate whether students use their time efficiently during the presentation. Do they allocate an appropriate amount of time to each section of their presentation? Are they able to convey their message concisely without unnecessary repetition or lagging? Evaluating the efficient use of time helps gauge the students’ time management skills and their ability to convey information effectively within a limited timeframe.

Ability to pace the presentation

Pacing is crucial in maintaining the audience’s engagement and understanding. Evaluators should assess whether students maintain an appropriate pace throughout their presentation. Are they able to adjust their speed and rhythm to emphasize key points or allow for audience interaction? Assessing the ability to pace the presentation helps determine the students’ awareness of timing and their ability to create a dynamic and engaging delivery.

Captivating introduction

The introduction sets the tone for a presentation and has the potential to capture the audience’s attention from the beginning. Evaluators should assess whether students are able to deliver a captivating and engaging introduction. Does it grab the audience’s interest and clearly introduce the topic? Evaluating the captivating introduction helps determine the students’ ability to hook the audience and create a strong opening impact.

Maintaining audience interest

Sustaining the audience’s interest throughout the presentation is crucial for effective communication. Evaluators should observe whether students use storytelling techniques, compelling visuals, or other engaging strategies to keep the audience engaged. Do they use examples or anecdotes that resonate with the listeners? Assessing the maintenance of audience interest helps determine the students’ ability to captivate and hold the attention of the audience.

Effective use of storytelling

Storytelling is a powerful technique in public speaking. Evaluators should assess whether students effectively incorporate storytelling into their presentations. Do they use narratives to illustrate and support their main points? Are they able to create an emotional connection with the audience through their stories? Evaluating the effective use of storytelling helps determine the students’ ability to engage and communicate in a compelling and relatable manner.

Engaging visual and verbal cues

Engaging visual and verbal cues can significantly enhance the impact of a presentation. Evaluators should observe whether students use gestures, facial expressions, or vocal variations to emphasize key points or convey emotions. Do they use visual aids or props to reinforce their message? Assessing the use of engaging visual and verbal cues helps determine the students’ ability to communicate effectively and capture the audience’s attention through non-verbal and verbal means.

Interaction with the audience

Interacting with the audience can create a dynamic and memorable presentation experience. Evaluators should assess whether students actively involve the audience through questions, discussions, or activities. Do they respond to audience reactions and adapt their delivery accordingly? Assessing interaction with the audience helps determine the students’ ability to forge a connection and make the presentation interactive and inclusive.

Confidence and poise

Composure and self-assurance

Confidence and poise are essential attributes in public speaking. Evaluators should observe whether students appear calm and composed during their presentation. Do they maintain a confident demeanor and show self-assurance? Assessing composure and self-assurance helps determine the students’ ability to handle the pressure of public speaking and project a professional image.

Ability to handle unexpected situations

Public speaking often involves unexpected situations that can test a presenter’s adaptability. Evaluators should consider whether students are able to handle unexpected interruptions, technical issues, or challenging questions with composure. Can they think on their feet and respond effectively to unexpected circumstances? Assessing the ability to handle unexpected situations demonstrates the students’ resilience and ability to remain poised under pressure.

Body language and posture

Body language and posture communicate a lot about a presenter’s confidence and engagement. Evaluators should assess whether students maintain good posture, appearing relaxed and open. Do they use appropriate gestures and movement to enhance their message? Evaluating body language and posture helps determine the students’ ability to convey confidence and professionalism through non-verbal cues.

Use of gestures and facial expressions

Gestures and facial expressions can add depth and impact to a presentation. Evaluators should observe whether students use appropriate and meaningful gestures to illustrate their points. Are their facial expressions authentic and aligned with the content they are delivering? Assessing the use of gestures and facial expressions helps determine the students’ ability to effectively convey emotions and engage the audience through non-verbal communication.

Critical thinking

Ability to analyze and evaluate

Critical thinking skills are essential in evaluating and assessing information. Evaluators should assess whether students demonstrate the ability to analyze and evaluate the content of their presentation. Do they present a balanced view and consider different perspectives? Are they able to identify strengths and weaknesses in their arguments? Evaluating the ability to analyze and evaluate helps determine the students’ capacity to think critically and make well-informed judgments.

Logical reasoning

Logical reasoning is fundamental in constructing a persuasive argument. Evaluators should observe whether students present their ideas in a logical and coherent manner. Do they provide clear and well-supported reasoning throughout their presentation? Assessing logical reasoning helps determine the students’ ability to present a structured and convincing argument.

Problem-solving skills

Problem-solving skills are valuable in addressing challenges and finding solutions. Evaluators should assess whether students demonstrate problem-solving abilities during their presentations. Do they identify problems or potential obstacles and propose viable solutions? Assessing problem-solving skills helps determine the students’ ability to think creatively and adapt their strategies when facing difficulties.

Use of persuasive techniques

Persuasive techniques are essential in convincing an audience to accept a particular viewpoint. Evaluators should consider whether students effectively use persuasive techniques, such as emotional appeals or logical reasoning, to influence the audience’s perspective. Do they present a compelling case for their arguments? Assessing the use of persuasive techniques helps determine the students’ ability to craft persuasive presentations that can influence the audience’s beliefs or actions.

Overall impression

Engagement and impact

The overall impression of a presentation is influenced by the presenter’s ability to engage and leave a lasting impact on the audience. Evaluators should consider the overall engagement and impact of the presentation. Did it capture the audience’s attention throughout? Did it leave a memorable impression? Assessing the engagement and impact helps determine the students’ ability to create a compelling and memorable presentation that resonates with the audience.

Efficiency of the presentation

Efficiency is important in evaluating the overall performance of a presentation. Evaluators should assess whether students effectively conveyed their message within the given time limit. Did they make efficient use of time without unnecessary delays or excessive repetitions? Assessing the efficiency of the presentation helps determine the students’ ability to communicate their ideas effectively within specific constraints.

Appropriateness for the given context

The appropriateness of a presentation depends on the specific context in which it is delivered. Evaluators should consider whether students tailored their presentation to suit the audience, topic, and purpose of the presentation. Was the presentation well-suited to the given context? Assessing appropriateness for the given context helps determine the students’ ability to adapt their presentation style and content to suit the specific requirements and expectations of the audience.

Ability to meet the objectives

Ultimately, the evaluation of a presentation should assess whether the students successfully met the objectives established at the beginning. Did they effectively demonstrate the desired skills and competencies? Were they able to convey the intended message and fulfill the purpose of the presentation? Assessing the ability to meet the objectives helps determine the overall success of the students’ presentations and their competency in public speaking.

In conclusion, evaluating and assessing students’ presentations and public speaking skills requires a comprehensive approach that considers various aspects of their performance. From the clarity and relevance of the content to the delivery, engagement, and critical thinking demonstrated, each element contributes to a holistic evaluation. By following clear objectives and criteria, providing detailed assignment instructions, and managing time effectively, educators can ensure a fair and thorough assessment of students’ presentation skills. Through these assessments, students can receive valuable feedback and guidance to further enhance their abilities and become confident and effective communicators in various settings.

Effective Feedback for Presentations - digital with PowerPoint or with printable sheets

10.26.20 • #powerpoint #feedback #presentation.

Do you know whether you are a good presenter or not? If you do, chances are it's because people have told you so - they've given you feedback. Getting others' opinions about your performance is important in most aspects of life, especially professionally. However, today we're focusing on a specific aspect, which is (as you may have guessed from the title): presentations.

The importance of feedback

Take a minute to think about the first presentation you ever gave: what was it like? Was it perfect? Probably not. Practice makes perfect, and nobody does everything right in the beginning. Even if you're a natural at speaking and presenting, there is usually something to improve and to work on. And this is where feedback comes in - because how are you going to know what it is that you should improve? You can and should of course assess yourself after each and every presentation you give, as that is an important part of learning and improvement. The problem is that you yourself are not aware of all the things that you do well (or wrong) during your presentation. But your audience is! And that's why you should get audience feedback.

Qualities of good Feedback

Before we get into the different ways you can get feedback from your audience, let's briefly discuss what makes good feedback. P.S.: These do not just apply to presentations, but to any kind of feedback.

- Good feedback is constructive, not destructive. The person receiving feedback should feel empowered and inspired to work on their skills, not discouraged. You can of course criticize on an objective level, but mean and insulting comments have to be kept to yourself.

- Good feedback involves saying both what has to be improved (if there is anything) and what is already good (there is almost always something!).

- After receiving good feedback, the recipient is aware of the steps they can and should take in order to improve.

Ways of receiving / giving Feedback after a Presentation

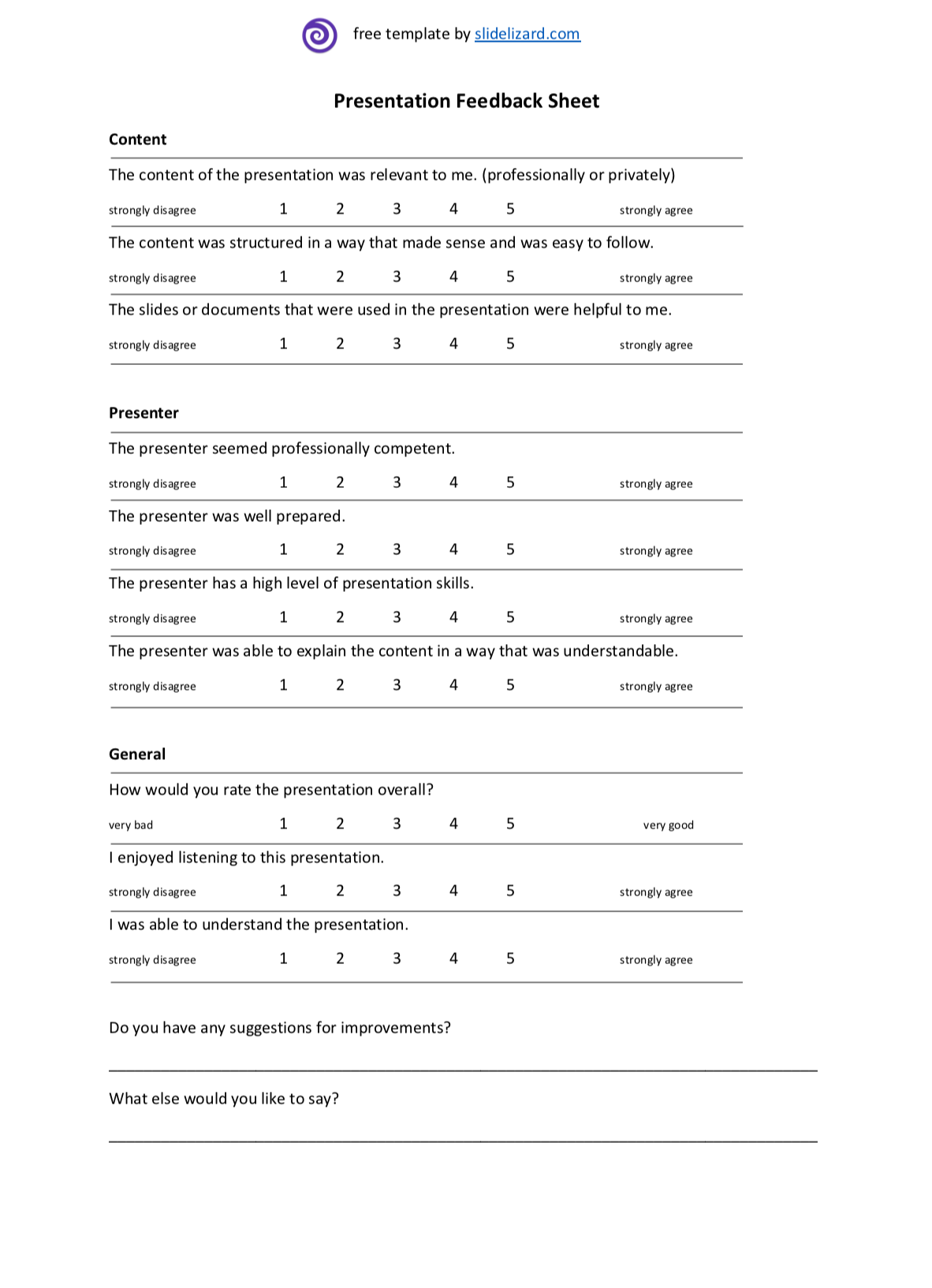

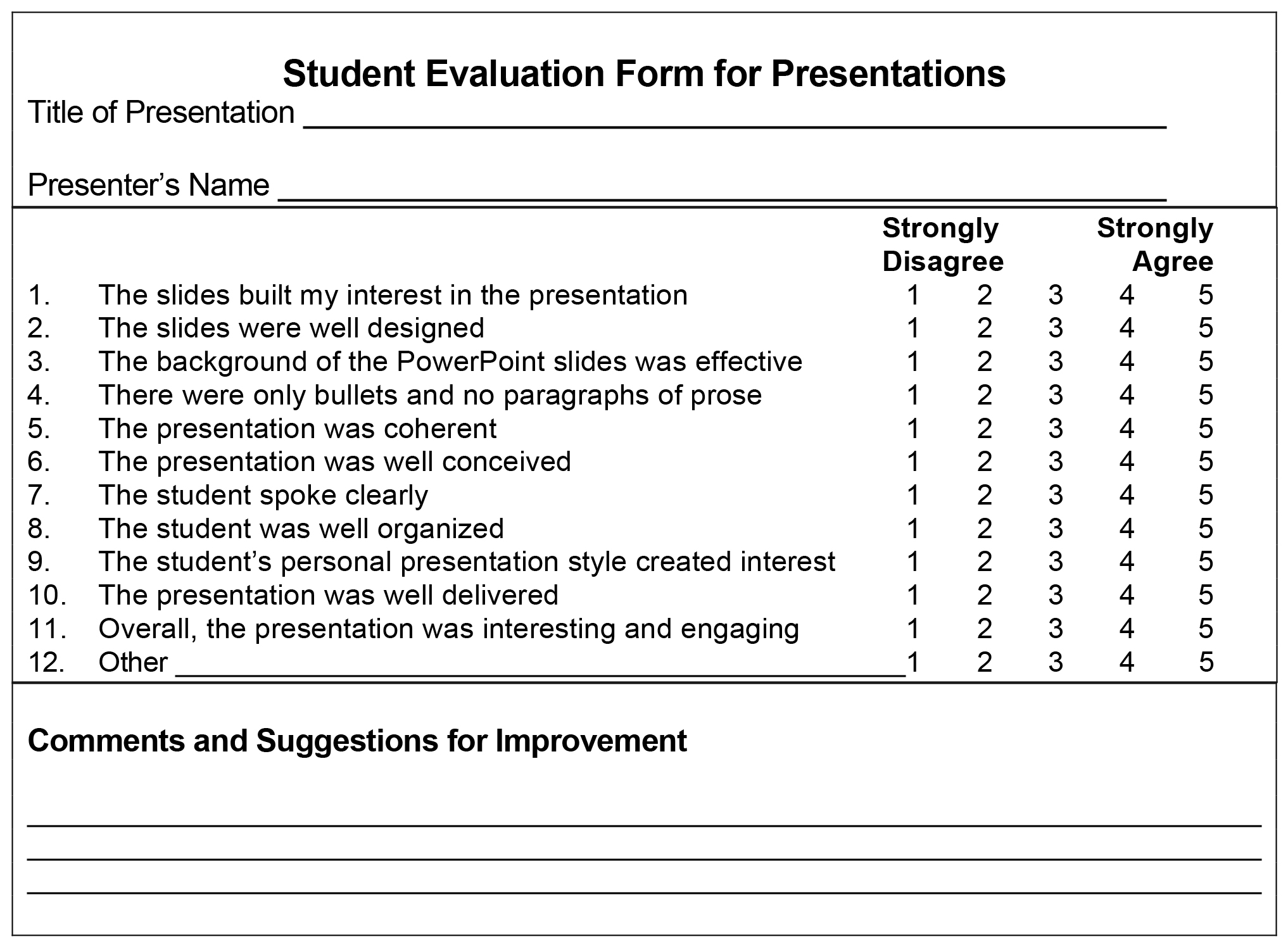

1. Print a feedback form

Let's start with a classic: the feedback / evaluation sheet. It contains several questions, which can be either open (e.g. "What did you like about the presentation?") or answered on a scale (e.g. from "strongly disagree" to "strongly agree"). The second question format makes a lot of sense if you have a large audience, and it also makes it easy to get an overview of the results. That's why in our feedback forms (which you can download at the end of this post), you'll find mainly statements with scales. This has been a proven way of getting and giving valuable feedback efficiently for years. We do like the feedback form a lot, though you have to be aware that you'll need to invest some time to prepare the sheets and to count up and analyse the results.

Pros:

- ask specifically what you want to ask

- good overview of the results

- anonymous (people are likely to be more honest)

- easy to access: you can just download a feedback sheet online (ours, for example, which you'll find at the end of this blog post!)

Cons:

- analysing the results can be time-consuming

- you have to print out the sheets, which takes preparation
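
Counting up scaled answers by hand is the time-consuming part of a paper form. As a rough sketch of how that tally could be automated once the answers are typed in, the Python snippet below summarizes the responses to a single statement. The five-point labels, the `summarize` helper, and the sample answers are illustrative assumptions rather than details of the downloadable sheets.

```python
from collections import Counter

# Assumed five-point agreement scale; swap in the labels your own form uses.
SCALE = ["strongly disagree", "disagree", "neutral", "agree", "strongly agree"]


def summarize(responses):
    """Return per-label counts and the mean score (1 = lowest, 5 = highest)."""
    counts = Counter(responses)
    unknown = set(counts) - set(SCALE)
    if unknown:
        raise ValueError(f"unexpected answers: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses to summarize")
    # Weight each label by its 1-based position on the scale.
    weighted = sum((i + 1) * counts[label] for i, label in enumerate(SCALE))
    return {label: counts[label] for label in SCALE}, weighted / total


# Hypothetical answers to the statement "The slides were easy to read".
answers = ["agree", "strongly agree", "neutral", "agree", "disagree"]
counts, mean = summarize(answers)
print(counts)
print(f"mean score: {mean:.1f}")
```

Keeping the per-label counts alongside the mean is a deliberate choice: a mean alone can hide a split audience, whereas the counts show whether opinions cluster or diverge.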

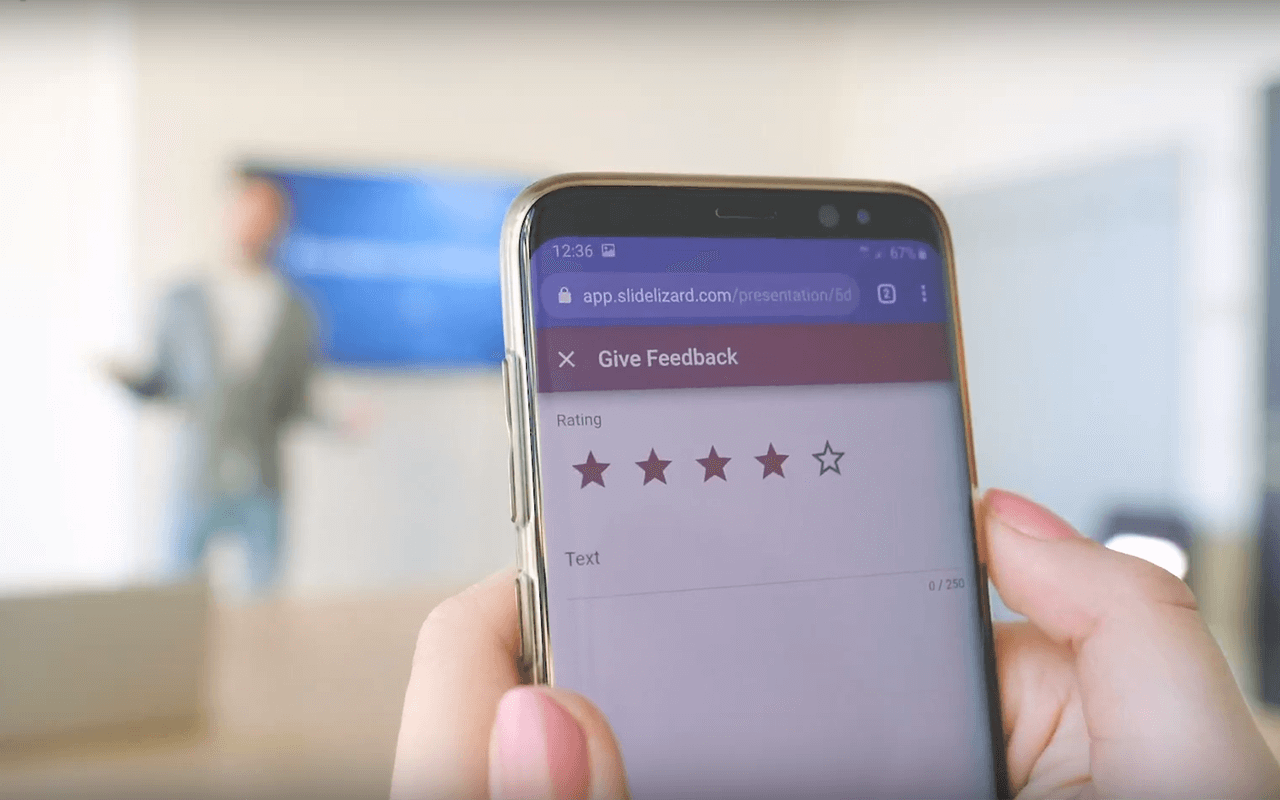

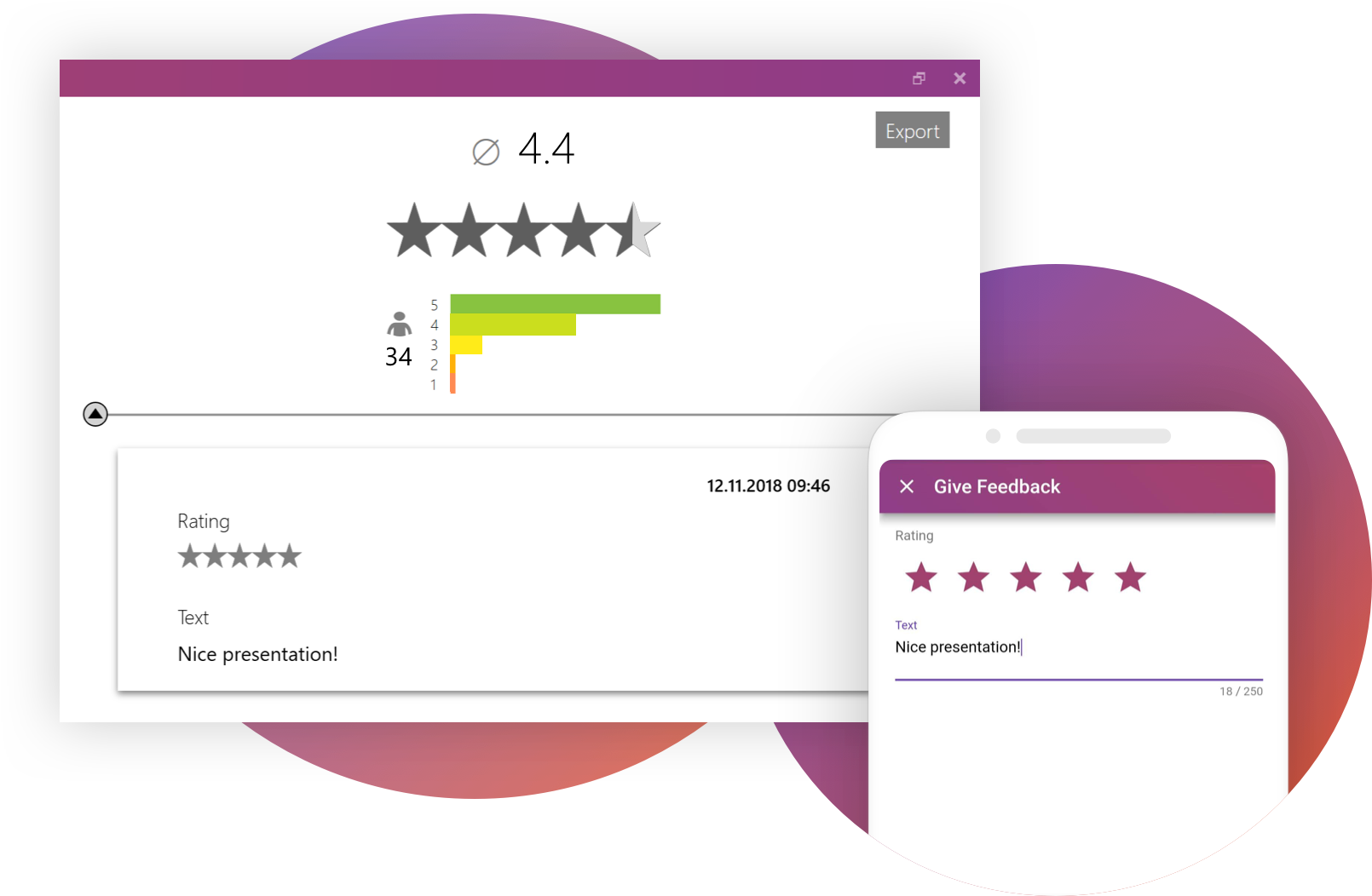

2. Online: Get digital Feedback

In the year 2020, there's got to be a better way of giving feedback, right? There is, and you should definitely try it out! SlideLizard is a free PowerPoint extension that allows you to get your audience's feedback in the quickest and easiest way possible. You can of course customize the feedback question form to your specific needs and make sure you get exactly the kind of feedback you need. You can download SlideLizard right away, or scroll down to read some more about the tool.

Pros:

- quick and easy to access

- easy and fast export, analysis and overview of feedback

- save feedback directly on your computer

Cons:

- participants need a working Internet connection (but that usually isn't a problem nowadays)

3. Verbal Feedback

"So, how did you like the presentation?", asks the lecturer. A few people in the audience give a friendly nod, and one or two might even say something about how the slides were nice and the content interesting. Getting verbal feedback is hard, especially in big groups. If you really want to analyse and improve your presentation habits and skills, we recommend using one of the other methods. However, if you have no internet connection and forgot to bring your feedback sheets, asking for verbal feedback is still better than nothing.

Pros:

- no prerequisites

- open format

- okay for small audiences

Cons:

- not anonymous (people might not be honest)

- time-consuming

- no detailed evaluation

- no way to save the feedback (except for your memory)

- not suitable for big audiences

Feedback to yourself - Self Assessment

I've mentioned before that it is incredibly important to not only let others tell you what went well and what didn't in your presentation. Your own impressions are of huge value, too. After each presentation you give, ask yourself the following questions (or better yet, write your answers down!):

- What went wrong (in my opinion)? What can I do to prevent this from happening next time?

- What went well? What was well received by the audience? What should I do more of?

- How was I feeling during this presentation? (Nervous? Confident? ...)

Tip: If you really want to actively work on your presentation skills, filming yourself while presenting and analysing the video after is a great way to go. You'll get a different view on the way you talk, move, and come across.

Digital Feedback with SlideLizard

Were you intrigued by the idea of easy online feedback? With SlideLizard your attendees can easily give you feedback directly with their smartphones. After the presentation you can analyze the results in detail.

- type in your own feedback questions

- choose your rating scale: 1-5 points, 1-6 points, 1-5 stars or 1-6 stars

- show your attendees an open text field and let them enter any text they want

Note: SlideLizard is amazing for giving and receiving feedback, but it's definitely not the only thing it's great for. Once you download the extension, you get access to the most amazing tools - most importantly, live polls and quizzes, live Q&A sessions, attendee note taking, content and slide sharing, and presentation analytics. And the best thing about all this? You can get it for free, and it is really easy to use, as it is directly integrated in PowerPoint! Click here to discover more about SlideLizard.

Free Download: Printable Feedback Sheets for Business or School Presentations

If you'd rather stick with the good old paper-and-pen method, that's okay, too. You can choose between our two feedback sheet templates: there is one tailored to business presentations and seminars, and one that is created specifically for teachers assessing their students. Both forms can be downloaded as a Word, Excel, or PDF file. A lot of thought has gone into both of the forms, so you can benefit as much as possible; however, if you feel like you need to change some questions in order to better suit your needs, feel free to do so!

Feedback form for business

Template as PDF, Word & Excel - perfect for seminars, trainings,...

Feedback form for teachers (school or university)

Template as PDF, Word & Excel - perfect for school or university,...

Where can I find a free feedback form for presentations?

There are many templates available online. We designed two exclusive, free-to-download feedback sheets, which you can get in our blog article.

What's the best way to get feedback for presentations?

You can get feedback on your presentations by using feedback sheets, asking for feedback verbally, or, as the easiest and fastest option, getting digital feedback with an online tool.

About the author

Pia Lehner-Mittermaier

Pia works in Marketing as a graphic designer and writer at SlideLizard. She uses her vivid imagination and creativity to produce good content.

Rubric Template for Evaluating Student Presentations

Used by permission of Caroline McCullen, Instructional Technologist, SAS inSchool, SAS Campus Dr., Cary, NC 27513, 888-760-2515 X12869, FAX: 919-677-4444. MidLink Magazine. Developed by Information Technology Evaluation Services, NC Department of Public Instruction, Caroline McCullen.

| Criterion | 1 | 2 | 3 | 4 | Total |
| --- | --- | --- | --- | --- | --- |
| Organization | Audience cannot understand presentation because there is no sequence of information. | Audience has difficulty following presentation because student jumps around. | Student presents information in logical sequence which audience can follow. | Student presents information in logical, interesting sequence which audience can follow. | |
| Subject Knowledge | Student does not have grasp of information; student cannot answer questions about subject. | Student is uncomfortable with information and is able to answer only rudimentary questions. | Student is at ease with expected answers to all questions, but fails to elaborate. | Student demonstrates full knowledge (more than required) by answering all class questions with explanations and elaboration. | |
| Graphics | Student uses superfluous graphics or no graphics. | Student occasionally uses graphics that rarely support text and presentation. | Student's graphics relate to text and presentation. | Student's graphics explain and reinforce screen text and presentation. | |
| Mechanics | Student's presentation has four or more spelling errors and/or grammatical errors. | Presentation has three misspellings and/or grammatical errors. | Presentation has no more than two misspellings and/or grammatical errors. | Presentation has no misspellings or grammatical errors. | |
| Eye Contact | Student reads all of report with no eye contact. | Student occasionally uses eye contact, but still reads most of report. | Student maintains eye contact most of the time but frequently returns to notes. | Student maintains eye contact with audience, seldom returning to notes. | |
| Elocution | Student mumbles, incorrectly pronounces terms, and speaks too quietly for students in the back of class to hear. | Student's voice is low. Student incorrectly pronounces terms. Audience members have difficulty hearing presentation. | Student's voice is clear. Student pronounces most words correctly. Most audience members can hear presentation. | Student uses a clear voice and correct, precise pronunciation of terms so that all audience members can hear presentation. | |

Total Points:
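The rubric above rates six criteria on a scale of 1 to 4 and ends with a Total Points line. As a minimal sketch of how that total could be tallied, assuming the total is a plain sum of the six ratings (the rubric does not say otherwise), the following uses the rubric's criterion names; the function name and the sample ratings are hypothetical.

```python
# Criterion names are taken from the rubric; everything else is illustrative.
CRITERIA = (
    "Organization",
    "Subject Knowledge",
    "Graphics",
    "Mechanics",
    "Eye Contact",
    "Elocution",
)
MIN_RATING, MAX_RATING = 1, 4


def total_points(ratings):
    """Sum the six 1-4 ratings, assuming an unweighted total."""
    missing = [name for name in CRITERIA if name not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {', '.join(missing)}")
    for name in CRITERIA:
        if not MIN_RATING <= ratings[name] <= MAX_RATING:
            raise ValueError(f"{name} must be rated {MIN_RATING}-{MAX_RATING}")
    return sum(ratings[name] for name in CRITERIA)


# Hypothetical ratings for one student presentation.
sample = {
    "Organization": 3,
    "Subject Knowledge": 4,
    "Graphics": 3,
    "Mechanics": 2,
    "Eye Contact": 3,
    "Elocution": 4,
}
max_points = len(CRITERIA) * MAX_RATING
print(f"Total Points: {total_points(sample)} / {max_points}")
```

Validating the ratings before summing catches a skipped criterion, which is an easy mistake when filling in a grid by hand.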

Oral assessment

Oral assessment is a common practice across education and comes in many forms. Here is basic guidance on how to approach it.

1 August 2019

In oral assessment, students speak to provide evidence of their learning. Internationally, oral examinations are commonplace.

We use a wide variety of oral assessment techniques at UCL.

Students can be asked to:

- present posters

- use presentation software such as PowerPoint or Prezi

- perform in a debate

- present a case

- answer questions from teachers or their peers.

Students’ knowledge and skills are explored through dialogue with examiners.

Teachers at UCL recommend oral examinations, because they provide students with the scope to demonstrate their detailed understanding of course knowledge.

Educational benefits for your students

Good assessment practice gives students the opportunity to demonstrate learning in different ways.

Some students find it difficult to write so they do better in oral assessments. Others may find it challenging to present their ideas to a group of people.

Oral assessment takes account of diversity and enables students to develop verbal communication skills that will be valuable in their future careers.

Marking criteria and guides can be carefully developed so that assessment processes can be quick, simple and transparent.

How to organise oral assessment

Oral assessment can take many forms.

Audio and/or video recordings can be uploaded to Moodle if live assessment is not practicable.

Tasks can range from individual or group talks and presentations to dialogic oral examinations.

Oral assessment works well as a basis for feedback to students and/or to generate marks towards final results.

1. Consider the learning you're aiming to assess

How can you best offer students the opportunity to demonstrate that learning?

The planning process needs to start early because students must know about and practise the assessment tasks you design.

2. Inform the students of the criteria

Discuss the assessment criteria with students, ensuring that you include (but don’t overemphasise) presentation or speaking skills.

Identify activities which encourage the application or analysis of knowledge. You could choose from the options below or devise a task with a practical element adapted to learning in your discipline.

3. Decide what kind of oral assessment to use

Options for oral assessment can include:

Assessment task

- Presentation

- Question and answer session.

Individual or group

If group, how will you distribute the tasks and the marks?

Combination with other modes of assessment

- Oral presentation of a project report or dissertation.

- Oral presentation of posters, diagrams, or museum objects.

- Commentary on a practical exercise.

- Questions to follow up written tests, examinations, or essays.

Decide on the weighting of the different assessment tasks and clarify how the assessment criteria will be applied to each.

Peer or staff assessment or a combination: groups of students can assess other groups or individuals.

4. Brief your students

When you’ve decided which options to use, provide students with detailed information.

Integrate opportunities to develop the skills needed for oral assessment progressively as students learn.

If you can involve students in formulating assessment criteria, they will be motivated and engaged and they will gain insight into what is required, especially if examples are used.

5. Planning, planning, planning!

Plan the oral assessment event meticulously.

Stick rigidly to planned timing. Ensure that students practise presentations with time limitations in mind. Allow time between presentations or interviews and keep presentations brief.

6. Decide how you will evaluate

Decide how you will evaluate presentations or students’ responses.

It is useful to create an assessment sheet with a grid or table using the relevant assessment criteria.

Focus on core learning outcomes, avoiding detail.

Two assessors must be present to:

- evaluate against a range of specific core criteria

- focus on forming a holistic judgment.

Leave time to make a final decision on marks, perhaps after every four presentations. Refer to audio recordings later for borderline cases.
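The assessment sheet and the borderline check described in this step can be sketched in a few lines. This is only an illustration under stated assumptions: one assessor marks against the criteria on the sheet while the other gives a holistic mark, and a case counts as borderline when the combined mark sits near a pass mark or the two assessors disagree widely. The pass mark, both thresholds, the criterion names and the function names are all hypothetical and not part of the UCL guidance.

```python
from statistics import mean

# All three values are hypothetical; use your own marking scheme.
PASS_MARK = 50
BORDERLINE_MARGIN = 3
MAX_DISAGREEMENT = 5


def criteria_mark(sheet):
    """Average the marks on the criteria-based assessment sheet."""
    return mean(list(sheet.values()))


def needs_recording_review(sheet, holistic_mark):
    """Flag a presentation whose audio recording should be revisited."""
    from_criteria = criteria_mark(sheet)
    combined = mean([from_criteria, holistic_mark])
    near_boundary = abs(combined - PASS_MARK) <= BORDERLINE_MARGIN
    assessors_disagree = abs(from_criteria - holistic_mark) > MAX_DISAGREEMENT
    return near_boundary or assessors_disagree


# Hypothetical sheet for one presentation, marked out of 100 per criterion.
sheet = {"argument": 55, "evidence": 48, "delivery": 50}
print(criteria_mark(sheet), needs_recording_review(sheet, holistic_mark=52))
```

Keeping the thresholds as named constants makes it easy to agree on them with the second assessor before the event.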

7. Use peers to assess presentations

Students will learn from presentations especially if you can use ‘audio/video recall’ for feedback.

Let speakers talk through aspects of the presentation, pointing out areas they might develop. Then discuss your evaluation with them. This can also be done in peer groups.

If you have large groups of students, they can support each other, each providing feedback to several peers. They can use the same assessment sheets as teachers. Marks can also be awarded for feedback.

8. Use peer review

A great advantage of oral assessment is that learning can be shared and peer reviewed, in line with academic practice.

There are many variants on the theme so why not let your students benefit from this underused form of assessment?

This guide has been produced by the UCL Arena Centre for Research-based Education. You are welcome to use this guide if you are from another educational facility, but you must credit the UCL Arena Centre.

Eberly Center

Teaching Excellence & Educational Innovation

Instructor: Robert Dammon

Course: 45-901: Corporate Restructuring, Tepper School of Business

Assessment: Rating Scale for Assessing Oral Presentations

A key business communication skill is the ability to give effective oral presentations, and it is important for students to practice this skill. I wanted to create a systematic and consistent assessment of students’ oral presentation skills and to grade these skills consistently within the class and across semesters.

Implementation:

This oral presentation is part of a larger finance analysis assignment that students complete in groups. Each group decides which members will be involved in the oral presentation. I constructed a rating scale that decomposes the oral presentation into four major components: (1) preparation, (2) quality of handouts and overheads, (3) quality of presentation skills, and (4) quality of analysis. I rate preparation as “yes” or “no”; all other components are rated on a five-point scale. Immediately after the class in which the presentation was given, I complete the rating scale, write a few notes, and calculate an overall score, which accounts for 20% of the group’s grade on the overall assignment.
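The arithmetic behind this rating scale can be sketched as follows. The 20% weight and the three five-point components come from the description above; how the three ratings are combined into an overall score is not stated, so the equal-weight average below is an assumption, and the yes/no preparation rating is left out of the number for the same reason. Function names, component keys and sample values are hypothetical.

```python
# Components rated on the five-point scale, as described in the text.
FIVE_POINT_COMPONENTS = (
    "handouts_and_overheads",
    "presentation_skills",
    "analysis",
)
PRESENTATION_WEIGHT = 0.20  # share of the group's assignment grade (from the text)


def presentation_score(ratings):
    """Convert three 1-5 ratings to a 0-100 score (equal weights assumed)."""
    for name in FIVE_POINT_COMPONENTS:
        if not 1 <= ratings[name] <= 5:
            raise ValueError(f"{name} must be rated 1-5")
    earned = sum(ratings[name] for name in FIVE_POINT_COMPONENTS)
    return 100 * earned / (5 * len(FIVE_POINT_COMPONENTS))


def assignment_grade(presentation, rest_of_assignment):
    """Blend the presentation score with the rest of the assignment."""
    return (PRESENTATION_WEIGHT * presentation
            + (1 - PRESENTATION_WEIGHT) * rest_of_assignment)


# Hypothetical ratings for one group.
ratings = {"handouts_and_overheads": 4, "presentation_skills": 5, "analysis": 3}
score = presentation_score(ratings)
print(score, assignment_grade(score, rest_of_assignment=90))
```

Because the presentation carries a fifth of the grade, a ten-point change in the presentation score moves the assignment grade by two points.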

The presentation gives students important practice in oral communication, and the rating scale has made my grading much more consistent.

I have used this rating scale for several years, and the oral presentation is a standard course component. I have considered adapting the rating scale so that students also rate the oral presentation; however, I would want to give the students an abbreviated rating scale so that they focus primarily on the presentation.

Animoto Blog

How to Create a Rubric to Assess Student Videos

Jul 25, 2022

Using video in the classroom helps to keep students engaged and makes your lessons more memorable. Students can even make their own videos to share what they've learned in a way that is exciting and fun. But what do you do when it comes to grading students’ video projects?

One of the easiest ways to show students what’s expected of them is to create a rubric breaking down the different elements of a video project. You may have already created rubrics for other class projects — ones that involved posters, labs, or group work. Rubrics for video projects are similar. The medium may be different, but the learning and thinking students do are still there for you to assess.

Ways to assess a video:

You can use video projects at many different levels. Some of the elements in your rubric are going to be the same, whether you’re assigning a video to a high school physics class or using Animoto for a fourth grade vocabulary project.

Here are some things to include when developing a video project rubric:

Content: Clearly state what information and how much of it students should include. For example, in a biography project, students might be expected to include five interesting facts about their person in order to get the highest number of points on the rubric.

Images: Make sure your rubric states how many images you expect in an excellent, good, average, and poor project. You might want to add that those images should be relevant to the topic (e.g. no skateboards in a butterfly video) and appropriate. If you want to emphasize research skills, you could also require they use public domain images or cite their image sources.

Sources: While this may not be necessary for very young students, middle and high school student videos can and should include a text slide with their bibliography or an accompanying paper bibliography.

Length: Just as you would set a page limit for an essay, you should set limits on video length, especially if you want to share the videos with the class. That length depends on your project — a simple “About Me” video project can be a minute long, while a more involved science or English assignment could be two to three minutes.

The style and flair of the video itself should really take second place to the student’s process — how a student researched the project, chose images, and organized their information. When your rubric reflects that, you’re truly assessing what a student learned.

Video project ideas

Creating Animoto accounts for you and your students is completely free! Once you have your free account set up, there are endless ways to strengthen your lessons using video. Here are some of our favorites.

Digital scavenger hunt

Take your lessons outside of the classroom with a digital scavenger hunt! Have your students find specific plants and animals, architectural landmarks, historical features, and even shapes in their real-world environments and photograph them as they go. Then, they can add them to an exciting video that can be shared with the class using our Educational Presentation template.

Video autobiography or biography

Have your students research important figures throughout history or even share their own life stories with a video! The Self-Introduction template makes it easy to share the most important moments of one's life in a fun and engaging way.

Vocabulary videos

Put new vocabulary into action with a video! You can teach students new vocabulary words and then have them find real-world examples. Or, let students share all the new words they've learned over summer break using the Vocabulary Lesson template.

Book trailers

Book trailers are a great way to get the story across in just a few short minutes. Whether starting from scratch on a brand new book or creating a summary of a favorite book, the Book Trailer template makes it simple.

Video presentations

Video presentations are a great way to showcase your learnings without the anxiety of a traditional presentation. They can be used in virtual classrooms or shared "IRL" to supplement student presentations. The Educational Presentation template is versatile, engaging, and easy to customize and share.

Sports recap