
Computer Science, 2022, Vol. 49, Issue (9): 162-171. doi: 10.11896/jsjkx.220500204

Artificial Intelligence

Temporal Knowledge Graph Representation Learning

XU Yong-xin (1, 2), ZHAO Jun-feng (1, 2, 3), WANG Ya-sha (1, 2, 3), XIE Bing (1, 2, 3), YANG Kai (1, 2, 3)

- (1) School of Computer Science, Peking University, Beijing 100871, China
- (2) Key Laboratory of High Confidence Software Technologies, Ministry of Education, Beijing 100871, China
- (3) Peking University Information Technology Institute (Tianjin Binhai), Tianjin 300450, China

- Received: 2021-10-22; Revised: 2022-05-16; Online: 2022-09-15; Published: 2022-09-09

- About the authors: XU Yong-xin, born in 1998, postgraduate student. His main research interests include knowledge graphs. ZHAO Jun-feng, born in 1974, Ph.D., research professor, is a member of the China Computer Federation. Her main research interests include big data analysis, knowledge graphs, and urban computing.

- Supported by: National Natural Science Foundation of China (62172011).


Abstract: As a structured form of human knowledge, knowledge graphs support the semantic interoperability of massive, multi-source, heterogeneous data and effectively support tasks such as data analysis, which has attracted attention from both academia and industry. At present, most knowledge graphs are constructed from non-real-time static data, without considering the temporal characteristics of entities and relations. However, data in application scenarios such as social network communication, financial trading, and epidemic spreading networks are highly dynamic and exhibit complex temporal properties. How to use time series data to build knowledge graphs and model them effectively is a challenging problem. Recently, numerous studies have used the temporal information in time series data to enrich knowledge graphs, endowing them with dynamic features and expanding fact triples into quadruples (head entity, relation, tail entity, time). Knowledge graphs that represent knowledge with such time-related quadruples are collectively referred to as temporal knowledge graphs. This paper surveys research on temporal knowledge graph representation learning by sorting out and analyzing the corresponding literature. Specifically, it first briefly introduces the background and definition of temporal knowledge graphs. Next, it summarizes the advantages of temporal knowledge graph representation learning methods over traditional knowledge graph representation learning methods. Then, it elaborates on recent temporal knowledge graph representation learning methods from the perspective of how they model facts, introduces the datasets used by these methods, and summarizes the main challenges of this technology. Finally, it discusses future research directions.

Key words: Knowledge graph, Deep learning, Representation learning, Temporal information, Dynamic process


Cite this article

XU Yong-xin, ZHAO Jun-feng, WANG Ya-sha, XIE Bing, YANG Kai. Temporal Knowledge Graph Representation Learning[J].Computer Science, 2022, 49(9): 162-171.


URL: https://www.jsjkx.com/EN/10.11896/jsjkx.220500204

https://www.jsjkx.com/EN/Y2022/V49/I9/162


A Survey on Temporal Knowledge Graph: Representation Learning and Applications

Knowledge graphs have garnered significant research attention and are widely used to enhance downstream applications. However, most current studies focus on static knowledge graphs, whose facts do not change over time, and disregard how knowledge evolves. As a result, temporal knowledge graphs have attracted increasing attention, because a large amount of structured knowledge holds only within a specific period. Knowledge graph representation learning aims to learn low-dimensional vector embeddings for the entities and relations in a knowledge graph. Representation learning for temporal knowledge graphs incorporates time information into the standard knowledge graph framework and can model the dynamics of entities and relations over time. In this paper, we conduct a comprehensive survey of temporal knowledge graph representation learning and its applications. We begin with the definitions, datasets, and evaluation metrics for temporal knowledge graph representation learning. Next, we propose a taxonomy based on the core technologies of temporal knowledge graph representation learning methods and provide an in-depth analysis of the methods in each category. Then, we present various downstream applications of temporal knowledge graphs. Finally, we conclude the paper and outline future research directions in this area.

- (a) School of Computer Science and Technology, East China Normal University, 3663 North Zhongshan Road, Shanghai 200062, China
- (b) College of Computer Science and Technology, Guizhou University, 2708 South Huaxi Avenue, Guiyang, Guizhou 550025, China
- (c) Department of Industrial Engineering, Tsinghua University, 30 Shuangqing Road, Beijing 100084, China

1 Introduction

Knowledge graphs (KGs) describe the real world with structured facts. A fact consists of two entities and a relation connecting them, which can be formally represented as a triple (head, relation, tail); for example, (Barack Obama, make statement, Iran). Knowledge graph representation learning (KGRL) [ 33 ] seeks to learn low-dimensional vector embeddings of entities and relations and to use these embeddings for downstream tasks such as information retrieval [ 16 ], question answering [ 29 ], and recommender systems [ 2 ].

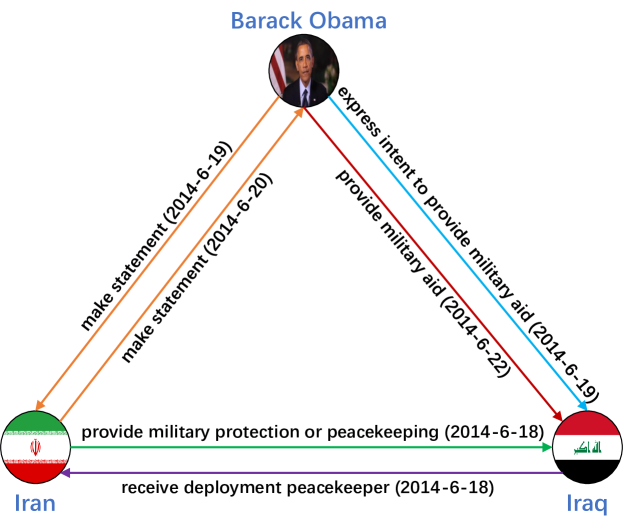

Existing KGs ignore the timestamps indicating when facts occurred and therefore cannot reflect how knowledge evolves over time. To represent KGs more accurately, Wikidata [ 73 ] and YAGO2 [ 32 ] add temporal information to facts, and some event knowledge graphs [ 55 , 42 ] also record the timestamps at which events occurred. Knowledge graphs with temporal information are called temporal knowledge graphs (TKGs). Figure 1 shows a subgraph of a temporal knowledge graph. Facts in TKGs are expanded into quadruples (head, relation, tail, timestamp); a specific instance is (Barack Obama, make statement, Iran, 2014-6-19).

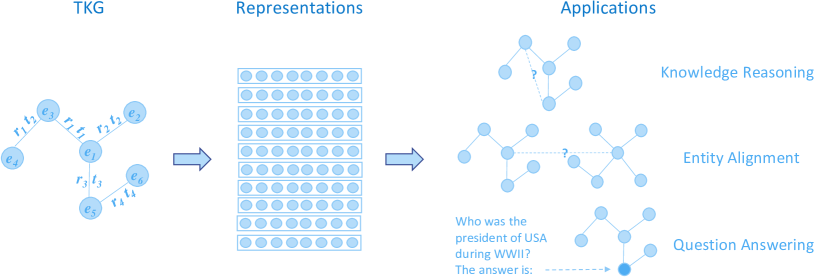

The emergence of TKGs has led to increased researcher interest in temporal knowledge graph representation learning (TKGRL) [ 53 ] . The acquired low-dimensional vector representations are capable of modelling the dynamics of entities and relations over time, thereby improving downstream applications such as time-aware knowledge reasoning [ 34 ] , entity alignment [ 81 ] , and question answering [ 59 ] .

Temporal knowledge graph representation learning and its applications are at the forefront of current research. Nevertheless, no comprehensive survey of the topic is yet available. Ji et al. [ 33 ] provide a survey on KGs that includes a section on TKGs; however, this section covers only a limited number of early TKGRL methods. The survey [ 53 ] focuses on TKGs, but one of its sections is devoted to representation learning methods for static knowledge graphs, and only five TKGRL models are elaborated in detail. The survey [ 6 ] addresses temporal knowledge graph completion (TKGC) and focuses solely on interpolation-based temporal knowledge graph reasoning.

This paper comprehensively summarizes current research on TKGRL and its applications. Our main contributions are as follows: (1) We conduct an extensive investigation of TKGRL methods to date, analyze their core technologies, and propose a new taxonomy. (2) We divide TKGRL methods into ten categories. Within each category, we detail the key components of different methods and analyze their strengths and weaknesses. (3) We introduce the latest developments in applications related to TKGs, including temporal knowledge graph reasoning, entity alignment between temporal knowledge graphs, and question answering over temporal knowledge graphs. (4) We summarize existing TKGRL research and point out future directions to guide further work.

The remainder of this paper is organized as follows. Section 2 introduces the background of temporal knowledge graphs, including definitions, datasets, and evaluation metrics. Section 3 summarizes the various temporal knowledge graph representation learning methods, including transformation-based methods, decomposition-based methods, graph neural networks-based methods, capsule network-based methods, autoregression-based methods, temporal point process-based methods, interpretability-based methods, language model methods, few-shot learning methods, and others. Section 4 introduces applications of temporal knowledge graphs, such as temporal knowledge graph reasoning, entity alignment between temporal knowledge graphs, and question answering over temporal knowledge graphs. Section 5 highlights future directions of TKGRL, including scalability, interpretability, information fusion, and the integration of large language models. Section 6 concludes the paper.

2 Background

2.1 Problem Formulation

Take Figure 1 as an example: the entity set $E$ contains {Barack Obama, Iran, Iraq}; the relation set contains {make statement, express intent to provide military aid, provide military aid, provide military protection or peacekeeping, receive deployment peacekeeper}; the time set contains {2014-6-18, 2014-6-19, 2014-6-20, 2014-6-22}; and the fact set contains {(Iran, provide military protection or peacekeeping, Iraq, 2014-6-18), (Iraq, receive deployment peacekeeper, Iran, 2014-6-18), (Barack Obama, make statement, Iran, 2014-6-19), (Iran, make statement, Barack Obama, 2014-6-20), (Barack Obama, express intent to provide military aid, Iraq, 2014-6-19), (Barack Obama, provide military aid, Iraq, 2014-6-22)}.
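As an illustration, the following Python sketch stores the Figure 1 facts as (head, relation, tail, timestamp) tuples and derives the entity, relation, and time sets from the fact set. The variable names and the `snapshot` helper (which returns the static triples that hold at a single timestamp) are hypothetical and serve only to make the formulation concrete; relation names are lower-cased for consistency.

```python
# Facts of the Figure 1 subgraph as (head, relation, tail, timestamp) quadruples.
facts = [
    ("Iran", "provide military protection or peacekeeping", "Iraq", "2014-6-18"),
    ("Iraq", "receive deployment peacekeeper", "Iran", "2014-6-18"),
    ("Barack Obama", "make statement", "Iran", "2014-6-19"),
    ("Iran", "make statement", "Barack Obama", "2014-6-20"),
    ("Barack Obama", "express intent to provide military aid", "Iraq", "2014-6-19"),
    ("Barack Obama", "provide military aid", "Iraq", "2014-6-22"),
]

# The entity, relation, and time sets are all derived from the fact set.
entities = {h for h, _, _, _ in facts} | {t for _, _, t, _ in facts}
relations = {r for _, r, _, _ in facts}
timestamps = {tau for _, _, _, tau in facts}


def snapshot(facts, tau):
    """Return the static (head, relation, tail) triples that hold at timestamp tau."""
    return {(h, r, t) for h, r, t, ts in facts if ts == tau}
```

Grouping facts by timestamp in this way is what turns a TKG into the sequence of snapshots used by the autoregression-based methods later in this paper.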

2.2 Datasets

There are four commonly used datasets for temporal knowledge graph representation learning.

ICEWS The integrated crisis early warning system (ICEWS) [ 55 ] captures and processes millions of data points from digitized news, social media, and other sources to predict, track and respond to events around the world, primarily for early warning. Three subsets are typically used: ICEWS14, ICEWS05-15, and ICEWS18, which contain events in 2014, 2005-2015, and 2018, respectively.

GDELT The Global Database of Events, Language, and Tone (GDELT) [ 42 ] is a global database of human society. It monitors the world's broadcast, print, and web news from every country in over 100 languages and is updated every 15 minutes.

Wikidata Wikidata [ 73 ] is a collaborative, multilingual knowledge base hosted by the Wikimedia Foundation to support resource sharing across Wikipedia and other Wikimedia projects. It is a free and open knowledge base that can be read and edited by both humans and machines, and many of its items carry temporal information.

YAGO YAGO [ 32 ] is a linked database developed by the Max Planck Institute in Germany. It integrates data from Wikipedia, WordNet, and GeoNames, combining WordNet's word definitions with Wikipedia's category system and adding temporal and spatial information to many of its facts.

TKG datasets often require task-specific processing for different downstream applications. Table 1 presents the statistics of the datasets used for various TKG tasks. In knowledge reasoning tasks, datasets are typically divided into training, validation, and test sets according to the task type (interpolation or extrapolation). In entity alignment tasks, because the same real-world entities must be aligned across different KGs, each dataset includes two temporal knowledge graphs that are learned simultaneously. For question answering tasks, the datasets include not only the temporal knowledge graphs in which answers are searched but also time-related questions (not shown here).
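The difference between the two reasoning settings can be sketched as follows, assuming that extrapolation splits the quadruples chronologically (every test timestamp comes after every training timestamp) while interpolation splits them at random over the whole time range. The function names and the 80/10/10 ratios are illustrative assumptions, not the splits of any particular dataset in Table 1.

```python
import random


def extrapolation_split(quads, train_frac=0.8, valid_frac=0.1):
    """Chronological split: validation and test timestamps follow the training ones."""
    times = sorted({q[3] for q in quads})
    n_train = int(len(times) * train_frac)
    n_valid = int(len(times) * valid_frac)
    train_t = set(times[:n_train])
    valid_t = set(times[n_train:n_train + n_valid])
    train = [q for q in quads if q[3] in train_t]
    valid = [q for q in quads if q[3] in valid_t]
    test = [q for q in quads if q[3] not in train_t and q[3] not in valid_t]
    return train, valid, test


def interpolation_split(quads, train_frac=0.8, valid_frac=0.1, seed=0):
    """Random split: test facts may fall at timestamps also seen during training."""
    shuffled = quads[:]
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_valid = int(len(shuffled) * valid_frac)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_valid],
            shuffled[n_train + n_valid:])
```

The chronological split matches the goal of predicting future facts, whereas the random split corresponds to completing missing facts at known timestamps.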

2.3 Evaluation Metrics

The evaluation metrics used to verify the performance of TKGRL methods are $MRR$ (mean reciprocal rank) and $Hits@k$.

$\boldsymbol{MRR}$ The $MRR$ is the average of the reciprocal ranks of the correct answers. It can be calculated as follows:

$$MRR = \frac{1}{|S|}\sum_{i=1}^{|S|}\frac{1}{rank_{i}}$$

where $S$ is the set of all correct answers and $|S|$ is the number of correct answers. The prediction is a list of candidates sorted by predicted probability from high to low, and $rank_{i}$ is the rank of the $i$-th correct answer in that list. The higher the MRR, the better the performance.
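A minimal Python sketch of the MRR computation described in this paragraph, assuming each query's candidates come with a score dictionary. `rank_of` and `mean_reciprocal_rank` are hypothetical helper names, and tied scores are not treated specially (equal scores keep the order in which candidates appear in the dictionary).

```python
def rank_of(answer, scores):
    """1-based rank of `answer` when candidates are sorted by score from high to low."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return ordered.index(answer) + 1


def mean_reciprocal_rank(ranks):
    """MRR: average of 1 / rank_i over the ranks of all correct answers."""
    return sum(1.0 / r for r in ranks) / len(ranks)


# Example: three queries whose correct answers were ranked 1st, 2nd, and 4th.
print(mean_reciprocal_rank([1, 2, 4]))
```

For ranks 1, 2, and 4 the MRR is (1 + 1/2 + 1/4) / 3 ≈ 0.583; a correct answer ranked first in every query would give the maximum value of 1.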

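$\boldsymbol{Hits@k}$ The $Hits@k$ reports the proportion of correct answers ranked in the top $k$ predictions. It can be calculated by the following equation:

$$Hits@k = \frac{1}{|S|}\sum_{i=1}^{|S|}\mathbb{I}(rank_{i}\leq k)$$

where $\mathbb{I}(\cdot)$ is the indicator function, which equals 1 if the condition holds and 0 otherwise. The higher the Hits@k, the better the performance.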
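Hits@k can be computed from the same list of ranks as MRR. The sketch below uses a hypothetical helper `hits_at_k` that counts how many correct answers fall within the top k positions.

```python
def hits_at_k(ranks, k):
    """Fraction of correct answers whose rank is at most k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


ranks = [1, 2, 4, 12]
for k in (1, 3, 10):
    print(k, hits_at_k(ranks, k))
```

For ranks [1, 2, 4, 12], Hits@1 = 0.25, Hits@3 = 0.5, and Hits@10 = 0.75, which is why papers usually report several values of k side by side.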

3 Temporal Knowledge Graph Representation Learning Methods

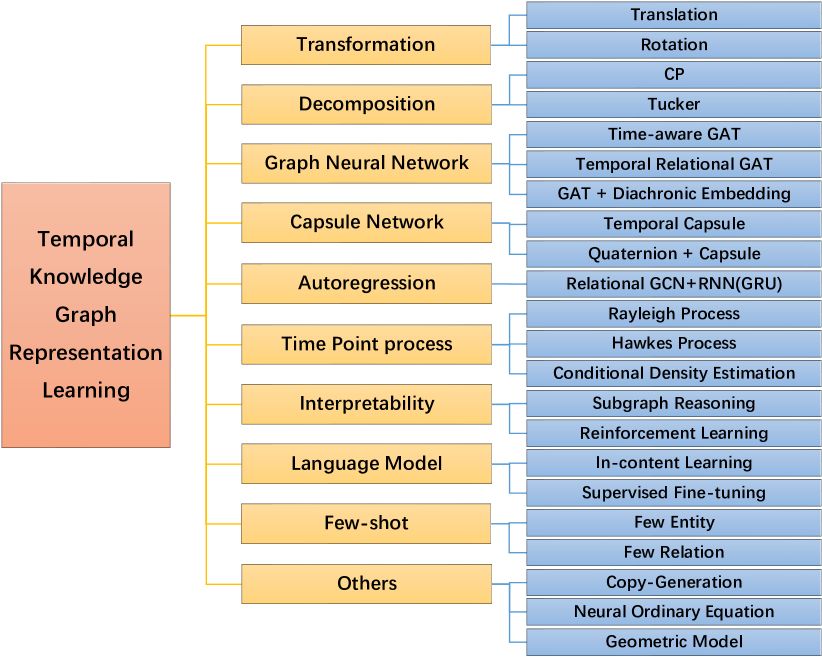

Compared to KGs, TKGs contain additional timestamps, which TKGRL methods take into account. These methods can be broadly categorized into transformation-based, decomposition-based, graph neural networks-based, and capsule network-based approaches. In addition, a temporal knowledge graph can be viewed as a sequence of snapshots captured at different timestamps or as a series of events occurring over continuous time, and can therefore be learned with autoregressive or temporal point process techniques. Moreover, some methods focus on interpretability, language models, or few-shot learning. Thus, based on the core technologies employed by TKGRL methods, we group them into the following categories: transformation-based methods, decomposition-based methods, graph neural networks-based methods, capsule network-based methods, autoregression-based methods, temporal point process-based methods, interpretability-based methods, language model methods, few-shot learning methods, and others. Figure 3 visualizes this categorization of TKGRL methods.

The notations used by these methods vary, so we define our own notation to describe them uniformly. We use lower-case letters for scalars, bold lower-case letters for vectors, bold upper-case letters for matrices, bold calligraphic upper-case letters for order-3 tensors, and bold script upper-case letters for order-4 tensors. The main notations and their descriptions are listed in Table 2.

3.1 Transformation-based Methods

In transformation-based methods, timestamps or relations are regarded as transformations between entities. Building on existing KGRL methods, these methods learn TKG representations by integrating temporal information or by mapping entities and relations onto temporal hyperplanes. The transformations are either translation-based or rotation-based.

Typical translation-based scoring functions take the following forms:

- $\|\boldsymbol{h}+\boldsymbol{r}-\boldsymbol{t}\|_{1/2}$
- $\|\boldsymbol{h}+\boldsymbol{r}+\boldsymbol{\tau}-\boldsymbol{t}\|_{1/2}$
- $\|\boldsymbol{h}+\boldsymbol{r}_{seq}-\boldsymbol{t}\|_{2}$
- $\|\boldsymbol{h}_{\tau}+\boldsymbol{r}_{\tau}-\boldsymbol{t}_{\tau}\|_{1/2}$
- $\|\boldsymbol{h}_{\tau}+\boldsymbol{r}-\overline{\boldsymbol{t}}_{\tau}\|_{1}$
- $\|\boldsymbol{h}_{\tau}+\boldsymbol{r}-\overline{\boldsymbol{t}}_{\tau}\|_{2}$
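To make the translation-based scoring functions of this subsection concrete, the following NumPy sketch computes a translational distance with an optional timestamp embedding $\boldsymbol{\tau}$, plus one way of obtaining time-specific vectors such as $\boldsymbol{h}_{\tau}$: projecting onto a timestamp-specific hyperplane with normal vector $\boldsymbol{w}_{\tau}$. The hyperplane projection is an assumption based on the temporal-hyperplane idea described at the start of this subsection, and the function names are hypothetical.

```python
import numpy as np


def translational_distance(h, r, t, tau=None, p=1):
    """||h + r (+ tau) - t||_p; a smaller distance means a more plausible fact.

    With tau=None this is the static form ||h + r - t||; passing a timestamp
    embedding tau gives the time-translated form ||h + r + tau - t||.
    """
    x = h + r - t
    if tau is not None:
        x = x + tau
    return np.linalg.norm(x, ord=p)


def project_to_hyperplane(e, w):
    """Project e onto the hyperplane with normal w (assumed time-specific): e - (w.e) w."""
    w = w / np.linalg.norm(w)
    return e - np.dot(w, e) * w


# A fact that only "closes" once the timestamp translation is added.
h, r = np.array([0.0, 0.0]), np.array([1.0, 0.0])
t, tau = np.array([1.0, 1.0]), np.array([0.0, 1.0])
print(translational_distance(h, r, t), translational_distance(h, r, t, tau))
```

In the toy example the static distance is 1 while the time-aware distance is 0, which is the kind of effect a timestamp embedding is meant to capture; in practice all vectors are learned, typically with a margin-based ranking loss.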

3.2 Decomposition-based Methods

The main task of representation learning is to learn low-dimensional vector representations of the knowledge graph. Tensor decomposition has three applications, namely dimension reduction, missing data completion, and implicit relation mining, which match the needs of knowledge graph representation learning. A knowledge graph consists of triples and can be represented by an order-3 tensor. A temporal knowledge graph, with its additional temporal information, can be represented by an order-4 tensor whose four dimensions correspond to the head entity, relation, tail entity, and timestamp, respectively. The main tensor decomposition methods are Canonical Polyadic (CP) decomposition [ 30 ] and Tucker decomposition [ 69 ].
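The following NumPy sketch illustrates how CP and Tucker decompositions score a quadruple when the TKG is viewed as an order-4 tensor. It is a simplified, real-valued illustration under assumed sizes: the factor matrices `E`, `R`, `T` and the core tensor `W` are random placeholders rather than trained embeddings, and the head and tail dimensions share the entity factor matrix.

```python
import numpy as np

rng = np.random.default_rng(0)
n_ent, n_rel, n_time, d = 4, 3, 5, 2   # hypothetical sizes

# CP: one factor matrix per tensor dimension; head and tail share the entity factors E.
E = rng.normal(size=(n_ent, d))    # entity factors
R = rng.normal(size=(n_rel, d))    # relation factors
T = rng.normal(size=(n_time, d))   # timestamp factors


def cp_score(h, r, t, tau):
    """CP score: sum over the d rank-one components of the four factor products."""
    return np.sum(E[h] * R[r] * E[t] * T[tau])


# Full order-4 tensor X[h, r, t, tau] reconstructed from the CP factors.
X_cp = np.einsum("ik,jk,lk,mk->ijlm", E, R, E, T)


def tucker_score(h, r, t, tau, core):
    """Tucker score: core tensor multiplied by one factor vector along each of its four modes."""
    return np.einsum("abcd,a,b,c,d->", core, E[h], R[r], E[t], T[tau])


# Random placeholder core tensor of shape (d, d, d, d).
W = rng.normal(size=(d, d, d, d))
print(cp_score(1, 2, 3, 4), tucker_score(1, 2, 3, 4, W))
```

With a superdiagonal core (ones at `W[k, k, k, k]`, zeros elsewhere) the Tucker score reduces to the CP score, which is one way to see CP as a special case of Tucker; a full core lets every pair of components interact at the cost of d^4 extra parameters.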

3.3 Graph Neural Networks-based Methods

Graph Neural Networks (GNNs) [ 60 ] are powerful at modeling graph structure. Through a GNN, an entity can enrich its representation with attribute features and global structural features. Typical graph neural networks include Graph Convolutional Networks (GCN) [ 38 ] and Graph Attention Networks (GAT) [ 72 ]. GCN obtains node representations by aggregating neighbor embeddings, and GAT uses a multi-head attention mechanism to obtain node representations by aggregating weighted neighbor embeddings. A knowledge graph is a graph with multiple relation types, so relation-aware graph neural networks have been developed to learn entity representations in knowledge graphs. Relational Graph Convolutional Networks (R-GCN) [ 61 ] is a graph neural network model for relational data. It learns a relation-specific transformation for each relation and obtains entity representations by aggregating neighborhood information under the different relations. Temporal knowledge graphs contain additional temporal information, and some methods enhance entity representations with a time-aware mechanism.
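The R-GCN aggregation described above can be sketched as a single layer in NumPy. This assumes the common formulation with relation-specific weight matrices, a self-connection weight, and normalization by the number of neighbors under each relation; inverse relations and basis decomposition are omitted for brevity, and the function name is hypothetical.

```python
import numpy as np


def rgcn_layer(H, edges, W_rel, W_self):
    """One R-GCN layer with ReLU activation.

    H: (n, d_in) entity features.
    edges: list of (src, rel, dst) triples; messages flow from src to dst.
    W_rel: (n_rel, d_in, d_out) relation-specific weights.
    W_self: (d_in, d_out) self-connection weight.
    """
    out = H @ W_self                                   # self-connection term
    counts = {}                                        # c_{i,r}: neighbors of node i under relation r
    for _, r, o in edges:
        counts[(o, r)] = counts.get((o, r), 0) + 1
    for s, r, o in edges:
        out[o] += (H[s] @ W_rel[r]) / counts[(o, r)]   # normalized relation-specific message
    return np.maximum(out, 0.0)
```

Because each relation has its own weight matrix, the same neighbor contributes differently depending on the relation connecting it; time-aware variants such as those below additionally condition these messages on timestamps.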

TEA-GNN [ 81 ] learns entity representations through a time-aware graph attention network, which incorporates relational and temporal information into the GNN structure. Specifically, it assigns different weights to different entities with orthogonal transformation matrices computed from the neighborhood’s relational embeddings and temporal embeddings and obtains the entity representations by aggregating the neighborhood.

TREA [ 82 ] learns more expressive entity representations through a temporal relational graph attention mechanism. It first maps entities, relations, and timestamps into an embedding space, then integrates entities' relational and temporal features from their neighborhoods through a temporal relational graph attention mechanism, and finally trains the model with a margin-based log-loss to obtain the optimized representations.

DEGAT [ 74 ] proposes a dynamic embedding graph attention network. It first uses the GAT to learn the static representations of entities by aggregating the features of neighbor nodes and relations, then adopts a diachronic embedding function to learn the dynamic representations of entities, and finally concatenates the two representations and uses the ConvKB as the decoder to obtain the score.
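DEGAT's diachronic embedding function is not specified in this paragraph. The sketch below assumes the diachronic entity embedding form used in DE-SimplE, in which a fraction `gamma` of the embedding dimensions vary with time through a sine activation and the remaining dimensions stay static; the parameter names `a`, `w`, `b`, and `gamma` are illustrative.

```python
import numpy as np


def diachronic_embedding(a, w, b, t, gamma=0.5):
    """Time-dependent entity embedding (assumed DE-SimplE-style form).

    The first gamma * d dimensions are temporal: a[n] * sin(w[n] * t + b[n]);
    the remaining dimensions are static and equal a[n].
    """
    a = np.asarray(a, dtype=float)
    n_temporal = int(gamma * a.shape[0])
    z = a.copy()
    z[:n_temporal] = a[:n_temporal] * np.sin(w[:n_temporal] * t + b[:n_temporal])
    return z


dynamic_repr = diachronic_embedding(np.array([1.0, 2.0, 3.0, 4.0]), np.ones(4), np.zeros(4), t=1.0)
print(dynamic_repr)
```

In DEGAT, a dynamic vector of this kind is concatenated with the static GAT representation before ConvKB decoding; the split between temporal and static dimensions lets some entity features change over time while others stay fixed.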

$T^{2}$TKG [ 84 ], a latent relations learning method for temporal knowledge graph reasoning, addresses the inability of existing methods to explicitly capture the intra-time and inter-time latent relations needed to accurately predict future facts in TKGs. It first employs a Structural Encoder (SE) to capture the representations of entities at each timestamp, encoding their structural information. Then, it introduces a Latent Relations Learning (LRL) module to mine and exploit latent relations both within the same timestamp (intra-time) and across different timestamps (inter-time). Finally, it fuses the temporal representations obtained from SE and LRL to improve entity prediction.

3.4 Capsule Network-based Methods

CapsNet was first proposed for computer vision tasks to address two limitations of CNNs: they need large amounts of training data, and they cannot recognize spatial transformations of targets. A capsule network is composed of multiple capsules, each of which is a group of neurons. The capsules in the lowest layer are called primary capsules; they are usually implemented by convolutional layers and detect the presence and pose of a particular pattern (such as eyes, a nose, or a mouth). The capsules in higher layers are called routing capsules and detect more complex patterns (such as faces). The output of a capsule is a vector whose length represents the probability that the pattern is present and whose orientation represents the pose of the pattern.
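The property that a capsule's output length can be read as a probability is usually enforced by the squash non-linearity of the original capsule network. A minimal NumPy sketch, assuming that standard formulation (the small `eps` term guarding against division by zero is an implementation choice):

```python
import numpy as np


def squash(s, eps=1e-9):
    """Keep the direction of s and map its length ||s|| to ||s||^2 / (1 + ||s||^2), in [0, 1)."""
    sq_norm = np.sum(s ** 2, axis=-1, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)


print(squash(np.array([3.0, 4.0])))
```

For example, the input [3, 4] (length 5) is mapped to a vector pointing in the same direction with length 25/26 ≈ 0.96, so long vectors approach probability 1 and short vectors shrink toward 0.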

CapsE [ 54 ] explores the application of capsule networks to knowledge graphs. It represents a triple as a three-column matrix whose columns are the embeddings of the head entity, relation, and tail entity, respectively. The matrix is fed into the capsule network, which first extracts different features of the triple with a CNN layer, then captures various patterns with the primary capsule layer, and finally routes these patterns to the next capsule layer to obtain a continuous output vector whose length indicates whether the triple is valid.

TempCaps [ 18 ] incorporates temporal information and proposes a capsule network for temporal knowledge graph completion. The model first selects the neighbors of the head entity in a time window and obtains the embeddings of these neighbors with the capsules, then adopts a dynamic routing process to connect the lower capsules and higher capsules and gets the head entity embedding, and finally uses a multi-layer perceptron (MLP) [ 20 ] as the decoder to produce the scores of all candidate entities.
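The dynamic routing step that connects lower and higher capsules can be sketched with routing-by-agreement, assuming the standard iterative scheme of the original capsule network; TempCaps' exact routing details may differ. Here `u_hat[i, j]` is the prediction of lower capsule i (for example, one time-window neighbor of the head entity) for higher capsule j; shapes and names are hypothetical.

```python
import numpy as np


def squash(s, eps=1e-9):
    """Capsule non-linearity: keep the direction of s, map its length into [0, 1)."""
    sq_norm = np.sum(s ** 2, axis=-1, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)


def dynamic_routing(u_hat, n_iters=3):
    """Routing-by-agreement between two capsule layers.

    u_hat: (n_in, n_out, d) predictions of each lower capsule for each higher capsule.
    Returns the (n_out, d) output vectors of the higher capsules.
    """
    if n_iters < 1:
        raise ValueError("n_iters must be at least 1")
    n_in, n_out, _ = u_hat.shape
    b = np.zeros((n_in, n_out))                     # routing logits
    for _ in range(n_iters):
        c = np.exp(b - b.max(axis=1, keepdims=True))
        c = c / c.sum(axis=1, keepdims=True)        # coupling coefficients: softmax over higher capsules
        s = np.einsum("ij,ijd->jd", c, u_hat)       # weighted sum of predictions
        v = squash(s)                               # higher-capsule outputs
        b = b + np.einsum("ijd,jd->ij", u_hat, v)   # raise logits where predictions agree with outputs
    return v
```

Each iteration shifts a lower capsule's coupling toward the higher capsules whose outputs agree with its predictions, so consistent neighbors end up dominating the aggregated representation.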

… $\mathbb{H}^{d}$, $\boldsymbol{q}=\boldsymbol{q}_{0}+\boldsymbol{q}_{1}\xi$, $\boldsymbol{q}_{0}=\boldsymbol{a}_{0}+\boldsymbol{b}_{0}i+\boldsymbol{c}_{0}j+\boldsymbol{d}_{0}k$, $\boldsymbol{q}_{1}=\boldsymbol{a}_{1}+\boldsymbol{b}_{1}i+\boldsymbol{c}_{1}j+\boldsymbol{d}_{1}k$, $\boldsymbol{a}_{0},\boldsymbol{b}_{0},\boldsymbol{c}_{0},\boldsymbol{d}_{0},\boldsymbol{a}_{1},\boldsymbol{b}_{1},\boldsymbol{c}_{1},\boldsymbol{d}_{1}\in\mathbb{R}^{d}$). Dual quaternions can model rotation and translation simultaneously. The model first transforms the head entity through the relation and timestamp in the dual quaternion space so that the result is close to the tail entity representation. The scoring function is $\|\boldsymbol{h}\odot\boldsymbol{r}\odot\boldsymbol{\tau}-\boldsymbol{t}\|$. The learned representations are then fed into the capsule network to obtain the final representations.
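The paragraph does not spell out how the product $\odot$ in $\|\boldsymbol{h}\odot\boldsymbol{r}\odot\boldsymbol{\tau}-\boldsymbol{t}\|$ is computed. The NumPy sketch below assumes it is the dual quaternion product, built from the element-wise Hamilton product with $\xi^{2}=0$, and stores each quaternion embedding as an array of shape (4, d) holding its $a, b, c, d$ components; all names are illustrative.

```python
import numpy as np


def hamilton(p, q):
    """Element-wise Hamilton product of quaternion arrays of shape (4, d)."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return np.stack([
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    ])


def dq_mul(p, q):
    """Dual quaternion product: (p0 + p1 xi)(q0 + q1 xi) = p0 q0 + (p0 q1 + p1 q0) xi, since xi^2 = 0."""
    p0, p1 = p
    q0, q1 = q
    return hamilton(p0, q0), hamilton(p0, q1) + hamilton(p1, q0)


def dq_score(h, r, tau, t):
    """Distance ||h (x) r (x) tau - t|| over all components of the dual quaternions."""
    x0, x1 = dq_mul(dq_mul(h, r), tau)
    return np.sqrt(np.sum((x0 - t[0]) ** 2) + np.sum((x1 - t[1]) ** 2))
```

Multiplying by the identity dual quaternion (1 in the real part of $\boldsymbol{q}_{0}$, zeros elsewhere) leaves any embedding unchanged, which is a quick sanity check for the product; the real part carries the rotation and the dual part carries the translation.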

3.5 Autoregression-based Methods

… $G_{T+1}$ in the future.

RE-NET [ 34 ] proposes a recurrent event network that models the TKG to predict future facts. It assumes that the facts in $G_{T}$ at timestamp $T$ depend on the facts in the past $m$ subgraphs $G_{T-1:T-m}$ before $T$. It first uses R-GCN to learn the global structural representations and local neighborhood representations of the head entity at each timestamp. Then, it uses gated recurrent units (GRU) [ 11 ] to update these representations and passes them to an MLP decoder to infer the facts at timestamp $T$. This method models only the representations of the specific entity and relation in the query, ignoring the structural dependencies among all triples in each subgraph, so it may lose important information from entities that do not appear in the query.

Glean [ 14 ] argues that most existing representation learning methods use only the structural information of TKGs, ignoring unstructured information such as the semantics of words. It proposes a temporal graph neural network with heterogeneous data fusion. Specifically, it first constructs temporal event graphs from the historical facts at each timestamp and temporal word graphs from the event summaries at each timestamp. Then, it uses CompGCN [ 70 ] to learn the structural representations of entities and relations in the temporal event graphs and a GCN to learn their textual semantic representations in the temporal word graphs. Finally, it fuses the two representations and uses a recurrent encoder to model temporal features for the final prediction.

RE-GCN [ 46 ] splits the TKG into a sequence of KGs according to timestamps and recurrently encodes the facts in the past $m$ steps to predict entities and relations in the future. It proposes a recurrent evolution network based on graph convolutional networks that learns evolutional representations by incorporating the structural dependencies among concurrent facts, the sequential patterns across temporally adjacent facts, and static properties. It uses a relation-aware GCN to capture the structural dependencies and a GRU to capture the sequential patterns. It then uses the static properties learned by R-GCN as a constraint on the evolutional representations of entities and relations, and adopts ConvTransE [ 62 ] as the decoder to predict the probabilities of entities and relations at the next timestamp.
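The recurrent evolution idea shared by RE-NET, RE-GCN, and their successors can be sketched as follows: at each snapshot, entities aggregate relation-aware messages from their neighbors, and a GRU combines the aggregated result with the previous entity states. This is a simplified, untrained illustration under assumed shapes (a mean aggregator of $\boldsymbol{W}^{\top}(\boldsymbol{h}_{s}+\boldsymbol{r})$ messages and a plain GRU cell), not the exact architecture of any cited model; all names are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gru_cell(x, h, P):
    """Standard GRU update of entity states h (n, d) given inputs x (n, d)."""
    z = sigmoid(x @ P["Wz"] + h @ P["Uz"])               # update gate
    r = sigmoid(x @ P["Wr"] + h @ P["Ur"])               # reset gate
    h_tilde = np.tanh(x @ P["Wh"] + (r * h) @ P["Uh"])   # candidate state
    return (1 - z) * h + z * h_tilde


def aggregate(H, R, triples, W):
    """Relation-aware mean aggregation of messages (h_s + r) W into each object entity."""
    out = np.zeros_like(H)
    deg = np.zeros(H.shape[0])
    for s, r, o in triples:
        out[o] += (H[s] + R[r]) @ W
        deg[o] += 1
    deg[deg == 0] = 1.0                                  # entities without messages receive zeros
    return np.tanh(out / deg[:, None])


def evolve(H0, R, snapshots, W, P):
    """Recurrently encode snapshots G_{T-m}, ..., G_{T-1} into entity states."""
    H = H0
    for triples in snapshots:
        H = gru_cell(aggregate(H, R, triples, W), H, P)
    return H


# Toy usage with random, untrained parameters (hypothetical sizes).
rng = np.random.default_rng(0)
n_ent, n_rel, d = 5, 2, 4
H0 = np.tanh(rng.normal(size=(n_ent, d)))
R = rng.normal(size=(n_rel, d))
W = 0.1 * rng.normal(size=(d, d))
P = {k: 0.1 * rng.normal(size=(d, d)) for k in ["Wz", "Uz", "Wr", "Ur", "Wh", "Uh"]}
snapshots = [[(0, 0, 1), (2, 1, 1)], [(1, 0, 3)], [(3, 1, 4), (0, 1, 4)]]
H_T = evolve(H0, R, snapshots, W, P)
```

The final states `H_T` would then be passed to a decoder such as ConvTransE to score candidate entities at timestamp $T$; the length of `snapshots` plays the role of the history length $m$.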

TiRGN [ 43 ] argues that the above methods can only capture the local historical dependence of the adjacent timestamps and cannot fully learn the historical characteristics of the facts. It proposes a time-guided recurrent graph neural network with local-global historical patterns which can model the historical dependency of events at adjacent snapshots with a local recurrent encoder, the same as RE-GCN, and collect repeated historical facts by a global history encoder. The final representations are fed into a time-guided decoder named Time-ConvTransE/Time-ConvTransR to predict the entities and relations in the future.

CEN [ 44 ] argues that modeling historical facts with a fixed number of time steps cannot discover complex evolutional patterns that vary in length. It proposes a complex evolutional network that uses the evolution unit of RE-GCN as a sequence encoder to learn entity representations in each subgraph, and a CNN decoder to obtain feature maps of historical snapshot sequences of different lengths. A curriculum learning strategy learns the complex evolutional patterns from short to long histories and automatically selects the optimal maximum history length to improve prediction.

3.6 Temporal Point Process-based Methods

Autoregression-based methods sample the TKG into discrete snapshots at a fixed time interval, so they cannot effectively model facts with irregular time intervals. A temporal point process (TPP) [ 12 ] is a stochastic process composed of a series of events in a continuous time domain. TPP-based representation learning methods regard the TKG as a list of events that change continuously over time and formalize it as $(G, O)$, where $G$ is the initial TKG at time $\tau_{0}$ and $O$ is a series of observed events $(h, r, t, \tau)$. At any time $\tau > \tau_{0}$, the TKG can be updated with the events that occurred before $\tau$. A TPP can be characterized by its conditional intensity function $\lambda(\tau)$. Given the historical events before a timestamp, if we can find a conditional intensity function that characterizes them, we can predict whether events will occur in the future with the conditional density, and when they will occur with its expectation.
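For reference, the standard relationships behind this idea can be written as follows, where $\tau_{n}$ (a symbol introduced here for illustration) denotes the time of the most recent observed event and $\lambda(\tau)$ is the conditional intensity given the history. The first line gives the conditional density of the next event time; the second gives its expectation, which serves as the predicted time of the next event.

$$f(\tau) = \lambda(\tau)\exp\left(-\int_{\tau_{n}}^{\tau}\lambda(s)\,ds\right), \qquad \tau > \tau_{n}$$

$$\hat{\tau}_{n+1} = \int_{\tau_{n}}^{\infty}\tau\, f(\tau)\,d\tau$$

The exponential factor is the probability that no event occurs in $(\tau_{n}, \tau)$, so a high intensity both raises the chance of an event and pulls the expected event time closer to $\tau_{n}$.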

Know-Evolve [ 67 ] combines TPP with a deep neural network framework to model the occurrence of facts as a multi-dimensional TPP. It characterizes the TPP with a Rayleigh process and uses neural networks to model the intensity function. An RNN learns the dynamic representations of entities, and a bilinear relationship score captures multiple relational interactions between entities to modulate the intensity function. Thus, it can predict whether and when an event will occur.

GHNN [ 27 ] argues that a neural-network-based Hawkes process [ 28 ] can effectively capture the influence of past facts on future facts, and proposes the graph Hawkes neural network (GHNN). First, it addresses Know-Evolve's inability to handle co-occurring facts by using a neighborhood aggregation module to process multiple facts that involve an entity at the same time. Then, it uses the continuous-time LSTM (cLSTM) model [ 51 ] to simulate the Hawkes process and capture the evolving dynamics of facts, which enables both link prediction and time prediction.
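To illustrate how past facts excite future ones, the sketch below evaluates the classical Hawkes intensity with an exponential kernel. GHNN replaces this hand-specified form with a cLSTM, so the parameters `mu`, `alpha`, and `beta` here are hypothetical constants chosen only for illustration.

```python
import math


def hawkes_intensity(tau, history, mu=0.1, alpha=0.5, beta=1.0):
    """Univariate Hawkes intensity with an exponential kernel.

    mu is the base rate; each past event at time tau_i < tau adds
    alpha * exp(-beta * (tau - tau_i)), an excitation that decays over time.
    """
    return mu + sum(alpha * math.exp(-beta * (tau - ti)) for ti in history if ti < tau)


print(hawkes_intensity(2.0, [1.0]), hawkes_intensity(5.0, [1.0]))
```

One day after an event the intensity is 0.1 + 0.5e^{-1} ≈ 0.28, and it decays back toward the base rate 0.1 as time passes, which captures the idea that recent facts make related facts more likely.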

EvoKG [ 57 ] argues that the above TPP-based methods fail to model the evolving network structure, while the autoregression-based methods fail to model event time. It proposes a model that jointly models the evolving network structure and event time. First, it uses an extended R-GCN and an RNN to learn time-evolving structural representations of entities and relations, and uses an MLP with softmax to model the conditional probability of an event triple. Then, it uses the same framework to learn time-evolving temporal representations and adopts a TPP based on conditional density estimation with a mixture of log-normal distributions to model event time. Finally, it trains the two tasks jointly to predict future events and their times.
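The event-time component can be sketched as a mixture of log-normal distributions over inter-event times. In EvoKG the mixture weights, means, and standard deviations are produced by neural networks from the learned representations; here they are fixed, hypothetical values, and the function names are illustrative.

```python
import math


def lognormal_mixture_pdf(x, weights, means, stds):
    """Density at inter-event time x > 0 of a mixture of log-normal distributions."""
    total = 0.0
    for w, m, s in zip(weights, means, stds):
        z = (math.log(x) - m) / s
        total += w * math.exp(-0.5 * z * z) / (x * s * math.sqrt(2 * math.pi))
    return total


def lognormal_mixture_mean(weights, means, stds):
    """Expected inter-event time: sum_k w_k * exp(m_k + s_k^2 / 2)."""
    return sum(w * math.exp(m + 0.5 * s * s) for w, m, s in zip(weights, means, stds))


weights, means, stds = [0.3, 0.7], [0.0, 1.0], [0.5, 1.0]
print(lognormal_mixture_pdf(1.0, weights, means, stds), lognormal_mixture_mean(weights, means, stds))
```

The mean gives a point prediction of the time until the next event; for a single component with m = 0 and s = 1 it equals e^{0.5} ≈ 1.65. Because the density has a closed form, the log-likelihood of observed event times can be maximized directly, without integrating an intensity function.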

3.7 Interpretability-based Methods

The aforementioned methods lack interpretability and transparency in their results. We therefore treat interpretability-based methods as a separate category to underscore the crucial role of interpretability in developing reliable and transparent models. These methods aim to provide explanations for the predictions made by the models. Two popular types are subgraph reasoning-based and reinforcement learning-based approaches.

Subgraph Reasoning xERTE [ 23 ] is a subgraph reasoning-based method that proposes an explainable reasoning framework for predicting facts in the future. It starts from the head entity in the query and utilizes a temporal relational graph attention mechanism to learn the entity representation and relation representation. Then it samples the edges and temporal neighbors iteratively to construct the subgraph after several rounds of expansion and pruning. Finally, it predicts the tail entity in the subgraph.

Reinforcement Learning Reinforcement learning (RL) [ 35 ] is usually modeled as a Markov Decision Process (MDP) [ 4 ], which consists of an environment and an agent. Starting from an initial state, the agent performs an action, receives a reward from the environment, and transitions to a new state. The goal of reinforcement learning is to learn a policy that maximizes the cumulative reward.

CluSTeR [ 45 ] proposes a two-stage reasoning strategy to predict future facts. First, clues related to a given query are searched for and deduced from the history using reinforcement learning. Then, the clues at different timestamps are regarded as a subgraph related to the query, and R-GCN and GRU are used to learn the evolving representations of entities in the subgraph. Finally, the two stages are trained jointly, and the prediction is inferred.

TITer [ 65 ] directly uses a temporal path-based reinforcement learning model to learn TKG representations and reason about future facts. It adds temporal edges that connect the historical snapshots of the TKG. The agent starts from the head entity of the query, moves to new nodes according to the policy network, and searches for the answer node. The method designs a time-shaped reward based on the Dirichlet distribution to guide learning.
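The action space of a TITer-style agent can be sketched as below. The sketch assumes that, from the current node, the agent may follow edges whose timestamps are no later than the current node's time and strictly earlier than the query time, plus a self-loop; a uniform random choice stands in for the trained policy network, and the time-shaped reward is omitted. Timestamps are written as days of June 2014 for brevity, and all names are hypothetical.

```python
import random
from collections import defaultdict


def build_index(quads):
    """Map each entity to its outgoing temporal edges as (relation, neighbor, timestamp)."""
    index = defaultdict(list)
    for h, r, t, tau in quads:
        index[h].append((r, t, tau))
    return index


def candidate_actions(index, entity, current_time, query_time):
    """Edges usable from `entity`: not later than the current time and before the query time,
    plus a self-loop that lets the agent stay at an entity it considers the answer."""
    moves = [(r, e, tau) for r, e, tau in index[entity]
             if tau <= current_time and tau < query_time]
    moves.append(("self-loop", entity, current_time))
    return moves


def rollout(index, head, query_time, steps=3, seed=0):
    """Walk `steps` hops with a uniform random policy (stand-in for the learned policy network)."""
    rng = random.Random(seed)
    entity, time, path = head, query_time, []
    for _ in range(steps):
        r, entity, time = rng.choice(candidate_actions(index, entity, time, query_time))
        path.append((r, entity, time))
    return entity, path
```

The entity where the walk ends is the predicted answer; in TITer, the policy network scores each candidate action instead of choosing uniformly, and the reward shapes which paths are reinforced.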

3.8 Language Model

In the domain of TKG, the rapid development of language models has prompted researchers to explore their application for predictive tasks. The current methodologies employing language models in the TKG domain predominantly encompass two distinct approaches: In-Context Learning and Supervised Fine-Tuning.

In-Context Learning ICLTKG [ 41 ] introduces a TKG forecasting approach that leverages large language models (LLMs) through in-context learning (ICL) [ 5 ] to capture and exploit irregular patterns in historical facts. Its algorithm is a three-stage pipeline. The first stage selects historical facts from the TKG that are relevant to the prediction query; these facts serve as context that lets the LLM capture temporal patterns and relationships between entities. The second stage transforms the contextual facts into a lexical prompt that represents the prediction task. The third stage decodes the output of the LLM into a probability distribution over the entities of the TKG. Throughout this pipeline, the algorithm controls the selection of background knowledge, the prompting strategy, and the decoding process. By exploiting the irregular patterns embedded in historical data, this approach achieves competitive performance across a diverse range of TKG benchmarks without extensive supervised training or specialized architectures.
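The three stages can be illustrated with a minimal Python sketch. The recency-based history selection, the `timestamp: [head, relation, label. tail]` prompt format, and all function names are assumptions for illustration rather than ICLTKG's exact implementation. The LLM call itself is replaced by a hypothetical dictionary of scores that a model might assign to each candidate label token.

```python
def select_history(facts, head, relation, query_time, k=5):
    """Stage 1 (assumed heuristic): keep the k most recent facts that share
    the query's head entity and relation and occur before query_time."""
    relevant = [f for f in facts
                if f[0] == head and f[1] == relation and f[3] < query_time]
    relevant.sort(key=lambda f: f[3])  # chronological order
    return relevant[-k:]


def build_prompt(history, head, relation, query_time):
    """Stage 2: render each fact as a line and give every candidate tail
    an integer label that the LLM can emit as a single token."""
    labels = {}
    lines = []
    for h, r, t, ts in history:
        label = labels.setdefault(t, len(labels))
        lines.append(f"{ts}: [{h}, {r}, {label}. {t}]")
    lines.append(f"{query_time}: [{head}, {relation},")  # LLM continues here
    return "\n".join(lines), labels


def decode(label_scores, labels):
    """Stage 3: map scores for label tokens back to entities and normalize
    them into a probability distribution (unknown labels are ignored)."""
    label_to_entity = {label: ent for ent, label in labels.items()}
    known = {lab: s for lab, s in label_scores.items() if lab in label_to_entity}
    total = sum(known.values())
    return {label_to_entity[lab]: s / total for lab, s in known.items()}


facts = [("A", "visit", "B", 1), ("A", "visit", "C", 2),
         ("A", "visit", "B", 3), ("A", "meet", "D", 2)]
history = select_history(facts, "A", "visit", query_time=4)
prompt, labels = build_prompt(history, "A", "visit", 4)
print(prompt)
# Hypothetical scores an LLM might assign to the label tokens "0" and "1".
print(decode({0: 3.0, 1: 1.0}, labels))  # {'B': 0.75, 'C': 0.25}
```

Labeling candidates with integers keeps decoding simple: the model only has to emit a short label, and the distribution over labels maps one-to-one onto entities.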

zrLLM [ 17 ] introduces an approach that leverages large language models (LLMs) to enhance zero-shot relational learning on temporal knowledge graphs (TKGs). It first uses an LLM to generate enriched relation descriptions from the textual descriptions of KG relations, and then employs a second LLM to generate relation representations that capture semantic information. In addition, a relation history learner captures temporal patterns in relations, further improving reasoning over TKGs. Experiments show that zrLLM substantially improves the ability of TKGF models to recognize and forecast facts involving previously unseen (zero-shot) relations. Importantly, zrLLM achieves this without further fine-tuning of the LLMs, demonstrating the potential of aligning the natural language space of LLMs with the embedding space of TKGF models.

Supervised Fine-Tuning ECOLA [ 25 ] proposes a joint learning model that leverages textual knowledge to enhance temporal knowledge graph representations. Because existing TKGs often lack textual descriptions of facts, the authors construct three new datasets that contain such descriptions. During training, they jointly optimize the knowledge-text prediction (KTP) objective and the temporal knowledge embedding (tKE) objective. KTP employs pre-trained Transformer-based [ 71 ] language models, while tKE can use an existing TKGRL model such as DyERNIE [ 26 ]. Augmenting the temporal knowledge graph representation with textual descriptions yields significant performance gains.

Frameworks such as GenTKG [ 47 ] and Chain of History [ 50 ] adopt retrieval-augmented generation for prediction. They use specific strategies to retrieve historical facts with high temporal relevance and logical coherence, and then apply supervised fine-tuned language models to predict the future from the retrieved facts. The input to the language model comprises the retrieved historical facts and the prediction query, and the model outputs the forecast. The authors construct a dedicated instruction-tuning dataset to fine-tune the language model, which yields strong performance.

3.9 Few-shot Learning Methods

Few-shot learning (FSL) [ 76 ] is a class of machine learning problems concerned with learning new concepts or tasks from only a few examples. FSL has applications in many domains, including computer vision [ 36 ], natural language processing [ 77 ], and robotics [ 49 ], where data may be scarce or expensive to acquire. In TKGs, some entities and relations exist in only a limited number of facts, and new entities and relations emerge over time. Recent TKGRL models can perform FSL [ 8 ], which is essential for representing such sparse data. Because methods for few-shot entities and few-shot relations differ in how they handle data and learn, we introduce them separately.

Few Entities MetaTKG [ 78 ] observes that new entities emerge over time in TKGs and appear in only a few facts, so learning their representations from limited historical information leads to poor performance. The authors therefore propose a temporal meta-learning framework. Specifically, they first divide the TKG into multiple temporal meta-tasks, then employ a temporal meta-learner to learn evolving meta-knowledge across these tasks; finally, the learned meta-knowledge guides the backbone (which can be an existing TKGRL model such as RE-GCN [ 46 ]) to adapt to new data.

MetaTKGR [ 75 ] observes that emerging entities, which appear in only a small number of facts, provide too little information for existing models to learn their representations. To address this issue, the authors propose a meta temporal knowledge graph reasoning framework. The model uses the temporal supervision signal of future facts as feedback to dynamically adjust its neighborhood sampling and aggregation strategy and to encode the representations of new entities. The parameters are learned via bi-level optimization: the inner optimization initializes entity-specific parameters from the global parameters and fine-tunes them on the support set, while the outer optimization operates on the query set with a temporal adaptation regularizer that stabilizes meta temporal reasoning over time. The learned parameters can be easily adapted to new entities.
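The inner/outer structure of this bi-level optimization can be seen in a generic first-order MAML-style toy. This is an assumption-laden sketch, not MetaTKGR itself: each "entity" is a one-parameter linear model $y \approx wx$, the inner loop fine-tunes a copy of the global parameter on the support set, and the outer loop updates the global parameter from query-set gradients evaluated at the adapted parameters. The neighborhood sampler, the encoder, and the temporal adaptation regularizer are omitted.

```python
def mse(w, data):
    """Mean squared error of the toy model y ≈ w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def mse_grad(w, data):
    """Gradient of mse with respect to the single parameter w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def adapt(w_global, support, inner_lr=0.1, steps=1):
    """Inner optimization: initialize from the global parameter and
    fine-tune on one task's support set."""
    w = w_global
    for _ in range(steps):
        w -= inner_lr * mse_grad(w, support)
    return w


def meta_train(tasks, w=0.0, inner_lr=0.1, outer_lr=0.05, epochs=200):
    """Outer optimization (first-order approximation): move the global
    parameter along the query-set gradient taken at each adapted parameter."""
    for _ in range(epochs):
        meta_grad = 0.0
        for support, query in tasks:
            w_task = adapt(w, support, inner_lr)
            meta_grad += mse_grad(w_task, query)
        w -= outer_lr * meta_grad / len(tasks)
    return w


# Three hypothetical emerging entities whose facts follow slopes 1, 2, and 3.
tasks = [([(1.0, s), (2.0, 2 * s)], [(1.5, 1.5 * s)]) for s in (1.0, 2.0, 3.0)]
w_meta = meta_train(tasks)
support, query = tasks[2]
w_new = adapt(w_meta, support)
print(round(w_meta, 4), mse(w_meta, query), mse(w_new, query))
```

The meta-learned parameter settles where one support-set step helps every task, and a single inner step on a new entity's support set then lowers its query loss.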

Because existing datasets already contain new entities associated with only a few facts, they can be divided directly into tasks with support and query sets, without creating a new dataset.
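A minimal sketch of such a split follows, under several assumptions: facts are `(head, relation, tail, timestamp)` tuples, an entity counts as emerging if it first appears after a cutoff timestamp, and its first `k_shot` facts in time order form the support set while the remaining facts form the query set. The cutoff rule and the hypothetical `build_tasks` helper are illustrative, not the split used by any particular paper.

```python
from collections import defaultdict


def build_tasks(quads, cutoff, k_shot=1):
    """Turn an existing TKG into few-shot tasks for emerging entities.

    Returns {entity: (support_facts, query_facts)}."""
    facts_of = defaultdict(list)
    for quad in quads:
        head, _, tail, _ = quad
        facts_of[head].append(quad)
        if tail != head:
            facts_of[tail].append(quad)

    tasks = {}
    for entity, facts in facts_of.items():
        facts.sort(key=lambda q: q[3])  # chronological order
        if facts[0][3] <= cutoff:
            continue  # entity already exists in the training period
        if len(facts) <= k_shot:
            continue  # nothing left for the query set
        tasks[entity] = (facts[:k_shot], facts[k_shot:])
    return tasks


quads = [("A", "r", "B", 0), ("C", "r", "A", 3),
         ("C", "s", "B", 4), ("D", "r", "C", 5)]
print(build_tasks(quads, cutoff=1))
```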

Few Relations TR-Match [ 22 ] observes that most relations in TKGs have only a few quadruples and that new relations are added over time. Existing models handle this few-shot scenario poorly and may not fully represent the evolving temporal and relational features of entities in TKGs. To address these issues, the authors propose a temporal-relational matching network. Specifically, a multi-scale time-relation attention encoder adaptively captures local and global information based on time and relation, addressing the dynamic properties of TKGs. A new matching processor addresses the few-shot problem by mapping the query to a few support quadruples in a relation-agnostic manner. To evaluate few-shot relation scenarios, three new datasets, namely ICEWS14-few, ICEWS05-15-few, and ICEWS18-few, are constructed from existing TKG datasets, and experiments on them show that TR-Match performs well in few-shot relation scenarios.

MTKGE [ 10 ] recognizes that unseen entities and relations emerge in TKGs over time. To address this challenge, the authors propose a meta-learning-based temporal knowledge graph extrapolation model. It includes a Relative Position Pattern Graph (RPPG) to construct several position patterns, a Temporal Sequence Pattern Graph (TSPG) to learn different temporal sequence patterns, and a Graph Convolutional Network (GCN) module for extrapolation. Meta-learning enables the model to adapt to new data and to transfer useful information from the existing TKG, so that MTKGE can extrapolate to entities and relations that were not seen during training.

3.10 Other Methods

Other methods leverage the unique characteristics of TKGs to learn entity, relation, and timestamp representations. For instance, one approach exploits the repetitive patterns of TKGs and learns more expressive representations through copy-generation. Other methods employ various geometric and algorithmic techniques to capture the structural properties of TKGs and learn effective representations.

Copy Generation CygNet [ 88 ] finds that many facts show repeated patterns along the timeline, indicating that latent knowledge can be learned from historical facts. It therefore proposes a time-aware copy-generation model that predicts future facts based on known facts. It constructs a historical vocabulary that records, as multi-hot vectors, the tail entities observed with each head entity and relation in every snapshot. In copy mode, an MLP generates an index vector that yields probabilities over the tail entities in the historical vocabulary. In generation mode, an MLP yields probabilities over the tail entities in the entire entity vocabulary. The tail entity is predicted by combining the two probability distributions.
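The copy and generation modes can be sketched in plain Python. Following the description above, the copy mode masks entities outside the historical vocabulary with a large negative value before the softmax, and the two distributions are mixed with a weight `alpha`. The mask value, the toy weights, the query feature vector, and all function names are assumptions for illustration rather than CygNet's released code; entities are integer ids.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def linear(W, b, x):
    """One dense layer: W @ x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def history_vocab(quads, head, rel, before, num_entities):
    """Multi-hot vector marking every tail seen with (head, rel) before `before`."""
    vocab = [0] * num_entities
    for h, r, t, ts in quads:
        if h == head and r == rel and ts < before:
            vocab[t] = 1
    return vocab


def copy_generation(query_feat, vocab, Wc, bc, Wg, bg, alpha=0.5, mask=-100.0):
    # Copy mode: index vector from a tanh layer; non-historical entities masked.
    index = [math.tanh(z) for z in linear(Wc, bc, query_feat)]
    p_copy = softmax([s if seen else s + mask for s, seen in zip(index, vocab)])
    # Generation mode: scores over the entire entity vocabulary.
    p_gen = softmax(linear(Wg, bg, query_feat))
    # Combine the two distributions with weight alpha.
    return [alpha * c + (1 - alpha) * g for c, g in zip(p_copy, p_gen)]


num_entities = 3
quads = [(0, 0, 1, 0), (0, 0, 2, 5), (1, 0, 0, 0)]
vocab = history_vocab(quads, head=0, rel=0, before=3, num_entities=num_entities)
zeros_W = [[0.0, 0.0] for _ in range(num_entities)]
zeros_b = [0.0] * num_entities
probs = copy_generation([1.0, 0.0], vocab, zeros_W, zeros_b, zeros_W, zeros_b)
print(vocab, max(range(num_entities), key=probs.__getitem__))
```

With untrained (all-zero) weights, the generation mode is uniform, so the copy mode alone pushes probability toward entity 1, the only tail seen with this head and relation before the query time.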

Neural Ordinary Differential Equation TANGO [ 24 ] contends that existing approaches for modeling TKGs cannot adequately capture the continuous evolution of TKGs over time because they rely on discrete state spaces. To address this limitation, TANGO proposes a model based on Neural Ordinary Differential Equations (NODEs). Specifically, it first employs a multi-relational graph convolutional network module to capture graph structure information at each point in time, and a graph transformation module to model changes in edge connectivity between entities and their neighbors. The outputs of these modules are combined to define the dynamics of entity and relation representations, and an ODE solver integrates these dynamics, enabling TANGO to learn continuous-time representations of TKGs. Compared with existing techniques that rely on discrete representation spaces, this ODE-based approach models the dynamic evolution of TKGs more effectively.
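The continuous-time idea can be illustrated with a fixed-step Euler integrator. The vector field below is a hypothetical stand-in: scalar entity states diffuse toward the mean of their neighbors, whereas TANGO derives the dynamics from its learned multi-relational GCN and graph transformation modules and may use more accurate solvers. The sketch only shows that representations come from integrating $d\mathbf{H}/dt = f(t, \mathbf{H})$ rather than from discrete snapshot-to-snapshot updates.

```python
import math


def euler_integrate(f, h0, t0, t1, steps):
    """Integrate dh/dt = f(t, h) from t0 to t1 with fixed-step explicit Euler."""
    h = list(h0)
    dt = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        dh = f(t, h)
        h = [hi + dt * di for hi, di in zip(h, dh)]
        t += dt
    return h


def neighbor_diffusion(neighbors):
    """Hypothetical dynamics: each state moves toward the mean of its neighbors."""
    def f(t, h):
        return [
            sum(h[j] for j in nbrs) / len(nbrs) - h[i] if nbrs else 0.0
            for i, nbrs in enumerate(neighbors)
        ]
    return f


# Two connected entities; an isolated third entity keeps its state.
neighbors = [[1], [0], []]
h_end = euler_integrate(neighbor_diffusion(neighbors), [1.0, 0.0, 0.7], 0.0, 5.0, 500)
# Exact solution for the first state: 0.5 + 0.5 * exp(-2t).
print([round(x, 3) for x in h_end], 0.5 + 0.5 * math.exp(-10))
```

Because the states are defined at every real-valued time, the same function can be queried at irregular timestamps by changing `t1`, which is the property that motivates ODE-based TKG models.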

Geometric Model Transformation-based models represent TKGs in Euclidean space, whereas DyERNIE [ 26 ] maps TKGs to non-Euclidean space and employs a product of Riemannian manifolds to learn evolving entity representations. This allows it to model multiple coexisting non-Euclidean structures, such as hierarchical and cyclic structures, and thus to capture the complex structural properties of TKGs more accurately. By exploiting these additional structural features, DyERNIE captures the relationships between entities in TKGs more effectively and improves performance on TKGRL tasks.
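To give a concrete sense of the non-Euclidean geometry involved, here is a sketch of two standard ingredients: the geodesic distance in the Poincaré ball (a model of hyperbolic space with curvature −1) and the distance on a product manifold, which combines per-component distances as an $\ell_2$ norm. Splitting an embedding into one hyperbolic and one Euclidean component is an illustrative assumption; DyERNIE additionally learns curvatures and models how entity embeddings evolve over time, which is omitted here.

```python
import math


def poincare_distance(x, y):
    """Geodesic distance between two points inside the unit Poincaré ball."""
    def sq_norm(v):
        return sum(a * a for a in v)
    diff = sq_norm([a - b for a, b in zip(x, y)])
    denom = (1.0 - sq_norm(x)) * (1.0 - sq_norm(y))
    return math.acosh(1.0 + 2.0 * diff / denom)


def product_distance(component_distances):
    """Distance on a product manifold: l2 norm of per-component distances."""
    return math.sqrt(sum(d * d for d in component_distances))


def entity_distance(e1, e2):
    """Hypothetical embedding = (hyperbolic part, Euclidean part)."""
    hyp1, euc1 = e1
    hyp2, euc2 = e2
    return product_distance([poincare_distance(hyp1, hyp2), math.dist(euc1, euc2)])


# The same Euclidean gap of 0.1 is much longer near the ball's boundary,
# which is what lets hyperbolic space embed tree-like hierarchies.
print(poincare_distance([0.0, 0.0], [0.1, 0.0]))
print(poincare_distance([0.85, 0.0], [0.95, 0.0]))
```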

$\boldsymbol{b_{h}}+\boldsymbol{\tau^{r}}$. Furthermore, BoxTE represents boxes using a time-induced relation head box $\boldsymbol{r^{h|\tau}}=\boldsymbol{r^{h}}-\boldsymbol{\tau^{r}}$ and a time-induced relation tail box $\boldsymbol{r^{t|\tau}}=\boldsymbol{r^{t}}-\boldsymbol{\tau^{r}}$. To determine whether a fact $r(h,t|\tau)$ holds, BoxTE checks whether the representations of $h$ and $t$ lie within their corresponding boxes, i.e., $\boldsymbol{h^{r(h,t|\tau)}}\in\boldsymbol{r^{h}}$ and $\boldsymbol{t^{r(h,t|\tau)}}\in\boldsymbol{r^{t}}$. Subtracting $\boldsymbol{\tau^{r}}$ from both sides yields the equivalent conditions $\boldsymbol{h^{r(h,t)}}\in\boldsymbol{r^{h|\tau}}$ and $\boldsymbol{t^{r(h,t)}}\in\boldsymbol{r^{t|\tau}}$. This formulation enables BoxTE to capture rigid inference patterns and cross-time inference patterns, making it a powerful tool for TKGRL.
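The membership test, and the equivalence obtained by shifting the boxes, can be checked numerically. This sketch assumes, as in BoxE [ 1 ], that each entity has a base point and a translational bump, so that $\boldsymbol{h^{r(h,t|\tau)}}=\boldsymbol{h}+\boldsymbol{b_{t}}+\boldsymbol{\tau^{r}}$ and $\boldsymbol{t^{r(h,t|\tau)}}=\boldsymbol{t}+\boldsymbol{b_{h}}+\boldsymbol{\tau^{r}}$. Boxes are given by lower and upper corners, and all vectors are toy values.

```python
def add(u, v):
    return [a + b for a, b in zip(u, v)]


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def in_box(point, box):
    """True if every coordinate lies between the box's lower and upper corner."""
    lower, upper = box
    return all(lo <= p <= hi for p, lo, hi in zip(point, lower, upper))


def shift_box(box, tau_r):
    """Time-induced box, e.g. r^{h|tau} = r^h - tau^r."""
    lower, upper = box
    return (sub(lower, tau_r), sub(upper, tau_r))


def holds(h, t, b_h, b_t, tau_r, head_box, tail_box):
    """r(h, t | tau) holds if both time-bumped points fall in their boxes."""
    h_point = add(add(h, b_t), tau_r)  # h^{r(h,t|tau)}
    t_point = add(add(t, b_h), tau_r)  # t^{r(h,t|tau)}
    return in_box(h_point, head_box) and in_box(t_point, tail_box)


def holds_shifted(h, t, b_h, b_t, tau_r, head_box, tail_box):
    """Equivalent test: time-free points against time-induced boxes."""
    return (in_box(add(h, b_t), shift_box(head_box, tau_r))
            and in_box(add(t, b_h), shift_box(tail_box, tau_r)))


h, t = [0.0, 0.0], [1.0, 1.0]
b_h, b_t = [0.0, -0.5], [0.5, 0.5]
head_box = ([0.5, 0.25], [1.0, 1.0])
tail_box = ([1.0, 0.0], [2.0, 1.0])
for tau_r in ([0.25, 0.0], [1.0, 0.0]):
    print(tau_r, holds(h, t, b_h, b_t, tau_r, head_box, tail_box),
          holds_shifted(h, t, b_h, b_t, tau_r, head_box, tail_box))
```

The same pair of entities satisfies the relation at one timestamp but not at another, purely because the time bump $\boldsymbol{\tau^{r}}$ moves the points (or, equivalently, the boxes).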

TGeomE [ 80 ] moves beyond complex and hypercomplex spaces for TKGRL and instead proposes a geometric algebra-based embedding approach. It uses multivector representations, performs fourth-order tensor factorization of TKGs, and introduces a new linear temporal regularization for time representation learning. In this way, TGeomE can naturally model temporal relations and improve the performance of TKGRL models.

3.11 Summary

In this section, we divided TKGRL methods into ten categories and introduced their core technologies in detail. Table 3 summarizes these methods, listing for each the representation space, the encoder that maps entities and relations into the vector space, and the decoder that predicts the answer.

4 Applications of Temporal Knowledge Graph

By introducing temporal information, a TKG can express real-world facts more accurately, improve the quality of knowledge graph representation learning, and answer temporal questions more reliably. This benefits applications such as reasoning, entity alignment, and question answering.

4.1 Temporal Knowledge Graph Reasoning

TKGRL methods are widely used in temporal knowledge graph reasoning (TKGR), which automatically infers new facts from the existing facts in the KG. TKGR usually has three subtasks: entity prediction, relation prediction, and time prediction. Entity prediction, the basic task of link prediction, can be expressed as two queries, $(?,r,t,\tau)$ and $(h,r,?,\tau)$. Relation prediction and time prediction can be expressed as $(h,?,t,\tau)$ and $(h,r,t,?)$, respectively.
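As a concrete illustration of entity prediction, the query $(h,r,?,\tau)$ can be answered by scoring every entity as a candidate tail and ranking the candidates. The TTransE-style score $-\lVert\boldsymbol{h}+\boldsymbol{r}+\boldsymbol{\tau}-\boldsymbol{t}\rVert$ and the toy two-dimensional embeddings are assumptions chosen for brevity; any TKGRL scoring function could be plugged in instead.

```python
import math


def translational_score(h, r, tau, t):
    """Assumed TTransE-style plausibility: higher means more plausible."""
    return -math.sqrt(sum((a + b + c - d) ** 2 for a, b, c, d in zip(h, r, tau, t)))


def rank_tails(entity_emb, head, r, tau):
    """Answer (head, r, ?, tau): return all entities sorted from best to worst."""
    scores = {e: translational_score(entity_emb[head], r, tau, vec)
              for e, vec in entity_emb.items()}
    return sorted(scores, key=scores.get, reverse=True)


entity_emb = {"A": [0.0, 0.0], "B": [1.0, 1.0], "C": [3.0, 0.0]}
relation = [1.0, 0.0]
timestamp = [0.0, 1.0]
ranking = rank_tails(entity_emb, "A", relation, timestamp)
gold = "B"
print(ranking, "rank of gold answer:", ranking.index(gold) + 1)
```

The rank of the gold answer in such a list is what ranking metrics such as MRR and Hits@k are computed from.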

TKGR can be divided into two categories according to when the predicted facts occur: interpolation and extrapolation. Suppose that a TKG is available from time $\tau_{0}$ to $\tau_{T}$. Interpolation aims to recover missing facts at a time $\tau$ with $\tau_{0}\leq\tau\leq\tau_{T}$; this task is also known as temporal knowledge graph completion (TKGC). Extrapolation aims to predict facts that will occur in the future ($\tau>\tau_{T}$) and is referred to as temporal knowledge graph forecasting.
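The two settings differ mainly in how evaluation data are split. A minimal sketch, assuming quadruples `(head, relation, tail, timestamp)` with integer timestamps: interpolation (TKGC) holds out random facts from anywhere in $[\tau_{0},\tau_{T}]$, while extrapolation trains on facts up to a cutoff and tests only on strictly later ones. The 20% ratio and the cutoff value are arbitrary illustrative choices.

```python
import random


def interpolation_split(quads, test_ratio=0.2, seed=0):
    """TKGC setting: test facts are sampled from the whole observed period."""
    shuffled = list(quads)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]


def extrapolation_split(quads, cutoff):
    """Forecasting setting: every test fact lies strictly after the cutoff."""
    train = [q for q in quads if q[3] <= cutoff]
    test = [q for q in quads if q[3] > cutoff]
    return train, test


quads = [(f"e{i}", "r", f"e{i + 1}", i) for i in range(10)]
train_i, test_i = interpolation_split(quads)
train_e, test_e = extrapolation_split(quads, cutoff=6)
print(sorted(q[3] for q in test_i), [q[3] for q in test_e])
```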

Several methods have been proposed for temporal knowledge graph completion (TKGC), including transformation-based, decomposition-based, graph neural network-based, capsule network-based, and other geometric methods. These techniques address missing facts in TKGs by leveraging various mathematical models and neural networks. In contrast, predicting future facts in TKGs requires approaches that can model the temporal evolution of the graph; autoregression-based, temporal point process-based, and few-shot learning methods are commonly used for this task. Interpretability-based methods increase the reliability of prediction results by providing evidence that supports each prediction, which helps establish trust and improves the overall quality of the model's predictions. To further enhance the performance of TKGRL, semantic augmentation can be employed to improve the quality and quantity of semantic information in TKGs. Entity and relation names, as well as textual descriptions of facts, can enrich representations and benefit downstream TKG tasks. In addition, large language models (LLMs) for natural language processing (NLP) can provide rich semantic information about entities and relations, further improving the performance of TKGRL models.

4.2 Entity Alignment Between Temporal Knowledge Graphs

Entity alignment (EA) aims to find equivalent entities between different KGs, which is important for knowledge fusion across multi-source and multilingual KGs. Let $G_{1}=(E_{1},R_{1},T_{1},F_{1})$ and $G_{2}=(E_{2},R_{2},T_{2},F_{2})$ be two TKGs, and let $S=\{(e_{1_{i}},e_{2_{j}})\mid e_{1_{i}}\in E_{1},e_{2_{j}}\in E_{2}\}$ be the set of alignment seeds between $G_{1}$ and $G_{2}$. EA seeks to find new aligned entities based on the alignment seeds $S$. Methods for EA between TKGs mainly adopt GNN-based models.

Entity alignment (EA) between temporal knowledge graphs (TKGs) is currently an active area of research. TEA-GNN [ 81 ] was the first method to incorporate temporal information, via a time-aware attention graph neural network (GNN), to enhance EA. TREA [ 82 ] uses a temporal relational attention GNN to integrate the relational and temporal features of entities for improved EA performance. STEA [ 7 ] observes that the timestamps in many TKGs are uniform and proposes a simple GNN-based model with a temporal information matching mechanism. First, the structural and relational features of an entity are fused to generate its embedding. Then, the embedding is updated by GNN aggregation over the entity's neighbors. Finally, the output embedding is obtained by concatenating the embeddings from every GNN layer. Besides updating entity representations, STEA computes a time similarity between entities from their associated timestamps, and it combines the similarity of entity embeddings with the similarity of entity timestamps to identify aligned entities. Overall, STEA offers a simple and effective way to exploit temporal information for aligning entities across TKGs.
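The final matching step, which combines embedding similarity with timestamp similarity, can be sketched as follows. The cosine similarity for embeddings, the Jaccard overlap of timestamp sets, and the mixing weight are illustrative assumptions rather than STEA's exact formulas, and the toy vectors stand in for the GNN outputs described above.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def time_similarity(ts1, ts2):
    """Assumed time similarity: Jaccard overlap of two entities' timestamp sets."""
    union = ts1 | ts2
    return len(ts1 & ts2) / len(union) if union else 0.0


def align(emb1, emb2, times1, times2, weight=0.5):
    """Map each G1 entity to the G2 entity with the highest combined similarity."""
    def combined(e1, e2):
        return ((1 - weight) * cosine(emb1[e1], emb2[e2])
                + weight * time_similarity(times1[e1], times2[e2]))
    return {e1: max(emb2, key=lambda e2: combined(e1, e2)) for e1 in emb1}


# x and y have identical embeddings, so only their timestamps can separate them.
emb1 = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
emb2 = {"x": [1.0, 0.0], "y": [1.0, 0.0]}
times1 = {"a": {1, 2, 3}, "b": {7, 8}}
times2 = {"x": {7, 8}, "y": {1, 2, 3}}
print(align(emb1, emb2, times1, times2))
```

The example shows why the temporal signal helps: when structural embeddings cannot distinguish two candidates, shared timestamps can still break the tie.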

4.3 Question Answering Over Temporal Knowledge Graphs

Question answering over KGs (KGQA) aims to answer natural language questions based on a KG. The answer is usually an entity in the KG, and answering requires one-hop or multi-hop reasoning over the KG. Question answering over TKGs (TKGQA) aims to answer temporal natural language questions based on a TKG; the answer is an entity or a timestamp in the TKG, and reasoning over a TKG is more complex than reasoning over a static KG.

Research on TKGQA is ongoing. CRONKGQA [ 59 ] releases a new dataset named CRONQUESTIONS and proposes a model that combines TKG and question representations for TKGQA. It first uses TComplEx to obtain representations of the entities and timestamps in the TKG and uses BERT [ 15 ] to obtain the representation of the question, then computes scores for all entities and all timestamps, and finally concatenates the score vectors to obtain the answer.
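The scoring step can be illustrated with the TComplEx scoring function $\phi(s,r,o,\tau)=\mathrm{Re}\left(\sum_{k} s_{k}\, r_{k}\, \bar{o}_{k}\, \tau_{k}\right)$, where embeddings are complex vectors and $\bar{o}$ is the complex conjugate. In this sketch, the question vectors `q_entity` and `q_time` are hypothetical placeholders for the BERT-derived question representations that take the place of the relation, and all embeddings are toy one-dimensional values.

```python
def tcomplex_score(s, r, o, tau):
    """Re(sum_k s_k * r_k * conj(o_k) * tau_k) over complex embedding dimensions."""
    return sum(a * b * c.conjugate() * d for a, b, c, d in zip(s, r, o, tau)).real


def answer(subj, obj, time, q_entity, q_time, entities, times):
    """Score every entity and every timestamp, concatenate, and take the argmax."""
    entity_scores = [tcomplex_score(subj, q_entity, e, time) for e in entities]
    time_scores = [tcomplex_score(subj, q_time, obj, t) for t in times]
    scores = entity_scores + time_scores  # one vector over entities and times
    best = max(range(len(scores)), key=scores.__getitem__)
    if best < len(entities):
        return ("entity", best)
    return ("time", best - len(entities))


subj, obj, time = [1 + 0j], [1 + 0j], [1 + 0j]
entities = [[1 + 0j], [-1 + 0j]]
times = [[1 + 0j], [3 + 0j]]
print(answer(subj, obj, time, [1 + 0j], [0.1 + 0j], entities, times))
print(answer(subj, obj, time, [1 + 0j], [0.5 + 0j], entities, times))
```

Concatenating the two score vectors lets a single argmax (or softmax) decide whether the answer is an entity or a timestamp.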

TSQA [ 63 ] argues that existing TKGQA methods have not explored the implicit temporal features in TKGs and temporal questions. It proposes a time-sensitive question answering model consisting of a time-aware TKG encoder and a time-sensitive question answering module. The time-aware TKG encoder uses TComplEx with time-order constraints to obtain representations of entities and timestamps. The time-sensitive question answering module first decomposes the question into entities and a temporal expression. It uses the entities to extract a neighbor graph, reducing the search space of timestamps and answer entities, and feeds the temporal expression into BERT to learn temporal question representations. Finally, the entity and temporal question representations are combined to estimate the time and predict the answer entity with contrastive learning.

5 Future Directions

Despite the significant progress made in TKGRL research, several important future directions remain. These include addressing scalability challenges, improving interpretability, incorporating information from multiple modalities, and leveraging large language models to better represent dynamic and evolving TKGs.

5.1 Scalability

Current TKG datasets are small compared with real-world knowledge graphs. Moreover, TKGRL methods tend to prioritize task-specific performance and often overlook scalability. There is therefore a pressing need for methods that learn TKG representations efficiently as data volumes grow, and a natural avenue for future research is to investigate strategies for enhancing the scalability of TKGRL models.

One approach to improving the scalability of TKGRL models is to employ distributed computing techniques, such as parallel processing or distributed training, to process large-scale knowledge graphs more efficiently. Parallel processing partitions the dataset into smaller subsets and processes them simultaneously. Distributed training trains the model on multiple machines concurrently and combines their results, typically by aggregating gradients or parameters, which shortens training time. This approach could be especially beneficial for real-time processing of large knowledge graphs in applications that require fast responses.

Another approach is to use sampling techniques to reduce the size of the knowledge graph while preserving accuracy. For example, researchers could use clustering algorithms to identify groups of highly interconnected entities and events and then sample a representative subset of these groups for training, reducing the computational complexity of the model. Sampling is also used for negative sampling in TKGRL, which selects quadruples that are not present in the knowledge graph as negative examples to contrast with the positive ones during training. Because efficient negative sampling avoids scoring every candidate entity for each training fact, it can substantially reduce the computational cost of TKGRL models while maintaining high accuracy.
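A minimal sketch of time-aware negative sampling, assuming facts are integer-id quadruples `(head, relation, tail, timestamp)`: each positive fact is corrupted by replacing its tail with a random entity, and any corruption that is itself a known fact at the same timestamp is rejected. Scoring `k` such negatives per fact is much cheaper than scoring all entities. The rejection-sampling loop, the retry cap, and the function name are illustrative choices.

```python
import random


def corrupt_tails(quad, num_entities, known_facts, k, rng, max_tries=1000):
    """Return up to k negative quadruples for one positive quadruple."""
    head, rel, tail, ts = quad
    negatives = []
    for _ in range(max_tries):
        if len(negatives) == k:
            break
        cand = rng.randrange(num_entities)
        # Skip the true tail and any corruption that is a real fact at time ts.
        if cand == tail or (head, rel, cand, ts) in known_facts:
            continue
        negatives.append((head, rel, cand, ts))
    return negatives


known = {(0, 0, 1, 5), (0, 0, 2, 5), (0, 0, 3, 6)}
negs = corrupt_tails((0, 0, 1, 5), num_entities=4, known_facts=known, k=5,
                     rng=random.Random(42))
print(negs)
```

Note that tail 3 is a valid negative at timestamp 5 even though `(0, 0, 3, 6)` is a true fact: filtering by timestamp is what makes the sampler time-aware.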

Overall, addressing scalability will be critical for advancing the state of the art in temporal knowledge graph research and enabling practical applications in real-world scenarios.

5.2 Interpretability

Enhancing interpretability is a crucial research direction, as it clarifies how model outputs are derived and ensures the reliability and applicability of the model's results. Although some interpretable methods exist, developing more interpretable models and techniques for temporal knowledge graphs remains a vital research direction.

One promising approach involves incorporating attention mechanisms to identify the most relevant entities and events in the knowledge graph at different points in time. This approach would allow users to understand which parts of the graph are most important for a given prediction, which could improve the interpretability of the model.

In addition, researchers could explore the use of visualization techniques to help users understand the structure and evolution of the knowledge graph over time. For example, interactive visualizations could enable users to explore the graph and understand how different entities and events are connected.

By making TKGRL more interpretable, we can gain greater insights into complex real-world phenomena, support decision-making processes, and ensure that these models are useful for practical applications.

5.3 Information Fusion

Most TKGRL methods use only the structural information of TKGs, and few models incorporate textual information about entities and relations. However, text contains rich features that can enhance TKG representations. Effectively fusing the various features of TKGs, including entity, relation, time, structural, and textual features, is therefore a promising future research direction.

One approach to information fusion in TKGRL is to use multi-modal data sources. For example, researchers can combine textual data, such as news articles or social media posts, with structured data from knowledge graphs to improve the accuracy of the model. This can help the TKGRL model capture relationships between entities and events that are not apparent from structured data alone.

Another approach is to use attention mechanisms to dynamically weight the importance of different sources of information at different points in time. This approach would allow the model to focus on the most relevant information for a given prediction, which could improve the accuracy of the model while reducing computational complexity.

In general, information fusion is a powerful tool in TKGRL that can help researchers improve the accuracy and reliability of the model by combining information from multiple sources. However, it is essential to carefully weigh the benefits and costs of using different fusion techniques, depending on the specific dataset and research goals.

5.4 Incorporating Large Language Models

Large language models (LLMs) [ 87 ] have been advanced rapidly by both academia and industry. A notable achievement in this field is ChatGPT (https://openai.com/blog/chatgpt/), an advanced AI chatbot built on LLMs that has attracted significant attention from both the research community and society at large. ChatGPT is based on generative pre-trained transformers (GPT) such as GPT-4 [ 56 ], which have led to significant improvements in various natural language tasks. LLMs have been shown to be highly effective at capturing complex semantic relationships between words and phrases, and they may provide valuable insights into the meaning and context of entities and relations in a knowledge graph. Efficiently combining LLMs with TKGRL is therefore a promising research direction.

One approach to incorporating LLMs into TKGRL is to use LLMs to generate embeddings for entities and relations. These embeddings could be used as input to a TKGRL model, enabling it to capture richer features of entities and relations over time.

Another potential approach is to use LLMs to generate textual descriptions of entities and facts in the TKGs. These descriptions could be used to enrich the TKGs with additional semantic information, which could then be used to improve the accuracy of predictions.

Overall, incorporating LLMs into TKGRL has the potential to significantly improve the accuracy and effectiveness of these models, and it is an exciting area for future research. However, it is essential to carefully consider the challenges and limitations of using LLMs, such as computational complexity and potential bias in the pre-training data.

6 Conclusion

Temporal knowledge graphs (TKGs) provide a powerful framework for representing and analyzing complex real-world phenomena that evolve over time. Temporal knowledge graph representation learning (TKGRL) is an active area of research that investigates methods for automatically extracting meaningful representations from TKGs.

In this paper, we provide a detailed overview of the development, methods, and applications of TKGRL. We begin by defining TKGRL and discussing the datasets and evaluation metrics commonly used in this field. Next, we categorize TKGRL methods based on their core technologies and analyze the fundamental ideas and techniques employed by each category. Furthermore, we offer a comprehensive review of various applications of TKGRL, followed by a discussion of future research directions in this area. By focusing on these areas, we can continue to drive advancements in TKGRL and enable practical applications in real-world scenarios.

Acknowledgements

We appreciate the support from National Natural Science Foundation of China with the Main Research Project on Machine Behavior and Human-Machine Collaborated Decision Making Methodology (72192820 & 72192824), Pudong New Area Science & Technology Development Fund (PKX2021-R05), Science and Technology Commission of Shanghai Municipality (22DZ-2229004), and Shanghai Trusted Industry Internet Software Collaborative Innovation Center.

- Abboud et al. [2020] Abboud, R., Ceylan, İ.İ., Lukasiewicz, T., Salvatori, T., 2020. BoxE: A box embedding model for knowledge base completion, in: Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H. (Eds.), Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual. URL: https://proceedings.neurips.cc/paper/2020/hash/6dbbe6abe5f14af882ff977fc3f35501-Abstract.html .

- Anelli et al. [2021] Anelli, V.W., Di Noia, T., Di Sciascio, E., Ferrara, A., Mancino, A.C.M., 2021. Sparse feature factorization for recommender systems with knowledge graphs, in: Proceedings of the 15th ACM Conference on Recommender Systems, Association for Computing Machinery, New York, NY, USA. pp. 154–165. URL: https://doi.org/10.1145/3460231.3474243 , doi: 10.1145/3460231.3474243 .

- Balazevic et al. [2019] Balazevic, I., Allen, C., Hospedales, T., 2019. TuckER: Tensor factorization for knowledge graph completion, in: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Association for Computational Linguistics, Stroudsburg, PA, USA. pp. 5185–5194. URL: https://aclanthology.org/D19-1522 , doi: 10.18653/v1/D19-1522 .

- Bellman [1957] Bellman, R., 1957. A Markovian decision process. Indiana Univ. Math. J. 6, 679–684. URL: http://www.iumj.indiana.edu/IUMJ/FULLTEXT/1957/6/56038 .

- Brown et al. [2020] Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J.D., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., et al., 2020. Language models are few-shot learners. Advances in Neural Information Processing Systems 33, 1877–1901.

- Cai et al. [2022a] Cai, B., Xiang, Y., Gao, L., Zhang, H., Li, Y., Li, J., 2022a. Temporal knowledge graph completion: A survey. CoRR abs/2201.08236. URL: https://arxiv.org/abs/2201.08236 , arXiv:2201.08236 .

- Cai et al. [2022b] Cai, L., Mao, X., Ma, M., Yuan, H., Zhu, J., Lan, M., 2022b. A simple temporal information matching mechanism for entity alignment between temporal knowledge graphs, in: Proceedings of the 29th International Conference on Computational Linguistics, International Committee on Computational Linguistics, Gyeongju, Republic of Korea. pp. 2075–2086. URL: https://aclanthology.org/2022.coling-1.181 .

- Chen et al. [2023a] Chen, J., Geng, Y., Chen, Z., Pan, J.Z., He, Y., Zhang, W., Horrocks, I., Chen, H., 2023a. Zero-shot and few-shot learning with knowledge graphs: A comprehensive survey. Proceedings of the IEEE, 1–33. doi: 10.1109/JPROC.2023.3279374 .

- Chen et al. [2022] Chen, K., Wang, Y., Li, Y., Li, A., 2022. RotateQVS: Representing temporal information as rotations in quaternion vector space for temporal knowledge graph completion, in: Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Association for Computational Linguistics, Stroudsburg, PA, USA. pp. 5843–5857. URL: https://aclanthology.org/2022.acl-long.402 , doi: 10.18653/v1/2022.acl-long.402 .

- Chen et al. [2023b] Chen, Z., Xu, C., Su, F., Huang, Z., Dou, Y., 2023b. Meta-learning based knowledge extrapolation for temporal knowledge graph, in: Proceedings of the ACM Web Conference 2023, Association for Computing Machinery, New York, NY, USA. pp. 2433–2443. URL: https://doi.org/10.1145/3543507.3583279 , doi: 10.1145/3543507.3583279 .

- Cho et al. [2014] Cho, K., van Merrienboer, B., Gülçehre, Ç., Bahdanau, D., Bougares, F., Schwenk, H., Bengio, Y., 2014. Learning phrase representations using RNN encoder-decoder for statistical machine translation, in: Moschitti, A., Pang, B., Daelemans, W. (Eds.), Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, EMNLP 2014, October 25-29, 2014, Doha, Qatar, A meeting of SIGDAT, a Special Interest Group of the ACL, Association for Computational Linguistics, Stroudsburg, PA, USA. pp. 1724–1734. URL: https://doi.org/10.3115/v1/d14-1179 , doi: 10.3115/v1/d14-1179 .

- Daley and Vere-Jones [2008] Daley, D.J., Vere-Jones, D., 2008. An Introduction to the Theory of Point Processes. Volume II: General Theory and Structure. Springer, Berlin, GER. URL: https://link.springer.com/book/10.1007/978-0-387-49835-5 .

- Dasgupta et al. [2018] Dasgupta, S.S., Ray, S.N., Talukdar, P., 2018. HyTE: Hyperplane-based temporally aware knowledge graph embedding, in: Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Association for Computational Linguistics, Stroudsburg, PA, USA. pp. 2001–2011. URL: https://aclanthology.org/D18-1225 , doi: 10.18653/v1/D18-1225 .

- Deng et al. [2020] Deng, S., Rangwala, H., Ning, Y., 2020. Dynamic knowledge graph based multi-event forecasting, in: Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Association for Computing Machinery, New York, NY, USA. pp. 1585–1595. URL: https://doi.org/10.1145/3394486.3403209 , doi: 10.1145/3394486.3403209 .

- Devlin et al. [2019] Devlin, J., Chang, M., Lee, K., Toutanova, K., 2019. BERT: pre-training of deep bidirectional transformers for language understanding, in: Burstein, J., Doran, C., Solorio, T. (Eds.), Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, June 2-7, 2019, Volume 1 (Long and Short Papers), Association for Computational Linguistics, Stroudsburg, PA, USA. pp. 4171–4186. URL: https://doi.org/10.18653/v1/n19-1423 , doi: 10.18653/v1/n19-1423 .

- Dietz et al. [2018] Dietz, L., Kotov, A., Meij, E., 2018. Utilizing knowledge graphs for text-centric information retrieval, in: The 41st International ACM SIGIR Conference on Research and Development in Information Retrieval, Association for Computing Machinery, New York, NY, USA. pp. 1387–1390. URL: https://doi.org/10.1145/3209978.3210187 , doi: 10.1145/3209978.3210187 .

- Ding et al. [2023] Ding, Z., Cai, H., Wu, J., Ma, Y., Liao, R., Xiong, B., Tresp, V., 2023. Zero-shot relational learning on temporal knowledge graphs with large language models. arXiv preprint arXiv:2311.10112 .

- Fu et al. [2022] Fu, G., Meng, Z., Han, Z., Ding, Z., Ma, Y., Schubert, M., Tresp, V., Wattenhofer, R., 2022. TempCaps: A capsule network-based embedding model for temporal knowledge graph completion, in: Proceedings of the Sixth Workshop on Structured Prediction for NLP, Association for Computational Linguistics, Stroudsburg, PA, USA. pp. 22–31. URL: https://aclanthology.org/2022.spnlp-1.3 , doi: 10.18653/v1/2022.spnlp-1.3 .

- García-Durán et al. [2018] García-Durán, A., Dumancic, S., Niepert, M., 2018. Learning sequence encoders for temporal knowledge graph completion, in: Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Association for Computational Linguistics, Stroudsburg, PA, USA. pp. 4816–4821. URL: https://aclanthology.org/D18-1516 , doi: 10.18653/v1/D18-1516 .

- Gardner and Dorling [1998] Gardner, M.W., Dorling, S., 1998. Artificial neural networks (the multilayer perceptron)—a review of applications in the atmospheric sciences. Atmospheric Environment 32, 2627–2636. URL: https://doi.org/10.1016/S1352-2310(97)00447-0 .

- Goel et al. [2020] Goel, R., Kazemi, S.M., Brubaker, M.A., Poupart, P., 2020. Diachronic embedding for temporal knowledge graph completion, in: The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, New York, NY, USA, February 7-12, 2020, AAAI Press, Palo Alto, CA, USA. pp. 3988–3995. URL: https://ojs.aaai.org/index.php/AAAI/article/view/5815 .

- Gong et al. [2023] Gong, X., Qin, J., Chai, H., Ding, Y., Jia, Y., Liao, Q., 2023. Temporal-relational matching network for few-shot temporal knowledge graph completion, in: Database Systems for Advanced Applications: 28th International Conference, DASFAA 2023, Tianjin, China, April 17–20, 2023, Proceedings, Part II, Springer-Verlag, Berlin, Heidelberg. pp. 768–783. URL: https://doi.org/10.1007/978-3-031-30672-3_52 , doi: 10.1007/978-3-031-30672-3_52 .

- Han et al. [2021a] Han, Z., Chen, P., Ma, Y., Tresp, V., 2021a. Explainable subgraph reasoning for forecasting on temporal knowledge graphs, in: 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, May 3-7, 2021, OpenReview.net, Austria. URL: https://openreview.net/forum?id=pGIHq1m7PU .

- Han et al. [2021b] Han, Z., Ding, Z., Ma, Y., Gu, Y., Tresp, V., 2021b. Learning neural ordinary equations for forecasting future links on temporal knowledge graphs, in: Moens, M., Huang, X., Specia, L., Yih, S.W. (Eds.), Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, EMNLP 2021, Virtual Event / Punta Cana, Dominican Republic, 7-11 November, 2021, Association for Computational Linguistics. pp. 8352–8364. URL: https://doi.org/10.18653/v1/2021.emnlp-main.658 , doi: 10.18653/v1/2021.emnlp-main.658 .

- Han et al. [2023] Han, Z., Liao, R., Gu, J., Zhang, Y., Ding, Z., Gu, Y., Köppl, H., Schütze, H., Tresp, V., 2023. ECOLA: Enhanced temporal knowledge embeddings with contextualized language representations. arXiv:2203.09590 .

- Han et al. [2020a] Han, Z., Ma, Y., Chen, P., Tresp, V., 2020a. DyERNIE: Dynamic evolution of Riemannian manifold embeddings for temporal knowledge graph completion. arXiv:2011.03984 .

- Han et al. [2020b] Han, Z., Ma, Y., Wang, Y., Günnemann, S., Tresp, V., 2020b. Graph Hawkes neural network for forecasting on temporal knowledge graphs, in: Das, D., Hajishirzi, H., McCallum, A., Singh, S. (Eds.), Conference on Automated Knowledge Base Construction, AKBC 2020, Virtual, June 22-24, 2020, OpenReview.net, Virtual. URL: https://doi.org/10.24432/C50018 , doi: 10.24432/C50018 .

- Hawkes [1971] Hawkes, A.G., 1971. Spectra of some self-exciting and mutually exciting point processes. Biometrika 58, 83–90. URL: https://doi.org/10.1093/biomet/58.1.83 .

- He et al. [2021] He, G., Lan, Y., Jiang, J., Zhao, W.X., Wen, J.R., 2021. Improving multi-hop knowledge base question answering by learning intermediate supervision signals, in: Proceedings of the 14th ACM International Conference on Web Search and Data Mining, Association for Computing Machinery, New York, NY, USA. pp. 553–561. URL: https://doi.org/10.1145/3437963.3441753 , doi: 10.1145/3437963.3441753 .

- Hitchcock [1927] Hitchcock, F.L., 1927. The expression of a tensor or a polyadic as a sum of products. Journal of Mathematics and Physics 6, 164–189. URL: https://doi.org/10.1002/sapm192761164 , doi: 10.1002/sapm192761164 .

- Hochreiter and Schmidhuber [1997] Hochreiter, S., Schmidhuber, J., 1997. Long short-term memory. Neural Comput. 9, 1735–1780. URL: https://doi.org/10.1162/neco.1997.9.8.1735 , doi: 10.1162/neco.1997.9.8.1735 .

- Hoffart et al. [2011] Hoffart, J., Suchanek, F.M., Berberich, K., Lewis-Kelham, E., de Melo, G., Weikum, G., 2011. YAGO2: Exploring and querying world knowledge in time, space, context, and many languages, in: Proceedings of the 20th International Conference Companion on World Wide Web, Association for Computing Machinery, New York, NY, USA. pp. 229–232. URL: https://doi.org/10.1145/1963192.1963296 , doi: 10.1145/1963192.1963296 .

- Ji et al. [2021] Ji, S., Pan, S., Cambria, E., Marttinen, P., Yu, P.S., 2021. A survey on knowledge graphs: Representation, acquisition, and applications. IEEE Transactions on Neural Networks and Learning Systems 33, 494–514. URL: https://doi.org/10.1109/TNNLS.2021.3070843 , doi: 10.1109/TNNLS.2021.3070843 .

- Jin et al. [2020] Jin, W., Qu, M., Jin, X., Ren, X., 2020. Recurrent event network: Autoregressive structure inference over temporal knowledge graphs, in: Webber, B., Cohn, T., He, Y., Liu, Y. (Eds.), Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, EMNLP 2020, Online, November 16-20, 2020, Association for Computational Linguistics, Stroudsburg, PA, USA. pp. 6669–6683. URL: https://doi.org/10.18653/v1/2020.emnlp-main.541 , doi: 10.18653/v1/2020.emnlp-main.541 .

- Kaelbling et al. [1996] Kaelbling, L.P., Littman, M.L., Moore, A.W., 1996. Reinforcement learning: A survey. J. Artif. Intell. Res. 4, 237–285. URL: https://doi.org/10.1613/jair.301 , doi: 10.1613/jair.301 .

- Kang and Cho [2022] Kang, D., Cho, M., 2022. Integrative few-shot learning for classification and segmentation, in: IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, June 18-24, 2022, IEEE. pp. 9969–9980. URL: https://doi.org/10.1109/CVPR52688.2022.00974 , doi: 10.1109/CVPR52688.2022.00974 .

- Kazemi and Poole [2018] Kazemi, S.M., Poole, D., 2018. Simple embedding for link prediction in knowledge graphs, in: Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R. (Eds.), Advances in Neural Information Processing Systems, Curran Associates, Inc., New York, NY, USA. URL: https://proceedings.neurips.cc/paper/2018/file/b2ab001909a8a6f04b51920306046ce5-Paper.pdf .

- Kipf and Welling [2017] Kipf, T.N., Welling, M., 2017. Semi-supervised classification with graph convolutional networks, in: 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, April 24-26, 2017, Conference Track Proceedings, OpenReview.net, Toulon, France. URL: https://openreview.net/forum?id=SJU4ayYgl .

- Lacroix et al. [2020] Lacroix, T., Obozinski, G., Usunier, N., 2020. Tensor decompositions for temporal knowledge base completion, in: 8th International Conference on Learning Representations, ICLR 2020, OpenReview.net, Addis Ababa, Ethiopia. URL: https://openreview.net/forum?id=rke2P1BFwS .

- Leblay and Chekol [2018] Leblay, J., Chekol, M.W., 2018. Deriving validity time in knowledge graph, in: Companion Proceedings of The Web Conference 2018, International World Wide Web Conferences Steering Committee, Republic and Canton of Geneva, CHE. pp. 1771–1776. URL: https://doi.org/10.1145/3184558.3191639 , doi: 10.1145/3184558.3191639 .

- Lee et al. [2023] Lee, D.H., Ahrabian, K., Jin, W., Morstatter, F., Pujara, J., 2023. Temporal knowledge graph forecasting without knowledge using in-context learning. arXiv preprint arXiv:2305.10613 .

- Leetaru and Schrodt [2013] Leetaru, K., Schrodt, P.A., 2013. GDELT: Global data on events, location, and tone, 1979–2012, in: ISA Annual Convention, Citeseer, State College, PA, USA. pp. 1–49. URL: http://data.gdeltproject.org/documentation/ISA.2013.GDELT.pdf .

- Li et al. [2022a] Li, Y., Sun, S., Zhao, J., 2022a. TiRGN: Time-guided recurrent graph network with local-global historical patterns for temporal knowledge graph reasoning, in: Raedt, L.D. (Ed.), Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, IJCAI 2022, Vienna, Austria, 23-29 July 2022, ijcai.org, San Francisco, CA, USA. pp. 2152–2158. URL: https://doi.org/10.24963/ijcai.2022/299 , doi: 10.24963/ijcai.2022/299 .

- Li et al. [2022b] Li, Z., Guan, S., Jin, X., Peng, W., Lyu, Y., Zhu, Y., Bai, L., Li, W., Guo, J., Cheng, X., 2022b. Complex evolutional pattern learning for temporal knowledge graph reasoning, in: Muresan, S., Nakov, P., Villavicencio, A. (Eds.), Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), ACL 2022, Dublin, Ireland, May 22-27, 2022, Association for Computational Linguistics, Stroudsburg, PA, USA. pp. 290–296. URL: https://doi.org/10.18653/v1/2022.acl-short.32 , doi: 10.18653/v1/2022.acl-short.32 .

- Li et al. [2021a] Li, Z., Jin, X., Guan, S., Li, W., Guo, J., Wang, Y., Cheng, X., 2021a. Search from history and reason for future: Two-stage reasoning on temporal knowledge graphs, in: Zong, C., Xia, F., Li, W., Navigli, R. (Eds.), Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, ACL/IJCNLP 2021, (Volume 1: Long Papers), Virtual Event, August 1-6, 2021, Association for Computational Linguistics, Stroudsburg, PA, USA. pp. 4732–4743. URL: https://doi.org/10.18653/v1/2021.acl-long.365 , doi: 10.18653/v1/2021.acl-long.365 .

- Li et al. [2021b] Li, Z., Jin, X., Li, W., Guan, S., Guo, J., Shen, H., Wang, Y., Cheng, X., 2021b. Temporal knowledge graph reasoning based on evolutional representation learning, in: Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Association for Computing Machinery, New York, NY, USA. pp. 408–417. URL: https://doi.org/10.1145/3404835.3462963 , doi: 10.1145/3404835.3462963 .

- Liao et al. [2023] Liao, R., Jia, X., Ma, Y., Tresp, V., 2023. GenTKG: Generative forecasting on temporal knowledge graph. arXiv preprint arXiv:2310.07793 .

- Lin and She [2020] Lin, L., She, K., 2020. Tensor decomposition-based temporal knowledge graph embedding, in: 32nd IEEE International Conference on Tools with Artificial Intelligence, ICTAI 2020, Baltimore, MD, USA, November 9-11, 2020, IEEE, Baltimore, MD, USA. pp. 969–975. URL: https://doi.org/10.1109/ICTAI50040.2020.00151 , doi: 10.1109/ICTAI50040.2020.00151 .