Inferential Statistics | An Easy Introduction & Examples

Published on September 4, 2020 by Pritha Bhandari. Revised on June 22, 2023.

While descriptive statistics summarize the characteristics of a data set, inferential statistics help you come to conclusions and make predictions based on your data.

When you have collected data from a sample, you can use inferential statistics to understand the larger population from which the sample is taken.

Inferential statistics have two main uses:

- making estimates about populations (for example, the mean SAT score of all 11th graders in the US).

- testing hypotheses to draw conclusions about populations (for example, the relationship between SAT scores and family income).

Descriptive versus inferential statistics

Descriptive statistics allow you to describe a data set, while inferential statistics allow you to make inferences based on a data set.

Descriptive statistics

Using descriptive statistics, you can report characteristics of your data:

- The distribution concerns the frequency of each value.

- The central tendency concerns the averages of the values.

- The variability concerns how spread out the values are.

In descriptive statistics, there is no uncertainty – the statistics precisely describe the data that you collected. If you collect data from an entire population, you can directly compare these descriptive statistics to those from other populations.

Inferential statistics

Most of the time, you can only acquire data from samples, because it is too difficult or expensive to collect data from the whole population that you’re interested in.

While descriptive statistics can only summarize a sample’s characteristics, inferential statistics use your sample to make reasonable guesses about the larger population.

With inferential statistics, it’s important to use random and unbiased sampling methods. If your sample isn’t representative of your population, then you can’t make valid statistical inferences or generalize.

Sampling error in inferential statistics

Since the size of a sample is always smaller than the size of the population, some of the population isn’t captured by sample data. This creates sampling error, which is the difference between the true population values (called parameters) and the measured sample values (called statistics).

Sampling error arises any time you use a sample, even if your sample is random and unbiased. For this reason, there is always some uncertainty in inferential statistics. However, using probability sampling methods reduces this uncertainty.
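As a rough illustration of the point above, the following Python sketch draws repeated simple random samples from a simulated population and records the sampling error of the sample mean each time. The population (100,000 scores with mean 500 and standard deviation 100), the sample size of 100, and all variable names are assumptions made up for this example.

```python
import random
import statistics

random.seed(42)

# Hypothetical population of 100,000 test scores (simulated, not real data).
population = [random.gauss(500, 100) for _ in range(100_000)]
parameter = statistics.fmean(population)  # population mean (the parameter)

# Draw many simple random samples and record each sampling error.
errors = []
for _ in range(500):
    sample = random.sample(population, 100)
    statistic = statistics.fmean(sample)  # sample mean (the statistic)
    errors.append(statistic - parameter)

mean_error = statistics.fmean(errors)
largest_error = max(abs(e) for e in errors)

print(f"average sampling error over 500 samples: {mean_error:.2f}")
print(f"largest single sampling error:           {largest_error:.2f}")
```

Every individual sample misses the parameter by some amount, but with random sampling the errors scatter around zero rather than leaning in one direction.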

Estimating population parameters from sample statistics

The characteristics of samples and populations are described by numbers called statistics and parameters:

- A statistic is a measure that describes the sample (e.g., sample mean).

- A parameter is a measure that describes the whole population (e.g., population mean).

Sampling error is the difference between a parameter and a corresponding statistic. Since in most cases you don’t know the real population parameter, you can use inferential statistics to estimate these parameters in a way that takes sampling error into account.

There are two important types of estimates you can make about the population: point estimates and interval estimates.

- A point estimate is a single value estimate of a parameter. For instance, a sample mean is a point estimate of a population mean.

- An interval estimate gives you a range of values where the parameter is expected to lie. A confidence interval is the most common type of interval estimate.

Both types of estimates are important for gathering a clear idea of where a parameter is likely to lie.

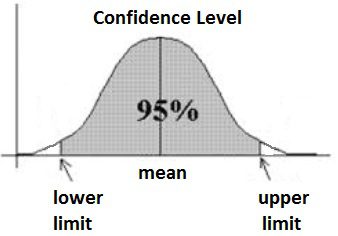

Confidence intervals

A confidence interval uses the variability around a statistic to come up with an interval estimate for a parameter. Confidence intervals are useful for estimating parameters because they take sampling error into account.

While a point estimate gives you a single best-guess value for the parameter you are interested in, a confidence interval tells you the uncertainty of the point estimate. They are best used in combination with each other.

Each confidence interval is associated with a confidence level. A confidence level tells you how often (as a percentage) intervals constructed this way would contain the true parameter if you repeated the study many times.

A 95% confidence level means that if you repeat your study with a new sample in exactly the same way 100 times, you can expect about 95 of the resulting intervals to contain the true population parameter.

You cannot say for sure that any single interval contains the actual population parameter. That’s because you can’t know the true value of the population parameter without collecting data from the full population.

However, with random sampling and a suitable sample size, you can reasonably expect your confidence interval to contain the parameter a certain percentage of the time.
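One way to make the meaning of a confidence level concrete is to simulate many repeated studies. The sketch below is a minimal illustration under stated assumptions: the population mean of 19 days, the standard deviation of 5, the sample size of 50, and the helper `confidence_interval` are all invented for this example, and the interval uses a normal approximation.

```python
import random
import statistics
from statistics import NormalDist

random.seed(1)

TRUE_MEAN = 19.0  # hypothetical population mean (assumed for the simulation)
TRUE_SD = 5.0     # hypothetical population standard deviation (assumed)
N = 50            # sample size of each simulated study
Z = NormalDist().inv_cdf(0.975)  # critical value for a 95% confidence level

def confidence_interval(sample):
    """Normal-approximation 95% confidence interval for the mean."""
    mean = statistics.fmean(sample)  # point estimate
    se = statistics.stdev(sample) / len(sample) ** 0.5
    return mean - Z * se, mean + Z * se

studies = 1000
covered = 0
for _ in range(studies):
    sample = [random.gauss(TRUE_MEAN, TRUE_SD) for _ in range(N)]
    low, high = confidence_interval(sample)
    if low <= TRUE_MEAN <= high:
        covered += 1

coverage = covered / studies
print(f"{coverage:.1%} of the simulated intervals contain the true mean")
```

The share of intervals that capture the true mean should land close to the stated confidence level, although any single interval either contains the parameter or does not.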

Your point estimate of the population mean paid vacation days is the sample mean of 19 paid vacation days.

Hypothesis testing

Hypothesis testing is a formal process of statistical analysis using inferential statistics. The goal of hypothesis testing is to compare populations or assess relationships between variables using samples.

Hypotheses, or predictions, are tested using statistical tests. Statistical tests also estimate sampling errors so that valid inferences can be made.

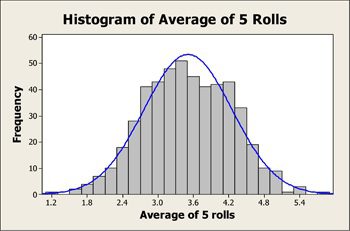

Statistical tests can be parametric or non-parametric. Parametric tests are considered more statistically powerful because they are more likely to detect an effect if one exists.

Parametric tests make assumptions that include the following:

- the population that the sample comes from follows a normal distribution of scores

- the sample size is large enough to represent the population

- the variances (a measure of variability) of each group being compared are similar

When your data violates any of these assumptions, non-parametric tests are more suitable. Non-parametric tests are called “distribution-free tests” because they don’t assume anything about the distribution of the population data.

Statistical tests come in three forms: tests of comparison, correlation or regression.

Comparison tests

Comparison tests assess whether there are differences in means, medians or rankings of scores of two or more groups.

To decide which test suits your aim, consider whether your data meets the conditions necessary for parametric tests, the number of samples, and the levels of measurement of your variables.

Means can only be found for interval or ratio data, while medians and rankings are more appropriate measures for ordinal data.

Correlation tests

Correlation tests determine the extent to which two variables are associated.

Although Pearson’s r is the most statistically powerful test, Spearman’s rho (a rank correlation) is appropriate for interval and ratio variables when the data doesn’t follow a normal distribution.

Of these tests, only the chi-square test of independence can be used with nominal variables.

Regression tests

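Regression tests assess whether changes in predictor variables are associated with changes in an outcome variable. On their own they do not establish that the predictors cause the outcome; that depends on the study design. You can decide which regression test to use based on the number and types of variables you have as predictors and outcomes.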

Most of the commonly used regression tests are parametric. If your data is not normally distributed, you can perform data transformations.

Data transformations help you make your data normally distributed using mathematical operations, like taking the square root of each value.
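The effect of a square-root transformation can be checked numerically. The following sketch is an illustration only: it simulates right-skewed data from an exponential distribution (an assumption, not data from this article) and compares the skewness before and after the transformation using a small hand-written `skewness` helper.

```python
import math
import random
import statistics

random.seed(7)

def skewness(values):
    """Skewness as the average cubed z-score (about 0 for symmetric data)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return statistics.fmean([((v - mean) / sd) ** 3 for v in values])

# Hypothetical right-skewed measurements, simulated for illustration.
raw = [random.expovariate(1.0) for _ in range(5000)]

# Square-root transformation: compresses large values more than small ones.
transformed = [math.sqrt(v) for v in raw]

skew_raw = skewness(raw)
skew_sqrt = skewness(transformed)
print(f"skewness before: {skew_raw:.2f}")
print(f"skewness after:  {skew_sqrt:.2f}")
```

A transformation like this usually brings the data closer to symmetry rather than making it exactly normal, so it is worth re-checking the distribution afterwards.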

Descriptive statistics summarize the characteristics of a data set. Inferential statistics allow you to test a hypothesis or assess whether your data is generalizable to the broader population.

A statistic refers to measures about the sample, while a parameter refers to measures about the population.

A sampling error is the difference between a population parameter and a sample statistic.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

Bhandari, P. (2023, June 22). Inferential Statistics | An Easy Introduction & Examples. Scribbr. Retrieved April 16, 2024, from https://www.scribbr.com/statistics/inferential-statistics/

Hypothesis Testing

A hypothesis test is a statistical inference method used to test the significance of a proposed (hypothesized) relation between population parameters and their corresponding sample estimators. In other words, hypothesis tests are used to determine if there is enough evidence in a sample to support a hypothesis about the entire population.

The test considers two hypotheses: the null hypothesis, which is a statement meant to be tested, usually something like "there is no effect" with the intention of showing this to be false, and the alternate hypothesis, which is the statement meant to stand after the test is performed. The two hypotheses must be mutually exclusive; moreover, in most applications, the two are complementary (one being the negation of the other). The test works by comparing the \(p\)-value to the level of significance (a chosen target). If the \(p\)-value is less than or equal to the level of significance, then the null hypothesis is rejected.

When analyzing data, often only samples of a limited size can be handled computationally. In some situations the error terms follow a continuous or unbounded distribution, so samples are used to assess the accuracy of the chosen test statistics. Hypothesis testing offers an advantage over simply guessing which distribution or parameters the data follow.

Definitions and Methodology

Hypothesis test and confidence intervals.

In statistical inference, properties (parameters) of a population are analyzed by sampling data sets. Given assumptions on the distribution, i.e. a statistical model of the data, certain hypotheses can be deduced from the known behavior of the model. These hypotheses must be tested against sampled data from the population.

The null hypothesis (denoted \(H_0\)) is a statement that is assumed to be true. If the null hypothesis is rejected, then there is enough evidence (statistical significance) to accept the alternate hypothesis (denoted \(H_1\)). Before doing any test for significance, both hypotheses must be clearly stated and must be non-conflicting, i.e. mutually exclusive, statements.

Rejecting the null hypothesis, given that it is true, is called a type I error and it is denoted \(\alpha\), which is also its probability of occurrence. Failing to reject the null hypothesis, given that it is false, is called a type II error and it is denoted \(\beta\), which is also its probability of occurrence. Also, \(\alpha\) is known as the significance level, and \(1-\beta\) is known as the power of the test.

| | \(H_0\) is true | \(H_0\) is false |
|---|---|---|
| Reject \(H_0\) | Type I error | Correct decision |
| Fail to reject \(H_0\) (reject \(H_1\)) | Correct decision | Type II error |

The test statistic is the standardized value computed from the sampled data under the assumption that the null hypothesis is true, for a particular chosen test. These tests depend on the statistic to be studied and the assumed distribution it follows, e.g. the population mean following a normal distribution.

The \(p\)-value is the probability of observing a test statistic at least as extreme as the one obtained, in the direction of the alternate hypothesis, given that the null hypothesis is true.

The critical value is the value of the assumed distribution of the test statistic such that the probability of making a type I error equals the chosen (small) significance level \(\alpha\).

Methodologies: Given an estimator \(\hat \theta\) of a population statistic \(\theta\), following a probability distribution \(P(T)\), computed from a sample \(\mathcal{S}\), and given a significance level \(\alpha\) and test statistic \(t^*\), define \(H_0\) and \(H_1\), then compute the test statistic \(t^*\).

- \(p\)-value Approach (most prevalent): Find the \(p\)-value using \(t^*\) (right-tailed). If the \(p\)-value is at most \(\alpha\), reject \(H_0\). Otherwise, reject \(H_1\).

- Critical Value Approach: Find the critical value \(t_\alpha\) by solving the equation \(P(T\geq t_\alpha)=\alpha\) (right-tailed). If \(t^*>t_\alpha\), reject \(H_0\). Otherwise, reject \(H_1\).

Note: Failing to reject \(H_0\) only means inability to accept \(H_1\), and it does not mean to accept \(H_0\).

Assume a normally distributed population has recorded cholesterol levels with various statistics computed. From a sample of 100 subjects in the population, the sample mean was 214.12 mg/dL (milligrams per deciliter), with a sample standard deviation of 45.71 mg/dL. Perform a hypothesis test, with significance level 0.05, to test if there is enough evidence to conclude that the population mean is larger than 200 mg/dL.

Hypothesis Test

We will perform a hypothesis test using the \(p\)-value approach with significance level \(\alpha=0.05\):

- Define \(H_0\): \(\mu=200\).

- Define \(H_1\): \(\mu>200\).

- Since our values are normally distributed, the test statistic is

\[z^*=\frac{\bar X - \mu_0}{\frac{s}{\sqrt{n}}}=\frac{214.12 - 200}{\frac{45.71}{\sqrt{100}}}\approx 3.09.\]

- Using a standard normal distribution, we find that our \(p\)-value is approximately \(0.001\).

- Since the \(p\)-value is at most \(\alpha=0.05\), we reject \(H_0\).

Therefore, we can conclude that the test shows sufficient evidence to support the claim that \(\mu\) is larger than \(200\) mg/dL.
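The arithmetic of this example can be reproduced with Python's standard library. The sketch below uses the figures quoted in the example and shows both the \(p\)-value approach and the critical value approach; the variable names are chosen for illustration.

```python
from math import sqrt
from statistics import NormalDist

# Figures taken from the cholesterol example.
n = 100
sample_mean = 214.12
sample_sd = 45.71
mu_0 = 200
alpha = 0.05

# Test statistic: standardized distance of the sample mean from mu_0.
z_star = (sample_mean - mu_0) / (sample_sd / sqrt(n))

# p-value approach (right-tailed): P(Z >= z*) under the null hypothesis.
p_value = 1 - NormalDist().cdf(z_star)
reject_by_p_value = p_value <= alpha

# Critical value approach: z_alpha solves P(Z >= z_alpha) = alpha.
z_alpha = NormalDist().inv_cdf(1 - alpha)
reject_by_critical_value = z_star > z_alpha

print(f"z* = {z_star:.2f}, p-value = {p_value:.4f}, z_alpha = {z_alpha:.3f}")
print("reject H0:", reject_by_p_value and reject_by_critical_value)
```

Both approaches lead to the same decision here, as they always do for the same test and significance level.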

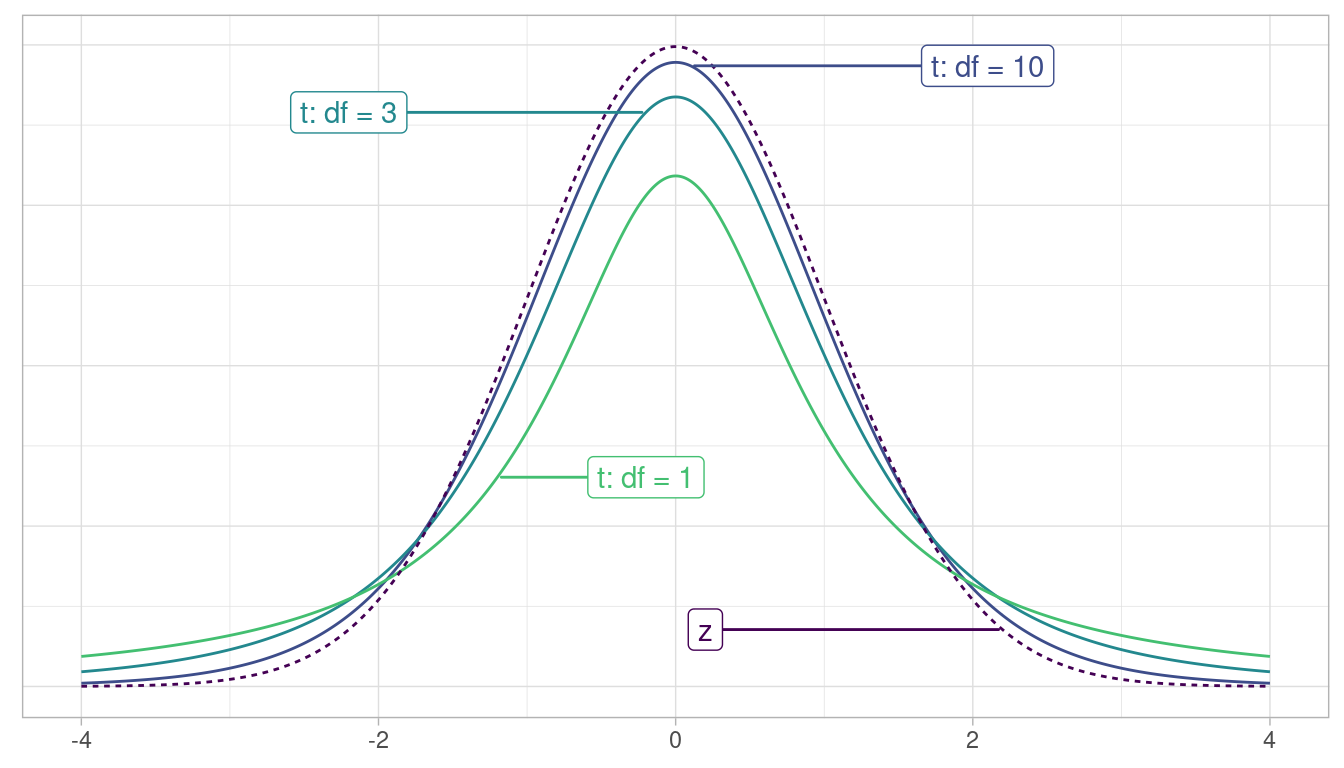

If the sample size is smaller, the normal and \(t\)-distributions differ noticeably, so the \(t\)-distribution should be used. In the next example the question is also two-sided, so it must be handled with a two-tailed test instead.

Assume a population's cholesterol levels are recorded and various statistics are computed. From a sample of 25 subjects, the sample mean was 214.12 mg/dL (milligrams per deciliter), with a sample standard deviation of 45.71 mg/dL. Perform a hypothesis test, with significance level 0.05, to test if there is enough evidence to conclude that the population mean is not equal to 200 mg/dL.

Hypothesis Test

We will perform a hypothesis test using the \(p\)-value approach with significance level \(\alpha=0.05\) and the \(t\)-distribution with 24 degrees of freedom:

- Define \(H_0\): \(\mu=200\).

- Define \(H_1\): \(\mu\neq 200\).

- Using the \(t\)-distribution, the test statistic is

\[t^*=\frac{\bar X - \mu_0}{\frac{s}{\sqrt{n}}}=\frac{214.12 - 200}{\frac{45.71}{\sqrt{25}}}\approx 1.54.\]

- Using a \(t\)-distribution with 24 degrees of freedom, we find that our \(p\)-value is approximately \(2(0.068)=0.136\). We have multiplied by two since this is a two-tailed test, i.e. the mean can be smaller than or larger than the hypothesized value.

- Since the \(p\)-value is larger than \(\alpha=0.05\), we fail to reject \(H_0\).

Therefore, the test does not show sufficient evidence to support the claim that \(\mu\) is not equal to \(200\) mg/dL.
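The two-tailed \(p\)-value in this example can be checked numerically. Python's standard library has no \(t\)-distribution, so the sketch below integrates the \(t\) density with Simpson's rule; the helpers `t_pdf` and `t_upper_tail` are written for this illustration and are not part of any library.

```python
from math import gamma, pi, sqrt

def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    coeff = gamma((df + 1) / 2) / (sqrt(df * pi) * gamma(df / 2))
    return coeff * (1 + x * x / df) ** (-(df + 1) / 2)

def t_upper_tail(t, df, steps=2000):
    """P(T >= t) for t >= 0, using Simpson's rule on [0, t] (steps must be even)."""
    h = t / steps
    total = t_pdf(0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    # The density is symmetric, so P(T >= 0) = 0.5.
    return 0.5 - total * h / 3

# Figures taken from the cholesterol example with 25 subjects.
n, sample_mean, sample_sd, mu_0, alpha = 25, 214.12, 45.71, 200, 0.05

t_star = (sample_mean - mu_0) / (sample_sd / sqrt(n))
p_value = 2 * t_upper_tail(abs(t_star), df=n - 1)  # two-tailed
reject = p_value <= alpha

print(f"t* = {t_star:.2f}, two-tailed p-value = {p_value:.3f}, reject H0: {reject}")
```

In practice a statistics library would supply the \(t\)-distribution directly; the numerical integration is used here only to keep the example self-contained.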

Failing to reject in a two-tailed hypothesis test (with significance level \(\alpha\)) for a population parameter \(\theta\) is equivalent to the hypothesized value lying inside a confidence interval (with confidence level \(1-\alpha\)) for \(\theta\). If the hypothesized value of \(\theta\) falls inside the confidence interval, then the test fails to reject the null hypothesis (with \(p\)-value greater than \(\alpha\)). Otherwise, if it does not fall in the confidence interval, then the null hypothesis is rejected in favor of the alternate (with \(p\)-value at most \(\alpha\)).

Hypothesis Testing – A Deep Dive into Hypothesis Testing, The Backbone of Statistical Inference

- September 21, 2023

Explore the intricacies of hypothesis testing, a cornerstone of statistical analysis. Dive into methods, interpretations, and applications for making data-driven decisions.

In this blog post we will learn:

1. What is Hypothesis Testing?

2. Steps in Hypothesis Testing

   - 2.1. Set up Hypotheses: Null and Alternative

   - 2.2. Choose a Significance Level (α)

   - 2.3. Calculate a test statistic and P-Value

   - 2.4. Make a Decision

3. Example: Testing a new drug.

4. Example in python

1. What is Hypothesis Testing?

In simple terms, hypothesis testing is a method used to make decisions or inferences about population parameters based on sample data. Imagine being handed a die and asked if it’s biased. By rolling it a few times and analyzing the outcomes, you’d be engaging in the essence of hypothesis testing.

Think of hypothesis testing as the scientific method of the statistics world. Suppose you hear claims like “This new drug works wonders!” or “Our new website design boosts sales.” How do you know if these statements hold water? Enter hypothesis testing.

2. Steps in Hypothesis Testing

- Set up Hypotheses: Begin with a null hypothesis (H0) and an alternative hypothesis (H1).

- Choose a Significance Level (α): Typically 0.05, this is the probability of rejecting the null hypothesis when it’s actually true. Think of it as the chance of accusing an innocent person.

- Calculate Test statistic and P-Value: Gather evidence (data) and calculate a test statistic.

- p-value: This is the probability of observing data at least as extreme as yours, given that the null hypothesis is true. A small p-value (typically ≤ 0.05) suggests the data is inconsistent with the null hypothesis.

- Decision Rule: If the p-value is less than or equal to α, you reject the null hypothesis in favor of the alternative.

2.1. Set up Hypotheses: Null and Alternative

Before diving into testing, we must formulate hypotheses. The null hypothesis (H0) represents the default assumption, while the alternative hypothesis (H1) challenges it.

For instance, in drug testing, H0: “The new drug is no better than the existing one,” H1: “The new drug is superior.”

2.2. Choose a Significance Level (α)

You collect and analyze data to test the H0 and H1 hypotheses. Based on your analysis, you decide whether to reject the null hypothesis in favor of the alternative or fail to reject it. Failing to reject the null hypothesis is not the same as accepting it.

The significance level, often denoted by $α$, represents the probability of rejecting the null hypothesis when it is actually true.

In other words, it’s the risk you’re willing to take of making a Type I error (false positive).

Type I Error (False Positive):

- Symbolized by the Greek letter alpha (α).

- Occurs when you incorrectly reject a true null hypothesis. In other words, you conclude that there is an effect or difference when, in reality, there isn’t.

- The probability of making a Type I error is denoted by the significance level of a test. Commonly, tests are conducted at the 0.05 significance level, which means there’s a 5% chance of making a Type I error when the null hypothesis is actually true.

- Commonly used significance levels are 0.01, 0.05, and 0.10, but the choice depends on the context of the study and the level of risk one is willing to accept.

Example: If a drug is not effective (truth), but a clinical trial incorrectly concludes that it is effective (based on the sample data), then a Type I error has occurred.
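To see what a 5% Type I error rate means in practice, here is a small simulation sketch. It repeatedly tests a null hypothesis that is actually true and counts how often it gets rejected. The setup (a two-sided z-test with a known standard deviation of 1, samples of 30, and the helper `z_test_rejects`) is an assumption chosen to keep the example simple.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

random.seed(3)

ALPHA = 0.05
MU_0, SIGMA, N = 0.0, 1.0, 30  # hypothetical null mean, known sd, sample size

def z_test_rejects(sample):
    """Two-sided z-test of H0: mean == MU_0, assuming SIGMA is known."""
    z = (fmean(sample) - MU_0) / (SIGMA / sqrt(N))
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_value <= ALPHA

# Every sample is generated with the null hypothesis TRUE,
# so each rejection below is a false positive (Type I error).
trials = 4000
false_positives = sum(
    z_test_rejects([random.gauss(MU_0, SIGMA) for _ in range(N)])
    for _ in range(trials)
)
type_i_rate = false_positives / trials
print(f"observed Type I error rate: {type_i_rate:.3f} (alpha = {ALPHA})")
```

The observed rate should hover around the chosen significance level, which is exactly the risk you accept when you set α.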

Type II Error (False Negative):

- Symbolized by the Greek letter beta (β).

- Occurs when you fail to reject a false null hypothesis. This means you conclude there is no evidence of an effect or difference when, in reality, there is one.

- The probability of making a Type II error is denoted by β. The power of a test (1 – β) represents the probability of correctly rejecting a false null hypothesis.

Example: If a drug is effective (truth), but a clinical trial incorrectly concludes that it is not effective (based on the sample data), then a Type II error has occurred.

Balancing the Errors:

In practice, there’s a trade-off between Type I and Type II errors. Reducing the risk of one typically increases the risk of the other. For example, if you want to decrease the probability of a Type I error (by setting a lower significance level), you might increase the probability of a Type II error unless you compensate by collecting more data or making other adjustments.
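The trade-off can be made concrete for a simple case. The sketch below computes β for a right-tailed z-test at several significance levels; the true effect of 0.5, the standard deviation of 2.0, the sample sizes, and the helper `type_ii_error` are all assumptions picked for illustration.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()

def type_ii_error(alpha, effect, sigma, n):
    """Beta for a right-tailed z-test when the true mean exceeds the null by `effect`."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha)  # rejection threshold
    shift = effect / (sigma / sqrt(n))       # true effect in standard-error units
    return STD_NORMAL.cdf(z_alpha - shift)   # chance the statistic falls short

betas = {}
for alpha in (0.10, 0.05, 0.01):
    betas[alpha] = type_ii_error(alpha, effect=0.5, sigma=2.0, n=50)
    print(f"alpha = {alpha:.2f} -> beta = {betas[alpha]:.3f}, power = {1 - betas[alpha]:.3f}")

# Collecting more data offsets the loss of power from a stricter alpha.
beta_more_data = type_ii_error(0.01, effect=0.5, sigma=2.0, n=200)
print(f"alpha = 0.01 with n = 200 -> beta = {beta_more_data:.3f}")
```

Lowering α raises β at a fixed sample size, while a larger sample brings β back down.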

It’s essential to understand the consequences of both types of errors in any given context. In some situations, a Type I error might be more severe, while in others, a Type II error might be of greater concern. This understanding guides researchers in designing their experiments and choosing appropriate significance levels.

2.3. Calculate a test statistic and P-Value

Test statistic: A test statistic is a single number that helps us understand how far our sample data is from what we’d expect under a null hypothesis (a basic assumption we’re trying to test against). Generally, the larger the test statistic is in absolute value, the more evidence we have against our null hypothesis. It helps us decide whether the differences we observe in our data are due to random chance or if there’s an actual effect.

P-value: The P-value tells us how likely we would be to get our observed results (or something more extreme) if the null hypothesis were true. It’s a value between 0 and 1.

- A smaller P-value (typically below 0.05) means that the observation is rare under the null hypothesis, so we might reject the null hypothesis.

- A larger P-value suggests that what we observed could easily happen by random chance, so we might not reject the null hypothesis.

2.4. Make a Decision

Relationship between $α$ and P-Value

When conducting a hypothesis test, we first choose a significance level $α$ before looking at the data.

We then calculate the p-value from our sample data and the test statistic.

Finally, we compare the p-value to our chosen $α$:

- If p-value ≤ $α$: We reject the null hypothesis in favor of the alternative hypothesis. The result is said to be statistically significant.

- If p-value > $α$: We fail to reject the null hypothesis. There isn’t enough statistical evidence to support the alternative hypothesis.

3. Example : Testing a new drug.

Imagine we are investigating whether a new drug treats headaches faster than a placebo.

Setting Up the Experiment: You gather 100 people who suffer from headaches. Half of them (50 people) are given the new drug (let’s call this the ‘Drug Group’), and the other half (the ‘Placebo Group’) are given a sugar pill, which doesn’t contain any medication.

- Set up Hypotheses: Before starting, you make a prediction:

- Null Hypothesis (H0): The new drug has no effect. Any difference in healing time between the two groups is just due to random chance.

- Alternative Hypothesis (H1): The new drug does have an effect. The difference in healing time between the two groups reflects a real effect and is not just due to chance.

Calculate Test statistic and P-Value: After the experiment, you analyze the data. The “test statistic” is a number that helps you understand the difference between the two groups in terms of standard units.

For instance, let’s say:

- The average healing time in the Drug Group is 2 hours.

- The average healing time in the Placebo Group is 3 hours.

The test statistic helps you understand how significant this 1-hour difference is. If the groups are large and the spread of healing times in each group is small, then this difference might be significant. But if there’s a huge variation in healing times, the 1-hour difference might not be so special.

Imagine the P-value as answering this question: “If the new drug had NO real effect, what’s the probability that I’d see a difference as extreme (or more extreme) as the one I found, just by random chance?”

For instance:

- P-value of 0.01 means there’s a 1% chance that the observed difference (or a more extreme difference) would occur if the drug had no effect. That’s pretty rare, so we might consider the drug effective.

- P-value of 0.5 means there’s a 50% chance you’d see this difference just by chance. That’s pretty high, so we might not be convinced the drug is doing much.

- If the P-value is less than or equal to $α$ (0.05): the results are “statistically significant,” and we reject the null hypothesis, concluding that the new drug has an effect.

- If the P-value is greater than $α$ (0.05): the results are not statistically significant, and we don’t reject the null hypothesis, remaining unsure whether the drug has a genuine effect.

4. Example in python

For simplicity, let’s say we’re using a t-test (common for comparing means). Let’s dive into Python:
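A minimal sketch of such a comparison, using only the standard library, might look like the following. The healing times are simulated (made-up means of 2 and 3 hours with a spread of 0.8 hours), and the helper `welch_t` is written for this illustration. Because each group has 50 people, the p-value is approximated with the standard normal distribution; a library routine such as SciPy's `scipy.stats.ttest_ind` would use the exact t-distribution instead.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean, variance

random.seed(0)

# Simulated healing times in hours (made-up data, 50 people per group).
drug_group = [random.gauss(2.0, 0.8) for _ in range(50)]
placebo_group = [random.gauss(3.0, 0.8) for _ in range(50)]

def welch_t(a, b):
    """Welch's t statistic: difference in means divided by its standard error."""
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (fmean(a) - fmean(b)) / se

t_stat = welch_t(drug_group, placebo_group)

# Two-sided p-value via the normal approximation (reasonable for n = 50 per group).
p_value = 2 * (1 - NormalDist().cdf(abs(t_stat)))

alpha = 0.05
print(f"t = {t_stat:.2f}, approximate p-value = {p_value:.2g}")
if p_value <= alpha:
    print("Statistically significant: the drug seems to have an effect.")
else:
    print("Not statistically significant: no clear evidence of an effect.")
```

A negative test statistic here means the Drug Group healed faster on average than the Placebo Group.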

Making a Decision: If the p-value is below 0.05, we’d say, “The results are statistically significant! The drug seems to have an effect!” If not, we’d say, “Looks like the drug isn’t as miraculous as we thought.”

5. Conclusion

Hypothesis testing is an indispensable tool in data science, allowing us to make data-driven decisions with confidence. By understanding its principles, conducting tests properly, and considering real-world applications, you can harness the power of hypothesis testing to unlock valuable insights from your data.

Statistical Inference via Data Science

Chapter 9 Hypothesis Testing

Now that we’ve studied confidence intervals in Chapter 8, let’s study another commonly used method for statistical inference: hypothesis testing. Hypothesis tests allow us to take a sample of data from a population and infer about the plausibility of competing hypotheses. For example, in the upcoming “promotions” activity in Section 9.1, you’ll study the data collected from a psychology study in the 1970s to investigate whether gender-based discrimination in promotion rates existed in the banking industry at the time of the study.

The good news is we’ve already covered many of the necessary concepts to understand hypothesis testing in Chapters 7 and 8. We will expand further on these ideas here and also provide a general framework for understanding hypothesis tests. By understanding this general framework, you’ll be able to adapt it to many different scenarios.

The same can be said for confidence intervals. There was one general framework that applies to all confidence intervals and the infer package was designed around this framework. While the specifics may change slightly for different types of confidence intervals, the general framework stays the same.

We believe that this approach is much better for long-term learning than focusing on specific details for specific confidence intervals using theory-based approaches. As you’ll now see, we prefer this general framework for hypothesis tests as well.

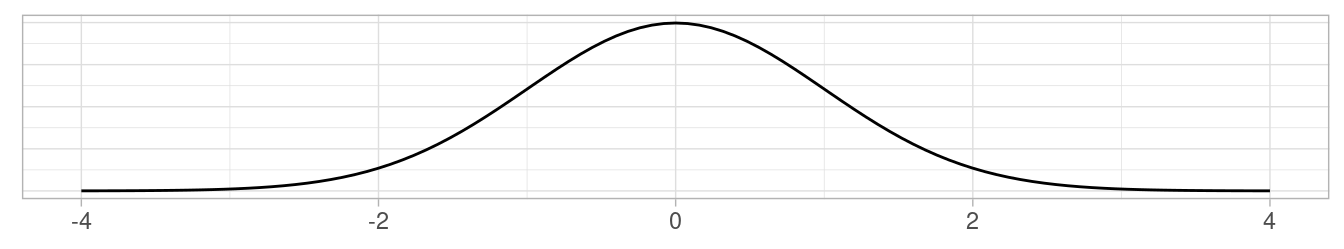

If you’d like more practice or you’re curious to see how this framework applies to different scenarios, you can find fully worked-out examples for many common hypothesis tests and their corresponding confidence intervals in Appendix B. We recommend that you carefully review these examples as they also cover how the general frameworks apply to traditional theory-based methods like the \(t\)-test and normal-theory confidence intervals. You’ll see there that these traditional methods are just approximations for the computer-based methods we’ve been focusing on. However, they also require conditions to be met for their results to be valid. Computer-based methods using randomization, simulation, and bootstrapping have far fewer restrictions. Furthermore, they help develop your computational thinking, which is one big reason they are emphasized throughout this book.

Needed packages

Let’s load all the packages needed for this chapter (this assumes you’ve already installed them). Recall from our discussion in Section 4.4 that loading the tidyverse package by running library(tidyverse) loads the following commonly used data science packages all at once:

- ggplot2 for data visualization

- dplyr for data wrangling

- tidyr for converting data to “tidy” format

- readr for importing spreadsheet data into R

- As well as the more advanced purrr, tibble, stringr, and forcats packages

If needed, read Section 1.3 for information on how to install and load R packages.

9.1 Promotions activity

Let’s start with an activity studying the effect of gender on promotions at a bank.

9.1.1 Does gender affect promotions at a bank?

Say you are working at a bank in the 1970s and you are submitting your résumé to apply for a promotion. Will your gender affect your chances of getting promoted? To answer this question, we’ll focus on data from a study published in the Journal of Applied Psychology in 1974. This data is also used in the OpenIntro series of statistics textbooks.

To begin the study, 48 bank supervisors were asked to assume the role of a hypothetical director of a bank with multiple branches. Every one of the bank supervisors was given a résumé and asked whether or not the candidate on the résumé was fit to be promoted to a new position in one of their branches.

However, these 48 résumés were identical in all respects except one: the name of the applicant at the top of the résumé. Of the supervisors, 24 were randomly given résumés with stereotypically “male” names, while 24 of the supervisors were randomly given résumés with stereotypically “female” names. Since only (binary) gender varied from résumé to résumé, researchers could isolate the effect of this variable in promotion rates.

While many people today (including us, the authors) disagree with such binary views of gender, it is important to remember that this study was conducted at a time where more nuanced views of gender were not as prevalent. Despite this imperfection, we decided to still use this example as we feel it presents ideas still relevant today about how we could study discrimination in the workplace.

The moderndive package contains the data on the 48 applicants in the promotions data frame. Let’s explore this data by looking at six randomly selected rows:
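One way to draw such a preview is sketched below, assuming the moderndive and dplyr packages are installed. The seed value and the name `promotions_preview` are our own choices for illustration, so the six rows you see may differ from any table shown elsewhere.

```r
library(moderndive)  # provides the promotions data frame
library(dplyr)

set.seed(9)  # arbitrary seed (an assumption), only to make the draw repeatable

# Pick six rows at random and sort them by id for easier reading
promotions_preview <- promotions %>%
  slice_sample(n = 6) %>%
  arrange(id)
promotions_preview
```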

The variable id acts as an identification variable for all 48 rows, the decision variable indicates whether the applicant was selected for promotion or not, while the gender variable indicates the gender of the name used on the résumé. Recall that this data does not pertain to 24 actual men and 24 actual women, but rather 48 identical résumés of which 24 were assigned stereotypically “male” names and 24 were assigned stereotypically “female” names.

Let’s perform an exploratory data analysis of the relationship between the two categorical variables decision and gender . Recall that we saw in Subsection 2.8.3 that one way we can visualize such a relationship is by using a stacked barplot.
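A stacked barplot of this kind could be produced along the following lines. This is a minimal sketch assuming ggplot2 and moderndive are installed; the axis label and the object name `promotions_barplot` are illustrative choices.

```r
library(moderndive)  # provides the promotions data frame
library(ggplot2)

# One bar per gender, with each bar split (stacked) by promotion decision
promotions_barplot <- ggplot(promotions, aes(x = gender, fill = decision)) +
  geom_bar() +
  labs(x = "Gender of name on résumé")
promotions_barplot
```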

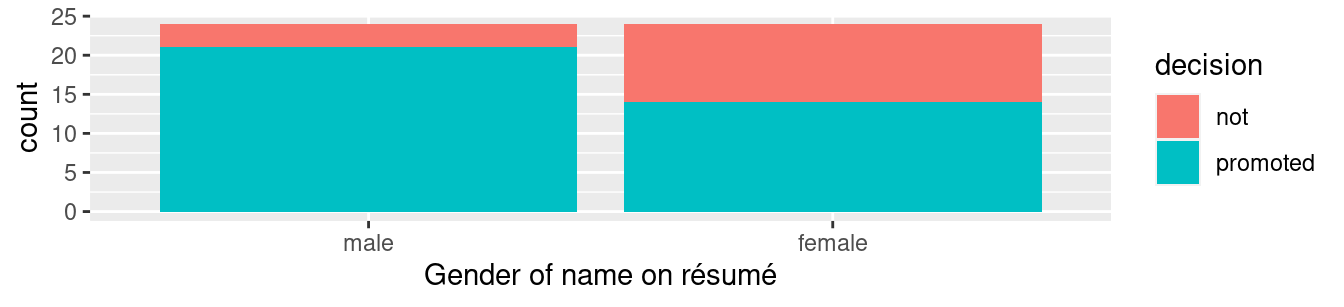

FIGURE 9.1: Barplot relating gender to promotion decision.

Observe in Figure 9.1 that it appears that résumés with female names were much less likely to be accepted for promotion. Let’s quantify these promotion rates by computing the proportion of résumés accepted for promotion for each group using the dplyr package for data wrangling. Note the use of the tally() function here which is a shortcut for summarize(n = n()) to get counts.
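A sketch of this computation, assuming moderndive and dplyr are installed, is given below. The object name `promotion_counts` and the extra `prop` column are our own additions for illustration.

```r
library(moderndive)  # provides the promotions data frame
library(dplyr)

# Count résumés for each combination of gender and decision
promotion_counts <- promotions %>%
  group_by(gender, decision) %>%
  tally()
promotion_counts

# tally() leaves the result grouped by gender, so sum(n) is the
# number of résumés within each gender
promotion_counts %>%
  mutate(prop = n / sum(n)) %>%
  filter(decision == "promoted")
```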

So of the 24 résumés with male names, 21 were selected for promotion, for a proportion of 21/24 = 0.875 = 87.5%. On the other hand, of the 24 résumés with female names, 14 were selected for promotion, for a proportion of 14/24 = 0.583 = 58.3%. Comparing these two rates of promotion, it appears that résumés with male names were selected for promotion at a rate 0.875 - 0.583 = 0.292, or 29.2 percentage points, higher than résumés with female names. This is suggestive of an advantage for résumés with a male name on them.

The question is, however, does this provide conclusive evidence that there is gender discrimination in promotions at banks? Could a difference in promotion rates of 29.2% still occur by chance, even in a hypothetical world where no gender-based discrimination existed? In other words, what is the role of sampling variation in this hypothesized world? To answer this question, we’ll again rely on a computer to run simulations .

9.1.2 Shuffling once

First, try to imagine a hypothetical universe where no gender discrimination in promotions existed. In such a hypothetical universe, the gender of an applicant would have no bearing on their chances of promotion. Bringing things back to our promotions data frame, the gender variable would thus be an irrelevant label. If these gender labels were irrelevant, then we could randomly reassign them by “shuffling” them to no consequence!

To illustrate this idea, let’s narrow our focus to 6 arbitrarily chosen résumés of the 48 in Table 9.1 . The decision column shows that 3 résumés resulted in promotion while 3 didn’t. The gender column shows what the original gender of the résumé name was.

However, in our hypothesized universe of no gender discrimination, gender is irrelevant and thus it is of no consequence to randomly “shuffle” the values of gender . The shuffled_gender column shows one such possible random shuffling. Observe in the fourth column how the number of male and female names remains the same at 3 each, but they are now listed in a different order.
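The idea of shuffling a label column can be sketched in a few lines of R. The snippet below is an illustration under our own assumptions: it draws six résumés at random (so they will generally not be the same six discussed here, nor necessarily split 3 and 3), and the seed and the name `six_resumes` are arbitrary.

```r
library(moderndive)  # provides the promotions data frame
library(dplyr)

set.seed(6)  # arbitrary seed (an assumption), only for reproducibility

# Take six résumés and shuffle their gender labels without replacement;
# the decision column is left untouched
six_resumes <- promotions %>%
  slice_sample(n = 6) %>%
  mutate(shuffled_gender = sample(gender))
six_resumes
```

Because `sample()` without further arguments only reorders its input, the number of “male” and “female” labels in `shuffled_gender` matches the number in `gender`.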

Again, such random shuffling of the gender label only makes sense in our hypothesized universe of no gender discrimination. How could we extend this shuffling of the gender variable to all 48 résumés by hand? One way would be by using a standard deck of 52 playing cards, which we display in Figure 9.2.

FIGURE 9.2: Standard deck of 52 playing cards.

Since half the cards are red (diamonds and hearts) and the other half are black (spades and clubs), by removing two red cards and two black cards, we would end up with 24 red cards and 24 black cards. After shuffling these 48 cards as seen in Figure 9.3 , we can flip the cards over one-by-one, assigning “male” for each red card and “female” for each black card.

FIGURE 9.3: Shuffling a deck of cards.

We’ve saved one such shuffling in the promotions_shuffled data frame of the moderndive package. If you compare the original promotions and the shuffled promotions_shuffled data frames, you’ll see that while the decision variable is identical, the gender variable has changed.

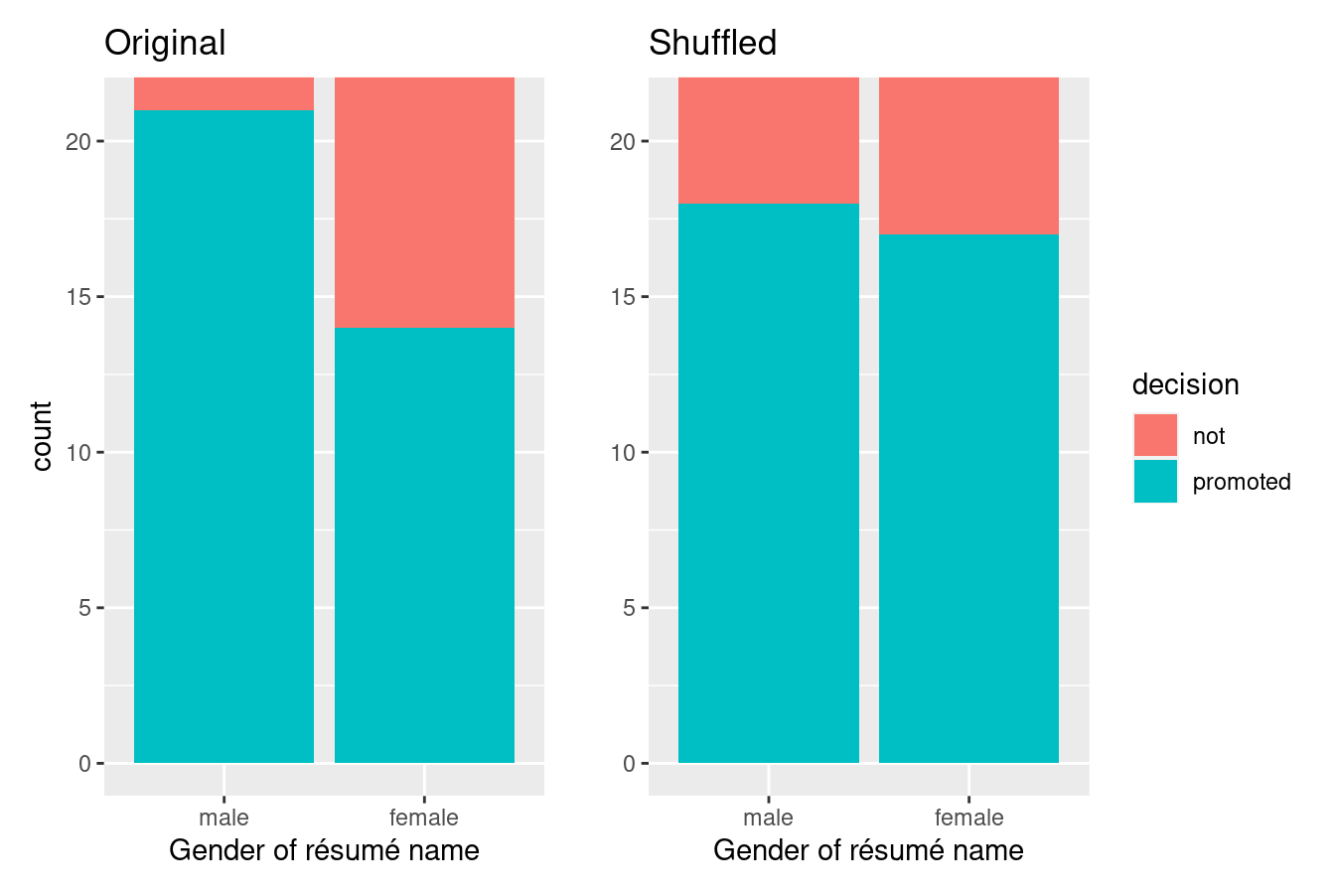

Let’s repeat the same exploratory data analysis we did for the original promotions data on our promotions_shuffled data frame. Let’s create a barplot visualizing the relationship between decision and the new shuffled gender variable and compare this to the original unshuffled version in Figure 9.4 .

FIGURE 9.4: Barplots of relationship of promotion with gender (left) and shuffled gender (right).

It appears the difference in “male names” versus “female names” promotion rates is now different. Compared to the original data in the left barplot, the new “shuffled” data in the right barplot has promotion rates that are much more similar.

Let’s also compute the proportion of résumés accepted for promotion for each group:
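A sketch of this step, assuming moderndive and dplyr are installed (the name `shuffled_counts` is an illustrative choice):

```r
library(moderndive)  # provides the promotions_shuffled data frame
library(dplyr)

# Same counting as for the original data, now on the shuffled gender labels
shuffled_counts <- promotions_shuffled %>%
  group_by(gender, decision) %>%
  tally()
shuffled_counts
```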

So in this hypothetical universe of no discrimination, \(18/24 = 0.75 = 75\%\) of “male” résumés were selected for promotion. On the other hand, \(17/24 = 0.708 = 70.8\%\) of “female” résumés were selected for promotion.

Let’s next compare these two values. It appears that résumés with stereotypically male names were selected for promotion at a rate that was \(0.75 - 0.708 = 0.042 = 4.2\%\) different than résumés with stereotypically female names.

Observe how this difference in rates is not the same as the difference in rates of 0.292 = 29.2% we originally observed. This is once again due to sampling variation . How can we better understand the effect of this sampling variation? By repeating this shuffling several times!

9.1.3 Shuffling 16 times

We recruited 16 groups of our friends to repeat this shuffling exercise. They recorded these values in a shared spreadsheet ; we display a snapshot of the first 10 rows and 5 columns in Figure 9.5 .

FIGURE 9.5: Snapshot of shared spreadsheet of shuffling results (m for male, f for female).

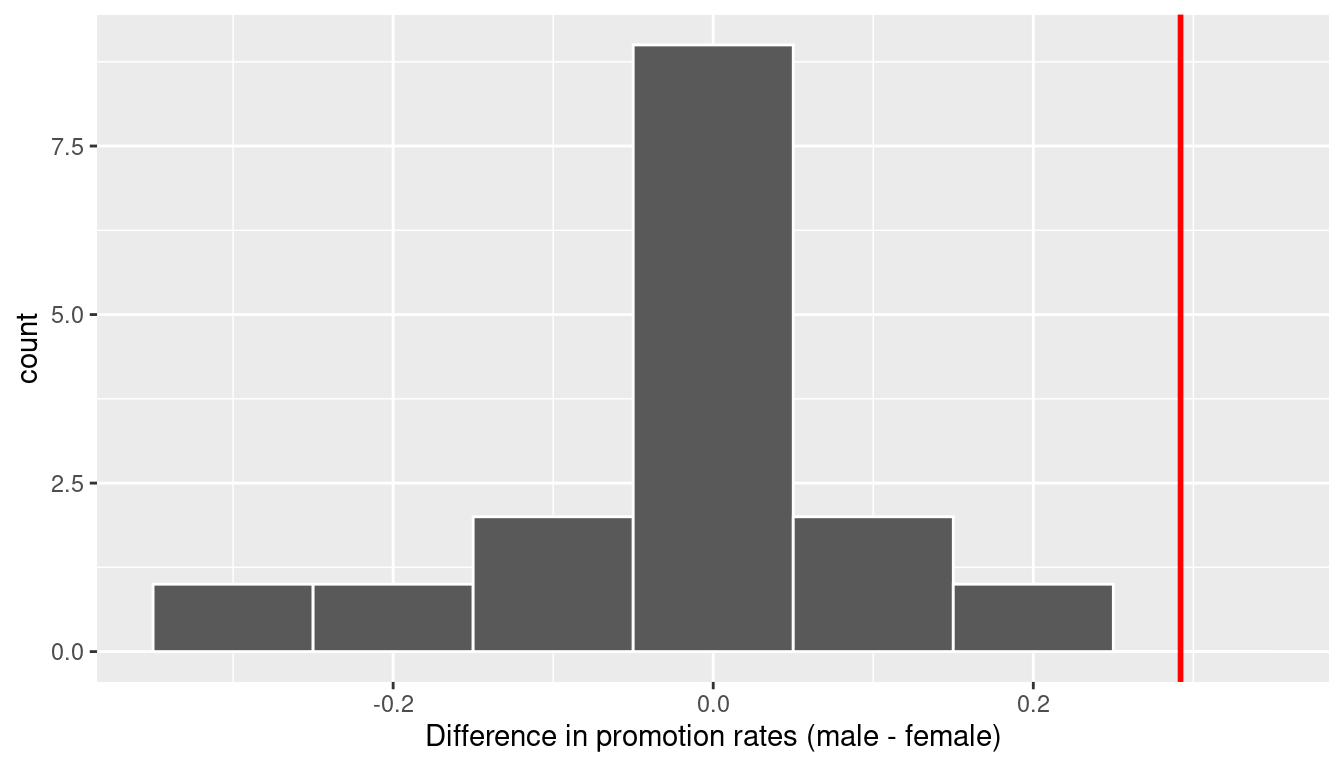

For each of these 16 columns of shuffles , we computed the difference in promotion rates, and in Figure 9.6 we display their distribution in a histogram. We also mark the observed difference in promotion rate that occurred in real life of 0.292 = 29.2% with a dark line.

FIGURE 9.6: Distribution of shuffled differences in promotions.

Before we discuss the distribution of the histogram, we emphasize the key thing to remember: this histogram represents differences in promotion rates that one would observe in our hypothesized universe of no gender discrimination.

Observe first that the histogram is roughly centered at 0. Saying that the difference in promotion rates is 0 is equivalent to saying that both genders had the same promotion rate. In other words, the center of these 16 values is consistent with what we would expect in our hypothesized universe of no gender discrimination.

However, while the values are centered at 0, there is variation about 0. This is because even in a hypothesized universe of no gender discrimination, you will still likely observe small differences in promotion rates because of chance sampling variation . Looking at the histogram in Figure 9.6 , such differences could even be as extreme as -0.292 or 0.208.

Turning our attention to what we observed in real life: the difference of 0.292 = 29.2% is marked with a vertical dark line. Ask yourself: in a hypothesized world of no gender discrimination, how likely would it be that we observe this difference? While opinions here may differ, in our opinion not often! Now ask yourself: what do these results say about our hypothesized universe of no gender discrimination?
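The card-shuffling exercise can also be mimicked on a computer. The base R sketch below is not the friends' actual spreadsheet: it rebuilds the 48 résumés from the counts reported earlier (21 of 24 “male” and 14 of 24 “female” résumés promoted) and performs 16 fresh random shuffles, so its 16 differences will not match those in Figure 9.6. The seed and the helper name `diff_in_props` are assumptions made for illustration.

```r
set.seed(16)  # arbitrary seed (an assumption), only for reproducibility

# Rebuild the 48 résumés from the counts reported in the text
decision <- c(rep("promoted", 21), rep("not", 3),    # résumés with male names
              rep("promoted", 14), rep("not", 10))   # résumés with female names
gender <- rep(c("male", "female"), each = 24)

# Difference in promotion proportions (male minus female)
diff_in_props <- function(decision, gender) {
  mean(decision[gender == "male"] == "promoted") -
    mean(decision[gender == "female"] == "promoted")
}

# Observed difference: 21/24 - 14/24
observed <- diff_in_props(decision, gender)
observed

# 16 shuffles of the gender labels, one per group of friends
shuffled_diffs <- replicate(16, diff_in_props(decision, sample(gender)))
round(shuffled_diffs, 3)
```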

9.1.4 What did we just do?

What we just demonstrated in this activity is the statistical procedure known as hypothesis testing using a permutation test . The term “permutation” is the mathematical term for “shuffling”: taking a series of values and reordering them randomly, as you did with the playing cards.

In fact, permutations are another form of resampling , like the bootstrap method you performed in Chapter 8 . While the bootstrap method involves resampling with replacement, permutation methods involve resampling without replacement.

Think of our exercise involving the slips of paper representing pennies and the hat in Section 8.1 : after sampling a penny, you put it back in the hat. Now think of our deck of cards. After drawing a card, you laid it out in front of you, recorded the color, and then you did not put it back in the deck.
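The contrast between the two kinds of resampling can be seen directly with base R's `sample()`. This is a small sketch under our own assumptions (the seed and variable names are arbitrary), using 24 “male” and 24 “female” labels as in the card deck.

```r
set.seed(8)  # arbitrary seed (an assumption), only for reproducibility

gender_labels <- rep(c("male", "female"), each = 24)

# Permutation: every label is used exactly once, only the order changes
permuted <- sample(gender_labels, replace = FALSE)

# Bootstrap: labels are drawn with replacement, so the counts can drift
# away from 24 and 24
bootstrapped <- sample(gender_labels, replace = TRUE)

table(permuted)
table(bootstrapped)
```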

In our previous example, we tested the validity of the hypothesized universe of no gender discrimination. The evidence contained in our observed sample of 48 résumés was somewhat inconsistent with our hypothesized universe. Thus, we would be inclined to reject this hypothesized universe and declare that the evidence suggests there is gender discrimination.

Recall our case study on whether yawning is contagious from Section 8.6 . The previous example involves inference about an unknown difference of population proportions as well. This time, it will be \(p_{m} - p_{f}\) , where \(p_{m}\) is the population proportion of résumés with male names being recommended for promotion and \(p_{f}\) is the equivalent for résumés with female names. Recall that this is one of the scenarios for inference we’ve seen so far in Table 9.2 .

So, based on our sample of \(n_m\) = 24 “male” applicants and \(n_f\) = 24 “female” applicants, the point estimate for \(p_{m} - p_{f}\) is the difference in sample proportions \(\widehat{p}_{m} -\widehat{p}_{f}\) = 0.875 - 0.583 = 0.292 = 29.2%. This difference in favor of “male” résumés of 0.292 is greater than 0, suggesting discrimination in favor of men.

However, the question we asked ourselves was “is this difference meaningfully greater than 0?”. In other words, is that difference indicative of true discrimination, or can we just attribute it to sampling variation ? Hypothesis testing allows us to make such distinctions.

9.2 Understanding hypothesis tests

Much like the terminology, notation, and definitions relating to sampling you saw in Section 7.3, there is a lot of terminology, notation, and definitions related to hypothesis testing as well. Learning these may seem like a very daunting task at first. However, with practice, practice, and more practice, anyone can master them.

First, a hypothesis is a statement about the value of an unknown population parameter. In our résumé activity, our population parameter of interest is the difference in population proportions \(p_{m} - p_{f}\) . Hypothesis tests can involve any of the population parameters in Table 7.5 of the five inference scenarios we’ll cover in this book and also more advanced types we won’t cover here.

Second, a hypothesis test consists of a test between two competing hypotheses: (1) a null hypothesis \(H_0\) (pronounced “H-naught”) versus (2) an alternative hypothesis \(H_A\) (also denoted \(H_1\) ).

Generally the null hypothesis is a claim that there is “no effect” or “no difference of interest.” In many cases, the null hypothesis represents the status quo or a situation that nothing interesting is happening. Furthermore, generally the alternative hypothesis is the claim the experimenter or researcher wants to establish or find evidence to support. It is viewed as a “challenger” hypothesis to the null hypothesis \(H_0\) . In our résumé activity, an appropriate hypothesis test would be:

\[ \begin{aligned} H_0 &: \text{men and women are promoted at the same rate}\\ \text{vs } H_A &: \text{men are promoted at a higher rate than women} \end{aligned} \]

Note some of the choices we have made. First, we set the null hypothesis \(H_0\) to be that there is no difference in promotion rate and the “challenger” alternative hypothesis \(H_A\) to be that there is a difference. While it would not be wrong in principle to reverse the two, it is a convention in statistical inference that the null hypothesis is set to reflect a “null” situation where “nothing is going on.” As we discussed earlier, in this case, \(H_0\) corresponds to there being no difference in promotion rates. Furthermore, we set \(H_A\) to be that men are promoted at a higher rate, a subjective choice reflecting a prior suspicion we have that this is the case. We call such alternative hypotheses one-sided alternatives . If someone else however does not share such suspicions and only wants to investigate that there is a difference, whether higher or lower, they would set what is known as a two-sided alternative .

We can re-express the formulation of our hypothesis test using the mathematical notation for our population parameter of interest, the difference in population proportions \(p_{m} - p_{f}\) :

\[ \begin{aligned} H_0 &: p_{m} - p_{f} = 0\\ \text{vs } H_A&: p_{m} - p_{f} > 0 \end{aligned} \]

Observe how the alternative hypothesis \(H_A\) is one-sided with \(p_{m} - p_{f} > 0\) . Had we opted for a two-sided alternative, we would have set \(p_{m} - p_{f} \neq 0\) . To keep things simple for now, we’ll stick with the simpler one-sided alternative. We’ll present an example of a two-sided alternative in Section 9.5 .

Third, a test statistic is a point estimate/sample statistic formula used for hypothesis testing. Note that a sample statistic is merely a summary statistic based on a sample of observations. Recall we saw in Section 3.3 that a summary statistic takes in many values and returns only one. Here, the samples would be the \(n_m\) = 24 résumés with male names and the \(n_f\) = 24 résumés with female names. Hence, the point estimate of interest is the difference in sample proportions \(\widehat{p}_{m} - \widehat{p}_{f}\) .

Fourth, the observed test statistic is the value of the test statistic that we observed in real life. In our case, we computed this value using the data saved in the promotions data frame. It was the observed difference of \(\widehat{p}_{m} -\widehat{p}_{f} = 0.875 - 0.583 = 0.292 = 29.2\%\) in favor of résumés with male names.

Fifth, the null distribution is the sampling distribution of the test statistic assuming the null hypothesis \(H_0\) is true . Ooof! That’s a long one! Let’s unpack it slowly. The key to understanding the null distribution is that the null hypothesis \(H_0\) is assumed to be true. We’re not saying that \(H_0\) is true at this point, we’re only assuming it to be true for hypothesis testing purposes. In our case, this corresponds to our hypothesized universe of no gender discrimination in promotion rates. Assuming the null hypothesis \(H_0\) , also stated as “Under \(H_0\) ,” how does the test statistic vary due to sampling variation? In our case, how will the difference in sample proportions \(\widehat{p}_{m} - \widehat{p}_{f}\) vary due to sampling under \(H_0\) ? Recall from Subsection 7.3.2 that distributions displaying how point estimates vary due to sampling variation are called sampling distributions . The only additional thing to keep in mind about null distributions is that they are sampling distributions assuming the null hypothesis \(H_0\) is true .

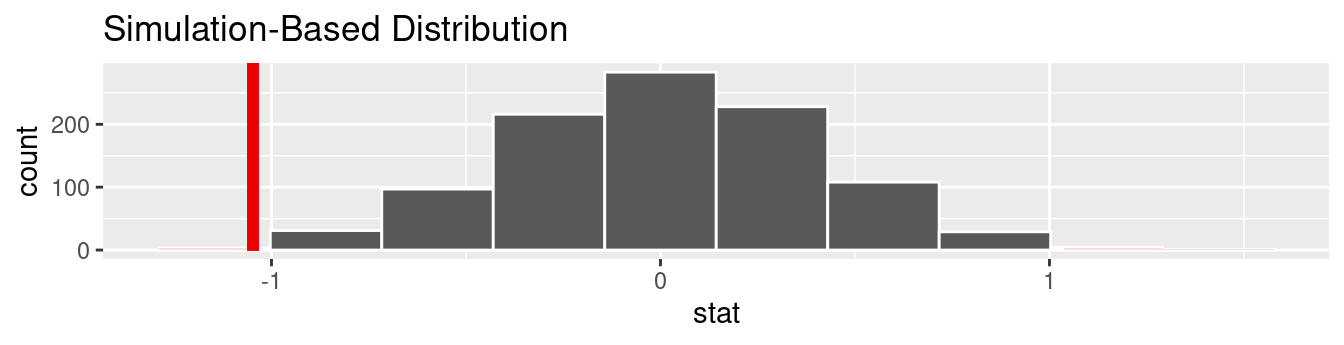

In our case, we previously visualized a null distribution in Figure 9.6 , which we re-display in Figure 9.7 using our new notation and terminology. It is the distribution of the 16 differences in sample proportions our friends computed assuming a hypothetical universe of no gender discrimination. We also mark the value of the observed test statistic of 0.292 with a vertical line.

FIGURE 9.7: Null distribution and observed test statistic.

Sixth, the \(p\) -value is the probability of obtaining a test statistic just as extreme or more extreme than the observed test statistic assuming the null hypothesis \(H_0\) is true . Double ooof! Let’s unpack this slowly as well. You can think of the \(p\) -value as a quantification of “surprise”: assuming \(H_0\) is true, how surprised are we with what we observed? Or in our case, in our hypothesized universe of no gender discrimination, how surprised are we that we observed a difference in promotion rates of 0.292 from our collected samples assuming \(H_0\) is true? Very surprised? Somewhat surprised?

The \(p\)-value quantifies this surprise as a probability. In the case of our 16 differences in sample proportions in Figure 9.7, it is the proportion of them that were as “extreme” as, or more “extreme” than, the observed result. Here, extreme is defined in terms of the alternative hypothesis \(H_A\) that “male” applicants are promoted at a higher rate than “female” applicants. In other words, how often was the difference in favor of men at least as pronounced as \(0.875 - 0.583 = 0.292 = 29.2\%\)?

In this case, 0 times out of 16, we obtained a difference in proportion greater than or equal to the observed difference of 0.292 = 29.2%. A very rare (in fact, not occurring) outcome! Given the rarity of such a pronounced difference in promotion rates in our hypothesized universe of no gender discrimination, we’re inclined to reject our hypothesized universe. Instead, we favor the hypothesis stating there is discrimination in favor of the “male” applicants. In other words, we reject \(H_0\) in favor of \(H_A\) .

Seventh and lastly, in many hypothesis testing procedures, it is commonly recommended to set the significance level of the test beforehand. It is denoted by the Greek letter \(\alpha\) (pronounced “alpha”). This value acts as a cutoff on the \(p\) -value, where if the \(p\) -value falls below \(\alpha\) , we would “reject the null hypothesis \(H_0\) .”

Alternatively, if the \(p\) -value does not fall below \(\alpha\) , we would “fail to reject \(H_0\) .” Note the latter statement is not quite the same as saying we “accept \(H_0\) .” This distinction is rather subtle and not immediately obvious. So we’ll revisit it later in Section 9.4 .

While different fields tend to use different values of \(\alpha\) , some commonly used values for \(\alpha\) are 0.1, 0.01, and 0.05; with 0.05 being the choice people often make without putting much thought into it. We’ll talk more about \(\alpha\) significance levels in Section 9.4 , but first let’s fully conduct the hypothesis test corresponding to our promotions activity using the infer package.

9.3 Conducting hypothesis tests

In Section 8.4 , we showed you how to construct confidence intervals. We first illustrated how to do this using dplyr data wrangling verbs and the rep_sample_n() function from Subsection 7.2.3 which we used as a virtual shovel. In particular, we constructed confidence intervals by resampling with replacement by setting the replace = TRUE argument to the rep_sample_n() function.

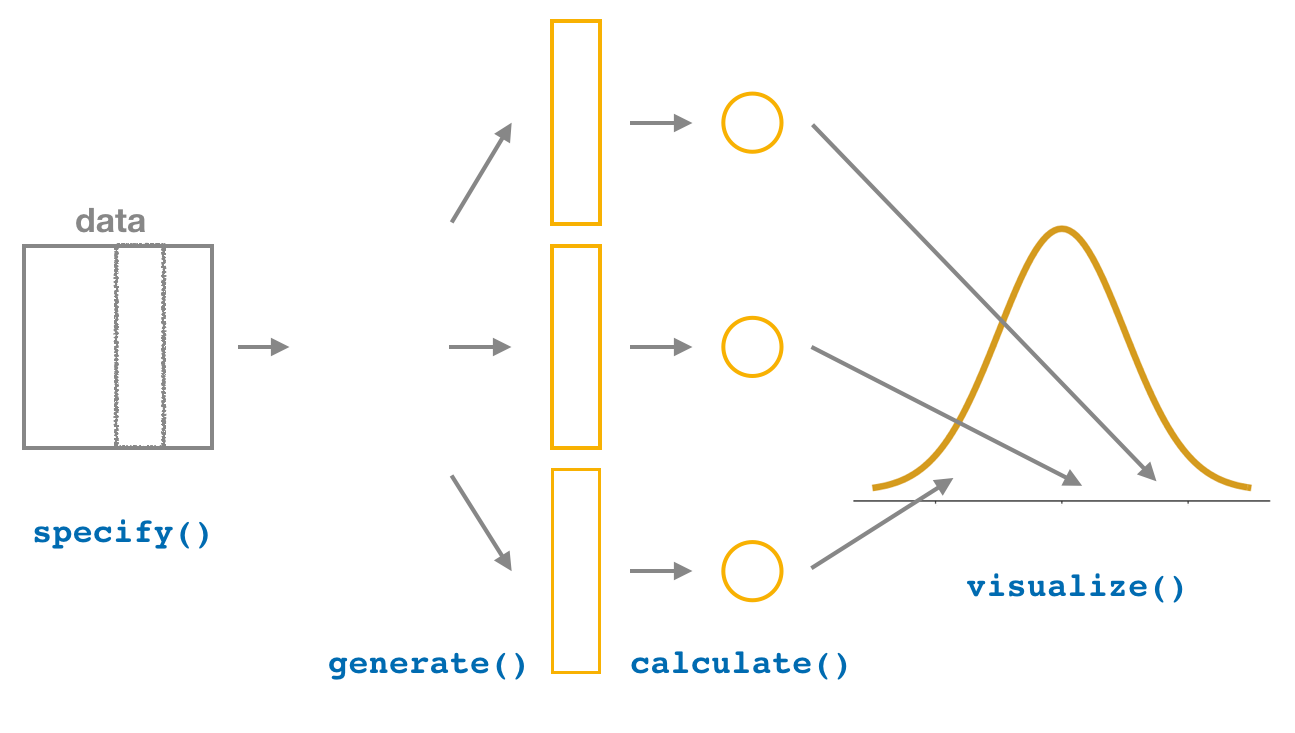

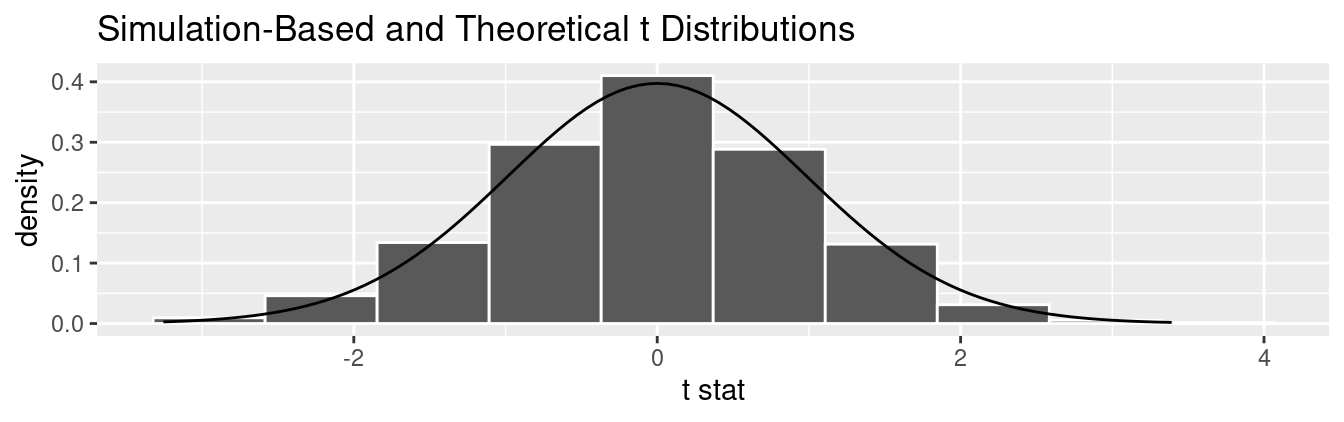

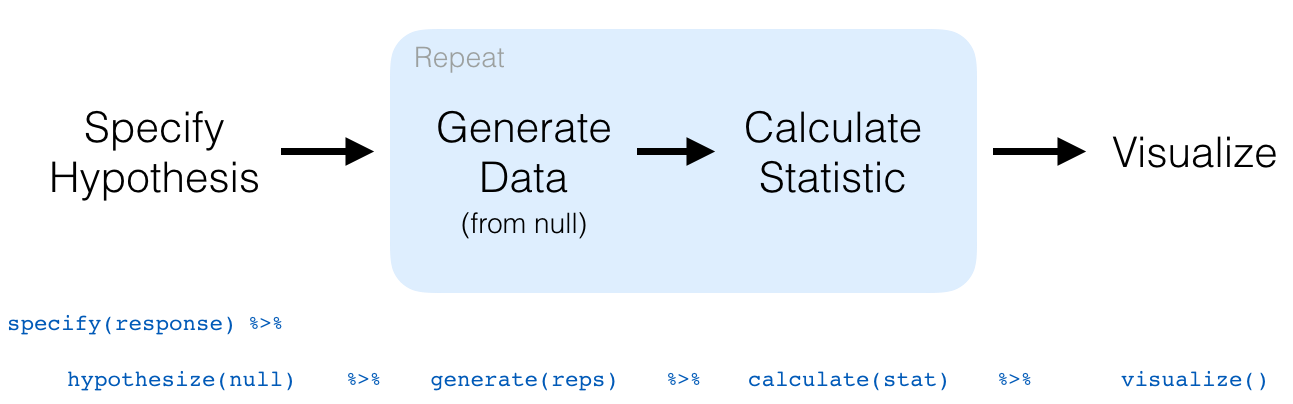

We then showed you how to perform the same task using the infer package workflow. While both workflows resulted in the same bootstrap distribution from which we can construct confidence intervals, the infer package workflow emphasizes each of the steps in the overall process in Figure 9.8. It does so using functions that are intuitively named with verbs:

- specify() the variables of interest in your data frame.

- generate() replicates of bootstrap resamples with replacement.

- calculate() the summary statistic of interest.

- visualize() the resulting bootstrap distribution and confidence interval.

FIGURE 9.8: Confidence intervals with the infer package.

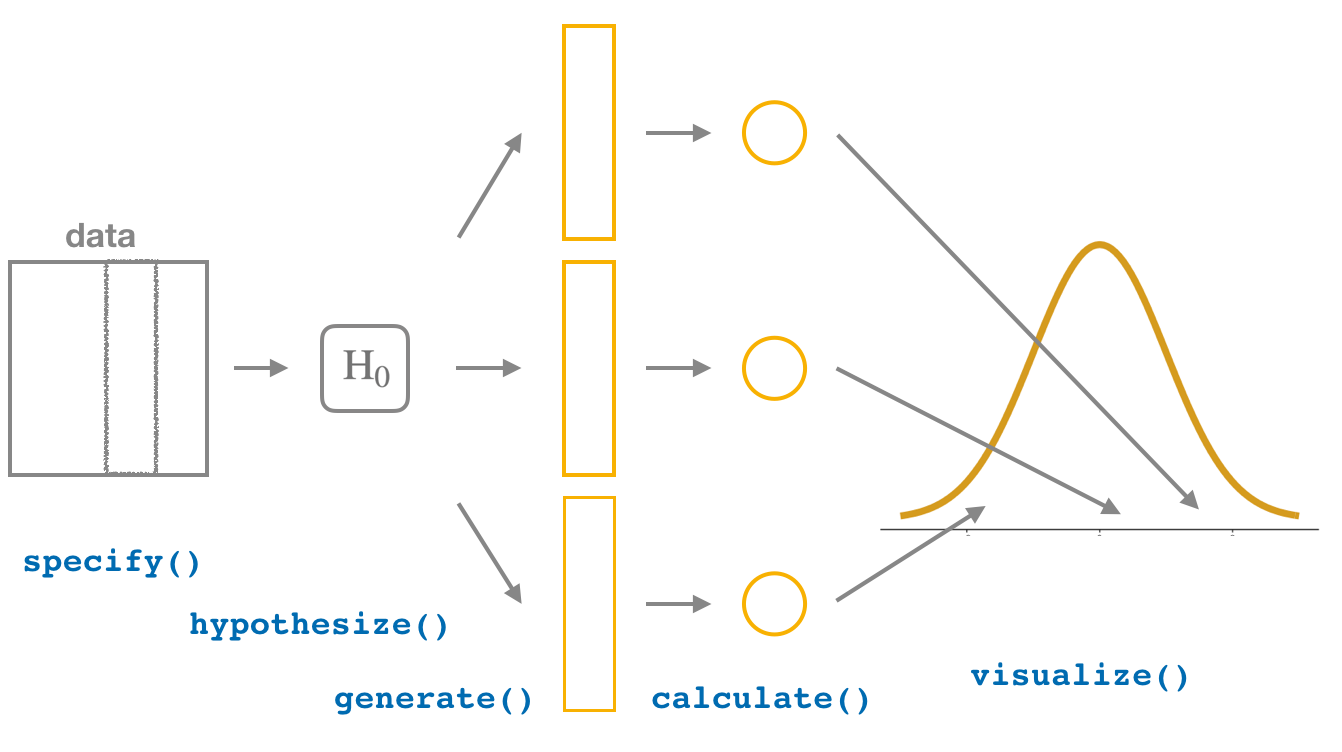

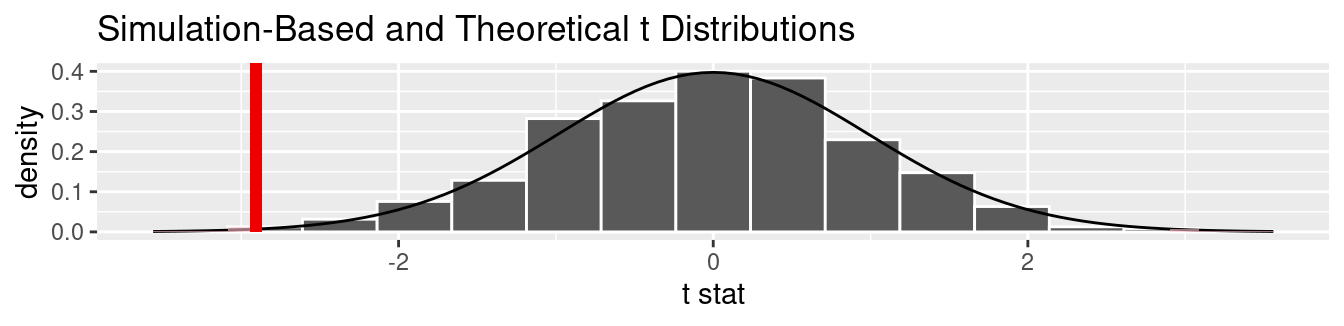

In this section, we’ll now show you how to seamlessly modify the previously seen infer code for constructing confidence intervals to conduct hypothesis tests. You’ll notice that the basic outline of the workflow is almost identical, except for an additional hypothesize() step between the specify() and generate() steps, as can be seen in Figure 9.9 .

FIGURE 9.9: Hypothesis testing with the infer package.

Furthermore, we’ll use a pre-specified significance level \(\alpha\) = 0.05 for this hypothesis test. Let’s leave discussion on the choice of this \(\alpha\) value until later on in Section 9.4 .

9.3.1 infer package workflow

1. specify variables.

Recall that we use the specify() verb to specify the response variable and, if needed, any explanatory variables for our study. In this case, since we are interested in any potential effects of gender on promotion decisions, we set decision as the response variable and gender as the explanatory variable. We do so using formula = response ~ explanatory where response is the name of the response variable in the data frame and explanatory is the name of the explanatory variable. So in our case it is decision ~ gender .

Furthermore, since we are interested in the proportion of résumés "promoted" , and not the proportion of résumés not promoted, we set the argument success to "promoted" .
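A sketch of this step, assuming the moderndive, infer, and dplyr packages are installed (the name `promotions_specified` is our own choice for illustration):

```r
library(moderndive)  # provides the promotions data frame
library(infer)
library(dplyr)       # loaded for the %>% pipe

# Declare decision as the response and gender as the explanatory variable,
# treating "promoted" as the success level of the response
promotions_specified <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted")
promotions_specified
```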

Again, notice how the promotions data itself doesn’t change, but the Response: decision (factor) and Explanatory: gender (factor) meta-data do. This is similar to how the group_by() verb from dplyr doesn’t change the data, but only adds “grouping” meta-data, as we saw in Section 3.4 .

2. hypothesize the null

In order to conduct hypothesis tests using the infer workflow, we need a new step not present for confidence intervals: hypothesize() . Recall from Section 9.2 that our hypothesis test was

\[ \begin{aligned} H_0 &: p_{m} - p_{f} = 0\\ \text{vs. } H_A&: p_{m} - p_{f} > 0 \end{aligned} \]

In other words, the null hypothesis \(H_0\) corresponding to our “hypothesized universe” stated that there was no difference in promotion rates between résumés with male names and résumés with female names. We set this null hypothesis \(H_0\) in our infer workflow using the null argument of the hypothesize() function to either:

- "point" for hypotheses involving a single sample or

- "independence" for hypotheses involving two samples.

In our case, since we have two samples (the résumés with “male” and “female” names), we set null = "independence" .
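Continuing the pipeline, a sketch of the hypothesize() step might look as follows, assuming the moderndive, infer, and dplyr packages are installed (the name `promotions_hypothesized` is an illustrative choice):

```r
library(moderndive)  # provides the promotions data frame
library(infer)
library(dplyr)       # loaded for the %>% pipe

# Add the null hypothesis of independence between decision and gender
promotions_hypothesized <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  hypothesize(null = "independence")
promotions_hypothesized
```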

Again, the data has not changed yet. This will occur at the upcoming generate() step; we’re merely setting meta-data for now.

Where do the terms "point" and "independence" come from? These are two technical statistical terms. The term “point” relates to the fact that for a single group of observations, you will test the value of a single point. Going back to the pennies example from Chapter 8, say we wanted to test if the mean year of all US pennies was equal to 1993 or not. We would be testing the value of a “point” \(\mu\), the mean year of all US pennies, as follows:

\[ \begin{aligned} H_0 &: \mu = 1993\\ \text{vs } H_A&: \mu \neq 1993 \end{aligned} \]

The term “independence” relates to the fact that for two groups of observations, you are testing whether or not the response variable is independent of the explanatory variable that assigns the groups. In our case, we are testing whether the decision response variable is “independent” of the explanatory variable gender that assigns each résumé to either of the two groups.

3. generate replicates

After we hypothesize() the null hypothesis, we generate() replicates of “shuffled” datasets assuming the null hypothesis is true. We do this by repeating the shuffling exercise you performed in Section 9.1 several times. Instead of merely doing it 16 times as our groups of friends did, let’s use the computer to repeat this 1000 times by setting reps = 1000 in the generate() function. However, unlike for confidence intervals where we generated replicates using type = "bootstrap" resampling with replacement, we’ll now perform shuffles/permutations by setting type = "permute" . Recall that shuffles/permutations are a kind of resampling, but unlike the bootstrap method, they involve resampling without replacement.
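A sketch of the generate() step, assuming the moderndive, infer, and dplyr packages are installed. The seed is an arbitrary assumption; the data frame name `promotions_generate` follows the name used in the surrounding text.

```r
library(moderndive)  # provides the promotions data frame
library(infer)
library(dplyr)       # loaded for the %>% pipe

set.seed(2020)  # arbitrary seed (an assumption), only for reproducibility

# 1000 permuted copies of the 48 résumés, stacked on top of each other
promotions_generate <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  hypothesize(null = "independence") %>%
  generate(reps = 1000, type = "permute")
nrow(promotions_generate)
```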

Observe that the resulting data frame has 48,000 rows. This is because we performed shuffles/permutations for each of the 48 rows 1000 times and \(48{,}000 = 1000 \cdot 48\). If you explore the promotions_generate data frame with View(), you’ll notice that the variable replicate indicates which resample each row belongs to. So it has the value 1 48 times, the value 2 48 times, all the way through to the value 1000 48 times.

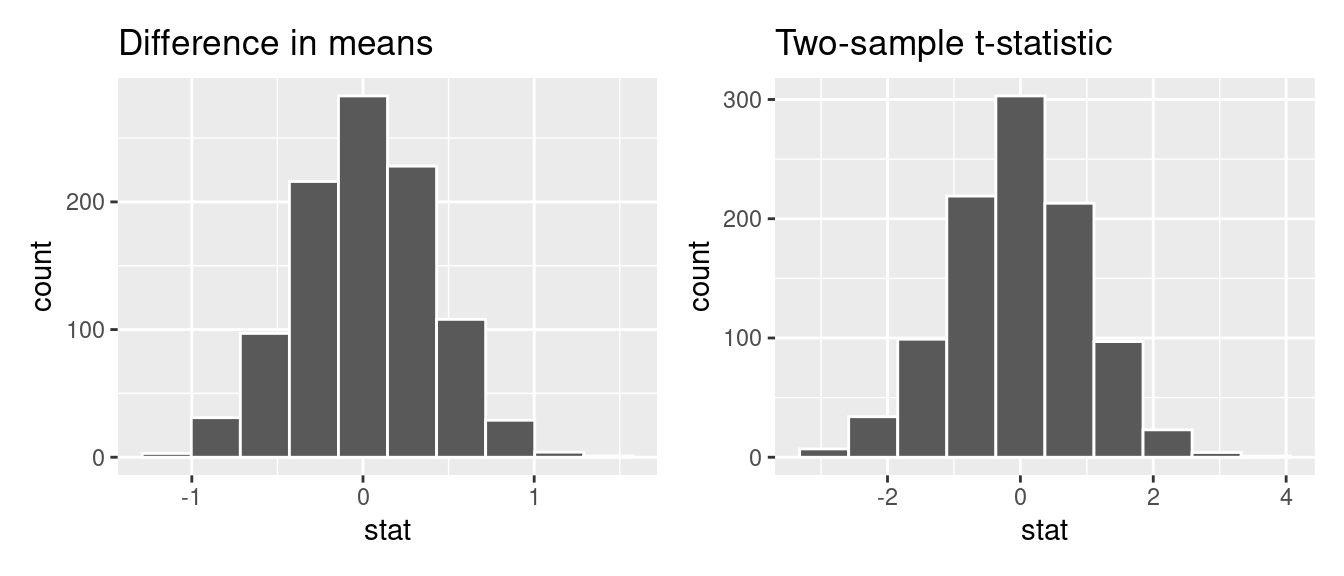

4. calculate summary statistics

Now that we have generated 1000 replicates of “shuffles” assuming the null hypothesis is true, let’s calculate() the appropriate summary statistic for each of our 1000 shuffles. From Section 9.2 , point estimates related to hypothesis testing have a specific name: test statistics . Since the unknown population parameter of interest is the difference in population proportions \(p_{m} - p_{f}\) , the test statistic here is the difference in sample proportions \(\widehat{p}_{m} - \widehat{p}_{f}\) .

For each of our 1000 shuffles, we can calculate this test statistic by setting stat = "diff in props" . Furthermore, since we are interested in \(\widehat{p}_{m} - \widehat{p}_{f}\) we set order = c("male", "female") . As we stated earlier, the order of the subtraction does not matter, so long as you stay consistent throughout your analysis and tailor your interpretations accordingly.

Let’s save the result in a data frame called null_distribution :
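A sketch of the full pipeline up to this point, assuming the moderndive, infer, and dplyr packages are installed (the seed is an arbitrary assumption, so individual values of `stat` will vary from run to run without it):

```r
library(moderndive)  # provides the promotions data frame
library(infer)
library(dplyr)       # loaded for the %>% pipe

set.seed(2020)  # arbitrary seed (an assumption), only for reproducibility

# One difference in sample proportions (male minus female) per shuffle
null_distribution <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  hypothesize(null = "independence") %>%
  generate(reps = 1000, type = "permute") %>%
  calculate(stat = "diff in props", order = c("male", "female"))
null_distribution
```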

Observe that we have 1000 values of stat , each representing one instance of \(\widehat{p}_{m} - \widehat{p}_{f}\) in a hypothesized world of no gender discrimination. Observe as well that we chose the name of this data frame carefully: null_distribution . Recall once again from Section 9.2 that sampling distributions when the null hypothesis \(H_0\) is assumed to be true have a special name: the null distribution .

What was the observed difference in promotion rates? In other words, what was the observed test statistic \(\widehat{p}_{m} - \widehat{p}_{f}\) ? Recall from Section 9.1 that we computed this observed difference by hand to be 0.875 - 0.583 = 0.292 = 29.2%. We can also compute this value using the previous infer code but with the hypothesize() and generate() steps removed. Let’s save this in obs_diff_prop :
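A sketch of this computation, assuming the moderndive, infer, and dplyr packages are installed:

```r
library(moderndive)  # provides the promotions data frame
library(infer)
library(dplyr)       # loaded for the %>% pipe

# Same pipeline without hypothesize() and generate(): the statistic is
# computed once, on the original (unshuffled) data
obs_diff_prop <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  calculate(stat = "diff in props", order = c("male", "female"))
obs_diff_prop
```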

5. visualize the p-value

The final step is to measure how surprised we are by a promotion difference of 29.2% in a hypothesized universe of no gender discrimination. If the observed difference of 0.292 is highly unlikely, then we would be inclined to reject the validity of our hypothesized universe.

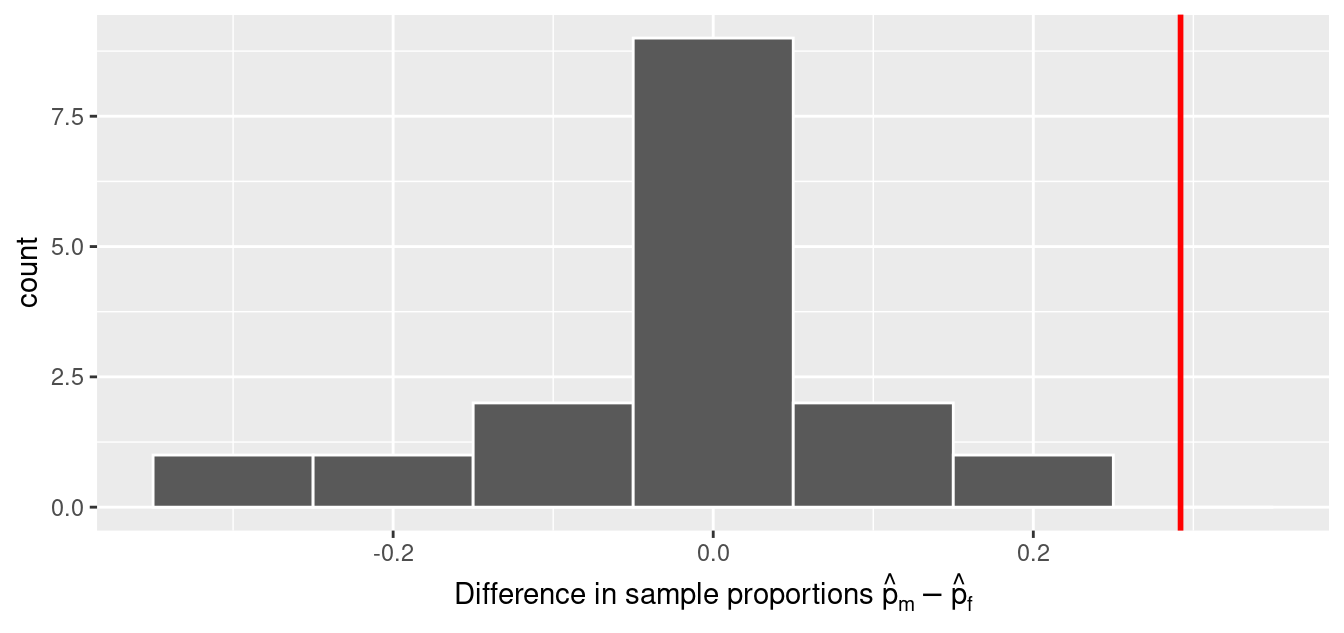

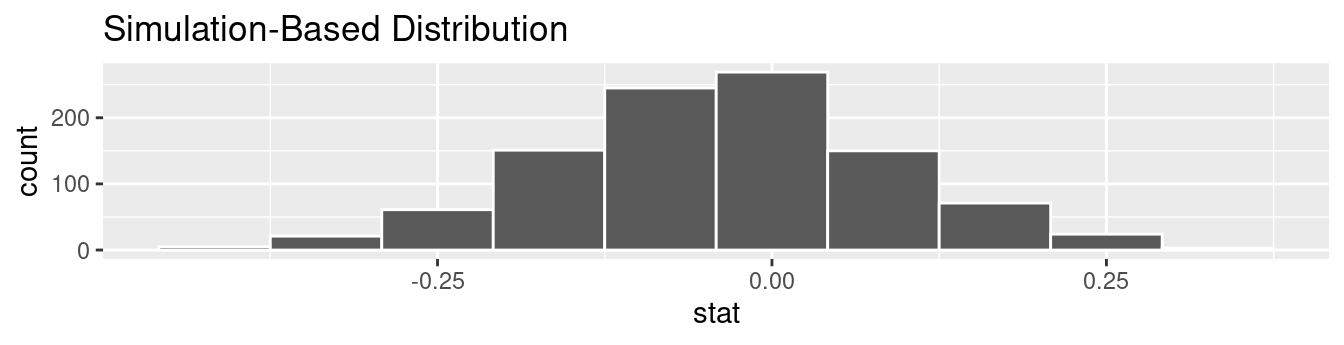

We start by visualizing the null distribution of our 1000 values of \(\widehat{p}_{m} - \widehat{p}_{f}\) using visualize() in Figure 9.10 . Recall that these are values of the difference in promotion rates assuming \(H_0\) is true. This corresponds to being in our hypothesized universe of no gender discrimination.

FIGURE 9.10: Null distribution.

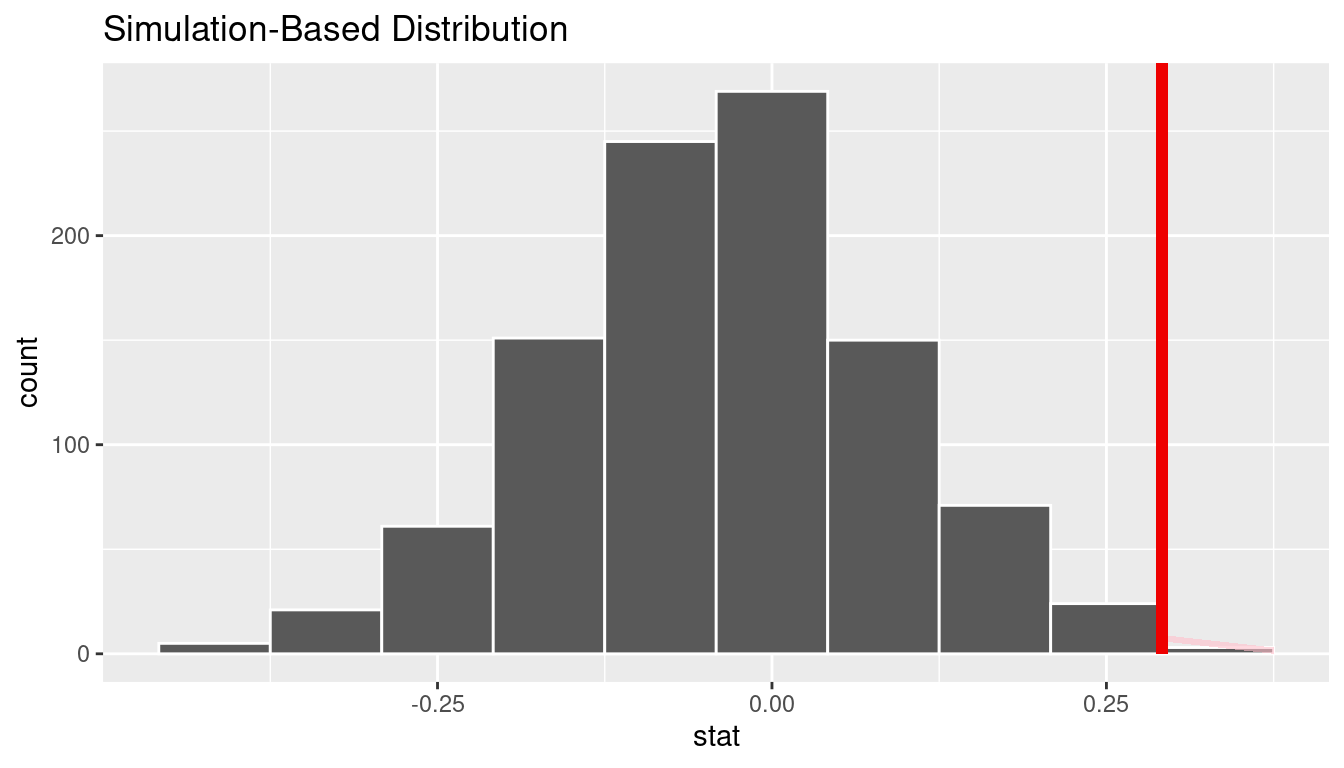

Let’s now add what happened in real life to Figure 9.10 , the observed difference in promotion rates of 0.875 - 0.583 = 0.292 = 29.2%. However, instead of merely adding a vertical line using geom_vline() , let’s use the shade_p_value() function with obs_stat set to the observed test statistic value we saved in obs_diff_prop .

Furthermore, we’ll set the direction = "right" reflecting our alternative hypothesis \(H_A: p_{m} - p_{f} > 0\) . Recall our alternative hypothesis \(H_A\) is that \(p_{m} - p_{f} > 0\) , stating that there is a difference in promotion rates in favor of résumés with male names. “More extreme” here corresponds to differences that are “bigger” or “more positive” or “more to the right.” Hence we set the direction argument of shade_p_value() to be "right" .

On the other hand, had our alternative hypothesis \(H_A\) been the other possible one-sided alternative \(p_{m} - p_{f} < 0\) , suggesting discrimination in favor of résumés with female names, we would’ve set direction = "left" . Had our alternative hypothesis \(H_A\) been two-sided \(p_{m} - p_{f} \neq 0\) , suggesting discrimination in either direction, we would’ve set direction = "both" .
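A sketch of the visualization with the right-hand tail shaded, assuming the moderndive, infer, and dplyr packages are installed. The seed, the choice of `bins = 10`, and the name `null_plot` are our own assumptions for illustration.

```r
library(moderndive)  # provides the promotions data frame
library(infer)
library(dplyr)       # loaded for the %>% pipe

set.seed(2020)  # arbitrary seed (an assumption), only for reproducibility

null_distribution <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  hypothesize(null = "independence") %>%
  generate(reps = 1000, type = "permute") %>%
  calculate(stat = "diff in props", order = c("male", "female"))

obs_diff_prop <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  calculate(stat = "diff in props", order = c("male", "female"))

# Histogram of the null distribution with the region at or beyond the
# observed statistic shaded, matching the one-sided alternative p_m - p_f > 0
null_plot <- visualize(null_distribution, bins = 10) +
  shade_p_value(obs_stat = obs_diff_prop, direction = "right")
null_plot
```

Switching `direction` to `"left"` or `"both"` would shade the regions matching the other alternatives described above.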

FIGURE 9.11: Shaded histogram to show \(p\) -value.

In the resulting Figure 9.11 , the solid dark line marks 0.292 = 29.2%. However, what does the shaded-region correspond to? This is the \(p\) -value . Recall the definition of the \(p\) -value from Section 9.2 :

A \(p\) -value is the probability of obtaining a test statistic just as or more extreme than the observed test statistic assuming the null hypothesis \(H_0\) is true .

So judging by the shaded region in Figure 9.11 , it seems we would somewhat rarely observe differences in promotion rates of 0.292 = 29.2% or more in a hypothesized universe of no gender discrimination. In other words, the \(p\) -value is somewhat small. Hence, we would be inclined to reject this hypothesized universe, or using statistical language we would “reject \(H_0\) .”

What fraction of the null distribution is shaded? In other words, what is the exact value of the \(p\) -value? We can compute it using the get_p_value() function with the same arguments as the previous shade_p_value() code:
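A sketch of this computation, assuming the moderndive, infer, and dplyr packages are installed. Because the shuffles are random, the value returned depends on the seed (an arbitrary assumption here) and will typically differ slightly from the value quoted in the text.

```r
library(moderndive)  # provides the promotions data frame
library(infer)
library(dplyr)       # loaded for the %>% pipe

set.seed(2020)  # arbitrary seed (an assumption), only for reproducibility

null_distribution <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  hypothesize(null = "independence") %>%
  generate(reps = 1000, type = "permute") %>%
  calculate(stat = "diff in props", order = c("male", "female"))

obs_diff_prop <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  calculate(stat = "diff in props", order = c("male", "female"))

# Proportion of shuffled differences at or above the observed difference
p_value <- null_distribution %>%
  get_p_value(obs_stat = obs_diff_prop, direction = "right")
p_value
```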

Keeping the definition of a \(p\)-value in mind, the probability of observing a difference in promotion rates at least as large as 0.292 = 29.2% due to sampling variation alone in the null distribution is 0.027 = 2.7%. Since this \(p\)-value is smaller than our pre-specified significance level \(\alpha\) = 0.05, we reject the null hypothesis \(H_0: p_{m} - p_{f} = 0\). In other words, this \(p\)-value is sufficiently small to reject our hypothesized universe of no gender discrimination. We instead have enough evidence to change our mind in favor of gender discrimination being a likely culprit here. Observe that whether we reject the null hypothesis \(H_0\) or not depends in large part on our choice of significance level \(\alpha\). We’ll discuss this more in Subsection 9.4.3.

9.3.2 Comparison with confidence intervals

One of the great things about the infer package is that we can jump seamlessly between conducting hypothesis tests and constructing confidence intervals with minimal changes! Recall the code from the previous section that creates the null distribution, which in turn is needed to compute the \(p\) -value:

To create the corresponding bootstrap distribution needed to construct a 95% confidence interval for \(p_{m} - p_{f}\) , we only need to make two changes. First, we remove the hypothesize() step since we are no longer assuming a null hypothesis \(H_0\) is true. We can do this by deleting or commenting out the hypothesize() line of code. Second, we switch the type of resampling in the generate() step to be "bootstrap" instead of "permute" .
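A sketch of the modified pipeline, assuming the moderndive, infer, and dplyr packages are installed (the seed is an arbitrary assumption):

```r
library(moderndive)  # provides the promotions data frame
library(infer)
library(dplyr)       # loaded for the %>% pipe

set.seed(2020)  # arbitrary seed (an assumption), only for reproducibility

bootstrap_distribution <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  # Change 1: the hypothesize() step is removed
  # hypothesize(null = "independence") %>%
  # Change 2: resample with replacement instead of permuting
  generate(reps = 1000, type = "bootstrap") %>%
  calculate(stat = "diff in props", order = c("male", "female"))
bootstrap_distribution
```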

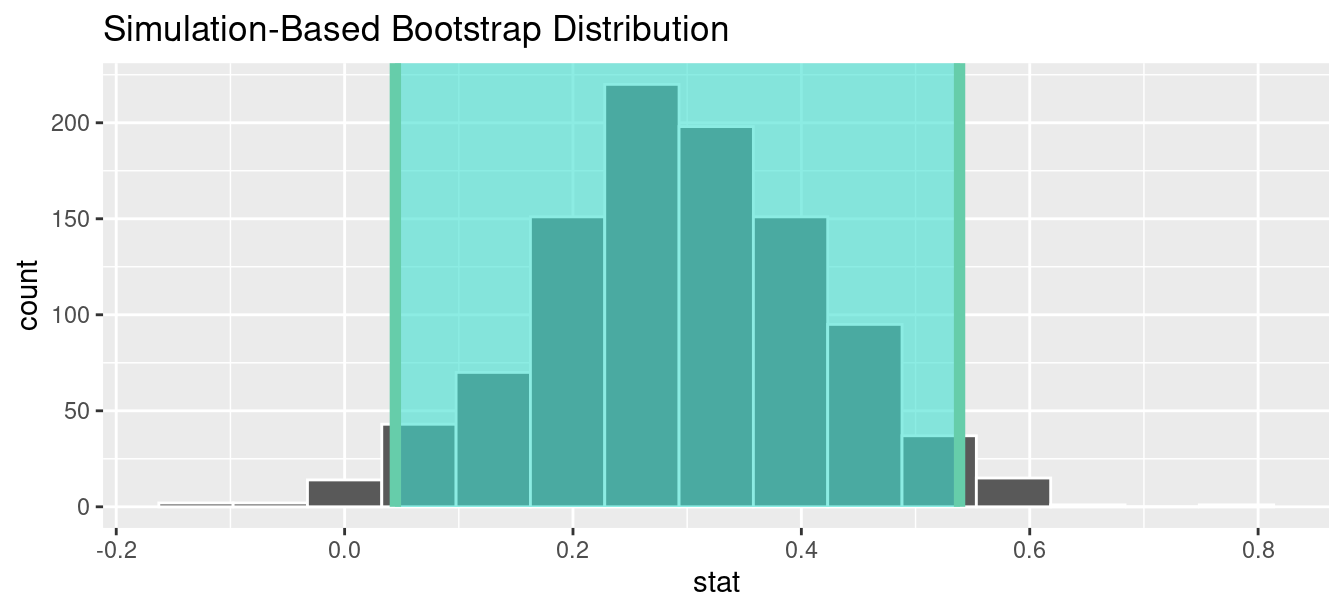

Using this bootstrap_distribution , let’s first compute the percentile-based confidence intervals, as we did in Section 8.4 :
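A sketch of the percentile method, assuming the moderndive, infer, and dplyr packages are installed. The seed and the name `percentile_ci` are our own assumptions, and the endpoints will vary slightly with the random resamples; the column names `lower_ci` and `upper_ci` are those returned by recent versions of infer.

```r
library(moderndive)  # provides the promotions data frame
library(infer)
library(dplyr)       # loaded for the %>% pipe

set.seed(2020)  # arbitrary seed (an assumption), only for reproducibility

bootstrap_distribution <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  generate(reps = 1000, type = "bootstrap") %>%
  calculate(stat = "diff in props", order = c("male", "female"))

# Middle 95% of the bootstrap distribution
percentile_ci <- bootstrap_distribution %>%
  get_confidence_interval(level = 0.95, type = "percentile")
percentile_ci
```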

Using our shorthand interpretation for 95% confidence intervals from Subsection 8.5.2, we are 95% “confident” that the true difference in population proportions \(p_{m} - p_{f}\) lies in the interval (0.044, 0.539). Let’s visualize bootstrap_distribution and this percentile-based 95% confidence interval for \(p_{m} - p_{f}\) in Figure 9.12.

FIGURE 9.12: Percentile-based 95% confidence interval.

Notice a key value that is not included in the 95% confidence interval for \(p_{m} - p_{f}\) : the value 0. In other words, a difference of 0 is not included in our net, suggesting that \(p_{m}\) and \(p_{f}\) are truly different! Furthermore, observe how the entirety of the 95% confidence interval for \(p_{m} - p_{f}\) lies above 0, suggesting that this difference is in favor of men.

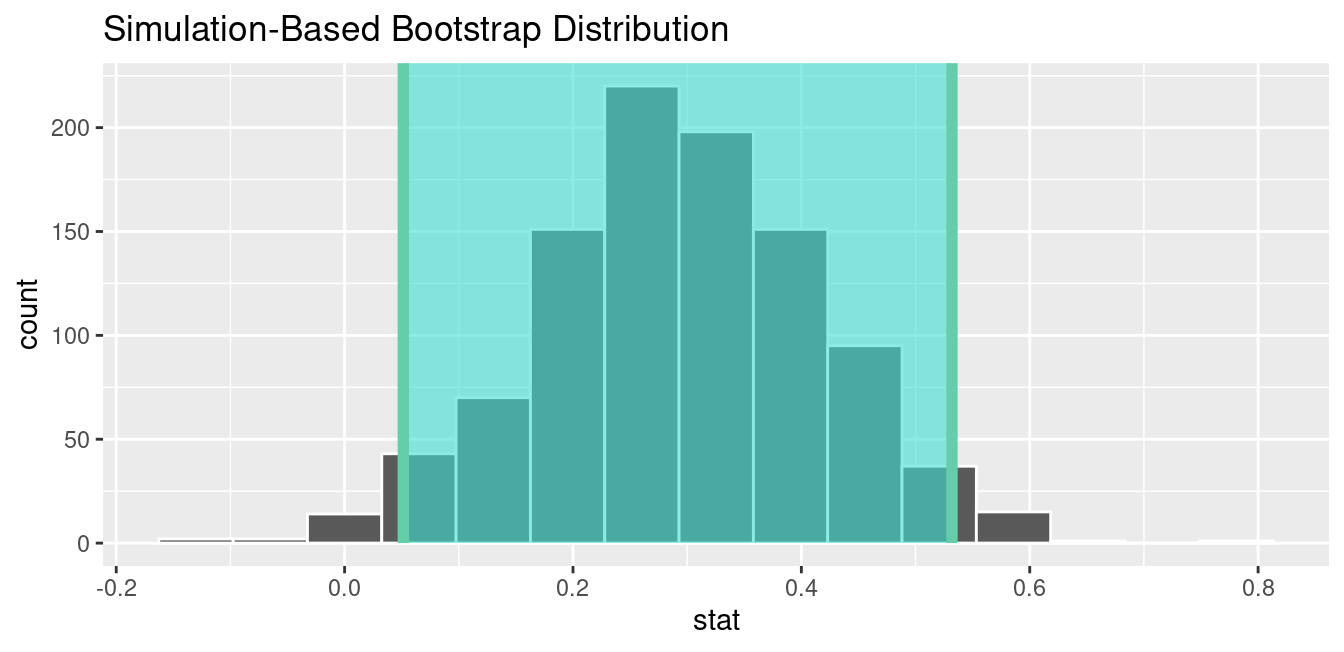

Since the bootstrap distribution appears to be roughly normally shaped, we can also use the standard error method as we did in Section 8.4 . In this case, we must specify the point_estimate argument as the observed difference in promotion rates 0.292 = 29.2% saved in obs_diff_prop . This value acts as the center of the confidence interval.
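A sketch of the standard error method, assuming the moderndive, infer, and dplyr packages are installed (the seed and the name `se_ci` are our own assumptions for illustration):

```r
library(moderndive)  # provides the promotions data frame
library(infer)
library(dplyr)       # loaded for the %>% pipe

set.seed(2020)  # arbitrary seed (an assumption), only for reproducibility

bootstrap_distribution <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  generate(reps = 1000, type = "bootstrap") %>%
  calculate(stat = "diff in props", order = c("male", "female"))

obs_diff_prop <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  calculate(stat = "diff in props", order = c("male", "female"))

# Interval centered at the observed difference, with a width based on the
# standard deviation of the bootstrap distribution
se_ci <- bootstrap_distribution %>%
  get_confidence_interval(level = 0.95, type = "se",
                          point_estimate = obs_diff_prop)
se_ci
```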

Let’s visualize bootstrap_distribution again, but now with the standard error-based 95% confidence interval for \(p_{m} - p_{f}\) in Figure 9.13 . Notice how the value 0 is once more not included in our confidence interval, again suggesting that \(p_{m}\) and \(p_{f}\) are truly different!

FIGURE 9.13: Standard error-based 95% confidence interval.

Learning check

(LC9.1) Why does the following code produce an error? In other words, what about the response and predictor variables makes this an impossible computation with the infer package?

(LC9.2) Why are we relatively confident that the distributions of the sample proportions will be good approximations of the population distributions of promotion proportions for the two genders?

(LC9.3) Using the definition of p-value , write in words what the \(p\) -value represents for the hypothesis test comparing the promotion rates for males and females.

9.3.3 “There is only one test”

Let’s recap the steps necessary to conduct a hypothesis test using the terminology, notation, and definitions related to sampling you saw in Section 9.2 and the infer workflow from Subsection 9.3.1 :

- hypothesize() the null hypothesis \(H_0\) . In other words, set a “model for the universe” assuming \(H_0\) is true.

- generate() shuffles assuming \(H_0\) is true. In other words, simulate data assuming \(H_0\) is true.

- calculate() the test statistic of interest, both for the observed data and your simulated data.

- visualize() the resulting null distribution and compute the \(p\) -value by comparing the null distribution to the observed test statistic.
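The four steps can be sketched end to end for the promotions example. This is a reconstruction under stated assumptions: the promotions data frame comes from the moderndive package, 1000 permutations are used, the seed is arbitrary, and the bins value is only a plotting choice. The \(p\) -value you obtain will vary slightly with the random shuffles.

```r
library(dplyr)
library(infer)
library(moderndive)  # assumed source of the promotions data frame

set.seed(76)  # arbitrary seed for reproducibility

# Test statistic for the observed data
obs_diff_prop <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  calculate(stat = "diff in props", order = c("male", "female"))

# hypothesize(), generate(), calculate(): test statistics simulated under H0
null_distribution <- promotions %>%
  specify(formula = decision ~ gender, success = "promoted") %>%
  hypothesize(null = "independence") %>%
  generate(reps = 1000, type = "permute") %>%
  calculate(stat = "diff in props", order = c("male", "female"))

# visualize(): null distribution with the observed statistic shaded
null_plot <- visualize(null_distribution, bins = 10) +
  shade_p_value(obs_stat = obs_diff_prop, direction = "right")

# p-value: share of simulated statistics at least as large as the observed one
p_value <- null_distribution %>%
  get_p_value(obs_stat = obs_diff_prop, direction = "right")

p_value
```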

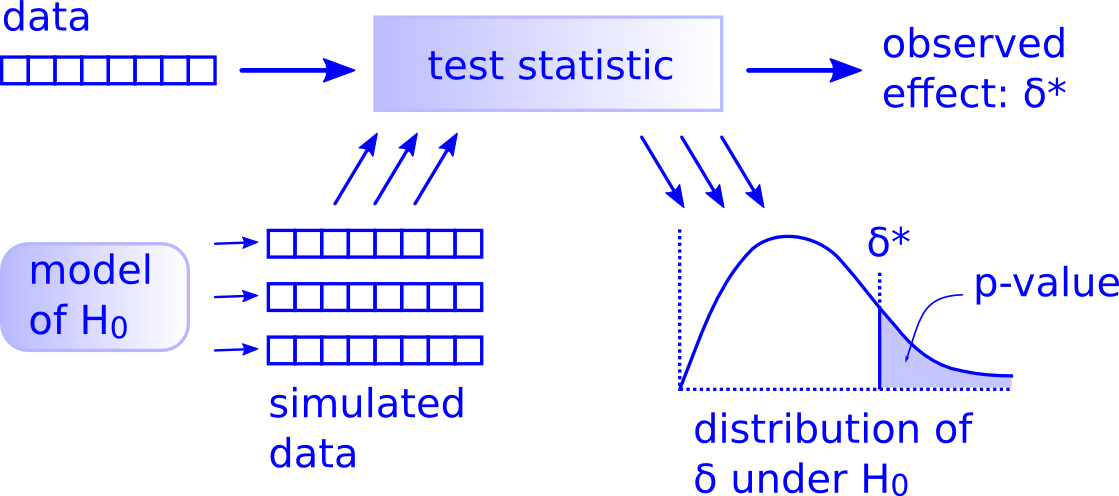

While this is a lot to digest, especially the first time you encounter hypothesis testing, the nice thing is that once you understand this general framework, then you can understand any hypothesis test. In a famous blog post, computer scientist Allen Downey called this the “There is only one test” framework, for which he created the flowchart displayed in Figure 9.14 .

FIGURE 9.14: Allen Downey’s hypothesis testing framework.

Notice its similarity with the “hypothesis testing with infer ” diagram you saw in Figure 9.9 . That’s because the infer package was explicitly designed to match the “There is only one test” framework. So if you can understand the framework, you can easily generalize these ideas to all hypothesis testing scenarios. This holds for population proportions \(p\) , population means \(\mu\) , differences in population proportions \(p_1 - p_2\) , differences in population means \(\mu_1 - \mu_2\) , and, as you’ll see in Chapter 10 on inference for regression, population regression slopes \(\beta_1\) as well. In fact, the framework applies beyond these examples to more complicated hypothesis tests and test statistics.

(LC9.4) Describe in a paragraph how we used Allen Downey’s diagram to conclude whether a statistical difference existed between the promotion rates of males and females using this study.

9.4 Interpreting hypothesis tests

Interpreting the results of hypothesis tests is one of the more challenging aspects of this method for statistical inference. In this section, we’ll focus on ways to help with deciphering the process and address some common misconceptions.

9.4.1 Two possible outcomes

In Section 9.2 , we mentioned that given a pre-specified significance level \(\alpha\) there are two possible outcomes of a hypothesis test:

- If the \(p\) -value is less than \(\alpha\) , then we reject the null hypothesis \(H_0\) in favor of \(H_A\) .

- If the \(p\) -value is greater than or equal to \(\alpha\) , we fail to reject the null hypothesis \(H_0\) .

Unfortunately, the latter result is often misinterpreted as “accepting the null hypothesis \(H_0\) .” While at first glance it may seem that the statements “failing to reject \(H_0\) ” and “accepting \(H_0\) ” are equivalent, there actually is a subtle difference. Saying that we “accept the null hypothesis \(H_0\) ” is equivalent to stating that “we think the null hypothesis \(H_0\) is true.” However, saying that we “fail to reject the null hypothesis \(H_0\) ” is saying something else: “While \(H_0\) might still be false, we don’t have enough evidence to say so.” In other words, there is an absence of enough proof. However, the absence of proof is not proof of absence.

To shed further light on this distinction, let’s use the United States criminal justice system as an analogy. A criminal trial in the United States resembles a hypothesis test in that a choice must be made between two contradictory claims about a defendant who is on trial:

- The defendant is truly either “innocent” or “guilty.”

- The defendant is presumed “innocent until proven guilty.”

- The defendant is found guilty only if there is strong evidence that the defendant is guilty. The phrase “beyond a reasonable doubt” is often used as a guideline for determining a cutoff for when enough evidence exists to find the defendant guilty.

- The defendant is found to be either “not guilty” or “guilty” in the ultimate verdict.

In other words, not guilty verdicts are not suggesting the defendant is innocent , but instead that “while the defendant may still actually be guilty, there wasn’t enough evidence to prove this fact.” Now let’s make the connection with hypothesis tests:

- Either the null hypothesis \(H_0\) or the alternative hypothesis \(H_A\) is true.

- Hypothesis tests are conducted assuming the null hypothesis \(H_0\) is true.

- We reject the null hypothesis \(H_0\) in favor of \(H_A\) only if the evidence found in the sample suggests that \(H_A\) is true. The significance level \(\alpha\) is used as a guideline to set the threshold on how strong the evidence must be.

- We ultimately decide to either “fail to reject \(H_0\) ” or “reject \(H_0\) .”

So while gut instinct may suggest “failing to reject \(H_0\) ” and “accepting \(H_0\) ” are equivalent statements, they are not. “Accepting \(H_0\) ” is equivalent to finding a defendant innocent. However, courts do not find defendants “innocent,” but rather they find them “not guilty.” Putting things differently, defense attorneys do not need to prove that their clients are innocent; they only need to show that their clients have not been proven “guilty beyond a reasonable doubt.”

So going back to our résumés activity in Section 9.3 , recall that our hypothesis test was \(H_0: p_{m} - p_{f} = 0\) versus \(H_A: p_{m} - p_{f} > 0\) and that we used a pre-specified significance level of \(\alpha\) = 0.05. We found a \(p\) -value of 0.027. Since the \(p\) -value was smaller than \(\alpha\) = 0.05, we rejected \(H_0\) . In other words, we found the needed level of evidence in this particular sample to reject \(H_0\) at the \(\alpha\) = 0.05 significance level. We can also state this conclusion in non-statistical language: we found enough evidence in this data to suggest that there was gender discrimination at play.
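The decision rule can be written as a small helper. The function name decide() is hypothetical and used only for illustration. The second call uses a stricter \(\alpha\) = 0.01, which the study did not use, purely to show that the same \(p\) -value can lead to a different outcome under a different pre-specified significance level.

```r
# Hypothetical helper: applies the two-outcome decision rule
decide <- function(p_value, alpha = 0.05) {
  if (p_value < alpha) "reject H0" else "fail to reject H0"
}

decide(0.027, alpha = 0.05)  # "reject H0"
decide(0.027, alpha = 0.01)  # "fail to reject H0"
```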

9.4.2 Types of errors

Unfortunately, there is some chance a jury or a judge can make an incorrect decision in a criminal trial by reaching the wrong verdict: for example, finding a truly innocent defendant “guilty” or, on the other hand, finding a truly guilty defendant “not guilty.” This can often stem from the fact that prosecutors don’t have access to all the relevant evidence, but instead are limited to whatever evidence the police can find.

The same holds for hypothesis tests. We can make incorrect decisions about a population parameter because we only have a sample of data from the population and thus sampling variation can lead us to incorrect conclusions.

There are two possible erroneous conclusions in a criminal trial: either (1) a truly innocent person is found guilty or (2) a truly guilty person is found not guilty. Similarly, there are two possible errors in a hypothesis test: either (1) rejecting \(H_0\) when in fact \(H_0\) is true, called a Type I error or (2) failing to reject \(H_0\) when in fact \(H_0\) is false, called a Type II error . Another term used for “Type I error” is “false positive,” while another term for “Type II error” is “false negative.”

This risk of error is the price researchers pay for basing inference on a sample instead of performing a census on the entire population. But as we’ve seen in our numerous examples and activities so far, censuses are often very expensive and other times impossible, and thus researchers have no choice but to use a sample. Thus in any hypothesis test based on a sample, we have no choice but to tolerate some chance that a Type I error will be made and some chance that a Type II error will occur.

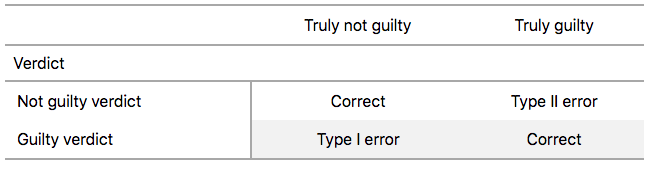

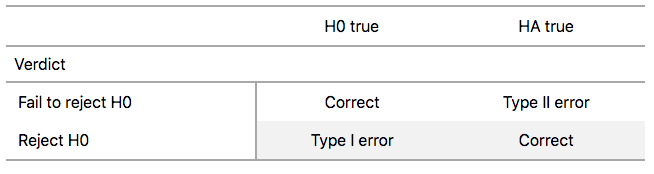

To help understand the concepts of Type I and Type II errors, we apply these terms to our criminal justice analogy in Figure 9.15 .

FIGURE 9.15: Type I and Type II errors in criminal trials.

Thus a Type I error corresponds to incorrectly putting a truly innocent person in jail, whereas a Type II error corresponds to letting a truly guilty person go free. Let’s show the corresponding table in Figure 9.16 for hypothesis tests.

FIGURE 9.16: Type I and Type II errors in hypothesis tests.

9.4.3 How do we choose alpha?

If we are using a sample to make inferences about a population, we run the risk of making errors. For confidence intervals, a corresponding “error” would be constructing a confidence interval that does not contain the true value of the population parameter. For hypothesis tests, this would be making either a Type I or Type II error. Obviously, we want to minimize the probability of either error; we want a small probability of making an incorrect conclusion:

- The probability of a Type I error occurring is denoted by \(\alpha\) . The value of \(\alpha\) is called the significance level of the hypothesis test, which we defined in Section 9.2 .

- The probability of a Type II error occurring is denoted by \(\beta\) . The value of \(1-\beta\) is known as the power of the hypothesis test.

In other words, \(\alpha\) corresponds to the probability of incorrectly rejecting \(H_0\) when in fact \(H_0\) is true. On the other hand, \(\beta\) corresponds to the probability of incorrectly failing to reject \(H_0\) when in fact \(H_0\) is false.

Ideally, we want \(\alpha = 0\) and \(\beta = 0\) , meaning that the chance of making either error is 0. However, this can never be the case in any situation where we are sampling for inference. There will always be the possibility of making either error when we use sample data. Furthermore, for a fixed sample size, these two error probabilities trade off against each other: as the probability of a Type I error goes down, the probability of a Type II error goes up.
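This trade-off can be illustrated numerically. The sketch below does not use the promotions test. Instead it swaps in a two-sample \(t\) -test with hypothetical settings (30 observations per group and a true difference of half a standard deviation) because base R’s power.t.test() can compute the power \(1-\beta\) for that test directly.

```r
# Hypothetical scenario: two-sample t-test, 30 observations per group,
# true difference in means equal to 0.5 standard deviations
alphas <- c(0.10, 0.05, 0.01)

betas <- sapply(alphas, function(a) {
  power <- power.t.test(n = 30, delta = 0.5, sd = 1, sig.level = a)$power
  1 - power  # beta = probability of a Type II error
})

# As alpha shrinks, beta grows (sample size and effect size held fixed)
data.frame(alpha = alphas, beta = round(betas, 3))
```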

What is typically done in practice is to fix the probability of a Type I error by pre-specifying a significance level \(\alpha\) and then try to minimize \(\beta\) . In other words, we will tolerate a certain fraction of incorrect rejections of the null hypothesis \(H_0\) , and then try to minimize the fraction of incorrect non-rejections of \(H_0\) .

So for example if we used \(\alpha\) = 0.01, we would be using a hypothesis testing procedure that in the long run would incorrectly reject the null hypothesis \(H_0\) one percent of the time. This is analogous to setting the confidence level of a confidence interval.
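The “long run” reading of \(\alpha\) can be checked by simulation. The sketch below is an illustration under assumed settings, not part of the résumé study: it repeatedly draws two samples from the same normal population, so that \(H_0\) is true by construction, runs a two-sample \(t\) -test each time, and records how often \(H_0\) is rejected at \(\alpha\) = 0.01.

```r
set.seed(2023)  # arbitrary seed for reproducibility

alpha  <- 0.01
n_sims <- 10000

# Both samples come from the same population, so every rejection is a Type I error
p_values <- replicate(n_sims, {
  x <- rnorm(20)
  y <- rnorm(20)
  t.test(x, y)$p.value
})

# Proportion of simulated tests that incorrectly reject H0
type1_rate <- mean(p_values < alpha)
type1_rate
```

The resulting proportion should land close to 0.01, with small deviations due to simulation randomness.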

So what value should you use for \(\alpha\) ? Different fields have different conventions, but some commonly used values include 0.10, 0.05, 0.01, and 0.001. However, it is important to keep in mind that if you use a relatively small value of \(\alpha\) , then all things being equal, \(p\) -values will have a harder time being less than \(\alpha\) . Thus we would reject the null hypothesis less often. In other words, we would reject the null hypothesis \(H_0\) only if we have very strong evidence to do so. This is known as a “conservative” test.

On the other hand, if we used a relatively large value of \(\alpha\) , then all things being equal, \(p\) -values will have an easier time being less than \(\alpha\) . Thus we would reject the null hypothesis more often. In other words, we would reject the null hypothesis \(H_0\) even if we only have mild evidence to do so. This is known as a “liberal” test.

(LC9.5) What is wrong with saying “The defendant is innocent” based on the US system of criminal trials?

(LC9.6) What is the purpose of hypothesis testing?

(LC9.7) What are some flaws with hypothesis testing? How could we alleviate them?

(LC9.8) Consider two \(\alpha\) significance levels of 0.1 and 0.01. Of the two, which would lead to a more liberal hypothesis testing procedure? In other words, one that will, all things being equal, lead to more rejections of the null hypothesis \(H_0\) .

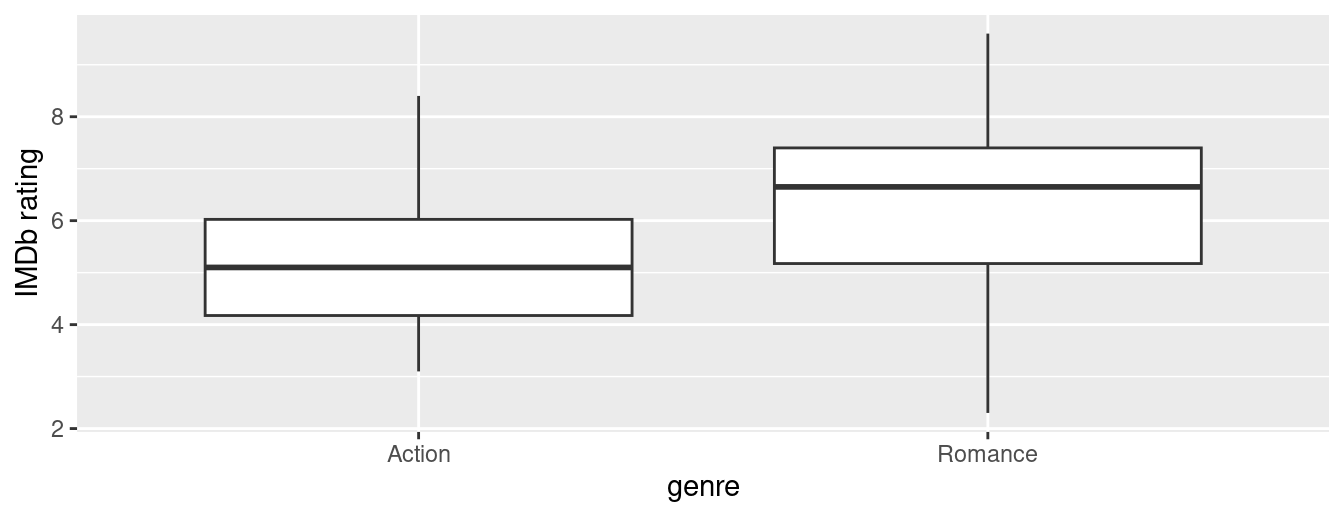

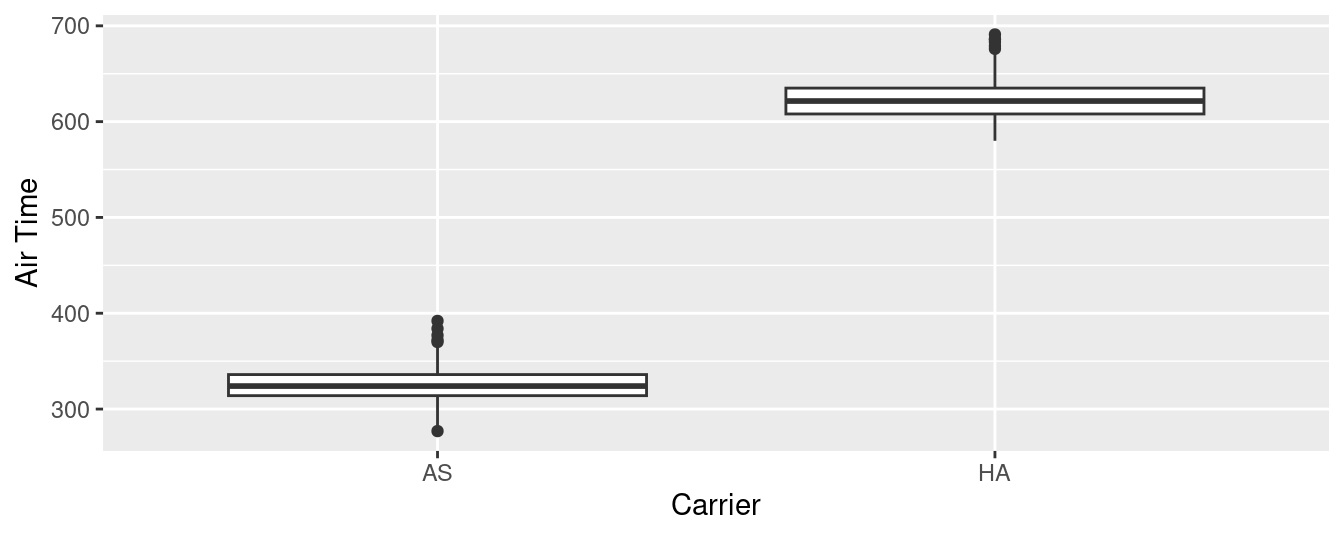

9.5 Case study: Are action or romance movies rated higher?

Let’s apply our knowledge of hypothesis testing to answer the question: “Are action or romance movies rated higher on IMDb?” IMDb is a database on the internet providing information on movie and television show casts, plot summaries, trivia, and ratings. We’ll investigate if, on average, action or romance movies get higher ratings on IMDb.

9.5.1 IMDb ratings data

The movies dataset in the ggplot2movies package contains information on 58,788 movies that have been rated by users of IMDb.com.

We’ll focus on a random sample of 68 movies that are classified as either “action” or “romance” movies but not both. We disregard movies that are classified as both so that we can assign each of the 68 movies to exactly one category. Furthermore, since the original movies dataset was a little messy, we provide a pre-wrangled version of our data in the movies_sample data frame included in the moderndive package. If you’re curious, you can look at the necessary data wrangling code to do this on GitHub .

The variables include the title and year the movie was filmed. Furthermore, we have a numerical variable rating , which is the IMDb rating out of 10 stars, and a binary categorical variable genre indicating if the movie was an Action or Romance movie. We are interested in whether Action or Romance movies got a higher rating on average.