J Clin Diagn Res. 2017 May; 11(5).

Critical Appraisal of Clinical Research

Azzam Al-Jundi

1 Professor, Department of Orthodontics, King Saud bin Abdul Aziz University for Health Sciences-College of Dentistry, Riyadh, Kingdom of Saudi Arabia.

Salah Sakka

2 Associate Professor, Department of Oral and Maxillofacial Surgery, Al Farabi Dental College, Riyadh, KSA.

Evidence-based practice is the integration of individual clinical expertise with the best available external clinical evidence from systematic research and with patients' values and expectations in the decision-making process for patient care. Being able to identify and appraise the best available evidence, in order to integrate it with your own clinical experience and your patients' values, is a fundamental skill. The aim of this article is to provide a robust and simple process for assessing the credibility of articles and their value to your clinical practice.

Introduction

Decisions related to patient value and care are made carefully, following an essential process of integrating the best existing evidence, clinical experience and patient preference. Critical appraisal is the process of carefully and systematically examining research to assess its reliability, value and relevance in order to guide professionals in their vital clinical decision making [ 1 ].

Critical appraisal is essential to:

- Combat information overload;

- Identify papers that are clinically relevant;

- Support Continuing Professional Development (CPD).

Carrying out Critical Appraisal:

Assessing the research methods used in the study is a prime step in its critical appraisal. This is done using checklists which are specific to the study design.

Standard Common Questions:

- What is the research question?

- What is the study type (design)?

- Selection issues.

- What are the outcome factors and how are they measured?

- What are the study factors and how are they measured?

- What important potential confounders are considered?

- What is the statistical method used in the study?

- Statistical results.

- What conclusions did the authors reach about the research question?

- Are ethical issues considered?

Critical appraisal starts by checking the following main sections:

I. Overview of the paper:

- The publishing journal and the year

- The article title: Does it state key trial objectives?

- The author(s) and their institution(s)

The presence of a peer review process in journal acceptance protocols also adds robustness to the assessment criteria for research papers and hence would indicate a reduced likelihood of publication of poor quality research. Other areas to consider may include authors’ declarations of interest and potential market bias. Attention should be paid to any declared funding or the issue of a research grant, in order to check for a conflict of interest [ 2 ].

II. ABSTRACT: Reading the abstract is a quick way of getting to know the article and its purpose, major procedures and methods, main findings, and conclusions.

- Aim of the study: It should be clearly written.

- Materials and Methods: The study design and type of groups, the type of randomization process, the sample size, gender, age, the procedure applied to each group, and the measuring tool(s) should be clearly stated.

- Results: The measured variables with their statistical analysis and significance.

- Conclusion: It must clearly answer the question of interest.

III. Introduction/Background section:

An excellent introduction will thoroughly include references to earlier work related to the area under discussion and state the importance and limitations of what is already known [ 2 ].

- Why is this study considered necessary? What is its purpose? Was the purpose identified before the study, or was it a chance result revealed as part of ‘data searching’?

- What has already been achieved, and how does this study differ?

- Does the scientific approach outline the advantages along with possible drawbacks associated with the intervention or observations?

IV. Methods and Materials section: Full details of how the study was actually carried out should be given. The section should provide precise information on the study design, the population, the sample size and the interventions. All measurement approaches should be clearly stated [ 3 ].

V. Results section: This section should clearly reveal what actually happened to the subjects. The results may contain raw data and should explain the statistical analysis. These can be shown in tables, diagrams and graphs.

VI. Discussion section: This section should include a thorough comparison with what is already known about the topic of interest, and the clinical relevance of the new findings. It should also discuss possible limitations and the need for further studies.

Does it summarize the main findings of the study and relate them to any deficiencies in the study design or problems in the conduct of the study? Problems in conduct include protocol deviations and dropouts; in a trial, check whether these were handled by intention-to-treat analysis, in which participants are analysed in the groups to which they were originally randomized.

- Does it address any source of potential bias?

- Are interpretations consistent with the results?

- How are null findings interpreted?

- Does it mention how the findings of this study relate to previous work in the area?

- Can they be generalized (external validity)?

- Does it mention their clinical implications/applicability?

- What are the results/outcomes/findings applicable to, and will they affect clinical practice?

- Does the conclusion answer the study question?

- Is the conclusion convincing?

- Does the paper indicate ethics approval?

- Can you identify potential ethical issues?

- Do the results apply to the population in which you are interested?

- Will you use the results of the study?

Once you have answered the preliminary and key questions and identified the research method used, you can incorporate specific questions related to each method into your appraisal process or checklist.

1- What is the research question?

To be of value, a study should address a significant problem in healthcare and provide new or meaningful results. A useful structure for assessing the problem addressed in the article is the Patient/Problem, Intervention, Comparison, Outcome (PICO) method [ 3 ].

P = Patient/Problem/Population:

It involves identifying whether the research has a focused question. What is the chief complaint?

E.g., disease status, previous ailments, current medications, etc.

I = Intervention: An appropriately and clearly stated management strategy, e.g., a new diagnostic test, treatment, adjunctive therapy, etc.

C = Comparison: A suitable control or alternative; it should be specific and limited to one alternative choice.

O = Outcomes: The desired results or patient-related consequences have to be identified, e.g., eliminating symptoms, improving function, esthetics, etc.
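Because PICO is essentially a four-field structure, it can help to write a question down in that form before searching or appraising. The sketch below is a hypothetical Python record (the class, its method names and the sentence template are assumptions for illustration, not part of the PICO method itself); it simply flags any element left blank, which usually signals an unfocused question.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PICOQuestion:
    """Hypothetical record for one focused clinical question."""
    population: str    # P: patient, problem or population
    intervention: str  # I: management strategy being assessed
    comparison: str    # C: one specific control or alternative
    outcome: str       # O: patient-related result of interest

    def as_sentence(self) -> str:
        # Join the four elements into a single answerable question.
        return (f"In {self.population}, does {self.intervention}, "
                f"compared with {self.comparison}, affect {self.outcome}?")

    def missing_elements(self) -> list[str]:
        # Names of any elements left blank (a sign of an unfocused question).
        return [name for name, value in vars(self).items() if not value.strip()]


q = PICOQuestion("adults with dentine hypersensitivity", "a desensitizing toothpaste",
                 "a standard fluoride toothpaste", "pain on cold stimulus")
print(q.as_sentence())
```

The example question is invented purely to show the format.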

The clinical question determines which study designs are appropriate. There are five broad categories of clinical questions, as shown in [ Table/Fig-1 ].

[Table/Fig-1]: Categories of clinical questions and the related study designs.

2- What is the study type (design)?

The study design of the research is fundamental to the usefulness of the study.

In a clinical paper the methodology employed to generate the results should be fully explained. In general, all questions about the clinical query, the study design, the subjects and the measures taken to reduce bias and confounding should be adequately and thoroughly explored and answered.

Participants/Sample Population:

Researchers identify the target population they are interested in. A sample is then drawn from that population, and the results from this sample are generalized to the target population.

The sample should be representative of the target population from which it came. Knowing the baseline characteristics of the sample is important because this allows readers to see how closely the subjects match their own patients [ 4 ].

Sample size calculation (Power calculation): A trial should be large enough to have a high chance of detecting a worthwhile effect if it exists. Statisticians can work out before the trial begins how large the sample size should be in order to have a good chance of detecting a true difference between the intervention and control groups [ 5 ].
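As an illustration of what such a calculation involves, here is a minimal sketch for the simplest case: comparing two means with equal group sizes, using the normal approximation n per group = 2 (z₁₋α/₂ + z₁₋β)² σ² / δ². The assumptions are ours: a known standard deviation σ, a two-sided test, and no allowance for dropouts. Real trials often use dedicated software and other formulas for other outcome types.

```python
import math
from statistics import NormalDist


def sample_size_per_group(delta: float, sd: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Participants needed per arm to compare two means (normal approximation).

    delta: smallest difference between groups considered worthwhile
    sd:    assumed standard deviation of the outcome in each group
    alpha: two-sided significance level
    power: desired probability of detecting a true difference of size delta
    """
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for a two-sided test
    z_beta = z.inv_cdf(power)           # quantile matching the desired power
    n = 2 * ((z_alpha + z_beta) * sd / delta) ** 2
    return math.ceil(n)                 # round up to whole participants


print(sample_size_per_group(delta=0.5, sd=1.0))  # → 63
```

Raising the power to 90% with the same inputs increases the requirement to 85 per group, which shows why an unexplained or small sample size deserves scrutiny.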

- Is the sample defined (human, or animal and which type)? What population does it represent?

- Does it mention eligibility criteria with reasons?

- Does it mention where and how the sample was recruited, selected and assessed?

- Does it mention where the study was carried out?

- Is the sample size justified and correctly calculated? Is it adequate to detect statistically and clinically significant results?

- Does it mention a suitable study design/type?

- Is the study type appropriate to the research question?

- Is the study adequately controlled? Does it mention type of randomization process? Does it mention the presence of control group or explain lack of it?

- Are the samples similar at baseline? Is sample attrition mentioned?

- Does it report the number of participants/specimens at the start of the study, together with details of how many of them completed the study and the reasons for any incomplete follow-up?

- Does it mention who was blinded? Are the assessors and participants blind to the interventions received?

- Does it state how the data were analysed?

- Are the measurements taken likely to be valid?

Researchers should use measuring techniques and instruments that have been shown to be valid and reliable.

Validity refers to the extent to which a test measures what it is supposed to measure (the extent to which the value obtained represents the object of interest).

- Soundness and effectiveness of the measuring instrument;

- What does the test measure?

- Does it measure what it is supposed to measure?

- How well and how accurately does it measure?

Reliability: In research, the term reliability means “repeatability” or “consistency”.

Reliability refers to how consistent a test is on repeated measurements. It is especially important if assessments are made on different occasions and/or by different examiners. Studies should state the method used to assess the reliability of any measurements taken and what the intra-examiner reliability was [ 6 ].
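One widely used statistic for the intra-examiner reliability of categorical scores is Cohen's kappa, which corrects the raw agreement between two scoring sessions for the agreement expected by chance. The sketch below is our own illustration; the article does not prescribe kappa, and the radiograph scores are hypothetical.

```python
from collections import Counter


def cohens_kappa(first: list, second: list) -> float:
    """Chance-corrected agreement between two sets of categorical ratings."""
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(first)
    # Observed agreement: share of items given the same score both times.
    p_observed = sum(a == b for a, b in zip(first, second)) / n
    # Agreement expected by chance, from each session's category frequencies.
    counts_1, counts_2 = Counter(first), Counter(second)
    p_expected = sum(counts_1[c] * counts_2[c] for c in counts_1) / n ** 2
    if p_expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical: one examiner scores the same six radiographs on two occasions.
session_1 = ["caries", "caries", "sound", "sound", "caries", "sound"]
session_2 = ["caries", "sound", "sound", "sound", "caries", "sound"]
print(round(cohens_kappa(session_1, session_2), 2))  # → 0.67
```

Here the examiner agrees with themself on 5 of 6 radiographs (83%), but half of that agreement would be expected by chance, so kappa is only about 0.67.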

3- Selection issues:

The following questions should be raised:

- How were subjects chosen or recruited? If not at random, are they representative of the population?

- Type of blinding (masking): single, double or triple?

- Is there a control group? How was it chosen?

- How are patients followed up? Who are the dropouts? Why did they drop out, and how many are there?

- Are the independent (predictor) and dependent (outcome) variables in the study clearly identified, defined and measured?

- Is there a statement about sample size issues or statistical power (especially important in negative studies)?

- If it is a multicenter study, what quality assurance measures were employed to obtain consistency across sites?

- Are there selection biases?

- In a case-control study (for example, one comparing exercise habits):
  - Are the controls appropriate?
  - Were the records of cases and controls reviewed blindly?
  - How were possible biases controlled (prevalence bias, admission rate bias, volunteer bias, recall bias, lead time bias, detection bias, etc.)?

- In a cross-sectional study:
  - Was the sample selected in an appropriate manner (random, convenience, etc.)?
  - Were efforts made to ensure a good response rate or to minimize missing data?
  - Were reliability (reproducibility) and validity reported?

- In an intervention study, how were subjects recruited and assigned to groups?

- In a cohort study, how many reached final follow-up?
  - Are the subjects representative of the population to which the findings are applied?
  - Is there evidence of volunteer bias? Was there adequate follow-up time?
  - What was the drop-out rate?

Any shortcoming in the methodology can lead to results that do not reflect the truth. If clinical practice is changed on the basis of such results, patients could be harmed.

Researchers employ a variety of techniques to make the methodology more robust, such as matching, restriction, randomization, and blinding [ 7 ].
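To make one of these techniques concrete, the sketch below generates a permuted-block randomization list, a common way of randomizing while keeping group sizes balanced over time. It is an illustration under our own assumptions (two arms, blocks of four, a seed for reproducibility); in practice, allocation lists are prepared and concealed by someone independent of recruitment.

```python
import random


def block_randomize(n_participants: int, block_size: int = 4,
                    arms: tuple = ("intervention", "control"),
                    seed=None) -> list:
    """Allocation list built from randomly permuted, balanced blocks."""
    if block_size % len(arms) != 0:
        raise ValueError("block size must be a multiple of the number of arms")
    rng = random.Random(seed)  # seeded generator so the list can be audited
    allocation = []
    while len(allocation) < n_participants:
        # Each block contains every arm equally often, in a random order.
        block = list(arms) * (block_size // len(arms))
        rng.shuffle(block)
        allocation.extend(block)
    return allocation[:n_participants]


allocation = block_randomize(20, seed=1)
print(allocation[:4])
```

After every complete block the arms are exactly balanced, which limits chance imbalance in small trials; a predictable fixed block size is the trade-off, which is why block sizes are sometimes varied.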

Bias is the term used to describe an error at any stage of the study that is not due to chance. Bias leads to results that deviate systematically from the truth. As bias cannot be measured, researchers need to rely on good research design to minimize it [ 8 ]. To minimize bias, the sample should be representative of the population. It is also imperative to consider the sample size and whether the study is adequately powered, that is, large enough to have a high probability of detecting a clinically worthwhile effect at the chosen significance level (usually p<0.05) if such an effect truly exists [ 9 ].

4- What are the outcome factors and how are they measured?

- Are all relevant outcomes assessed?

- Is measurement error an important source of bias?

5- What are the study factors and how are they measured?

- Are all the relevant study factors included in the study?

- Have the factors been measured using appropriate tools?

Data Analysis and Results:

- Were the tests appropriate for the data?

- Are confidence intervals or p-values given?

- How strong is the association between intervention and outcome?

- How precise is the estimate of the risk?

- Does it clearly mention the main finding(s) and does the data support them?

- Does it mention the clinical significance of the result?

- Are adverse events (or their absence) reported?

- Are all relevant outcomes assessed?

- Was the sample size adequate to detect a clinically/socially significant result?

- Are the results presented in a way to help in health policy decisions?

- Is there measurement error?

- Is measurement error an important source of bias?
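The questions on the strength of the association and the precision of the estimate can be illustrated with a risk ratio and its 95% confidence interval. The sketch below assumes a two-group study with a binary outcome, at least one event in each group, and the common log-scale approximation for the interval; the counts are hypothetical.

```python
import math
from statistics import NormalDist


def risk_ratio_ci(events_exposed: int, n_exposed: int,
                  events_unexposed: int, n_unexposed: int,
                  level: float = 0.95) -> tuple:
    """Risk ratio with a confidence interval computed on the log scale."""
    rr = (events_exposed / n_exposed) / (events_unexposed / n_unexposed)
    # Standard error of log(RR); requires at least one event in each group.
    se = math.sqrt(1 / events_exposed - 1 / n_exposed
                   + 1 / events_unexposed - 1 / n_unexposed)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # 1.96 for a 95% interval
    lower = math.exp(math.log(rr) - z * se)
    upper = math.exp(math.log(rr) + z * se)
    return rr, lower, upper


# Hypothetical: 30/200 events with the intervention, 40/200 with control.
rr, lower, upper = risk_ratio_ci(30, 200, 40, 200)
print(f"RR = {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

The point estimate (0.75) describes the strength of the association, while the wide interval, which here includes 1, shows that the estimate is imprecise.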

Confounding Factors:

A confounder is a factor that is associated with both the exposure and the outcome (a triangular relationship) but is not on the causal pathway between them. It can make it appear as if there is a direct relationship between the exposure and the outcome, or it might even mask an association that would otherwise have been present [ 9 ].
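A small simulation can show how a confounder manufactures an association. In this hypothetical set-up (all probabilities are our assumptions), a confounder Z makes both exposure X and outcome Y more likely, while X has no effect on Y at all. The crude comparison suggests a substantial effect, but it disappears once the analysis is stratified by Z.

```python
import random


def simulate(n: int = 100_000, seed: int = 42) -> list:
    """Records of (confounder, exposure, outcome) with NO exposure effect."""
    rng = random.Random(seed)
    records = []
    for _ in range(n):
        z = rng.random() < 0.5                  # confounder present?
        x = rng.random() < (0.8 if z else 0.2)  # confounder raises exposure
        y = rng.random() < (0.6 if z else 0.2)  # confounder raises outcome; x plays no part
        records.append((z, x, y))
    return records


def risk_difference(records) -> float:
    """Risk of the outcome in the exposed minus risk in the unexposed."""
    exposed = [y for _, x, y in records if x]
    unexposed = [y for _, x, y in records if not x]
    return sum(exposed) / len(exposed) - sum(unexposed) / len(unexposed)


data = simulate()
crude = risk_difference(data)  # expected value about 0.24: a spurious association
by_stratum = {z: risk_difference([r for r in data if r[0] == z]) for z in (False, True)}
print(round(crude, 2), {k: round(v, 2) for k, v in by_stratum.items()})
```

Stratification is only one way of controlling for a known confounder; matching, restriction, randomization and multivariable adjustment are others.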

6- What important potential confounders are considered?

- Are potential confounders examined and controlled for?

- Is confounding an important source of bias?

7- What is the statistical method in the study?

- Are the statistical methods described appropriate for comparing participants on primary and secondary outcomes?

- Are the statistical methods specified in sufficient detail (if I had access to the raw data, could I reproduce the analysis)?

- Were the tests appropriate for the data?

- Are confidence intervals or p-values given?

- Are results presented as absolute risk reduction as well as relative risk reduction?
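The last question matters because the same relative risk reduction can correspond to very different absolute benefits. The sketch below computes both measures from hypothetical trial counts; it uses exact fractions only to avoid rounding noise and assumes the control group has at least one event.

```python
from fractions import Fraction


def risk_reductions(events_control: int, n_control: int,
                    events_treated: int, n_treated: int) -> dict:
    """Absolute and relative risk reduction from trial event counts."""
    cer = Fraction(events_control, n_control)  # control event rate
    eer = Fraction(events_treated, n_treated)  # experimental event rate
    arr = cer - eer  # absolute risk reduction
    rrr = arr / cer  # relative risk reduction (requires cer > 0)
    return {"ARR": float(arr), "RRR": float(rrr)}


print(risk_reductions(40, 200, 30, 200))  # → {'ARR': 0.05, 'RRR': 0.25}
print(risk_reductions(4, 200, 3, 200))    # → {'ARR': 0.005, 'RRR': 0.25}
```

Both hypothetical trials report a 25% relative risk reduction, but the absolute reduction is ten times smaller when the baseline risk is low, so a paper that reports only the relative figure can overstate the benefit.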

Interpretation of p-value:

The p-value is the probability of obtaining a result at least as extreme as the one observed if the null hypothesis (no true difference or effect) were true. By convention, a p-value of less than 1 in 20 (p<0.05) is regarded as statistically significant.

- When the p-value is less than the chosen significance level, which is usually 0.05, we reject the null hypothesis and the result is considered statistically significant. Conversely, when the p-value is greater than 0.05, the result is not statistically significant and we fail to reject the null hypothesis; this does not prove that the null hypothesis is true.
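To make the decision rule concrete, here is a minimal sketch of a two-sided, pooled two-proportion z-test. The counts are hypothetical, and the normal approximation assumes reasonably large samples; other designs and outcome types need other tests.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(events_a: int, n_a: int, events_b: int, n_b: int) -> float:
    """Two-sided p-value for a difference in proportions (pooled z-test)."""
    p_a, p_b = events_a / n_a, events_b / n_b
    pooled = (events_a + events_b) / (n_a + n_b)  # common rate under the null
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Probability of a difference at least this extreme, in either direction,
    # if the null hypothesis of no true difference were true.
    return 2 * (1 - NormalDist().cdf(abs(z)))


alpha = 0.05
p = two_proportion_p_value(40, 200, 30, 200)
decision = "reject H0" if p < alpha else "fail to reject H0"
print(f"p = {p:.3f}: {decision}")
```

With these counts p is about 0.19, so the difference (20% vs 15%) is not statistically significant; that is not evidence that the two treatments are equivalent.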

Confidence interval:

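Multiple repetitions of the same trial would not yield exactly the same results every time; the results would vary around the true effect. A 95% confidence interval is constructed so that, if the trial were repeated many times, about 95% of the intervals calculated in this way would contain the true size of the effect. It therefore gives a range of plausible values for the true effect, and its width indicates how precise the estimate is.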
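The repeated-trial interpretation can be checked by simulation. The sketch below assumes normally distributed data with a known standard deviation (so a simple z interval applies); all parameter values are arbitrary. It repeats the same hypothetical trial thousands of times and counts how often the 95% interval captures the true mean.

```python
import math
import random
from statistics import NormalDist, fmean


def ci_coverage(true_mean=10.0, sd=2.0, n=50, trials=4000, level=0.95, seed=7):
    """Fraction of repeated trials whose confidence interval contains the true mean."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # 1.96 for a 95% interval
    half_width = z * sd / math.sqrt(n)         # sd assumed known (z interval)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sd) for _ in range(n)]
        m = fmean(sample)
        if m - half_width <= true_mean <= m + half_width:
            hits += 1
    return hits / trials


print(ci_coverage())  # close to 0.95, but not exactly
```

Any single interval either contains the true value or does not; the 95% describes how often the procedure succeeds over many repetitions.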

8- Statistical results:

- Do the statistical tests answer the research question?

- Were many statistical tests performed and comparisons made in search of a significant result (data searching)?

Correct statistical analysis of results is crucial to the reliability of the conclusions drawn from the research paper. Depending on the study design and the sample selection method employed, descriptive or inferential statistical analysis may be carried out on the results of the study.

It is important to identify whether this is appropriate for the study [ 9 ].

- Was the sample size adequate to detect a clinically/socially significant result?

- Are the results presented in a way to help in health policy decisions?

Clinical significance:

Statistical significance, as shown by the p-value, is not the same as clinical significance. Statistical significance judges whether treatment effects are explicable as chance findings, whereas clinical significance assesses whether treatment effects are worthwhile in real life. Small improvements that are statistically significant might not result in any meaningful clinical improvement. The following questions should always be kept in mind:

- If the results are statistically significant, do they also have clinical significance?

- If the results are not statistically significant, was the sample size sufficiently large to detect a meaningful difference or effect?
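Both questions can be illustrated side by side. In the sketch below, the "smallest worthwhile difference" is a hypothetical threshold that the reader must supply from clinical knowledge; the test is a simple two-sided z-test on a difference in means with an assumed common standard deviation, and all numbers are invented.

```python
import math
from statistics import NormalDist


def compare_means(mean_diff: float, sd: float, n_per_group: int,
                  min_important_diff: float, alpha: float = 0.05) -> dict:
    """Judge a difference in means on statistical and clinical grounds."""
    se = sd * math.sqrt(2 / n_per_group)    # standard error of the difference
    z = mean_diff / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided z-test
    return {
        "p_value": p,
        "statistically_significant": p < alpha,
        # Hypothetical threshold: the smallest change patients would value.
        "clinically_significant": abs(mean_diff) >= min_important_diff,
    }


# Hypothetical pain scores (0-100), with 5 points assumed to be worthwhile.
large_trial = compare_means(mean_diff=0.5, sd=10, n_per_group=20_000, min_important_diff=5)
small_trial = compare_means(mean_diff=6, sd=10, n_per_group=10, min_important_diff=5)
print(large_trial)
print(small_trial)
```

The very large trial finds a trivial 0.5-point difference that is highly significant statistically, while the small trial finds a worthwhile 6-point difference that is not significant, the situation in which the sample size question should be asked.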

9- What conclusions did the authors reach about the study question?

The conclusions should make only recommendations that are supported by the results obtained and that fall within the scope of the study. The authors should also discuss the limitations of the study, their effects on the outcomes, and suggestions for future studies [ 10 ].

- Are the questions posed in the study adequately addressed?

- Are the conclusions justified by the data?

- Do the authors extrapolate beyond the data?

- Are shortcomings of the study addressed and constructive suggestions given for future research?

Bibliography/References:

Do the citations follow one of the Council of Biological Editors’ (CBE) standard formats?

10- Are ethical issues considered?

If a study involves human subjects, human tissues or animals, was approval obtained from the appropriate institutional or governmental bodies [ 10 , 11 ]?

Critical appraisal of RCTs: Factors to look for:

- Allocation (randomization, stratification, confounders).

- Follow up of participants (intention to treat).

- Data collection (bias).

- Sample size (power calculation).

- Presentation of results (clear, precise).

- Applicability to local population.

[ Table/Fig-2 ] summarizes the Consolidated Standards of Reporting Trials (CONSORT) guidelines [ 12 ].

[Table/Fig-2]: Summary of the CONSORT guidelines.

Critical appraisal of systematic reviews: Systematic reviews provide an overview of all primary studies on a topic and try to obtain an overall picture of the results.

In a systematic review, all the primary studies identified are critically appraised and only the best ones are selected. A meta-analysis (i.e., a statistical analysis) of the results from selected studies may be included. Factors to look for:

- Literature search (did it include published and unpublished materials as well as non-English language studies? Was personal contact with experts sought?).

- Quality-control of studies included (type of study; scoring system used to rate studies; analysis performed by at least two experts).

- Homogeneity of studies.
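Homogeneity is often judged with Cochran's Q statistic and the derived I² value, which estimates the share of variation between study results that reflects genuine differences rather than chance. The sketch below is a minimal fixed-effect (inverse-variance) version; the effect estimates and standard errors are hypothetical, and the article itself does not specify which heterogeneity measure to use.

```python
def heterogeneity(effects: list, std_errors: list) -> dict:
    """Cochran's Q and I-squared for a set of study effect estimates."""
    weights = [1 / se ** 2 for se in std_errors]  # inverse-variance weights
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    # Q: weighted squared deviations of each study from the pooled estimate.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    # I^2: proportion of total variation attributed to between-study differences.
    i_squared = max(0.0, (q - df) / q) if q > 0 else 0.0
    return {"pooled": pooled, "Q": q, "df": df, "I2": i_squared}


# Hypothetical: three studies with equal precision but different results.
print(heterogeneity([0.1, 0.3, 0.5], [0.1, 0.1, 0.1]))
```

Here Q is 8 on 2 degrees of freedom and I² is 0.75, suggesting that most of the spread reflects real differences between studies, in which case a single pooled estimate should be interpreted with caution.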

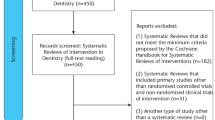

[ Table/Fig-3 ] summarizes the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [ 13 ].

[Table/Fig-3]: Summary of PRISMA guidelines.

Critical appraisal is a fundamental skill in modern practice for assessing the value of clinical research and indicating its relevance to the profession. It is a skill set, developed throughout a professional career, that makes this possible and, through integration with clinical experience and patient preference, permits the practice of evidence-based medicine and dentistry. By following a systematic approach, such evidence can be considered and applied to clinical practice.

Financial or other Competing Interests


Critical Appraisal: A Checklist

Posted on 6th September 2016 by Robert Will

Critical appraisal of scientific literature is a necessary skill for healthcare students. Students can be overwhelmed by the vastness of search results. Database searching is a skill in itself, but will not be covered in this blog. This blog assumes that you have found a relevant journal article to answer a clinical question. After selecting an article, you must be able to sit with the article and critically appraise it. Critical appraisal of a journal article is a literary and scientific systematic dissection in an attempt to assign merit to the conclusions of an article. Ideally, an article will be able to undergo scrutiny and retain its findings as valid.

The specific questions used to assess validity change slightly with different study designs and article types. However, in an attempt to provide a generalized checklist, no specific subtype of article has been chosen. Rather, the 20 questions below should be used as a quick reference to appraise any journal article. The first four checklist questions should be answered “Yes.” If any of these four questions is answered “No,” you should return to your search and attempt to find an article that meets these criteria.

Critical appraisal of…the Introduction

- Does the article attempt to answer the same question as your clinical question?

- Is the article recently published (within 5 years) or is it seminal (i.e. an earlier article but which has strongly influenced later developments)?

- Is the journal peer-reviewed?

- Do the authors present a hypothesis?
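The rule that all four introduction questions must be answered “Yes” works as a simple screening gate. A minimal sketch, in which the dictionary keys are hypothetical labels for those four questions and any unanswered question counts as “No”:

```python
# Hypothetical labels for the four screening questions above.
SCREENING_QUESTIONS = (
    "answers_my_clinical_question",
    "recent_or_seminal",
    "peer_reviewed",
    "states_hypothesis",
)


def passes_screening(answers: dict) -> bool:
    """True only if every screening question is answered 'Yes' (True)."""
    # A missing answer is treated as 'No', so the article does not pass.
    return all(answers.get(q, False) for q in SCREENING_QUESTIONS)


article = {q: True for q in SCREENING_QUESTIONS}
print(passes_screening(article))
```

An article that fails the gate sends you back to the search; only articles that pass go on to the remaining 16 questions.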

Critical appraisal of…the Methods

- Is the study design valid for your question?

- Are both inclusion and exclusion criteria described?

- Is there an attempt to limit bias in the selection of participant groups?

- Are there methodological protocols (e.g. blinding) used to limit other possible bias?

- Do the research methods limit the influence of confounding variables?

- Are the outcome measures valid for the health condition you are researching?

Critical appraisal of…the Results

- Is there a table that describes the subjects’ demographics?

- Are the baseline demographics between groups similar?

- Are the subjects generalizable to your patient?

- Are the statistical tests appropriate for the study design and clinical question?

- Are the results presented within the paper?

- Are the results statistically significant and how large is the difference between groups?

- Is there evidence of significance fishing (i.e. changing statistical tests to ensure significance)?

Critical appraisal of…the Discussion/Conclusion

- Do the authors attempt to contextualise non-significant data in an attempt to portray significance? (e.g. talking about findings which had a trend towards significance as if they were significant).

- Do the authors acknowledge limitations in the article?

- Are there any conflicts of interests noted?

This is by no means a comprehensive checklist of how to critically appraise a scientific journal article. However, by answering the previous 20 questions based on a detailed reading of an article, you can appraise most articles for their merit, and thus determine whether the results are valid. I have attempted to list the questions based on the sections most commonly present in a journal article, starting at the introduction and progressing to the conclusion. I believe some of these items should carry more weight than others (e.g. methodological questions vs journal reputation). However, without taking this list through rigorous testing, I cannot assign a weight to them. Maybe one day, you will be able to critically appraise my future paper: How Online Checklists Influence Healthcare Students’ Ability to Critically Appraise Journal Articles.


Robert Will


Hi Ella, I have found a checklist here for before and after study design: https://www.nhlbi.nih.gov/health-topics/study-quality-assessment-tools and you may also find a checklist from this blog, which has a huge number of tools listed: https://s4be.cochrane.org/blog/2018/01/12/appraising-the-appraisal/

What kind of critical appraisal tool can be used for before and after study design article? Thanks

Hello, I am currently writing a book chapter on critical appraisal skills. This chapter is limited to 1000 words so your simple 20 questions framework would be the perfect format to cite within this text. May I please have your permission to use your checklist with full acknowledgement given to you as author? Many thanks

Thank you Robert, I came across your checklist via the Royal College of Surgeons of England website; https://www.rcseng.ac.uk/library-and-publications/library/blog/dissecting-the-literature-the-importance-of-critical-appraisal/ . I really liked it and I have made reference to it for our students. I really appreciate your checklist and it is still current, thank you.

Hi Kirsten. Thank you so much for letting us know that Robert’s checklist has been used in that article – that’s so good to see. If any of your students have any comments about the blog, then do let us know. If you also note any topics that you would like to see on the website, then we can add this to the list of suggested blogs for students to write about. Thank you again. Emma.

I am really happy with it. Thank you very much.

A really useful guide for helping you ask questions about the studies you are reviewing BRAVO

Dr.Suryanujella,

Thank you for the comment. I’m glad you find it helpful.

Feel free to use the checklist. S4BE asks that you cite the page when you use it.

I have read your article and found it very useful, crisp and with all the relevant information. I would like to use it in my presentation with your permission.

That’s great thank you very much. I will definitely give that a go.

I find the MEAL writing approach very versatile. You can use it to plan the entire paper and each paragraph within the paper. There are a lot of helpful MEAL resources online. But understanding the acronym can get you started.

M-Main Idea (What are you arguing?) E-Evidence (What does the literature say?) A-Analysis (Why does the literature matter to your argument?) L-Link (Transition to next paragraph or section)

I hope that is somewhat helpful. -Robert

Hi, I am a university student at Portsmouth University, UK. I understand the premise of a critical appraisal however I am unsure how to structure an essay critically appraising a paper. Do you have any pointers to help me get started?

Thank you. I’m glad that you find this helpful.

Very informative & to the point for all medical students

How can I know what is the name of this checklist or tool?

This is a checklist that the author, Robert Will, has designed himself.

Thank you for asking. I am glad you found it helpful. As Emma said, please cite the source when you use it.

Greetings Robert, I am a postgraduate student at QMUL in the UK and I have just read this comprehensive critical appraisal checklist of yours. I really appreciate it. If I may ask, can I download it?

Please feel free to use the information from this blog – if you could please cite the source then that would be much appreciated.

Robert, thank you for your comprehensive account of critical appraisal. I have just completed a teaching module on critical appraisal as part of a four-module Evidence Based Medicine programme for undergraduate medical students at RCSI Perdana medical school in Malaysia. If you are agreeable, I would like to cite it as a reference in our module.

Anthony, Please feel free to cite my checklist. Thank you for asking. I hope that your students find it helpful. They should also browse around S4BE. There are numerous other helpful articles on this site.


Critically appraising qualitative research


- Ayelet Kuper , assistant professor 1 ,

- Lorelei Lingard , associate professor 2 ,

- Wendy Levinson , Sir John and Lady Eaton professor and chair 3

- 1 Department of Medicine, Sunnybrook Health Sciences Centre, and Wilson Centre for Research in Education, University of Toronto, 2075 Bayview Avenue, Room HG 08, Toronto, ON, Canada M4N 3M5

- 2 Department of Paediatrics and Wilson Centre for Research in Education, University of Toronto and SickKids Learning Institute; BMO Financial Group Professor in Health Professions Education Research, University Health Network, 200 Elizabeth Street, Eaton South 1-565, Toronto

- 3 Department of Medicine, Sunnybrook Health Sciences Centre

- Correspondence to: A Kuper ayelet94{at}post.harvard.edu

Six key questions will help readers to assess qualitative research

Summary points

Appraising qualitative research is different from appraising quantitative research

Qualitative research papers should show appropriate sampling, data collection, and data analysis

Transferability of qualitative research depends on context and may be enhanced by using theory

Ethics in qualitative research goes beyond review boards’ requirements to involve complex issues of confidentiality, reflexivity, and power

Over the past decade, readers of medical journals have gained skills in critically appraising studies to determine whether the results can be trusted and applied to their own practice settings. Criteria have been designed to assess studies that use quantitative methods, and these are now in common use.

In this article we offer guidance for readers on how to assess a study that uses qualitative research methods by providing six key questions to ask when reading qualitative research (box 1). However, the thorough assessment of qualitative research is an interpretive act and requires informed reflective thought rather than the simple application of a scoring system.

Box 1 Key questions to ask when reading qualitative research studies

- Was the sample used in the study appropriate to its research question?

- Were the data collected appropriately?

- Were the data analysed appropriately?

- Can I transfer the results of this study to my own setting?

- Does the study adequately address potential ethical issues, including reflexivity?

- Overall: is what the researchers did clear?

One of the critical decisions in a qualitative study is whom or what to include in the sample—whom to interview, whom to observe, what texts to analyse. An understanding that qualitative research is based in experience and in the construction of meaning, combined with the specific research question, should guide the sampling process. For example, a study of the experience of survivors of domestic violence that examined their reasons for not seeking help from healthcare providers might focus on interviewing a sample of such survivors (rather than, for example, healthcare providers, social services workers, or academics in the field). The sample should be broad enough to capture the many facets of a phenomenon, and limitations to the sample should be clearly justified. Since the answers to questions of experience and meaning also relate to people’s social affiliations (culture, religion, socioeconomic group, profession, etc), it is also important that the researcher acknowledges these contexts in the selection of a study sample.

In contrast with quantitative approaches, qualitative studies do not usually have predetermined sample sizes. Sampling stops when a thorough understanding of the phenomenon under study has been reached, an end point that is often called saturation. Researchers consider samples to be saturated when encounters (interviews, observations, etc) with new participants no longer elicit trends or themes not already raised by previous participants. Thus, to sample to saturation, data analysis has to happen while new data are still being collected. Multiple sampling methods may be used to broaden the understanding achieved in a study (box 2). These sampling issues should be clearly articulated in the methods section.
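Because analysis runs alongside data collection, some research teams keep a running log of which codes or themes each new encounter adds. The Python sketch below shows one hypothetical way to keep such a log. It flags the point at which a chosen number of consecutive encounters has added no new codes. That number, `run_length`, is an assumption and not a standard threshold. The codes are invented for the haemodialysis example in box 2. A count like this can support the researchers' interpretive judgement that saturation has been reached, but it cannot replace that judgement.

```python
def saturation_point(codes_per_encounter, run_length=3):
    """Return the 1-based number of the encounter at which `run_length`
    consecutive encounters have added no new codes, or None if that never
    happens. `run_length` is a hypothetical stopping rule, not a standard."""
    seen = set()   # every code raised so far
    run = 0        # consecutive encounters that added nothing new
    for number, codes in enumerate(codes_per_encounter, start=1):
        new_codes = set(codes) - seen
        seen |= new_codes
        run = 0 if new_codes else run + 1
        if run >= run_length:
            return number
    return None


# Hypothetical codes from successive interviews (haemodialysis example, box 2)
interviews = [
    {"cost of travel", "lost income"},
    {"lost income", "insurance gaps"},
    {"cost of travel", "medication costs"},
    {"lost income"},
    {"insurance gaps", "cost of travel"},
    {"medication costs"},
    {"lost income", "cost of travel"},
]
print(saturation_point(interviews))  # 6
```

In this invented log, interviews 4 to 6 raise nothing new, so the rule is met at interview 6. Before stopping, a real team would still ask whether the sample was broad enough, for example whether deviant cases had been sought.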

Box 2 Qualitative sampling methods for interviews and focus groups 9

Examples are for a hypothetical study of financial concerns among adult patients with chronic renal failure receiving ongoing haemodialysis in a single hospital outpatient unit.

Typical case sampling —sampling the most ordinary, usual cases of a phenomenon

The sample would include patients likely to have had typical experiences for that haemodialysis unit and patients who fit the profile of patients in the unit for factors found on literature review. Other typical cases could be found via snowball sampling (see below)

Deviant case sampling —sampling the most extreme cases of a phenomenon

The sample would include patients likely to have had different experiences of relevant aspects of haemodialysis. For example, if most patients in the unit are 60-70 years old and recently began haemodialysis for diabetic nephropathy, researchers might sample the unmarried university student in his 20s on haemodialysis since childhood, the 32 year old woman with lupus who is now trying to get pregnant, and the 90 year old who newly started haemodialysis due to an adverse reaction to radio-opaque contrast dye. Other deviant cases could be found via theoretical and/or snowball sampling (see below)

Critical case sampling —sampling cases that are predicted (based on theoretical models or previous research) to be especially information-rich and thus particularly illuminating

The nature of this sample depends on previous research. For example, if research showed that marital status was a major determinant of financial concerns for haemodialysis patients, then critical cases might include patients whose marital status changed while on haemodialysis

Maximum-variation sampling —sampling as wide a range of perspectives as possible to capture the broadest set of information and experiences

The sample would include typical, deviant, and critical cases (as above), plus any other perspectives identified

Confirming-disconfirming sampling —sampling both individuals or texts whose perspectives are likely to confirm the researcher’s developing understanding of the phenomenon under study and those whose perspectives are likely to challenge that understanding

The sample would include patients whose experiences would likely either confirm or disconfirm what the researchers had already learnt (from other patients) about financial concerns among patients in the haemodialysis unit. This could be accomplished via theoretical and/or snowball sampling (see below)

Snowball sampling —sampling participants found by asking current participants in a study to recommend others whose experiences would be relevant to the study

Current participants could be asked to provide the names of others in the unit who they thought, when asked about financial concerns, would either share their views (confirming), disagree with their views (disconfirming), have views typical of patients on their unit (typical cases), or have views different from most other patients on their unit (deviant cases)

Theoretical sampling —sampling individuals or texts whom the researchers predict (based on theoretical models or previous research) would add new perspectives to those already represented in the sample

Researchers could use their understanding of known issues for haemodialysis patients that would, in theory, relate to financial concerns to ensure that the relevant perspectives were represented in the study. For example, if, as the research progressed, it turned out that none of the patients in the sample had had to change or leave a job in order to accommodate haemodialysis scheduling, the researchers might (based on previous research) choose to intentionally sample patients who had left their jobs because of the time commitment of haemodialysis (but who could not do peritoneal dialysis) and others who had switched to jobs with more flexible scheduling because of their need for haemodialysis
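Snowball sampling, as described in box 2, spreads outwards from an initial set of participants through their recommendations, much like a breadth-first walk through a referral network. The Python sketch below illustrates that idea under simplifying assumptions. The participant labels and the `referrals` mapping are hypothetical, every invited person is assumed to take part, and recruitment stops at a fixed `max_size`. In a real study, each referral would also be weighed against the sampling purpose (typical, deviant, confirming or disconfirming cases), and recruitment would depend on each person's consent.

```python
from collections import deque


def snowball_sample(seeds, referrals, max_size):
    """Breadth-first snowball sample: recruit the seed participants, then the
    people they recommend (in the order recommended), until max_size people
    have been recruited. Each person is invited at most once."""
    sample = []
    invited = set()
    queue = deque()
    for person in seeds:
        if person not in invited:
            invited.add(person)
            queue.append(person)
    while queue and len(sample) < max_size:
        person = queue.popleft()
        sample.append(person)
        for referred in referrals.get(person, []):
            if referred not in invited:
                invited.add(referred)
                queue.append(referred)
    return sample


# Hypothetical referral network: who each participant recommended
referrals = {"P1": ["P3", "P4"], "P2": ["P4", "P5"], "P3": ["P6"], "P5": ["P1", "P7"]}
print(snowball_sample(["P1", "P2"], referrals, max_size=6))
# ['P1', 'P2', 'P3', 'P4', 'P5', 'P6']
```

Here the seeds P1 and P2 are recruited first, followed by the people they recommended, in order. P4 is invited only once, even though two participants named them.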

It is important that a qualitative study carefully describes the methods used in collecting data. The appropriateness of the method(s) selected for the specific research question should be justified, ideally with reference to the research literature. It should be clear that methods were used systematically and in an organised manner. Attention should be paid to specific methodological challenges such as the Hawthorne effect, 1 whereby the presence of an observer may influence participants’ behaviours. By using a technique called thick description, qualitative studies often aim to include enough contextual information to provide readers with a sense of what it was like to have been in the research setting.

Another technique that is often used is triangulation, with which a researcher uses multiple methods or perspectives to help produce a more comprehensive set of findings. A study can triangulate data, using different sources of data to examine a phenomenon in different contexts (for example, interviewing palliative patients who are at home, those who are in acute care hospitals, and those who are in specialist palliative care units); it can also triangulate methods, collecting different types of data (for example, interviews, focus groups, observations) to increase insight into a phenomenon.

Another common technique is the use of an iterative process, whereby concurrent data analysis is used to inform data collection. For example, concurrent analysis of an interview study about lack of adherence to medications among a particular social group might show that early participants seem to be dismissive of the efforts of their local pharmacists; the interview script might then be changed to include an exploration of this phenomenon. The iterative process constitutes a distinctive qualitative tradition, in contrast to the tradition of stable processes and measures in quantitative studies. Iterations should be explicit and justified with reference to the research question and sampling techniques so that the reader understands how data collection shaped the resulting insights.

Qualitative studies should include a clear description of a systematic form of data analysis. Many legitimate analytical approaches exist; regardless of which is used, the study should report what was done, how, and by whom. If an iterative process was used, it should be clearly delineated. If more than one researcher analysed the data (which depends on the methodology used), it should be clear how differences between analyses were negotiated. Many studies make reference to a technique called member checking, wherein the researcher shows all or part of the study’s findings to participants to determine if they are in accord with their experiences. 2 Studies may also describe an audit trail, which might include researchers’ analysis notes, minutes of researchers’ meetings, and other materials that could be used to follow the research process.

The contextual nature of qualitative research means that careful thought must be given to the potential transferability of its results to other sociocultural settings. Though the study should discuss the extent of the findings’ resonance with the published literature, 3 much of the onus of assessing transferability is left to readers, who must decide if the setting of the study is sufficiently similar for its results to be transferable to their own context. In doing so, the reader looks for resonance: the extent to which research findings have meaning for the reader.

Transferability may be helped by the study’s discussion of how its results advance theoretical understandings that are relevant to multiple situations. For example, a study of patients’ preferences in palliative care may contribute to theories of ethics and humanity in medicine, thus suggesting relevance to other clinical situations such as the informed consent exchange before treatment. We have explained elsewhere in this series the importance of theory in qualitative research, and there are many who believe that a key indicator of quality in qualitative research is its contribution to advancing theoretical understanding as well as useful knowledge. This debate continues in the literature, 4 but from a pragmatic perspective most qualitative studies in health professions journals emphasise results that relate to practice; theoretical discussions tend to be published elsewhere.

Reflexivity is particularly important within the qualitative paradigm. Reflexivity refers to recognition of the influence a researcher brings to the research process. It highlights potential power relationships between the researcher and research participants that might shape the data being collected, particularly when the researcher is a healthcare professional or educator and the participant is a patient, client, or student. 5 It also acknowledges how a researcher’s gender, ethnic background, profession, and social status influence the choices made within the study, such as the research question itself and the methods of data collection. 6 7

Research articles written in the qualitative paradigm should show evidence both of reflexive practice and of consideration of other relevant ethical issues. Ethics in qualitative research should extend beyond prescriptive guidelines and research ethics boards into a thorough exploration of the ethical consequences of collecting personal experiences and opening those experiences to public scrutiny (a detailed discussion of this problem within a research report may, however, be constrained by word limits). 8 Issues of confidentiality and anonymity can become quite complex when data constitute personal reports of experience or perception; the need to minimise harm may involve not only protection from external scrutiny but also mechanisms to mitigate potential distress to participants from sharing their personal stories.

In conclusion: is what the researchers did clear?

The qualitative paradigm includes a wide range of theoretical and methodological options, and qualitative studies must include clear descriptions of how they were conducted, including the selection of the study sample, the data collection methods, and the analysis process. The list of key questions for beginning readers to ask when reading qualitative research articles (see box 1) is intended not as a finite checklist, but rather as a beginner’s guide to a complex topic. Critical appraisal of particular qualitative articles may differ according to the theories and methodologies used, and achieving a nuanced understanding in this area is fairly complex.

Further reading

Crabtree F, Miller WL, eds. Doing qualitative research. 2nd ed. Thousand Oaks, CA: Sage, 1999.

Denzin NK, Lincoln YS, eds. Handbook of qualitative research. 2nd ed. Thousand Oaks, CA: Sage, 2000.

Finlay L, Ballinger C, eds. Qualitative research for allied health professionals: challenging choices. Chichester: Wiley, 2006.

Flick U. An introduction to qualitative research. 2nd ed. London: Sage, 2002.

Green J, Thorogood N. Qualitative methods for health research. London: Sage, 2004.

Lingard L, Kennedy TJ. Qualitative research in medical education. Edinburgh: Association for the Study of Medical Education, 2007.

Mauthner M, Birch M, Jessop J, Miller T, eds. Ethics in qualitative research. Thousand Oaks, CA: Sage, 2002.

Seale C. The quality of qualitative research. London: Sage, 1999.

Silverman D. Doing qualitative research. Thousand Oaks, CA: Sage, 2000.

Journal articles

Greenhalgh T. How to read a paper: papers that go beyond numbers. BMJ 1997;315:740-3.

Mays N, Pope C. Qualitative research: Rigour and qualitative research. BMJ 1995;311:109-12.

Mays N, Pope C. Qualitative research in health care: assessing quality in qualitative research. BMJ 2000;320:50-2.

Popay J, Rogers A, Williams G. Rationale and standards for the systematic review of qualitative literature in health services research. Qual Health Res 1998;8:341-51.

Internet resources

National Health Service Public Health Resource Unit. Critical appraisal skills programme: qualitative research appraisal tool. 2006. www.phru.nhs.uk/Doc_Links/Qualitative%20Appraisal%20Tool.pdf

Cite this as: BMJ 2008;337:a1035

- Related to doi: 10.1136/bmj.a288

- doi: 10.1136/bmj.39602.690162.47

- doi: 10.1136/bmj.a1020

- doi: 10.1136/bmj.a879

- doi: 10.1136/bmj.a949

This is the last in a series of six articles that aim to help readers to critically appraise the increasing number of qualitative research articles in clinical journals. The series editors are Ayelet Kuper and Scott Reeves.

For a definition of general terms relating to qualitative research, see the first article in this series.

Contributors: AK wrote the first draft of the article and collated comments for subsequent iterations. LL and WL made substantial contributions to the structure and content, provided examples, and gave feedback on successive drafts. AK is the guarantor.

Funding: None.

Competing interests: None declared.

Provenance and peer review: Commissioned; externally peer reviewed.

1. Holden JD. Hawthorne effects and research into professional practice. J Evaluation Clin Pract 2001;7:65-70.

2. Hammersley M, Atkinson P. Ethnography: principles in practice. 2nd ed. London: Routledge, 1995.

3. Silverman D. Doing qualitative research. Thousand Oaks, CA: Sage, 2000.

4. Mays N, Pope C. Qualitative research in health care: assessing quality in qualitative research. BMJ 2000;320:50-2.

5. Lingard L, Kennedy TJ. Qualitative research in medical education. Edinburgh: Association for the Study of Medical Education, 2007.

6. Seale C. The quality of qualitative research. London: Sage, 1999.

7. Wallerstein N. Power between evaluator and community: research relationships within New Mexico’s healthier communities. Soc Sci Med 1999;49:39-54.

8. Mauthner M, Birch M, Jessop J, Miller T, eds. Ethics in qualitative research. Thousand Oaks, CA: Sage, 2002.

9. Kuzel AJ. Sampling in qualitative inquiry. In: Crabtree F, Miller WL, eds. Doing qualitative research. 2nd ed. Thousand Oaks, CA: Sage, 1999:33-45.


- Published: 08 April 2022

How to appraise the literature: basic principles for the busy clinician - part 1: randomised controlled trials

Aslam Alkadhimi 1, Samuel Reeves 2 & Andrew T. DiBiase 3

British Dental Journal volume 232, pages 475–481 (2022)


Critical appraisal is the process of carefully, judiciously and systematically examining research to adjudicate its trustworthiness and its value and relevance in clinical practice. The first part of this two-part series will discuss the principles of critically appraising randomised controlled trials. The second part will discuss the principles of critically appraising systematic reviews and meta-analyses.

Evidence-based dentistry (EBD) is the integration of the dentist's clinical expertise, the patient's needs and preferences and the most current, clinically relevant evidence. Critical appraisal of the literature is an invaluable and indispensable skill that dentists should possess to help them deliver EBD.

This article seeks to act as a refresher and guide for generalists, specialists and the wider readership, so that they can efficiently and confidently appraise research - specifically, randomised controlled trials - that may be pertinent to their daily clinical practice.

Evidence-based dentistry is discussed.

Efficient techniques for critically appraising randomised controlled trials are described.

Important methodological and statistical considerations are explicated.



Author information

Authors and affiliations.

Senior Registrar in Orthodontics, The Royal London Hospital Barts Health NHS Trust and East Kent Hospitals University NHS Foundation Trust, London, UK

Aslam Alkadhimi

Dental Core Trainee, East Kent Hospitals University NHS Foundation Trust, UK

Samuel Reeves

Consultant Orthodontist, East Kent Hospitals University NHS Foundation Trust, UK

Andrew T. DiBiase


Contributions

Aslam Alkadhimi contributed to conceptualisation, literature search, original draft preparation and drafting and critically revising the manuscript; Samuel Reeves contributed to original draft preparation and editing; and Andrew DiBiase contributed to supervision, draft editing and critically revising the manuscript.

Corresponding author

Correspondence to Aslam Alkadhimi .

Ethics declarations

The authors declare no competing interests.

Ethical approval and consent to participate did not apply to this study.


About this article

Cite this article.

Alkadhimi, A., Reeves, S. & DiBiase, A. How to appraise the literature: basic principles for the busy clinician - part 1: randomised controlled trials. Br Dent J 232, 475–481 (2022). https://doi.org/10.1038/s41415-022-4096-y


Received: 31 January 2021

Accepted: 25 April 2021

Published: 08 April 2022

Issue Date: 08 April 2022

DOI: https://doi.org/10.1038/s41415-022-4096-y


- Volume 21, Issue 4

- How to appraise quantitative research


This article has a correction. Please see:

- Correction: How to appraise quantitative research - April 01, 2019

- Xabi Cathala 1 ,

- Calvin Moorley 2

- 1 Institute of Vocational Learning , School of Health and Social Care, London South Bank University , London , UK

- 2 Nursing Research and Diversity in Care , School of Health and Social Care, London South Bank University , London , UK

- Correspondence to Mr Xabi Cathala, Institute of Vocational Learning, School of Health and Social Care, London South Bank University London UK ; cathalax{at}lsbu.ac.uk and Dr Calvin Moorley, Nursing Research and Diversity in Care, School of Health and Social Care, London South Bank University, London SE1 0AA, UK; Moorleyc{at}lsbu.ac.uk

https://doi.org/10.1136/eb-2018-102996


Introduction

Some nurses feel that they lack the necessary skills to read a research paper and to then decide if they should implement the findings into their practice. This is particularly the case when considering the results of quantitative research, which often contains the results of statistical testing. However, nurses have a professional responsibility to critique research to improve their practice, care and patient safety. 1 This article provides a step by step guide on how to critically appraise a quantitative paper.

Title, keywords and the authors

The authors’ names may not mean much, but knowing the following will be helpful:

Their position, for example, academic, researcher or healthcare practitioner.

Their qualification, both professional, for example, a nurse or physiotherapist and academic (eg, degree, masters, doctorate).

This can indicate how the research has been conducted and the authors’ competence on the subject. Basically, do you want to read a paper on quantum physics written by a plumber?

The abstract is a summary of the article and should contain:

Introduction.

Research question/hypothesis.

Methods including sample design, tests used and the statistical analysis (of course! Remember we love numbers).

Main findings.

Conclusion.

The subheadings in the abstract will vary depending on the journal. An abstract should not usually be more than 300 words but this varies depending on specific journal requirements. If the above information is contained in the abstract, it can give you an idea about whether the study is relevant to your area of practice. However, before deciding if the results of a research paper are relevant to your practice, it is important to review the overall quality of the article. This can only be done by reading and critically appraising the entire article.

The introduction

Example: the effect of paracetamol on levels of pain.

My hypothesis is that A has an effect on B, for example, paracetamol has an effect on levels of pain.

My null hypothesis is that A has no effect on B, for example, paracetamol has no effect on pain.

My study will test the null hypothesis. If the null hypothesis is not rejected, the study has found no evidence that A has an effect on B (no evidence that paracetamol affects the level of pain); this does not prove that A has no effect. If the null hypothesis is rejected, the results support the hypothesis that A has an effect on B, that is, that paracetamol has an effect on the level of pain.
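One way to test the null hypothesis in the paracetamol example is a permutation test. If paracetamol had no effect, the "paracetamol" and "placebo" labels would be interchangeable, so the observed difference in mean pain scores can be compared with the differences produced by every possible relabelling of the pooled scores. The Python sketch below illustrates this approach under stated assumptions and is not the method of any particular study. The pain scores (0-10 scale) and the placebo comparison group are hypothetical, and the 0.05 threshold follows the convention used in this article.

```python
from fractions import Fraction
from itertools import combinations


def permutation_test(group_a, group_b):
    """Exact two-sided permutation test for a difference in means.
    Under the null hypothesis the group labels are interchangeable, so the
    observed difference is compared with every possible relabelling."""
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    total = sum(pooled)

    def mean_difference(sum_a):
        # Exact (fractional) difference in means, given the first group's sum
        return Fraction(sum_a, n_a) - Fraction(total - sum_a, n_b)

    observed = abs(mean_difference(sum(group_a)))
    as_extreme = 0
    n_splits = 0
    for indices in combinations(range(len(pooled)), n_a):
        n_splits += 1
        if abs(mean_difference(sum(pooled[i] for i in indices))) >= observed:
            as_extreme += 1
    return as_extreme / n_splits  # proportion of relabellings at least as extreme


ALPHA = 0.05
paracetamol = [2, 3, 1, 2, 3]  # hypothetical pain scores
placebo = [6, 5, 7, 6, 5]
p = permutation_test(paracetamol, placebo)
print(f"p = {p:.4f}")  # p = 0.0079
print("reject the null hypothesis" if p < ALPHA else "do not reject the null hypothesis")
```

Every paracetamol score in this invented data set is lower than every placebo score. As a result, only the observed split and its mirror image are as extreme, which gives p = 2/252 (about 0.008), so the null hypothesis would be rejected.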

Background/literature review

The literature review should include reference to recent and relevant research in the area. It should summarise what is already known about the topic and why the research study is needed and state what the study will contribute to new knowledge. 5 The literature review should be up to date, usually 5–8 years, but it will depend on the topic and sometimes it is acceptable to include older (seminal) studies.

Methodology

In quantitative studies, the data analysis varies according to the study design; descriptive, correlational and experimental studies, for example, are all analysed differently. A descriptive study describes the pattern of a topic in relation to one or more variables. 6 A correlational study examines the link (correlation) between two variables 7 and focuses on how one variable changes when another variable changes. In experimental studies, the researchers manipulate variables and observe the outcomes, 8 and participants are commonly allocated at random to different groups (randomisation) to determine the causal effect of a condition (the independent variable) on a certain outcome. This is a common method used in clinical trials.
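The strength of a correlation is often summarised with Pearson's correlation coefficient, r. Its value ranges from -1 (a perfect negative linear relationship) through 0 (no linear relationship) to +1 (a perfect positive linear relationship). The Python sketch below computes r from first principles. The paired values are hypothetical, and Pearson's r is only one of several correlation measures a study might report. A strong correlation does not, on its own, show that one variable causes the change in the other; establishing cause and effect is the purpose of the randomised, experimental design described above.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length lists of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of the same length (at least 2 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dx = [xi - mean_x for xi in x]
    dy = [yi - mean_y for yi in y]
    s_xy = sum(a * b for a, b in zip(dx, dy))  # how x and y vary together
    s_xx = sum(a * a for a in dx)              # variation in x
    s_yy = sum(b * b for b in dy)              # variation in y
    if s_xx == 0 or s_yy == 0:
        raise ValueError("r is undefined when a variable does not vary")
    return s_xy / sqrt(s_xx * s_yy)


# Hypothetical data: weekly physiotherapy sessions vs a mobility score
sessions = [1, 2, 3, 4, 5]
mobility = [2, 4, 5, 4, 5]
print(f"r = {pearson_r(sessions, mobility):.2f}")  # r = 0.77
```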

There should be sufficient detail provided in the methods section for you to replicate the study (should you want to). To enable you to do this, the following sections are normally included:

Overview and rationale for the methodology.

Participants or sample.

Data collection tools.

Methods of data analysis.

Ethical issues.

Data collection should be clearly explained and the article should discuss how this process was undertaken. Data collection should be systematic, objective, precise, repeatable, valid and reliable. Any tool (eg, a questionnaire) used for data collection should have been piloted (or pretested and/or adjusted) to ensure the quality, validity and reliability of the tool. 9 The participants (the sample) and any randomisation technique used should be identified. The sample size is central in quantitative research, as the findings should be generalisable to the wider population. 10 The data analysis can be done manually, or more complex analyses can be performed using computer software, sometimes with the advice of a statistician. The results of this analysis, such as the mode, mean, median, p value and CI, are presented numerically.
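To illustrate why sample size matters, consider a common large-sample formula for comparing the means of two equal-sized groups. The number of participants needed per group is n = 2 × (z(1 - α/2) + z(1 - β))² × σ² / δ². Here δ is the difference to be detected, σ is the common standard deviation, α is the two-sided significance level, 1 - β is the power, and z(·) is a quantile of the standard normal distribution. The Python sketch below applies this formula. The pain-score standard deviation of 2 points and the target difference of 1 point are hypothetical. Real trials usually refine the estimate (for example, by using the t distribution) and recruit extra participants to allow for dropout.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(sd, difference, alpha=0.05, power=0.80):
    """Approximate participants needed per group to detect `difference`
    between two group means (two-sided test, equal group sizes,
    normal approximation)."""
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)           # about 0.84 for 80% power
    n = 2 * (z_alpha + z_beta) ** 2 * sd ** 2 / difference ** 2
    return ceil(n)                      # round up to whole participants


print(n_per_group(sd=2, difference=1))  # 63
```

Halving the difference to be detected roughly quadruples the required sample. This is one reason a small study may fail to show a difference that really exists.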

The author(s) should present the results clearly. These may be presented in graphs, charts or tables alongside some text. You should perform your own critique of the data analysis process; just because a paper has been published, it does not mean it is perfect. Your findings may be different from the author’s. Through critical analysis the reader may find an error in the study process that the authors have not seen or highlighted. These errors can change the study result or turn a study you thought was strong into a weak one. To help you critique a quantitative research paper, some guidance on understanding statistical terminology is provided in table 1.


Some basic guidance for understanding statistics

Quantitative studies examine the relationship between variables, and the p value helps to judge whether an observed relationship could plausibly be due to chance. 11 It is the probability of obtaining a result at least as extreme as the one observed if the null hypothesis were true. If the p value is less than 0.05, the null hypothesis is rejected, the hypothesis is supported and the study will say there is a significant difference. If the p value is 0.05 or more, the null hypothesis is not rejected and the study will say there is no significant difference; this is not proof that the null hypothesis is true. As a general rule, a p value of less than 0.05 supports the hypothesis, and a p value of 0.05 or more does not.

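The CI (confidence interval) is a range of values around the study's estimate that indicates how precisely the effect has been measured. It is reported with a confidence level, usually written as a per cent. 12 The confidence level is normally chosen to match the significance threshold: with a threshold of p=0.05, the confidence level is 1–0.05=0.95=95%. If a 95% CI for a difference between groups excludes the value that means no effect (0 for a difference in means, or 1 for a ratio such as a risk ratio), the result is statistically significant at the 0.05 level. If it includes that value, the result is not statistically significant. A narrow CI indicates a more precise estimate than a wide one. Together, the p value and CI highlight the confidence and robustness of a result.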
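The link between the 95% CI and the 0.05 threshold can be checked directly. The Python sketch below computes three quantities for two hypothetical groups of pain scores: the difference in means, its 95% CI and the two-sided p value. It assumes a large-sample normal approximation; with groups as small as these, a study would normally use the t distribution instead. Because the CI and the p value are built from the same standard error, the CI excludes 0 exactly when p < 0.05, apart from rounding at the boundary.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def diff_ci_and_p(group_a, group_b, confidence=0.95):
    """Difference in means (a - b), its confidence interval and a two-sided
    p value, using a large-sample normal approximation."""
    diff = mean(group_a) - mean(group_b)
    # Standard error of the difference between two independent means
    se = sqrt(stdev(group_a) ** 2 / len(group_a) + stdev(group_b) ** 2 / len(group_b))
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    ci = (diff - z_crit * se, diff + z_crit * se)
    p = 2 * (1 - NormalDist().cdf(abs(diff / se)))
    return diff, ci, p


paracetamol = [2, 3, 1, 2, 3]  # hypothetical pain scores (0-10)
placebo = [6, 5, 7, 6, 5]
diff, (low, high), p = diff_ci_and_p(paracetamol, placebo)
excludes_zero = low > 0 or high < 0
print(f"difference = {diff:.1f}, 95% CI {low:.2f} to {high:.2f}, p = {p:.2g}")
print("CI excludes 0:", excludes_zero, "| p < 0.05:", p < 0.05)
```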

Discussion, recommendations and conclusion

The final section of the paper is where the authors discuss their results and link them to other literature in the area (some of which may have been included in the literature review at the start of the paper). This reminds the reader of what is already known, what the study has found and what new information it adds. The discussion should demonstrate how the authors interpreted their results and how they contribute to new knowledge in the area. Implications for practice and future research should also be highlighted in this section of the paper.

A few other areas you may find helpful are:

Limitations of the study.

Conflicts of interest.

Table 2 provides a useful tool to help you apply the learning in this paper to the critiquing of quantitative research papers.

Quantitative paper appraisal checklist

- 1. Nursing and Midwifery Council, 2015. The code: standard of conduct, performance and ethics for nurses and midwives. https://www.nmc.org.uk/globalassets/sitedocuments/nmc-publications/nmc-code.pdf (accessed 21.8.18).

- Gerrish K, Moorley C, Tunariu A, et al.

- Shorten A.

Competing interests None declared.

Patient consent Not required.

Provenance and peer review Commissioned; internally peer reviewed.

Correction notice This article has been updated since its original publication to update p values from 0.5 to 0.05 throughout.

Linked Articles

- Miscellaneous Correction: How to appraise quantitative research BMJ Publishing Group Ltd and RCN Publishing Company Ltd Evidence-Based Nursing 2019; 22 62-62 Published Online First: 31 Jan 2019. doi: 10.1136/eb-2018-102996corr1


How To Write a Critical Appraisal

A critical appraisal is an academic approach that refers to the systematic identification of strengths and weaknesses of a research article with the intent of evaluating the usefulness and validity of the work’s research findings. As with all essays, you need to be clear, concise and logical in your presentation of arguments, analysis and evaluation. However, a critical appraisal has some specific sections that need to be considered and that will form the main basis of your work.

Structure of a Critical Appraisal

Introduction

Your introduction should introduce the work to be appraised and explain how you intend to proceed. In other words, you set out how you will assess the article and the criteria you will use. Focusing your introduction on these areas will ensure that your readers understand your purpose and are interested in reading on. It should be clear that you are undertaking a scientific and literary dissection of the work to assess its validity and credibility, and this should be expressed in an engaging way.

Body of the Work

The body of the work should be separated into clear paragraphs that cover each section of the work, with sub-sections for each point being covered. In all paragraphs, your perspectives should be backed up with hard evidence from credible sources (fully cited and referenced at the end) rather than expressed as opinion or personal point of view. Remember that this is a critical appraisal, not merely a presentation of the negative parts of the work.

When appraising the introduction of the article, ask yourself whether the article answers the main question it poses. Alongside this, look at the date of publication: generally, you want works to be from within the past 5 years, unless they are seminal works that have strongly influenced subsequent developments in the field. Identify whether the journal in which the article was published is peer reviewed and, importantly, whether a hypothesis has been presented. Be objective, concise, and coherent in your presentation of this information.

Once you have appraised the introduction, you can move on to the methods (or the body of the text if the work is not of a scientific or experimental nature). To appraise the methods effectively, you need to examine whether the approaches used to draw conclusions (i.e., the methodology) are appropriate for the research question or overall topic. If not, indicate why not in your appraisal, with evidence to back up your reasoning. Examine the sample population (if there is one) or the data gathered, and evaluate whether it is appropriate, sufficient, and viable, before considering the data collection methods and survey instruments used. Are they fit for purpose? Do they meet the needs of the paper? Again, your arguments should be backed up by strong, viable sources that have credible foundations and origins.

One of the most significant areas of appraisal is the results and conclusions presented by the authors of the work. For the results, you need to identify whether facts and figures are presented to confirm the findings. You should also assess whether any statistical tests used are viable, reliable, and appropriate to the work conducted, and whether they have been clearly introduced and explained. You also need to present evidence that the authors have been unbiased and objective in presenting their results or, if they have not, evidence of how they have been biased. In this section you should also dissect the results, checking whether any reported statistical significance is accurate and whether the results presented and discussed align with the tables and figures.

The final element of the body text is the appraisal of the discussion and conclusion sections. Here you need to identify whether the authors have drawn realistic conclusions from the available data, whether they have identified any clear limitations to their work, and whether their conclusions are the same as those you would have drawn had you been presented with the findings.

The conclusion of the appraisal should not introduce any new information. It should be a concise summing up of the key points identified in the body text, a condensation (or précis) of all that you have already written. The aim is to bring the whole paper together and to state an opinion (based on the evidence evaluated) of how valid and reliable the appraised paper can be considered to be in the subject area. In all cases, you should reference and cite all sources used. To help you achieve a first-class critical appraisal, we have put together some key phrases that can help lift your work above that of others.

Key Phrases for a Critical Appraisal

- Whilst the title might suggest…

- The focus of the work appears to be…

- The author challenges the notion that…

- The author makes the claim that…

- The article makes a strong contribution through…

- The approach provides the opportunity to…

- The authors consider…

- The argument is not entirely convincing because…

- However, whilst it can be agreed that… it should also be noted that…

- Several crucial questions are left unanswered…

- It would have been more appropriate to have stated that…

- This framework extends and increases…

- The authors correctly conclude that…

- The authors’ efforts can be considered as…

- Less convincing is the generalisation that…

- This appears to mislead readers indicating that…

- This research proves to be timely and particularly significant in the light of…

