Speech recognition, also known as automatic speech recognition (ASR), computer speech recognition or speech-to-text, is a capability that enables a program to process human speech into a written format.

While speech recognition is commonly confused with voice recognition, speech recognition focuses on the translation of speech from a verbal format to a text one whereas voice recognition just seeks to identify an individual user’s voice.

IBM has had a prominent role within speech recognition since its inception, releasing “Shoebox” in 1962. This machine could recognize 16 different words, advancing the initial work from Bell Labs in the 1950s. IBM continued to innovate over the years, launching the VoiceType Simply Speaking application in 1996. This speech recognition software had a 42,000-word vocabulary, supported English and Spanish, and included a spelling dictionary of 100,000 words.

While speech technology had a limited vocabulary in the early days, it is used in a wide range of industries today, such as automotive, technology, and healthcare. Its adoption has only continued to accelerate in recent years due to advancements in deep learning and big data. Market research estimates that this market will be worth USD 24.9 billion by 2025.

Many speech recognition applications and devices are available, but the more advanced solutions use AI and machine learning. They integrate grammar, syntax, structure, and composition of audio and voice signals to understand and process human speech. Ideally, they learn as they go, evolving responses with each interaction.

The best kind of systems also allow organizations to customize and adapt the technology to their specific requirements — everything from language and nuances of speech to brand recognition. For example:

- Language weighting: Improve precision by weighting specific words that are spoken frequently (such as product names or industry jargon), beyond terms already in the base vocabulary.

- Speaker labeling: Output a transcription that cites or tags each speaker’s contributions to a multi-participant conversation.

- Acoustics training: Attend to the acoustical side of the business. Train the system to adapt to an acoustic environment (like the ambient noise in a call center) and speaker styles (like voice pitch, volume and pace).

- Profanity filtering: Use filters to identify certain words or phrases and sanitize speech output.
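As a rough illustration of profanity filtering, the sketch below masks blocklisted words in a finished transcript with a regular expression. The word list, the `filter_profanity` name, and the choice to keep each word's first letter are assumptions for this example, not how any particular product implements the feature.

```python
import re

# Hypothetical blocklist for illustration; a real system would use a curated,
# language-specific list and may also handle multi-word phrases.
BLOCKLIST = {"darn", "heck"}


def filter_profanity(transcript: str, blocklist=BLOCKLIST, mask_char: str = "*") -> str:
    """Mask blocklisted words in a transcript, keeping each word's first letter."""
    if not blocklist:
        return transcript
    # Longest entries first so phrases win over their sub-words;
    # \b word boundaries stop "heck" from matching inside "checker".
    alternatives = "|".join(re.escape(w) for w in sorted(blocklist, key=len, reverse=True))
    pattern = re.compile(r"\b(?:" + alternatives + r")\b", re.IGNORECASE)

    def mask(match: re.Match) -> str:
        word = match.group(0)
        return word[0] + mask_char * (len(word) - 1)

    return pattern.sub(mask, transcript)


print(filter_profanity("Well, heck, the checker said darn it"))
# Well, h***, the checker said d*** it
```

Filtering the text output after recognition keeps the recognizer itself unchanged; the blocklist can then be tuned per customer.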

Meanwhile, speech recognition continues to advance. Companies like IBM are making inroads in several areas to improve human and machine interaction.

The vagaries of human speech have made development challenging. It’s considered to be one of the most complex areas of computer science – involving linguistics, mathematics and statistics. Speech recognizers are made up of a few components, such as the speech input, feature extraction, feature vectors, a decoder, and a word output. The decoder leverages acoustic models, a pronunciation dictionary, and language models to determine the appropriate output.
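The decoder's job of weighing acoustic evidence against a language model can be sketched as rescoring a few candidate transcripts. This is a simplified illustration, not a full decoder: it skips the search over the pronunciation dictionary, and every score and probability below is a made-up number chosen to show how a language model can overturn a slightly better acoustic match (the classic "recognize speech" vs. "wreck a nice beach" confusion).

```python
import math

# Made-up acoustic-model scores: log P(audio | words) for two candidate transcripts.
ACOUSTIC_LOGP = {
    "recognize speech": -12.0,
    "wreck a nice beach": -11.5,  # slightly better acoustic match
}

# Made-up bigram language model: P(word | previous word); "<s>" marks the sentence start.
BIGRAM_P = {
    ("<s>", "recognize"): 0.01,
    ("recognize", "speech"): 0.2,
    ("<s>", "wreck"): 0.001,
    ("wreck", "a"): 0.05,
    ("a", "nice"): 0.01,
    ("nice", "beach"): 0.02,
}


def lm_logp(sentence: str, floor: float = 1e-6) -> float:
    """Log-probability of a word sequence under the toy bigram model."""
    words = ["<s>"] + sentence.split()
    # Unseen word pairs get a small floor probability instead of zero
    return sum(math.log(BIGRAM_P.get(pair, floor)) for pair in zip(words, words[1:]))


def decode(candidates, lm_weight: float = 1.0) -> str:
    """Pick the candidate maximizing acoustic log-likelihood + weighted LM log-probability."""
    return max(candidates, key=lambda s: ACOUSTIC_LOGP[s] + lm_weight * lm_logp(s))


candidates = list(ACOUSTIC_LOGP)
print(decode(candidates))                 # recognize speech
print(decode(candidates, lm_weight=0.0))  # wreck a nice beach (acoustics alone)
```

The `lm_weight` factor is a hypothetical knob here; its value is arbitrary and would normally be tuned on held-out data.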

Speech recognition technology is evaluated on its accuracy rate, i.e. word error rate (WER), and speed. A number of factors can impact word error rate, such as pronunciation, accent, pitch, volume, and background noise. Reaching human parity, meaning an error rate on par with that of two humans speaking, has long been the goal of speech recognition systems. Research from Lippmann estimates the human word error rate to be around 4 percent, but it has been difficult to replicate the results from this paper.
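Word error rate is usually computed by aligning the recognized words against a reference transcript with a word-level edit distance (substitutions, deletions, and insertions), then dividing by the number of reference words. A minimal sketch, assuming whitespace tokenization and no normalization of case or punctuation:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # d[i][j] = minimum edits turning the first i reference words into the first j hypothesis words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution (or match if cost == 0)
            )
    return d[len(ref)][len(hyp)] / len(ref)


# One substitution ("the" -> "a") and one insertion ("now") over 4 reference words
print(word_error_rate("please order the pizza", "please order a pizza now"))  # 0.5
```

Note that WER can exceed 1.0 when the recognizer inserts many extra words.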

Various algorithms and computation techniques are used to convert speech into text and improve the accuracy of transcription. Below are brief explanations of some of the most commonly used methods:

- Natural language processing (NLP): While NLP isn’t necessarily a specific algorithm used in speech recognition, it is the area of artificial intelligence that focuses on the interaction between humans and machines through language, whether spoken or written. Many mobile devices incorporate speech recognition into their systems to conduct voice search (e.g. Siri) or to provide more accessibility around texting.

- Hidden Markov models (HMM): Hidden Markov models build on the Markov chain model, which stipulates that the probability of the next state depends only on the current state, not on prior states. While a Markov chain model is useful for observable events, such as text inputs, hidden Markov models allow us to incorporate hidden events, such as part-of-speech tags, into a probabilistic model. They are used as sequence models within speech recognition, assigning labels to each unit (words, syllables, sentences, etc.) in the sequence. These labels create a mapping with the provided input, allowing the model to determine the most likely label sequence.

- N-grams: This is the simplest type of language model (LM), which assigns probabilities to sentences or phrases. An N-gram is a sequence of N words. For example, “order the pizza” is a trigram or 3-gram and “please order the pizza” is a 4-gram. Grammar and the probabilities of certain word sequences are used to improve recognition and accuracy.

- Neural networks: Primarily leveraged for deep learning algorithms, neural networks process training data by mimicking the interconnectivity of the human brain through layers of nodes. Each node is made up of inputs, weights, a bias (or threshold) and an output. If that output value exceeds a given threshold, it “fires” or activates the node, passing data to the next layer in the network. Neural networks learn this mapping function through supervised learning, adjusting based on the loss function through the process of gradient descent. While neural networks tend to be more accurate and can accept more data, this comes at a performance efficiency cost as they tend to be slower to train compared to traditional language models.

- Speaker Diarization (SD): Speaker diarization algorithms identify and segment speech by speaker identity. This helps programs better distinguish individuals in a conversation and is frequently applied at call centers distinguishing customers and sales agents.
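To make the N-gram idea concrete, here is a minimal bigram (N = 2) model estimated by counting word pairs in a tiny made-up corpus. It uses plain maximum-likelihood estimates with no smoothing, so any unseen word pair drives a sentence's probability to zero; real language models add smoothing or back-off for exactly that reason.

```python
from collections import Counter


def train_bigram_lm(sentences):
    """Return P(word | prev) estimated by maximum likelihood from a list of sentences."""
    history_counts = Counter()
    pair_counts = Counter()
    for sentence in sentences:
        # <s> and </s> mark sentence boundaries so first/last words get probabilities too
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        history_counts.update(words[:-1])
        pair_counts.update(zip(words, words[1:]))

    def prob(prev: str, word: str) -> float:
        if history_counts[prev] == 0:
            return 0.0
        return pair_counts[(prev, word)] / history_counts[prev]

    return prob


def sentence_prob(prob, sentence: str) -> float:
    """Multiply bigram probabilities along the sentence (chain rule + Markov assumption)."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    p = 1.0
    for prev, word in zip(words, words[1:]):
        p *= prob(prev, word)
    return p


corpus = ["please order the pizza", "order the pizza", "order the salad"]
prob = train_bigram_lm(corpus)
print(sentence_prob(prob, "order the pizza"))  # 2/3 * 1 * 2/3 * 1, about 0.444
print(sentence_prob(prob, "order pizza the"))  # 0.0: "order pizza" never occurs
```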

A wide range of industries are using different applications of speech technology today, helping businesses and consumers save time and even lives. Some examples include:

Automotive: Speech recognizers improve driver safety by enabling voice-activated navigation systems and search capabilities in car radios.

Technology: Virtual agents are increasingly becoming integrated within our daily lives, particularly on our mobile devices. We use voice commands to access them through our smartphones, such as through Google Assistant or Apple’s Siri, for tasks, such as voice search, or through our speakers, via Amazon’s Alexa or Microsoft’s Cortana, to play music. They’ll only continue to integrate into the everyday products that we use, fueling the “Internet of Things” movement.

Healthcare: Doctors and nurses leverage dictation applications to capture and log patient diagnoses and treatment notes.

Sales: Speech recognition technology has a couple of applications in sales. It can help a call center transcribe thousands of phone calls between customers and agents to identify common call patterns and issues. AI chatbots can also talk to people via a webpage, answering common queries and solving basic requests without needing to wait for a contact center agent to be available. In both instances, speech recognition systems help reduce time to resolution for consumer issues.

Security: As technology integrates into our daily lives, security protocols are an increasing priority. Voice-based authentication adds a viable level of security.

May 17, 2023

7 Real-World Examples of Voice Recognition Technology

Speechmatics’ Autonomous Speech Recognition shows how powerful speech-to-text can be. Speech recognition’s versatility makes it a vital tool in the 21st century.

Speechmatics Team

Speech recognition technology is the hub of millions of homes worldwide – devices that listen to your voice and carry out a subsequent command. You may think that technology doesn’t extend much further, but you might want to grab a ladder – this hole is a deep one.

The technology within speech recognition software goes beyond what most of us know. Speech-to-text, such as Speechmatics’ Autonomous Speech Recognition (ASR), stretches its influence across society. This article will dive into seven examples of speech recognition and areas where speech-to-text technology makes a valuable difference.

1) Doctor’s Virtual Assistant

Despite having vastly different healthcare systems, both the US and the UK suffer from extended wait times. It’s clear that hospitals around the world would benefit from anything that saves them time.

If doctors have easy access to speech-to-text technology, they can shorten the average appointment by converting their notes from speech to text instead of transcribing them by hand. The less time a doctor spends typing their notes, the more patients they can see during a day.

Furthermore, effective speech recognition systems such as our world-leading ASR cut out the middleman more frequently. Instead of waiting for a human operative, many medical institutions use speech recognition to help you identify your symptoms and whether you need a doctor.

There is, however, a concern with the information speech-to-text software would ingest – it would likely need to be validated by recognized medical institutions from a data security perspective.

Despite this, speech-to-text in healthcare seems like a no-brainer. When you save time, you save lives.

2) Autonomous Bank Deposits

According to a survey from PwC, 32% of customers will ditch a brand they love after a single negative experience. Good customer service is vital to keeping customers and enticing new ones.

Banks often struggle with customer service, as customers get bounced from employee to manager, explaining the same details repeatedly. This is where speech-to-text software comes into play. As we move further into the 2020s, banks are adapting their services to the technology available.

There are numerous instances of major banks using speech-to-text technology. The Royal Bank of Canada, for example, lets customers pay bills using voice commands. USAA offers members access to information about account balances, transactions, and spending patterns through Amazon’s Alexa. U.S. Bank’s Smart Assistant provides tips and insights to help customers with their money. Tools like these help banks reduce the need for human employees where possible.

3) Personalizing Adverts

“My phone keeps listening to me!” seems to pop up in modern conversation more and more these days.

What may seem like spyware is in fact speech-to-text technology collecting your data. Your devices listen for accents, speech patterns, and specific vocabulary used to find a consumer’s age, location, and other information. The software then collates that data into keywords which are then fed to you in the form of personalized ads.

While tracking your search history is vital for marketers, speech-to-text offers a more thorough behavior assessment. Text is often quite limited: you say what you need to say in as few words as possible. Speaking is more fluid and offers a better glimpse into your behavior, so by capturing that, marketers can tailor ads more closely to your needs.

4) Making Our Home Lives Easier

According to Statista, over 5 billion people will use voice-activated search in 2021, with predicted numbers reaching 6.4 billion in 2022. In addition, 30% of voice-assistant customers say they bought the software to control their homes.

In essence, people use speech recognition technology to make their lives easier. It's 2022, why should we trek over to the light switch to turn it on?

The pandemic pushed speech-to-text technology to greater heights, as people ordered shopping through Alexa, Siri, and co more often. Life is becoming as automated as possible.

5) Hands-free Playlist Shuffling

Take a seat in most modern cars and you’ll see ‘Apple CarPlay’ appear on the center console. This allows you to answer and make phone calls, change songs, send messages, and get directions without taking your hands off the steering wheel.

Not only do these features dramatically increase road safety, but they also make the driving experience more comfortable. You don’t need to queue fifty songs in a row and print off directions to your destination. Instead, speech recognition hears your request to send a text message, transcribes it, and sends it.

None of that would be possible without technology like speech-to-text.

6) Productivity Manager

COVID-19 changed the workplace forever. Offices have adapted since 2020, with many adopting a hybrid approach to working. Speechmatics is no different. Many of our employees work remotely, some work in our head office, and others started using our newly rented WeWork office spaces.

Organizations need to stay modern, or risk being left behind. Speech-to-text technology helps maintain productivity and efficiency no matter where employees are based. Microsoft Teams and Zoom are now office essentials. Emails and documents are transcribed without typing, saving time and hassle.

Meeting minutes are recorded and transcribed so absent workers can catch up. All of this allows for a more forgiving environment where employees can claim back some agency.

7) Giving Air Force Pilots Less to Think About

Fighter planes are the technological pinnacle of most nations’ weapons arsenals. The RAF’s Eurofighter Typhoon, for example, is one of the most feared jets on the planet. A large part of its operating system is controlled using speech recognition software. The pilot creates a template used for an array of cockpit functions, lightening their workload.

Step back onto the ground and speech-to-text technology is still just as prevalent. Speech recognition helps soldiers access vital mission information, consult maps, and transmit messages in the heat of battle.

Step back even further into government and speech recognition is everywhere. Departments often use it in place of a human operative, saving labor and money.

Speech Recognition Is Everywhere

In this day and age, you’ll be hard-pressed to find an area of your life not influenced by speech recognition technology. The scale is colossal: while you tell Apple CarPlay to reply to your partner’s message, a doctor is sifting through their transcribed notes, and a fighter pilot is telling their plane to lock onto a target.

Of course, there are still many challenges – the technology is far from perfect – but the benefits are there for all to see. We at Speechmatics will continue to ensure the world reaps ASR’s potential rewards.

Speech Recognition: Everything You Need to Know in 2024

Speech recognition, also known as automatic speech recognition (ASR), enables seamless communication between humans and machines. This technology empowers organizations to transform human speech into written text. Speech recognition technology can revolutionize many business applications, including customer service, healthcare, finance and sales.

In this comprehensive guide, we will explain speech recognition, exploring how it works, the algorithms involved, and use cases across various industries.

What is speech recognition?

Speech recognition, also known as automatic speech recognition (ASR), speech-to-text (STT), and computer speech recognition, is a technology that enables a computer to recognize and convert spoken language into text.

Speech recognition technology uses AI and machine learning models to accurately identify and transcribe different accents, dialects, and speech patterns.

What are the features of speech recognition systems?

Speech recognition systems have several components that work together to understand and process human speech. Key features of effective speech recognition are:

- Audio preprocessing: After you have obtained the raw audio signal from an input device, you need to preprocess it to improve the quality of the speech input. The main goal of audio preprocessing is to capture relevant speech data by removing any unwanted artifacts and reducing noise.

- Feature extraction: This stage converts the preprocessed audio signal into a more informative representation. This makes raw audio data more manageable for machine learning models in speech recognition systems.

- Language model weighting: Language weighting gives more weight to certain words and phrases, such as product references, in audio and voice signals. This makes those keywords more likely to be recognized in subsequent speech by speech recognition systems.

- Acoustic modeling: Acoustic modeling enables speech recognizers to capture and distinguish phonetic units within a speech signal. Acoustic models are trained on large datasets containing speech samples from a diverse set of speakers with different accents, speaking styles, and backgrounds.

- Speaker labeling: It enables speech recognition applications to determine the identities of multiple speakers in an audio recording. It assigns unique labels to each speaker in an audio recording, allowing the identification of which speaker was speaking at any given time.

- Profanity filtering: The process of removing offensive, inappropriate, or explicit words or phrases from audio data.
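The first two stages, preprocessing and feature extraction, can be sketched in plain Python: a pre-emphasis filter, 25 ms frames with a 10 ms hop, a Hamming window, and one log-energy value per frame. These settings are common textbook defaults assumed here, and log energy is a deliberately crude stand-in for the richer features (such as MFCCs or log-mel filterbanks) that production systems compute.

```python
import math


def pre_emphasis(signal, alpha=0.97):
    """y[n] = x[n] - alpha * x[n-1]; boosts high frequencies relative to low ones."""
    if not signal:
        return []
    return [signal[0]] + [signal[n] - alpha * signal[n - 1] for n in range(1, len(signal))]


def frame_signal(signal, frame_len, hop):
    """Split into overlapping frames; trailing samples that don't fill a frame are dropped."""
    return [signal[s:s + frame_len] for s in range(0, len(signal) - frame_len + 1, hop)]


def hamming(frame):
    """Taper frame edges to reduce spectral leakage in later frequency analysis."""
    n = len(frame)
    if n < 2:
        return list(frame)
    return [x * (0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1))) for i, x in enumerate(frame)]


def log_energy_features(signal, sample_rate=16000, frame_ms=25, hop_ms=10):
    """One log-energy value per windowed frame (a toy one-dimensional feature)."""
    frame_len = int(sample_rate * frame_ms / 1000)  # 400 samples at 16 kHz
    hop = int(sample_rate * hop_ms / 1000)          # 160 samples at 16 kHz
    frames = frame_signal(pre_emphasis(signal), frame_len, hop)
    # Small epsilon avoids log(0) on silent frames
    return [math.log(sum(x * x for x in hamming(f)) + 1e-10) for f in frames]


# One second of a 440 Hz tone sampled at 16 kHz
tone = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
features = log_energy_features(tone)
print(len(features))  # 98 frames
```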

What are the different speech recognition algorithms?

Speech recognition uses various algorithms and computation techniques to convert spoken language into written language. The following are some of the most commonly used speech recognition methods:

- Hidden Markov Models (HMMs): Hidden Markov model is a statistical Markov model commonly used in traditional speech recognition systems. HMMs capture the relationship between the acoustic features and model the temporal dynamics of speech signals.

- Estimate the probability of word sequences in the recognized text

- Convert colloquial expressions and abbreviations in a spoken language into a standard written form

- Map phonetic units obtained from acoustic models to their corresponding words in the target language.

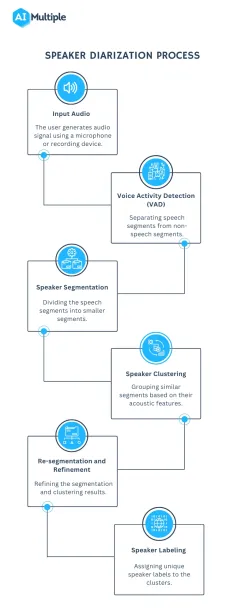

- Speaker Diarization (SD): Speaker diarization, or speaker labeling, is the process of identifying and attributing speech segments to their respective speakers (Figure 1). It allows for speaker-specific voice recognition and the identification of individuals in a conversation.

Figure 1: A flowchart illustrating the speaker diarization process

- Dynamic Time Warping (DTW): Speech recognition algorithms use Dynamic Time Warping (DTW) algorithm to find an optimal alignment between two sequences (Figure 2).

Figure 2: A speech recognizer using dynamic time warping to determine the optimal distance between elements

- Deep neural networks: Neural networks process and transform input data by simulating the non-linear frequency perception of the human auditory system.

- Connectionist Temporal Classification (CTC): CTC is a training objective introduced by Alex Graves in 2006. It is especially useful for sequence labeling tasks and end-to-end speech recognition systems. It allows the neural network to discover the relationship between input frames and output labels and to align the two.
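Dynamic time warping is straightforward to sketch with dynamic programming: fill a cost table where each cell holds the cheapest alignment of two prefixes, allowing one sequence to stretch or compress in time relative to the other. The version below compares one-dimensional sequences with absolute difference as the local distance, which is a simplifying assumption; DTW-based recognizers typically compare multi-dimensional feature vectors. The example sequences are made up.

```python
def dtw_distance(a, b):
    """Minimum cumulative distance over all monotonic alignments of sequences a and b."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best alignment cost of a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances while b stays
                cost[i][j - 1],      # b advances while a stays
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


template = [1, 2, 3, 4, 3, 2]          # e.g. a stored word template
slow = [1, 1, 2, 2, 3, 3, 4, 4, 3, 2]  # same shape, spoken more slowly
other = [4, 4, 3, 1, 1, 1]             # a different shape
print(dtw_distance(template, slow))   # 0.0: the time-stretched version aligns perfectly
print(dtw_distance(template, other))  # positive: the shapes differ
```

A template-matching recognizer would compute this distance against every stored word template and pick the closest one.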

Speech recognition vs voice recognition

Speech recognition is commonly confused with voice recognition, yet they refer to distinct concepts. Speech recognition converts spoken words into written text, focusing on identifying the words and sentences spoken by a user, regardless of the speaker’s identity.

On the other hand, voice recognition is concerned with recognizing or verifying a speaker’s voice, aiming to determine the identity of an unknown speaker rather than focusing on understanding the content of the speech.

What are the challenges of speech recognition, and how can they be addressed?

While speech recognition technology offers many benefits, it still faces a number of challenges that need to be addressed. Some of the main limitations of speech recognition include:

Acoustic Challenges:

- Assume a speech recognition model has been primarily trained on American English accents. If a speaker with a strong Scottish accent uses the system, they may encounter difficulties due to pronunciation differences. For example, the word “water” is pronounced differently in the two accents. If the system is not familiar with the Scottish pronunciation, it may struggle to recognize the word “water.”

Solution: Addressing these challenges is crucial to enhancing speech recognition applications’ accuracy. To overcome pronunciation variations, it is essential to expand the training data to include samples from speakers with diverse accents. This approach helps the system recognize and understand a broader range of speech patterns.

- For instance, you can use data augmentation techniques to reduce the impact of noise on audio data. Data augmentation helps train speech recognition models with noisy data to improve model accuracy in real-world environments.

Figure 3: Examples of a target sentence (“The clown had a funny face”) in the background noise of babble, car and rain.
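One common form of this augmentation mixes recorded noise into clean speech at a chosen signal-to-noise ratio (SNR). The sketch below scales the noise so the mixture hits the requested SNR in decibels. A synthetic tone and random Gaussian noise stand in for real speech and for babble, car, or rain recordings; those substitutes, and the SNR values picked, are assumptions for illustration only.

```python
import math
import random


def mean_power(signal):
    return sum(x * x for x in signal) / len(signal)


def add_noise_at_snr(clean, noise, snr_db):
    """Return clean + scaled noise so that 10*log10(P_clean / P_scaled_noise) == snr_db."""
    if len(noise) < len(clean):
        raise ValueError("noise must be at least as long as the clean signal")
    noise = noise[:len(clean)]
    p_clean, p_noise = mean_power(clean), mean_power(noise)
    if p_noise == 0:
        raise ValueError("noise signal is silent")
    # Solve for the amplitude scale that gives the target power ratio
    scale = math.sqrt(p_clean / (p_noise * 10 ** (snr_db / 10)))
    return [c + scale * n for c, n in zip(clean, noise)]


rng = random.Random(0)  # fixed seed so the example is reproducible
clean = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
noise = [rng.gauss(0.0, 1.0) for _ in range(16000)]

# Several noisy copies of the same utterance, from mild (20 dB) to harsh (0 dB)
augmented = {snr: add_noise_at_snr(clean, noise, snr) for snr in (20, 10, 0)}
```

Training on all three copies alongside the clean original exposes the model to the same words under different noise levels.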

Linguistic Challenges:

- Out-of-vocabulary words: Because the speech recognition model has not been trained on out-of-vocabulary (OOV) words, it may misrecognize them as different words or fail to transcribe them altogether.

Figure 4: An example of detecting OOV word

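Solution: Word Error Rate (WER) is a common metric used to measure the accuracy of a speech recognition or machine translation system. The word error rate can be computed as WER = (S + D + I) / N, where S, D, and I are the numbers of substituted, deleted, and inserted words in the system output and N is the number of words in the reference transcript: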

Figure 5: Demonstrating how to calculate word error rate (WER)

- Homophones: Homophones are words that are pronounced identically but have different meanings, such as “to,” “too,” and “two”. Solution: Semantic analysis allows speech recognition programs to select the appropriate homophone based on its intended meaning in a given context. Addressing homophones improves the ability of the speech recognition process to understand and transcribe spoken words accurately.
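A very small stand-in for the context-based disambiguation described above is to pick, among a set of homophones, the spelling that most often follows the previous word in some text corpus. The counts below are invented for illustration, and this bigram heuristic is much simpler than the semantic analysis the text refers to.

```python
# Invented counts of (previous word, next word) pairs, as if taken from a text corpus.
PAIR_COUNTS = {
    ("want", "to"): 120, ("want", "two"): 3, ("want", "too"): 1,
    ("buy", "two"): 40, ("buy", "to"): 2, ("buy", "too"): 1,
    ("me", "too"): 35, ("me", "to"): 10, ("me", "two"): 1,
}

HOMOPHONE_SET = ("to", "too", "two")


def resolve_homophones(words):
    """Replace each to/too/two with the variant most often seen after the previous word."""
    result = []
    for i, word in enumerate(words):
        if word in HOMOPHONE_SET and i > 0:
            prev = words[i - 1]
            best = max(HOMOPHONE_SET, key=lambda w: PAIR_COUNTS.get((prev, w), 0))
            # Keep the recognizer's choice when the context gives no evidence at all
            if PAIR_COUNTS.get((prev, best), 0) > 0:
                word = best
        result.append(word)
    return result


print(" ".join(resolve_homophones("i want two buy two tickets".split())))
# i want to buy two tickets
```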

Technical/System Challenges:

- Data privacy and security: Speech recognition systems involve processing and storing sensitive and personal information, such as financial information. An unauthorized party could use the captured information, leading to privacy breaches.

Solution: You can encrypt sensitive and personal audio information transmitted between the user’s device and the speech recognition software. Another technique for addressing data privacy and security in speech recognition systems is data masking. Data masking algorithms mask and replace sensitive speech data with structurally identical but acoustically different data.

Figure 6: An example of how data masking works

- Limited training data: Limited training data directly impacts the performance of speech recognition software. With insufficient training data, the speech recognition model may struggle to generalize to different accents or to recognize less common words.

Solution: To improve the quality and quantity of training data, you can expand the existing dataset using data augmentation and synthetic data generation technologies.

13 speech recognition use cases and applications

In this section, we will explain how speech recognition revolutionizes the communication landscape across industries and changes the way businesses interact with machines.

Customer Service and Support

- Interactive Voice Response (IVR) systems: Interactive voice response (IVR) is a technology that automates the process of routing callers to the appropriate department. It understands customer queries and routes calls to the relevant departments. This reduces the call volume for contact centers and minimizes wait times. IVR systems address simple customer questions without human intervention by employing pre-recorded messages or text-to-speech technology. Automatic Speech Recognition (ASR) allows IVR systems to comprehend and respond to customer inquiries and complaints in real time.

- Customer support automation and chatbots: According to a survey, 78% of consumers interacted with a chatbot in 2022, but 80% of respondents said using chatbots increased their frustration level.

- Sentiment analysis and call monitoring: Speech recognition technology converts spoken content from a call into text. After speech-to-text processing, natural language processing (NLP) techniques analyze the text and assign a sentiment score to the conversation, such as positive, negative, or neutral. By integrating speech recognition with sentiment analysis, organizations can address issues early on and gain valuable insights into customer preferences.

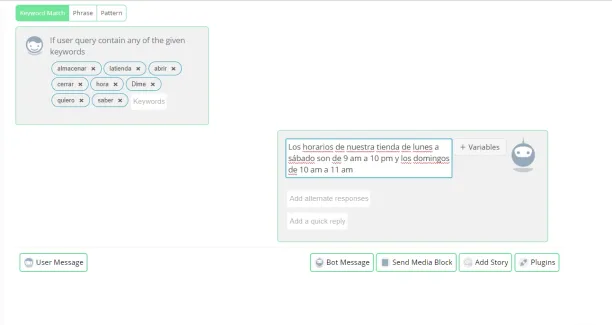

- Multilingual support: Speech recognition software can be trained in various languages to recognize and transcribe the language spoken by a user accurately. By integrating speech recognition technology into chatbots and Interactive Voice Response (IVR) systems, organizations can overcome language barriers and reach a global audience (Figure 7). Multilingual chatbots and IVR automatically detect the language spoken by a user and switch to the appropriate language model.

Figure 7: Showing how a multilingual chatbot recognizes words in another language

- Customer authentication with voice biometrics: Voice biometrics use speech recognition technologies to analyze a speaker’s voice and extract features such as accent and speed to verify their identity.
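The sentiment-scoring step for call monitoring can be illustrated with a deliberately simple lexicon-based scorer applied to a call transcript. The word lists, the `sentiment_label` name, and the threshold are hypothetical; production systems typically use trained classifiers rather than fixed word lists.

```python
import re

# Hypothetical mini-lexicons for illustration only.
POSITIVE = {"great", "thanks", "helpful", "resolved", "happy"}
NEGATIVE = {"cancel", "angry", "broken", "waiting", "frustrated"}


def sentiment_label(transcript: str, threshold: int = 1):
    """Return ("positive" | "negative" | "neutral", score) for a transcript."""
    words = re.findall(r"[a-z']+", transcript.lower())
    # +1 per positive word, -1 per negative word
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score >= threshold:
        return "positive", score
    if score <= -threshold:
        return "negative", score
    return "neutral", score


print(sentiment_label("I've been waiting an hour and I'm frustrated"))
# ('negative', -2)
```

In a call-monitoring pipeline, this function would run on each speech-to-text transcript, and calls labeled negative could be flagged for follow-up.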

Sales and Marketing:

- Virtual sales assistants: Virtual sales assistants are AI-powered chatbots that assist customers with purchasing and communicate with them through voice interactions. Speech recognition allows virtual sales assistants to understand the intent behind spoken language and tailor their responses based on customer preferences.

- Transcription services: Speech recognition software records audio from sales calls and meetings and then converts the spoken words into written text using speech-to-text algorithms.

Automotive:

- Voice-activated controls: Voice-activated controls allow users to interact with devices and applications using voice commands. Drivers can operate features like climate control, phone calls, or navigation systems.

- Voice-assisted navigation: Voice-assisted navigation provides real-time voice-guided directions by utilizing the driver’s voice input for the destination. Drivers can request real-time traffic updates or search for nearby points of interest using voice commands without physical controls.

Healthcare:

- Recording the physician’s dictation

- Transcribing the audio recording into written text using speech recognition technology

- Editing the transcribed text for better accuracy and correcting errors as needed

- Formatting the document in accordance with legal and medical requirements.

- Virtual medical assistants: Virtual medical assistants (VMAs) use speech recognition, natural language processing, and machine learning algorithms to communicate with patients through voice or text. Speech recognition software allows VMAs to respond to voice commands, retrieve information from electronic health records (EHRs) and automate the medical transcription process.

- Electronic Health Records (EHR) integration: Healthcare professionals can use voice commands to navigate the EHR system, access patient data, and enter data into specific fields.

Technology:

- Virtual agents: Virtual agents utilize natural language processing (NLP) and speech recognition technologies to understand spoken language and convert it into text. Speech recognition enables virtual agents to process spoken language in real-time and respond promptly and accurately to user voice commands.

Automatic Speech Recognition: Types and Examples

- Yashoda Gandhi

- Mar 02, 2022

Voice assistants such as Google Home, Amazon Echo, Siri, Cortana, and others have become increasingly popular in recent years. These are some of the most well-known examples of automatic speech recognition (ASR).

This type of app starts with a clip of spoken audio in a specific language and converts the words spoken into text. As a result, they're also called Speech-to-Text algorithms.

Apps like Siri and the others mentioned above, of course, go even further. They not only extract the text but also interpret and comprehend the semantic meaning of what was said, allowing them to respond to the user's commands with answers or actions.

Automatic Speech Recognition

ASR (automatic speech recognition) is a technology that allows users to enter data into information systems by speaking rather than punching numbers into a keypad. ASR is primarily used for providing information and forwarding phone calls.

In recent years, ASR has grown in popularity among large corporation customer service departments. It is also used by some government agencies and other organizations. Basic ASR systems recognize single-word entries such as yes-or-no responses and spoken numerals.

This enables users to navigate through automated menus without having to manually enter dozens of numerals with no margin for error. In a manual-entry situation, a customer may press the wrong key after entering 20 or 30 numerals at random intervals in the menu and abandon the call rather than call back and start over. This issue is virtually eliminated with ASR.

Natural Language Processing, or NLP for short, is at the heart of the most advanced version of currently available ASR technologies. Though this variant of ASR is still a long way from realizing its full potential, we're already seeing some impressive results in the form of intelligent smartphone interfaces like Apple's Siri and other systems used in business and advanced technology.

Even with an "accuracy" of 96 to 99 percent, these NLP programs achieve such results only under ideal circumstances, such as when humans ask them simple yes-or-no questions with a small number of possible responses based on selected keywords.


How Is Automatic Speech Recognition Carried Out?

We’ve listed three major approaches to automatic speech recognition below.

Old-fashioned way

With ARPA funding in the 1970s, a team at Carnegie Mellon University developed technology that could generate transcripts from context-specific speech, such as voice-controlled chess, chart-plotting for GIS and navigation, and document management in the office environment.

These types of products had one major flaw: they could only reliably convert speech to text for one person at a time. This is because no two people speak in exactly the same way. In fact, even if the same person speaks the same sentence twice, the sounds are mathematically different when recorded and measured!

To silicon brains, these are two different mathematical realities; to our human, meat-based brains, they are the same word! These ASR-based personal transcription tools and products were revolutionary and had legitimate business uses, despite their inability to transcribe the utterances of multiple speakers.

Frankenstein approach

In the mid-2000s, companies like Nuance, Google, and Amazon realized that by making ASR work for multiple speakers and in noisy environments, they could improve on the 1970s approach.

Rather than having to train ASR to understand a single speaker, these Franken-ASRs were able to understand multiple speakers fairly well, which is an impressive feat given the acoustic and mathematical realities of spoken language. This is possible because these neural-network algorithms can "learn on their own" when given certain stimuli.

However, slapping a neural network on top of older machinery (remember, this is based on 1970s techniques) results in bulky, complex, and resource-hungry machines, like the DeLorean from Back to the Future or my college bicycle: a franken-bike that worked when the tides and winds were just right, except when it didn't.

While clumsy, the mid-2000s hybrid approach to ASR works well enough for some applications; after all, Siri isn't supposed to answer any real-world data questions.

End-to-end deep learning

The most recent method, end-to-end deep learning ASR, makes use of neural networks and replaces the clumsy 1970s method. In essence, this new approach allows you to do something that was unthinkable even two years ago: train the ASR to recognize dialects, accents, and industry-specific word sets quickly and accurately.

It's a Mr. Fusion bicycle, complete with rusted bike frames and ill-fated auto brands. Several factors contribute to this, including breakthrough math from the 1980s, computing power/technology from the mid-2010s, big data, and the ability to innovate quickly.

It's crucial to be able to experiment with new architectures, technologies, and approaches. Legacy ASR systems based on the franken-ASR hybrid are designed to handle "general" audio rather than specialized audio for industry, business, or even academic purposes. To put it another way, they provide generalized speech recognition and cannot realistically be trained to improve accuracy on your own speech data.


Types of ASR

The two main variants of automatic speech recognition software are directed dialogue conversations and natural language conversations.

Directed dialogue conversations

Directed dialogue conversations are a much less complicated version of ASR at work, consisting of machine interfaces that instruct you to respond verbally with a specific word from a limited list of options, forming their response to your narrowly defined request. Automated telephone banking and other customer service interfaces frequently use directed dialogue ASR software.
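A directed dialogue system only has to decide which of a few expected answers the caller gave. The minimal Python sketch below illustrates that constraint by snapping a recognized word onto a fixed menu with the standard-library `difflib.get_close_matches`. The menu options, the `match_option` helper, and the 0.6 similarity cutoff are hypothetical; real systems usually constrain the recognizer's grammar directly rather than post-processing free text.

```python
import difflib

# Hypothetical menu for a telephone-banking prompt: the caller must say one of these.
MENU_OPTIONS = ["balance", "transfer", "payments", "agent"]

def match_option(transcript, options=MENU_OPTIONS, cutoff=0.6):
    """Map a (possibly misrecognized) single-word transcript to an allowed option.

    Returns the closest option, or None so the system can re-prompt the caller.
    """
    word = transcript.strip().lower()
    matches = difflib.get_close_matches(word, options, n=1, cutoff=cutoff)
    return matches[0] if matches else None

print(match_option("Balanse"))  # a slightly misrecognized word still maps to "balance"
print(match_option("weather"))  # answer outside the menu -> None, so re-prompt
```

Returning `None` instead of guessing lets the system re-prompt the caller rather than act on an answer outside the allowed list.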

Natural language conversations

Natural Language Conversations (the NLP we discussed in the introduction) are more advanced versions of ASR that attempt to simulate real conversation by allowing you to use an open-ended chat format with them rather than a severely limited menu of words. One of the most advanced examples of these systems is the Siri interface on the iPhone.

Applications of ASR

Where continuous conversations must be tracked or recorded word for word, ASR is used in a variety of industries, including higher education, legal, finance, government, health care, and the media.

In legal proceedings, it's critical to record every word, and court reporters are in short supply right now. ASR technology has several advantages, including digital transcription and scalability.

ASR can be used by universities to provide captions and transcriptions in the classroom for students with hearing loss or other disabilities. It can also benefit non-native English speakers, commuters, and students with a variety of learning needs.

ASR is used by doctors to transcribe notes from patient meetings or to document surgical procedures.

Media companies can use ASR to provide live captions and media transcription for all of their productions.

Businesses use ASR for captioning and transcription to make training materials more accessible and to create more inclusive workplaces.


Advantages of ASR over Traditional Transcriptions

We’ve listed some advantages of ASR over traditional transcription below:

ASR can improve captioning and transcription efficiency and help offset the growing shortage of skilled traditional transcribers.

In conversations, lectures, meetings, and proceedings, the technology can distinguish between voices, allowing you to figure out who said what and when.

Because interruptions among participants are common in conversations with multiple stakeholders, the ability to distinguish between speakers can be very useful.

Users can train the ASR machine by uploading hundreds of related documents, such as books, articles, and other materials.

The technology can absorb this vast amount of data faster than a human, allowing it to recognize different accents, dialects, and terminology with greater accuracy.

Of course, in order to achieve the near-perfect accuracy required, the ideal format would involve using human intelligence to fact-check the artificial intelligence that is being used.

Automatic Speech Recognition Systems (ASRs) can convert spoken words into understandable text.

Because it can convert speech in real time, its application to air traffic control and automated car environments has been studied.

ASR systems for air traffic control have used hidden Markov models (HMMs) to model the extracted speech features, and their phraseology is based on the standard commands used in aviation.

Speech recognition is used in the car environment for route navigation applications.


Automatic Speech Recognition vs Voice Recognition

The difference between Voice Recognition and Automatic Speech Recognition (the technical term for AI speech recognition, or ASR) is how they process and respond to audio.

You'll find voice recognition in devices like Amazon Alexa or Google Home. It listens to your voice and responds in real time. Most digital assistants use voice recognition, which has limited functionality and is usually restricted to the task at hand.

ASR differs from other voice recognition systems in that it recognizes speech rather than voices. It can generate an audio transcript using NLP, enabling real-time captioning. ASR isn't perfect; in fact, even under ideal conditions, it rarely exceeds 90 to 95 percent accuracy. However, it compensates for this by being quick and inexpensive.

In essence, ASR is a transcription of what someone said, whereas voice recognition identifies who said it. The two processes are closely linked, and the terms are frequently used interchangeably. The distinctions are subtle but noticeable.
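To make the "who said it" side concrete: voice (speaker) recognition typically compares a fixed-length "voiceprint" embedding of a new utterance against an enrolled one, whereas ASR outputs words. In the minimal sketch below, the embedding vectors and the 0.8 threshold are hypothetical placeholders; in practice the embeddings come from a trained speaker-encoder model and the threshold is tuned on held-out data.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def same_speaker(enrolled, candidate, threshold=0.8):
    """Speaker verification: answers 'who is speaking?', not 'what was said?'."""
    return cosine_similarity(enrolled, candidate) >= threshold

# Hypothetical 4-dimensional voiceprints (real embeddings have hundreds of dimensions).
alice_enrolled = [0.9, 0.1, 0.3, 0.2]
alice_new_call = [0.85, 0.15, 0.35, 0.1]
bob_new_call = [0.1, 0.9, 0.2, 0.4]

print(same_speaker(alice_enrolled, alice_new_call))  # True: similarity is about 0.99
print(same_speaker(alice_enrolled, bob_new_call))    # False: similarity is about 0.33
```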


An Easy Introduction to Speech AI

Artificial intelligence (AI) has transformed synthesized speech from monotone robocalls and decades-old GPS navigation systems to the polished tone of virtual assistants in smartphones and smart speakers.

It has never been so easy for organizations to use customized state-of-the-art speech AI technology for their specific industries and domains.

Speech AI is being used to power virtual assistants, scale call centers, humanize digital avatars, enhance AR experiences, and provide a frictionless medical experience for patients by automating clinical note-taking.

According to Gartner Research, customers will prefer using speech interfaces to initiate 70% of self-service customer interactions (up from 40% in 2019) by 2023. The demand for personalized and automated experiences only continues to grow.

In this post, I discuss speech AI, how it works, the benefits of voice recognition technology, and examples of speech AI use cases.

What is speech AI, and what are the benefits?

Speech AI uses AI for voice-based technologies: automatic speech recognition (ASR), also known as speech-to-text, and text-to-speech (TTS). Examples include automatic live captioning in virtual meetings and adding voice-based interfaces to virtual assistants.

Similarly, language-based applications such as chatbots, text analytics, and digital assistants use speech AI as part of larger applications or systems, alongside natural language processing (NLP). For more information, see the Conversational AI glossary.

There are many benefits of speech AI:

- High availability: Speech AI applications can respond to customer calls during and outside of human agent hours, allowing contact centers to operate more efficiently.

- Real-time insights: Real-time transcripts are generated and used as inputs for customer-focused business analyses such as sentiment analysis, customer experience analysis, and fraud detection.

- Instant scalability: During peak seasons, speech AI applications can automatically scale to handle tens of thousands of requests from customers.

- Enhanced experiences: Speech AI improves customer satisfaction by reducing holding times, quickly resolving customer queries, and providing human-like interactions with customizable voice interfaces.

- Digital accessibility: From speech-to-text to text-to-speech applications, speech AI tools are helping those with reading and hearing impairments to learn from generated spoken audio and written text.

Who is using speech AI and how?

Today, speech AI is revolutionizing the world’s largest industries such as finance, telecommunications, and unified communications as a service (UCaaS).

Both companies just starting out with deep learning speech technologies and mature companies augmenting existing speech-based conversational AI platforms benefit from speech AI.

Here are some specific examples of speech AI driving efficiencies and business outcomes.

Call center transcription

About 10 million call center agents are answering 2 billion phone calls daily worldwide. Call center use cases include all of the following:

- Trend analysis

- Regulatory compliance

- Real-time security or fraud analysis

- Real-time sentiment analysis

- Real-time translation

For example, automatic speech recognition transcribes live conversations between customers and call center agents for text analysis, which is then used to provide agents with real-time recommendations for quickly resolving customer queries.

Clinical note taking

In healthcare, speech AI applications improve patient access to medical professionals and claims representatives. ASR automates note-taking during patient-physician conversations and information extraction for claims agents.

Virtual assistants

Virtual assistants are found in every industry, enhancing user experience. ASR is used to transcribe an audio query for a virtual assistant. Then, text-to-speech generates the virtual assistant’s synthetic voice. Besides humanizing transactional situations, virtual assistants also help the visually impaired interact with non-braille texts, the vocally challenged communicate with others, and children learn how to read.

How does speech AI work?

Speech AI uses automatic speech recognition and text-to-speech technology to provide a voice interface for conversational applications. A typical speech AI pipeline consists of data preprocessing stages, neural network model training, and post-processing.

In this section, I discuss these stages in both ASR and TTS pipelines.

Automatic speech recognition

For machines to hear and speak with humans, they need a common medium for translating sound into code. How can a device or an application “see” the world through sound?

An ASR pipeline processes and transcribes a given raw audio file containing speech into corresponding text while minimizing a metric known as the word error rate (WER).

WER is used to measure and compare performance between types of speech recognition systems and algorithms. It is calculated as the number of word errors (substitutions, deletions, and insertions) divided by the number of words in the reference transcript of the clip.
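In practice, the error count is usually obtained from a word-level edit (Levenshtein) distance: the minimum number of substitutions, deletions, and insertions that turns the recognizer's output into the reference transcript. The following minimal Python sketch shows that calculation; the `word_error_rate` name is ours, not taken from any particular toolkit.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = edit distance between the first i reference words and first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / len(ref)

# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words: 2/6.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

Because insertions are counted too, WER can exceed 100% when a system outputs many extra words.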

ASR pipelines must accomplish a series of tasks, including feature extraction, acoustic modeling, and language modeling.

The feature extraction task involves converting raw audio signals into spectrograms, which are visual charts that represent the loudness of a signal over time at various frequencies and resemble heat maps. Part of the transformation process involves traditional signal preprocessing techniques like standardization and windowing.
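The framing, windowing, and frequency-analysis steps can be sketched in plain Python. This is a deliberately naive illustration under our own assumptions (a direct DFT instead of an FFT, a Hann window, and frame and hop sizes we picked arbitrarily), not how production feature extractors are written; real pipelines use optimized libraries and usually apply a mel filter bank and a logarithm on top of this magnitude spectrogram.

```python
import cmath
import math

def hann_window(n):
    """Hann window: tapers each frame toward zero at its edges to reduce spectral leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]

def spectrogram(samples, frame_len=64, hop=32):
    """Return a list of frames; each frame holds DFT magnitudes for bins 0..frame_len//2."""
    window = hann_window(frame_len)
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = [s * w for s, w in zip(samples[start:start + frame_len], window)]
        mags = []
        for k in range(frame_len // 2 + 1):  # non-negative frequency bins only
            acc = sum(x * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n, x in enumerate(frame))
            mags.append(abs(acc))
        frames.append(mags)
    return frames

# A 1 kHz tone sampled at 8 kHz: with 64-sample frames, each bin is 8000/64 = 125 Hz wide,
# so the energy should concentrate in bin 1000 / 125 = 8.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(512)]
spec = spectrogram(tone)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(len(spec), "frames; peak bin:", peak_bin)
```

Each row of `spec` is one time slice and each column one frequency bin, which is exactly the heat-map layout described above.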

Acoustic modeling is then used to model the relationship between the audio signal and the phonetic units in the language. It maps an audio segment to the most likely distinct unit of speech and corresponding characters.

The final task in an ASR pipeline involves language modeling. A language model adds contextual representation and corrects the acoustic model’s mistakes. In other words, when you have the characters from the acoustic model, you can convert these characters to sequences of words, which can be further processed into phrases and sentences.
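As a toy illustration of a language model correcting the acoustic model, the sketch below trains a bigram model with add-one (Laplace) smoothing on a tiny made-up corpus and uses it to rescore two acoustically similar candidate transcripts. The corpus, the acoustic log scores, and the `lm_weight` parameter are all hypothetical; production systems use far larger n-gram or neural language models, typically inside beam-search decoding.

```python
import math
from collections import Counter

# Hypothetical training text for the language model.
corpus = [
    "we use software to recognize speech",
    "it is hard to recognize speech in noise",
    "they walked along a nice beach",
]

def train_bigram(sentences):
    unigrams, bigrams = Counter(), Counter()
    for s in sentences:
        words = ["<s>"] + s.split() + ["</s>"]
        unigrams.update(words[:-1])  # counts of words used as bigram context
        bigrams.update(zip(words[:-1], words[1:]))
    vocab = {w for s in sentences for w in s.split()} | {"</s>"}
    return unigrams, bigrams, len(vocab)

def lm_log_prob(sentence, model):
    """Log probability under an add-one (Laplace) smoothed bigram model."""
    unigrams, bigrams, vocab_size = model
    words = ["<s>"] + sentence.split() + ["</s>"]
    return sum(
        math.log((bigrams[(prev, cur)] + 1) / (unigrams[prev] + vocab_size))
        for prev, cur in zip(words[:-1], words[1:])
    )

model = train_bigram(corpus)

# Two candidates the acoustic model finds almost equally likely (made-up log scores).
candidates = {"recognize speech": -4.1, "wreck a nice beach": -4.0}
lm_weight = 1.0  # hypothetical weight balancing acoustic and language-model scores
best = max(candidates, key=lambda c: candidates[c] + lm_weight * lm_log_prob(c, model))
print(best)  # recognize speech
```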

Historically, this series of tasks was performed using a generative approach that required a language model, a pronunciation model, and an acoustic model to translate pronunciations to audio waveforms. Then, hidden Markov models, usually combined with Gaussian mixture models (GMM-HMMs), would be used to try to find the words that most likely match the sounds from the audio waveform.

This statistical approach was less accurate and more intensive in both time and effort to implement and deploy. This was especially true when trying to ensure that each time step of the audio data matched the correct output of characters.
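For readers curious about the HMM side of that older approach, its core decoding step is the Viterbi algorithm, which finds the most likely sequence of hidden states (for example, phones) for a sequence of observed acoustic frames, and therefore also an alignment between time steps and states. The sketch below uses a toy two-state model with discrete observation labels and made-up probabilities; real GMM-HMM recognizers use continuous acoustic features, Gaussian-mixture emission densities, and many thousands of states.

```python
import math

def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state path and its log probability."""
    # best[t][s] = (log prob of best path ending in state s at time t, previous state)
    best = [{s: (math.log(start_p[s]) + math.log(emit_p[s][observations[0]]), None)
             for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            prev_state, score = max(
                ((p, best[-1][p][0] + math.log(trans_p[p][s])) for p in states),
                key=lambda item: item[1],
            )
            column[s] = (score + math.log(emit_p[s][obs]), prev_state)
        best.append(column)
    # Backtrack from the best final state.
    last = max(states, key=lambda s: best[-1][s][0])
    path = [last]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    path.reverse()
    return path, best[-1][last][0]

# Toy model: two phone-like states emitting coarse acoustic labels (all numbers hypothetical).
states = ["S", "IY"]
start_p = {"S": 0.8, "IY": 0.2}
trans_p = {"S": {"S": 0.6, "IY": 0.4}, "IY": {"S": 0.1, "IY": 0.9}}
emit_p = {"S": {"hiss": 0.9, "vowel": 0.1}, "IY": {"hiss": 0.2, "vowel": 0.8}}

path, log_prob = viterbi(["hiss", "hiss", "vowel", "vowel"], states, start_p, trans_p, emit_p)
print(path)  # ['S', 'S', 'IY', 'IY']
```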

However, end-to-end deep learning models, like connectionist temporal classification (CTC) models and sequence-to-sequence models with attention, can generate the transcript directly from the audio signal and with a lower WER.
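To make the CTC idea concrete: a CTC acoustic model outputs, for every audio frame, a probability distribution over characters plus a special "blank" symbol. The simplest way to turn that into text is greedy (best-path) decoding: take the most likely symbol per frame, merge consecutive repeats, then drop blanks. The alphabet and per-frame probabilities below are invented for illustration; real systems often use beam search, optionally with a language model, instead of a purely greedy pass.

```python
BLANK = "_"  # CTC blank symbol (the character chosen here is arbitrary)

def ctc_greedy_decode(frame_probs, alphabet):
    """Best-path CTC decoding: argmax per frame, collapse repeats, remove blanks."""
    best_path = [alphabet[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    decoded = []
    previous = None
    for symbol in best_path:
        # A symbol is emitted only when it differs from the previous frame's symbol,
        # so a blank between two identical letters lets both survive ("l_l" -> "ll").
        if symbol != previous and symbol != BLANK:
            decoded.append(symbol)
        previous = symbol
    return "".join(decoded)

alphabet = [BLANK, "a", "c", "t"]
# Hypothetical per-frame probabilities over [blank, a, c, t] for 7 audio frames.
frame_probs = [
    [0.1, 0.1, 0.7, 0.1],    # c
    [0.2, 0.1, 0.6, 0.1],    # c (repeat, merged)
    [0.7, 0.1, 0.1, 0.1],    # blank
    [0.1, 0.8, 0.05, 0.05],  # a
    [0.6, 0.2, 0.1, 0.1],    # blank
    [0.1, 0.1, 0.1, 0.7],    # t
    [0.1, 0.1, 0.1, 0.7],    # t (repeat, merged)
]
print(ctc_greedy_decode(frame_probs, alphabet))  # cat
```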

Deep learning-based models such as Jasper, QuartzNet, and Citrinet enable companies to create less expensive, more powerful, and more accurate speech AI applications.

Text-to-speech

A TTS or speech synthesis pipeline is responsible for converting text into natural-sounding speech that is artificially produced with human-like intonation and clear articulation.

A TTS pipeline may need to accomplish several tasks, including text analysis, linguistic analysis, and waveform generation.

During the text analysis stage, raw text (with symbols, abbreviations, and so on) is converted into full words and sentences by expanding abbreviations and analyzing expressions. The output is passed into linguistic analysis for refining intonation, duration, and otherwise understanding grammatical structure. As a result, a spectrogram or mel-spectrogram is produced to be converted into continuous human-like audio.
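The text analysis (normalization) stage can be illustrated with a small rule-based sketch that expands a few abbreviations and spells out small integers. The abbreviation table and the `normalize_text` and `number_to_words` helpers are hypothetical and cover only a handful of cases; real TTS front ends handle dates, currencies, ordinals, and ambiguous abbreviations (for example, "St." as "Street" or "Saint") with much richer rules or learned models.

```python
import re

# Hypothetical abbreviation table; real systems need context to disambiguate.
ABBREVIATIONS = {"Dr.": "Doctor", "Mr.": "Mister", "approx.": "approximately"}

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]

def number_to_words(n):
    """Spell out an integer from 0 to 999."""
    if n < 20:
        return ONES[n]
    if n < 100:
        return TENS[n // 10] + ("" if n % 10 == 0 else "-" + ONES[n % 10])
    rest = n % 100
    return ONES[n // 100] + " hundred" + ("" if rest == 0 else " " + number_to_words(rest))

def normalize_text(text):
    """Expand known abbreviations, then spell out standalone integers below 1000."""
    for abbr, expansion in ABBREVIATIONS.items():
        text = text.replace(abbr, expansion)
    # \b...\b keeps longer numbers such as "1234" untouched.
    return re.sub(r"\b\d{1,3}\b", lambda m: number_to_words(int(m.group())), text)

print(normalize_text("Dr. Smith saw 42 patients in 3 hours."))
# Doctor Smith saw forty-two patients in three hours.
```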

The approach I walked through above is a typical two-step process requiring a synthesis network and a vocoder network. These are two separate networks trained for their respective purposes: generating a spectrogram from text (using a Tacotron architecture or FastPitch) and generating audio from the spectrogram or other intermediate representation (using, for example, WaveGlow or HiFiGAN).

As well as the two-stage approach, another possible implementation of a TTS pipeline involves using an end-to-end deep learning model that uses a single model to generate audio straight from the text. The neural network is trained directly from text-audio pairs without depending on intermediate representations.

The end-to-end approach decreases complexity as it reduces error propagation between networks, mitigates the need for separate training pipelines, and minimizes the cost of manual annotation of duration information.

Traditional TTS approaches also tend to result in more robotic and unnatural-sounding voices that affect user engagement, particularly with consumer-facing applications and services.

Challenges in building a speech AI system

Successful speech AI applications must enable the following functionality.

Access to state-of-the-art models

Creating highly trained and accurate deep learning models from scratch is costly and time-consuming.

By providing access to cutting-edge models as soon as they’re published, even data- and resource-constrained companies can use highly accurate, pretrained models and transfer learning in their products and services out-of-the-box.

High accuracy

To be deployed globally or to any industry or domain, models must be customized to account for multiple languages (a fraction of the 6,500 spoken languages in the world), dialects, accents, and contexts. Some domains use specific terminology and technical jargon.

Real-time performance

Pipelines consisting of multiple deep learning models must run inference within milliseconds for real-time interactivity, ideally well under 300 ms. Most users start to notice lags and communication breakdowns at around 100 ms, beyond which conversations or experiences begin to feel unnatural.

Flexible and scalable deployment

Companies require different deployment patterns and may even require a mix of cloud, on-premises, and edge deployment. Successful systems support scaling to hundreds of thousands of concurrent users with fluctuating demand.

Data ownership and privacy

Companies should be able to implement the appropriate security practices for their industries and domains, such as safe data processing on-premises or in an organization’s cloud. For example, healthcare companies abiding by HIPAA or other regulations may be required to restrict access to data and data processing.

The future of speech AI

Thanks to advancements in computing infrastructure, speech AI algorithms, increased demand for remote services, and exciting new use cases in existing and emerging industries, there is now a robust ecosystem and infrastructure for speech AI-based products and services.

As powerful as the current applications of speech AI are in driving business outcomes, the next generation of speech AI applications must be equipped to handle multi-language, multi-domain, and multi-user conversations.

Organizations that can successfully integrate speech AI technology into their core operations will be well-equipped to scale their services and offerings for use cases yet to be listed.

Learn how your organization can deploy speech AI by checking out the free ebook, Building Speech AI Applications.


From Talk to Tech: Exploring the World of Speech Recognition

What is Speech Recognition Technology?

Imagine being able to control electronic devices, order groceries, or dictate messages with just voice. Speech recognition technology has ushered in a new era of interaction with devices, transforming the way we communicate with them. It allows machines to understand and interpret human speech, enabling a range of applications that were once thought impossible.

Speech recognition leverages machine learning algorithms to recognize speech patterns, convert audio files into text, and examine word meaning. Siri, Alexa, Google Assistant, and Microsoft's Cortana are some of the most popular voice assistants in use today; they use speech-to-text to interpret human speech and respond in a synthesized voice.

From personal assistants that can understand every command directed towards them to self-driving cars that can comprehend voice instructions and take the necessary actions, the potential applications of speech recognition are manifold. As technology continues to advance, the possibilities are endless.

How do Speech Recognition Systems Work?

Speech-to-text processing is traditionally carried out in the following way:

Recording the audio: The first step of speech to text conversion involves recording the audio and voice signals using a microphone or other audio input devices.

Breaking the audio into parts: The recorded voice or audio signals are then broken down into small segments, and features are extracted from each piece, such as the sound's frequency, pitch, and duration.

Digitizing speech into computer-readable format: In the third step, the speech data is converted into a computer-readable format used to identify the sequence of characters, and thus the words or phrases, that were most likely spoken.

Decoding speech using the algorithm: Finally, language models decode the speech using speech recognition algorithms to produce a transcript or other output.
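The second step above mentions extracting features such as pitch from each audio segment. A common textbook way to estimate pitch is autocorrelation: a voiced frame is roughly periodic, so it correlates strongly with itself when shifted by one pitch period. The sketch below is a simplified illustration; the synthetic test tone and the 50 to 400 Hz search range are our assumptions, and practical pitch trackers add normalization, voicing decisions, and octave-error correction.

```python
import math

def estimate_pitch(frame, sample_rate, fmin=50.0, fmax=400.0):
    """Estimate the fundamental frequency (Hz) of one frame via autocorrelation."""
    mean = sum(frame) / len(frame)
    x = [s - mean for s in frame]  # remove any DC offset
    min_lag = int(sample_rate / fmax)  # shortest period considered
    max_lag = min(int(sample_rate / fmin), len(x) - 1)  # longest period considered
    best_lag, best_corr = None, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Raw (unnormalized) sum: longer lags have fewer terms, which favors the
        # first period over its multiples.
        corr = sum(x[i] * x[i + lag] for i in range(len(x) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag

sample_rate = 8000
# Synthetic 200 Hz "voiced" frame (40 ms) with a weaker second harmonic.
frame = [math.sin(2 * math.pi * 200 * t / sample_rate)
         + 0.3 * math.sin(2 * math.pi * 400 * t / sample_rate)
         for t in range(320)]
print(round(estimate_pitch(frame, sample_rate)))  # 200
```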

To adapt to the nature of human speech and language, speech recognition is designed to identify patterns, speaking styles, frequency of words spoken, and speech dialects on various levels. Advanced speech recognition software can also filter out the background noise that often accompanies speech signals.

When it comes to processing human speech, the following two types of models are used:

Acoustic Models

Acoustic models are a type of machine learning model used in speech recognition systems. These models are designed to help a computer understand and interpret spoken language by analyzing the sound waves produced by a person's voice.

Language Models

Based on the speech context, language models employ statistical algorithms to forecast the likelihood of words and phrases. They compare the acoustic model's output to a pre-built vocabulary of words and phrases to identify the most likely word order that makes sense in a given context of the speech.

Applications of Speech Recognition Technology

Automatic speech recognition is becoming increasingly integrated into our daily lives, and its potential applications are continually expanding. Speech-to-text applications now make it convenient to convert speech into text in minutes.

Speech recognition is also used across industries, including healthcare, customer service, education, automotive, finance, and more, to save time and work efficiently. Here are some common speech recognition applications:

Voice Command for Smart Devices

Today, there are many home devices designed with voice recognition. Mobile devices and home assistants like Amazon Echo or Google Home are among the most widely used speech recognition systems. One can easily use such devices to set reminders, place calls, play music, or turn on lights with simple voice commands.

Online Voice Search

Finding information online is now more straightforward and practical, thanks to speech-to-text technology. With online voice search, users can search using their voice rather than typing. This is an excellent advantage for people with disabilities and physical impairments and for those who are multitasking and don't have time to type a query.

Help People with Disabilities

People with disabilities can also benefit from speech-to-text applications because these applications allow them to use voice recognition to operate equipment, communicate, and carry out daily duties. In other words, they improve accessibility. For example, in case of emergencies, people with visual impairment can use voice commands to call their friends and family on their mobile devices.

Business Applications of Speech Recognition

Speech recognition has various uses in business, including banking, healthcare, and customer support. In these industries, voice recognition mainly aims at enhancing productivity, communication, and accessibility. Some common applications of speech technology in business sectors include:

Banking

Speech recognition is used in the banking industry to enhance customer service and expedite internal procedures. Banks can also utilize speech-to-text programs to enable clients to access their accounts and conduct transactions using only their voice.

Customers in the bank who have difficulties entering or navigating through complicated data will find speech to text particularly useful. They can simply voice search the necessary data. In fact, today, banks are automating procedures like fraud detection and customer identification using this impressive technology, which can save costs and boost security.

Healthcare

Speech recognition is used in the healthcare industry to enhance patient care and expedite administrative procedures. For instance, physicians can dictate notes about patient visits using speech recognition programs, which can then be converted into electronic medical records. This saves a lot of time and helps ensure that data is recorded accurately.

Customer Support

Speech recognition is employed in customer care to enhance the customer experience and cut expenses. For instance, businesses can automate time-consuming processes using speech to text so that customers can access information and solve problems without speaking to a live representative. This could shorten wait times and increase customer satisfaction.

Challenges with Speech Recognition Technology

Although speech recognition has become popular in recent years and made our lives easier, there are still several challenges concerning speech recognition that need to be addressed.

Accuracy may not always be perfect

Speech recognition software can still have difficulty accurately recognizing speech in noisy or crowded environments or when the speaker has an accent or speech impediment. This can lead to incorrect transcriptions and miscommunications.

The software cannot always understand complexity and jargon

Speech recognition software has a limited vocabulary, so it may struggle with uncommon or specialized terms such as technical jargon, as well as with complex sentences, making it less useful in specific industries or contexts. Errors in interpretation or translation may also occur if the software fails to recognize the context of words or phrases.

Data privacy concerns

Speech recognition technology relies on recording and storing audio data, which can raise concerns about data privacy. Users may be uncomfortable with their voice recordings being stored and used for other purposes. Voice notes, phone calls, and other audio may also be recorded without the user's knowledge, and stored recordings are vulnerable to hacking or misuse for impersonation. These risks raise privacy and security concerns.

Software That Uses Speech Recognition Technology

Many software programs use speech recognition technology to transcribe spoken words into text. Here are some of the most popular ones:

Nuance Dragon

Amazon Transcribe

Google Cloud Speech-to-Text

IBM Watson Speech to Text

To sum up, speech recognition technology has come a long way in recent years. Given its benefits, including increased efficiency, productivity, and accessibility, it is finding applications across a wide range of industries. As we continue to explore the potential of this evolving technology, we can expect to see even more exciting applications emerge in the future.

With the power of AI and machine learning at our fingertips, we're poised to transform the way we interact with technology in ways we never thought possible. So, let's embrace this exciting future and see where speech recognition takes us next!

What are the three steps of speech recognition?

The three steps of speech recognition are as follows:

Step 1: Capture the acoustic signal

The first step is to capture the acoustic signal using an audio input device and then preprocess the signal to remove noise and other unwanted sounds. The signal is then broken down into small segments, and features such as frequency, pitch, and duration are extracted from each segment.

Step 2: Combining the acoustic and language models

The second step involves combining the acoustic and language models to produce a transcription of the spoken words and word sequences.

Step 3: Converting the text into a synthesized voice

The final step is converting the text into a synthesized voice or using the transcription to perform other actions, such as controlling a computer or navigating a system.

What are examples of speech recognition?

Speech recognition is used in a wide range of applications. The most famous examples of speech recognition are voice assistants like Apple's Siri, Amazon's Alexa, and Google Assistant. These assistants use effective speech recognition to understand and respond to voice commands, allowing users to ask questions, set reminders, and control their smart home devices using only voice.

What is the importance of speech recognition?

Speech recognition is essential for improving accessibility for people with disabilities, including those with visual or motor impairments. It can also improve productivity in various settings and promote language learning and communication in multicultural environments. Speech recognition can break down language barriers, save time, and reduce errors.


A Complete Guide to Speech Recognition Technology

Last Updated June 11, 2021

Here’s everything you need to know about speech recognition technology. History, how it works, how it’s used today, what the future holds, and what it all means for you.

Back in 2008, many of us were captivated by Tony Stark’s virtual butler, J.A.R.V.I.S., in Marvel’s Iron Man movie.

J.A.R.V.I.S. started as a computer interface. It was eventually upgraded to an artificial intelligence system that ran the business and provided global security.


J.A.R.V.I.S. opened our eyes – and ears – to the possibilities inherent in speech recognition technology. We may not be all the way there just yet, but speech recognition advancements are already used in many ways on a wide variety of devices.

Speech recognition technology allows for hands-free control of smartphones, speakers, and even vehicles in a wide variety of languages.

It’s an advancement that’s been dreamt of and worked on for decades. The goal is, quite simply, to make life simpler and safer.

In this guide, we’ll take a brief look at the history of speech recognition technology, then explain how it works and some devices that make use of it. Finally, we’ll examine what might be just around the corner.

History of Speech Recognition Technology

Speech recognition is valuable because it saves consumers and companies time and money.

The average typing speed on a desktop computer is around 40 words per minute. That rate diminishes a bit when it comes to typing on smartphones and mobile devices.

When it comes to speech, though, we can rack up between 125 and 150 words per minute. That’s a drastic increase.

Therefore, speech recognition helps us do everything faster, whether it’s creating a document or talking to an automated customer service agent.
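Using the typing and speaking rates above, the difference works out as follows for a hypothetical 1,000-word document. This is simple arithmetic under the assumption that nothing else changes; it ignores time spent correcting recognition errors or editing.

```python
TYPING_WPM = 40             # average desktop typing speed, from the text
SPEAKING_WPM = (125, 150)   # typical speaking range, from the text
DOC_WORDS = 1_000           # hypothetical document length

def minutes_needed(words, wpm):
    """Minutes needed to produce `words` words at `wpm` words per minute."""
    return words / wpm

typing = minutes_needed(DOC_WORDS, TYPING_WPM)                       # 25.0 minutes
speaking = [minutes_needed(DOC_WORDS, wpm) for wpm in SPEAKING_WPM]  # 8.0 and about 6.7 minutes
speedup = [wpm / TYPING_WPM for wpm in SPEAKING_WPM]                 # 3.125x to 3.75x
print(typing, speaking, speedup)
```

At these rates, speaking is roughly three to nearly four times faster than typing.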

The substance of speech recognition technology is the use of natural language to trigger an action. Modern speech technology began in the 1950s and took off over the decades.

Speech Recognition Through the Years

- 1950s : Bell laboratories developed “Audrey”, a system able to recognize the numbers 1-9 spoken by a single voice.

- 1960s : IBM came up with a device called “Shoebox” that could recognize and differentiate between 16 spoken English words.

- 1970s : Research during this decade led to the ‘Harpy’ system at Carnegie Mellon, which could understand over 1,000 words.

- 1990s : The advent of personal computing brought quicker processors and opened the door for dictation technology. Bell was at it again with dial-in interactive voice recognition systems.

- 2000s : Speech recognition achieved close to an 80% accuracy rate. Then Google Voice came on the scene, making the technology available to millions of users and allowing Google to collect valuable data.

- 2010s : Apple launched Siri and Amazon came out with Alexa in a bid to compete with Google. These three companies continue to lead the charge.

Slowly but surely, developers have moved towards the goal of enabling machines to understand and respond to more and more of our verbalized commands.

Today’s leading speech recognition systems—Google Assistant, Amazon Alexa, and Apple’s Siri—would not be where they are today without the early pioneers who paved the way.

Thanks to new technologies such as cloud-based processing, and to continuous improvements driven by speech data collection, these systems have steadily improved their ability to ‘hear’ and understand a wider variety of words, languages, and accents.

How Does Speech Recognition Work?

Now that we’re surrounded by smart cars, smart home appliances, and voice assistants, it’s easy to take for granted how speech recognition technology works.

But the simplicity of speaking to digital assistants is misleading. Speech recognition is incredibly complicated, even now.

Think about how a child learns a language.

From day one, they hear words being used all around them. Parents speak and their child listens. The child absorbs all kinds of verbal cues: intonation, inflection, syntax, and pronunciation. Their brain is tasked with identifying complex patterns and connections based on how their parents use language.

But whereas human brains are hard-wired to acquire speech, speech recognition developers have to build the hard wiring themselves.

The challenge is building the language-learning mechanism. There are thousands of languages, accents, and dialects to consider, after all.

That’s not to say we aren’t making progress. In early 2020, researchers at Google were finally able to beat human performance on a broad range of language understanding tasks.

Google’s updated model now performs better than humans in labelling sentences and finding the right answers to a question.

Basic Steps

- A microphone converts the vibrations of a person’s voice into a wavelike electrical signal.

- This signal is in turn converted by the system’s hardware (a computer’s sound card, for example) into a digital signal.

- The speech recognition software analyzes the digital signal to register phonemes, units of sound that distinguish one word from another in a particular language.

- The phonemes are reconstructed into words.
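Turning the microphone’s electrical signal into a digital one comes down to sampling (measuring the signal at regular intervals) and quantization (rounding each measurement to a whole number). A minimal sketch, assuming a 16 kHz sample rate and 16-bit samples (typical for speech, but not stated in the text) and a synthetic tone standing in for the real microphone signal; phoneme recognition itself is not shown.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed; common for speech)
BIT_DEPTH = 16        # bits per sample (assumed; 16-bit PCM)

def analog_voltage(t):
    """Stand-in for the microphone's continuous electrical signal: a 200 Hz tone."""
    return 0.8 * math.sin(2 * math.pi * 200 * t)

def digitize(signal, duration_s, rate=SAMPLE_RATE, bits=BIT_DEPTH):
    """Sample `signal` at `rate` Hz and quantize each value in [-1, 1] to a signed integer."""
    max_int = 2 ** (bits - 1) - 1           # 32767 for 16-bit audio
    n_samples = round(duration_s * rate)
    samples = []
    for n in range(n_samples):
        value = max(-1.0, min(1.0, signal(n / rate)))  # clip to the valid range
        samples.append(round(value * max_int))         # quantize to an integer level
    return samples

pcm = digitize(analog_voltage, duration_s=0.01)  # 10 ms of audio
print(len(pcm), pcm[:5])
```

A sound card does this in hardware; the resulting list of integers is the kind of digital signal the recognition software then analyzes.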

To pick the correct word, the program must rely on context cues, which it gets through trigram analysis.

This method relies on a database of frequent three-word sequences, in which each pair of words is assigned a probability of being followed by a given third word.

Think about the predictive text on your phone’s keyboard. A simple example would be typing “how are” and your phone suggesting “you?” The more you use it, the more it learns your tendencies and suggests your frequently used phrases.
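A toy version of trigram analysis: count three-word sequences in some text and turn the counts into probabilities for the next word. This is a minimal sketch assuming a tiny made-up corpus and plain maximum-likelihood estimates; real systems train on vastly more text and apply smoothing so unseen word combinations don’t get zero probability.

```python
from collections import Counter, defaultdict

def train_trigrams(sentences):
    """Count how often each word follows each two-word context."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for w1, w2, w3 in zip(words, words[1:], words[2:]):
            counts[(w1, w2)][w3] += 1
    return counts

def next_word_probs(counts, w1, w2):
    """Maximum-likelihood P(w3 | w1, w2); an empty dict for an unseen context."""
    following = counts.get((w1.lower(), w2.lower()), Counter())
    total = sum(following.values())
    return {w: c / total for w, c in following.items()} if total else {}

corpus = [  # hypothetical training text
    "how are you today",
    "how are you doing",
    "how are things at work",
]
model = train_trigrams(corpus)
probs = next_word_probs(model, "how", "are")
print(max(probs, key=probs.get), probs)
```

With this corpus, “you” follows “how are” two times out of three, so it is the top suggestion, much like the keyboard example above.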

Speech recognition software works by breaking down the audio of a speech recording into individual sounds, analyzing each sound, using algorithms to find the most probable word fit in that language, and transcribing those sounds into text.

How do companies build speech recognition technology?

A lot of this depends on what you’re trying to achieve and how much you’re willing to invest.

As it stands, there’s no need to start from scratch in terms of coding and acquiring speech data because much of that groundwork has been laid and is available to be built upon.

For instance, you can tap into commercial application programming interfaces (APIs) and access their speech recognition algorithms. The drawback, though, is that they offer limited customization.

You might instead seek out speech recognition resources that can be accessed quickly and efficiently through an easy-to-use API, such as:

- The Speech-to-Text API from Google Cloud

- The Automatic Speech Recognition (ASR) system from Nuance

- The IBM Watson Speech to Text API

From there, you design and develop software to suit your requirements. For example, you might code algorithms and modules in Python.
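As an example of the kind of supporting module you might write in Python, here is a sketch that checks a WAV file’s format before it is sent to a speech-to-text service. It uses only the standard-library `wave` module; the 16 kHz, mono, 16-bit target is an assumption (a common choice for speech), not a requirement taken from any of the APIs named above, so check the documentation of whichever service you use.

```python
import io
import wave

# Assumed target format; adjust to what your chosen API actually accepts.
EXPECTED = {"channels": 1, "sample_width_bytes": 2, "sample_rate": 16_000}

def describe_wav(file_obj):
    """Read basic format information from a WAV file (path or binary file-like object)."""
    with wave.open(file_obj, "rb") as wav:
        return {
            "channels": wav.getnchannels(),
            "sample_width_bytes": wav.getsampwidth(),
            "sample_rate": wav.getframerate(),
            "duration_s": wav.getnframes() / wav.getframerate(),
        }

def format_problems(info, expected=EXPECTED):
    """List every field that differs from the expected format (empty list if none)."""
    return [f"{key}: got {info[key]}, expected {value}"
            for key, value in expected.items() if info[key] != value]

# Usage: build one second of silent 16 kHz mono audio in memory, then check it.
buffer = io.BytesIO()
with wave.open(buffer, "wb") as out:
    out.setnchannels(1)
    out.setsampwidth(2)
    out.setframerate(16_000)
    out.writeframes(b"\x00\x00" * 16_000)
buffer.seek(0)
info = describe_wav(buffer)
print(info, format_problems(info))
```

Catching a wrong sample rate or channel count locally is cheaper than discovering it from a failed or inaccurate API call.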

Regional accents and speech impediments can throw off word recognition platforms, and background noise can be difficult to penetrate, not to mention multiple-voice input. In other words, understanding speech is a much bigger challenge than simply recognizing sounds.

Different Models

- Acoustic : Take the waveform of speech and break it up into small fragments to predict the most likely phonemes in the speech.

- Pronunciation : Take the sounds and tie them together to make words, i.e. associate words with their phonetic representations.

- Language : Take the words and tie them together to make sentences, i.e. predict the most likely sequence of words (or text strings) among a set of candidate text strings.

Algorithms can also combine the predictions of acoustic and language models to output the most likely text string for a given speech file input.
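That combination can be sketched as a scoring function over candidate transcriptions, adding the acoustic model’s log-probability to a weighted language-model log-probability. This is a minimal illustration with made-up probabilities and a hypothetical language-model weight; real decoders search over enormous numbers of hypotheses rather than scoring a short hand-written list.

```python
import math

LM_WEIGHT = 0.8  # hypothetical weight balancing the two models; tuned in practice

def combined_score(acoustic_logprob, lm_logprob, lm_weight=LM_WEIGHT):
    """Log-domain combination: log P(audio | words) + weight * log P(words)."""
    return acoustic_logprob + lm_weight * lm_logprob

def best_transcript(candidates, lm_weight=LM_WEIGHT):
    """Return the text of the candidate with the highest combined score."""
    return max(candidates,
               key=lambda c: combined_score(c["acoustic"], c["lm"], lm_weight))["text"]

# Made-up scores: the two homophone candidates sound identical,
# so only the language model can separate them.
candidates = [
    {"text": "write a letter", "acoustic": math.log(0.30), "lm": math.log(0.020)},
    {"text": "right a letter", "acoustic": math.log(0.30), "lm": math.log(0.0001)},
    {"text": "ride a ladder",  "acoustic": math.log(0.10), "lm": math.log(0.005)},
]
print(best_transcript(candidates))  # prints: write a letter
```

Because “write” and “right” get the same acoustic score, the decision rests entirely on which word sequence the language model considers more likely.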

To further highlight the challenge, speech recognition systems have to be able to distinguish between homophones (words with the same pronunciation but different meanings), to learn the difference between proper names and separate words (“Tim Cook” is a person, not a request for Tim to cook), and more.

After all, speech recognition accuracy is what determines whether voice assistants become a can’t-live-without accessory.
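One standard way to measure that accuracy is word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn a system’s output into a correct reference transcript, divided by the number of words in the reference. A minimal sketch in plain Python using a word-level edit distance; the example sentences are made up.

```python
def word_error_rate(reference, hypothesis):
    """Word error rate: (substitutions + deletions + insertions) / reference length,
    computed with a word-level edit distance (dynamic programming)."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j]: fewest edits turning the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # match (cost 0) or substitution (cost 1)
            )
    return dist[-1][-1] / len(ref)

# One substitution ("is" -> "his") and one deletion ("today") over five reference words.
print(word_error_rate("tim cook is speaking today", "tim cook his speaking"))  # prints 0.4
```

Lower is better; a WER of 0.0 means the output matches the reference word for word.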

How Voice Assistants Bring Speech Recognition into Everyday Life

Speech recognition technology has grown by leaps and bounds in the early 21st century and has quite literally moved into our homes.

Look around you. There could be a handful of devices at your disposal at this very moment.

Let’s look at a few of the leading options.

Apple’s Siri

Apple’s Siri emerged as the first popular voice assistant after its debut in 2011. Since then, it has been integrated into all iPhones, iPads, the Apple Watch, the HomePod, Mac computers, and Apple TV.

Siri is even used as the key user interface in Apple’s CarPlay infotainment system, as well as in the wireless AirPods earbuds and the HomePod Mini.

Siri is with you everywhere you go: on the road, in your home, and, for some, literally on your body. This gave Apple a huge advantage in terms of early adoption.

Naturally, being the earliest quite often means receiving most of the flak for functionality that doesn’t work as expected.

Although Apple had a big head start with Siri, many users expressed frustration at its seeming inability to properly understand and interpret voice commands.

If you asked Siri to send a text message or make a call on your behalf, it could easily do so. However, when it came to interacting with third-party apps, Siri was a little less robust compared to its competitors.

But today, an iPhone user can say, “Hey Siri, I’d like a ride to the airport” or “Hey Siri, order me a car,” and Siri will open whichever ride-service app is on the phone and book the trip.

Improvements to the system’s ability to handle follow-up questions and language translation, along with a revamped, more humanlike voice, are helping to iron out the voice assistant’s user experience.

As of 2021, Apple leads its competitors in availability by country and thus in Siri’s understanding of foreign accents. Siri is available in more than 30 countries and 21 languages – and, in some cases, several different dialects.

Amazon Alexa

Amazon announced Alexa and the Echo to the world in 2014, kicking off the age of the smart speaker.

Alexa is now housed inside the following:

- Echo smart speakers

- Echo Show (a voice-controlled smart display)

- Echo Spot (a voice-controlled alarm clock)

- Echo Buds headphones (Amazon’s version of Apple’s AirPods)

In contrast to Apple, Amazon has always believed the voice assistant with the most “skills” (its term for voice apps on its Echo assistant devices) “will gain a loyal following, even if it sometimes makes mistakes and takes more effort to use”.

Although some users pegged Alexa’s word recognition rate as being a shade behind other voice platforms, the good news is that Alexa adapts to your voice over time, offsetting any issues it may have with your particular accent or dialect.

Speaking of skills, Amazon’s Alexa Skills Kit (ASK) is perhaps what has propelled Alexa forward as a bona fide platform. ASK allows third-party developers to create apps and tap into the power of Alexa without ever needing native support.

Alexa was also ahead of the curve in integrating with smart home devices such as cameras, door locks, entertainment systems, lighting, and thermostats.

This gives users full control of their home, whether they’re cozying up on the couch or on the go. With Amazon’s Smart Home Skill API, you can enable customers to control their connected devices from tens of millions of Alexa-enabled endpoints.

When you ask Siri to add something to your shopping list, she adds it without buying it for you. Alexa, however, goes a step further.

If you ask Alexa to re-order garbage bags, she’ll search Amazon and order some. In fact, you can order millions of products from Amazon without ever lifting a finger, an ability that sets Alexa apart from its competitors.

Google Assistant

How many of us have said or heard “let me Google that for you”? Almost everyone, it seems. It makes sense, then, that Google Assistant prevails when it comes to answering (and understanding) all the questions its users may have.

From translating a phrase into another language to working out how many sticks of butter are in one cup, Google Assistant not only answers correctly but also gives some additional context and cites a source website for the information.