- Open access

- Published: 06 October 2023

A whole learning process-oriented formative assessment framework to cultivate complex skills

Xianlong Xu 1, Wangqi Shen 2, A.Y.M. Atiquil Islam (ORCID: orcid.org/0000-0002-5430-8057) 1,3 & Yang Zhou 1

Humanities and Social Sciences Communications volume 10, Article number: 653 (2023)

In the 21st century, cultivating complex skills has become an urgent educational need, especially in vocational training and learning. The widespread lack of formative assessment during complex problem-solving and skill-learning processes limits students’ self-perception of their weaknesses and teachers’ effective monitoring of students’ mastery of complex skills in class. To investigate how to design and carry out formative assessments for the learning of complex skills, a whole learning process-oriented formative assessment framework for complex skills was established. To verify the feasibility and effects of the formative assessment, a controlled experiment involving 35 students majoring in industrial robotics from one of Shanghai’s Technical Institutes was designed. The results indicate that the formative assessment can effectively promote students’ learning of conceptual knowledge and the construction and automation of cognitive schema as well as improve students’ competency in the implementation and transfer of complex skills. In addition, the formative assessment does not place an additional cognitive load on students; rather, it can optimize the allocation of psychological effort by increasing the proportion of germane cognitive load within the overall cognitive load. The framework can provide methodological support for promoting students’ learning of complex skills.

Introduction

The global, organizational, and technical evolution of the 21st century has created the urgent need for learners to acquire complex skills across all levels of education and training (Maddens et al., 2020; OECD, 2018). Complex 21st century skills (21CS), such as creativity, complex problem solving, and collaborative learning, are usually characterized and understood as skills that integrate conceptual knowledge, operative technique, and social affective attitude, which can help people solve complex and unstructured problems in real life (Alahmad et al., 2021; Jian et al., 2021). The cultivation of students’ complex 21CS is an increasing challenge for people learning and working in current and future societies. Assessment of the learning outcomes of complex 21CS has long been highlighted as one of the most challenging issues that educators and researchers currently face (Eseryel et al., 2013; Iñiguez-Berrozpe and Boeren, 2020; Kerrigan et al., 2021).

In recent decades, the role of educational assessment has expanded from the summative display of students’ acquisition of teaching content to a formative evaluation during the teaching process. The concept of formative assessment emphasizes the integration of instruction and evaluation. It requires a dynamic, continuous framework for evaluating performance that promotes students’ progress and the mastery of increasingly sophisticated skills, which is important for the cultivation of complex 21CS (Ackermans et al., 2021; Care et al., 2019; Nadolski et al., 2021). However, since complex 21CS involve complex learning of different but interconnected knowledge and competencies, formative evaluation practices in school curricula are relatively scarce (Ackermans et al., 2021; Webb et al., 2018). As a result, some key issues remain to be explored concerning how to evaluate and monitor students’ learning progress in complex contexts, such as how and when formative assessment should be offered (Bhagat and Spector, 2017; Kim, 2021).

Complex skill learning

Learning skills to solve ill-structured complex problems has always been an important issue in the field of educational technology (Thima and Chaijaroen, 2021). Complex problem solving is often considered to be the key transferable skill for learners in the 21st century to be competent in multiple working scenarios (Koehler and Vilarinho-Pereira, 2021). Mayer (1992) believes that learners’ experiences with complex problem solving contribute to the formation of their cognitive skills. Merrill (2002a) recommends the Pebble-In-The-Pond design model to provide complex skill training.

Van-Merriënboer and Kester (2014) propose a detailed theoretical explanation for the complex learning process and the composition of complex skills under real problem backgrounds through the Four Components Instructional Design (4C/ID) model, which was introduced in 2002. The process of learning complex skills is considered the integrative realization of a series of learning objectives, including an integrative mastery of knowledge, skills, and attitudes (Frerejean et al., 2019). The implementation of learning activities is based on decomposed complex skills (which are called constituent skills) under the premise of understanding the process of problem solving. During the process of cultivating complex skills, learners must invest sufficient effort into the construction of cognitive schema and achieve rule automation, which is the automation of cognitive schema (Melo, 2018). In addition, to avoid placing an excessive cognitive load on learners during learning tasks, the 4C/ID model draws on Cognitive Load Theory and scaffolds students’ learning by designing components of supportive information, procedural information, and part-task practice within different tasks (Marcellis et al., 2018).

The 4C/ID model reveals the learning process during complex problem solving, which provides researchers and practitioners with direct and clear teaching approaches (Costa et al., 2021; Merrill, 2002b). This model is widely used in the field of complex learning because it can help learners master complex skills and improve skill transfer (Frerejean et al., 2019; Maddens et al., 2020; Zhou et al., 2020). The instructional effect of 4C/ID on complex skills has been confirmed by multiple studies (Melo and Miranda, 2015; Van-Rosmalen et al., 2013). Similarly, the authors’ team found in their research focused on Chinese vocational education that applying the 4C/ID model in instructional design can better enhance students’ academic performance (Xu et al., 2019). Therefore, our study uses the 4C/ID model to explain the process of learning complex skills.

Formative assessment of complex skill learning

Formative assessment has been widely recognized as a preferred means of facilitating learning and providing diagnostic information for students and teachers (McCallum and Milner, 2021). Formative assessment can evaluate each student comprehensively (Li et al., 2020), which is conducive to stimulating students’ internal drive and improving their engagement (Liu, 2022). Meanwhile, formative assessment provides students with feedback about learning as an external reference for self-reflection so as to reduce the metacognitive load, adjust and optimize the original knowledge structure, and continue to develop high-level thinking skills such as analysis, evaluation, and creation (Le and Cai, 2019). Although the idea that assessment design should be developed with learning tasks geared toward acquiring complex skills has been proposed, few studies have practically explored this domain (Frerejean et al., 2019).

Most of the existing research on complex skill evaluation focuses on supporting students’ complex skill learning by applying the 4C/ID model to design curriculum. Summative assessment has been conducted in these studies from the cognitive perspective of skill mastery and cognitive load and the emotional perspective of learning motivation and attitude (Melo and Miranda, 2015; Larmuseau et al., 2019). However, previous studies have gradually revealed the limitations of instructional design based on summative evaluation in promoting the learning of complex skills. In a study of 171 psychological and educational science students, Larmuseau et al. (2018) found that the setting of learning tasks and procedural information had a minimal effect on students’ learning. As such, subsequent research should examine how to help learners choose their own learning task paths better through their collection and evaluation of information in the learning process. The research of Zhou et al. (2020) showed that teachers’ demand-oriented intervention could not effectively narrow the gap in academic performance among students in the class. Ndiaye et al. (2021) call for more in-depth measurement and intervention in the teaching process. In short, these studies illustrate the urgency of formative assessment design and practical research in the field of complex skill learning.

However, current research on the formative assessment of complex skills has faced a series of challenges, including the need to construct an assessment framework concerning specific complex skills, the need to determine how and when to provide formative assessment, and the need to determine the impact of formative assessment on complex skill learning (Bhagat and Spector, 2017; Kim, 2021; Min, 2012). Recently, some researchers have started to focus on the design and implementation of formative assessment and the development of an assessment system. A common method is to provide corrective or cognitive feedback when designing support information for learning tasks. For example, Marcellis et al. (2018) provided real-time corrective feedback for learners through a Lint code analyzer offered by Android Studio; they also provided cognitive feedback for learners by offering lectures on the problem-solving process. Frerejean et al. (2019) described a medical practice course based on the 4C/ID model in a blended learning environment in which peers and educators provided corrective and cognitive feedback upon the completion of tasks to promote students’ self-reflection on rules and problem-solving mental models. Leif et al. (2020) hold that it is necessary to incorporate procedural information composed of operational instruction with corrective feedback using the construction of digital learning environments for complex skill learning based on the 4C/ID model to help students learn rules according to their needs.

Existing studies have put forward some operational modes for how to design courses and provide formative assessment in an ICT-integrated learning environment. However, the main problem lies in the application of formative assessment methods to teaching processes in real classes. On the one hand, learners’ understanding of evaluation indicators is the key factor in determining whether formative evaluation can effectively help them identify and overcome their own weaknesses. The teaching process and assessment should be consistent to ensure that students can successfully solve learning tasks; however, existing instructional and assessment frameworks fail to do this (Larmuseau, 2020; Pardo et al., 2019; Van-Gog et al., 2010). On the other hand, as subject experts conducting evaluation in classroom settings, teachers play an important role in monitoring learners’ complex skill learning processes and identifying students’ problems (Crossouard, 2011; Sulaiman et al., 2020). However, existing studies rarely discuss how to provide teachers with feedback channels concerning formative assessment results to improve the effectiveness of assessment.

Few studies have explored how to implement formative evaluation and obtain helpful feedback on complex skill learning in class. Frerejean et al. (2021) offer guidance on how to provide formative assessment to cultivate teachers’ differentiated instruction skills. However, although the study theoretically describes the formative assessment design process of complex skill learning to a relatively complete extent, it does not verify the effectiveness of the formative assessment on students’ cognitive and emotional development.

Research questions

Previous research has mainly focused on how ICT can support the timely provision of feedback. Only a few studies have focused on the whole process of formative assessment intervention during learning processes from the perspective of formative assessment framework construction. Meanwhile, there is a lack of analysis on the effect of formative assessment intervention from multiple points of view. Therefore, to monitor and intervene in complex skill learning processes effectively, we aim to construct a formative assessment framework that focuses on the whole learning process of complex skills. Three research questions have been raised to address the current lack of information in this field:

How could the formative assessment model be established to assess the learning progress dynamically, longitudinally, and comprehensively?

How should formative assessment be designed and administered during the complex skill learning processes?

How could the effect of formative assessment on the complex skill learning process be evaluated and understood?

To address these questions, a controlled experimental study was carried out on the complex skill of “Industrial Robot Programming.” Thirty-five students from one of Shanghai’s Technical Institutes participated in this research.

Whole learning process–oriented formative assessment framework for complex skill learning

The first step in accomplishing a dynamic, longitudinal, and comprehensive assessment of students’ learning is understanding the entire scope of complex skill learning processes. The effective cultivation of students’ complex skills requires a systematic approach which could reflect a contemporary understanding of the educational relationships between learning inputs, learning processes, and learning outputs.

Astin (1993) proposed the I-E-O model and argued that students’ input and environment can influence their output. The System Theory developed by Bertalanffy also holds that input influences output (Bertalanffy, 1968; Yanto et al., 2011). Salam (2015) suggested that, in the educational domain, teachers and researchers who want to implement teaching systematically should consider input, process, and output and determine the relationships between learning objectives, content, methods, and evaluation. Therefore, combining the 4C/ID theory and the above elements, the key concepts for the whole complex skill learning process are extracted, including learning objectives in specialized courses, learning environment, construction, automation and transfer of cognitive schema, formative evaluation, mastery of complex skills and problem solving. They correspond to the input, environment, content, method, process, and output in the learning process, respectively.

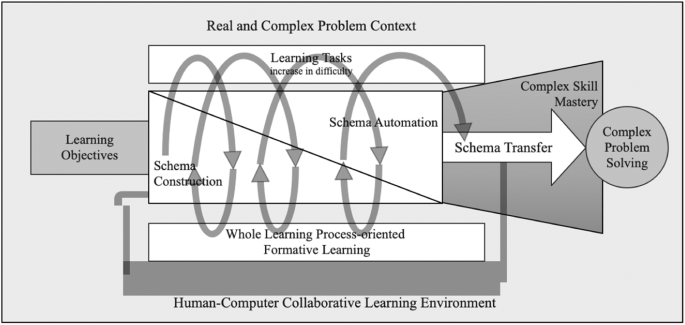

Moreover, to reveal students’ concrete learning process, we must ascertain the pathways for complex skill learning. The instructional design method of the spiral curriculum proposed by Bruner (1977) and the Spiral Human Performance Improvement (HPI) Framework proposed by Marker et al. (2014) provided us with inspiration. Bruner indicated that students’ mastery of knowledge often climbs from simple to complex. Marker et al. used spiral channels in their spiral HPI framework to represent the cyclical thinking process that professionals experience when facing complex problems. Based on these ideas, we defined the learning process as the process of developing cognitive schemas supported by a series of learning tasks with increasing difficulty and repetitive practice tasks, during which formative assessment ensures student learning and promotes the cycle of the learning process for complex skills. This learning process needs to be carried out smoothly with the support of specific technical environments. Finally, we proposed the Spiral Complex Skill Learning Framework (SCSLF).

As shown in Fig. 1, the whole process of learning complex skills is composed of learning input, learning environments and processes, and learning output. Learning input refers to learning objectives that have been designed with regard to learners’ characteristics and the learning content involved in the given complex skills. The learning environment and process refer to the cultivation of students’ cognition and attitude regarding the learning situation, learning task, assessment, and technical environment. The learning environment includes learning tasks designed in real and complex problem situations and formative assessment based on the human-computer collaborative assessment environment. In addition, as the process of learning complex skills often involves repeated exercises of constituent skills and the increase of task difficulty, which is consistent with the instructional design of Bruner’s (1977) spiral curriculum, the spiral channel in the SCSLF is used to represent students’ complete learning path. During the learning process, students acquire complex skills through steps ranging from simple to complex: students repeatedly construct proficient cognitive schemas through learning tasks with increasing difficulty and formative feedback, and they acquire the ability to use and transfer schemas comprehensively. After completing relatively lower-level complex skills by following the above process, students attempt to learn more difficult complex skills until they eventually master them all. Learning output refers to the students’ ability to solve problems in similar situations using the complex skill.

The figure presents the whole process of learning complex skills.

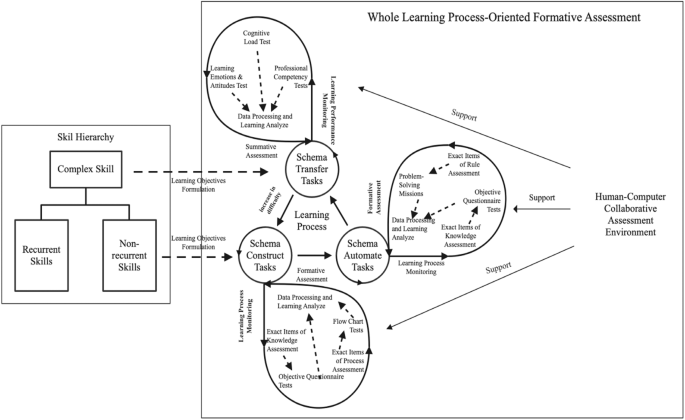

On this basis, and drawing on the understanding that the SCSLF provides, we determined the development method of formative evaluation tools, the implementation process and approaches of formative assessment, and the effect verification method. The formative assessment design for the whole learning process is shown in Fig. 2.

The figure indicates the whole learning process–oriented formative assessment process for complex skill learning.

First, since the learning process is an upward spiral that integrates schema construction, schema automation, and schema transfer, it is possible to establish learning objectives of constituent skills within each level based on skill hierarchy relationships. Thus, we broke down corresponding task objectives to form a comprehensive and measurable assessment indicator system.

Second, the formative assessment tools were developed. Based on the 4C/ID model, the formative assessment process was integrated into the tasks of schema construction, schema proficiency, and comprehensive application and transfer. According to the assessment indicators system above, the corresponding conceptual knowledge tests, process tests, rule tests, and comprehensive project tests were designed to monitor and promote students’ learning process.

Third, we made recommendations for the environment in which the course would take place. The effective implementation of evaluation tasks, as well as the collection, processing, and feedback of learning process data could be conducted through a human-machine collaborative assessment environment.

Finally, to verify the effectiveness of the formative assessment for the whole complex skill learning process, we carried out a summative evaluation of learners’ academic performance through a comprehensive transfer task of complex skills. Because the learner’s cognitive load, learning attitude, and emotional factors also play important roles in the learning process, we added relevant tests to the summative evaluation to further verify the influence of formative assessment.

As mentioned above, we explored how to integrate formative assessment into the overall process of students’ complex skill learning in aspects of theory and methodology. We explored the feasibility and means of applying the whole learning process-oriented formative assessment framework for learning real complex skills in the classroom and ascertained its effectiveness in promoting students’ complex skill cultivation.

Industrial Robot Trajectory Programming is a professional course for Mechatronics students at the Shanghai Technical Institute of Electronics & Information (STIEI). In this course, students are required to configure a workstation that can make an industrial robot move according to a specific trajectory in a simulated environment. The environment is created by a computer that mimics a real-world situation.

Freshmen majoring in Mechatronics generally have difficulty understanding and mastering the knowledge and skills related to industrial robot programming, and they lack practical ability. To improve students’ learning of complex skills, our team spent a semester with teachers to design a full-task course based on the 4C/ID model. In the previous study, students who received full-task instruction demonstrated better academic performance after the course than those who received traditional lecture-and-exercise-based learning. However, through communication with teachers, we noticed that there were still large learning gaps between application skills and transferable skills. These problems further raised our expectations that formative assessment would improve students’ learning of complex skills. Therefore, we applied the whole learning process-oriented formative assessment framework to the Industrial Robot Trajectory Programming course to improve the learning effect of the whole task-based course.

The experiment and data collection procedure for the study was approved by the Research Ethics Panel of East China Normal University in China. The authors’ team successfully passed the relevant ethics tests. Before conducting the experiment, we conducted verbal recruitment of participants and obtained informed consent from collaborating schools, teachers, and students. Sensitive data collected during the experiment is stored securely in the researchers’ personal database and kept strictly confidential. All data will be completely destroyed within three years after the research is concluded.

Participants

Thirty-five students from two classes in the Mechatronics Division in STIEI participated in the experiment. They attended the Industrial Robot Trajectory Programming course in their second year and had at least two lessons a week of one and a half hours each during the study. Neither class had any experience in programming industrial robots. Before the experiment, we conducted a pre-test on the knowledge concepts related to the course for the two classes. The students in both classes obtained similarly low scores, and there was no significant difference between the two classes. As such, we randomly assigned one class to be the experimental group (16 students) and the other to be the control group (19 students). Teachers at STIEI volunteered to participate in the study and were responsible for teaching under the two experimental conditions.

Designing the formative assessment of industrial robot programming skill learning

Constructing a formative assessment indicators system

The first step in achieving comprehensive and dynamic evaluation and monitoring of learning processes for complex skills was to construct a formative assessment index system for complex skills. In order to extract well-organized, measurable, and systematic evaluation indicators from the learning objectives of the course, it was necessary to divide the learning objectives into different phases.

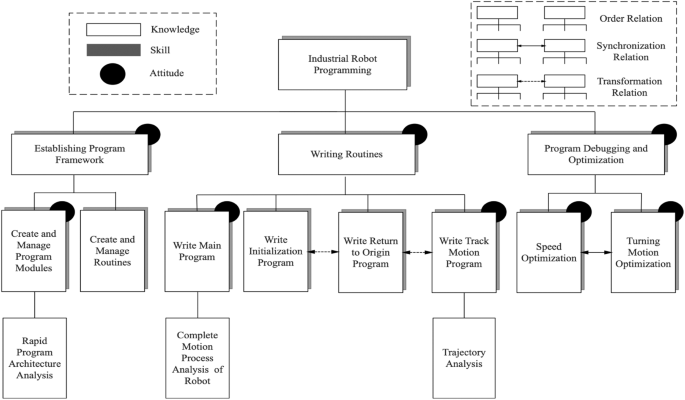

After consulting researchers and professional teachers who were experts in the field, we defined the learning objective of “Industrial Robot Trajectory Programming” as the ability to skillfully configure a robot trajectory motion workstation in an industrial robot simulation program based on the analysis of customer needs. This included the construction of a robot program framework, the compilation of a main program and other functional programs, and the optimization and debugging of the program to successfully and smoothly solve trajectory and motion problems. The skill hierarchy of Industrial Robot Trajectory Programming is shown in Fig. 3.

The figure presents the skill hierarchy of industrial robot trajectory programming.

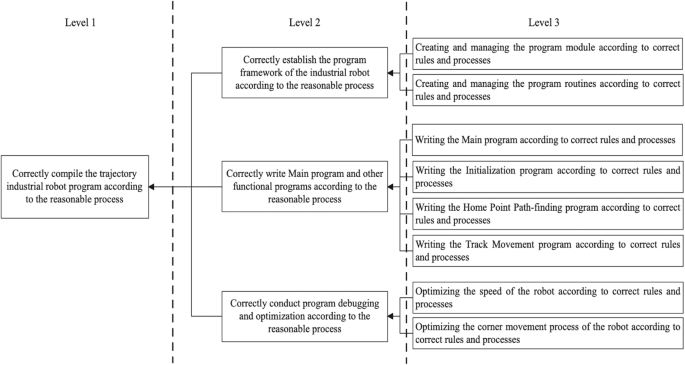

After dividing recurrent and non-recurrent skills, we designed the formative assessment indicator system based on the requirements of the learning objectives of each constituent skill (see Fig. 4). As can be seen in Table 1, we proposed eighteen specific assessment points. These cover concepts and attitudes related to problem-solving, the process of problem-solving, rules of problem-solving, and the ability to implement and transfer the complex skills comprehensively. These assessment points indicate the corresponding relationships between formative assessment objectives and key points of assessment.

The figure shows the formative assessment objectives hierarchy of the industrial robot programming skill.

Designing formative assessment tasks

To discern when the assessment should be given and to implement real-time monitoring of learning, we designed different types of learning tasks into which assessment indicators were integrated.

According to the key indicators of formative assessment, four kinds of assessment tests were used to investigate learners’ academic performance in terms of the construction, automation, comprehensive implementation, and transfer of cognitive schema during the learning process. First, the knowledge test was designed to evaluate learners’ concept mastery through objective questions and answers. Second, the process test was designed to examine the completeness and accuracy of the learner’s grasp of the problem-solving process in the form of a flow chart. Third, the rule test was used to examine the learner’s ability to use operational skills and related rules to solve practical problems in specific situations. Finally, the implementation and transfer test examined learners’ application and transfer ability through the completion of comprehensive tasks in similar situations.

According to the above assessment test types, four formative assessment tasks and one summative task for the industrial robot programming skill learning were designed, as shown in Table 2. Task 1 mainly assessed whether students had mastered the process of programming robot trajectory motion according to the specific needs of different customers. Tasks 2 to 4 mainly assessed whether the students had mastered the skills required to create a qualified program framework and to write and optimize the program according to the specific needs of different customers with fixed rules and operating steps. Task 5 mainly assessed whether the students had mastered the analysis and creation of industrial robot trajectory motion programming in similar situations according to the different needs of customers.

After implementing the aforementioned process-oriented assessment, the evaluation results were provided to students individually, and the aggregated statistics were provided to the teacher in both the experimental and control groups. Teachers offered guidance to individual students or the entire class based on the concepts with higher error rates, incorrect problem-solving procedures, and mistakes made during program execution. This guidance was delivered through direct lectures, breaking down problem-solving processes and identifying the causes of errors, and offering one-on-one operational support, among other methods.
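The reporting step described above (individual results for students, aggregated statistics for the teacher) can be illustrated with a minimal Python sketch. This is not the authors’ implementation. The record format, the function names, the placeholder assessment point identifiers (`K1`, `P1`), and the 0.5 error-rate threshold are all assumptions made for illustration only.

```python
from collections import defaultdict

# Hypothetical record format: (student_id, assessment_point, is_correct).
# "K1" and "P1" are placeholder identifiers, not indicators from the paper.
SAMPLE_RESULTS = [
    ("s01", "K1", True),
    ("s01", "P1", False),
    ("s02", "K1", False),
    ("s02", "P1", False),
    ("s03", "K1", True),
    ("s03", "P1", True),
]


def error_rates(results):
    """Return the share of incorrect responses per assessment point."""
    attempts = defaultdict(int)
    errors = defaultdict(int)
    for _student, point, is_correct in results:
        attempts[point] += 1
        if not is_correct:
            errors[point] += 1
    return {point: errors[point] / attempts[point] for point in attempts}


def points_needing_guidance(results, threshold=0.5):
    """Teacher view: (point, error_rate) pairs at or above an assumed
    threshold, ordered from highest to lowest error rate."""
    rates = error_rates(results)
    flagged = [(p, r) for p, r in rates.items() if r >= threshold]
    return sorted(flagged, key=lambda pair: (-pair[1], pair[0]))


def student_report(results, student_id):
    """Student view: the assessment points this student answered incorrectly."""
    return sorted({p for s, p, ok in results if s == student_id and not ok})


if __name__ == "__main__":
    print(points_needing_guidance(SAMPLE_RESULTS))
    print(student_report(SAMPLE_RESULTS, "s02"))
```

Keeping the class-level and student-level views as separate functions mirrors the two feedback audiences in the text: a teacher can decide between whole-class and one-on-one guidance from the first, while each student sees only their own weak points from the second.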

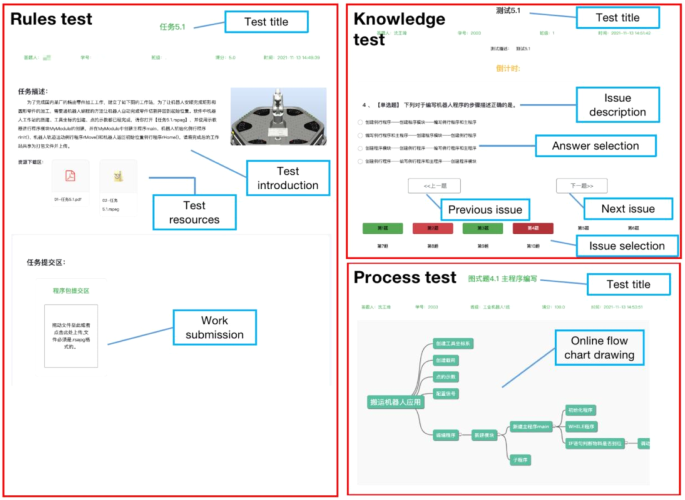

Human-computer collaborative assessment environment

The next problem lay in determining how the assessment should be carried out, specifically, how to collect and process the data acquired from students’ task results and to offer instantaneous feedback to teachers and students. The Complex Skills Automatic Assessment System (CSAAS) is an automatic human-computer collaborative assessment system developed by the authors’ research group to address this problem.

The CSAAS is a computer-based learning environment designed for technical and vocational education and training students in Industrial Robotics. It presents hypermedia materials, collects and analyzes data, and visualizes the learning process and outcomes. The CSAAS encompasses a behavioral recording/analyzing module, an assessment module, and a feedback module to assess students’ learning trajectories and outcomes. The effect of CSAAS on students’ complex skill learning behavior has been verified through an 8-week experiment in which the students showed high academic performance, perceived usefulness and satisfaction with the system. The relevant research results have been compiled into an academic article that is currently under review.

As shown in Fig. 5, CSAAS offers an interface in which students can submit compressed packages, question answers, flow charts, and other contents according to the requirements of different tasks. The data acquisition methods of different measurements are defined according to different aspects of the course content. For example, knowledge test results and flow chart data were collected automatically by the computer, whereas for the rules test, students uploaded their products to the system in a classroom equipped with computers. Different data processing methods were adopted accordingly: the computer automatically processed the knowledge test data, and the data from the flow chart and rules tests were adjusted and scored manually. After the automated collection and manual processing of the test results, the CSAAS was used to perform statistical analysis on the collected data and to provide specific feedback items for each test based on the formative assessment indicators framework to support students’ schema modification.

The figure presents the CSAAS interface, in which students can submit compressed packages, question answers and flow charts.
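The split between automatic and manual processing described above can be sketched in Python as follows. This is an illustrative assumption, not the CSAAS code: the `Submission` class, the `score` and `feedback_items` functions, the test-type labels, the indicator identifiers, and the 0.6 mastery cut-off are all hypothetical. The source does not state how the comprehensive project test is scored, so the sketch leaves it out.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed routing, following the text: knowledge tests are processed
# automatically; flow chart (process) and rule tests are scored manually.
AUTO_SCORED = {"knowledge"}
MANUALLY_SCORED = {"process", "rule"}


@dataclass
class Submission:
    student_id: str
    test_type: str          # "knowledge", "process", or "rule" (hypothetical labels)
    indicator: str          # hypothetical assessment indicator id
    answer: str             # answer text or uploaded file name
    manual_score: Optional[float] = None  # 0..1, filled in by a human scorer


def score(sub, answer_key):
    """Return a score in [0, 1], or None if manual scoring is still pending."""
    if sub.test_type in AUTO_SCORED:
        expected = answer_key[sub.indicator]
        matches = sub.answer.strip().lower() == expected.strip().lower()
        return 1.0 if matches else 0.0
    if sub.test_type in MANUALLY_SCORED:
        return sub.manual_score
    raise ValueError(f"unknown test type: {sub.test_type!r}")


def feedback_items(submissions, answer_key, mastery=0.6):
    """Build per-indicator feedback entries for scores below an assumed
    mastery cut-off, and note submissions still waiting for a scorer."""
    items = []
    for sub in submissions:
        value = score(sub, answer_key)
        if value is None:
            items.append((sub.student_id, sub.indicator, "awaiting manual scoring"))
        elif value < mastery:
            items.append((sub.student_id, sub.indicator, "review needed"))
    return items
```

For example, a list containing one correct knowledge answer, one manually scored flow chart at 0.4, and one unscored rule-test upload would yield a "review needed" entry for the flow chart and an "awaiting manual scoring" entry for the upload, which is the kind of indicator-level feedback item the paragraph describes.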

Verifying the effectiveness of whole learning process–oriented formative assessment

Lastly, to verify the effectiveness of the formative evaluation of the robot programming skill across the whole learning process, we carried out a summative assessment of complex skills after students had completed the learning processes of all constituent skills. Usually, assigning a comprehensive task concerning transfer competencies is the most direct and effective way to determine the influence of formative assessment on complex learning. Based on assessment Task 5, students’ knowledge concept acquisition, the completeness of schema construction, and the standardization and proficiency of schema application were tested in a similar and real robot programming problem setting. Another method used was to conduct a longitudinal comparison of students’ personal competencies to verify the effect of formative assessment through the implementation of timely feedback and intervention based on assessment results. The current study analyzes learners’ procedural learning status and final mastery of each aspect of industrial robot programming skills based on the formative assessment indicators system.

In addition, complex learning tasks and process assessment may aggravate students’ cognitive load, resulting in a negative impact on learning (Larmuseau et al., 2019; Marcellis et al., 2018; Melo, 2018). Motivational characteristics such as self-efficacy are important components for the understanding of students’ learning output and problem solving (Larmuseau et al., 2018; Peranginangin et al., 2019). We used validated questionnaires to examine the impact of formative assessment on learners’ cognitive load and self-efficacy. The purpose was to explain the influence of the complex skill learning process on learners’ internal emotions and psychological efforts under the guidance of the whole learning process–oriented assessment.

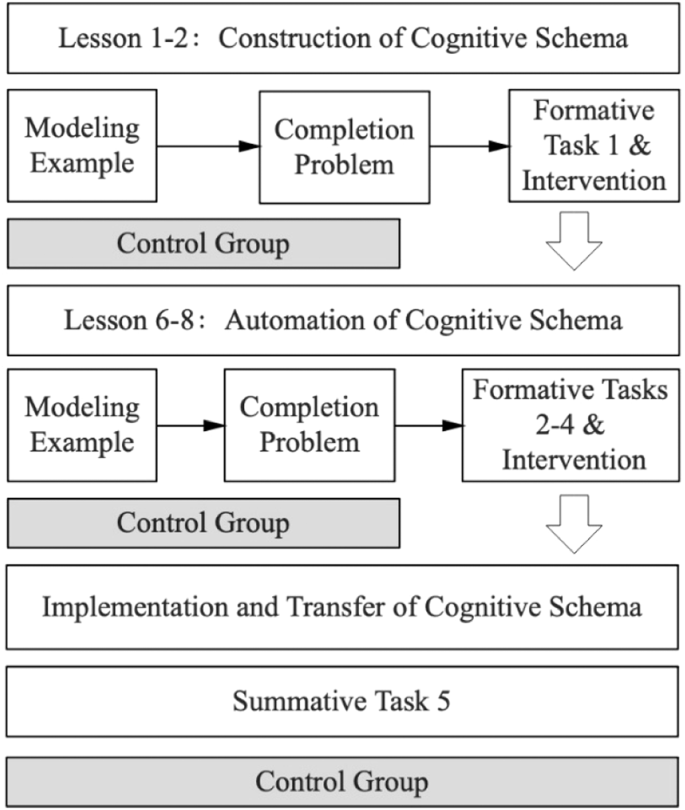

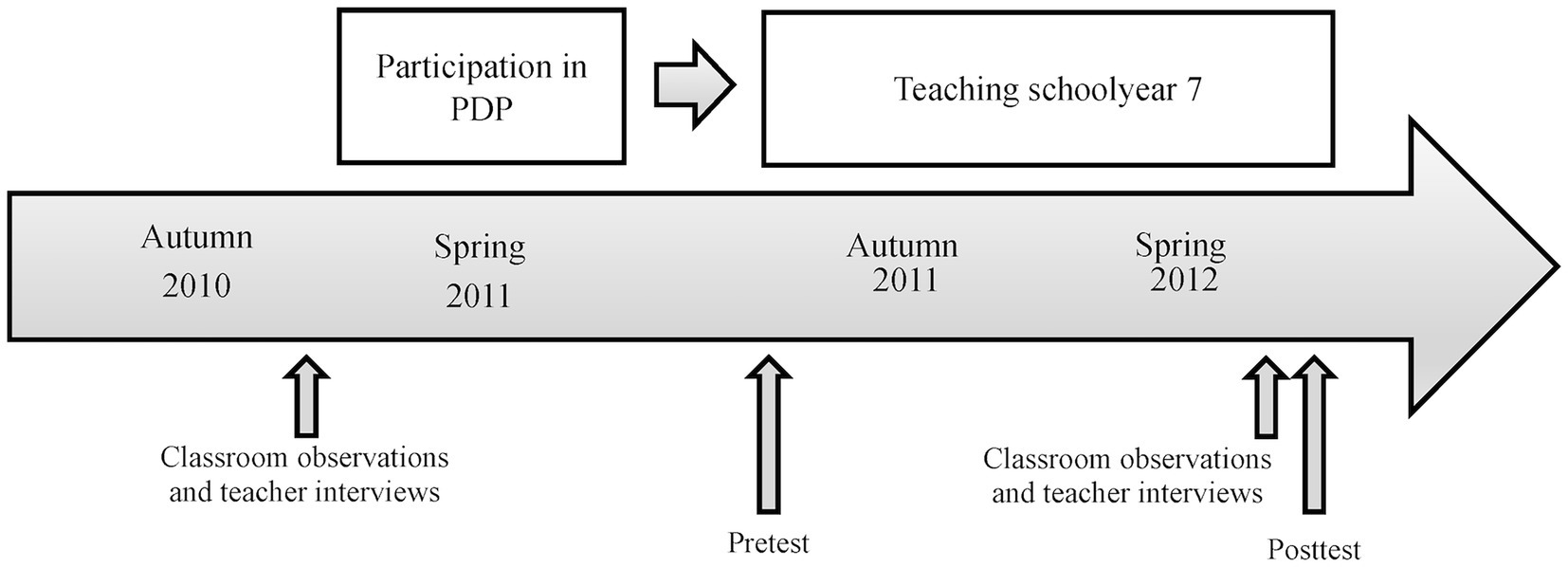

Experimental procedures

A total of eight lessons were designed, and two lessons were taught every week (see Fig. 6). Since it is impossible to control the complexity and order of complex tasks in class, we followed the method of Frerejean et al. (2021), which recommends focusing learners’ attention on different aspects of complex skills in a specific order and setting up real and complex tasks with the same scenarios to help learners identify and connect the relationships between different constituent skills. In the experimental group, case tasks were offered during the first and second weeks to help students construct schemas and learn knowledge related to industrial robot programming. Students in the experimental group gradually constructed and automated schemas in lessons 1–8. Tasks 1–4 were completed in the students’ academic context. One week after the end of the course, we administered the summative assessment Task 5 to students in the experimental group. The control group was taught according to the same learning task sequence, but the obtained data were not formatively evaluated, and learning feedback was not provided.

The figure indicates a total of eight lessons were designed, and two lessons were taught every week.

Instruments

As shown in Table 3, we established an academic performance scale that displayed students’ knowledge mastery, schema construction, and rule automation during the learning process, as well as students’ complex skill transfer performance from a comprehensive perspective. The scale content consists of 18 key points of measurement in the assessment point system, which are further subdivided into 58 specific items. Researchers and course experts jointly determined the content and weight of the evaluation points. Formative assessment Tasks 1–4 each contain their corresponding parts (see Table 1 and Table 2). Summative Task 5 involved all 58 assessment points in the students’ learning process and was designed to characterize students’ comprehensive implementation and transfer competencies regarding complex skills. The questions and answers of the formative and summative evaluations were written by two coders independently under the same evaluation guide. In the early stages, the difficulties and differences of the tests were discussed, and an agreement was reached to ensure a unified evaluation.
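The weighting of assessment points described above can be illustrated with a minimal Python sketch. It is not the scoring procedure used in the study: the key-point labels, item scores, and weights below are hypothetical, and the sketch simply assumes that each key point's score rate is the share of item points earned and that the overall score is a weighted mean of those rates.

```python
from typing import Dict, List


def key_point_rate(item_scores: List[float], item_max: List[float]) -> float:
    """Score rate (0..1) of one key point, computed from its specific items."""
    return sum(item_scores) / sum(item_max)


def weighted_total(rates: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted mean of key-point score rates; weights need not sum to 1."""
    total_weight = sum(weights[k] for k in rates)
    return sum(rates[k] * weights[k] for k in rates) / total_weight


# Hypothetical data: two key points, each subdivided into a few items.
rates = {
    "KI1": key_point_rate([2, 1, 2], [2, 2, 2]),  # 5 of 6 points earned
    "KI2": key_point_rate([1, 1], [2, 2]),        # 2 of 4 points earned
}
weights = {"KI1": 0.6, "KI2": 0.4}  # assumed expert-assigned weights

print(round(weighted_total(rates, weights), 3))
```

Normalizing by the sum of the weights keeps the result on a 0 to 1 scale even when a task covers only a subset of the key points, as is the case for Tasks 1–4.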

Cognitive load and self-efficacy were measured in the summative assessment. As shown in Table 4, items q1–q14 were used to measure cognitive load, and a 5-point Likert scale was designed to reflect students’ psychological load and effort in the process of learning regarding psychological condition, emotional experience, effective teaching, curriculum arrangement, and autonomous learning (Hwang et al., 2013; Ji, 2012). The 14 items on the scale aimed to analyze the impact of the whole learning process–oriented assessment framework on students’ complex skill learning through the investigation of students’ intrinsic, extraneous, and germane cognitive load during learning (Chen, 2007; Hadie and Yusoff, 2016). The validity and reliability of the questionnaire were verified with data from 107 students majoring in robotics at a Shanghai vocational and technical school. According to the statistical results of SPSS, the Cronbach’s α coefficients of the intrinsic, extraneous, and germane aspects of cognitive load were 0.976, 0.990, and 0.981, respectively, and the KMO measure of sampling adequacy was 0.893, which is higher than the minimally acceptable value of 0.5 proposed by Kaiser and Rice (1974).

The self-efficacy questionnaire for students using complex skills to program industrial robotics was developed based on the research of Peranginangin et al. (2019) and Semilarski et al. (2021). Items q15–q18 were used to measure self-efficacy on a 5-point Likert scale ranging from “totally disagree” to “totally agree”. According to the statistical results from the 107 students, the Cronbach’s α coefficient of the self-efficacy scale was 0.931, and the KMO measure of sampling adequacy was 0.844.
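For readers unfamiliar with the reliability coefficient reported above, the following Python sketch shows how Cronbach’s α is commonly computed from item-level responses. The study itself used SPSS; the function name and the response data here are hypothetical and serve only as an illustration of the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores).

```python
from statistics import variance
from typing import List


def cronbach_alpha(responses: List[List[float]]) -> float:
    """Cronbach's alpha; responses[r][i] is respondent r's score on item i."""
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # transpose to per-item columns
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical 5-point Likert answers from five respondents on four items.
sample = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [5, 5, 5, 4],
    [3, 3, 3, 3],
    [1, 2, 1, 2],
]

print(round(cronbach_alpha(sample), 3))
```

Values close to 1, such as those reported for the cognitive load subscales, indicate that the items of a subscale vary together closely across respondents.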

Data analyses

First, to verify the feasibility and effectiveness of the formative assessment framework, the effect of formative assessment application on complex skills was processed and analyzed using descriptive statistics, and line charts were drawn. Second, to understand the specific effects of applying the formative assessment framework, descriptive statistics and a t-test were carried out for academic success, and area maps were drawn. Finally, descriptive statistics and a t-test were carried out on the questionnaire data regarding cognitive load and self-efficacy to understand the cognitive load and self-efficacy of students in this process.

Effectiveness of formative assessment for complex skill learning

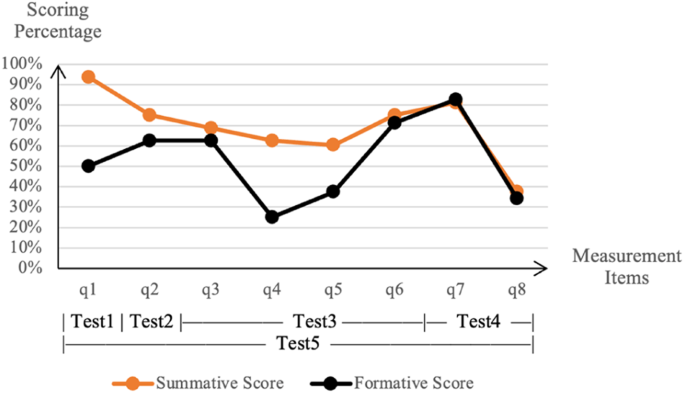

Figure 7 compares learners’ mastery of knowledge and concepts in the test tasks in both the formative assessment and the summative assessment. During the learning process, the formative assessment and immediate feedback based on teachers’ explanations effectively promoted students’ mastery of knowledge, especially for learners who had poor mastery of robot programming and application concepts and robot command concepts. However, for measurement items q7–q8, which represent the relationship between turning motion parameters and actual robot movement, the formative assessment failed to cultivate learners’ knowledge. This phenomenon might have occurred due to this study’s time constraints. The summative assessment was carried out immediately after students received the feedback on the test 4 results, which did not give them much time to review related knowledge.

The figure presents the comparison of objective formative and summative scores concerning knowledge and concept in the experimental group.

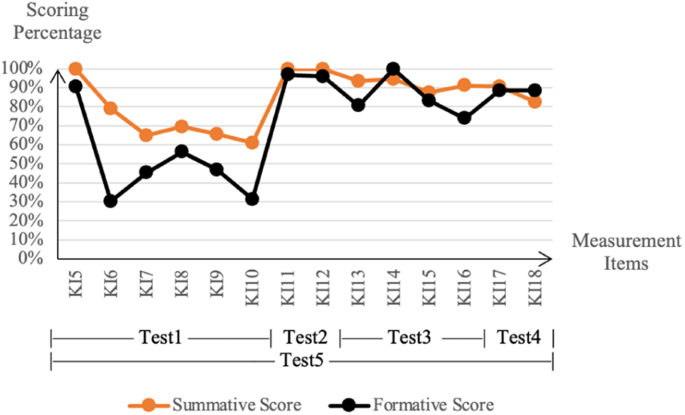

The promotion effect of the formative assessment framework for learning processes on learners’ complex skill schema construction and automation can be verified by comparing the scores of assessment points in Tasks 1–4 with the corresponding assessment points in Task 5. Data analysis results are shown in Fig. 8 . The results show that in schema construction, the score rates of students for assessment points KI6-10 were less than 60%, which indicates that learners did not establish a complete cognitive schema for robot programming. After receiving cognitive feedback from teachers based on formative assessment, learners’ construction and mastery of overall schema increased dramatically.
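The comparison described above can be sketched in a few lines of Python. The score rates below are invented for illustration and are not the values plotted in Fig. 8; the sketch only assumes that each assessment point has one score rate from its formative task and one from Task 5, and that 60% is the level used to flag weak points.

```python
from typing import Dict, List

THRESHOLD = 0.60  # the 60% score-rate level mentioned in the text


def weak_points(formative: Dict[str, float]) -> List[str]:
    """Assessment points whose formative score rate is below the threshold."""
    return sorted(k for k, rate in formative.items() if rate < THRESHOLD)


def gains(formative: Dict[str, float], summative: Dict[str, float]) -> Dict[str, float]:
    """Change in score rate from the formative task to the summative task."""
    return {k: round(summative[k] - formative[k], 2)
            for k in formative if k in summative}


# Hypothetical score rates for three assessment points.
formative = {"KI6": 0.45, "KI7": 0.55, "KI13": 0.70}
summative = {"KI6": 0.80, "KI7": 0.85, "KI13": 0.90}

print(weak_points(formative))
print(gains(formative, summative))
```

Listing the flagged points next to their gains makes it easy to see whether the largest improvements occur where the formative score rate was lowest.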

The figure indicates the results of comparison between formative and summative score concerning schema construction and automation in the experimental group.

The results also show that in terms of schema automation, although learners had mastered most of the operating skills and working rules of industrial robot programming well, the application of the complex skill process evaluation framework further improved the learning effect in some relatively weak assessment points. For the assessment points that students had mastered poorly (such as KI13 and KI15), formative assessment offered a better intervention effect. However, formative assessment offered a limited effect on constituent skills that learners had already mastered during the learning process. Through the evaluation of learners’ ability to apply complex skill transfer, we found that learners’ mastery of each assessment indicator generally reached 80%, indicating that the application of the complex skill process evaluation framework can promote learners’ schema construction to a certain extent in weak areas of complex skill learning.

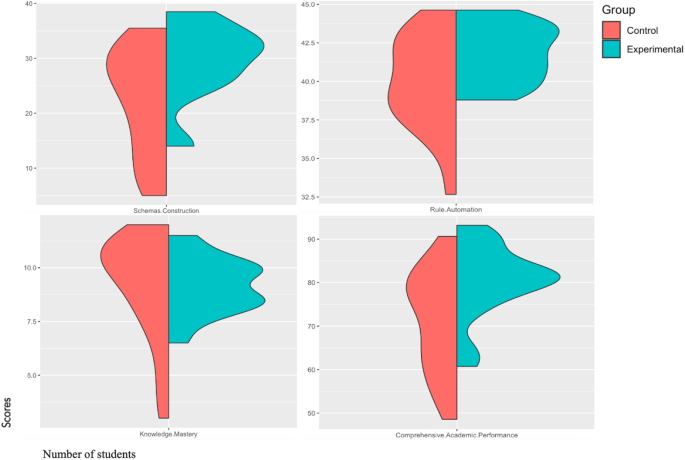

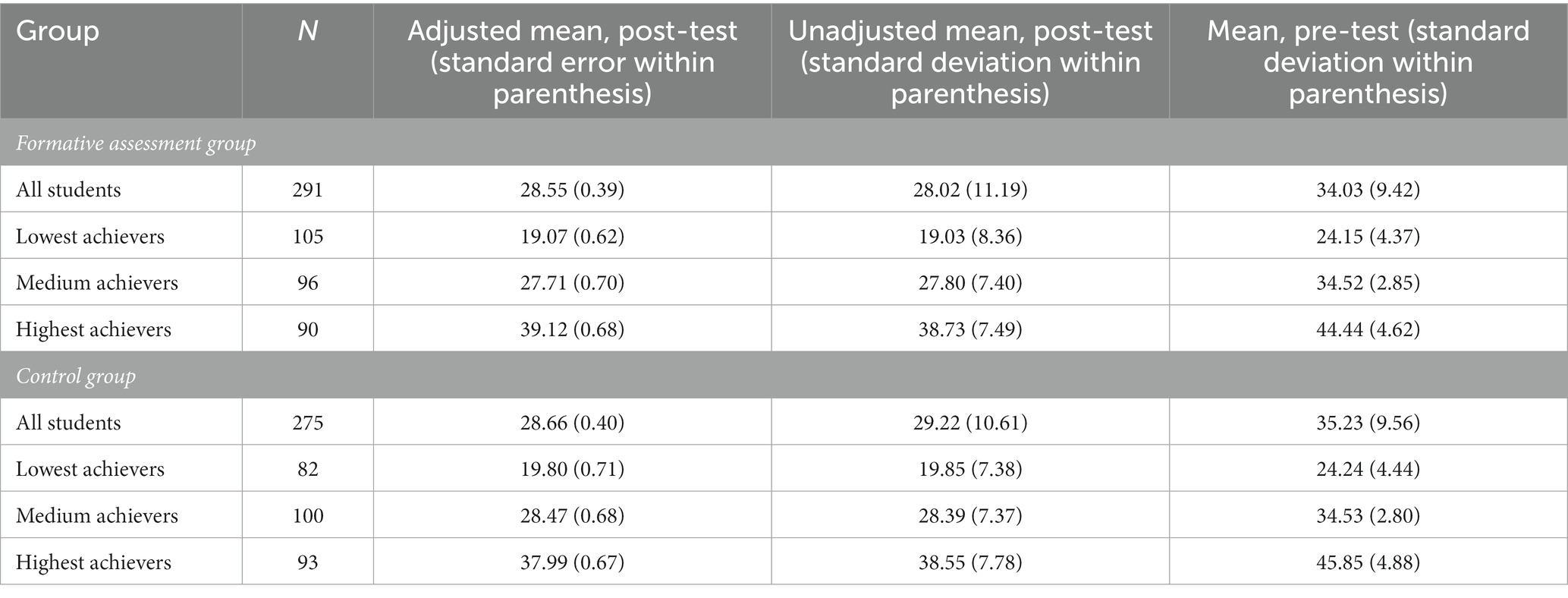

Academic success

Task 5 was used to verify the results of students’ implementation and transfer competency of complex skills. According to the results of the independent-samples t-test, there were significant differences in the application of complex skill transfer between the experimental group and the control group (t = 2.56, p = 0.015, Cohen’s d = 0.86). The academic performance of students in the experimental group in schema construction (t = 2.38, p = 0.024, Cohen’s d = 0.87) and schema proficiency (t = 2.083, p = 0.045, Cohen’s d = 0.72) was significantly higher than that of the control group. However, in terms of memorizing knowledge concepts, the students in the experimental group were only slightly, and not significantly, better than those in the control group (t = 0.038, p = 0.970).
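As an illustration of the statistics reported above, the following Python sketch computes an independent-samples t statistic and Cohen’s d from two lists of scores. It assumes equal variances (Student’s t with a pooled standard deviation), which may differ from the settings the authors used in their statistical software, and the scores are hypothetical rather than taken from the study’s dataset.

```python
from math import sqrt
from statistics import mean, variance
from typing import List


def pooled_sd(a: List[float], b: List[float]) -> float:
    """Pooled standard deviation of two independent samples."""
    na, nb = len(a), len(b)
    return sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))


def student_t(a: List[float], b: List[float]) -> float:
    """Independent-samples t statistic, assuming equal variances."""
    return (mean(a) - mean(b)) / (pooled_sd(a, b) * sqrt(1 / len(a) + 1 / len(b)))


def cohens_d(a: List[float], b: List[float]) -> float:
    """Cohen's d: mean difference in units of the pooled standard deviation."""
    return (mean(a) - mean(b)) / pooled_sd(a, b)


# Hypothetical Task 5 scores for two small groups.
experimental = [78, 85, 90, 72, 88, 81]
control = [70, 75, 82, 68, 79, 74]

print(round(student_t(experimental, control), 3))
print(round(cohens_d(experimental, control), 3))
```

The two quantities are linked by t = d / sqrt(1/n1 + 1/n2), so for fixed group sizes a larger effect size always corresponds to a larger t. Obtaining the p value additionally requires the t distribution with n1 + n2 − 2 degrees of freedom, for example via `scipy.stats.ttest_ind`.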

Figure 9 shows the overall state of students’ academic performance in each group. The results show that within a learning environment that incorporates procedural evaluation, students performed better overall in the learning of complex problem-solving processes and operating rules and could better transfer schemas to solve new problems. Although it was not very beneficial for students’ knowledge mastery, formative assessment was indeed effective in reducing the academic gap between students and promoting students’ complex skill learning.

The figure presents the findings of comparison of academic performance between the experimental and control groups.

Cognitive load and self-efficacy

We conducted a summative evaluation of learners’ cognitive load to explore the impact of whole learning process–oriented formative assessment and intervention on students’ internal cognitive processing. The summative evaluation also included a self-efficacy test that reflected the influence of formative assessment on students’ learning emotions. Table 5 presents the t-test results for cognitive load and self-efficacy between the experimental group and the control group. The germane cognitive load of students in the experimental group was significantly higher than that in the control group (t = 2.19, p = 0.035). As for intrinsic (t = 0.85, p = 0.402), extraneous (t = 0.95, p = 0.352), and overall cognitive load (t = 1.66, p = 0.107), students in the experimental group showed a higher level than those in the control group, but there was no significant difference between the two groups. Likewise, there was no significant difference in self-efficacy (t = 0.727, p = 0.472) between the experimental group and the control group.

The formative assessment of students’ complex skills should be a dynamic and spiral process following the learning tests

To construct a formative evaluation framework for complex skills, the learning process must first be analyzed. Frerejean et al. (2019) indicated that the development of an evaluation framework should be synchronized with the development of learning tasks and used to promote students’ learning process, which has also been proven effective in this study. Focusing on the key components proposed by the 4C/ID model, I-E-O model, spiral curriculum theory and HPI, the SCSLF was constructed. This framework consists of a spiral learning pathway for students to cultivate complex problem-solving skills. An overview of the instruction and formative assessment process was further proposed to offer guidelines to instructors. This overview covers the source, tools, environment, and verification of the formative assessment.

Design of the formative assessment indicators and tasks

When it comes to the concrete steps of designing and implementing the formative assessment, the practical dimensions and tools were developed on the basis of the 4C/ID model. Our research introduces a process similar to that of Wolterinck et al. (2022), which is based on the 4C/ID model of designing assessments for learning and includes the definition of learning outcomes, instructional methods, and data collection techniques. However, what makes this study unique is its emphasis on creating a learning environment that enables more effective ways of tracking students’ performance in different aspects. The objectives for learning complex skills were deconstructed based on the learning processes. Focusing on four aspects (objective knowledge mastery, schema construction, schema automation, and schema transfer), the learning objectives were converted into a measurable key point framework of formative assessment. Moreover, a full-task learning model for complex skills learning was proposed, and a series of specific formative assessment tasks were designed according to the above-mentioned assessment point framework. Finally, the acquisition and processing methods of assessment data were analyzed, and assessment tasks were integrated into the whole learning process within the actual learning environment.

Formative assessment could promote students’ mastery of complex problem-solving skills without increasing their cognitive load significantly

To verify the effectiveness of the formative assessment on students’ complex skill learning, the formative and summative evaluation data were collected and analyzed. The results show that the learning process assisted by formative assessment effectively improves students’ comprehensive transfer of and ability to implement complex skills. This conclusion echoes the view of Frerejean et al. (2019), who indicated that the development of an evaluation framework should be synchronized with the development of learning tasks and used to promote students’ learning process. The formative assessment framework contributes to the monitoring and guiding of students’ performance in the learning process. This study supports the findings of Costa et al. (2021) in their meta-analysis on the effect of the 4C/ID model, which indicated that continuous assessment and interventions could better consolidate students’ learning process and enhance their mastery of procedural and declarative knowledge. The cognitive load of the students in the experimental group was not significantly different from that in the control group, which shows that the application of formative assessment does not increase students’ learning burden. In particular, the students in the experimental group had a significantly larger germane cognitive load than those in the control group, which is consistent with the results of Larmuseau et al. (2019) and shows that formative assessment contributes effectively to students’ cultivation of complex skills by promoting their cognitive efforts. In addition, integrating both manual and electronic assessment methods helped teachers integrate formative assessment into their teaching process, which is consistent with the findings of Ackermans et al. (2021). However, contrary to the findings of Argelagós et al. (2022), our study showed that students’ self-efficacy did not significantly improve as a result of receiving the treatment, even though self-efficacy is believed to be related to students’ actual abilities. This could be attributed to the feedback provided, which not only assisted students in their learning but also exposed them to potential areas of improvement.

To further explore the different aspects of the impact of the formative assessment framework on learners’ complex skills learning, we compared the process and summary scores of students in the experimental group. The results show that the whole formative assessment framework can reveal the overall mastery of complex skills in the learning process as well as promote students’ learning and the construction of mature mental models concerning complex problem-solving processes and operation rules. This finding is in line with Kim’s ( 2021 ) research results. In addition, we find that the formative assessment framework can effectively reflect the current state of students’ knowledge mastery, but its promotion effect towards knowledge mastery is relatively limited. This is consistent with Van-Rosmalen et al.’s ( 2013 ) findings. In terms of professional knowledge in industrial robot programming, there was no significant improvement in students’ accuracy on the final test. This phenomenon might have occurred because the feedback provided to students after their formative assessments did not match their actual needs. The cognitive feedback teachers gave could not provide effective support for students’ recognition and understanding of weak objective knowledge concepts. Therefore, it is necessary to improve the forms of evaluation and feedback given to students in the process of solving complex problems in the current complex evaluation system. One possibility would be offering specific exercises and tasks to assess implicit knowledge concepts under a particular working context to improve students’ cognition and mastery of objective knowledge more effectively in related complex fields.

Limitations and suggestions for future research

Although the current research has discussed the possibility of carrying out formative assessment of learners’ complex problem-solving skills, it still has some limitations. Firstly, the study only involved a limited number of students majoring in industrial robotics in a vocational college. The differences in the impact of whole learning process-oriented formative assessment on cognitive skill learning in other complex problem scenarios were not explored. Secondly, this study only explored the impact of formative assessment on the learning of complex skills from the cognitive perspective. Non-cognitive factors such as emotion and motivation are also important parts of, and challenges in, the assessment process, but they were not included in the scope of our assessment. Lastly, due to time constraints during the experiment, we did not provide students with sufficient time for review after they had learned all the course materials. This might have resulted in the inconsistent assessment and feedback effectiveness reported in the results section.

In future studies, it is necessary to design and apply the formative assessment framework under a wider range of complex skills scenarios. Firstly, richer measurement and intervention methods must be introduced to make up for the lack of cultivation of learners’ objective knowledge in current instruction based on the 4C/ID model. This may involve using video clips of classroom sessions to facilitate reflective questioning (Wolterinck-Broekhuis et al., 2022), designing effective discussions, and fostering teacher-student interactions to explore conceptual understanding of objective knowledge (Helms-Lorenz and Visscher, 2022). Additionally, emerging technologies such as Virtual Reality (VR) and computer simulation software could be employed to enhance students’ comprehension of abstract foundational knowledge (Solmaz et al., 2023). Students also need to be given sufficient time to digest the results of formative assessment, as this is a crucial step for its effectiveness. Secondly, during the design process of formative assessment systems, researchers must comprehensively consider the cultivation of students’ cognitive schema construction, cognitive load, learning attitude, and professional emotion associated with complex problem-solving situations in order to achieve a more comprehensive evaluation and improvement of students’ knowledge, skills, and attitudes in the learning process (Pardo et al., 2019). Moreover, although we have delved deeply into the complex process of students’ skill acquisition and the specific design of formative assessments, we found that many studies focus on other aspects of assessment for learning. These aspects include teachers’ metacognitive abilities and knowledge base, effective classroom management and diverse teaching methods, as well as interactions and collaboration between teachers and students (Helms-Lorenz and Visscher, 2022; Wolterinck-Broekhuis et al., 2022). These concepts could also play significant roles in the design and implementation of formative assessments in classroom practices.

Conclusions

In the 21st century, complex skills are becoming increasingly indispensable competencies for students and workers. The instruction of complex skills in schools plays a very important role in the cultivation of the talent required by the market. In current complex skill learning, the lack of systematic formative evaluation limits students’ learning. Therefore, in order to design a feasible and effective whole learning process–oriented formative evaluation system, this study offered a formative evaluation method for complex skill learning based on the 4C/ID model. An intervention study was then carried out among vocational students to verify the effectiveness of the framework. Our study shows that, compared with a complex skill learning process based only on summative evaluation, a learning process integrated with formative assessment significantly improves learners’ performance in the problem-solving process, in implementing problem-solving competencies, and in transferring complex skills to similar situations. Moreover, without adding a significant cognitive burden for students, the formative assessment method effectively increases students’ germane cognitive load, which reflects greater active effort during the learning process. This research helps to verify the effectiveness of formative assessment in the learning of complex problems and to guide the design and development of formative assessment in real and complex learning situations.

Data availability

The datasets generated during and/or analyzed during the current study are available in the Harvard Dataverse repository, https://doi.org/10.7910/DVN/DNYBKB .

Ackermans K, Rusman E, Nadolski R, Specht M, Brand‐Gruwel S (2021) Video‐enhanced or textual rubrics: does the viewbrics’ formative assessment methodology support the mastery of complex (21st century) skills? J Comput Assist Learn 37(3):810–824

Alahmad A, Stamenkovska T, Gyori J (2021) Preparing pre-service teachers for 21st century skills education: a teacher education model. GiLE J Skills Dev 1(1):67–86

Argelagós E, Garcia C, Privado J, Wopereis I (2022) Fostering information problem solving skills through online task-centred instruction in higher education. Comput Educ 180:104433

Astin AW (1993) Assessment for excellence: The philosophy and practice of assessment and evaluation in higher education. Oryx Press, New York, USA

Bertalanffy L (1968) General system theory: foundations, development, applications. George Braziller, New York, p 289. http://hdl.handle.net/10822/763002

Bhagat KK, Spector JM (2017) Formative assessment in complex problem-solving domains: the emerging role of assessment technologies. Educ Technol Soc 20(4):312–317

Bruner J (1977) The process of education. Harvard University Press, MA

Care E, Kim H, Vista A, Anderson K (2019) Education system alignment for 21st century skills: focus on assessment. Center for Universal Education at the Brookings Institution, Washington. https://eric.ed.gov/?id=ED592779

Chen Q (2007) Cognitive load theory and its development. Technol Educ 9:15–19

Costa JM, Miranda GL, Melo M (2021) Four-component instructional design (4C/ID) model: a meta-analysis on use and effect. Learn Environ Res 25:445–463. https://doi.org/10.1007/s10984-021-09373-y

Crossouard B (2011) Using formative assessment to support complex learning in conditions of social adversity. Assess Educ 18(1):59–72

Eseryel D, Ifenthaler D, Ge X (2013) Validation study of a method for assessing complex ill-structured problem solving by using causal representations. Educ Technol Res Dev 61(3):443–463

Frerejean J, Van-Merriënboer JJ, Kirschner PA, Roex A, Aertgeerts B, Marcellis M (2019) Designing instruction for complex learning: 4C/ID in higher education. Eur J Educ 54(4):513–524

Frerejean J, Van-Geel M, Keuning T, Dolmans D, Van-Merriënboer JJ, Visscher AJ (2021) Ten steps to 4C/ID: training differentiation skills in a professional development program for teachers. Instr Sci 49:395–418. https://doi.org/10.1007/s11251-021-09540-x

Hadie SN, Yusoff MS (2016) Assessing the validity of the cognitive load scale in a problem-based learning setting. J Taibah Univ Med Sci 11(3):194–202

Helms-Lorenz M, Visscher AJ (2022) Unravelling the challenges of the data-based approach to teaching improvement. Sch Eff Sch Improv 33(1):125–147

Hwang GJ, Yang LH, Wang SY (2013) A concept map-embedded educational computer game for improving students’ learning performance in natural science courses. Comput Educ 69:121–130

Iñiguez-Berrozpe T, Boeren E (2020) 21st century skills for all: Adults and problem solving in technology rich environments. Tech Know Learn 25:929–951

Ji C (2012) Development of cognitive load scale for senior high school students and related research. Nanjing Normal University, Nanjing. https://t.cnki.net/kcms/detail?v=yBz58I57kKfYNhmNOQoKzW4DinFJ48hmyuSIvzsPaRDcfPgtZ2iq98gxOO_a58R3SXGlipnHq7LfhWq-CmJDgfBM9tfgKTxJ3l4OsdtKGrwGlaTICBukUw==&uniplatform=NZKPT

Jian J, Ma P, Zhang X (2021) Active choice: a positive understanding of “High Dropout Rate” of online courses based on the perspective of learner investment theory. E-Educ Res 4:45–52

Kaiser HF, Rice J (1974) Little jiffy, mark IV. Educ Psychol Meas 34(1):111–117

Kerrigan S, Feng S, Vuthaluru R, Ifenthaler D, Gibson D (2021) Network analytics of collaborative problem-solving. In: Ifenthaler D and Sampson DG (eds) Balancing the tension between digital technologies and learning sciences: Cognition and exploratory learning in the digital age. Springer, Cham. https://doi.org/10.1007/978-3-030-65657-7_4

Kim MK (2021) A design experiment on technology‐based learning progress feedback in a graduate‐level online course. Hum Behav Emerg Technol 3(5):1–19. https://doi.org/10.1002/hbe2.308

Koehler AA, Vilarinho-Pereira DR (2021) Using social media affordances to support ill-structured problem-solving skills: Considering possibilities and challenges. Educ Technol Res Dev 71:199–235. https://doi.org/10.1007/s11423-021-10060-1

Larmuseau C, Elen J, Depaepe F (2018) The influence of students’ cognitive and motivational characteristics on students’ use of a 4C/ID-based online learning environment and their learning gain. In: Proceedings of the 8th International Conference on Learning Analytics and Knowledge, pp 171–180. https://doi.org/10.1145/3170358.3170363

Larmuseau C, Coucke H, Kerkhove P, Desmet P, Depaepe F (2019) Cognitive load during online complex problem-solving in a teacher training context. In: EDEN Conference Proceedings, pp 466–474. https://doi.org/10.38069/edenconf-2019-ac-0052

Larmuseau C, Desmet P, Lancieri L, Depaepe F (2019) Investigating the effectiveness of online learning environments for complex learning. https://dl.acm.org/citation.cfm?id=3303772

Larmuseau C (2020) Learning analytics for the understanding of learning processes in online learning environments. Université de Lille, Lille; Katholieke universiteit te Leuven, Leuven. https://hal.archives-ouvertes.fr/tel-03086072/

Le H, Cai L (2019) Flipped classroom research to promote deep learning: from the perspective of cognitive load theory. J Teach Manag 12:92–95

Leif S, Head D, McCreery M, Fiorentini J, Cole LQ (2020) Acclimation by Design: Using 4C/ID to Scaffold Digital Learning Environments. In: Langran E (ed). Proceedings of SITE Interactive 2020 Online Conference. Online: Association for the Advancement of Computing in Education (AACE), pp 513-517. https://www.learntechlib.org/primary/p/218195/

Li Y, Hu Y, Li Y, Ma H, Su K (2020) Application and practice of process evaluation in general zoology curriculum—Take Shanxi Agricultural University as an example. Heilongjiang Anim Sci Vet Med 23:143–146

Liu Y (2022) Application research of process evaluation in nursing skill training teaching of undergraduate nursing students. Chin Nurs Res 36(2):353–355

Maddens L, Depaepe F, Raes A, Elen J (2020) The instructional design of a 4C/ID-inspired learning environment for upper secondary school students’ research skills. Int J Des Learn 11(3):126–147

Marcellis M, Barendsen E, Van-Merriënboer J (2018) Designing a blended course in Android app development using 4C/ID. In: Proceedings of the 18th Koli Calling International Conference on Computing Education Research, pp 1–5. https://doi.org/10.1145/3279720.3279739

Marker A, Villachica SW, Stepich D, Allen DA, Stanton L (2014) An updated framework for human performance improvement in the workplace: the spiral HPI framework. Perform Improv 53(1):10–23

Mayer RE (1992) Thinking, problem solving, cognition (2nd ed). Freeman WH/Times Books/Henry Holt & Co. https://psycnet.apa.org/record/1992-97696-000

McCallum S, Milner MM (2021) The effectiveness of formative assessment: student views and staff reflections. Assess Eval High Educ 46(1):1–16

Melo M, Miranda GL (2015) Learning electrical circuits: the effects of the 4C-ID instructional approach in the acquisition and transfer of knowledge. J Inf Technol Educ 14:313–337

Melo M (2018) The 4C/ID-model in physics education: instructional design of a digital learning environment to teach electrical circuits. Int J Instr 11(1):103–122

Merrill MD (2002a) A pebble‐in‐the‐pond model for instructional design. Perform Improv 41(7):41–46

Merrill MD (2002b) First principles of instruction. Educ Technol Res Dev 50(3):43–59

Min KK (2012) Theoretically grounded guidelines for assessing learning progress: cognitive changes in ill-structured complex problem-solving contexts. Educ Technol Res Dev 60(4):601–622

Nadolski RJ, Hummel HG, Rusman E, Ackermans K (2021) Rubric formats for the formative assessment of oral presentation skills acquisition in secondary education. Educ Technol Res Dev 69(5):2663–2682

Ndiaye Y, Hérold JF, Chatoney M (2021) Applying the 4C/ID-model to help students structure their knowledge system when learning the concept of force in technology. Techne Serien 28(2):260–268. https://journals.oslomet.no/index.php/techneA/article/view/4319

OECD (2018) PISA 2015 Results in Focus. https://www.oecd.org/pisa/pisa-2015-results-infocus.pdf

Pardo A, Jovanovic J, Dawson S, Gašević D, Mirriahi N (2019) Using learning analytics to scale the provision of personalised feedback. Br J Educ Technol 50(1):128–138

Peranginangin SA, Saragih S, Siagian P (2019) Development of learning materials through PBL with Karo culture context to improve students’ problem-solving ability and self-efficacy. Int Electron J Math Educ 14(2):265–274

Salam A (2015) Input, process and output: System approach in education to assure the quality and excellence in performance. Bangladesh J Med Sci 14(1):1–2

Semilarski H, Soobard R, Rannikmäe M (2021) Promoting students’ perceived self-efficacy towards 21st century skills through everyday life-related scenarios. Educ Sci 11(10):570. https://doi.org/10.3390/educsci11100570

Solmaz S, Kester L, Van-Gerven T (2023) An immersive virtual reality learning environment with CFD simulations: Unveiling the Virtual Garage concept. Educ Inf Technol. https://doi.org/10.1007/s10639-023-11747-z

Sulaiman T, Kotamjani SS, Rahim SSA, Hakim MN (2020) Malaysian public university lecturers’ perceptions and practices of formative and alternative assessments. Int J Learn Teach Educ Res 19(5):379–394

Thima S, Chaijaroen S (2021) The framework for development of the constructivist learning environment model to enhance ill-structured problem solving in industrial automation system supporting by metacognition. In: International Conference on Innovative Technologies and Learning. Springer, Cham, pp 511–520

Van-Gog T, Sluijsmans DMA, Joosten-Ten BD, Prins JF (2010) Formative assessment in an online learning environment to support flexible on-the-job learning in complex professional domains. Educ Technol Res Dev 58:311–324

Van-Merriënboer JJG, Kester L (2014) The four-component instructional design model: Multimedia principles in environments for complex learning. In: Mayer RE (ed) The Cambridge handbook of multimedia learning. Cambridge University Press, Cambridge, UK, pp 104–148. https://doi.org/10.1017/CBO9781139547369.007

Van-Rosmalen P, Boyle EA, Nadolski R, Van-Der-Baaren J, Fernández-Manjón B, MacArthur E, Star K (2013) Acquiring 21st Century Skills: Gaining insight into the design and applicability of a serious game with 4C-ID. In: International Conference on Games and Learning Alliance. Springer, Cham, pp 327–334. https://doi.org/10.1007/978-3-319-12157-4_26

Webb ME, Prasse D, Phillips M, Kadijevich DM, Angeli C, Strijker A, Carvalho AA, Andresen BB, Dobozy E, Laugesen A (2018) Challenges for IT-enabled formative assessment of complex 21st century skills. Tech Know Learn 23:441–456

Wolterinck C, Poortman C, Schildkamp K, Visscher A (2022) Assessment for Learning: developing the required teacher competencies. Eur J Teach Educ. https://doi.org/10.1080/02619768.2022.2124912

Wolterinck-Broekhuis CHD, Poortman CL, Schildkamp K, Visscher AJ (2022) Teacher professional development in Assessment for Learning. University of Twente. https://doi.org/10.3990/1.9789036554664

Xu X, Zhou Z, Yue Y, Wang M, Gu X (2019) Design and effectiveness of comprehensive learning for complex skills based on 4C/ID model. Chin Educ Technol 10:124–131

Yanto H, Mula JM, Kavanagh MH (2011) Developing students’ accounting competencies using Astin’s IEO model: An identification of key educational inputs based on Indonesian student perspectives. In: Proceedings of the RMIT Accounting Educators’ Conference, 2011: Accounting Education or Educating Accountants?. University of Southern Queensland, Brisbane

Zhou S, Zhang Y, Liu X, Wang Y, Shen X (2020) Empirical research of oral English teaching in primary school based on 4C/ID model. J High Educ Res 1(4):123–130


Acknowledgements

This work was supported by the Key Project of the Science and Technology Commission of Shanghai Municipality (Project No.: 17DZ2281800) and the Fundamental Research Funds for the Central Universities (Project No.: 2020ECNU-HLYT035). XX and WS contributed equally to this article and should be considered co-first authors.

Author information

Authors and affiliations.

Department of Education Information Technology, Faculty of Education, East China Normal University, Shanghai, China

Xianlong Xu, A.Y.M. Atiquil Islam & Yang Zhou

Institute of Education, Tsinghua University, Beijing, China

Wangqi Shen

School of Teacher Education, Jiangsu University, Zhenjiang, Jiangsu, China

A.Y.M. Atiquil Islam


Corresponding author

Correspondence to A.Y.M. Atiquil Islam.

Ethics declarations

Competing interests.

The authors declare no competing interests.

Ethical approval

Informed consent.

Informed consent was obtained from all the participants and their teachers with the permission of the institute involved in the study.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/ .


About this article

Cite this article.

Xu, X., Shen, W., Islam, A.A. et al. A whole learning process-oriented formative assessment framework to cultivate complex skills. Humanit Soc Sci Commun 10, 653 (2023). https://doi.org/10.1057/s41599-023-02200-0


Received: 27 March 2023

Accepted: 25 September 2023

Published: 06 October 2023

DOI: https://doi.org/10.1057/s41599-023-02200-0


Formative Assessment in Educational Research Published at the Beginning of the New Millennium: Bibliometric Analysis

- Published: 06 November 2023

- Volume 7, pages 106–125 (2023)


- Ataman Karaçöp ORCID: orcid.org/0000-0001-8939-3725 1 &

- Tufan İnaltekin ORCID: orcid.org/0000-0002-3843-7393 1

198 Accesses

Explore all metrics

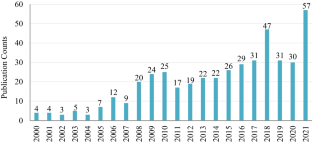

Today, many educational reforms emphasize the importance of formative assessment (FA) for effective teaching. Given the importance of FA in education and the perceived lack of it, much more research on the subject is clearly needed. However, it is also important for researchers to formulate new studies rather than repeat existing ones, and to increase the visibility of existing effective studies that can help in-service teachers understand the importance of FA. In this context, being able to review high-quality academic research is very valuable. Therefore, we aimed to conduct a bibliometric analysis using VOSviewer to determine the focus of research on formative assessment in education (FAE). This bibliometric analysis included 447 studies on FA indexed in the Web of Science (WoS) from 2000 to 2021. We performed a citation analysis, created a co-authorship network map, and analyzed the author keywords of FA publications. Results showed that Erin M. Furtak has been the most prolific author in FAE in terms of the number of publications. Moreover, David J. Nicol and Debra Macfarlane-Dick, who co-authored a publication, were the most influential authors in terms of citations. Univ Colorado/USA, with 13 publications, was the most productive institution, while the USA and England were the most productive countries in terms of both publications and citations. Of the 19 documents with over a hundred citations, Nicol and Macfarlane-Dick (2006) and Black and Wiliam (2009) were the most influential. By number of publications and citations, “Assessment & Evaluation in Higher Education” and “Computers & Education” stood out among the top five most productive sources. The co-occurrence analysis showed that the terms “assessment,” “mathematics,” and “professional development” co-occurred most often. Moreover, FA, the focus of this bibliometric analysis, had a high degree of co-occurrence with feedback, followed by summative evaluation, self-regulation, and professional development. These results will contribute significantly to the efforts of the scientific community towards FA research.
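The author-keyword co-occurrence analysis mentioned in the abstract can be illustrated with a minimal sketch. This is not VOSviewer's algorithm (VOSviewer applies its own counting and normalization options); it only counts how often two keywords appear on the same record. The function name `keyword_cooccurrence` and the toy records are hypothetical and are not data from the study.

```python
from collections import Counter
from itertools import combinations


def keyword_cooccurrence(records):
    """Count how often each unordered keyword pair appears in the same record."""
    pair_counts = Counter()
    for keywords in records:
        # Normalise case, drop blanks and within-record duplicates, and sort
        # so that every pair is stored in one canonical (alphabetical) order.
        unique = sorted({kw.strip().lower() for kw in keywords if kw.strip()})
        pair_counts.update(combinations(unique, 2))
    return pair_counts


# Hypothetical toy records, one keyword list per publication (assumption).
records = [
    ["formative assessment", "feedback", "self-regulation"],
    ["Formative Assessment", "feedback"],
    ["formative assessment", "professional development"],
]

counts = keyword_cooccurrence(records)
for pair, n in counts.most_common():
    print(pair, n)
```

Raw pair counts like these favour frequent keywords, which is one reason bibliometric tools usually normalize link strengths before mapping them.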


Data Availability

The data that support the findings of this study are available from https://www.webofscience.com/wos/woscc/summary/506d2eeb-9361-4460-8bf3-2a2bd56ed309-720760d4/date-descending/1 .

Antoniou, P., & James, M. (2014). Exploring formative assessment in primary school classrooms: Developing a framework of actions and strategies. Educational Assessment, Evaluation and Accountability, 26 (2), 153–176. https://doi.org/10.1007/s11092-013-9188-4


Badaluddin, N. A., Lion, M., Razali, S. M., & Khalit, S. I. (2021). Bibliometric analysis of global trends on soil moisture assessment using the remote sensing research study from 2000 to 2020. Water, Air, & Soil Pollution, 232 (7), 1–10. https://doi.org/10.1007/s11270-021-05218-9

Beatty, I. D., & Gerace, W. J. (2009). Technology-enhanced formative assessment: A research-based pedagogy for teaching science with classroom response technology. Journal of Science Education and Technology, 18 (2), 146–162. https://doi.org/10.1007/s10956-008-9140-4

Bell, B., & Cowie, B. (2001). The characteristics of formative assessment in science education. Science Education, 85 (5), 536–553. https://doi.org/10.15663/wje.v7i1.430

Bennett, R. E. (2011). Formative assessment: A critical review. Assessment in Education: Principles, Policy & Practice, 18 (1), 5–25. https://doi.org/10.1080/0969594X.2010.513678

Birenbaum, M., DeLuca, C., Earl, L., Heritage, M., Klenowski, V., Looney, A., & Wyatt-Smith, C. (2015). International trends in the implementation of assessment for learning: Implications for policy and practice. Policy Futures in Education, 13 (1), 117–140. https://doi.org/10.1177/147821031456673

Bjork, S., Offer, A., & Söderberg, G. (2014). Time series citation data: The Nobel Prize in economics. Scientometrics, 98 (1), 185–196. https://doi.org/10.1007/s11192-013-0989-5

Black, P. (2015). Formative assessment–an optimistic but incomplete vision. Assessment in Education: Principles, Policy & Practice, 22 (1), 161–177. https://doi.org/10.1080/0969594X.2014.999643

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles Policy and Practice, 5 (1), 7–73. https://doi.org/10.1080/0969595980050102

Black, P., & Wiliam, D. (2003). ‘In praise of educational research’: Formative assessment. British Educational Research Journal, 29 (5), 623–637. https://doi.org/10.1080/0141192032000133721

Black, P., & Wiliam, D. (2009). Developing the theory of formative assessment. Educational Assessment, Evaluation and Accountability, 21 (1), 5–31. https://doi.org/10.1007/s11092-008-9068-5

Bozkurt, N. O. (2021). Academics’ opinions regarding the quality of scientific publications and their quality problems. Journal of Higher Education and Science, 11 (1), 128–137. https://doi.org/10.5961/jhes.2021.435

Buchanan, T. (2000). The efficacy of a World-Wide Web mediated formative assessment. Journal of Computer Assisted Learning, 16 (3), 193–200. https://doi.org/10.1046/j.1365-2729.2000.00132.x

Cagasan, L., Care, E., Robertson, P., & Luo, R. (2020). Developing a formative assessment protocol to examine formative assessment practices in the Philippines. Educational Assessment, 25 (4), 259–275. https://doi.org/10.1080/10627197.2020.1766960

Cancino, C., Merigó, J. M., Coronado, F., Dessouky, Y., & Dessouky, M. (2017). Forty years of Computers & Industrial Engineering: A bibliometric analysis. Computers & Industrial Engineering, 113 , 614–629. https://doi.org/10.1016/j.cie.2017.08.033

Cao, Y., Qi, F., Cui, H., & Yuan, M. (2022). Knowledge domain and emerging trends of carbon footprint in the field of climate change and energy use: A bibliometric analysis. Environmental Science and Pollution Research . https://doi.org/10.1007/s11356-022-24756-1

Cisterna, D., & Gotwals, A. W. (2018). Enactment of ongoing formative assessment: Challenges and opportunities for professional development and practice. Journal of Science Teacher Education, 29 (3), 200–222. https://doi.org/10.1080/1046560X.2018.1432227

Cizek, G. J., Andrade, H. L., & Bennett, R. E. (2019). Formative assessment: History, definition, and progress. In Handbook of formative assessment in the disciplines (pp. 3–19). Routledge.

Coffey, J. E., Hammer, D., Levin, D. M., & Grant, T. (2011). The missing disciplinary substance of formative assessment. Journal of Research in Science Teaching, 48 (10), 1109–1136. https://doi.org/10.1002/tea.20440

Correia, C. F., & Harrison, C. (2020). Teachers’ beliefs about inquiry-based learning and its impact on formative assessment practice. Research in Science & Technological Education, 38 (3), 355–376. https://doi.org/10.1080/02635143.2019.1634040

Cowie, B., & Bell, B. (1999). A model of formative assessment in science education. Assessment in Education: Principles, Policy & Practice, 6 (1), 101–116. https://doi.org/10.1080/09695949993026

De Backer, F., Van Avermaet, P., & Slembrouck, S. (2017). Schools as laboratories for exploring multilingual assessment policies and practices. Language and Education, 31 (3), 217–230. https://doi.org/10.1080/09500782.2016.1261896

DeLuca, C. (2012). Preparing teachers for the age of accountability: Toward a framework for assessment education. Action in Teacher Education, 34 (5–6), 576–591. https://doi.org/10.1080/01626620.2012.730347

DeLuca, C., Valiquette, A., Coombs, A., LaPointe-McEwan, D., & Luhanga, U. (2018). Teachers’ approaches to classroom assessment: A large-scale survey. Assessment in Education: Principles, Policy & Practice, 25 (4), 355–375. https://doi.org/10.1080/0969594X.2016.1244514