Rubrics for Oral Presentations

Introduction.

Many instructors require students to give oral presentations, which they evaluate and count in students’ grades. It is important that instructors clarify their goals for these presentations as well as the student learning objectives to which they are related. Embedding the assignment in course goals and learning objectives allows instructors to be clear with students about their expectations and to develop a rubric for evaluating the presentations.

A rubric is a scoring guide that articulates and assesses specific components and expectations for an assignment. Rubrics identify the various criteria relevant to an assignment and then explicitly state the possible levels of achievement along a continuum, so that an effective rubric accurately reflects the expectations of an assignment. Using a rubric to evaluate student performance has advantages for both instructors and students.

Rubrics can be either analytic or holistic. An analytic rubric comprises a set of specific criteria, with each one evaluated separately and receiving a separate score. The template resembles a grid with the criteria listed in the left column and levels of performance listed across the top row, using numbers and/or descriptors. The cells within the center of the rubric contain descriptions of what expected performance looks like for each level of performance.
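The grid described above can be sketched as a small data structure. This is only an illustration: the two criteria and the three-level scale are taken from the lists in this guide, but the cell descriptions, the point values, and the names `RUBRIC`, `LEVEL_POINTS`, and `score_analytic` are hypothetical.

```python
# Hypothetical analytic rubric: rows are criteria, columns are performance levels.
# Point values per level are an assumption made for this sketch.
LEVEL_POINTS = {"Strong": 3, "Satisfactory": 2, "Weak": 1}

# Each cell describes what performance looks like at that level (invented text).
RUBRIC = {
    "Organization of content": {
        "Strong": "Clear opening, logical sequence, and a summary of main points.",
        "Satisfactory": "Mostly logical sequence; opening or summary is thin.",
        "Weak": "Hard to follow; no clear opening or summary.",
    },
    "Eye contact/audience engagement": {
        "Strong": "Maintains eye contact and responds to the audience throughout.",
        "Satisfactory": "Looks up from notes regularly but engages unevenly.",
        "Weak": "Reads from notes or slides with little eye contact.",
    },
}


def score_analytic(ratings):
    """Return (per-criterion points, total) for a dict of criterion -> level."""
    missing = set(RUBRIC) - set(ratings)
    if missing:
        raise ValueError(f"Unrated criteria: {sorted(missing)}")
    # Each criterion is evaluated separately and receives its own score.
    points = {criterion: LEVEL_POINTS[level] for criterion, level in ratings.items()}
    return points, sum(points.values())


ratings = {
    "Organization of content": "Strong",
    "Eye contact/audience engagement": "Satisfactory",
}
points, total = score_analytic(ratings)
```

Keeping the descriptions next to the levels means the same structure can be printed as a handout for students and used for scoring.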

A holistic rubric consists of a set of descriptors that generate a single, global score for the entire work. The single score is based on raters’ overall perception of the quality of the performance. Often, sentence- or paragraph-length descriptions of different levels of competencies are provided.

When applied to an oral presentation, rubrics should reflect the elements of the presentation that will be evaluated as well as their relative importance. Thus, the instructor must decide whether to include dimensions relevant to both form and content and, if so, which ones. The instructor must also decide how to weight each of the dimensions: are they all equally important, or are some more important than others? Finally, if the presentation represents a group project, the instructor must decide how to balance grading individual and group contributions.
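To make the weighting decision concrete, the sketch below compares equal weights with weights that favor content. The dimension names, the 1-4 rating scale, the weights, and the function name `weighted_score` are all assumptions chosen for illustration, not a recommended scheme.

```python
def weighted_score(ratings, weights, max_points):
    """Weighted percentage score; ratings and weights are dicts keyed by dimension."""
    if set(ratings) != set(weights):
        raise ValueError("ratings and weights must cover the same dimensions")
    total_weight = sum(weights.values())
    # Each dimension contributes its fraction of the maximum, scaled by its weight.
    earned = sum(weights[d] * ratings[d] / max_points for d in ratings)
    return 100.0 * earned / total_weight


# Hypothetical presentation: weak content, strong delivery (1-4 scale).
ratings = {"content": 2, "organization": 3, "delivery": 4}
equal = {"content": 1, "organization": 1, "delivery": 1}
content_heavy = {"content": 3, "organization": 1, "delivery": 1}

equal_pct = weighted_score(ratings, equal, max_points=4)          # 75.0
heavy_pct = weighted_score(ratings, content_heavy, max_points=4)  # 65.0
```

The same ratings yield a lower score once content counts three times as much, which is the kind of consequence worth checking before the rubric is shared with students.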

Creating Rubrics

The steps for creating an analytic rubric include the following:

1. Clarify the purpose of the assignment. What learning objectives are associated with the assignment?

2. Look for existing rubrics that can be adopted or adapted for the specific assignment

3. Define the criteria to be evaluated

4. Choose the rating scale to measure levels of performance

5. Write descriptions for each criterion for each performance level of the rating scale

6. Test and revise the rubric

Examples of criteria that have been included in rubrics for evaluating oral presentations include:

- Knowledge of content

- Organization of content

- Presentation of ideas

- Research/sources

- Visual aids/handouts

- Language clarity

- Grammatical correctness

- Time management

- Volume of speech

- Rate/pacing of speech

- Mannerisms/gestures

- Eye contact/audience engagement

Examples of scales/ratings that have been used to rate student performance include:

- Strong, Satisfactory, Weak

- Beginning, Intermediate, High

- Exemplary, Competent, Developing

- Excellent, Competent, Needs Work

- Exceeds Standard, Meets Standard, Approaching Standard, Below Standard

- Exemplary, Proficient, Developing, Novice

- Excellent, Good, Marginal, Unacceptable

- Advanced, Intermediate High, Intermediate, Developing

- Exceptional, Above Average, Sufficient, Minimal, Poor

- Master, Distinguished, Proficient, Intermediate, Novice

- Excellent, Good, Satisfactory, Poor, Unacceptable

- Always, Often, Sometimes, Rarely, Never

- Exemplary, Accomplished, Acceptable, Minimally Acceptable, Emerging, Unacceptable

Grading and Performance Rubrics Carnegie Mellon University Eberly Center for Teaching Excellence & Educational Innovation

Creating and Using Rubrics Carnegie Mellon University Eberly Center for Teaching Excellence & Educational Innovation

Using Rubrics Cornell University Center for Teaching Innovation

Rubrics DePaul University Teaching Commons

Building a Rubric University of Texas/Austin Faculty Innovation Center

Building a Rubric Columbia University Center for Teaching and Learning

Rubric Development University of West Florida Center for University Teaching, Learning, and Assessment

Creating and Using Rubrics Yale University Poorvu Center for Teaching and Learning

Designing Grading Rubrics Brown University Sheridan Center for Teaching and Learning

Examples of Oral Presentation Rubrics

Oral Presentation Rubric Pomona College Teaching and Learning Center

Oral Presentation Evaluation Rubric University of Michigan

Oral Presentation Rubric Roanoke College

Oral Presentation: Scoring Guide Fresno State University Office of Institutional Effectiveness

Presentation Skills Rubric State University of New York/New Paltz School of Business

Oral Presentation Rubric Oregon State University Center for Teaching and Learning

Oral Presentation Rubric Purdue University College of Science

Group Class Presentation Sample Rubric Pepperdine University Graziadio Business School

Am J Pharm Educ. 2010 Nov 10; 74(9).

A Standardized Rubric to Evaluate Student Presentations

Michael J. Peeters

a University of Toledo College of Pharmacy

Eric G. Sahloff

Gregory E. Stone

b University of Toledo College of Education

To design, implement, and assess a rubric to evaluate student presentations in a capstone doctor of pharmacy (PharmD) course.

A 20-item rubric was designed and used to evaluate student presentations in a capstone fourth-year course in 2007-2008, and then revised and expanded to 25 items and used to evaluate student presentations for the same course in 2008-2009. Two faculty members evaluated each presentation.

The Many-Facets Rasch Model (MFRM) was used to determine the rubric's reliability, quantify the contribution of evaluator harshness/leniency in scoring, and assess grading validity by comparing the current grading method with a criterion-referenced grading scheme. In 2007-2008, rubric reliability was 0.98, with a separation of 7.1 and 4 rating scale categories. In 2008-2009, MFRM analysis suggested 2 of 98 grades be adjusted to eliminate evaluator leniency, while a further criterion-referenced MFRM analysis suggested 10 of 98 grades should be adjusted.

The evaluation rubric was reliable and evaluator leniency appeared minimal. However, a criterion-referenced re-analysis suggested a need for further revisions to the rubric and evaluation process.

INTRODUCTION

Evaluations are important in the process of teaching and learning. In health professions education, performance-based evaluations are identified as having “an emphasis on testing complex, ‘higher-order’ knowledge and skills in the real-world context in which they are actually used.” 1 Objective structured clinical examinations (OSCEs) are a common, notable example. 2 On Miller's pyramid, a framework used in medical education for measuring learner outcomes, “knows” is placed at the base of the pyramid, followed by “knows how,” then “shows how,” and finally, “does” is placed at the top. 3 Based on Miller's pyramid, evaluation formats that use multiple-choice testing focus on “knows” while an OSCE focuses on “shows how.” Just as performance evaluations remain highly valued in medical education, 4 authentic task evaluations in pharmacy education may be better indicators of future pharmacist performance. 5 Much attention in medical education has been focused on reducing the unreliability of high-stakes evaluations. 6 Regardless of educational discipline, high-stakes performance-based evaluations should meet educational standards for reliability and validity. 7

PharmD students at University of Toledo College of Pharmacy (UTCP) were required to complete a course on presentations during their final year of pharmacy school and then give a presentation that served as both a capstone experience and a performance-based evaluation for the course. Pharmacists attending the presentations were given Accreditation Council for Pharmacy Education (ACPE)-approved continuing education credits. An evaluation rubric for grading the presentations was designed to allow multiple faculty evaluators to objectively score student performances in the domains of presentation delivery and content. Given the pass/fail grading procedure used in advanced pharmacy practice experiences, passing this presentation-based course and subsequently graduating from pharmacy school were contingent upon this high-stakes evaluation. As a result, the reliability and validity of the rubric used and the evaluation process needed to be closely scrutinized.

Each year, about 100 students completed presentations and at least 40 faculty members served as evaluators. With the use of multiple evaluators, a question of evaluator leniency often arose (ie, whether evaluators used the same criteria for evaluating performances or whether some evaluators graded easier or more harshly than others). At UTCP, opinions among some faculty evaluators and many PharmD students implied that evaluator leniency in judging the students' presentations significantly affected specific students' grades and ultimately their graduation from pharmacy school. While it was plausible that evaluator leniency was occurring, the magnitude of the effect was unknown. Thus, this study was initiated partly to address this concern over grading consistency and scoring variability among evaluators.

Because both students' presentation style and content were deemed important, each item of the rubric was weighted the same across delivery and content. However, because there were more categories related to delivery than content, an additional faculty concern was that students feasibly could present poor content but have an effective presentation delivery and pass the course.

The objectives for this investigation were: (1) to describe and optimize the reliability of the evaluation rubric used in this high-stakes evaluation; (2) to identify the contribution and significance of evaluator leniency to evaluation reliability; and (3) to assess the validity of this evaluation rubric within a criterion-referenced grading paradigm focused on both presentation delivery and content.

The University of Toledo's Institutional Review Board approved this investigation. This study investigated performance evaluation data for an oral presentation course for final-year PharmD students from 2 consecutive academic years (2007-2008 and 2008-2009). The course was taken during the fourth year (P4) of the PharmD program and was a high-stakes, performance-based evaluation. The goal of the course was to serve as a capstone experience, enabling students to demonstrate advanced drug literature evaluation and verbal presentation skills through the development and delivery of a 1-hour presentation. These presentations were to be on a current pharmacy practice topic and of sufficient quality for ACPE-approved continuing education. This experience allowed students to demonstrate their competencies in literature searching, literature evaluation, and application of evidence-based medicine, as well as their oral presentation skills. Students worked closely with a faculty advisor to develop their presentation. Each class (2007-2008 and 2008-2009) was randomly divided, with half of the students taking the course and completing their presentation and evaluation in the fall semester and the other half in the spring semester. To accommodate such a large number of students presenting for 1 hour each, it was necessary to use multiple rooms with presentations taking place concurrently over 2.5 days for both the fall and spring sessions of the course. Two faculty members independently evaluated each student presentation using the provided evaluation rubric. The 2007-2008 presentations involved 104 PharmD students and 40 faculty evaluators, while the 2008-2009 presentations involved 98 students and 46 faculty evaluators.

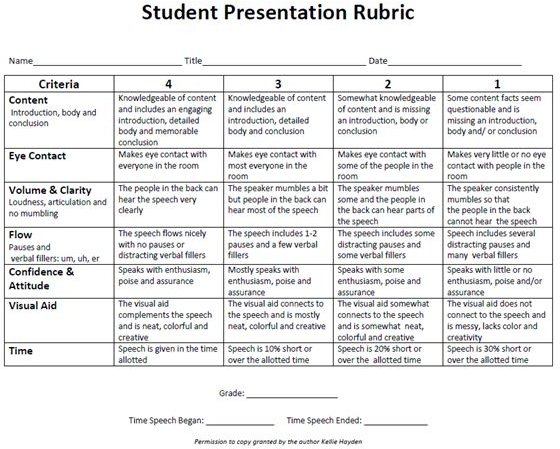

After vetting through the pharmacy practice faculty, the initial rubric used in 2007-2008 focused on describing explicit, specific evaluation criteria such as amounts of eye contact, voice pitch/volume, and descriptions of study methods. The evaluation rubric used in 2008-2009 was similar to the initial rubric, but with 5 items added (Figure 1). The evaluators rated each item (eg, eye contact) based on their perception of the student's performance. The 25 rubric items had equal weight (ie, 4 points each), but each item received a rating from the evaluator of 1 to 4 points. Thus, only 4 rating categories were included, as has been recommended in the literature. 8 However, some evaluators created an additional 3 rating categories by marking lines in between the 4 ratings to signify half points (ie, 1.5, 2.5, and 3.5). For example, for the “notecards/notes” item in Figure 1, a student looked at her notes sporadically during her presentation, but not distractingly nor enough to warrant a score of 3 in the faculty evaluator's opinion, so a 3.5 was given. Thus, a 7-category rating scale (1, 1.5, 2, 2.5, 3, 3.5, and 4) was analyzed. Each independent evaluator's ratings for the 25 items were summed to form a score (0-100%). The 2 evaluators' scores then were averaged and a letter grade was assigned based on the following scale: >90% = A, 80%-89% = B, 70%-79% = C, <70% = F.

Figure 1. Rubric used to evaluate student presentations given in a 2008-2009 capstone PharmD course.
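The traditional scoring procedure described above (25 items rated 1 to 4, summed per evaluator, averaged across 2 evaluators, then mapped to a letter grade) can be sketched as follows. The function names and the example ratings are hypothetical. The treatment of boundary values is also an assumption: the article gives the A cutoff as ">90%" in one place and "90%" in another and leaves a gap between 89% and 90%, so this sketch uses contiguous bands with 90% counted as an A.

```python
def presentation_percent(item_ratings, n_items=25, max_rating=4):
    """Sum one evaluator's item ratings and express them as a percentage."""
    if len(item_ratings) != n_items:
        raise ValueError(f"expected {n_items} item ratings, got {len(item_ratings)}")
    if any(not 1 <= r <= max_rating for r in item_ratings):
        raise ValueError("each rating must be between 1 and the maximum rating")
    return 100.0 * sum(item_ratings) / (n_items * max_rating)


def letter_grade(percent):
    """Map a percentage to a letter grade (assumed contiguous bands, 90% = A)."""
    if percent >= 90:
        return "A"
    if percent >= 80:
        return "B"
    if percent >= 70:
        return "C"
    return "F"


def course_grade(evaluator_a, evaluator_b):
    """Average the 2 evaluators' percentages and assign a letter grade."""
    average = (presentation_percent(evaluator_a) + presentation_percent(evaluator_b)) / 2
    return average, letter_grade(average)


# Hypothetical ratings; the second evaluator used half points on 5 items.
evaluator_a = [4] * 15 + [3] * 10
evaluator_b = [4] * 10 + [3.5] * 5 + [3] * 10
average, grade = course_grade(evaluator_a, evaluator_b)
```

In this example the two evaluators give 90% and 87.5%, so the averaged score of 88.75% falls in the B band even though one evaluator alone would have awarded an A.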

EVALUATION AND ASSESSMENT

Rubric reliability.

To measure rubric reliability, iterative analyses were performed on the evaluations using the Many-Facets Rasch Model (MFRM) following the 2007-2008 data collection period. While Cronbach's alpha is the most commonly reported coefficient of reliability, its single number reporting without supplementary information can provide incomplete information about reliability. 9 - 11 Due to its formula, Cronbach's alpha can be increased by simply adding more repetitive rubric items or having more rating scale categories, even when no further useful information has been added. The MFRM also reports separation, which is calculated differently from Cronbach's alpha and is another source of reliability information. Unlike Cronbach's alpha, separation does not appear enhanced by adding further redundant items. From a measurement perspective, a higher separation value is better than a lower one because students are being divided into meaningful groups after measurement error has been accounted for. Separation can be thought of as the number of units on a ruler where the more units the ruler has, the larger the range of performance levels that can be measured among students. For example, a separation of 4.0 suggests 4 graduations such that a grade of A is distinctly different from a grade of B, which in turn is different from a grade of C or of F. In measuring performances, a separation of 9.0 is better than 5.5, just as a separation of 7.0 is better than a 6.5; a higher separation coefficient suggests that student performance potentially could be divided into a larger number of meaningfully separate groups.

The rating scale can have substantial effects on reliability, 8 and describing how a rating scale functions is a unique aspect of the MFRM. With analysis iterations of the 2007-2008 data, the rating scale categories were collapsed consecutively until improvements in reliability and/or separation were no longer found. The last iteration that improved reliability or separation was deemed the optimal rating scale for this evaluation rubric.

In the 2007-2008 analysis, iterations of the data were run through the MFRM. While only 4 rating scale categories had been included on the rubric, because some faculty members inserted 3 in-between categories, 7 categories had to be included in the analysis. This initial analysis based on a 7-category rubric provided a reliability coefficient (similar to Cronbach's alpha) of 0.98, while the separation coefficient was 6.31. The separation coefficient denoted 6 distinctly separate groups of students based on the items. Rating scale categories were collapsed, with “in-between” categories included in adjacent full-point categories. Table 1 shows the reliability and separation for the iterations as the rating scale was collapsed. As shown, the optimal evaluation rubric maintained a reliability of 0.98, but separation improved to 7.10, or 7 distinctly separate groups of students based on the items. Another distinctly separate group was added through a reduction in the rating scale while no change was seen to Cronbach's alpha, even though the number of rating scale categories was reduced. Table 1 describes the stepwise, sequential pattern across the final 4 rating scale categories analyzed. Informed by the 2007-2008 results, the 2008-2009 evaluation rubric (Figure 1) used 4 rating scale categories and reliability remained high.
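Collapsing the half-point ratings into the 4 printed categories can be sketched as a simple recoding step before re-analysis. The article does not say whether each in-between rating was folded into the category below or above it, so the `direction` parameter, the function name `collapse_rating`, and the example ratings are assumptions made for illustration.

```python
import math


def collapse_rating(rating, direction="down"):
    """Fold a half-point rating into an adjacent full-point category.

    direction="down" maps 3.5 to 3; direction="up" maps 3.5 to 4.
    Whole-number ratings are returned unchanged.
    """
    if rating == int(rating):
        return int(rating)
    if direction == "down":
        return math.floor(rating)
    if direction == "up":
        return math.ceil(rating)
    raise ValueError("direction must be 'down' or 'up'")


# Hypothetical ratings that use all 7 observed categories.
ratings = [1, 1.5, 2, 2.5, 3, 3.5, 4, 3.5]
collapsed_down = [collapse_rating(r) for r in ratings]
collapsed_up = [collapse_rating(r, direction="up") for r in ratings]
```

Either choice leaves only the categories 1 to 4, so the recoded data can be rerun to see whether reliability or separation changes.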

Table 1. Evaluation Rubric Reliability and Separation with Iterations While Collapsing Rating Scale Categories.

a Reliability coefficient of variance in rater response that is reproducible (ie, Cronbach's alpha).

b Separation is a coefficient of item standard deviation divided by average measurement error and is an additional reliability coefficient.

c Optimal number of rating scale categories based on the highest reliability (0.98) and separation (7.1) values.
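In Rasch measurement, separation (G) and reliability (R) are commonly related by R = G² / (1 + G²). The article does not state this formula, so the sketch below is an assumption about how the two reported coefficients relate, not the authors' stated method. It helps show why reliability stays at 0.98 while separation moves from 6.31 to 7.10: reliability is bounded by 1 and changes very little at high values, whereas separation keeps growing.

```python
import math


def reliability_from_separation(separation):
    """Convert a separation coefficient G to reliability R = G^2 / (1 + G^2)."""
    return separation**2 / (1 + separation**2)


def separation_from_reliability(reliability):
    """Invert the relationship: G = sqrt(R / (1 - R))."""
    if not 0 <= reliability < 1:
        raise ValueError("reliability must be in the range [0, 1)")
    return math.sqrt(reliability / (1 - reliability))


# Separation values reported in the article.
seven_category = reliability_from_separation(6.31)
four_category = reliability_from_separation(7.10)
evaluators = reliability_from_separation(1.85)
```

Rounded to 2 decimals, these values agree with the reliabilities reported in the text for students (0.98 in both iterations) and for evaluators (0.77).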

Evaluator Leniency

Harsh raters (ie, hawks) and lenient raters (ie, doves), described by Fleming and colleagues over half a century ago, 6 have also been shown to be an issue in more recent studies. 12 - 14 Shortly after 2008-2009 data were collected, those evaluations by multiple faculty evaluators were collated and analyzed in the MFRM to identify possible inconsistent scoring. While traditional interrater reliability does not deal with this issue, the MFRM had been used previously to illustrate evaluator leniency on licensing examinations for medical students and medical residents in the United Kingdom. 13 Thus, accounting for evaluator leniency may prove important to grading consistency (and reliability) in a course using multiple evaluators. Along with identifying evaluator leniency, the MFRM also corrected for this variability. For comparison, course grades were calculated by summing the evaluators' actual ratings (as discussed in the Design section) and compared with the MFRM-adjusted grades to quantify the degree of evaluator leniency occurring in this evaluation.

Measures created from the data analysis in the MFRM were converted to percentages using a common linear test-equating procedure involving the mean and standard deviation of the dataset. 15 To these percentages, student letter grades were assigned using the same traditional method used in 2007-2008 (ie, 90% = A, 80% - 89% = B, 70% - 79% = C, <70% = F). Letter grades calculated using the revised rubric and the MFRM then were compared to letter grades calculated using the previous rubric and course grading method.
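A linear equating step of the kind mentioned above can be sketched as matching the mean and standard deviation of one scale to another. The article does not specify which mean and standard deviation were used, so this sketch assumes the MFRM measures (in logits) are rescaled to the mean and standard deviation of the raw percentage scores. The function name `linear_equate` and all data values are hypothetical.

```python
from statistics import mean, pstdev


def linear_equate(measures, target_mean, target_sd):
    """Rescale measures so they have the target mean and standard deviation."""
    source_mean = mean(measures)
    source_sd = pstdev(measures)
    if source_sd == 0:
        raise ValueError("measures must not all be identical")
    # Standardize each measure, then place it on the target scale.
    return [target_mean + target_sd * (x - source_mean) / source_sd for x in measures]


# Hypothetical student ability measures (logits) and raw percentage scores.
logit_measures = [-1.0, 0.0, 1.0, 2.0]
raw_percents = [72.0, 80.0, 88.0, 96.0]

equated = linear_equate(logit_measures, mean(raw_percents), pstdev(raw_percents))
```

Because the transformation is linear, the rank order and relative spacing of students are preserved; only the units change, which is what allows the usual percentage cutoffs to be applied afterward.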

In the analysis of the 2008-2009 data, the interrater reliability for the letter grades when comparing the 2 independent faculty evaluations for each presentation was 0.98 by Cohen's kappa. However, using the 3-facet MFRM revealed significant variation in grading. The interaction of evaluator leniency on student ability and item difficulty was significant (chi-square, p < 0.01). As well, the MFRM showed a reliability of 0.77, with a separation of 1.85 (ie, almost 2 groups of evaluators). The MFRM student ability measures were scaled to letter grades and compared with course letter grades. As a result, 2 B's became A's and so evaluator leniency accounted for a 2% change in letter grades (ie, 2 of 98 grades).

Validity and Grading

Explicit criterion-referenced standards for grading are recommended for higher evaluation validity. 3 , 16 - 18 The course coordinator completed 3 additional evaluations of a hypothetical student presentation rating the minimal criteria expected to describe each of an A, B, or C letter grade performance. These evaluations were placed with the other 196 evaluations (2 evaluators × 98 students) from 2008-2009 into the MFRM, with the resulting analysis report giving specific cutoff percentage scores for each letter grade. Unlike the traditional scoring method of assigning all items an equal weight, the MFRM ordered evaluation items from those more difficult for students (given more weight) to those less difficult for students (given less weight). These criterion-referenced letter grades were compared with the grades generated using the traditional grading process.

When the MFRM data were rerun with the criterion-referenced evaluations added into the dataset, a 10% change was seen with letter grades (ie, 10 of 98 grades). When the 10 letter grades were lowered, 1 was below a C, the minimum standard, and suggested a failing performance. Qualitative feedback from faculty evaluators agreed with this suggested criterion-referenced performance failure.

Measurement Model

Within modern test theory, the Rasch Measurement Model maps examinee ability with evaluation item difficulty. Items are not arbitrarily given the same value (ie, 1 point) but vary based on how difficult or easy the items were for examinees. The Rasch measurement model has been used frequently in educational research, 19 by numerous high-stakes testing professional bodies such as the National Board of Medical Examiners, 20 and also by various state-level departments of education for standardized secondary education examinations. 21 The Rasch measurement model itself has rigorous construct validity and reliability. 22 A 3-facet MFRM model allows an evaluator variable to be added to the student ability and item difficulty variables that are routine in other Rasch measurement analyses. Just as multiple regression accounts for additional variables in analysis compared to a simple bivariate regression, the MFRM is a multiple variable variant of the Rasch measurement model and was applied in this study using the Facets software (Linacre, Chicago, IL). The MFRM is ideal for performance-based evaluations with the addition of independent evaluator/judges. 8 , 23 From both yearly cohorts in this investigation, evaluation rubric data were collated and placed into the MFRM for separate though subsequent analyses. Within the MFRM output report, a chi-square for a difference in evaluator leniency was reported with an alpha of 0.05.
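The 3-facet model is commonly written as log(P_k / P_(k-1)) = B - D - C - F_k, where B is student ability, D is item difficulty, C is evaluator severity, and F_k is the difficulty of stepping from rating category k-1 to k, all in logits. The sketch below computes rating category probabilities from that form. It is an illustration of the general model, not the Facets software's implementation, and the function names and parameter values are hypothetical.

```python
import math


def mfrm_category_probs(ability, item_difficulty, rater_severity, thresholds):
    """Return the probability of each rating category under a 3-facet model.

    thresholds[k-1] is the step difficulty F_k of moving from category k-1 to k.
    """
    eta = ability - item_difficulty - rater_severity
    # Cumulative log-numerators; the lowest category is the reference (0.0).
    log_numerators = [0.0]
    for step_difficulty in thresholds:
        log_numerators.append(log_numerators[-1] + (eta - step_difficulty))
    peak = max(log_numerators)  # subtract the maximum for numerical stability
    weights = [math.exp(value - peak) for value in log_numerators]
    total = sum(weights)
    return [w / total for w in weights]


def expected_rating(probs, lowest=1):
    """Expected rating when categories are numbered from `lowest` upward."""
    return sum((lowest + k) * p for k, p in enumerate(probs))


# Hypothetical values: same student and item, one lenient and one harsh evaluator.
thresholds = [-1.5, 0.0, 1.5]  # 3 steps for a 4-category scale
lenient = mfrm_category_probs(1.0, 0.0, -0.5, thresholds)
harsh = mfrm_category_probs(1.0, 0.0, 0.5, thresholds)
```

For the same student and item, the harsh evaluator's expected rating is lower than the lenient evaluator's. Estimating the severity term separately is what lets the model adjust student measures for the evaluators they happened to draw.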

The presentation rubric was reliable. Results from the 2007-2008 analysis illustrated that the number of rating scale categories impacted the reliability of this rubric and that use of only 4 rating scale categories appeared best for measurement. While a 10-point Likert-like scale may commonly be used in patient care settings, such as in quantifying pain, most people cannot process more than 7 points or categories reliably. 24 Presumably, when more than 7 categories are used, the categories beyond 7 either are not used or are collapsed by respondents into fewer than 7 categories. Five-point scales commonly are encountered, but use of an odd number of categories can be problematic to interpretation and is not recommended. 25 Responses using the middle category could denote a true perceived average or neutral response or responder indecisiveness or even confusion over the question. Therefore, removing the middle category appears advantageous and is supported by our results.

With 2008-2009 data, the MFRM identified evaluator leniency, with some evaluators grading more harshly while others were lenient. However, only a couple of grade changes were suggested by the MFRM correction, so evaluator leniency did not appear to play a substantial role in the evaluation of this course at this time.

Performance evaluation instruments are either holistic or analytic rubrics. 26 The evaluation instrument used in this investigation exemplified an analytic rubric, which elicits specific observations and often demonstrates high reliability. However, Norman and colleagues point out a conundrum where drastically increasing the number of evaluation rubric items (creating something similar to a checklist) could augment a reliability coefficient though it appears to dissociate from that evaluation rubric's validity. 27 Validity may be more than the sum of behaviors on evaluation rubric items. 28 Having numerous, highly specific evaluation items appears to undermine the rubric's function. With this investigation's evaluation rubric and its numerous items for both presentation style and presentation content, equal numeric weighting of items can in fact allow student presentations to receive a passing score while falling short of the course objectives, as was shown in the present investigation. As opposed to analytic rubrics, holistic rubrics often demonstrate lower yet acceptable reliability, while offering a higher degree of explicit connection to course objectives. A summative, holistic evaluation of presentations may improve validity by allowing expert evaluators to provide their “gut feeling” as experts on whether a performance is “outstanding,” “sufficient,” “borderline,” or “subpar” for dimensions of presentation delivery and content. A holistic rubric that integrates with criteria of the analytic rubric (Figure 1) for evaluators to reflect on but maintains a summary, overall evaluation for each dimension (delivery/content) of the performance, may allow for benefits of each type of rubric to be used advantageously. This finding has been demonstrated with OSCEs in medical education where checklists for completed items (ie, yes/no) at an OSCE station have been successfully replaced with a few reliable global impression rating scales. 29 - 31

Alternatively, and because the MFRM was used in the current study, an item-weighting approach could be used with the analytic rubric. That is, item weighting based on the difficulty of each rubric item could suggest how many points should be given for that rubric item, eg, some items would be worth 0.25 points, while others would be worth 0.5 points or 1 point (Table 2). As could be expected, the more complex the rubric scoring becomes, the less feasible the rubric is to use. This was the main reason why this revision approach was not chosen by the course coordinator following this study. As well, it does not address the conundrum that the performance may be more than the summation of behavior items in the Figure 1 rubric. This current study cannot suggest which approach would be better as each would have its merits and pitfalls.

Table 2. Rubric Item Weightings Suggested in the 2008-2009 Data Many-Facet Rasch Measurement Analysis

Regardless of which approach is used, alignment of the evaluation rubric with the course objectives is imperative. Objectivity has been described as a general striving for value-free measurement (ie, free of the evaluator's interests, opinions, preferences, sentiments). 27 This is a laudable goal pursued through educational research. Strategies to reduce measurement error, termed objectification, may not necessarily lead to increased objectivity. 27 The current investigation suggested that a rubric could become too explicit if all the possible areas of an oral presentation that could be assessed (ie, objectification) were included. This appeared to dilute the effect of important items and lose validity. A holistic rubric that is more straightforward and easier to score quickly may be less likely to lose validity (ie, “lose the forest for the trees”), though operationalizing a revised rubric would need to be investigated further. Similarly, weighting items in an analytic rubric based on their importance and difficulty for students may alleviate this issue; however, adding up individual items might prove arduous. While the rubric in Figure 1, which has evolved over the years, is the subject of ongoing revisions, it appears a reliable rubric on which to build.

The major limitation of this study involves the observational method that was employed. Although the 2 cohorts were from a single institution, investigators did use a completely separate class of PharmD students to verify initial instrument revisions. Optimizing the rubric's rating scale involved collapsing data from misuse of a 4-category rating scale (expanded by evaluators to 7 categories) by a few of the evaluators into 4 independent categories without middle ratings. As a result of the study findings, no actual grading adjustments were made for students in the 2008-2009 presentation course; however, adjustments using the MFRM have been suggested by Roberts and colleagues. 13 Since 2008-2009, the course coordinator has made further small revisions to the rubric based on feedback from evaluators, but these have not yet been re-analyzed with the MFRM.

The evaluation rubric used in this study for student performance evaluations showed high reliability and the data analysis agreed with using 4 rating scale categories to optimize the rubric's reliability. While lenient and harsh faculty evaluators were found, variability in evaluator scoring affected grading in this course only minimally. Aside from reliability, issues of validity were raised using criterion-referenced grading. Future revisions to this evaluation rubric should reflect these criterion-referenced concerns. The rubric analyzed herein appears a suitable starting point for reliable evaluation of PharmD oral presentations, though it has limitations that could be addressed with further attention and revisions.

ACKNOWLEDGEMENT

Author contributions— MJP and EGS conceptualized the study, while MJP and GES designed it. MJP, EGS, and GES gave educational content foci for the rubric. As the study statistician, MJP analyzed and interpreted the study data. MJP reviewed the literature and drafted a manuscript. EGS and GES critically reviewed this manuscript and approved the final version for submission. MJP accepts overall responsibility for the accuracy of the data, its analysis, and this report.

Center for Teaching Innovation

Using rubrics

A rubric is a type of scoring guide that assesses and articulates specific components and expectations for an assignment. Rubrics can be used for a variety of assignments: research papers, group projects, portfolios, and presentations.

Why use rubrics?

Rubrics help instructors:

- Assess assignments consistently from student to student.

- Save time in grading, both short-term and long-term.

- Give timely, effective feedback and promote student learning in a sustainable way.

- Clarify expectations and components of an assignment for both students and course teaching assistants (TAs).

- Refine teaching methods by evaluating rubric results.

Rubrics help students:

- Understand expectations and components of an assignment.

- Become more aware of their learning process and progress.

- Improve work through timely and detailed feedback.

Considerations for using rubrics

When developing rubrics, consider the following:

- Although it takes time to build a rubric, time will be saved in the long run as grading and providing feedback on student work will become more streamlined.

- A rubric can be a fillable pdf that can easily be emailed to students.

- They can be used for oral presentations.

- They are a great tool to evaluate teamwork and individual contribution to group tasks.

- Rubrics facilitate peer-review by setting evaluation standards. Have students use the rubric to provide peer assessment on various drafts.

- Students can use them for self-assessment to improve personal performance and learning. Encourage students to use the rubrics to assess their own work.

- Motivate students to improve their work by allowing them to resubmit it after incorporating the rubric feedback.

Getting Started with Rubrics

- Start small by creating one rubric for one assignment in a semester.

- Ask colleagues if they have developed rubrics for similar assignments or adapt rubrics that are available online. For example, the AACU has rubrics for topics such as written and oral communication, critical thinking, and creative thinking. RubiStar helps you to develop your rubric based on templates.

- Examine an assignment for your course. Outline the elements or critical attributes to be evaluated (these attributes must be objectively measurable).

- Create an evaluative range for performance quality under each element; for instance, “excellent,” “good,” “unsatisfactory.”

- Avoid using subjective or vague criteria such as “interesting” or “creative.” Instead, outline objective indicators that would fall under these categories.

- The criteria must clearly differentiate one performance level from another.

- Assign a numerical scale to each level.

- Give a draft of the rubric to your colleagues and/or TAs for feedback.

- Train students to use your rubric and solicit feedback. This will help you judge whether the rubric is clear to them and will identify any weaknesses.

- Rework the rubric based on the feedback.
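The steps above (listing criteria, describing performance levels, and assigning a numerical scale) can be sketched as a small data structure. This is only an illustrative sketch: the criterion names, level descriptions, weights, and the `percent_score` helper are hypothetical and are not taken from any published rubric.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One row of an analytic rubric."""
    name: str
    weight: float            # relative importance of this criterion
    levels: dict[int, str]   # points -> description of that performance level


# Hypothetical criteria for an oral presentation; replace with your own.
RUBRIC = [
    Criterion("Organization", 2.0, {
        3: "Clear opening, logical sequence, explicit conclusion",
        2: "Sequence mostly logical; opening or conclusion is weak",
        1: "No discernible structure",
    }),
    Criterion("Delivery", 1.0, {
        3: "Audible throughout; regular eye contact; within time limit",
        2: "Occasionally inaudible or reads from notes",
        1: "Mostly inaudible or reads the slides verbatim",
    }),
]


def percent_score(rubric, ratings):
    """Return the weighted score as a percentage of the maximum possible."""
    earned = 0.0
    possible = 0.0
    for criterion in rubric:
        points = ratings[criterion.name]
        if points not in criterion.levels:
            raise ValueError(f"{points} is not a defined level for {criterion.name}")
        earned += criterion.weight * points
        possible += criterion.weight * max(criterion.levels)
    return 100.0 * earned / possible


# Example: full marks on organization, middle level on delivery.
ratings = {"Organization": 3, "Delivery": 2}
print(round(percent_score(RUBRIC, ratings), 1))  # 88.9
```

Keeping the level descriptions next to the points makes it easy to check that each level is clearly differentiated from the others, and the weights make the relative importance of each criterion explicit to students.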

Center for Excellence in Teaching

Group presentation rubric

This is a grading rubric an instructor uses to assess students’ work on this type of assignment. It is a sample rubric that needs to be edited to reflect the specifics of a particular assignment. Students can self-assess using the rubric as a checklist before submitting their assignment.

Rubric formats for the formative assessment of oral presentation skills acquisition in secondary education

- Development Article

- Open access

- Published: 20 July 2021

- Volume 69 , pages 2663–2682, ( 2021 )

- Rob J. Nadolski ORCID: orcid.org/0000-0002-6585-0888 1 ,

- Hans G. K. Hummel 1 ,

- Ellen Rusman 1 &

- Kevin Ackermans 1

Acquiring complex oral presentation skills is cognitively demanding for students and demands intensive teacher guidance. The aim of this study was twofold: (a) to identify and apply design guidelines in developing an effective formative assessment method for oral presentation skills during classroom practice, and (b) to develop and compare two analytic rubric formats as part of that assessment method. Participants were first-year secondary school students in the Netherlands (n = 158) who acquired oral presentation skills with the support of either a formative assessment method with analytic rubrics offered through a dedicated online tool (experimental groups), or a method using more conventional (rating scales) rubrics (control group). One experimental group was provided text-based and the other was provided video-enhanced rubrics. No prior research is known about analytic video-enhanced rubrics, but, based on research on complex skill development and multimedia learning, we expected this format to best capture the (non-verbal aspects of) oral presentation performance. Significant positive differences on oral presentation performance were found between the experimental groups and the control group. However, no significant differences were found between the two experimental groups. This study shows that a well-designed formative assessment method, using analytic rubric formats, outperforms formative assessment using more conventional rubric formats. It also shows that the higher costs of developing video-enhanced analytic rubrics cannot be justified by significantly greater performance gains. Future studies should address the generalizability of such formative assessment methods for other contexts, and for complex skills other than oral presentation, and should lead to a more profound understanding of video-enhanced rubrics.

Introduction

Both practitioners and scholars agree that students should be able to present orally (e.g., Morreale & Pearson, 2008; Smith & Sodano, 2011). Oral presentation involves the development and delivery of messages to the public with attention to vocal variety, articulation, and non-verbal signals, and with the aim to inform, self-express, relate to and persuade listeners (Baccarini & Bonfanti, 2015; De Grez et al., 2009a; Quianthy, 1990). The current study is restricted to informative presentations (as opposed to persuasive presentations), as these are most common in secondary education. Oral presentation skills are complex generic skills of increasing importance for both society and education (Voogt & Roblin, 2012). However, secondary education seems to lack instructional design guidelines for supporting oral presentation skills acquisition. Many secondary schools in the Netherlands are struggling with how to teach and assess students’ oral presentation skills, lack clear performance criteria for oral presentations, and fall short in offering adequate formative assessment methods that support the effective acquisition of oral presentation skills (Sluijsmans et al., 2013).

Many researchers agree that the acquisition and assessment of presentation skills should depart from a socio-cognitive perspective (Bandura, 1986) with emphasis on observation, practice, and feedback. Students acquire new presentation skills by observing other presentations as modeling examples and then practicing their own presentation, after which they address the feedback by adjusting their presentations towards the required levels. Evidently, delivering effective oral presentations requires much preparation, rehearsal, and practice, interspersed with good feedback, preferably from oral presentation experts. However, large class sizes in secondary schools of the Netherlands offer only limited opportunities for teacher-student interaction, and offer even fewer practice opportunities. Based on research on complex skill development and multimedia learning, it can be expected that video-enhanced analytic rubric formats best capture and guide oral presentation performance, since much non-verbal behavior cannot be captured in text (Van Gog et al., 2014; Van Merriënboer & Kirschner, 2013).

Formative assessment of complex skills

To support complex skills acquisition under limited teacher guidance, we will need more effective formative assessment methods (Boud & Molloy, 2013) based on proven instructional design guidelines. During skills acquisition, students will perceive specific feedback as more adequate than non-specific feedback (Shute, 2008). Adequate feedback should inform students about (i) their task-performance, (ii) their progress towards intended learning goals, and (iii) what they should do to further progress towards those goals (Hattie & Timperley, 2007; Narciss, 2008). Students receiving specific feedback on criteria and performance levels will become equipped to improve oral presentation skills (De Grez et al., 2009a; Ritchie, 2016). Analytic rubrics are therefore promising formats to provide specific feedback on oral presentations, because they can demonstrate the relations between subskills and explain the open-endedness of ideal presentations (through textual descriptions and their graphical design).

Ritchie (2016) showed that adding structure and self-assessment to peer- and teacher-assessments resulted in better oral presentation performance. Students were required to use analytic rubrics for self-assessment when following their (project-based) classroom education. In this way, they had ample opportunity for observing and reflecting on the attributes of (good) oral presentations, which was shown to foster acquisition of their oral presentation skills.

Analytic rubrics incorporate performance criteria to inform teachers and students when preparing oral presentations. Such rubrics support mental model formation, and enable adequate feedback provision by teachers, peers, and self (Brookhart & Chen, 2015; Jonsson & Svingby, 2007; Panadero & Jonsson, 2013). Such research is inconclusive about which formats and delivery media are most effective, but most studies dealt with analytic text-based rubrics delivered on paper. However, digital video-enhanced analytic rubrics are expected to be more effective for acquiring oral presentation skills, since many behavioral aspects refer to non-verbal actions and processes that can only be captured on video (e.g., body posture or use of voice during a presentation).

This study is situated within the Viewbrics project, where video-modelling examples are integrated with analytic text-based rubrics (Ackermans et al., 2019a). Video-modelling examples contain question prompts that illustrate behavior associated with (sub)skills performance levels in context, and are presented by young actors the target group can identify with. The question prompts require students to link behavior to performance levels, and build a coherent picture of the (sub)skills and levels. To the best of the authors’ knowledge, no previous studies exist on such video-enhanced analytic rubrics. The Viewbrics tool has been incrementally developed and validated with teachers and students to structure the formative assessment method in classroom settings (Rusman et al., 2019).

The purpose of our study is twofold. On the one hand, it investigates whether the application of evidence-based design guidelines results in a more effective formative assessment method in the classroom. On the other hand, it investigates (within that method) whether video-enhanced analytic rubrics are more effective than text-based analytic rubrics.

Research questions

The twofold purpose of this study is captured in two research questions: (1) To what extent do analytic rubrics within formative assessment lead to better oral presentation performance? (the design-based part of this study); and (2) To what extent do video-enhanced analytic rubrics lead to better oral presentation performance (growth) than text-based analytic rubrics? (the experimental part of this study). We hypothesize that all students will improve their oral presentation performance over time, but that students in the experimental groups (receiving analytic rubrics designed according to proven design guidelines) will outperform a control group (receiving conventional rubrics) (Hypothesis 1). Furthermore, we expect the experimental group using video-enhanced rubrics to achieve more performance growth than the experimental group using text-based rubrics (Hypothesis 2).

After this introduction, the second section describes previous research on design guidelines that were applied to develop the analytic rubrics in the present study. The actual design, development and validation of these rubrics is described in “ Development of analytic rubrics tool ” section. “ Method ” section describes the experimental method of this study, whereas “ Results ” section reports its results. Finally, in the concluding “ Conclusions and discussion ” section, main findings and limitations of the study are discussed, and suggestions for future research are provided.

Previous research and design guidelines for formative assessment with analytic rubrics

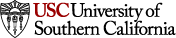

Analytic rubrics are inextricably linked with assessment, either summative (for final grading of learning products) or formative (for scaffolding learning processes). They provide textual descriptions of skills’ mastery levels with performance indicators that describe concrete behavior for all constituent subskills at each mastery level (Allen & Tanner, 2006 ; Reddy, 2011 ; Sluijsmans et al., 2013 ) (see Figs. 1 and 2 in “ Development of analytic rubrics tool ” section for an example). Such performance indicators specify aspects of variation in the complexity of a (sub)skill (e.g., presenting for a small, homogeneous group as compared to presenting for a large heterogeneous group) and related mastery levels (Van Merriënboer & Kirschner, 2013 ). Analytic rubrics explicate criteria and expectations, can be used to check students’ progress, monitor learning, and diagnose learning problems, either by teachers, students themselves or by their peers (Rusman & Dirkx, 2017 ).

Subskills for oral presentation assessment

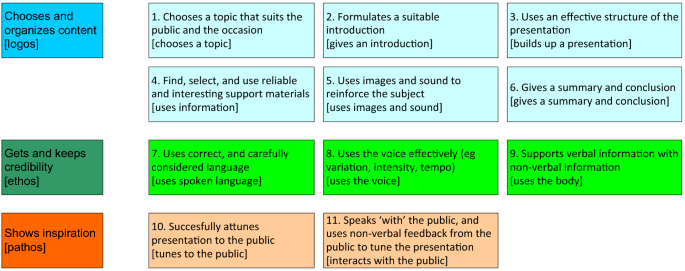

Specification of performance levels for criterium 4

Several motives for deploying analytic rubrics in education are distinguished. A review study by Panadero and Jonsson (2013) identified the following motives: increasing transparency, reducing anxiety, aiding the feedback process, improving student self-efficacy, and supporting student self-regulation. Analytic rubrics also improve reliability among teachers when rating their students (Jonsson & Svingby, 2007). Evidence has shown that analytic rubrics can be utilized to enhance student performance and learning when they are used for formative assessment purposes in combination with metacognitive activities, like reflection and goal-setting, but research shows mixed results about their learning effectiveness (Panadero & Jonsson, 2013).

It remains unclear what is exactly needed to make their feedback effective (Reddy & Andrade, 2010 ; Reitmeier & Vrchota, 2009 ). Apparently, transparency of assessment criteria and learning goals (i.e., make expectations and criteria explicit) is not enough to establish effectiveness (Wöllenschläger et al., 2016 ). Several researchers stressed the importance of how and which feedback to provide with rubrics (Bower et al., 2011 ; De Grez et al., 2009b ; Kerby & Romine, 2009 ). We now continue this section by reviewing design guidelines for analytic rubrics we encountered in literature, and then specifically address what literature mentions about the added value of video-enhanced rubrics.

Design guidelines for analytic rubrics

Effective formative assessment methods for oral presentation and analytic rubrics should be based on proven instructional design guidelines (Van Ginkel et al., 2015). Table 1 presents an overview of (seventeen) guidelines on analytic rubrics we encountered in literature. Guidelines 1–4 inform us how to use rubrics for formative assessment; Guidelines 5–17 inform us how to use rubrics for instruction, with Guidelines 5–9 on a rather generic, meso level and Guidelines 10–17 on a more specific, micro level. We will now briefly describe them in relation to oral presentation skills.

Guideline 1: use analytic rubrics instead of rating scale rubrics if rubrics are meant for learning

Conventional rating-scale rubrics are easy to generate and use as they contain scores for each performance criterium (e.g., by a 5-point Likert scale). However, since each performance level is not clearly described or operationalized, rating can suffer from rater-subjectivity, and rating scales do not provide students with unambiguous feedback (Suskie, 2009 ). Analytic rubrics can address those shortcomings as they contain brief textual performance descriptions on all subskills, criteria, and performance levels of complex skills like presentation, but are harder to develop and score (Bargainnier, 2004 ; Brookhart, 2004 ; Schreiber et al., 2012 ).

Guideline 2: use self-assessment via rubrics for formative purposes

Analytic rubrics can encourage self-assessment and -reflection (Falchikov & Boud, 1989 ; Reitmeier & Vrchota, 2009 ), which appears essential when practicing presentations and reflecting on other presentations (Van Ginkel et al., 2017 ). The usefulness of self-assessment for oral presentation was demonstrated by Ritchie’s study ( 2016 ), but was absent in a study by De Grez et al. ( 2009b ) that used the same rubric.

Guideline 3: use peer-assessment via rubrics for formative purposes

Peer-feedback is more (readily) available than teacher-feedback, and can be beneficial for students’ confidence and learning (Cho & Cho, 2011 ; Murillo-Zamorano & Montanero, 2018 ), also for oral presentation (Topping, 2009 ). Students positively value peer-assessment if the circumstances guarantee serious feedback (De Grez et al., 2010 ; Lim et al., 2013 ). It can be assumed that using analytic rubrics positively influences the quality of peer-assessment.

Guideline 4: provide rubrics for usage by self, peers, and teachers as students appreciate rubrics

Students appreciate analytic rubrics because they support them in their learning, in their planning, in producing higher quality work, in focusing efforts, and in reducing anxiety about assignments (Reddy & Andrade, 2010 ), aspects of importance for oral presentation. While students positively perceive the use of peer-grading, the inclusion of teacher-grades is still needed (Mulder et al., 2014 ) and most valued by students (Ritchie, 2016 ).

Guidelines 5–9

Heitink et al. ( 2016 ) carried out a review study identifying five relevant prerequisites for effective classroom instruction on a meso-level when using analytic rubrics (for oral presentations): train teachers and students in using these rubrics, decide on a policy of their use in instruction, while taking school- and classroom contexts into account, and follow a constructivist learning approach. In the next section, it is described how these guidelines were applied to the design of this study’s classroom instruction.

Guidelines 10–17

Van Ginkel et al.’s (2015) review study presents a comprehensive overview of effective factors for oral presentation instruction in higher education on a micro-level. Although our research context is within secondary education, the findings from the aforementioned study seem very applicable as they were rooted in firmly researched and well-documented Instructional Design approaches. Their guidelines pertain to (a) instruction, (b) learning, and (c) assessment in the learning environment (Biggs, 2003). The next section describes how guidelines were applied to the design of this study’s online Viewbrics tool.

Video-enhanced rubrics

Early analytic rubrics for oral presentations were all text-based descriptions. This study assumes that such analytic rubrics may fall short when used for learning to give oral presentations, since much of the required performance refers to motoric activities, time-consecutive operations and processes that can hardly be captured in text (e.g., body posture or use of voice during a presentation). Text-based rubrics also have a limited capacity to convey contextualized and more ‘tacit’ behavioral aspects (O’Donnevan et al., 2004 ), since ‘tacit knowledge’ (or ‘knowing how’) is interwoven with practical activities, operations, and behavior in the physical world (Westera, 2011 ). Finally, text leaves more space for personal interpretation (of performance indicators) than video, which negatively influences mental model formation and feedback consistency (Lew et al., 2010 ).

We can therefore expect video-enhanced rubrics to overcome such restrictions, as they can integrate modelling examples with analytic text-based explanations. The video-modelling examples and their embedded question prompts can illustrate behavior associated with performance levels in context, and contain information in different modalities (moving images, sound). Video-enhanced rubrics foster learning from active observation of video-modelling examples (De Grez et al., 2014; Rohbanfard & Proteau, 2013), especially when combined with textual performance indicators. Looking at effects of video-modelling examples, Van Gog et al. (2014) found an increased task performance when the video-modelling example of an expert was also shown. De Grez et al. (2014) found comparable results for learning to give oral presentations. Teachers in training assessing their own performance with video-modelling examples appeared to overrate their performance less than without examples (Baecher et al., 2013). Research on mastering complex skills indicates that both modelling examples (in a variety of application contexts) and frequent feedback positively influence the learning process and skills' acquisition (Van Merriënboer & Kirschner, 2013). Video-modelling examples not only capture the ‘know-how’ (procedural knowledge), but also elicit the ‘know-why’ (strategic/decisive knowledge).

Development of analytic rubrics tool

This section describes how design guidelines from previous research were applied in the actual development of the rubrics in the Viewbrics tool for our study, and then presents the subskills and levels for oral presentation skills as were defined.

Application of design guidelines

The previous section already mentioned that analytic rubrics should be restricted to formative assessment (Guidelines 2 and 3), and that there are good reasons to assume that a combination of teacher-, peer-, and self-assessment will improve oral presentations (Guidelines 1 and 4). Teachers and students were trained in rubric-usage (Guidelines 5 and 7), whereas students were motivated to use rubrics (Guideline 7). As participating schools were already using analytic rubrics, one might assume their positive initial attitude. Although the policy towards using analytic rubrics might not have been generally known on the work floor, the participating teachers in our study were knowledgeable (Guideline 6). We carefully considered the school context, as (a representative set of) secondary schools in the Netherlands were part of the Viewbrics team (Guideline 8). The formative assessment method was embedded within project-based education (Guideline 9).

Within this study and on the micro-level of design, the learning objectives for the first presentation were clearly specified by lower performance levels, whereas advice on students' second presentation focused on improving specific subskills that had been performed with insufficient quality during the first presentation (Guideline 10). Students carried out two consecutive projects of increasing complexity (Project 1, Project 2) with authentic tasks, among which were the oral presentations (Guideline 11). Students were provided with opportunities to observe peer-models to increase their self-efficacy beliefs and oral presentation competence. In our study, only students who received video-enhanced rubrics could observe videos with peer-models before their first presentation (Guideline 12). Students were allowed enough opportunities to rehearse their oral presentations, to increase their presentation competence, and to decrease their communication apprehension. Within our study, feedback could be provided on only two oral presentations, but students could rehearse as often as they wanted outside the classroom (Guideline 13). We ensured that feedback in the rubrics was of high quality, i.e., explicit, contextual, adequately timed, and of suitable intensity for improving students’ oral presentation competence. Both experimental groups in the study used digital analytic rubrics within the Viewbrics tool (for teacher-, peer-, and self-feedback). The control group received feedback by a more conventional rubric (rating scale), and could therefore not use the formative assessment and reflection functions (Guideline 14). The setup of the study implied that all peers played a major role during formative assessment in both experimental groups, because they formatively assessed each oral presentation using the Viewbrics tool (Guideline 15). The control group received feedback from their teacher. Both experimental groups used the Viewbrics tool to facilitate self-assessment (Guideline 16). The control group did not receive analytic progress data to inform their self-assessment. Specific goal-setting within self-assessment has been shown to positively stimulate oral presentation performance, to improve self-efficacy and reduce presentation anxiety (De Grez et al., 2009a; Luchetti et al., 2003), so the Viewbrics tool was developed to support both specific goal-setting and self-reflection (Guideline 17).

Subskills and levels for oral presentation

Reddy and Andrade ( 2010 ) stress that rubrics should be tailored to the specific learning objectives and target groups. Oral presentations in secondary education (our study context) involve generating and delivering informative messages with attention to vocal variety, articulation, and non-verbal signals. In this context, message composition and message delivery are considered important (Quianthy, 1990 ). Strong arguments (‘logos’) have to be presented in a credible (‘ethos’) and exciting (‘pathos’) way (Baccarini & Bonfanti, 2015 ). Public speaking experts agree that there is not one right way to do an oral presentation (Schneider et al., 2017 ). There is agreement that all presenters need much practice, commitment, and creativity. Effective presenters do not rigorously and obsessively apply communication rules and techniques, as their audience may then perceive the performance as too technical or artificial. But all presentations should demonstrate sufficient mastery of elementary (sub)skills in an integrated manner. Therefore, such skills should also be practiced as a whole (including knowledge and attitudes), making the attainment of a skill performance level more than the sum of its constituent (sub)skills (Van Merriënboer & Kirschner, 2013 ). A validated instrument for assessing oral presentation performance is needed to help teachers assess and support students while practicing.

When we started developing rubrics with the Viewbrics tool (late 2016), there were no studies or validated measuring instruments for oral presentation performance in secondary education, although several schools used locally developed, non-validated assessment forms (i.e., conventional rubrics). For instance, Schreiber et al. ( 2012 ) had developed an analytic rubric for public speaking skills assessment in higher education, aimed at faculty members and students across disciplines. They identified eleven (sub)skills of public speaking, that could be subsumed under three factors (‘topic adaptation’, ‘speech presentation’ and ‘nonverbal delivery’, similar to logos-ethos-pathos).

Such previous work holds much value, but still had to be adapted and elaborated in the context of the current study. This study elaborated and evaluated eleven subskills that can be identified within the natural flow of an oral presentation and its distinctive features (See Fig. 1 for an overview of subskills, and Fig. 2 for a specification of performance levels for a specific subskill).

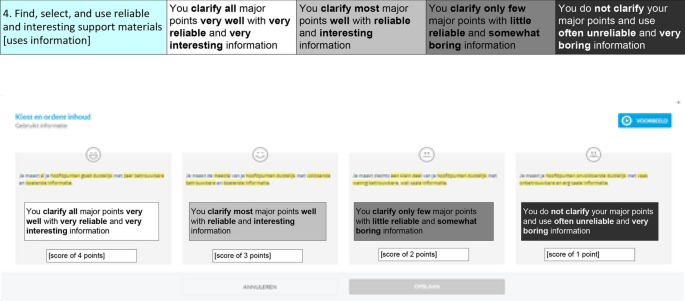

Between brackets are names of subskills as they appear in the dashboard of the Viewbrics tool (Fig. 3 ).

Visualization of oral presentation progress and feedback in the Viewbrics tool

The upper part of Fig. 2 shows the scoring levels for first-year secondary school students for criterium 4 of the oral presentation assessment (four values, from more expert (4 points) to more novice (1 point), from right to left), an example of the conventional rating-scale rubrics. The lower part shows the corresponding screenshot from the Viewbrics tool, representing a text-based analytic rubric example. A video-enhanced analytic rubric example for this subskill provides a peer modelling the required behavior on expert level, with question prompts on selecting reliable and interesting materials. Performance levels were inspired by previous research (Ritchie, 2016 ; Schneider et al., 2017 ; Schreiber et al., 2012 ), but also based upon current secondary school practices in the Netherlands, and developed and tested with secondary school teachers and their students.

All eleven subskills are to be scored on similar four-point Likert scales, and have similar weights in determining total average scores. Two pilot studies tested the usability, validity and reliability of the assessment tool (Rusman et al., 2019). Based on this input, the final rubrics were improved and embedded in a prototype of the online Viewbrics tool, and used for this study. The formative assessment method consisted of six steps: (1) study the rubric; (2) practice and conduct an oral presentation; (3) conduct a self-assessment; (4) consult feedback from teacher and peers; (5) reflect on feedback; and (6) select personal learning goal(s) for the next oral presentation.

After the second project (Project 2), which used the same setup and assessment method as the first project, students in experimental groups could also see their visualized progress in the ‘dashboard’ of the Viewbrics tool (see Fig. 3, with English translations provided between brackets), by comparing performance on their two project presentations during the second reflection assignment. The dashboard of the tool shows progress (inner circles), with green reflecting improvement on subskills, blue indicating constant subskills, and red indicating declining subskills. Feedback is provided by emoticons with text. Students’ personal learning goals after reflection are shown under ‘Mijn leerdoelen’ [My learning goals].
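The colour coding of the dashboard can be illustrated with a short sketch. It is not the Viewbrics implementation: the function names, the subskill names, and the scores below are hypothetical, and the only assumptions taken from the text are the four-point scale, the equal weighting of subskills, and the green/blue/red convention.

```python
def classify_progress(first, second):
    """Label each subskill by comparing two presentations.

    green = improved, blue = constant, red = declined.
    """
    colours = {}
    for subskill, before in first.items():
        after = second[subskill]
        if after > before:
            colours[subskill] = "green"
        elif after < before:
            colours[subskill] = "red"
        else:
            colours[subskill] = "blue"
    return colours


def mean_score(scores):
    """Average over subskills, assuming every subskill has the same weight."""
    return sum(scores.values()) / len(scores)


# Hypothetical scores (1 = novice ... 4 = expert) for three of the subskills.
project_1 = {"introduction": 2, "eye contact": 3, "use of voice": 3}
project_2 = {"introduction": 3, "eye contact": 3, "use of voice": 2}

print(classify_progress(project_1, project_2))
print(mean_score(project_1), mean_score(project_2))
```

In this invented example the total average is identical for both presentations even though one subskill improved and another declined, which is one reason a per-subskill view can be more informative for goal-setting than the average alone.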

The previous sections described how design guidelines for analytic rubrics from literature (“ Previous research and design guidelines for formative assessment with analytic rubrics ” section) were applied in a formative assessment method with analytic rubrics (“ Development of analytic rubrics tool ” section). “ Method ” section describes this study’s research design for comparing rubric formats.

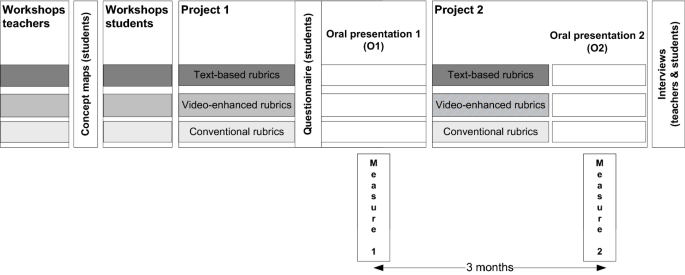

Research design of the study

All classroom scenarios followed the same lesson plan and structure for project-based instruction, and consisted of two projects with specific rubric feedback provided in between. Both experimental groups used the same formative assessment method with validated analytic rubrics, but differed on the analytic rubric format (text-based, video-enhanced). The students of the control group did not use such a formative assessment method, and only received teacher-feedback (via a conventional rating-scale rubric that consisted of a standard form with attention points for presentations, without further instructions) on these presentations. All three scenarios required similar time investments for students. School classes (six) were randomly assigned to conditions (three), so students from the same class were in the same condition. Figure 4 graphically depicts an overview of the research design of the study.

Research design overview
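Assigning whole classes (rather than individual students) to conditions can be sketched as below. This is a minimal illustration under stated assumptions: the class labels and the `assign_classes` helper are hypothetical, and the equal split of two classes per condition is assumed here rather than reported in the text.

```python
import random


def assign_classes(classes, conditions, seed=None):
    """Randomly allocate whole classes to conditions in equal numbers."""
    if len(classes) % len(conditions) != 0:
        raise ValueError("classes cannot be divided equally over conditions")
    rng = random.Random(seed)      # a seed makes the allocation reproducible
    shuffled = list(classes)
    rng.shuffle(shuffled)
    per_condition = len(shuffled) // len(conditions)
    return {
        condition: shuffled[i * per_condition:(i + 1) * per_condition]
        for i, condition in enumerate(conditions)
    }


# Hypothetical class labels; the three conditions follow the study design.
classes = ["class_A", "class_B", "class_C", "class_D", "class_E", "class_F"]
conditions = ["video-enhanced", "text-based", "control"]

allocation = assign_classes(classes, conditions, seed=2021)
for condition, assigned in allocation.items():
    print(condition, assigned)
```

Because every student in a class shares the same condition, the unit of randomization is the class, not the student.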

A repeated-measures mixed ANOVA on oral presentation performance (growth) was carried out to analyze the data, with rubric format (three conditions) as between-groups factor and repeated measures (two moments) as within-groups factor. All statistical data analyses were conducted with SPSS version 24.

Participants

Participants were first-year secondary school students (all within the 12–13 years range) from two Dutch schools, with participants equally distributed over schools and conditions ( n = 166, with 79 girls and 87 boys). Classes were randomly allocated to conditions. Most participants completed both oral presentations ( n = 158, so an overall response rate of 95%). Data were collected (almost) equally from the video-enhanced rubrics condition ( n = 51), text-based condition ( n = 57), and conventional rubrics (control) condition ( n = 50).

A related study in the same context and with the same participants (Ackermans et al., 2019b) analyzed the concept maps elicited from participants and revealed that their mental models (indicating mastery levels) for oral presentation were similar across conditions. From that finding we can conclude that students possessed similar mental models for presentation skills before starting the projects. Results from the online questionnaire (“Anxiety, preparedness, and motivation” section) reveal that students in the experimental groups did not differ in anxiety, preparedness and motivation before their first presentation. Together with the teacher assessments of similarity of classes, we can assume similarity of students across conditions at the start of the experiment.

Materials and procedure

Teachers from both schools worked closely together in guaranteeing similar instruction and difficulty levels for both projects (Project 1, Project 2). Schools agreed to follow a standardized lesson plan for both projects and their oral presentation tasks. Core team members then developed (condition-specific) materials for teacher and student workshops on how to use rubrics and provide instructions and feedback (Guidelines 5 and 7). This also assured that similar measures were taken for potential problems with anxiety, preparedness and motivation. Teachers received information about (condition-specific) versions of the Viewbrics tool (see “Development of analytic rubrics tool” section). The core team consisted of three researchers and three (project) teachers, with one teacher also supervising the others. The teacher workshops were given by the supervising teacher and two researchers before starting recruitment of students.

Teachers estimated similarity of all six classes with respect to students’ prior presentation skills before starting the first project. All classes were informed by an introduction letter from the core team and their teachers. Participation in this study was voluntary. Students and their parents/caretakers were informed about 4 weeks before the start of the first project, and received information on research-specific activities, time investment and schedule. Parents/caretakers signed an informed consent form on behalf of their minor children before the study started. All were informed that data would be anonymized for scientific purposes, and that students could withdraw at any time without giving reasons.

School classes were randomly assigned to conditions. Students of experimental groups were informed that the usability of the Viewbrics tool for oral presentation skills acquisition was being investigated, but were left unaware of the different rubric formats. Students of the control group were informed that their oral presentation skills acquisition was being investigated. From all students, concept maps about oral presentation were elicited (reflecting their mental model and mastery level). Students participated in workshops (specific for their condition and provided by their teacher) on how to use rubrics and provide peer-feedback (all materials remained available throughout the study).

Before giving their presentations on Project 1, students filled in the online questionnaire via LimeSurvey. Peers and teachers in experimental groups provided immediate feedback on given presentations, and students immediately had to self-assess their own presentations (step 3 of the assessment method). Subsequently, students could view the feedback and ratings given by their teacher and peers through the tool (step 4), were asked to reflect on this feedback (step 5), and to choose specific goals for their second oral presentation (step 6). In the control group, students directly received teachers’ feedback (verbally) after completing their presentation, but did not receive any reflection assignment. Control group students used a standard textual form with attention points (conventional rating-scale rubrics). After giving their presentations on the second project, students in the experimental groups got access to the dashboard of the Viewbrics tool (see “ Development of analytic rubrics tool ” section) to see their progress on subskills. About a week after the classes had ended, some semi-structured interviews were carried out by one of the researchers. Finally, one of the researchers functioned as a hotline for teachers in case of urgent questions during the study, and randomly observed some of the lessons.

Measures and instruments

Oral performance scores on presentations were measured by both teachers and peers. A short online questionnaire (with 6 items) was administered to students just before their first oral presentation at the end of Project 1 (see Fig. 4). Interviews were conducted with both teachers and students at the end of the intervention to collect more qualitative data on subjective perceptions.

Oral presentation performance

Students’ progress in oral presentation performance was measured by comparing the performance scores on both oral presentations (with three months in between). Both presentations were scored by teachers using the video-enhanced rubric in all groups (half of the score in experimental groups, full score for the control group). For participants in both experimental groups, oral presentation performance was also scored by peers and self, using the specific rubric version (either video-enhanced or text-based) (other half of the score). For each of the (eleven) subskills, between 1 point (novice level) and 4 points (expert level) could be earned, with a maximum of 44 points for the total performance score. For participants in the control group, the same scale applied but no peer or self scores were given. The inter-rater reliability between teacher and peer assessments was acceptable (Cohen’s kappa = 0.74).
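The scoring scheme and the agreement statistic described above can be illustrated with a minimal sketch. How peer and self ratings were pooled into their half of the score is not specified here, so the sketch assumes a simple mean of their rubric totals; the function names and all ratings are hypothetical and serve only as illustration. The kappa function is the standard unweighted Cohen's kappa for two raters, which may differ from the exact procedure used in the study.

```python
from collections import Counter
from statistics import mean

N_SUBSKILLS = 11                  # eleven subskills in the rubric
MIN_POINTS, MAX_POINTS = 1, 4     # novice level .. expert level


def rubric_total(ratings):
    """Sum one rater's subskill ratings (11 to 44 points)."""
    if len(ratings) != N_SUBSKILLS:
        raise ValueError("expected one rating per subskill")
    if any(not MIN_POINTS <= r <= MAX_POINTS for r in ratings):
        raise ValueError("ratings must lie between 1 and 4")
    return sum(ratings)


def combined_score(teacher, peer_and_self=None):
    """Teacher total counts fully (control) or for half (experimental).

    ASSUMPTION: the other half is the mean of the peer/self totals.
    """
    teacher_total = rubric_total(teacher)
    if not peer_and_self:
        return float(teacher_total)
    other_total = mean(rubric_total(r) for r in peer_and_self)
    return 0.5 * teacher_total + 0.5 * other_total


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / n ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical ratings, not data from the study.
teacher = [4] * N_SUBSKILLS
peers = [[2] * N_SUBSKILLS, [4] * N_SUBSKILLS]
print(combined_score(teacher))         # control group: 44.0
print(combined_score(teacher, peers))  # experimental group: 38.5
print(round(cohen_kappa([1, 2, 3, 4, 1, 2, 3, 4],
                        [1, 2, 3, 4, 1, 2, 3, 3]), 3))  # 0.833
```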

Anxiety, preparedness, and motivation

Just before presenting, students answered the short questionnaire with five-point Likert scores (from 0 = totally disagree to 4 = totally agree) as additional control for potential differences in anxiety, preparedness and motivation, since especially these factors might influence oral presentation performance (Reddy & Andrade, 2010). Notwithstanding this, teachers were the major source to control for similarity of conditions with respect to dealing with presentation anxiety, preparedness and motivation. Two items for anxiety were: “I find it exciting to give a presentation” and “I find it difficult to give a presentation”, a subscale that appeared to have a satisfactory internal reliability with a Cronbach’s alpha = 0.90. Three items for preparedness were: “I am well prepared to give my presentation”, “I have often rehearsed my presentation”, and “I think I’ve rehearsed my presentation enough”, a subscale that appeared to have a satisfactory Cronbach’s alpha = 0.75. The item for motivation was: “I am motivated to give my presentation”. Unfortunately, the online questionnaire was not administered within the control group, due to unforeseen circumstances.
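For readers less familiar with the reliability coefficient reported for the subscales, the following minimal sketch computes Cronbach's alpha from item scores using the standard formula, alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores). The item scores below are hypothetical and are not the questionnaire data of this study.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a subscale.

    `items` is a list of k lists; each inner list holds one item's
    scores for the same respondents, in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]   # per-respondent sum
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical scores of four respondents on a two-item subscale.
item_1 = [1, 2, 3, 4]
item_2 = [2, 1, 4, 3]
print(round(cronbach_alpha([item_1, item_2]), 2))  # 0.75
```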

Semi-structured interviews with teachers (six) and students (thirty) were meant to gather qualitative data on the practical usability and usefulness of the Viewbrics tool. Examples of questions are: “Have you encountered any difficulties in using the Viewbrics online tool? If any, could you please mention which one(s)” (both students of experimental groups and teachers); “Did the feedback help you to improve your presentation skills? If not, what feedback do you need to improve your presentation skills?” (just students); “How do you evaluate the usefulness of formative assessment?” (both students and teachers); “Would you like to organize things differently in applying formative assessment as during this study? If so, what would you like to organize different?” (just teachers); “How much time did you spend on providing feedback? Did you need more or less time than before?” (just teachers).

Interviews with teachers and students revealed that the reported rubrics approach was easy to use and useful within the formative assessment method. Project teachers could easily stick to the lessons plans as agreed upon in advance. However, project teachers regarded the classroom scenarios as relatively time-consuming. They expected that for some other schools it might be challenging to follow the Viewbrics approach. None of the project teachers had to consult the hotline during the study, and no deviations from the lesson plans were observed by the researchers.

Most important results on the performance measures and questionnaire are presented and compared between conditions.

A mixed ANOVA, with oral presentation performance as within-subjects factor (two scores) and rubric format as between-subjects factor (three conditions), revealed an overall and significant improvement of oral presentation performance over time, with F(1, 157) = 58.13, p < 0.01, partial η² = 0.27. Significant differences over time were also found between conditions, with F(2, 156) = 17.38, p < 0.01, partial η² = 0.18. Tests of between-subjects effects showed significant differences between conditions, with F(2, 156) = 118.97, p < 0.01, partial η² = 0.59, with both experimental groups outperforming the control group as expected (so we could accept H1). However, only control group students showed significant progress on performance scores over time (at the 0.01 level). At both measures, the expected differences between experimental groups were not found to be significant (so we had to reject H2). For descriptives of group averages (over time) see Table 2.
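The effect sizes can be related to the test statistics through the standard identity for partial eta squared, partial η² = (F × df_effect) / (F × df_effect + df_error). The minimal sketch below applies this identity to the first two effects reported above; the function name is illustrative and the calculation is a reader's check, not a reproduction of the SPSS analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its df."""
    if df_effect <= 0 or df_error <= 0:
        raise ValueError("degrees of freedom must be positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Improvement over time: F(1, 157) = 58.13
print(round(partial_eta_squared(58.13, 1, 157), 2))  # 0.27
# Differences over time between conditions: F(2, 156) = 17.38
print(round(partial_eta_squared(17.38, 2, 156), 2))  # 0.18
```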

A post-hoc analysis, using multiple pairwise comparisons with Bonferroni correction, confirms that the experimental groups significantly (at the p < 0.01 level) outperform the control group at both moments in time, and that the two experimental groups do not differ significantly at either measure. Regarding performance progress over time, only the control group shows significant growth (again with p < 0.01). The difference between experimental groups in favour of video-enhanced rubrics approached significance (p = 0.053), but formally H2 had to be rejected. This finding, however, is a promising trend to be further explored with larger numbers of participants.

An independent t-test comparing participants in both experimental groups before their first presentation on anxiety, preparedness and motivation showed no differences, with t(1,98) = 1.32 and p = 0.19 for anxiety, t(1,98) = −0.14 and p = 0.89 for preparedness, and t(1,98) = −1.24 and p = 0.22 for motivation (see Table 3 for group averages).

As mentioned in the previous section (interviews with teachers), teachers assessed that presentation anxiety, preparedness and motivation in the control group did not differ from those in the experimental groups. It can therefore be assumed that all groups were similar regarding presentation anxiety, preparedness and motivation before presenting, and that these factors did not confound oral presentation results. Questionnaire data are missing for 58 respondents: one in the video-enhanced condition, seven in the text-based condition, and fifty in the control group.

Conclusions and discussion

The first purpose was to study if applying evidence-informed design guidelines in the development of formative assessment with analytic rubrics supports oral presentation performance of first-year secondary school students in the Netherlands. Students who used such validated rubrics indeed outperformed students using common rubrics (so H1 could be accepted). This study has demonstrated that the design guidelines can also be effectively applied and used for secondary education, which makes them more generic. The second purpose was to study if video-enhanced rubrics would be more beneficial to oral presentation skills acquisition when compared to text-based rubrics, but we did not find significant differences here (so H2 had to be rejected). However, post-hoc analysis shows that the growth on performance scores over time indeed seems higher when using video-enhanced rubrics, a promising difference that is ‘only marginally’ significant. Preliminary qualitative findings from the interviews point out that the Viewbrics tool can be easily integrated into classroom instruction and appears usable for the target audiences (both teachers and students), although teachers state it is rather time-consuming to conform to all guidelines.

All students had prior experience with oral presentations (from primary schools) and relatively high oral presentation scores at the start of the study, so there remained limited room for improvement between their first and second oral presentation. Participants in the control group scored relatively low on their first presentation, and so had more room for improvement during the study. In addition, the somewhat more difficult content of the second project (Guideline 11) might have slightly reduced the quality of the second oral presentation. Also, more intensive training, additional presentations and their assessments might have demonstrated more added value of the analytic rubrics. Learning might have occurred, since adequate mental models of skills are not automatically applied during performance (Ackermans et al., 2019b).