
9.3: Interview Survey


Anol Bhattacherjee, University of South Florida via Global Text Project


Interviews are a more personalized data collection method than questionnaires. They are conducted by trained interviewers using the same research protocol as questionnaire surveys (i.e., a standardized set of questions). However, unlike a questionnaire, the interview script may contain special instructions for the interviewer that are not seen by respondents, and may include space for the interviewer to record personal observations and comments. In addition, unlike in mail surveys, the interviewer has the opportunity to clarify any issues raised by the respondent or to ask probing or follow-up questions. On the other hand, interviews are time-consuming and resource-intensive, and they require special interviewing skills on the part of the interviewer. The interviewer is also considered part of the measurement instrument and must proactively strive not to bias the observed responses artificially.

The most typical form of interview is the personal or face-to-face interview, where the interviewer works directly with the respondent to ask questions and record their responses. Personal interviews may be conducted at the respondent’s home or office. Some respondents may even favor this approach, while others may feel uncomfortable allowing a stranger into their homes. However, skilled interviewers can persuade respondents to cooperate, dramatically improving response rates.

A variation of the personal interview is the group interview, also called a focus group. In this technique, a small group of respondents (usually 6–10) is interviewed together in a common location. The interviewer is essentially a facilitator whose job is to lead the discussion and ensure that every person has an opportunity to respond. Focus groups allow deeper examination of complex issues than other forms of survey research, because when people hear others talk, it often triggers responses or ideas that they had not thought about before. However, a focus group discussion may be dominated by a single forceful personality, and some individuals may be reluctant to voice their opinions in front of their peers or superiors, especially when dealing with a sensitive issue such as employee underperformance or office politics. Because of their small sample size, focus groups are usually used for exploratory research rather than descriptive or explanatory research.

A third type of interview survey is the telephone interview. In this technique, interviewers contact potential respondents over the phone, typically based on a random selection of people from a telephone directory, to ask a standard set of survey questions. A more recent and technologically advanced approach, increasingly used by academic, government, and commercial survey researchers, is computer-assisted telephone interviewing (CATI). In CATI, the interviewer is a telephone operator who is guided through the interview process by a computer program that displays instructions and the questions to be asked on a computer screen. The system also selects respondents randomly using a random digit dialing technique and records responses using voice capture technology. Once respondents are on the phone, higher response rates can be obtained. However, this technique is not ideal for rural areas where telephone density is low, and it cannot be used to communicate non-audio information such as graphics or product demonstrations.
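The random digit dialing step mentioned above can be illustrated with a minimal Python sketch of one simple variant: four random digits are appended to a list of known telephone prefixes (area code plus exchange). The North American number format, the function name `random_digit_dial`, and the example prefixes are hypothetical assumptions for illustration; real CATI systems use more elaborate sampling designs.

```python
import random


def random_digit_dial(prefixes, n, seed=None):
    """Draw n distinct phone numbers by appending four random digits to
    known working prefixes, given as hypothetical "AAA-EEE" strings."""
    unique = sorted(set(prefixes))
    # Each prefix admits 10,000 possible suffixes (0000 to 9999).
    capacity = len(unique) * 10_000
    if n > capacity:
        raise ValueError(f"cannot draw {n} distinct numbers from {capacity}")
    rng = random.Random(seed)  # seeded generator gives a reproducible sample
    numbers = set()
    while len(numbers) < n:
        prefix = rng.choice(unique)            # pick an exchange at random
        suffix = rng.randrange(10_000)         # random last four digits
        numbers.add(f"{prefix}-{suffix:04d}")  # the set discards repeat draws
    return sorted(numbers)


# Example with made-up prefixes; each result is a candidate number to dial.
for number in random_digit_dial(["813-555", "727-555"], n=5, seed=42):
    print(number)
```

Because the last digits are generated rather than copied from a directory, listed and unlisted numbers are equally likely to be drawn, although many generated numbers will be non-working and must be screened out.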

Role of the interviewer

The interviewer has a complex and multi-faceted role in the interview process, which includes the following tasks:

- Prepare for the interview: Since the interviewer is at the forefront of the data collection effort, the quality of the data collected depends heavily on how well the interviewer is trained to do the job. The interviewer must be trained in the interview process and the survey method, and must also be familiar with the purpose of the study, how responses will be stored and used, and sources of interviewer bias. The interviewer should also rehearse and time the interview before the formal study.

- Locate and enlist the cooperation of respondents: Particularly in personal, in-home surveys, the interviewer must locate specific addresses and work around respondents’ schedules, sometimes at inconvenient times such as weekends. Interviewers should also act somewhat like salespeople, selling the idea of participating in the study.

- Motivate respondents: Respondents often feed off the motivation of the interviewer. If the interviewer is uninterested or inattentive, respondents won’t be motivated to provide useful or informative responses either. The interviewer must demonstrate enthusiasm about the study, communicate the importance of the research to respondents, and be attentive to respondents’ needs throughout the interview.

- Clarify any confusion or concerns: Interviewers must be able to think on their feet and address unanticipated concerns or objections raised by respondents to the respondents’ satisfaction. Additionally, they should ask probing questions as necessary, even if such questions are not in the script.

- Observe quality of response: The interviewer is in the best position to judge the quality of the information collected, and may supplement the responses obtained with personal observations of gestures or body language as appropriate.


- Published: 05 October 2018

Interviews and focus groups in qualitative research: an update for the digital age

P. Gill and J. Baillie

British Dental Journal, volume 225, pages 668–672 (2018)


Highlights that qualitative research is used increasingly in dentistry. Interviews and focus groups remain the most common qualitative methods of data collection.

Suggests the advent of digital technologies has transformed how qualitative research can now be undertaken.

Suggests interviews and focus groups can offer significant, meaningful insight into participants' experiences, beliefs and perspectives, which can help to inform developments in dental practice.

Qualitative research is used increasingly in dentistry, due to its potential to provide meaningful, in-depth insights into participants' experiences, perspectives, beliefs and behaviours. These insights can subsequently help to inform developments in dental practice and further related research. The most common methods of data collection used in qualitative research are interviews and focus groups. While these are primarily conducted face-to-face, the ongoing evolution of digital technologies, such as video chat and online forums, has further transformed these methods of data collection. This paper therefore discusses interviews and focus groups in detail, outlines how they can be used in practice, how digital technologies can further inform the data collection process, and what these methods can offer dentistry.

You have full access to this article via your institution.

Similar content being viewed by others

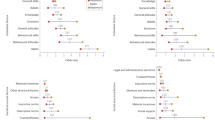

Determinants of behaviour and their efficacy as targets of behavioural change interventions

Interviews in the social sciences

Principal component analysis

Introduction

Traditionally, research in dentistry has primarily been quantitative in nature. 1 However, in recent years, there has been a growing interest in qualitative research within the profession, due to its potential to further inform developments in practice, policy, education and training. Consequently, in 2008, the British Dental Journal (BDJ) published a four-paper qualitative research series, 2 , 3 , 4 , 5 to help increase awareness and understanding of this particular methodological approach.

Since the papers were originally published, two scoping reviews have demonstrated the ongoing proliferation in the use of qualitative research within the field of oral healthcare. 1 , 6 To date, the original four-paper series continues to be well cited, and two of the main papers remain widely accessed among the BDJ readership. 2 , 3 The potential value of well-conducted qualitative research to evidence-based practice is now also widely recognised by service providers, policy makers, funding bodies and those who commission, support and use healthcare research.

Besides their increasing standalone use, qualitative methods are now also routinely incorporated into larger mixed-methods study designs, such as clinical trials, as they can offer additional, meaningful insights into complex problems that simply could not be provided by quantitative methods alone. Qualitative methods can also be used to further facilitate in-depth understanding of important aspects of clinical trial processes, such as recruitment. For example, Ellis et al. investigated why edentulous older patients, dissatisfied with conventional dentures, decline implant treatment, despite its established efficacy, and frequently refuse to participate in related randomised clinical trials, even when financial constraints are removed. 7 Through the use of focus groups in Canada and the UK, the authors found that fears of pain and potential complications, along with perceived embarrassment, exacerbated by age, are common reasons why older patients typically refuse dental implants. 7

The last decade has also seen further developments in qualitative research, due to the ongoing evolution of digital technologies. These developments have transformed how researchers can access and share information, communicate and collaborate, recruit and engage participants, collect and analyse data and disseminate and translate research findings. 8 Where appropriate, such technologies are therefore capable of extending and enhancing how qualitative research is undertaken. 9 For example, it is now possible to collect qualitative data via instant messaging, email or online/video chat, using appropriate online platforms.

These innovative approaches to research can be cost-effective and convenient, reduce geographical constraints and are often useful for accessing 'hard to reach' participants (for example, those who are immobile or socially isolated). 8 , 9 However, digital technologies are still relatively new and constantly evolving, and therefore present a variety of pragmatic and methodological challenges. Furthermore, given their very nature, their use in many qualitative studies and/or with certain participant groups may be inappropriate and should therefore always be carefully considered. While a detailed explication of the use of digital technologies in qualitative research is beyond the scope of this paper, insight is provided into how such technologies can be used to facilitate the data collection process in interviews and focus groups.

In light of such developments, it is perhaps therefore timely to update the main paper 3 of the original BDJ series. As with the previous publications, this paper has been purposely written in an accessible style, to enhance readability, particularly for those who are new to qualitative research. While the focus remains on the most common qualitative methods of data collection – interviews and focus groups – appropriate revisions have been made to provide a novel perspective, and should therefore be helpful to those who would like to know more about qualitative research. This paper specifically focuses on undertaking qualitative research with adult participants only.

Overview of qualitative research

Qualitative research is an approach that focuses on people and their experiences, behaviours and opinions. 10 , 11 The qualitative researcher seeks to answer questions of 'how' and 'why', providing detailed insight and understanding, 11 which quantitative methods cannot reach. 12 Within qualitative research, there are distinct methodologies influencing how the researcher approaches the research question, data collection and data analysis. 13 For example, phenomenological studies focus on the lived experience of individuals, explored through their description of the phenomenon. Ethnographic studies explore the culture of a group and typically involve the use of multiple methods to uncover the issues. 14

While methodology is the 'thinking tool', the methods are the 'doing tools'; 13 the ways in which data are collected and analysed. There are multiple qualitative data collection methods, including interviews, focus groups, observations, documentary analysis, participant diaries, photography and videography. Two of the most commonly used qualitative methods are interviews and focus groups, which are explored in this article. The data generated through these methods can be analysed in one of many ways, according to the methodological approach chosen. A common approach is thematic data analysis, involving the identification of themes and subthemes across the data set. Further information on approaches to qualitative data analysis has been discussed elsewhere. 1
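Identifying themes is an interpretive task that no script can perform, but the bookkeeping that follows coding can be sketched. The minimal Python example below assumes, hypothetically, that each coded excerpt has already been recorded as a (participant, theme, subtheme) tuple; it groups the excerpts into themes and subthemes and counts how many distinct participants contributed to each. The function name and example codes are illustrative only and are not taken from the paper.

```python
from collections import defaultdict


def summarise_themes(coded_segments):
    """Group (participant, theme, subtheme) tuples into a theme -> subtheme
    hierarchy and count distinct participants at each level."""
    tree = defaultdict(lambda: defaultdict(set))
    for participant, theme, subtheme in coded_segments:
        tree[theme][subtheme].add(participant)

    summary = {}
    for theme, subthemes in tree.items():
        # A participant counts once per theme even if coded under several subthemes.
        everyone = set().union(*subthemes.values())
        summary[theme] = {
            "participants": len(everyone),
            "subthemes": {sub: len(ids) for sub, ids in subthemes.items()},
        }
    return summary


# Hypothetical codes from interviews about brushing children's teeth.
segments = [
    ("P1", "Barriers", "Child resistance"),
    ("P2", "Barriers", "Child resistance"),
    ("P2", "Barriers", "Time pressure"),
    ("P3", "Sources of advice", "Health visitor"),
]
print(summarise_themes(segments))
```

Counting participants rather than excerpts avoids over-weighting a theme simply because one talkative participant returned to it repeatedly.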

Qualitative research is an evolving and adaptable approach, used by different disciplines for different purposes. Traditionally, qualitative data, specifically interviews, focus groups and observations, have been collected face-to-face with participants. In more recent years, digital technologies have contributed to the ongoing evolution of qualitative research. Digital technologies offer researchers different ways of recruiting participants and collecting data, and offer participants opportunities to be involved in research that is not necessarily face-to-face.

Research interviews are a fundamental qualitative research method 15 and are utilised across methodological approaches. Interviews enable the researcher to learn in depth about the perspectives, experiences, beliefs and motivations of the participant. 3 , 16 Examples include exploring patients' perspectives of fear/anxiety triggers in dental treatment, 17 patients' experiences of oral health and diabetes, 18 and dental students' motivations for their choice of career. 19

Interviews may be structured, semi-structured or unstructured, 3 according to the purpose of the study, with less structured interviews facilitating a more in-depth and flexible interviewing approach. 20 Structured interviews are similar to verbal questionnaires and are used if the researcher requires clarification on a topic; however, they produce less in-depth data about a participant's experience. 3 Unstructured interviews may be used when little is known about a topic and involve the researcher asking an opening question; 3 the participant then leads the discussion. 20 Semi-structured interviews are commonly used in healthcare research, enabling the researcher to ask predetermined questions 20 while ensuring the participant discusses issues they feel are important.

Interviews can be undertaken face-to-face or using digital methods when the researcher and participant are in different locations. Audio-recording the interview, with the consent of the participant, is essential for all interviews regardless of the medium, as it enables accurate transcription: the process of turning the audio file into a word-for-word transcript. This transcript is the data, which the researcher then analyses according to the chosen approach.

Types of interview

Qualitative studies often utilise one-to-one, face-to-face interviews with research participants. This involves arranging a mutually convenient time and place to meet the participant, signing a consent form and audio-recording the interview. However, digital technologies have expanded the potential for interviews in research, enabling individuals to participate in qualitative research regardless of location.

Telephone interviews can be a useful alternative to face-to-face interviews and are commonly used in qualitative research. They enable participants from different geographical areas to participate and may be less onerous for participants than meeting a researcher in person. 15 A qualitative study explored patients' perspectives of dental implants and utilised telephone interviews due to the quality of the data that could be yielded. 21 The researcher needs to consider how they will audio-record the interview, which can be facilitated by purchasing a recorder that connects directly to the telephone. One potential disadvantage of telephone interviews is that the interviewer and participant cannot see each other. This can be resolved by using software for online audio and video calls, such as Skype, to conduct interviews with participants in qualitative studies. Advantages of this approach include being able to see the participant if video calls are used, which enables observation of non-verbal communication, and the software can be free to use. However, participants need a device, an internet connection and some computer literacy, which potentially limits who can participate in the study. One qualitative study explored the role of dental hygienists in reducing oral health disparities in Canada. 22 The researcher conducted interviews using Skype, which enabled dental hygienists from across Canada to be interviewed within the research budget, accommodating the participants' schedules. 22

A less commonly used approach to qualitative interviews is the use of social virtual worlds. A qualitative study accessed a social virtual world – Second Life – to explore the health literacy skills of individuals who use social virtual worlds to access health information. 23 The researcher created an avatar and interview room, and undertook interviews with participants using voice and text methods. 23 This approach to recruitment and data collection enables individuals from diverse geographical locations to participate, while remaining anonymous if they wish. Furthermore, for interviews conducted using text methods, transcription of the interview is not required as the researcher can save the written conversation with the participant, with the participant's consent. However, the researcher and participant need to be familiar with how the social virtual world works to engage in an interview this way.

Conducting an interview

Ensuring informed consent before any interview is a fundamental aspect of the research process. Participants in research must be afforded autonomy and respect; consent should be informed and voluntary. 24 Individuals should have the opportunity to read an information sheet about the study, ask questions, understand how their data will be stored and used, and know that they are free to withdraw at any point without reprisal. The qualitative researcher should take written consent before undertaking the interview. In a face-to-face interview, this is straightforward: the researcher and participant both sign copies of the consent form, keeping one each. However, this approach is less straightforward when the researcher and participant do not meet in person. A recent protocol paper outlined an approach for taking consent for telephone interviews, which involved: audio recording the participant agreeing to each point on the consent form; the researcher signing the consent form and keeping a copy; and posting a copy to the participant. 25 This process could be replicated in other interview studies using digital methods.

There are advantages and disadvantages to using face-to-face and digital methods for research interviews. Ultimately, for both approaches, the quality of the interview is determined by the researcher. 16 Appropriate training and preparation are thus required. Healthcare professionals can use their interpersonal communication skills when undertaking a research interview, particularly questioning, listening and conversing. 3 However, the purpose of an interview is to gain information about the study topic, 26 rather than to offer help and advice. 3 The researcher therefore needs to listen attentively to participants, enabling them to describe their experience without interruption. 3 The use of active listening skills also helps to facilitate the interview. 14 Spradley outlined elements and strategies for research interviews, 27 which are a useful guide for qualitative researchers:

- Greeting and explaining the project/interview

- Asking descriptive (broad), structural (explore response to descriptive) and contrast (difference between) questions

- Asymmetry between the researcher and participant talking

- Expressing interest and cultural ignorance

- Repeating, restating and incorporating the participant's words when asking questions

- Creating hypothetical situations

- Asking friendly questions

- Knowing when to leave

For semi-structured interviews, a topic guide (also called an interview schedule) is used to guide the content of the interview; an example of a topic guide is outlined in Box 1. The topic guide, usually based on the research questions, existing literature and, for healthcare professionals, their clinical experience, is developed by the research team. The topic guide should include open-ended questions that elicit in-depth information and offer participants the opportunity to talk about issues important to them. This is vital in qualitative research, where the researcher is interested in exploring the experiences and perspectives of participants. It can be useful for qualitative researchers to pilot the topic guide with the first participants, 10 to ensure the questions are relevant and understandable, amending the questions if required.

Regardless of the medium of interview, the researcher must consider the setting of the interview. For face-to-face interviews, this could be in the participant's home, in an office or another mutually convenient location. A quiet location is preferable to promote confidentiality, enable the researcher and participant to concentrate on the conversation, and to facilitate accurate audio-recording of the interview. For interviews using digital methods the same principles apply: a quiet, private space where the researcher and participant feel comfortable and confident to participate in an interview.

Box 1: Example of a topic guide

Study focus: Parents' experiences of brushing their child's (aged 0–5) teeth

1. Can you tell me about your experience of cleaning your child's teeth?

How old was your child when you started cleaning their teeth?

Why did you start cleaning their teeth at that point?

How often do you brush their teeth?

What do you use to brush their teeth and why?

2. Could you explain how you find cleaning your child's teeth?

Do you find anything difficult?

What makes cleaning their teeth easier for you?

3. How has your experience of cleaning your child's teeth changed over time?

Has it become easier or harder?

Have you changed how often and how you clean their teeth? If so, why?

4. Could you describe how your child finds having their teeth cleaned?

What do they enjoy about having their teeth cleaned?

Is there anything they find upsetting about having their teeth cleaned?

5. Where do you look for information/advice about cleaning your child's teeth?

What did your health visitor tell you about cleaning your child's teeth? (If anything)

What has the dentist told you about caring for your child's teeth? (If visited)

Have any family members given you advice about how to clean your child's teeth? If so, what did they tell you? Did you follow their advice?

6. Is there anything else you would like to discuss about this?
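Because a semi-structured interview need not follow the topic guide in strict order, it can help to track which main questions have already been covered. The minimal Python sketch below assumes Box 1 is stored as a list of main questions, each with optional probes; the class `GuideItem` and function `uncovered` are hypothetical names, and the probes are abbreviated to one per question.

```python
from dataclasses import dataclass, field


@dataclass
class GuideItem:
    """One main question from a topic guide, with optional follow-up probes."""
    question: str
    probes: list[str] = field(default_factory=list)


# Box 1 encoded as data (probes abbreviated to the first of each list).
TOPIC_GUIDE = [
    GuideItem("Can you tell me about your experience of cleaning your child's teeth?",
              ["How old was your child when you started cleaning their teeth?"]),
    GuideItem("Could you explain how you find cleaning your child's teeth?",
              ["Do you find anything difficult?"]),
    GuideItem("How has your experience of cleaning your child's teeth changed over time?",
              ["Has it become easier or harder?"]),
    GuideItem("Could you describe how your child finds having their teeth cleaned?",
              ["What do they enjoy about having their teeth cleaned?"]),
    GuideItem("Where do you look for information/advice about cleaning your child's teeth?",
              ["What did your health visitor tell you about cleaning your child's teeth? (If anything)"]),
    GuideItem("Is there anything else you would like to discuss about this?"),
]


def uncovered(guide, covered):
    """Return (number, question) pairs for main questions not yet covered.

    `covered` is a set of 1-based question numbers, as numbered in Box 1.
    """
    return [(i, item.question)
            for i, item in enumerate(guide, start=1)
            if i not in covered]


# Midway through an interview in which questions 1, 2, 3 and 5 have come up:
for number, question in uncovered(TOPIC_GUIDE, {1, 2, 3, 5}):
    print(number, question)
```

Keeping the guide as data rather than as a fixed script leaves the order of the conversation to the participant while still showing the researcher what remains to be explored.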

Focus groups

A focus group is a moderated group discussion on a pre-defined topic, for research purposes. 28 , 29 While not aligned to a particular qualitative methodology (for example, grounded theory or phenomenology) as such, focus groups are used increasingly in healthcare research, as they are useful for exploring collective perspectives, attitudes, behaviours and experiences. Consequently, they can yield rich, in-depth data and illuminate agreement and inconsistencies 28 within and, where appropriate, between groups. Examples include public perceptions of dental implants and subsequent impact on help-seeking and decision making, 30 and general dental practitioners' views on patient safety in dentistry. 31

Focus groups can be used alone or in conjunction with other methods, such as interviews or observations, and can therefore help to confirm, extend or enrich understanding and provide alternative insights. 28 The social interaction between participants often results in lively discussion and can therefore facilitate the collection of rich, meaningful data. However, they are complex to organise and manage, due to the number of participants, and may also be inappropriate for exploring particularly sensitive issues that many participants may feel uncomfortable about discussing in a group environment.

Focus groups are primarily undertaken face-to-face but can now also be undertaken online, using appropriate technologies such as email, bulletin boards, online research communities, chat rooms, discussion forums, social media and video conferencing. 32 Using such technologies, data collection can be synchronous (for example, online discussions in 'real time') or, unlike traditional face-to-face focus groups, asynchronous (for example, online/email discussions in 'non-real time'). While many of the fundamental principles of focus group research are the same regardless of how they are conducted, a number of subtle nuances are associated with the online medium, 32 some of which are discussed further in the following sections.

Focus group considerations

Some key considerations associated with face-to-face focus groups are: how many participants are required; whether participants within each group should know each other (or not); and how many focus groups are needed within a single study. These issues are much debated and there is no definitive answer. However, the number of focus groups required will largely depend on the topic area, the depth and breadth of data needed, the desired level of participation 29 and the necessity (or not) for data saturation.
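The paragraph above leaves the notion of data saturation open. One way to operationalise it, which is not drawn from this paper, is a stopping rule: saturation is assumed once a set number of consecutive sessions (interviews or focus groups) contribute no new codes. A minimal Python sketch follows; the function name, the example codes and the run length of three are arbitrary assumptions, and such a rule supports rather than replaces the researcher's judgement.

```python
def saturation_reached_at(codes_per_session, run_length=3):
    """Return the 1-based session number at which `run_length` consecutive
    sessions have added no new codes, or None if that never happens."""
    if run_length < 1:
        raise ValueError("run_length must be at least 1")
    seen = set()
    run = 0  # consecutive sessions without a new code
    for session, codes in enumerate(codes_per_session, start=1):
        new_codes = set(codes) - seen
        seen |= new_codes
        run = 0 if new_codes else run + 1
        if run == run_length:
            return session
    return None


# Hypothetical codes identified in five successive focus groups.
sessions = [
    {"cost", "fear of pain"},
    {"fear of pain", "embarrassment"},
    {"cost"},
    {"embarrassment"},
    {"fear of pain"},
]
print(saturation_reached_at(sessions))  # prints 5
```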

The optimum group size is around six to eight participants (excluding researchers) but can work effectively with between three and 14 participants. 3 If the group is too small, it may limit discussion, but if it is too large, it may become disorganised and difficult to manage. It is, however, prudent to over-recruit for a focus group by approximately two to three participants, to allow for potential non-attenders. For many researchers, particularly novice researchers, group size may also be informed by pragmatic considerations, such as the type of study, resources available and moderator experience. 28 Similar size and mix considerations exist for online focus groups. Typically, synchronous online focus groups will have around three to eight participants but, as the discussion does not happen simultaneously, asynchronous groups may have as many as 10–30 participants. 33
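The sizing guidance above reduces to simple arithmetic. The minimal Python sketch below encodes the typical ranges quoted in the paragraph and adds a fixed over-recruitment buffer; the mode labels and the function name `plan_group` are hypothetical, and applying the same buffer to online groups is an assumption, since the paper discusses over-recruitment only in general terms.

```python
# Typical participants per group, as quoted above (excluding researchers).
TYPICAL_SIZE = {
    "face_to_face": (6, 8),          # optimum; 3 to 14 can still work
    "online_synchronous": (3, 8),
    "online_asynchronous": (10, 30),
}


def plan_group(mode, target, buffer=2):
    """Return (people_to_invite, target_is_typical) for one focus group.

    `buffer` is the number of extra participants recruited to allow for
    non-attenders (two to three is suggested for face-to-face groups).
    """
    if buffer < 0:
        raise ValueError("buffer cannot be negative")
    low, high = TYPICAL_SIZE[mode]
    return target + buffer, low <= target <= high


print(plan_group("face_to_face", 7))             # aim for 7, invite 9
print(plan_group("online_synchronous", 12, 3))   # 12 exceeds the typical 3 to 8
```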

The topic area and potential group interaction should guide group composition considerations. Pre-existing groups, where participants know each other (for example, work colleagues) may be easier to recruit, have shared experiences and may enjoy a familiarity, which facilitates discussion and/or the ability to challenge each other courteously. 3 However, if there is a potential power imbalance within the group or if existing group norms and hierarchies may adversely affect the ability of participants to speak freely, then 'stranger groups' (that is, where participants do not already know each other) may be more appropriate. 34 , 35

Focus group management

Face-to-face focus groups should normally be conducted by two researchers: a moderator and an observer. 28 The moderator facilitates group discussion, while the observer typically monitors group dynamics, behaviours, non-verbal cues, seating arrangements and speaking order, which are essential for transcription and analysis. The same principles of informed consent, as discussed in the interview section, also apply to focus groups, regardless of medium. However, the consent process for online discussions will probably be managed somewhat differently. For example, while an appropriate participant information leaflet (and consent form) would still be required, the process is likely to be managed electronically (for example, via email) and would need to specifically address issues relating to technology (for example, anonymity and use, storage and access to online data). 32

The venue in which a face-to-face focus group is conducted should be of a suitable size, private, quiet, free from distractions and in a collectively convenient location. It should also be conducted at a time appropriate for participants, 28 as this is likely to promote attendance. As with interviews, the same ethical considerations apply (as discussed earlier). However, online focus groups may present additional ethical challenges associated with issues such as informed consent, appropriate access and secure data storage. Further guidance can be found elsewhere. 8 , 32

Before the focus group commences, the researchers should establish rapport with participants, as this will help to put them at ease and result in a more meaningful discussion. Consequently, researchers should introduce themselves, provide further clarity about the study and how the process will work in practice and outline the 'ground rules'. Ground rules are designed to assist, not hinder, group discussion and typically include: 3 , 28 , 29

- Discussions within the group are confidential to the group

- Only one person can speak at a time

- All participants should have sufficient opportunity to contribute

- There should be no unnecessary interruptions while someone is speaking

- Everyone can expect to be listened to and to have their views respected

- Challenging contrary opinions is appropriate, but ridiculing is not

Moderating a focus group requires considered management and good interpersonal skills to help guide the discussion and, where appropriate, keep it sufficiently focused. Moderators should therefore avoid participating, leading, expressing personal opinions or correcting participants' knowledge 3 , 28 as this may bias the process. A relaxed, interested demeanour will also help participants to feel comfortable and promote candid discourse. Moderators should also prevent the discussion from being dominated by any one person, ensure differences of opinion are discussed fairly and, if required, encourage reticent participants to contribute. 3 Asking open questions, reflecting on significant issues, inviting further debate, probing responses and seeking further clarification, as and where appropriate, will help to obtain sufficient depth and insight into the topic area.

Moderating online focus groups requires comparable skills, particularly if the discussion is synchronous, as the discussion may be dominated by those who can type proficiently. 36 It is therefore important that sufficient time and respect are accorded to those who may not be able to type as quickly. Asynchronous discussions are usually less problematic in this respect, as interactions are less instant. However, moderating an asynchronous discussion presents additional challenges, particularly if participants are geographically dispersed, as they may be online at different times. Consequently, the moderator will not always be present and the discussion may need to take place over several days, which can be difficult to manage and facilitate and invariably requires considerable flexibility. 32 It is also worth recognising that establishing rapport with participants via an online medium is often more challenging than face-to-face and may therefore require additional time, skills, effort and consideration.

As with research interviews, focus groups should be guided by an appropriate interview schedule, as discussed earlier in the paper. For example, the schedule will usually be informed by the review of the literature and study aims, and will merely provide a topic guide to help inform subsequent discussions. To provide a verbatim account of the discussion, focus groups must be recorded using an audio-recorder with a good-quality multi-directional microphone. While videotaping is possible, some participants may find it obtrusive, 3 which may adversely affect group dynamics. The use (or not) of a video recorder should therefore be carefully considered.

At the end of the focus group, a few minutes should be spent rounding up and reflecting on the discussion. 28 Depending on the topic area, it is possible that some participants may have revealed deeply personal issues and may therefore require further help and support, such as a constructive debrief or possibly even referral on to a relevant third party. It is also possible that some participants may feel that the discussion did not adequately reflect their views and, consequently, may no longer wish to be associated with the study. 28 Such occurrences are likely to be uncommon, but should they arise, it is important to further discuss any concerns and, if appropriate, offer them the opportunity to withdraw (including any data relating to them) from the study. Immediately after the discussion, researchers should compile notes regarding thoughts and ideas about the focus group, which can assist with data analysis and, if appropriate, any further data collection.

Qualitative research is increasingly being utilised within dental research to explore the experiences, perspectives, motivations and beliefs of participants. The contributions of qualitative research to evidence-based practice are increasingly being recognised, both as standalone research and as part of larger mixed-method studies, including clinical trials. Interviews and focus groups remain commonly used data collection methods in qualitative research, and with the advent of digital technologies, their utilisation continues to evolve. Digital methods of qualitative data collection present additional methodological, ethical and practical considerations, but they also potentially offer considerable flexibility to participants and researchers. Regardless of format, qualitative methods have significant potential to inform important areas of dental practice, policy and further related research.

Gussy M, Dickson-Swift V, Adams J. A scoping review of qualitative research in peer-reviewed dental publications. Int J Dent Hygiene 2013; 11: 174–179.

Burnard P, Gill P, Stewart K, Treasure E, Chadwick B. Analysing and presenting qualitative data. Br Dent J 2008; 204: 429–432.

Gill P, Stewart K, Treasure E, Chadwick B. Methods of data collection in qualitative research: interviews and focus groups. Br Dent J 2008; 204: 291–295.

Gill P, Stewart K, Treasure E, Chadwick B. Conducting qualitative interviews with school children in dental research. Br Dent J 2008; 204: 371–374.

Stewart K, Gill P, Chadwick B, Treasure E. Qualitative research in dentistry. Br Dent J 2008; 204: 235–239.

Masood M, Thaliath E, Bower E, Newton J. An appraisal of the quality of published qualitative dental research. Community Dent Oral Epidemiol 2011; 39: 193–203.

Ellis J, Levine A, Bedos C et al. Refusal of implant supported mandibular overdentures by elderly patients. Gerodontology 2011; 28: 62–68.

Macfarlane S, Bucknall T. Digital Technologies in Research. In Gerrish K, Lathlean J (editors) The Research Process in Nursing. 7th edition. pp. 71–86. Oxford: Wiley Blackwell, 2015.

Lee R, Fielding N, Blank G. Online Research Methods in the Social Sciences: An Editorial Introduction. In Fielding N, Lee R, Blank G (editors) The Sage Handbook of Online Research Methods. pp. 3–16. London: Sage Publications, 2016.

Creswell J. Qualitative inquiry and research design: Choosing among five designs. Thousand Oaks, CA: Sage, 1998.

Guest G, Namey E, Mitchell M. Qualitative research: Defining and designing. In Guest G, Namey E, Mitchell M (editors) Collecting Qualitative Data: A Field Manual For Applied Research. pp. 1–40. London: Sage Publications, 2013.

Pope C, Mays N. Qualitative research: Reaching the parts other methods cannot reach: an introduction to qualitative methods in health and health services research. BMJ 1995; 311: 42–45.

Giddings L, Grant B. A Trojan Horse for positivism? A critique of mixed methods research. Adv Nurs Sci 2007; 30: 52–60.

Hammersley M, Atkinson P. Ethnography: Principles in Practice. London: Routledge, 1995.

Oltmann S. Qualitative interviews: A methodological discussion of the interviewer and respondent contexts. Forum Qualitative Sozialforschung/Forum: Qualitative Social Research 2016; 17: Art. 15.

Patton M. Qualitative Research and Evaluation Methods. Thousand Oaks, CA: Sage, 2002.

Wang M, Vinall-Collier K, Csikar J, Douglas G. A qualitative study of patients' views of techniques to reduce dental anxiety. J Dent 2017; 66: 45–51.

Lindenmeyer A, Bowyer V, Roscoe J, Dale J, Sutcliffe P. Oral health awareness and care preferences in patients with diabetes: a qualitative study. Fam Pract 2013; 30: 113–118.

Gallagher J, Clarke W, Wilson N. Understanding the motivation: a qualitative study of dental students' choice of professional career. Eur J Dent Educ 2008; 12: 89–98.

Tod A. Interviewing. In Gerrish K, Lacey A (editors) The Research Process in Nursing. Oxford: Blackwell Publishing, 2006.

Grey E, Harcourt D, O'Sullivan D, Buchanan H, Kilpatrick N. A qualitative study of patients' motivations and expectations for dental implants. Br Dent J 2013; 214: 10.1038/sj.bdj.2012.1178.

Farmer J, Peressini S, Lawrence H. Exploring the role of the dental hygienist in reducing oral health disparities in Canada: A qualitative study. Int J Dent Hygiene 2017; 10.1111/idh.12276.

McElhinney E, Cheater F, Kidd L. Undertaking qualitative health research in social virtual worlds. J Adv Nurs 2013; 70: 1267–1275.

Health Research Authority. UK Policy Framework for Health and Social Care Research. Available at https://www.hra.nhs.uk/planning-and-improving-research/policies-standards-legislation/uk-policy-framework-health-social-care-research/ (accessed September 2017).

Baillie J, Gill P, Courtenay P. Knowledge, understanding and experiences of peritonitis among patients, and their families, undertaking peritoneal dialysis: A mixed methods study protocol. J Adv Nurs 2017; 10.1111/jan.13400.

Kvale S. Interviews. Thousand Oaks, CA: Sage, 1996.

Spradley J. The Ethnographic Interview. New York: Holt, Rinehart and Winston, 1979.

Goodman C, Evans C. Focus Groups. In Gerrish K, Lathlean J (editors) The Research Process in Nursing. pp. 401–412. Oxford: Wiley Blackwell, 2015.

Shaha M, Wenzell J, Hill E. Planning and conducting focus group research with nurses. Nurse Res 2011; 18: 77–87.

Wang G, Gao X, Edward C. Public perception of dental implants: a qualitative study. J Dent 2015; 43: 798–805.

Bailey E. Contemporary views of dental practitioners' on patient safety. Br Dent J 2015; 219: 535–540.

Abrams K, Gaiser T. Online Focus Groups. In Fielding N, Lee R, Blank G (editors) The Sage Handbook of Online Research Methods. pp. 435–450. London: Sage Publications, 2016.

Poynter R. The Handbook of Online and Social Media Research. West Sussex: John Wiley & Sons, 2010.

Kevern J, Webb C. Focus groups as a tool for critical social research in nurse education. Nurse Educ Today 2001; 21: 323–333.

Kitzinger J, Barbour R. Introduction: The Challenge and Promise of Focus Groups. In Barbour R S, Kitzinger J (editors) Developing Focus Group Research. pp. 1–20. London: Sage Publications, 1999.

Krueger R, Casey M. Focus Groups: A Practical Guide for Applied Research. 4th ed. Thousand Oaks, CA: SAGE, 2009.

Author information

Authors and affiliations.

Senior Lecturer (Adult Nursing), School of Healthcare Sciences, Cardiff University,

Lecturer (Adult Nursing) and RCBC Wales Postdoctoral Research Fellow, School of Healthcare Sciences, Cardiff University,

Corresponding author

Correspondence to P. Gill.

About this article

Cite this article.

Gill, P., Baillie, J. Interviews and focus groups in qualitative research: an update for the digital age. Br Dent J 225, 668–672 (2018). https://doi.org/10.1038/sj.bdj.2018.815

Accepted: 02 July 2018

Published: 05 October 2018

Issue Date: 12 October 2018

DOI: https://doi.org/10.1038/sj.bdj.2018.815

Interviews vs. Surveys

In the red corner, weighing in at a hefty time commitment and a massive transcription job, we have… INTERVIEWS!

In the blue corner, weighing in at a stack of paper and variable data quality, we have… SURVEYS!

In the battle of the data collection methods, surveys and interviews both pack quite a punch. Both can help you figure out what your human participants are thinking: how they make decisions, how they behave, and what they believe. Traditionally, both involve questions (which you ask as the researcher) and answers (which your participants contribute). But despite their similarities, surveys and interviews can yield very different results.

One of the most crucial decisions to make before your data collection is which method/s to use. The last thing you want to do is get halfway through interviewing 50 people, only to realise that you really should have surveyed them instead.

Here are a few differences to think about as you consider which data collection method is best for your research.

About Anaise Irvine

2 thoughts on “Interviews vs. Surveys”

This is great – I’d also like to add my 2c. Surveys are very useful if you already know what is important and interesting and can express it understandably, and interviews are a great way of getting that information. In my experience, a big problem with surveys is that they can be designed in such a way as to completely put off the participant (asking questions which are basically your constructs: “do you think the use of mobile technology is moderately influenced by your gender?”), but also to miss the opportunity for surprise (“who buys the groceries, you, your partner, your parents?” seems to exclude “actually we dumpster dive for everything in my house”). I would say the key advantage of surveys is if you are looking for differences between groups, but at least some interviews first can really firm up the questions.

Good point Dave. The question-setting is absolutely crucial.

When Should I Use One-on-One Interviews Over A Survey?

The 1,2,3’s Of Knowing When to Use Qualitative Over Quantitative Research

by David Cristofaro

May 4, 2017 3:44:13 PM

In countless meetings and conference calls to discuss potential research projects, one of the most common questions I receive is, “Should I do focus groups or a survey for this project?” or, “Are interviews better than focus groups?”

The right answer isn’t always clear. Even after performing research for nearly 20 years, more questions nearly always precede my answer.

First, I will add that many studies in fact combine qualitative and quantitative techniques, and the two should be combined more often than they are in practice. More importantly, there are reasons for using them in varying order (qual-then-quant versus quant-then-qual). We will discuss this in a later article.

Often, a research effort will not justify a budget large enough to perform both methods, and as a result it becomes important to choose one component of the research and invest all of the available resources in that study. Sadly, this happens, and sometimes it is unavoidable, either because there are not enough respondents to project to the audience at large or, in many cases, because it is less costly to tolerate the risk than to conduct the second leg of the research.

In my experience, the answer to the question “What research technique should I use?” centers on the answers to the following set of questions:

- Can you provide a list of potential answers to each question you would like to ask your audience? Do you think you know most of the important potential answers?

- Is there a story you are looking for, or a narrative to a set of circumstances or events?

- Are you seeking to describe behavior across a large population? Is “statistical significance” an essential requirement of the research?

Qualitative research is a critical competency for any company’s product development and marketing processes. Because it is indispensable, it needs to be conducted either by qualified professional market researchers or by well-trained, experienced internal team members. As we begin this discussion, it is important to recognize that what follows is offered only as a guide to distinguishing qualitative from quantitative research projects at a macro level.

Can you provide a list of potential answers to each question you would like to ask your audience? Do you think you know most of the important potential answers?

Sometimes, when researching a topic, you may know the general questions you would like to ask your audience, but not the range of answers you may receive, or which of these are likely to come up most often. If this is the case, an exploratory qualitative research study is right for you.

The good news is that it is usually fairly easy to know when this method is best: when you can’t easily think of the answers to the questions you would like to pose yourself, or when convening a group of peers from your own department unearths little more. At best, there may be answers, but there is little agreement as to the range of options.

Is there a story you are looking for, or a narrative to a set of circumstances or events?

You may be looking to hear more about a decision-making pathway, a customer journey, or a series of events in the lives of your customers. In these cases, a survey is a very challenging tool to use.

Using a survey to gather this information requires you to lay out, in advance, all of the outcomes that were possible at each important juncture, and that may be difficult. This is where the qualitative interview shines: it gives you the opportunity to hear, and probe on, stories or narratives.

Persona Research is an Excellent Example

We recently finished a series on persona development, including definitions and an approach to articulating the personas required for a given audience.

In this case, while segmentation research is very useful in the lead-up to developing personas, the critical intelligence involves a narrative or story. This is extremely difficult to capture in a survey, beyond verifying the consistency of a pattern observed in the persona interviews. Therefore, if you are looking to gather story components and stitch together narratives for different personas, individuals, or product use cases, the qualitative study is for you.

Are you seeking to describe behavior across a larger population? Is “statistical significance” an important proof source for your research results?

When it comes to ensuring a research result is projectable to the population at large, it is worth noting that most research is meant to understand the behaviors of a group or segment of a population through “sampling”, that is, debriefing a small group of respondents who represent the larger group. Sometimes, however, projectability is an imperative: it is required by senior leadership, or by an internal gating process.

In these cases, you need a survey. Right?

But what if you don’t know enough to write a survey, yet still need statistically significant results?

More than likely, your research will be a two-step process. Ensuring you have statistically significant results will require you to set up your research more formally, and to decide more carefully how many respondents to sample in each subgroup you need to describe. This means that if you have three groups and you need to know how each behaves independently of the others, you need a large enough sample in each group to detect these differences. If you are seeking to describe the differences in behaviors between the three groups, then you can get away with a smaller sample overall.

Without going into the details of why (it is not too complex a problem, but it is beyond the scope of this article), statistical significance is usually attained through larger samples of respondents than are practical for qualitative, discussion-oriented studies. But investing in even a very short series of one-on-one interviews before fielding a survey offers important advantages and will yield much more complete and reliable quantitative research.
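To put rough numbers on why surveys need larger samples than interview studies, and why sizing each subgroup separately adds up quickly, here is a minimal Python sketch of one standard approach: Cochran's formula for estimating a proportion, n = z²p(1 − p)/e². The article does not say which method it has in mind, so this choice is an illustrative assumption, as are the defaults: simple random sampling from a large population (no finite-population correction), 95% confidence (z = 1.96) and the most conservative proportion, p = 0.5. The function names are hypothetical. Testing whether groups differ from one another is a separate calculation (a power analysis) and is not covered here.

```python
import math


def sample_size_for_proportion(margin_of_error: float, z: float = 1.96, p: float = 0.5) -> int:
    """Respondents needed to estimate a proportion within +/- margin_of_error.

    Cochran's formula n = z^2 * p * (1 - p) / e^2. Assumes simple random
    sampling from a large population (no finite-population correction).
    p = 0.5 is the most conservative choice: it gives the largest n.
    """
    n = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    return math.ceil(n)  # round up: you cannot survey a fraction of a person


def total_sample_for_subgroups(num_groups: int, margin_of_error: float) -> int:
    """Hypothetical helper: size every subgroup independently, then sum."""
    return num_groups * sample_size_for_proportion(margin_of_error)


if __name__ == "__main__":
    # +/-5 percentage points at 95% confidence, per subgroup
    print("per group:", sample_size_for_proportion(0.05))              # per group: 385
    # three subgroups, each described on its own
    print("three groups:", total_sample_for_subgroups(3, 0.05))       # three groups: 1155
    # a looser +/-10 point margin per subgroup
    print("per group at +/-10:", sample_size_for_proportion(0.10))    # per group at +/-10: 97
```

Under these assumptions, each independently described subgroup needs 385 respondents for a ±5-point margin of error, so three groups need 1,155 in total, well beyond what a discussion-oriented qualitative study can practically reach. Loosening the margin to ±10 points brings the per-group figure down to 97.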

Need help deciding how to do your next research project? Let Actionable Research offer you some experience-grounded assistance. We’ll be happy to discuss your research goals and identify a cost-effective solution that will provide you with Actionable results.

Get in touch.

Actionable Research, Inc.

120 Vantis Dr. Suite 300, Aliso Viejo, CA 92656, 949 453 7911

www.actionable.com

Interviews, focus groups, or surveys: which should you use?

How does your brand resonate with your participants? Sarah Durham and Big Duck’s Senior Strategist Laura Fisher discuss the ins and outs of interviews, focus groups, and surveys. Learn how you can conduct your own research, make your focus groups more diverse, and how to get more accurate responses.

Sarah Durham: Welcome back to the Smart Communications Podcast. I’m Sarah Durham and I’m joined today by Laura Fisher, who’s a Senior Strategist here at Big Duck. Welcome back, Laura.

Laura Fisher: Thanks for having me.

Sarah Durham: For those of you who’ve been listening to this podcast for a while, Laura has been on the show a few times. Most recently we recorded a podcast about how doing interviews can help you get better insights, and we thought today we would kind of expand on that and on another article Laura wrote on our blog, called “Interviews, focus groups and surveys: three research methods to help you understand audiences”. We’re going to unpack that in a little more detail today so that if you’re doing your own research, you’ve got a few more tips and tricks up your sleeve. So let’s dig in. Before we talk about these methodologies, let’s talk a little bit about context. Why would a nonprofit communicator or other person need to do this kind of research?

Laura Fisher: We typically see research being most useful at two different phases in a project. The first is at the beginning of a project, when you’re just starting out and trying to learn a bit about your audiences. From a communication standpoint, your audiences, their motivations and their perspectives are going to be key to whatever it is you’re trying to do. So if you’re launching a campaign or going through a rebrand, talking to audiences and doing some research among them before you begin can help jumpstart that project and get their perspectives. The second place it might be helpful is midway through a project, using research as a form of testing. We see this with organizations a lot. If they are testing a new brand (maybe a name, a logo or a tagline) or testing a concept or a theme for a campaign, putting it in front of their target audiences to get feedback can help them make decisions and understand whether the new branding or campaign concept is going to resonate with the people they’re trying to reach.

Sarah Durham: Okay. So let’s talk about the three different types of research you map out in this blog post. And by the way, we’ll link to this post and also to the podcast about conducting interviews in the show notes. But walk us through, at a high level, what you talk about in this article and the contexts in which each of these methodologies might be most useful.

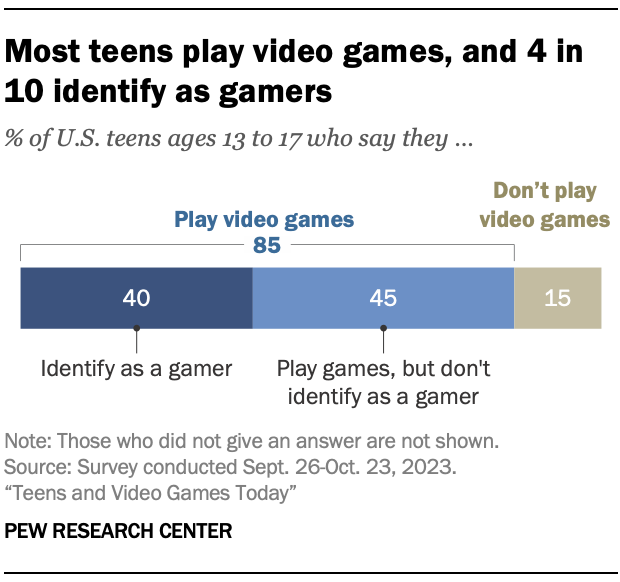

Laura Fisher: The three methodologies I lay out are interviews, focus groups, and surveys. Interviews and focus groups are both what we call qualitative research, which means you’re really digging into the perceptions, motivations and feelings of an audience member, as opposed to something more quantitative, which might be numerical data. So with interviews and focus groups, you’re having more individual conversations. An interview, for example, is an exploratory conversation with one person from the audience group you’re trying to get to know. These come in handy if you are trying to understand an experience, motivation or behavior that might have connected someone to your organization: maybe understanding why they donate or why they volunteer. A focus group is pretty similar; it’s just with a larger group, typically five to eight people, all with a common connection to your organization. You can use focus groups for similar reasons, to really unpack motivations, perspectives and experiences. And in a focus group you can really see themes emerge in real time, because multiple people are talking to you about the same topic, and you can see trends over time. Surveys are typically most helpful when you’re trying to ask a lot of questions of a large number of people. You can ask, you know, up to 20–25 questions in a survey of a list of thousands and thousands of people and cover a lot of topics. So while with interviews and focus groups you might be digging into a few topics very deeply, in a survey you could talk about your communications, your brand, and more across a number of questions and really dig into various perspectives on your communications. So we tend to think of interviews and focus groups, qualitative research, as the way to dig into a topic in a meaty way, and surveys as a more high-level way to get a lot of insights very quickly.

Sarah Durham: So, we’ll talk through a couple of examples and places where these might be more or less useful for you, but before we do that, I want to ask you a question that I imagine some of the people listening to this are going to have: can I really do my own interviews or my own focus groups? I’m sure a lot of people have seen TV shows where focus groups are run by professional facilitators with two-way mirrors and all of that. If somebody is not an expert researcher, can they do this on their own?

Laura Fisher: Definitely. I think there are a ton of resources out there for creating a research process on your own, and it really is as simple as writing some objective, non-leading questions, getting the right people in a room and asking those questions. Because you work in the organization, you as the researcher will have to play a more objective role than you might typically. So you should be approaching it from a purely research standpoint, not letting your role at the organization get in the way of asking clear and objective questions. But for many of our nonprofit clients, time, capacity and budget are constraints, and there’s no reason that you, the communicator, cannot conduct some of the research on your own. You could also consider having someone else at your organization who does not work in communications and doesn’t regularly interact with donors or volunteers conduct the research on your behalf, if that helps you feel like you’re getting more objective opinions.

Sarah Durham: Yeah, I agree. I think DIY research is a little bit like exercising: it’s better to do some rather than none, and if you can’t afford to hire a pro or you don’t have a volunteer who’s really an expert, it’s definitely better to do what you can on your own than skip it. There is also a little bit of jargon that you’ll see come up when you start to search for interviews: a lot of times professional researchers will call interviews IDIs, in-depth interviews, which I’ve always thought was a little bit of malarkey because it’s really just an interview.

Laura Fisher: That’s true.

Sarah Durham: A lot of the interviews we do here are just done by phone, and they’re, you know, half an hour long, maybe an hour maximum, and developed with a facilitator’s guide. So to your point, questions are developed in advance and written to be non-leading, and the sort of ground rules for the conversation, like what is or isn’t going to be confidential, whether or not you’re taking notes, and who’s going to see those notes, are also good to think through in advance. Right?

Laura Fisher: Definitely. I think having a script is really key to interviews and focus groups because, for one, it gives you a guide and talking points to start a conversation and make sure everyone feels comfortable having that conversation, and two, following a script, especially if you’re doing a number of interviews, can help to eliminate bias and make sure you’re asking everybody the same questions and giving everyone a chance to weigh in on the same topics.

Sarah Durham: And I think we dug into that a little bit in the other podcast we’ve got, so if you’re about to embark on a lot of interviews, that will be good to listen to, and we’ll link to it again. Before we move past interviews, let’s talk about an example or two of where interviews are handy or where you’ve found that they were the right type of research.

Laura Fisher: As I said before, interviews are especially helpful for unpacking motivations and experiences and really understanding why the connection someone has with your organization is important and powerful. So I have found them particularly useful in our messaging work. For example, we’re working right now with a rare disease organization on crafting new donor messaging. In that process we’re talking to 25 different donors to hear about their connection to the organization: why they give, what parts of the mission they care most about, where else they give, and why they might give to other organizations, to really unpack who that person is and what their experiences and motivations are for giving to the organization. We then find themes across all 25 interviews to craft messaging that the organization can use with a much larger donor audience. So in that case, interviews are really helpful for digging into a specific topic and action, which is giving.

Sarah Durham: And why would you do a focus group instead of interviews?

Laura Fisher: Especially in the context of donating, having a number of people in a room talking about why they give can sometimes make people uncomfortable; they prefer one-on-one conversations. So I find focus groups more useful when you’re talking about a nonsensitive topic or when you’re testing an identity of some sort. One example of when we’ve used focus groups is with an education organization doing an awareness campaign. They had a campaign theme, a hook, which was messaging plus a visual application; we gave them some options, and they really wanted to hear from the people it was going to be put in front of to see which resonated most. So we did a focus group where we showed those concepts to a group of about six people and got their feedback in real time: what they liked, what messaging resonated, what visuals resonated, that sort of thing. We could then use that to help the organization decide which one to move forward with more broadly. In that case it was especially helpful because we had work to put in front of a few people, they could react in real time, and we could build on the conversations and perspectives people brought after they saw actual visual and written work play out. So focus groups have been particularly helpful in those testing contexts.

Sarah Durham: Yes, that’s a great example of a testing context. I can think of one from when we were collaborating with a strategic planning firm. We were doing a strategic plan together, and we ran some focus groups for a Brooklyn-based arts organization, with artists in different communities. Again, it’s not a sensitive topic for people to get together and share their feelings about the organization or about the work. That said, focus groups do have a dynamic, whether groupthink or group dynamics more generally, that you have to manage. So what comes up in the group dynamic?

Laura Fisher: It is true that when you have a group of people together talking about anything, people are going to build on each other’s perspectives and may be biased by the conversation that happened before they speak. That might mean something as simple as someone saying, you know, I feel the same way as the person who spoke before me, or not sharing an opinion at all because they’re in a room with a lot of people. One way we combat that in focus groups is by making sure everyone has an alternative way to share their feedback. We often hand out a piece of paper, or, if it’s a virtual focus group, we share our email addresses, things like that. So if someone feels more comfortable sharing in writing, or wants to add something they didn’t feel comfortable saying out loud, they can do so in writing and share it privately. That’s a quick way to make focus groups a little more inclusive and to combat some of the groupthink or shyness that can happen in group settings.

Sarah Durham: You can also set some norms at the beginning of the focus group about hearing all voices, or perhaps ask, you know, very loud and dominant personalities to take a break and let somebody else talk. I’ve seen that be a bit of a wildcard. I guess it just depends on who’s in the room. Okay. So let’s talk a little bit about surveys. What’s an example of a context or two when a survey is particularly helpful?

Laura Fisher: As I said before, surveys are especially helpful when you have a lot to ask of a large number of people. For example, we were doing a brand and communications study with another health organization. They had a list of, you know, 50,000 to 100,000 people whose communications preferences, views, and perceptions of the brand they hadn’t heard in a long time. So we did a full assessment of their brand and their communications, and a big piece of that was a long survey with many questions about both, everything from “Which statement about our organization is most motivating?” to “Where do you like to receive your communications?” We got to ask a long list of questions of a lot of people whose perspectives the organization hadn’t heard in quite a long time, and then synthesize, at a very high level, the themes that emerged across their email list. So while interviews might have given us more in-depth information about fewer people, we got a snapshot of tens of thousands of people that they hadn’t been able to see in a long time. If you feel like you don’t really know who is on your email list, or you have no idea which channels people prefer to hear from you on, a survey can be a really good way to get a lot of information about a lot of people very quickly.

Sarah Durham: It’s also really helpful to see the kind of pie chart that quantitative data gives you, showing the percentages of people who responded a certain way. Before we started recording, Laura and I were remembering a project we worked on years ago where we actually used a survey to test two different logos a client was considering, and I was very skeptical of that. I thought applying something quantitative to something as emotional as a logo would be problematic, but it worked really well. The organization was undecided, so we sent an email to their alumni, which was a very large list, asking them to weigh in on the two visual directions, and there was a clear winner. There was also an open-ended field for comments, and people shared a lot of them. That was a lot of work for the people synthesizing the insights to sift through, but it really helped make sure the organization got input from a very broad range of people in its community. So let’s say you’ve done your interviews or your focus groups or your surveys, or maybe some combination of those. We frequently use multiple research modalities, depending on the project. How do you synthesize those insights, and how do you work with or overcome the biases of whoever is actually conducting the research?

Laura Fisher: Synthesis takes time, and how much time depends a lot on the research methodology you use. With surveys, as Sarah said, you get to see pie charts and percentages, and they can be a bit easier to analyze because you get a quicker snapshot of how everyone feels about every question you asked. For interviews and focus groups, we tend to take very diligent notes, or even record them, and then sift through them. The way we usually do it is to have one person go through the notes once and collect themes, then go back through them again, adding to the themes they’ve already collected. Then a second person reviews those notes, identifies their own themes, and pushes back on themes they didn’t see highlighted as much as the first person did. Having two synthesizers review the material helps make sure the first reviewer isn’t bringing their own biases to which themes emerge, so the results more objectively reflect the themes that actually came up most often in the notes and recordings of the interviews and focus groups. It’s a great way to counter one person’s bias by layering in a second researcher to work with you.

Sarah Durham: Yeah, I have seen that be really powerful and transformative in our work in two ways. The first is that two different people do see or hear different themes and ideas. But also, if you conducted those focus groups or interviews yourself, you start to form ideas and insights as you go, and when you try to synthesize them it is very easy to reveal your own preferences and start building the case you want to build. What I think is really powerful about having somebody else review and synthesize them is that you know your work is going to be checked, so you hold that tendency at bay a bit more. But also, the people you present those insights or findings to tend to believe them a little more, because it isn’t just, you know, Laura did all this research and therefore she thinks X and it’s all about her. It’s a bit more objective, or at least it’s been pressure tested, and I think that’s particularly useful if you’re presenting the research to your executive director, your board, or a funder, where it may need to be more rigorous and less personal. There’s another step that I see you and your colleagues on our strategy team do, too, that I think is worth elevating when you’re doing your own research in house at a nonprofit: after the research is done, and before you get into recommendations, sharing back the synthesized insights. So when we do research, I think you often have a first presentation, which is a kind of this-is-what-we-heard synthesis, and then a second presentation with the recommendations. Is that correct?

Laura Fisher: That’s correct. We tend to do all of the research over several months and come back with a set of findings and insights, which are typically a synthesis of the themes we saw and a little bit about what we think they might mean. We’re not jumping to “this means you need to start sending 500 emails this year,” or whatever it may be. It would be something more like “your list doesn’t feel like they hear from you enough.” So it’s that middle step of reporting back on what we heard and adding our own layer of communications insight. We like to do that because, to Sarah’s point earlier, when the recommendations do come around, they make a lot more sense. It’s about taking someone on that research journey with you. If we conducted 25 interviews and sent a survey to 50,000 people, you’re going to want to hear the results of that. Oftentimes the clients we work with are hearing directly from their audiences for the first time in a while. So we find that the step of checking in and sharing back what we heard can be really powerful for the organization and can help them even beyond communications. Sometimes the quotes they see or the information they get from the survey help them in other parts of the organization as well, not just in the communications and marketing they’re doing.

Sarah Durham: It’s also a nice way, I think, to highlight the difference between strategies and tactics. If you jump right from doing research into making recommendations, those recommendations are often tactical, like “send more email.” But when you stop to say “your audiences want to hear from you more,” there are many strategies to solve that problem. Email might be one tactic you could use, but there may be multiple ways to do it. So it’s a nice way to mark the journey of how you arrive at those recommendations and the tactics that might emerge from them. There’s another piece that comes up a lot, which we try to layer very proactively into our work and want you to think about too: how to make sure the research you conduct is inclusive and equitable. Ally Dommu, Big Duck’s Director of Strategy, wrote a blog post about that, which we’re going to link to in the show notes. But Laura, this is something you have a lot of practices around. What tips or tools do you think are useful to bear in mind to make sure the research process is inclusive and equitable?

Laura Fisher: For us, I think bringing inclusivity and equity into the research process is all about the voices you seek out and center. That might look a couple of different ways. It might mean asking, how do I make this as equitable as possible? You’re not just hearing from board members. You’re hearing from volunteers, from program participants, from a number of different people who engage with your organization in different ways, not just those who might have influence, so that you’re hearing from all different voices. Similarly, it means making sure you’re listening to a diversity of voices, including demographic diversity. We try to use things like screener surveys before we do interviews or focus groups, to cast a wide net and collect a number of people with different connections to the organization and different demographic makeups. That helps us identify the people we want in a focus group or an interview and make sure that, as much as we can, we’re talking to a diverse set of people with a diverse set of connections to your organization. That’s not always possible, and sometimes our clients, you know, aspirationally want a more diverse email list or something like that, so we try to help clients seek that out as well. And I think it’s helpful, when you’re embarking on a research process, to think about how you can be representative not only of what your current board or current email list looks like, but also of what you might aspirationally be looking for.

Sarah Durham: There are probably millions of resources that come up if you Google things like how to do focus groups or how to do interviews. One resource we like a lot here is a book called Just Enough Research, which you can buy on Amazon. I can’t remember the author’s name, but we’ll try to link to it in the show notes. If you’re trying to do your own research, Just Enough Research is a very handy book, and it talks about some of the things we’re discussing today. Are there any other parting tips or tricks you want to elevate?

Laura Fisher: I would just add a resource I use a lot: a SurveyMonkey guide to writing good survey questions, for anyone who hasn’t written a survey before or hasn’t in a very long time. It does a great job of explaining how to use different question types and how to make sure you’re writing objective questions. That kind of information can also be useful for writing an interview script or a focus group guide, so we can link to that as well. It’s a very helpful starter kit for embarking on a survey.

Sarah Durham: Great. One of the insights emerging for me as I listen to you talk is that when you spend a lot of time doing research for a living, as you do, you build a network of tools, resources, and skills. Those let you build your confidence and feel more certain that the research you’re doing is done well and is as valid as it can be. But you don’t have to go that deep, right? It’s better to do some research and do your best, and for an in-house person with limited time, just being thoughtful and methodical is probably the first and most important place to begin.