10 Best Literature Review Tools for Researchers

Boost your research game with these Best Literature Review Tools for Researchers! Uncover hidden gems, organize your findings, and ace your next research paper!

Researchers struggle to identify key sources, extract relevant information, and maintain accuracy while manually conducting literature reviews. This leads to inefficiency, errors, and difficulty in identifying gaps or trends in existing literature.


Top 10 Literature Review Tools for Researchers: In A Nutshell (2023)

| 1. | Semantic Scholar | Researchers to access and analyze scholarly literature, particularly focused on leveraging AI and semantic analysis |

| 2. | Elicit | Researchers in extracting, organizing, and synthesizing information from various sources, enabling efficient data analysis |

| 3. | Scite.Ai | Determine the credibility and reliability of research articles, facilitating evidence-based decision-making |

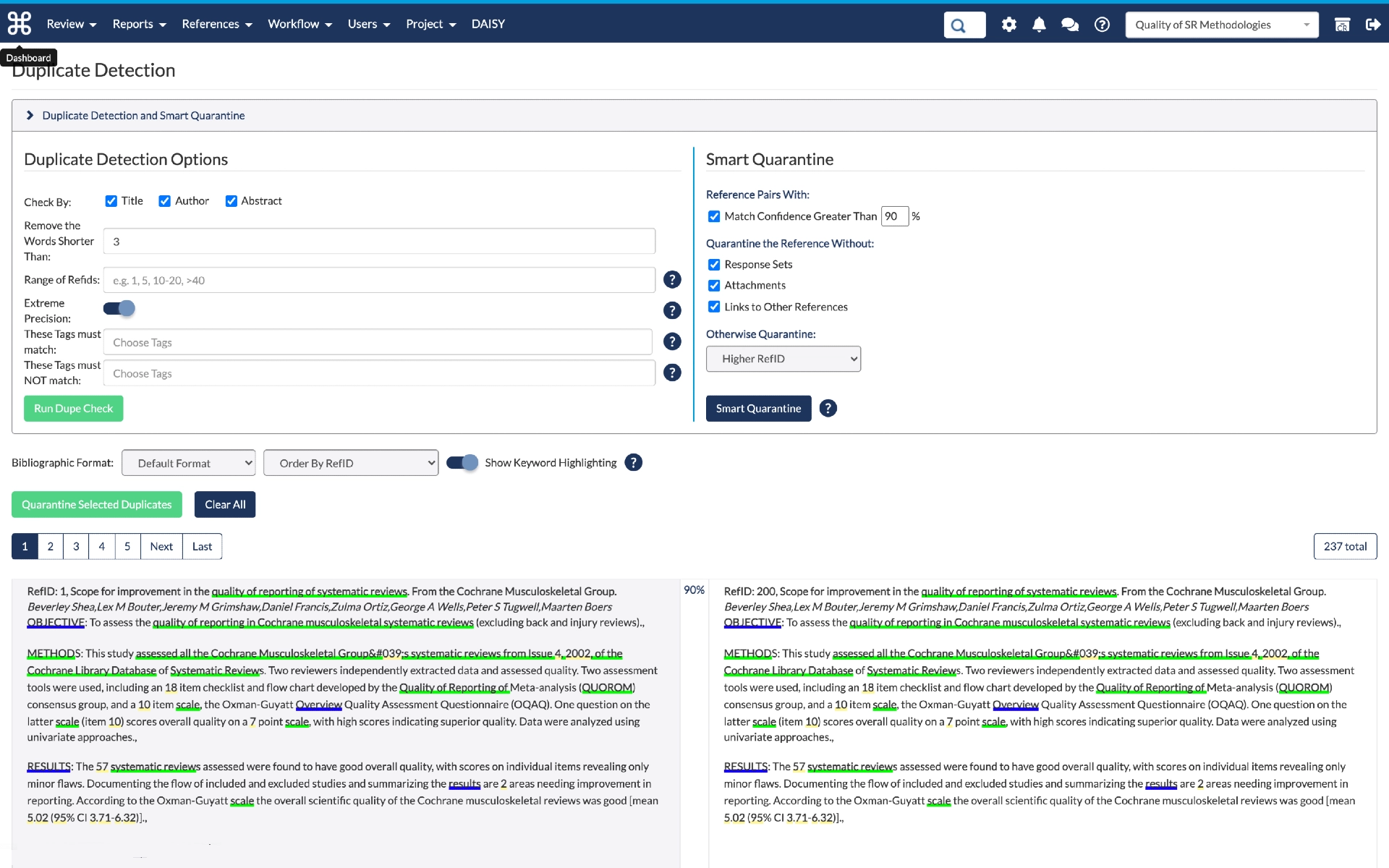

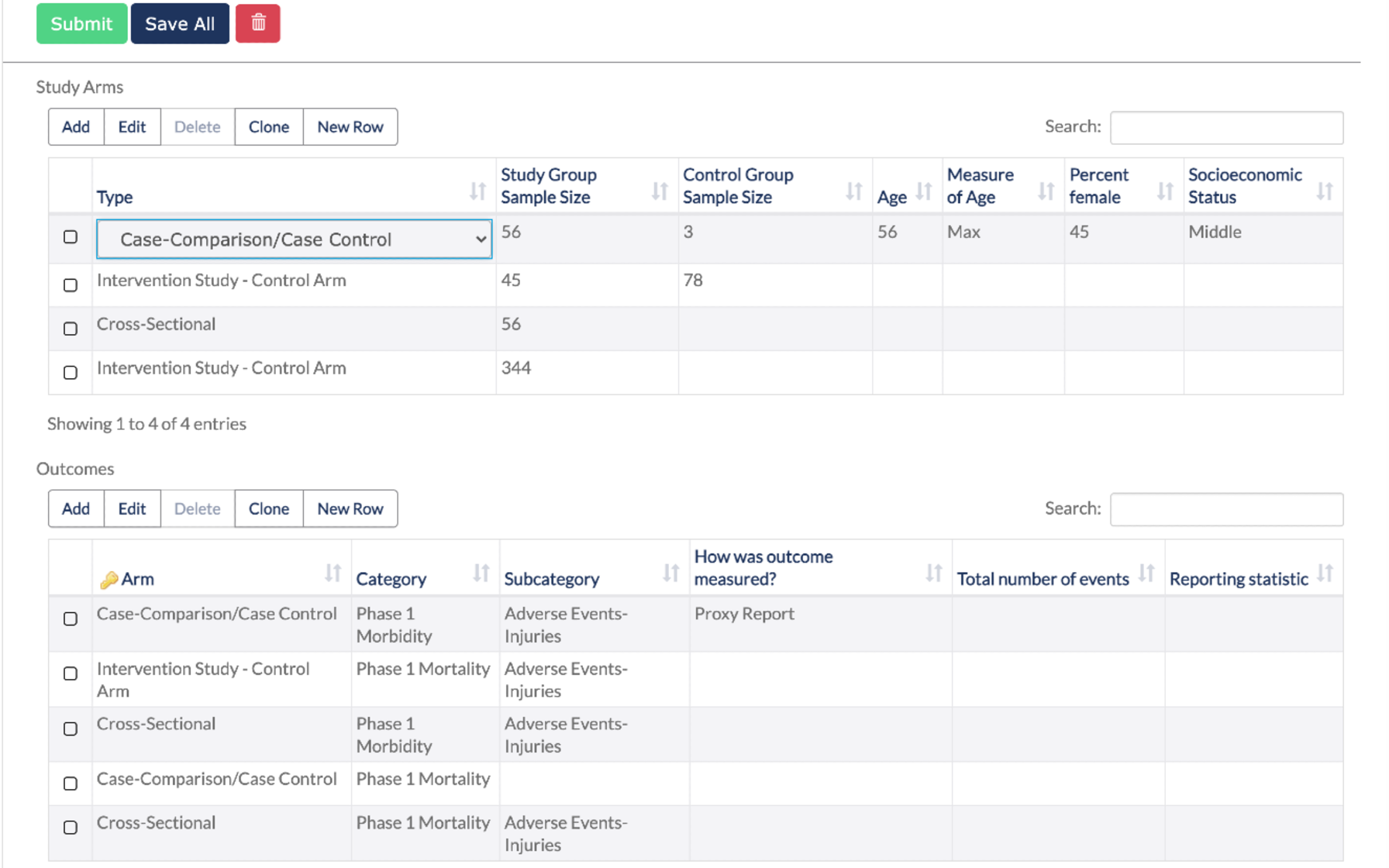

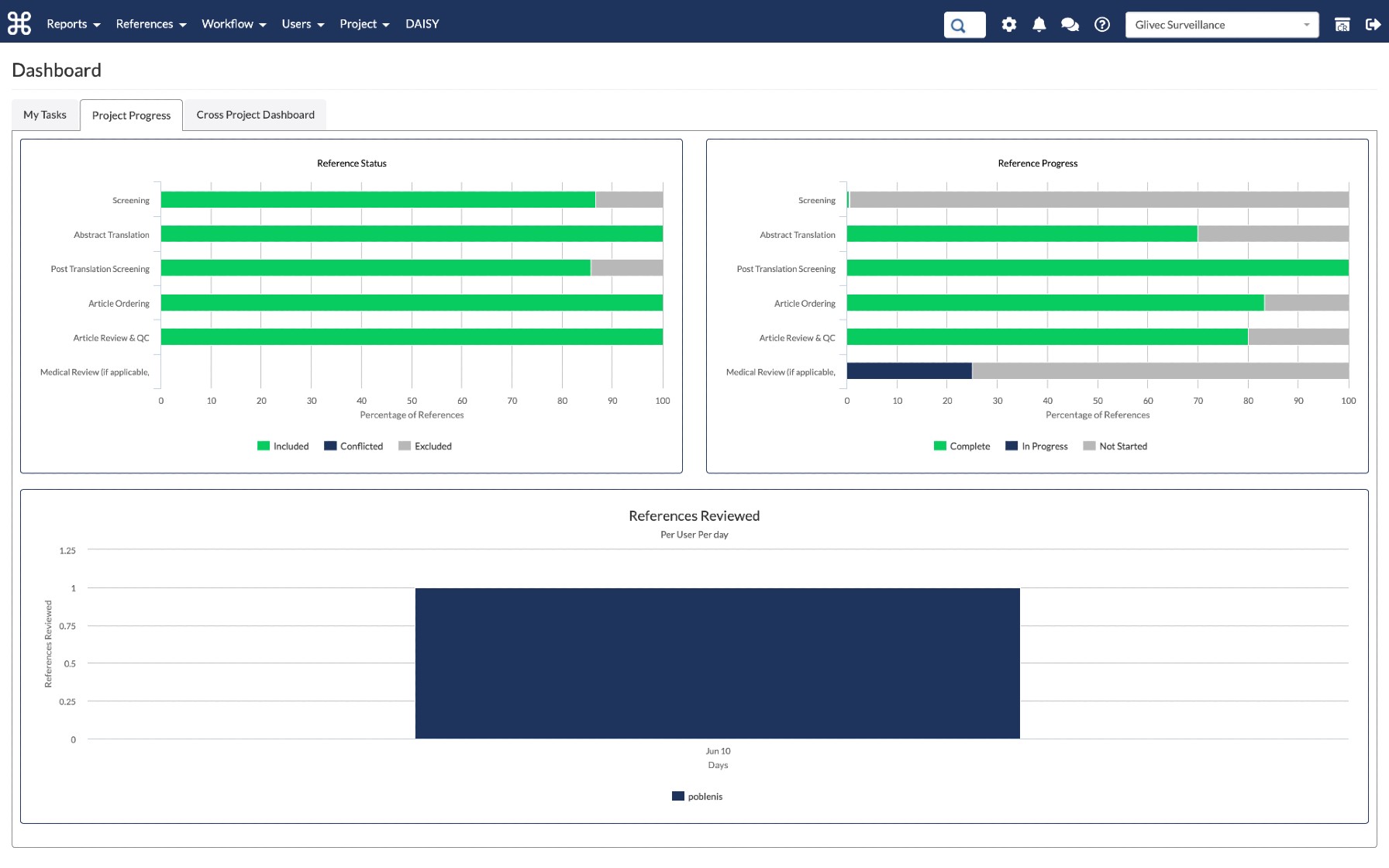

| 4. | DistillerSR | Streamlining and enhancing the process of literature screening, study selection, and data extraction |

| 5. | Rayyan | Facilitating efficient screening and selection of research outputs |

| 6. | Consensus | Researchers to work together, annotate, and discuss research papers in real-time, fostering team collaboration and knowledge sharing |

| 7. | RAx | Researchers to perform efficient literature search and analysis, aiding in identifying relevant articles, saving time, and improving the quality of research |

| 8. | Lateral | Discovering relevant scientific articles and identify potential research collaborators based on user interests and preferences |

| 9. | Iris AI | Exploring and mapping the existing literature, identifying knowledge gaps, and generating research questions |

| 10. | Scholarcy | Extracting key information from research papers, aiding in comprehension and saving time |

#1. Semantic Scholar – A free, AI-powered research tool for scientific literature

Not all scholarly content may be indexed, and occasional false positives or inaccurate associations can occur. Furthermore, the tool primarily focuses on computer science and related fields, potentially limiting coverage in other disciplines.

#2. Elicit – Research assistant using language models like GPT-3

Elicit is a game-changing literature review tool that has gained popularity among researchers worldwide. With its user-friendly interface and extensive database of scholarly articles, it streamlines the research process, saving time and effort.

However, users should be cautious when using Elicit. It is important to verify the credibility and accuracy of the sources found through the tool, as the database encompasses a wide range of publications.

Additionally, occasional glitches in the search function have been reported, leading to incomplete or inaccurate results. While Elicit offers tremendous benefits, researchers should remain vigilant and cross-reference information to ensure a comprehensive literature review.

#3. Scite.Ai – Your personal research assistant

Scite.Ai is a popular literature review tool that revolutionizes the research process for scholars. With its innovative citation analysis feature, researchers can evaluate the credibility and impact of scientific articles, making informed decisions about their inclusion in their own work.

However, while Scite.Ai offers numerous advantages, there are a few aspects to be cautious about. As with any data-driven tool, occasional errors or inaccuracies may arise, necessitating researchers to cross-reference and verify results with other reputable sources.

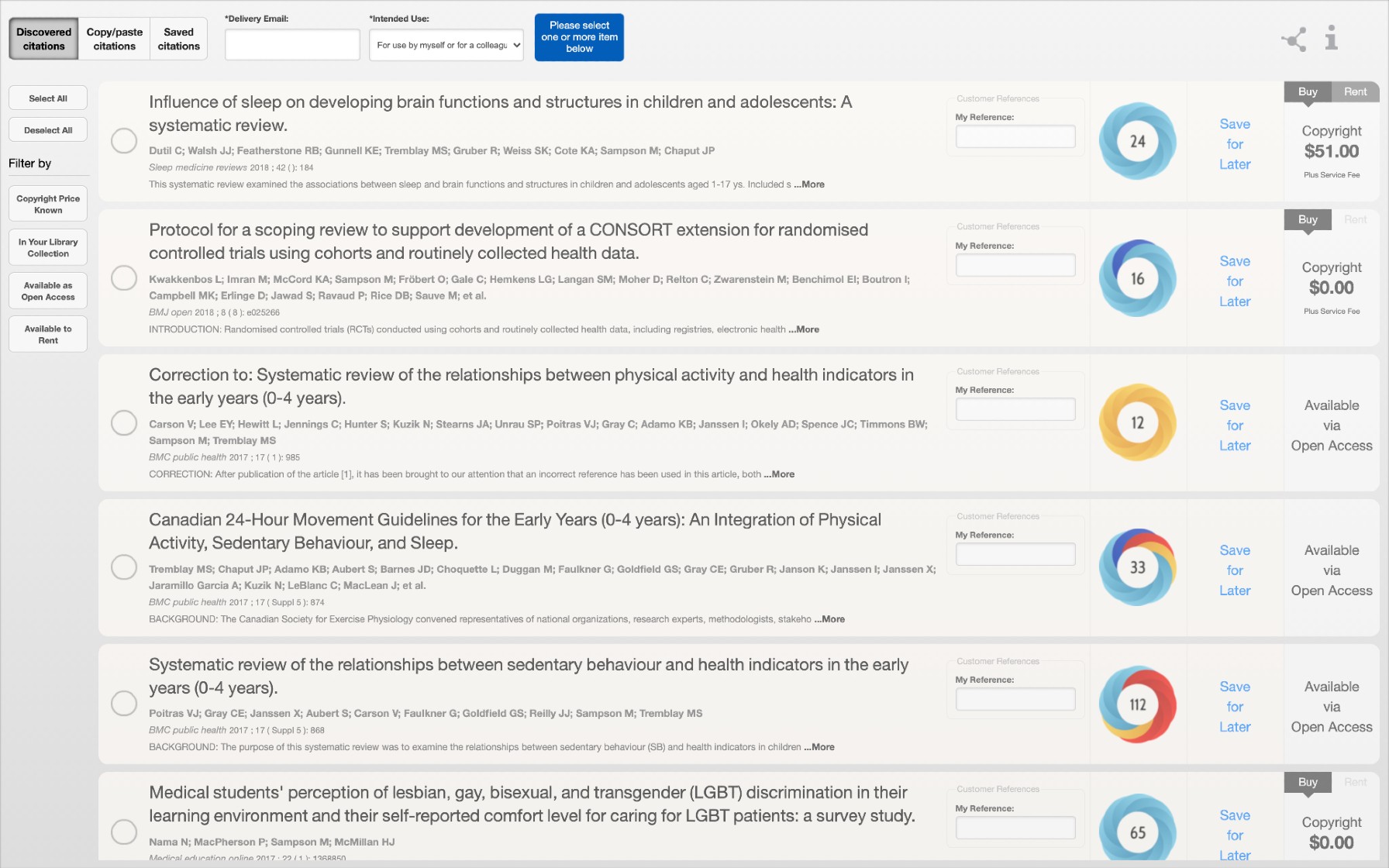


#4. DistillerSR – Literature Review Software

Despite occasional technical glitches reported by some users, the developers actively address these issues through updates and improvements, ensuring a better user experience.

#5. Rayyan – AI Powered Tool for Systematic Literature Reviews

However, it’s important to be aware of a few aspects. The free version of Rayyan has limitations, and upgrading to a premium subscription may be necessary for additional functionalities.

#6. Consensus – Use AI to find you answers in scientific research

With Consensus, researchers can save significant time by efficiently organizing and accessing relevant research material. People consider Consensus for several reasons.

Consensus offers both free and paid plans.

#7. RAx – AI-powered reading assistant

#8. Lateral – Advance your research with AI

Additionally, researchers must be mindful of potential biases introduced by the tool’s algorithms and should critically evaluate and interpret the results.

#9. Iris AI – Introducing the researcher workspace

Researchers are drawn to this tool because it saves valuable time by automating the tedious task of literature review and provides comprehensive coverage across multiple disciplines.

#10. Scholarcy – Summarize your literature through AI

Scholarcy’s ability to extract key information and generate concise summaries makes it an attractive option for scholars looking to quickly grasp the main concepts and findings of multiple papers.

Scholarcy’s automated summarization may not capture the nuanced interpretations or contextual information presented in the full text.

Final Thoughts

In conclusion, conducting a comprehensive literature review is a crucial aspect of any research project, and the availability of reliable and efficient tools can greatly facilitate this process for researchers. This article has explored the top 10 literature review tools that have gained popularity among researchers.

Q1. What are literature review tools for researchers?

Q2. What criteria should researchers consider when choosing literature review tools?

When choosing literature review tools, researchers should consider factors such as the tool’s search capabilities, database coverage, user interface, collaboration features, citation management, annotation and highlighting options, integration with reference management software, and data extraction capabilities.

Q3. Are there any literature review tools specifically designed for systematic reviews or meta-analyses?

Meta-analysis support: Some literature review tools include statistical analysis features that assist in conducting meta-analyses. These features can help calculate effect sizes, perform statistical tests, and generate forest plots or other visual representations of the meta-analytic results.
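To make the meta-analysis step concrete, here is a minimal sketch of one common pooling method, the inverse-variance (fixed-effect) estimate. It is not taken from any of the tools above; the function name `pooled_fixed_effect` and its inputs are hypothetical and chosen for illustration only.

```python
import math


def pooled_fixed_effect(effects, std_errors):
    """Return (pooled effect, pooled standard error, 95% confidence interval).

    Uses inverse-variance weighting: each study is weighted by 1 / SE^2,
    so more precise studies contribute more to the pooled estimate.
    """
    if not effects or len(effects) != len(std_errors):
        raise ValueError("effects and std_errors must be non-empty and equal in length")

    weights = [1.0 / (se ** 2) for se in std_errors]
    total_weight = sum(weights)

    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)

    # 1.96 is the normal quantile for a two-sided 95% interval.
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci
```

A forest plot is essentially a drawing of each study's effect and interval alongside this pooled value. Real meta-analyses often need a random-effects model instead, which this sketch does not cover.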

Q4. Can literature review tools help with organizing and annotating collected references?

Integration with citation managers: Some literature review tools integrate with popular citation managers like Zotero, Mendeley, or EndNote, allowing seamless transfer of references and annotations between platforms.

By leveraging these features, researchers can streamline the organization and annotation of their collected references, making it easier to retrieve relevant information during the literature review process.


5 literature review tools to ace your research (+2 bonus tools)


Your literature review is the lore behind your research paper. It comes in two forms, systematic and scoping, both serving the purpose of rounding up previously published works in your research area that led you to write and finish your own.

A literature review is vital as it provides the reader with a critical overview of the existing body of knowledge, your methodology, and an opportunity for research applications.

Some steps to follow while writing your review:

- Pick an accessible topic for your paper

- Do thorough research and gather evidence surrounding your topic

- Read and take notes diligently

- Create a rough structure for your review

- Synthesize your notes and write the first draft

- Edit and proofread your literature review

To make your workload a little lighter, there are many literature review AI tools. These tools can help you find academic articles through AI and answer questions about a research paper.

Best literature review tools to improve research workflow

A literature review is one of the most critical yet tedious stages in composing a research paper. Many students find it an uphill task since it requires extensive reading and careful organization.

Using some of the best literature review tools listed here, you can make your life easier by overcoming some of the existing challenges in literature reviews. From collecting and classifying to analyzing and publishing research outputs, these tools help you with your literature review and improve your productivity without additional effort or expenses.

1. SciSpace

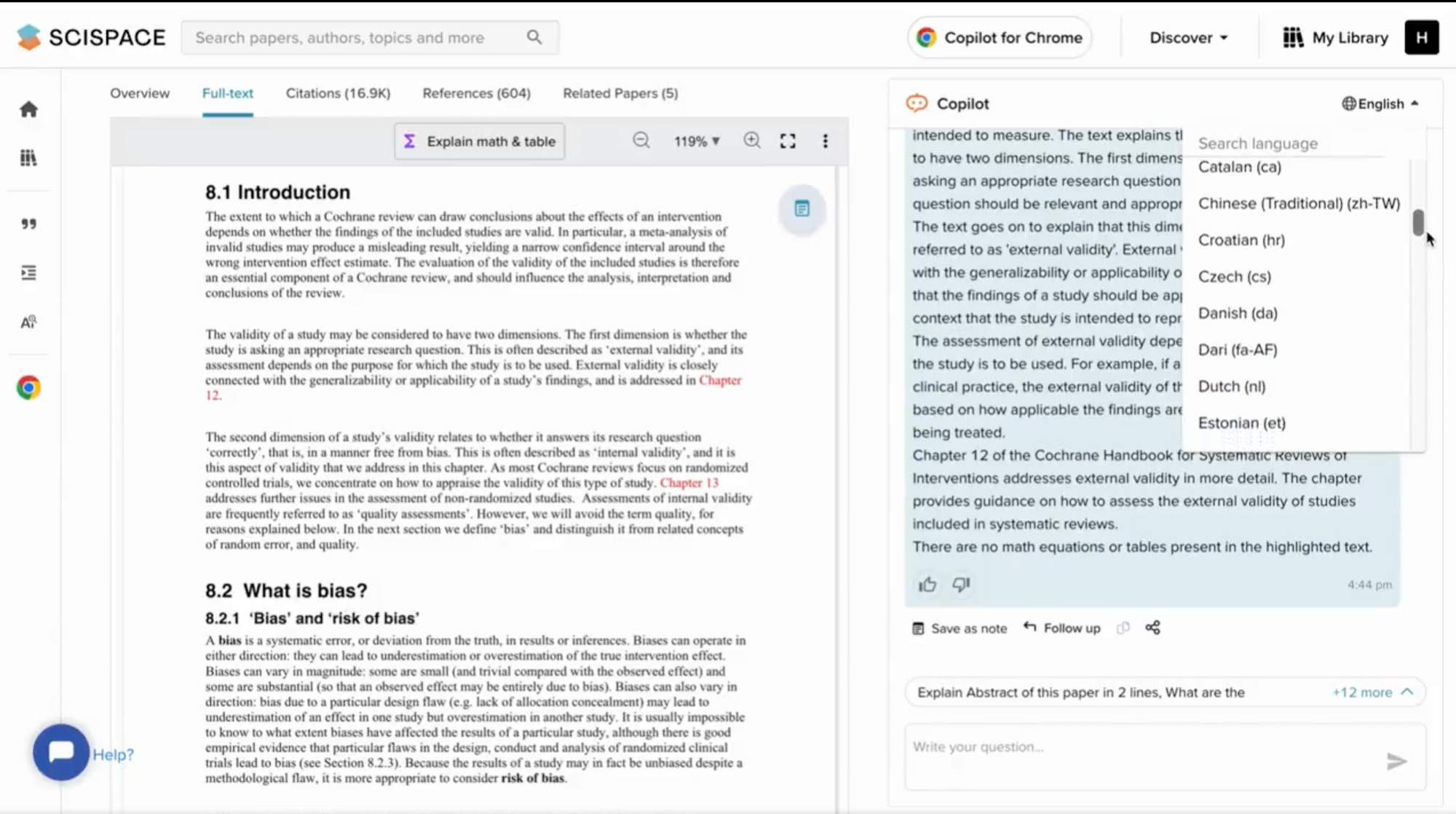

SciSpace is an AI tool for academic research that helps you find research papers and answer questions about them. You can discover, read, and understand research papers with SciSpace, making it an excellent platform for literature review. Featuring a repository with over 270 million research papers, it comes with an AI research assistant called Copilot that offers explanations, summaries, and answers as you read.


Find academic articles through AI

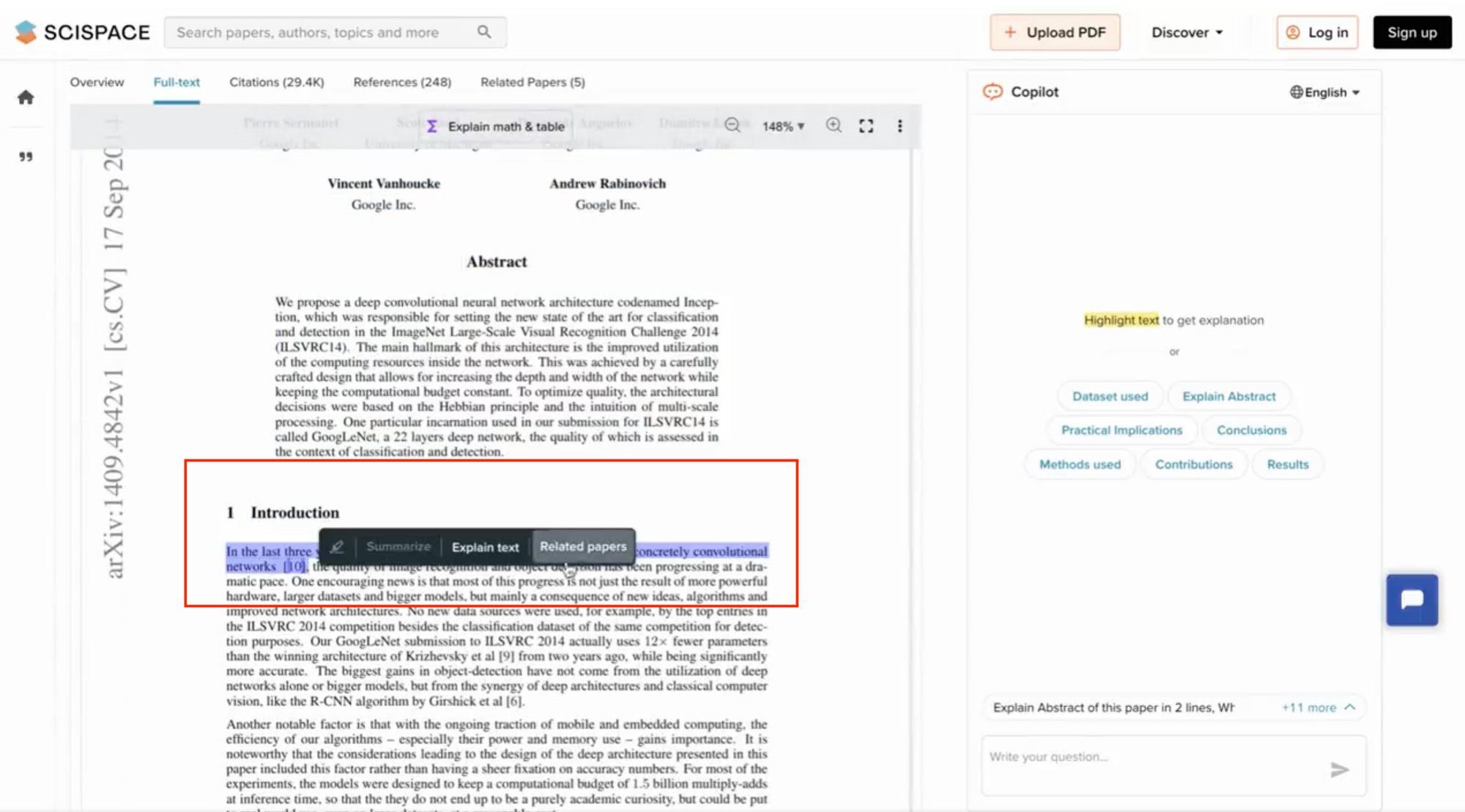

SciSpace has a dedicated literature review tool that finds scientific articles when you search for a question. Based on semantic search, it shows all the research papers relevant for your subject. You can then gather quick insights for all the papers displayed in your search results like methodology, dataset, etc., and figure out all the papers relevant for your research.

Identify relevant articles faster

Abstracts are not always enough to determine whether a paper is relevant to your research question. For starters, you can ask your AI research assistant, SciSpace Copilot, questions to explore the content and better understand the article. Additionally, use the summarize feature to quickly review the methodology and results of a paper and decide if it is worth reading in detail.

Learn in your preferred language

A big barrier non-native English speakers face while conducting a literature review is that a significant portion of scientific literature is published in English. But with SciSpace Copilot, you can review, interact, and learn from research papers in any language you prefer — presently, it supports 75+ languages. The AI will answer questions about a research paper in your mother tongue.

Integrates with Zotero

Many researchers use Zotero to create a library and manage research papers. SciSpace lets you import your scientific articles directly from Zotero into your SciSpace library and use Copilot to comprehend your research papers. You can also highlight key sections, add notes to the PDF as you read, and even turn helpful explanations and answers from Copilot into notes for future review.

Understand math and complex concepts quickly

Come across complex mathematical equations or difficult concepts? Simply highlight the text or select the formula or table, and Copilot will provide an explanation or breakdown of the same in an easy-to-understand manner. You can ask follow-up questions if you need further clarification.

Discover new papers to read without leaving

Highlight phrases or sentences in your research paper to get suggestions for related papers in the field and save time on literature reviews. You can also use the 'Trace' feature to move across and discover connected papers, authors, topics, and more.

SciSpace Copilot is now available as a Chrome extension, allowing you to access its features directly while you browse scientific literature anywhere across the web.

Get citation-backed answers

When you're conducting a literature review, you want credible information with proper references. SciSpace Copilot backs every piece of information it provides with a direct reference, boosting transparency, accuracy, and trustworthiness.

Ask a question related to the paper you're delving into. Every response from Copilot comes with a clickable citation. This citation leads you straight to the section of the PDF from which the answer was extracted.

By seamlessly integrating answers with citations, SciSpace Copilot assures you of the authenticity and relevance of the information you receive.

2. Mendeley

Mendeley Citation Manager is a free web and desktop application. It helps simplify your citation management workflow significantly. Here are some ways you can speed up your referencing game with Mendeley.

Generate citations and bibliographies

Easily add references from your Mendeley library to your Word document, change your citation style, and create a bibliography, all without leaving your document.

Retrieve references

It allows you to access your references quickly. Search for a term, and it will return results by referencing the year, author, or source.

Add sources to your Mendeley library by dragging a PDF into Mendeley Reference Manager. Mendeley will automatically extract the PDF's metadata and create a library entry.

Read and annotate documents

It helps you highlight and comment across multiple PDFs while keeping them all in one place using Mendeley Notebook. Notebook pages are not tied to a reference and let you quote from many PDFs.

3. Zotero

A big part of many literature review workflows, Zotero is a free, open-source tool for managing citations that works as a plug-in on your browser. It helps you gather the information you need, cite your sources, lets you attach PDFs, notes, and images to your citations, and create bibliographies.

Import research articles to your database

Search for research articles on a keyword, and add relevant results to your database. Then, select the articles you are most interested in, and import them into Zotero.

Add bibliography in a variety of formats

With Zotero, you don’t have to scramble for different bibliography formats. Simply use the Zotero-Word plug-in to insert in-text citations and generate a bibliography.

Share your research

You can save a paper and sync it with an online library to easily share your research for group projects. Zotero can be used to create your database and decrease the time you spend formatting citations.

4. Sysrev

Sysrev is an AI tool for article review that facilitates screening, collaboration, and data extraction from academic publications, abstracts, and PDF documents using machine learning. The platform is free and supports public and Open Access projects only.

Some of the features of Sysrev include:

Group labels

Group labels can be a powerful concept for creating database tables from documents. When exported and re-imported, each group label creates a new table. To make labels for a project, go into the manage -> labels section of the project.

Group labels enable project managers to pull table information from documents. It makes it easier to communicate review results for specific articles.

Track reviewer performance

Sysrev's label counting tool provides filtering and visualization options for keeping track of the distribution of labels throughout the project's progress. Project managers can check their projects at any point to track progress and the reviewer's performance.

Tool for concordance

The Sysrev tool for concordance allows project administrators and reviewers to perform analysis on their labels. Concordance is measured by calculating the number of times users agree on the labels they have extracted.
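As a rough illustration of the idea (not Sysrev's actual implementation), concordance between two reviewers can be sketched as the number of shared articles on which their labels match. The function name `concordance` and the dictionary layout are assumptions made for this example.

```python
def concordance(labels_a, labels_b):
    """Count label agreements between two reviewers.

    labels_a and labels_b map an article ID to the label that reviewer assigned.
    Only articles labeled by both reviewers are compared.
    Returns (number of agreements, number of articles compared).
    """
    shared_ids = labels_a.keys() & labels_b.keys()
    if not shared_ids:
        raise ValueError("the reviewers have no articles in common")

    agreements = sum(1 for article_id in shared_ids
                     if labels_a[article_id] == labels_b[article_id])
    return agreements, len(shared_ids)


# Hypothetical include/exclude decisions from two reviewers.
reviewer_1 = {"paper-1": True, "paper-2": False, "paper-3": True}
reviewer_2 = {"paper-1": True, "paper-2": True, "paper-4": False}

agreed, compared = concordance(reviewer_1, reviewer_2)
print(f"Agreed on {agreed} of {compared} shared articles")
```

A raw agreement count does not correct for agreement that happens by chance, so teams that need a stricter measure often report a statistic such as Cohen's kappa alongside it.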

5. Colandr

Colandr is a free, open-source, internet-based analysis and screening software used as an AI for academic research. It was designed to ease collaboration across various stages of the systematic review procedure. The tool can be a little complex to use. So, here are the steps involved in working with Colandr.

Create a review

The first step to using Colandr is setting up an organized review project. This is helpful to librarians who are assisting researchers with systematic reviews.

The planning stage involves setting the review's objectives and research questions. Any reviewer can view the details of the planning stage, but only the review's author can modify them.

Citation screening/import

In this phase, users can upload their results from database searches. Colandr also offers an automated deduplication system.
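To show what automated deduplication can look like in principle, here is a minimal sketch. It is not Colandr's algorithm: the record fields (`doi`, `title`, `year`) and the matching rule are assumptions for illustration. Records are matched on DOI when one is present, otherwise on a normalized title plus year.

```python
import re


def normalize_title(title):
    """Lowercase the title and collapse punctuation and whitespace to single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records):
    """Return records with duplicates removed, keeping the first occurrence."""
    seen = set()
    unique = []
    for record in records:
        doi = (record.get("doi") or "").strip().lower()
        if doi:
            key = ("doi", doi)
        else:
            key = ("title", normalize_title(record.get("title", "")), record.get("year"))
        if key in seen:
            continue
        seen.add(key)
        unique.append(record)
    return unique


# Hypothetical search results exported from two databases.
results = [
    {"title": "Deep Learning: A Review", "year": 2020, "doi": "10.1/ABC"},
    {"title": "Deep learning - a review", "year": 2020, "doi": "10.1/abc"},
    {"title": "Another Paper", "year": 2019},
    {"title": "another  paper!", "year": 2019},
]
print(len(deduplicate(results)))
```

One limitation of this rule is that a record with a DOI will not match a copy of the same article that lacks one, so real screening tools usually combine several matching strategies.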

Full-text screening

The system in Colandr will discover the combination of terms and expressions that are most useful for the reader. If an article is selected, it will be moved to the final step.

Data extraction/export

Colandr data extraction is more efficient than the manual method. It creates the form fields for data extraction during the planning stage of the review procedure. Users can decide to revisit or modify the form for data extraction after completing the initial screening.

Bonus literature review tools

6. SRDR+

SRDR+ is a web-based tool for extracting and managing systematic review or meta-analysis data. It is open and has a searchable archive of systematic reviews and their data.

7. Plot Digitizer

Plot Digitizer is an efficient tool for extracting information from graphs and images, equipped with many features that facilitate data extraction. The program comes with a free online application, which is adequate to extract data quickly.

Final thoughts

Writing a literature review is not easy. It’s a time-consuming process, which can become tiring at times. The literature review tools mentioned in this blog do an excellent job of maximizing your efforts and helping you write literature reviews much more efficiently. With them, you can breathe a sigh of relief and give more time to your research.

As you dive into your literature review, don’t forget to use SciSpace ResearchGPT to streamline the process. It facilitates your research and helps you explore key findings, summary, and other components of the paper easily.

Frequently Asked Questions (FAQs)

1. What is RRL in research?

RRL stands for Review of Related Literature, a term sometimes used interchangeably with ‘Literature Review.’ An RRL is a body of studies relevant to the topic being researched. These studies may be in the form of journal articles, books, reports, and other similar documents. A review of related literature is used to support an argument or theory being made by the researcher, as well as to provide information on how others have approached the same topic.

2. What software and tools are available for literature review?

• SciSpace Discover

• Mendeley

• Zotero

• Sysrev

• Colandr

• SRDR+

3. How to generate an online literature review?

The SciSpace Discover tool, which offers an excellent repository of millions of peer-reviewed articles and resources, will help you generate or create a literature review easily. You can find relevant information by using the filter option, check its credibility, trace related topics and articles, and cite in widely accepted formats with a single click.

4. What does it mean to synthesize literature?

To synthesize literature is to take the main points and ideas from a number of sources and present them in a new way. The goal is to create a new piece of writing that pulls together the most important elements of all the sources you read. You then make recommendations based on them and connect them to your research.

5. Should we write an abstract for a literature review?

Abstracts, particularly for the literature review section, are not required. However, an abstract for the research paper, on the whole, is useful for summarizing the paper and letting readers know what to expect from it. It can also be used to summarize the main points of the paper so that readers have a better understanding of the paper's content before they read it.

6. How do you evaluate the quality of a literature review?

• Whether it is clear and well written.

• Whether the information is current and up to date.

• Whether it covers all of the relevant sources on the topic.

• Whether it provides enough evidence to support its conclusions.

7. Is literature review mandatory?

Yes. Literature review is a mandatory part of any research project. It is a critical step in the process that allows you to establish the scope of your research and provide a background for the rest of your work.

8. What are the sources for a literature review?

• Reports

• Theses

• Conference proceedings

• Company reports

• Some government publications

• Journals

• Books

• Newspapers

• Articles by professional associations

• Indexes

• Databases

• Catalogues

• Encyclopaedias

• Dictionaries

• Bibliographies

• Citation indexes

• Statistical data from government websites

9. What is the difference between a systematic review and a literature review?

A systematic review is a form of research that uses a rigorous method to generate knowledge from both published and unpublished data. A literature review, on the other hand, is a critical summary of an area of research within the context of what has already been published.


LITERATURE REVIEW SOFTWARE FOR BETTER RESEARCH

“Litmaps is a game changer for finding novel literature... it has been invaluable for my productivity.... I also got my PhD student to use it and they also found it invaluable, finding several gaps they missed”

Varun Venkatesh

Austin Health, Australia

As a full-time researcher, Litmaps has become an indispensable tool in my arsenal. The Seed Maps and Discover features of Litmaps have transformed my literature review process, streamlining the identification of key citations while revealing previously overlooked relevant literature, ensuring no crucial connection goes unnoticed. A true game-changer indeed!

Ritwik Pandey

Doctoral Research Scholar – Sri Sathya Sai Institute of Higher Learning

Using Litmaps for my research papers has significantly improved my workflow. Typically, I start with a single paper related to my topic. Whenever I find an interesting work, I add it to my search. From there, I can quickly cover my entire Related Work section.

David Fischer

Research Associate – University of Applied Sciences Kempten

“It's nice to get a quick overview of related literature. Really easy to use, and it helps getting on top of the often complicated structures of referencing”

Christoph Ludwig

Technische Universität Dresden, Germany

“This has helped me so much in researching the literature. Currently, I am beginning to investigate new fields and this has helped me hugely”

Aran Warren

Canterbury University, NZ

“I can’t live without you anymore! I also recommend you to my students.”

Professor at The Chinese University of Hong Kong

“Seeing my literature list as a network enhances my thinking process!”

Katholieke Universiteit Leuven, Belgium

“Incredibly useful tool to get to know more literature, and to gain insight in existing research”

KU Leuven, Belgium

“As a student just venturing into the world of lit reviews, this is a tool that is outstanding and helping me find deeper results for my work.”

Franklin Jeffers

South Oregon University, USA

“Any researcher could use it! The paper recommendations are great for anyone and everyone”

Swansea University, Wales

“This tool really helped me to create good bibtex references for my research papers”

Ali Mohammed-Djafari

Director of Research at LSS-CNRS, France

“Litmaps is extremely helpful with my research. It helps me organize each one of my projects and see how they relate to each other, as well as to keep up to date on publications done in my field”

Daniel Fuller

Clarkson University, USA

As a person who is an early researcher and identifies as dyslexic, I can say that having research articles laid out in the date vs cite graph format is much more approachable than looking at a standard database interface. I feel that the maps Litmaps offers lower the barrier of entry for researchers by giving them the connections between articles spaced out visually. This helps me orientate where a paper is in the history of a field. Thus, new researchers can look at one of Litmaps' "seed maps" and have the same information as hours of digging through a database.

Baylor Fain

Postdoctoral Associate – University of Florida


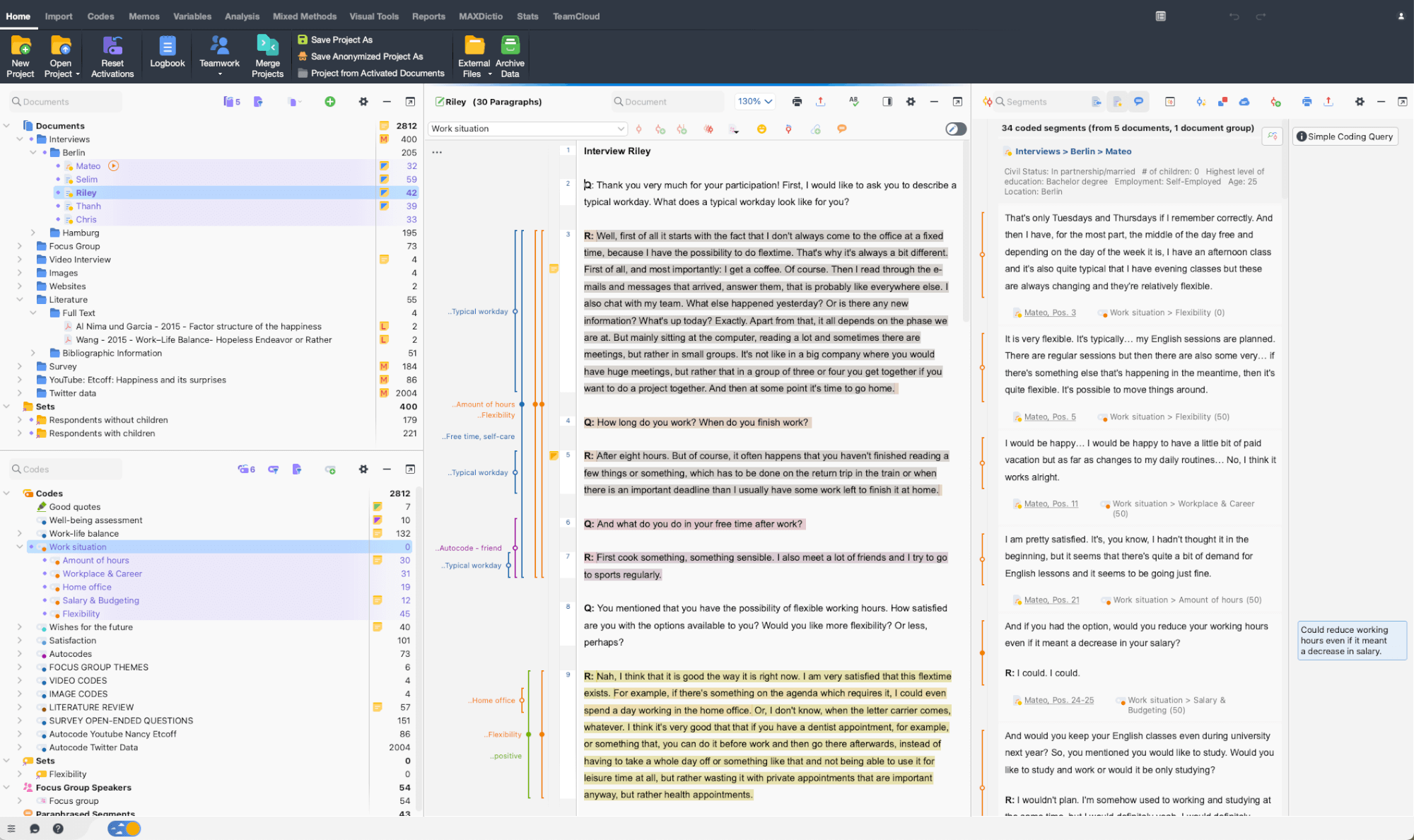

Accelerate your research with the best systematic literature review tools

The ideal literature review tool helps you make sense of the most important insights in your research field. ATLAS.ti empowers researchers to perform powerful and collaborative analysis using the leading software for literature review.

Finalize your literature review faster with comfort

ATLAS.ti makes it easy to manage, organize, and analyze articles, PDFs, excerpts, and more for your projects. Conduct a deep systematic literature review and get the insights you need with a comprehensive toolset built specifically for your research projects.

Figure out the "why" behind your participant's motivations

Understand the behaviors and emotions that are driving your focus group participants. With ATLAS.ti, you can transform your raw data and turn it into qualitative insights you can learn from. Easily determine user intent in the same spot you're deciphering your overall focus group data.

Visualize your research findings like never before

We make it simple to present your analysis results with meaningful charts, networks, and diagrams. Instead of figuring out how to communicate the insights you just unlocked, we enable you to leverage easy-to-use visualizations that support your goals.

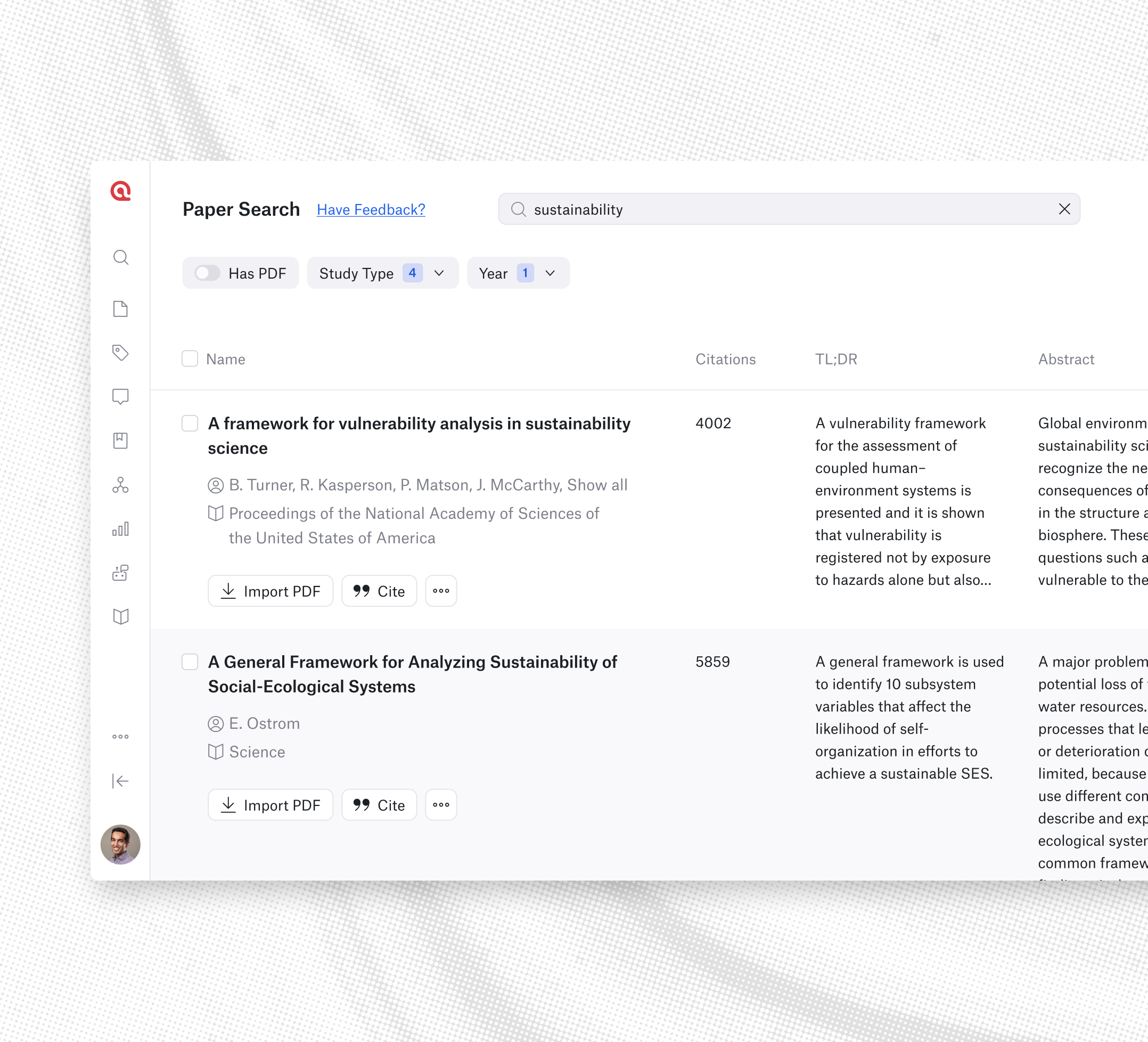

Paper Search – Access 200+ Million Papers with AI-Powered Insights

Unlock access to over 200 million scientific papers and streamline your research with our cutting-edge AI-powered Paper Search 2.0. Easily find, summarize, and integrate relevant papers directly into your ATLAS.ti Web workspace.

Everything you need to elevate your literature review

Import and organize literature data

Import and analyze any type of text content – ATLAS.ti supports all standard text and transcription files such as Word and PDF.

Analyze with ease and speed

Utilize easy-to-learn workflows that save valuable time, such as auto coding, sentiment analysis, team collaboration, and more.

Leverage AI-driven tools

Make efficiency a priority and let ATLAS.ti do your work with AI-powered research tools and features for faster results.

Visualize and present findings

With just a few clicks, you can create meaningful visualizations like charts, word clouds, tables, networks, among others for your literature data.


A literature review analyzes the most current research within a research area. A literature review consists of published studies from many sources:

- Peer-reviewed academic publications

- Full-length books

- University bulletins

- Conference proceedings

- Dissertations and theses

Literature reviews allow researchers to:

- Summarize the state of the research

- Identify unexplored research inquiries

- Recommend practical applications

- Critique currently published research

Literature reviews are either standalone publications or part of a paper as background for an original research project. A literature review, as a section of a more extensive research article, summarizes the current state of the research to justify the primary research described in the paper.

For example, a researcher may have reviewed the literature on a new supplement's health benefits and concluded that more research needs to be conducted on those with a particular condition. This research gap warrants a study examining how this understudied population reacted to the supplement. Researchers need to establish this research gap through a literature review to persuade journal editors and reviewers of the value of their research.

Consider a literature review as a typical research publication presenting a study, its results, and the salient points scholars can infer from the study. The only significant difference is that a literature review treats existing literature as the research data to collect and analyze. From that analysis, a literature review can suggest new inquiries to pursue.

Identify a focus

Similar to a typical study, a literature review should have a research question or questions that analysis can answer. This sort of inquiry typically targets a particular phenomenon, population, or even research method to examine how different studies have looked at the same thing differently. A literature review, then, should center the literature collection around that focus.

Collect and analyze the literature

With a focus in mind, a researcher can collect studies that provide relevant information for that focus. They can then analyze the collected studies by finding and identifying patterns or themes that occur frequently. This analysis allows the researcher to point out what the field has frequently explored or, on the other hand, overlooked.

Suggest implications

The literature review allows the researcher to argue a particular point through the evidence provided by the analysis. For example, suppose the analysis makes it apparent that the published research on people's sleep patterns has not adequately explored the connection between sleep and a particular factor (e.g., television-watching habits, indoor air quality). In that case, the researcher can argue that further study can address this research gap.

External requirements aside (e.g., many academic journals have a word limit of 6,000-8,000 words), a literature review as a standalone publication is as long as necessary to allow readers to understand the current state of the field. Even if it is just a section in a larger paper, a literature review is long enough to allow the researcher to justify the study that is the paper's focus.

Note that a literature review needs only to incorporate a representative number of studies relevant to the research inquiry. For term papers in university courses, 10 to 20 references might be appropriate for demonstrating analytical skills. Published literature reviews in peer-reviewed journals might have 40 to 50 references. One of the essential goals of a literature review is to persuade readers that you have analyzed a representative segment of the research you are reviewing.

Researchers can find published research from various online sources:

- Journal websites

- Research databases

- Search engines (Google Scholar, Semantic Scholar)

- Research repositories

- Social networking sites (Academia, ResearchGate)

Many journals make articles freely available under the term "open access," meaning that there are no restrictions to viewing and downloading such articles. Otherwise, collecting research articles from restricted journals usually requires access from an institution such as a university or a library.

Evidence of a rigorous literature review is more important than the word count or the number of articles that undergo data analysis. Especially when writing for a peer-reviewed journal, it is essential to consider how to demonstrate research rigor in your literature review to persuade reviewers of its scholarly value.

Select field-specific journals

The most significant research relevant to your field is usually concentrated in a narrow set of journals with similar aims and scope. Consider who the most prominent scholars in your field are and determine which journals publish their research or have them as editors or reviewers. Journals tend to look favorably on systematic reviews that include articles they have published.

Incorporate recent research

Recently published studies have greater value in determining the gaps in the current state of research. Older research is more likely to have encountered challenges and critiques that render its findings outdated or refuted. What counts as recent differs by field; start by looking for research published within the last three years and gradually expand to older research when you need to collect more articles for your review.
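
The "start with three years, then widen" approach can be sketched in a few lines of Python. This is only an illustration: the function name `select_recent`, the `year` field, and the 15-year upper limit are assumptions made for the example, not part of any tool mentioned here.

```python
from datetime import date

def select_recent(articles, min_count, start_window=3, max_window=15, today=None):
    """Return (window_in_years, articles) for the smallest recent window
    that yields at least min_count articles."""
    current_year = (today or date.today()).year
    selected = []
    for window in range(start_window, max_window + 1):
        cutoff = current_year - window
        # Keep only articles published in or after the cutoff year.
        selected = [a for a in articles if a["year"] >= cutoff]
        if len(selected) >= min_count:
            return window, selected
    # Not enough articles even at the widest window: return what was found.
    return max_window, selected
```

Returning the window alongside the articles makes it easy to report in the review how far back the search had to go.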

Consider the quality of the research

Literature reviews are only as strong as the quality of the studies that the researcher collects. You can judge any particular study by many factors, including:

- the quality of the article's journal

- the article's research rigor

- the timeliness of the research

The critical point here is that you should consider more than just a study's findings or research outputs when including research in your literature review.

Narrow your research focus

Ideally, the articles you collect for your literature review have something in common, such as a research method or research context. For example, if you are conducting a literature review about teaching practices in high school contexts, it is best to narrow your literature search to studies focusing on high school. You should consider expanding your search to junior high school and university contexts only when there are not enough studies that match your focus.

You can create a project in ATLAS.ti for keeping track of your collected literature. ATLAS.ti allows you to view and analyze full text articles and PDF files in a single project. Within projects, you can use document groups to separate studies into different categories for easier and faster analysis.

For example, a researcher with a literature review that examines studies across different countries can create document groups labeled "United Kingdom," "Germany," and "United States," among others. A researcher can also use ATLAS.ti's global filters to narrow analysis to a particular set of studies and gain insights about a smaller set of literature.

ATLAS.ti allows you to search, code, and analyze text documents and PDF files. You can treat a set of research articles like other forms of qualitative data. The codes you apply to your literature collection allow for analysis through many powerful tools in ATLAS.ti:

- Code Co-Occurrence Explorer

- Code Co-Occurrence Table

- Code-Document Table

Other tools in ATLAS.ti employ machine learning to facilitate parts of the coding process for you. Some of our software tools that are effective for analyzing literature include:

- Named Entity Recognition

- Opinion Mining

- Sentiment Analysis

As long as your documents are text documents or text-enabled PDF files, ATLAS.ti's automated tools can provide essential assistance in the data analysis process.

7 open source tools to make literature reviews easy

Opensource.com

A good literature review is critical for academic research in any field, whether it is for a research article, a critical review for coursework, or a dissertation. In a recent article, I presented detailed steps for doing a literature review using open source software.

The following is a brief summary of seven free and open source software tools described in that article that will make your next literature review much easier.

1. GNU Linux

Most literature reviews are accomplished by graduate students working in research labs in universities. For absurd reasons, graduate students often have the worst computers on campus. They are often old, slow, and clunky Windows machines that have been discarded and recycled from the undergraduate computer labs. Installing a flavor of GNU Linux will breathe new life into these outdated PCs. There are more than 100 distributions, all of which can be downloaded and installed for free on computers. Most popular Linux distributions come with a "try-before-you-buy" feature. For example, with Ubuntu you can make a bootable USB stick that allows you to test-run the Ubuntu desktop experience without interfering in any way with your PC configuration. If you like the experience, you can use the stick to install Ubuntu on your machine permanently.

2. Firefox

Linux distributions generally come with a free web browser, and the most popular is Firefox. Two Firefox plugins that are particularly useful for literature reviews are Unpaywall and Zotero. Keep reading to learn why.

3. Unpaywall

Often one of the hardest parts of a literature review is gaining access to the papers you want to read for your review. The unintended consequence of copyright restrictions and paywalls is that access to the peer-reviewed literature has narrowed to the point that even Harvard University is challenged to pay for it. Fortunately, there are a lot of open access articles: about a third of the literature is free (and the percentage is growing). Unpaywall is a Firefox plugin that enables researchers to click a green tab on the side of the browser and skip the paywall on millions of peer-reviewed journal articles. This makes finding accessible copies of articles much faster than searching each database individually. Unpaywall is fast, free, and legal, as it accesses many of the open access sites that I covered in my paper on using open source in lit reviews.

4. Zotero

Formatting references is the most tedious of academic tasks. Zotero can save you from ever doing it again. It operates as an Android app, desktop program, and a Firefox plugin (which I recommend). It is a free, easy-to-use tool to help you collect, organize, cite, and share research. It replaces the functionality of proprietary packages such as RefWorks, Endnote, and Papers for zero cost. Zotero can auto-add bibliographic information directly from websites. In addition, it can scrape bibliographic data from PDF files. Notes can be easily added on each reference. Finally, and most importantly, it can import and export the bibliography databases in all publishers' various formats. With this feature, you can export bibliographic information to paste into a document editor for a paper or thesis, or even to a wiki for dynamic collaborative literature reviews (see tool #7 for more on the value of wikis in lit reviews).

5. LibreOffice

Your thesis or academic article can be written conventionally with the free office suite LibreOffice, which operates similarly to Microsoft's Office products but respects your freedom. Zotero has a word processor plugin to integrate directly with LibreOffice. LibreOffice is more than adequate for the vast majority of academic paper writing.

6. LaTeX

If LibreOffice is not enough for your layout needs, you can take your paper writing one step further with LaTeX, a high-quality typesetting system specifically designed for producing technical and scientific documentation. LaTeX is particularly useful if your writing has a lot of equations in it. Also, Zotero libraries can be directly exported to BibTeX files for use with LaTeX.

7. MediaWiki

If you want to leverage the open source way to get help with your literature review, you can facilitate a dynamic collaborative literature review. A wiki is a website that allows anyone to add, delete, or revise content directly using a web browser. MediaWiki is free software that enables you to set up your own wikis.

Researchers can (in decreasing order of complexity): 1) set up their own research group wiki with MediaWiki, 2) utilize wikis already established at their universities (e.g., Aalto University), or 3) use wikis dedicated to areas that they research. For example, several university research groups that focus on sustainability (including mine) use Appropedia, which is set up for collaborative solutions on sustainability, appropriate technology, poverty reduction, and permaculture.

Using a wiki makes it easy for anyone in the group to keep track of the status of and update literature reviews (both current and older or from other researchers). It also enables multiple members of the group to easily collaborate on a literature review asynchronously. Most importantly, it enables people outside the research group to help make a literature review more complete, accurate, and up-to-date.

Wrapping up

Free and open source software can cover the entire lit review toolchain, meaning there's no need for anyone to use proprietary solutions. Do you use other libre tools for making literature reviews or other academic work easier? Please let us know your favorites in the comments.

How to Write a Literature Review | Guide, Examples, & Templates

Published on January 2, 2023 by Shona McCombes. Revised on September 11, 2023.

What is a literature review? A literature review is a survey of scholarly sources on a specific topic. It provides an overview of current knowledge, allowing you to identify relevant theories, methods, and gaps in the existing research that you can later apply to your paper, thesis, or dissertation topic.

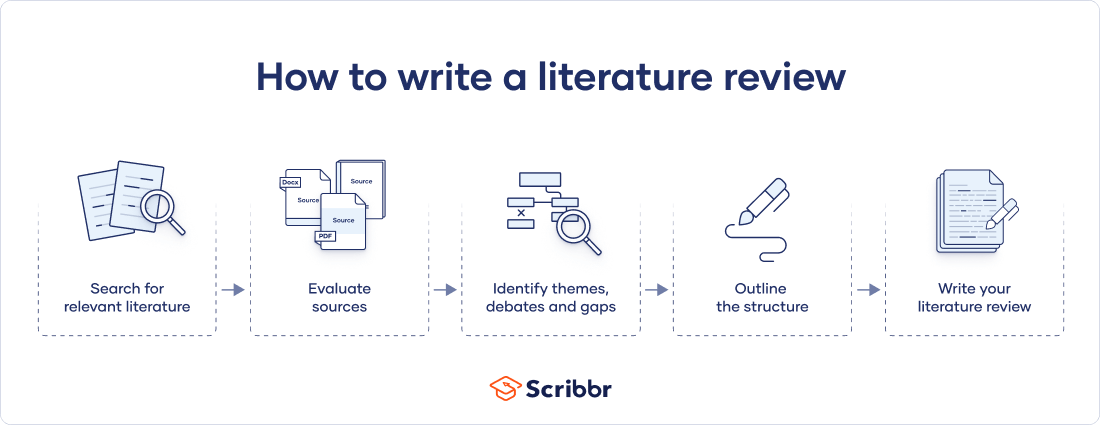

There are five key steps to writing a literature review:

- Search for relevant literature

- Evaluate sources

- Identify themes, debates, and gaps

- Outline the structure

- Write your literature review

A good literature review doesn’t just summarize sources; it analyzes, synthesizes, and critically evaluates to give a clear picture of the state of knowledge on the subject.

Table of contents

- What is the purpose of a literature review

- Examples of literature reviews

- Step 1 – Search for relevant literature

- Step 2 – Evaluate and select sources

- Step 3 – Identify themes, debates, and gaps

- Step 4 – Outline your literature review’s structure

- Step 5 – Write your literature review

- Free lecture slides

- Frequently asked questions

What is the purpose of a literature review

When you write a thesis, dissertation, or research paper, you will likely have to conduct a literature review to situate your research within existing knowledge. The literature review gives you a chance to:

- Demonstrate your familiarity with the topic and its scholarly context

- Develop a theoretical framework and methodology for your research

- Position your work in relation to other researchers and theorists

- Show how your research addresses a gap or contributes to a debate

- Evaluate the current state of research and demonstrate your knowledge of the scholarly debates around your topic.

Writing literature reviews is a particularly important skill if you want to apply for graduate school or pursue a career in research. We’ve written a step-by-step guide that you can follow below.

Examples of literature reviews

Writing literature reviews can be quite challenging! A good starting point could be to look at some examples, depending on what kind of literature review you’d like to write.

- Example literature review #1: “Why Do People Migrate? A Review of the Theoretical Literature” (Theoretical literature review about the development of economic migration theory from the 1950s to today.)

- Example literature review #2: “Literature review as a research methodology: An overview and guidelines” (Methodological literature review about interdisciplinary knowledge acquisition and production.)

- Example literature review #3: “The Use of Technology in English Language Learning: A Literature Review” (Thematic literature review about the effects of technology on language acquisition.)

- Example literature review #4: “Learners’ Listening Comprehension Difficulties in English Language Learning: A Literature Review” (Chronological literature review about how the concept of listening skills has changed over time.)

Step 1 – Search for relevant literature

Before you begin searching for literature, you need a clearly defined topic.

If you are writing the literature review section of a dissertation or research paper, you will search for literature related to your research problem and questions.

Make a list of keywords

Start by creating a list of keywords related to your research question. Include each of the key concepts or variables you’re interested in, and list any synonyms and related terms. You can add to this list as you discover new keywords in the process of your literature search.

- Social media, Facebook, Instagram, Twitter, Snapchat, TikTok

- Body image, self-perception, self-esteem, mental health

- Generation Z, teenagers, adolescents, youth

Search for relevant sources

Use your keywords to begin searching for sources. Some useful databases to search for journals and articles include:

- Your university’s library catalogue

- Google Scholar

- Project Muse (humanities and social sciences)

- Medline (life sciences and biomedicine)

- EconLit (economics)

- Inspec (physics, engineering and computer science)

You can also use boolean operators to help narrow down your search.

Make sure to read the abstract to find out whether an article is relevant to your question. When you find a useful book or article, you can check the bibliography to find other relevant sources.

Step 2 – Evaluate and select sources

You likely won’t be able to read absolutely everything that has been written on your topic, so it will be necessary to evaluate which sources are most relevant to your research question.

For each publication, ask yourself:

- What question or problem is the author addressing?

- What are the key concepts and how are they defined?

- What are the key theories, models, and methods?

- Does the research use established frameworks or take an innovative approach?

- What are the results and conclusions of the study?

- How does the publication relate to other literature in the field? Does it confirm, add to, or challenge established knowledge?

- What are the strengths and weaknesses of the research?

Make sure the sources you use are credible, and make sure you read any landmark studies and major theories in your field of research.

Take notes and cite your sources

As you read, you should also begin the writing process. Take notes that you can later incorporate into the text of your literature review.

It is important to keep track of your sources with citations to avoid plagiarism. It can be helpful to make an annotated bibliography, where you compile full citation information and write a paragraph of summary and analysis for each source. This helps you remember what you read and saves time later in the process.

Step 3 – Identify themes, debates, and gaps

To begin organizing your literature review’s argument and structure, be sure you understand the connections and relationships between the sources you’ve read. Based on your reading and notes, you can look for:

- Trends and patterns (in theory, method or results): do certain approaches become more or less popular over time?

- Themes: what questions or concepts recur across the literature?

- Debates, conflicts and contradictions: where do sources disagree?

- Pivotal publications: are there any influential theories or studies that changed the direction of the field?

- Gaps: what is missing from the literature? Are there weaknesses that need to be addressed?

This step will help you work out the structure of your literature review and (if applicable) show how your own research will contribute to existing knowledge.

- Most research has focused on young women.

- There is an increasing interest in the visual aspects of social media.

- But there is still a lack of robust research on highly visual platforms like Instagram and Snapchat—this is a gap that you could address in your own research.

Step 4 – Outline your literature review’s structure

There are various approaches to organizing the body of a literature review. Depending on the length of your literature review, you can combine several of these strategies (for example, your overall structure might be thematic, but each theme is discussed chronologically).

Chronological

The simplest approach is to trace the development of the topic over time. However, if you choose this strategy, be careful to avoid simply listing and summarizing sources in order.

Try to analyze patterns, turning points and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred.

Thematic

If you have found some recurring central themes, you can organize your literature review into subsections that address different aspects of the topic.

For example, if you are reviewing literature about inequalities in migrant health outcomes, key themes might include healthcare policy, language barriers, cultural attitudes, legal status, and economic access.

Methodological

If you draw your sources from different disciplines or fields that use a variety of research methods, you might want to compare the results and conclusions that emerge from different approaches. For example:

- Look at what results have emerged in qualitative versus quantitative research

- Discuss how the topic has been approached by empirical versus theoretical scholarship

- Divide the literature into sociological, historical, and cultural sources

Theoretical

A literature review is often the foundation for a theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts.

You might argue for the relevance of a specific theoretical approach, or combine various theoretical concepts to create a framework for your research.

Step 5 – Write your literature review

Like any other academic text, your literature review should have an introduction, a main body, and a conclusion. What you include in each depends on the objective of your literature review.

The introduction should clearly establish the focus and purpose of the literature review.

Depending on the length of your literature review, you might want to divide the body into subsections. You can use a subheading for each theme, time period, or methodological approach.

As you write, you can follow these tips:

- Summarize and synthesize: give an overview of the main points of each source and combine them into a coherent whole

- Analyze and interpret: don’t just paraphrase other researchers — add your own interpretations where possible, discussing the significance of findings in relation to the literature as a whole

- Critically evaluate: mention the strengths and weaknesses of your sources

- Write in well-structured paragraphs: use transition words and topic sentences to draw connections, comparisons and contrasts

In the conclusion, you should summarize the key findings you have taken from the literature and emphasize their significance.

When you’ve finished writing and revising your literature review, don’t forget to proofread thoroughly before submitting.

This article has been adapted into lecture slides that you can use to teach your students about writing a literature review.

Scribbr slides are free to use, customize, and distribute for educational purposes.

Frequently asked questions

A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question.

It is often written as part of a thesis, dissertation, or research paper, in order to situate your work in relation to existing knowledge.

There are several reasons to conduct a literature review at the beginning of a research project:

- To familiarize yourself with the current state of knowledge on your topic

- To ensure that you’re not just repeating what others have already done

- To identify gaps in knowledge and unresolved problems that your research can address

- To develop your theoretical framework and methodology

- To provide an overview of the key findings and debates on the topic

Writing the literature review shows your reader how your work relates to existing research and what new insights it will contribute.

The literature review usually comes near the beginning of your thesis or dissertation. After the introduction, it grounds your research in a scholarly field and leads directly to your theoretical framework or methodology.

A literature review is a survey of credible sources on a topic, often used in dissertations, theses, and research papers. Literature reviews give an overview of knowledge on a subject, helping you identify relevant theories and methods, as well as gaps in existing research. Literature reviews are set up similarly to other academic texts, with an introduction, a main body, and a conclusion.

An annotated bibliography is a list of source references that has a short description (called an annotation) for each of the sources. It is often assigned as part of the research process for a paper.

Cite this Scribbr article

McCombes, S. (2023, September 11). How to Write a Literature Review | Guide, Examples, & Templates. Scribbr. Retrieved August 19, 2024, from https://www.scribbr.com/dissertation/literature-review/

Literature Review Tips & Tools

Organizational Tools

- Bubbl.us Free online brainstorming/mindmapping tool that also has a free iPad app.

- Coggle Another free online mindmapping tool.

- Organization & Structure tips from Purdue University Online Writing Lab

- Literature Reviews from The Writing Center at University of North Carolina at Chapel Hill Gives several suggestions and descriptions of ways to organize your lit review.

Tools for Systematic Reviews

- Cochrane Handbook for Systematic Reviews of Interventions "The Cochrane Handbook for Systematic Reviews of Interventions is the official guide that describes in detail the process of preparing and maintaining Cochrane systematic reviews on the effects of healthcare interventions."

- Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) website "PRISMA is an evidence-based minimum set of items for reporting in systematic reviews and meta-analyses. PRISMA focuses on the reporting of reviews evaluating randomized trials, but can also be used as a basis for reporting systematic reviews of other types of research, particularly evaluations of interventions."

- PRISMA Flow Diagram Generator Free tool that will generate a PRISMA flow diagram from a CSV file (sample CSV template provided). Please cite as: Haddaway, N. R., Page, M. J., Pritchard, C. C., & McGuinness, L. A. (2022). PRISMA2020: An R package and Shiny app for producing PRISMA 2020-compliant flow diagrams, with interactivity for optimised digital transparency and Open Synthesis. Campbell Systematic Reviews, 18, e1230. https://doi.org/10.1002/cl2.1230

- Rayyan "Rayyan is a 100% FREE web application to help systematic review authors perform their job in a quick, easy and enjoyable fashion. Authors create systematic reviews, collaborate on them, maintain them over time and get suggestions for article inclusion."

- Covidence Covidence is a tool to help manage systematic reviews (and create PRISMA flow diagrams). Note: UMass Amherst doesn't subscribe, but Covidence offers a free trial for 1 review of no more than 500 records. It is also set up for researchers to pay for each review.

- PROSPERO - Systematic Review Protocol Registry "PROSPERO accepts registrations for systematic reviews, rapid reviews and umbrella reviews. PROSPERO does not accept scoping reviews or literature scans. Sibling PROSPERO sites registers systematic reviews of human studies and systematic reviews of animal studies."

- Critical Appraisal Tools from JBI Joanna Briggs Institute at the University of Adelaide provides these checklists to help evaluate different types of publications that could be included in a review.

- Systematic Review Toolbox "The Systematic Review Toolbox is a community-driven, searchable, web-based catalogue of tools that support the systematic review process across multiple domains. The resource aims to help reviewers find appropriate tools based on how they provide support for the systematic review process. Users can perform a simple keyword search (i.e. Quick Search) to locate tools, a more detailed search (i.e. Advanced Search) allowing users to select various criteria to find specific types of tools and submit new tools to the database. Although the focus of the Toolbox is on identifying software tools to support systematic reviews, other tools or support mechanisms (such as checklists, guidelines and reporting standards) can also be found."

- Abstrackr Free, open-source tool that "helps you upload and organize the results of a literature search for a systematic review. It also makes it possible for your team to screen, organize, and manipulate all of your abstracts in one place." -From Center for Evidence Synthesis in Health

- SRDR Plus (Systematic Review Data Repository: Plus) An open-source tool for extracting, managing, and archiving data, developed by the Center for Evidence Synthesis in Health at Brown University

- RoB 2 Tool (Risk of Bias for Randomized Trials) A revised Cochrane risk of bias tool for randomized trials

- Last Updated: Jul 30, 2024 9:23 AM

- URL: https://guides.library.umass.edu/litreviews

© 2022 University of Massachusetts Amherst • Site Policies • Accessibility

Writing in the Health and Social Sciences

Systematic Literature Reviews: Steps & Resources

Literature review & systematic review steps.

What are Systematic Reviews? (3 minutes, 24 second YouTube Video)

The steps for conducting a systematic literature review are listed below.

Also see subpages for more information about:

- The different types of literature reviews, including systematic reviews and other evidence synthesis methods

- Tools & Tutorials

- Develop a Focused Question

- Scope the Literature (Initial Search)

- Refine & Expand the Search

- Limit the Results

- Download Citations

- Abstract & Analyze

- Create Flow Diagram

- Synthesize & Report Results

1. Develop a Focused Question

Consider the PICO Format: Population/Problem, Intervention, Comparison, Outcome

Focus on defining the Population or Problem and Intervention (don't narrow by Comparison or Outcome just yet!)

"What are the effects of the Pilates method for patients with low back pain?"

Tools & Additional Resources:

- PICO Question Help

- Stillwell, Susan B., DNP, RN, CNE; Fineout-Overholt, Ellen, PhD, RN, FNAP, FAAN; Melnyk, Bernadette Mazurek, PhD, RN, CPNP/PMHNP, FNAP, FAAN; Williamson, Kathleen M., PhD, RN Evidence-Based Practice, Step by Step: Asking the Clinical Question, AJN The American Journal of Nursing : March 2010 - Volume 110 - Issue 3 - p 58-61 doi: 10.1097/01.NAJ.0000368959.11129.79

2. Scope the Literature

A "scoping search" investigates the breadth and/or depth of the initial question or may identify a gap in the literature.

Eligible studies may be located by searching in:

- Background sources (books, point-of-care tools)

- Article databases

- Trial registries

- Grey literature

- Cited references

- Reference lists

When searching, if possible, translate terms to controlled vocabulary of the database. Use text word searching when necessary.

Use Boolean operators to connect search terms:

- Combine separate concepts with AND (resulting in a narrower search)

- Connect synonyms with OR (resulting in an expanded search)

Search: pilates AND ("low back pain" OR backache)
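
The AND/OR pattern in this search can be assembled programmatically, which helps when the same concept lists have to be reused across several databases. The sketch below is a minimal illustration; `quote` and `build_query` are hypothetical helper names, and real databases differ in their phrase, truncation, and field-tag syntax, so the output may need adjusting for each one.

```python
def quote(term):
    """Wrap multi-word phrases in double quotes so they are searched as a phrase."""
    return f'"{term}"' if " " in term else term

def build_query(concepts):
    """Join the synonyms of each concept with OR, then join the concepts with AND."""
    groups = []
    for synonyms in concepts:
        terms = [quote(t) for t in synonyms]
        if len(terms) == 1:
            groups.append(terms[0])
        else:
            groups.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(groups)

query = build_query([["pilates"], ["low back pain", "backache"]])
print(query)  # pilates AND ("low back pain" OR backache)
```

Keeping each concept as its own list makes it straightforward to add synonyms later without rewriting the whole search string.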

Video Tutorials - Translating PICO Questions into Search Queries

- Translate Your PICO Into a Search in PubMed (YouTube, Carrie Price, 5:11)

- Translate Your PICO Into a Search in CINAHL (YouTube, Carrie Price, 4:56)

3. Refine & Expand Your Search

Expand your search strategy with synonymous search terms harvested from:

- database thesauri

- reference lists

- relevant studies

Example:

(pilates OR exercise movement techniques) AND ("low back pain" OR backache* OR sciatica OR lumbago OR spondylosis)

As you develop a final, reproducible strategy for each database, save your strategies in:

- a personal database account (e.g., MyNCBI for PubMed)

- Log in with your NYU credentials

- Open and "Make a Copy" to create your own tracker for your literature search strategies

4. Limit Your Results

Use database filters to limit your results based on your defined inclusion/exclusion criteria. In addition to relying on the databases' categorical filters, you may also need to manually screen results.

- Limit to article type, e.g., "randomized controlled trial" OR multicenter study

- Limit by publication years, age groups, language, etc.

NOTE: Many databases allow you to filter to "Full Text Only". This filter is not recommended. It excludes articles if their full text is not available in that particular database (CINAHL, PubMed, etc.), but if the article is relevant, it is important that you are able to read its title and abstract, regardless of "full text" status. The full text is likely to be accessible through another source (a different database, or Interlibrary Loan).

- Filters in PubMed

- CINAHL Advanced Searching Tutorial
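
When results have been exported for manual screening, the same inclusion/exclusion criteria can be applied in a short script. The following is a sketch under assumptions: the record fields (`year`, `language`, `article_type`) and the function names are invented for illustration and will not match any particular database export without adaptation. In line with the note above, it deliberately does not filter on full-text availability.

```python
def meets_criteria(record, years, languages, article_types):
    """Check one exported record against the review's inclusion criteria."""
    first_year, last_year = years
    return (
        first_year <= record["year"] <= last_year
        and record["language"] in languages
        and record["article_type"] in article_types
    )

def split_results(records, **criteria):
    """Return (included, excluded) so that excluded records can still be counted."""
    included, excluded = [], []
    for record in records:
        target = included if meets_criteria(record, **criteria) else excluded
        target.append(record)
    return included, excluded
```

Keeping the excluded records, rather than discarding them, preserves the numbers needed later when reporting how many records were removed at each stage.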

5. Download Citations

Selected citations and/or entire sets of search results can be downloaded from the database into a citation management tool. If you are conducting a systematic review that will require reporting according to PRISMA standards, a citation manager can help you keep track of the number of articles that came from each database, as well as the number of duplicate records.

In Zotero, you can create a Collection for the combined results set, and sub-collections for the results from each database you search. You can then use Zotero's "Duplicate Items" function to find and merge duplicate records.

- Citation Managers - General Guide
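
To make the bookkeeping concrete, here is a rough Python sketch of counting records per database and removing duplicates. It is not how Zotero detects duplicates; the matching rule (DOI if present, otherwise a normalized title) and the field names `doi`, `title`, and `database` are assumptions chosen for illustration.

```python
import re
from collections import Counter

def record_key(record):
    """Build a matching key: the DOI when available, otherwise a normalized title."""
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        return "doi:" + doi
    title = re.sub(r"[^a-z0-9]+", " ", record["title"].lower()).strip()
    return "title:" + title

def deduplicate(records):
    """Return (unique_records, records_per_database, number_of_duplicates)."""
    per_database = Counter(r["database"] for r in records)
    unique = {}
    for r in records:
        # Keep the first record seen for each key; later matches are duplicates.
        unique.setdefault(record_key(r), r)
    duplicates = len(records) - len(unique)
    return list(unique.values()), per_database, duplicates
```

One limitation of this simple rule is that a record exported with a DOI will not match a copy of the same article exported without one, so a manual check of the remaining records is still worthwhile.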

6. Abstract and Analyze

- Migrate citations to data collection/extraction tool

- Screen Title/Abstracts for inclusion/exclusion

- Screen and appraise full text for relevance and methods

- Resolve disagreements by consensus
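The screening steps above can be sketched as a comparison of independent decisions. The example assumes two reviewers and simple "include"/"exclude" labels, both of which are assumptions for illustration; the record IDs and the function name are hypothetical.

```python
# Hypothetical decisions from two independent reviewers, keyed by record ID.
reviewer_a = {"rec1": "include", "rec2": "exclude", "rec3": "include", "rec4": "exclude"}
reviewer_b = {"rec1": "include", "rec2": "include", "rec3": "include", "rec4": "exclude"}


def split_decisions(a: dict, b: dict):
    """Separate records the reviewers agree on from those needing discussion."""
    agreed, conflicts = {}, []
    for record_id in sorted(a.keys() & b.keys()):
        if a[record_id] == b[record_id]:
            agreed[record_id] = a[record_id]
        else:
            conflicts.append(record_id)
    return agreed, conflicts


agreed, conflicts = split_decisions(reviewer_a, reviewer_b)
print("Agreed:", agreed)
print("To resolve by consensus:", conflicts)
```

Only the records in the conflict list need a consensus discussion; the agreed decisions move on to the next phase.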

Covidence is a web-based tool that enables you to work with a team to screen titles/abstracts and full text for inclusion in your review, as well as extract data from the included studies.

- Covidence Support

- Critical Appraisal Tools

- Data Extraction Tools

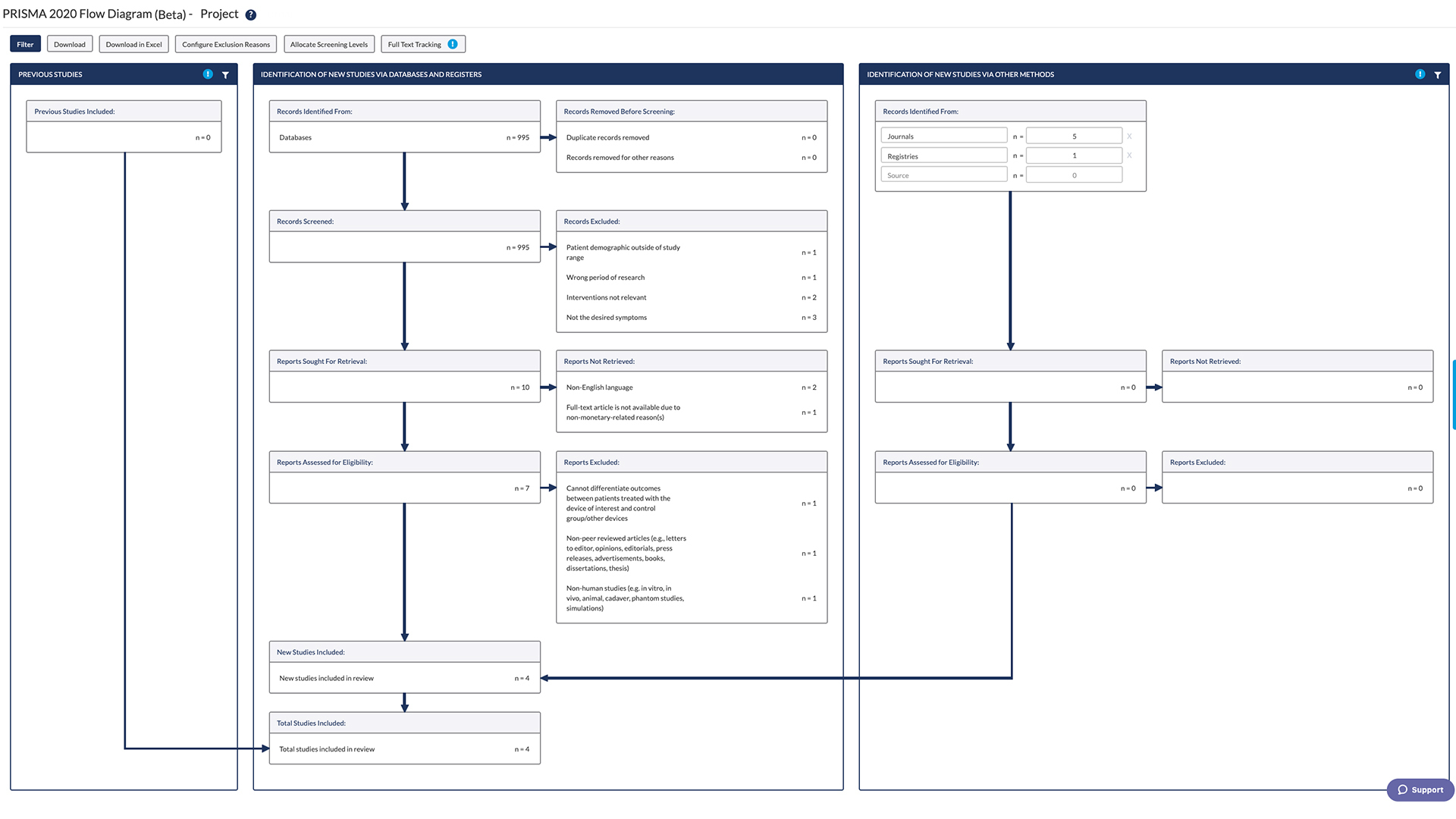

7. Create Flow Diagram

The PRISMA (Preferred Reporting Items for Systematic reviews and Meta-Analyses) flow diagram is a visual representation of the flow of records through different phases of a systematic review. It depicts the number of records identified, included and excluded. It is best used in conjunction with the PRISMA checklist.

Example from: Stotz, S. A., McNealy, K., Begay, R. L., DeSanto, K., Manson, S. M., & Moore, K. R. (2021). Multi-level diabetes prevention and treatment interventions for Native people in the USA and Canada: A scoping review. Current Diabetes Reports, 21(11), 46. https://doi.org/10.1007/s11892-021-01414-3

- PRISMA Flow Diagram Generator (ShinyApp.io, Haddaway et al.)

- PRISMA Diagram Templates (Word and PDF)

- Make a copy of the file to fill out the template

- Image can be downloaded as PDF, PNG, JPG, or SVG

- Covidence generates a PRISMA diagram that is automatically updated as records move through the review phases
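The arithmetic behind the flow diagram can be checked before you fill in a template. The sketch below is a simplified model with only four inputs; the full PRISMA diagram has further boxes, and the class name, field names, and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PrismaCounts:
    """Simplified record flow; all numbers are supplied by the reviewer."""
    identified: int
    duplicates_removed: int
    excluded_title_abstract: int
    excluded_full_text: int

    @property
    def screened(self) -> int:
        return self.identified - self.duplicates_removed

    @property
    def full_text_assessed(self) -> int:
        return self.screened - self.excluded_title_abstract

    @property
    def included(self) -> int:
        return self.full_text_assessed - self.excluded_full_text

    def check(self) -> None:
        """Raise if any stage would end up with a negative count."""
        for name in ("screened", "full_text_assessed", "included"):
            if getattr(self, name) < 0:
                raise ValueError(f"{name} is negative; recheck the counts")


# Hypothetical numbers for illustration only.
counts = PrismaCounts(identified=250, duplicates_removed=40,
                      excluded_title_abstract=150, excluded_full_text=45)
counts.check()
print(counts.screened, counts.full_text_assessed, counts.included)
```

If the counts at one stage do not add up, the error usually traces back to records that were excluded without a recorded reason.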

8. Synthesize & Report Results

There are a number of reporting guidelines available to guide the synthesis and reporting of results in systematic literature reviews.

It is common to organize findings in a matrix, also known as a Table of Evidence (ToE).

- Reporting Guidelines for Systematic Reviews

- Download a sample template of a health sciences review matrix (GoogleSheets)
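If you prefer to build the matrix yourself, a Table of Evidence is simply one row per included study with the same columns throughout. The sketch below writes such a matrix as CSV text. The column set is hypothetical and the single row is a placeholder, not data from a real study.

```python
import csv
import io

# Hypothetical column set; adapt it to your review question.
COLUMNS = ["citation", "design", "sample", "key_findings", "limitations"]


def matrix_to_csv(rows: list[dict]) -> str:
    """Return the matrix as CSV text, one row per included study."""
    for row in rows:
        missing = [c for c in COLUMNS if c not in row]
        if missing:
            raise ValueError(f"row is missing columns: {missing}")
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Placeholder entry; replace with details extracted from a real study.
rows = [{
    "citation": "Author (Year)",
    "design": "study design",
    "sample": "sample description",
    "key_findings": "main results",
    "limitations": "noted limitations",
}]
matrix_csv = matrix_to_csv(rows)
print(matrix_csv)
```

The resulting text can be saved with a .csv extension and opened in any spreadsheet program.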

Steps modified from:

Cook, D. A., & West, C. P. (2012). Conducting systematic reviews in medical education: a stepwise approach. Medical Education, 46(10), 943–952.

- Last Updated: Aug 13, 2024 4:59 PM

- URL: https://guides.nyu.edu/healthwriting

10 Open Science Tools for Literature Review You Should Know about

Here are 10 literature search tools that will make your scientific literature search faster and more convenient. All of the presented literature review software is free and follows Open Science principles.

Traditionally, scientific literature has been tucked away behind paywalls of academic publishers. Not only is the access to papers often restricted, but subscriptions are required to use many scientific search engines. This practice discriminates against universities and institutions that cannot afford the licenses, e.g. in low-income countries. Closed publishing also makes it hard for people not affiliated with research institutes, such as freelance journalists or the public, to learn about scientific discoveries.

The proportion of research accessible publicly today at no cost varies between disciplines. While in the biomedical sciences and mathematics, the majority of research published between 2009 and 2015 was openly accessible, this held true only for around 15 percent of publications in chemistry. Luckily, the interest in open access publishing is steadily increasing and has gained momentum in the past decade or so.

Many governmental funding bodies around the world nowadays require science resulting from grant money they provided to be available publicly for free. The exact requirements vary and UNESCO is currently developing a framework that specifies standards for the whole area of Open Science.

Once I started my research on the topic, I was astonished by just how many free Open Science tools for literature review already exist! Read on below for 10 literature search tools: from search engines for research papers, to literature review software that helps you quickly find open access versions of papers, to tools that help you save the correct citation in one click.

Tools for Literature review

First, an overview of the literature search tools in this blog post:

- ScienceOpen

- The Lens

- Citation Gecko

- Local Citation Network

- ResearchRabbit

- Open Access Button

- Unpaywall

- EndNote Click

- Read by QxMD

- CiteAs

I divided the tools into four categories:

- Search engines for research papers

- Literature review software based on citation networks

- Locating open access scientific papers, and

- Other tools that help in the literature review

Here we go!

Search engines for research papers

The best place to start a scientific literature search is with a search engine for research papers. Here are two you might not have heard of!

ScienceOpen

Want to perform a literature search without paying for Web of Science or Scopus, or are you tired of the limited functionality of the free Google Scholar? ScienceOpen is many things, among them a search engine for research papers. Despite being owned by a private company, this scientific search engine is freely accessible and has a visually appealing, functional design. Search results are clearly labelled by type of publication, number of citations, altmetric scores, etc., and can be filtered. You can also access citation metrics, i.e., display which publications have cited a certain paper.

The Lens

Recommended by a reader of the blog (thank you!), the Lens is a search tool that lets you search not only the scholarly literature but patents too! Millions of patents from over 95 jurisdictions can be searched. The Lens is run by the non-profit social enterprise Cambia. The search engine is free to use for the public, though fees apply for commercial use and for additional functionality.

Literature review software based on citation networks

The tools in the next category are a bit more advanced than a simple search engine for research papers. These literature search tools help you discover scientific literature you may have missed by visualising citation networks.

Citation Gecko

The literature search tool Citation Gecko is an open source web app that makes it easier to discover relevant scientific literature than your average keyword-based search engine for research papers. It works in the following way: First you upload about 5-6 “seed papers”. The program then extracts all references in and to these seed papers and creates a visual citation network. Nodes are coloured according to whether a paper cites a seed paper or is cited by one, and sized according to the number of such connections. By combing through the citation network, you can discover new papers that may be relevant for your scientific literature search. You can also expand your citation network step by step by including more seed papers.

This literature review tool was developed by Barney Walker, and the underlying citation data is provided by Crossref and Open Citations.
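To illustrate the idea behind a seed-paper citation network, here is a minimal sketch in Python. It is not Citation Gecko's code: the reference lists are hand-written and hypothetical, whereas the real tool draws on Crossref and Open Citations data. It counts, for each paper outside the seed set, how many seed papers cite it and how many seed papers it cites.

```python
from collections import Counter

# Hypothetical reference lists: paper -> papers it cites.
references = {
    "seed1": ["A", "B"],
    "seed2": ["A", "C"],
    "seed3": ["A", "B"],
    "X": ["seed1", "seed2"],
    "Y": ["seed1"],
}
seeds = {"seed1", "seed2", "seed3"}


def rank_candidates(references: dict, seeds: set):
    """Count links between seed papers and papers outside the seed set."""
    cited_by_seeds = Counter()  # older work that the seeds build on
    citing_seeds = Counter()    # newer work that builds on the seeds
    for paper, cited in references.items():
        for target in set(cited):
            if paper in seeds and target not in seeds:
                cited_by_seeds[target] += 1
            elif paper not in seeds and target in seeds:
                citing_seeds[paper] += 1
    return cited_by_seeds, citing_seeds


cited_by_seeds, citing_seeds = rank_candidates(references, seeds)
print(cited_by_seeds.most_common())
print(citing_seeds.most_common())
```

Papers connected to many seeds are the ones worth checking first, which is what the larger nodes in the visual network represent.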

Local Citation Network

Similar to Citation Gecko, Local Citation Network is an open source tool that works as a scientific search engine on steroids. Local Citation Network was developed by physician-scientist Tim Wölfle. This literature review tool works best if you feed it with a larger library of seed papers than required for Citation Gecko. Therefore, Wölfle recommends using it at the end of your scientific literature search to identify papers you may have missed.

ResearchRabbit

As an alternative to the literature search tools Citation Gecko and Local Citation Network, a reader of the blog recommended ResearchRabbit. It’s free to use and looks like a versatile piece of literature review software helping you build your own citation network. ResearchRabbit lets you add labels to the entries in your citation network, download PDFs of papers and sign up for email alerts for new papers related to your research topic. Instead of a tool to use only once during your scientific literature search, ResearchRabbit seems to function more like a private scientific library storing (and connecting) all the papers in your field.

Run by (former) researchers and engineers, ResearchRabbit is partly financed through donations, but their website does not state where the core funding of this literature review software originates.

Locating open access scientific papers

You may face the problem in your scientific literature search that you don’t have access to every research paper you are interested in. I highly recommend installing at least one of the open access tools below so you can quickly locate freely accessible versions of the scientific literature if available anywhere.

Open Access Button

Open Access Button works like the scientific search engine Sci-Hub but is legal: you enter the DOI, link or citation of a paper, and the literature review tool displays the paper if it is freely accessible anywhere. To find an open access version, Open Access Button searches thousands of repositories, for example preprint servers, authors’ personal pages, open access journals and other aggregators such as the COnnecting REpositories service (CORE) based at The Open University in the UK, the EU-funded OpenAire infrastructure, and the US community initiative Share.

If the article you are looking for isn’t freely available, Open Access Button asks the author to share it to a repository. You can enter your email address to be notified once it has become available.

Open Access Button is also available as a browser plugin, which means that a button appears next to an article whenever a free version is available. This search engine for research papers is funded by non-profit foundations and is open source.
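A tool that accepts a DOI, a link or a citation first has to find the identifier in whatever you typed. The sketch below shows one plausible way to do that with a regular expression. It is an assumption for illustration, not how Open Access Button is implemented, and the pattern covers the common DOI shape rather than every valid DOI.

```python
import re
from typing import Optional

# Common DOI shape: "10.", a 4-9 digit registrant code, "/", then a suffix.
DOI_PATTERN = re.compile(r'10\.\d{4,9}/[^\s"<>]+')


def extract_doi(text: str) -> Optional[str]:
    """Return the first DOI found in a bare DOI, a link, or a citation."""
    match = DOI_PATTERN.search(text)
    if match is None:
        return None
    # Drop punctuation that usually belongs to the surrounding sentence.
    return match.group(0).rstrip(".,;)").lower()


print(extract_doi("https://doi.org/10.1007/s11892-021-01414-3"))
print(extract_doi("Current Diabetes Reports. https://doi.org/10.1007/s11892-021-01414-3."))
print(extract_doi("a citation without an identifier"))
```

With the DOI in hand, a lookup service can check its index of repositories; input without a DOI would need a title search instead.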

Unpaywall

Unpaywall is a search engine for research papers similar to Open Access Button, but it is only available as a browser plugin. If the article you are looking at is behind a paywall but freely accessible somewhere else, a green button appears on the right side of the article. I installed it recently and regret not having done it sooner; it works really smoothly! I think the plugin is a great help in your scientific literature search.

Unpaywall is run by the non-profit organisation Our Research, which has created a fleet of open science tools.

EndNote Click

Another browser extension that lets you access the scientific literature for free if available is EndNote Click (formerly Kopernio). EndNote Click claims to be faster than other search engines for research papers by bypassing redirects and verification steps. I personally don’t find the Unpaywall or Open Access Button plugins inconvenient to use, but I’d encourage you to try out all of these scientific search engines and see what works best for you.

One advantage of EndNote Click that a reader of the blog told me about is the side bar that appears when opening a paper through the plugin. It lets you, for example, save citations quickly, avoiding time-consuming searches on publishers’ websites.

Like the reference manager EndNote, EndNote Click is part of the research analytics company Clarivate.

Other tools for literature review

This last category of literature search tools features a tool that creates a personalised feed of scientific literature for you and another that makes citing the scientific literature effortless.

Read by QxMD

Available as an app or in a browser window, the literature review tool Read lets you create a personalised feed that is updated daily with new papers on research topics or from journals of your choice. If there is an openly accessible version of an article, you can read it with one click. If your institution has journal subscriptions, you can also link them to your Read profile. Read has been created by the company QxMD and is free to use.

CiteAs

Have you discovered a promising paper in your scientific literature search and want to cite it? CiteAs is a convenient literature review tool to obtain the correct citation for any publication, preprint, software or dataset in one click. Funded by the Alfred P. Sloan Foundation, CiteAs is operated partly by the non-profit Our Research.

Beyond literature review tools

There you have it, 10 tools for literature review that are all completely free and follow Open Science principles.

Of course, finding a great literature review tool, such as a search engine for research papers or a citation tool, is only one part of the whole process of writing a scientific paper. If you would like to learn a complete process to write a scientific article step by step, then you’ll love our free training. Simply click on the orange button below to watch it now (or sign up to watch it later).

© Copyright 2018-2024 by Anna Clemens. All Rights Reserved.

Photography by Alice Dix

RAxter is now Enago Read! Enjoy the same licensing and pricing with enhanced capabilities. No action required for existing customers.

Your all in one AI-powered Reading Assistant

A Reading Space to Ideate, Create Knowledge, and Collaborate on Your Research