
Coursera: Machine Learning - All weeks solutions [Assignment + Quiz] - Andrew NG

![Coursera: Machine Learning - All weeks solutions [Assignment + Quiz] - Andrew NG Coursera: Machine Learning - All weeks solutions [Assignment + Quiz] - Andrew NG](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEih0B0NpVY1WWh5W-aPiZ5fckewDLdTtMqzpdakJYFDfq421qiuG_LyCqsaUXx-1Eqjb65iUZUtLzYTInm6843femcLYi3owzOlYr2qvS7XRvlrkLbRLK-vPoqV5xw-Tl68jidbeUT650I/w320-h200/Coursera+Machine+Learning+-+All+weeks+solutions+%255BAssignment+%252B+Quiz%255D+-+Andrew+NG.webp)

Recommended Machine Learning Courses:

- Coursera: Machine Learning
- Coursera: Deep Learning Specialization
- Coursera: Machine Learning with Python
- Coursera: Advanced Machine Learning Specialization
- Udemy: Machine Learning
- LinkedIn: Machine Learning
- Eduonix: Machine Learning
- edX: Machine Learning
- Fast.ai: Introduction to Machine Learning for Coders

=== Week 1 ===

Assignments:

- No Assignment for Week 1

- Machine Learning (Week 1) Quiz ▸ Introduction

- Machine Learning (Week 1) Quiz ▸ Linear Regression with One Variable

- Machine Learning (Week 1) Quiz ▸ Linear Algebra

=== Week 2 ===

Assignments:

- Machine Learning (Week 2) [Assignment Solution] ▸ Linear regression and get to see it work on data.

- Machine Learning (Week 2) Quiz ▸ Linear Regression with Multiple Variables

- Machine Learning (Week 2) Quiz ▸ Octave / Matlab Tutorial

=== Week 3 ===

- Machine Learning (Week 3) [Assignment Solution] ▸ Logistic regression and apply it to two different datasets

- Machine Learning (Week 3) Quiz ▸ Logistic Regression

- Machine Learning (Week 3) Quiz ▸ Regularization

=== Week 4 ===

- Machine Learning (Week 4) [Assignment Solution] ▸ One-vs-all logistic regression and neural networks to recognize hand-written digits.

- Machine Learning (Week 4) Quiz ▸ Neural Networks: Representation

=== Week 5 ===

- Machine Learning (Week 5) [Assignment Solution] ▸ Back-propagation algorithm for neural networks to the task of hand-written digit recognition.

- Machine Learning (Week 5) Quiz ▸ Neural Networks: Learning

=== Week 6 ===

- Machine Learning (Week 6) [Assignment Solution] ▸ Regularized linear regression to study models with different bias-variance properties.

- Machine Learning (Week 6) Quiz ▸ Advice for Applying Machine Learning

- Machine Learning (Week 6) Quiz ▸ Machine Learning System Design

=== Week 7 ===

- Machine Learning (Week 7) [Assignment Solution] ▸ Support vector machines (SVMs) to build a spam classifier.

- Machine Learning (Week 7) Quiz ▸ Support Vector Machines

=== Week 8 ===

- Machine Learning (Week 8) [Assignment Solution] ▸ K-means clustering algorithm to compress an image. ▸ Principal component analysis to find a low-dimensional representation of face images.

- Machine Learning (Week 8) Quiz ▸ Unsupervised Learning

- Machine Learning (Week 8) Quiz ▸ Principal Component Analysis

=== Week 9 ===

- Machine Learning (Week 9) [Assignment Solution] ▸ Anomaly detection algorithm to detect failing servers on a network. ▸ Collaborative filtering to build a recommender system for movies.

- Machine Learning (Week 9) Quiz ▸ Anomaly Detection

- Machine Learning (Week 9) Quiz ▸ Recommender Systems

=== Week 10 ===

- No Assignment for Week 10

- Machine Learning (Week 10) Quiz ▸ Large Scale Machine Learning

=== Week 11 ===

- No Assignment for Week 11

- Machine Learning (Week 11) Quiz ▸ Application: Photo OCR Variables

Question 5: Your friend in the U.S. gives you a simple regression fit for predicting house prices from square feet. The estimated intercept is -44850 and the estimated slope is 280.76. You believe that your housing market behaves very similarly, but houses are measured in square meters. To make predictions for inputs in square meters, what intercept must you use? Hint: there are 0.092903 square meters in 1 square foot. You do not need to round your answer. (Note: the next quiz question will ask for the slope of the new model.) I didn't get the answer for this. Could anyone please help me with it?
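One way to reason about the question above, sketched under the assumption that the fitted model has the form price = intercept + slope × area. Converting the input unit only rescales the slope, so the intercept stays the same. The function name `convert_model_to_square_meters` is made up for this illustration.

```python
SQM_PER_SQFT = 0.092903  # conversion factor taken from the hint in the question


def convert_model_to_square_meters(intercept, slope_per_sqft):
    """Re-express price = intercept + slope * sqft for inputs given in square meters."""
    # sqft = sqm / SQM_PER_SQFT, so only the slope picks up the conversion factor.
    slope_per_sqm = slope_per_sqft / SQM_PER_SQFT
    return intercept, slope_per_sqm


intercept_sqm, slope_sqm = convert_model_to_square_meters(-44850.0, 280.76)
print(intercept_sqm)  # the intercept is unchanged by the unit change
print(slope_sqm)      # slope per square meter
```

A quick sanity check is that a 1000 square foot house (92.903 square meters) gets the same predicted price from both versions of the model.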

Please comment on the specific week's quiz blog post so that I can keep updating that post with new questions and answers.


Good day Akshay, I trust that you are doing well. I am struggling to pass the week 2 assignment. Can you please assist me? I am desperate to pass this module and I am only getting 0%... Thank you, I would really appreciate your help.


Assignments


There will be one homework (HW) for each topical unit of the course, due about a week after we finish that unit.

These are intended to build your conceptual analysis skills plus your implementation skills in Python.

- HW0 : Numerical Programming Fundamentals

- HW1 : Regression, Cross-Validation, and Regularization

- HW2 : Evaluating Binary Classifiers and Implementing Logistic Regression

- HW3 : Neural Networks and Stochastic Gradient Descent

- HW4 : Trees

- HW5 : Kernel Methods and PCA

After completing each unit, there will be a 20 minute quiz (taken online via gradescope).

Each quiz will be designed to assess your conceptual understanding about each unit.

Probably 10 questions. Most questions will be true/false or multiple choice, with perhaps 1-3 short answer questions.

You can view the conceptual questions in each unit's in-class demos/labs and homework as good practice for the corresponding quiz.

There will be three larger "projects" throughout the semester:

- Project A: Classifying Images with Feature Transformations

- Project B: Classifying Sentiment from Text Reviews

- Project C: Recommendation Systems for Movies

Projects are meant to be open-ended and encourage creativity. They are meant to be case studies of applications of the ML concepts from class to three "real world" use cases: image classification, text classification, and recommendations of movies to users.

Each project will be due approximately 4 weeks after being handed out. Start early! Do not wait until the last few days.

Projects will generally be centered around a particular methodology for solving a specific task and involve significant programming (with some combination of developing core methods from scratch or using existing libraries). You will need to consider some conceptual issues, write a program to solve the task, and evaluate your program through experiments to compare the performance of different algorithms and methods.

Your main deliverable will be a short report (2-4 pages), describing your approach and providing several figures/tables to explain your results to the reader.

You’ll be assessed on effort, the sophistication of your technical approach, the clarity of your explanations, the evidence that you present to support your evaluative claims, and the performance of your implementation. A high-performing approach with little explanation will receive little credit, while a careful set of experiments that illuminate why a particular direction turned out to be a dead end may receive close to full credit.

Introduction to Machine Learning | Week 5

Session: JAN-APR 2024

Course name: Introduction to Machine Learning


These are Introduction to Machine Learning Week 5 Assignment 5 Answers

Q1. Consider a feedforward neural network that performs regression on a $p$-dimensional input to produce a scalar output. It has $m$ hidden layers and each of these layers has $k$ hidden units. What is the total number of trainable parameters in the network? Ignore the bias terms.

- $pk + mk^2$
- $pk + mk^2 + k$
- $pk + (m-1)k^2 + k$
- $p^2 + (m-1)pk + k$
- $p^2 + (m-1)pk + k^2$

Answer: $pk + (m-1)k^2 + k$
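The count can be checked by summing the sizes of the weight matrices between consecutive layers. The sketch below is only an illustration; the helper names `count_weights` and `closed_form` and the example values of p, m and k are assumptions, not part of the assignment.

```python
def count_weights(layer_sizes):
    """Number of weights (biases ignored) in a fully connected feedforward network."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


def closed_form(p, m, k):
    """Input-to-hidden (p*k) + hidden-to-hidden ((m-1)*k*k) + hidden-to-output (k)."""
    return p * k + (m - 1) * k * k + k


p, m, k = 10, 3, 4                 # arbitrary example sizes
sizes = [p] + [k] * m + [1]        # p inputs, m hidden layers of k units, 1 output
print(count_weights(sizes), closed_form(p, m, k))
```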

Q2. Consider a neural network layer defined as $y = \mathrm{ReLU}(Wx)$. Here $x \in \mathbb{R}^p$ is the input, $y \in \mathbb{R}^d$ is the output and $W \in \mathbb{R}^{d \times p}$ is the parameter matrix. The ReLU activation (defined as $\mathrm{ReLU}(z) := \max(0, z)$ for a scalar $z$) is applied element-wise to $Wx$. Find $\frac{\partial y_i}{\partial W_{ij}}$ where $i = 1, \dots, d$ and $j = 1, \dots, p$. In the following options, $I(\text{condition})$ is an indicator function that returns 1 if the condition is true and 0 if it is false.

- $I(y_i > 0)\, x_i$
- $I(y_i > 0)\, x_j$
- $I(y_i \le 0)\, x_i$
- $I(y_i > 0)\, W_{ij} x_j$
- $I(y_i \le 0)\, W_{ij} x_i$

Answer: $I(y_i > 0)\, x_j$
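A finite-difference check is one way to convince yourself of this derivative. The sketch below compares the indicator-times-input formula with a central-difference estimate; the weight matrix `W`, the input `x` and the helper names are made-up illustration values, chosen so that no pre-activation sits exactly at the ReLU kink.

```python
def relu_layer(W, x):
    """y_i = max(0, sum_j W[i][j] * x[j])."""
    return [max(0.0, sum(w * xj for w, xj in zip(row, x))) for row in W]


def analytic_grad(W, x, i, j):
    """dy_i/dW_ij = I(y_i > 0) * x_j."""
    return x[j] if relu_layer(W, x)[i] > 0 else 0.0


def numeric_grad(W, x, i, j, eps=1e-6):
    """Central-difference estimate of dy_i/dW_ij."""
    W_plus = [row[:] for row in W]
    W_minus = [row[:] for row in W]
    W_plus[i][j] += eps
    W_minus[i][j] -= eps
    return (relu_layer(W_plus, x)[i] - relu_layer(W_minus, x)[i]) / (2 * eps)


W = [[0.5, 1.0, 2.0], [-1.0, 0.2, -0.3]]  # first unit active, second inactive
x = [1.0, 2.0, 0.5]
for i in range(2):
    for j in range(3):
        print(i, j, analytic_grad(W, x, i, j), round(numeric_grad(W, x, i, j), 6))
```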

Q3. Consider a two-layered neural network $y = \sigma(W^{(B)} \sigma(W^{(A)} x))$. Let $h = \sigma(W^{(A)} x)$ denote the hidden layer representation. $W^{(A)}$ and $W^{(B)}$ are arbitrary weights. Which of the following statement(s) is/are true? Note: $\nabla_g(f)$ denotes the gradient of $f$ w.r.t. $g$.

- A. $\nabla_h(y)$ depends on $W^{(A)}$.
- B. $\nabla_{W^{(A)}}(y)$ depends on $W^{(B)}$.
- C. $\nabla_{W^{(A)}}(h)$ depends on $W^{(B)}$.
- D. $\nabla_{W^{(B)}}(y)$ depends on $W^{(A)}$.

Answer: B, D

Q4. Which of the following statement(s) about the initialization of neural network weights is/are true?

- A. Two different initializations of the same network could converge to different minima.
- B. For a given initialization, gradient descent will converge to the same minima irrespective of the learning rate.
- C. The weights should be initialized to a constant value.
- D. The initial values of the weights should be sampled from a probability distribution.

Answer: A, D

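Q5. Consider the following statements about the derivatives of the sigmoid ($\sigma(x) = \frac{1}{1 + \exp(-x)}$) and tanh ($\tanh(x) = \frac{\exp(x) - \exp(-x)}{\exp(x) + \exp(-x)}$) activation functions. Which of these statement(s) is/are correct?

- A. $0 < \sigma'(x) \le \frac{1}{8}$
- B. $\lim_{x \to -\infty} \sigma'(x) = 0$
- C. $0 < \tanh'(x) \le 1$
- D. $\lim_{x \to +\infty} \tanh'(x) = 1$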

Answer: B, C
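These statements can be explored numerically using the standard identities $\sigma'(x) = \sigma(x)(1 - \sigma(x))$ and $\tanh'(x) = 1 - \tanh^2(x)$. The snippet below is a rough check on a grid of points, not a proof; the grid range and the helper names are arbitrary choices.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_prime(x):
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_prime(x):
    return 1.0 - math.tanh(x) ** 2


xs = [i / 10 for i in range(-100, 101)]      # grid on [-10, 10], includes x = 0
max_sigmoid_prime = max(sigmoid_prime(x) for x in xs)
max_tanh_prime = max(tanh_prime(x) for x in xs)
print(max_sigmoid_prime)   # largest sigmoid slope on the grid (reached at x = 0)
print(max_tanh_prime)      # largest tanh slope on the grid (reached at x = 0)
print(sigmoid_prime(-20.0), tanh_prime(20.0))  # both derivatives vanish in the tails
```

The sigmoid slope peaks at 1/4 rather than 1/8, and the tanh slope tends to 0 (not 1) for large inputs, which is consistent with the listed answer.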

Q6. A geometric distribution is defined by the p.m.f. $f(x; p) = (1 - p)^{x - 1} p$ for $x = 1, 2, \dots$. Given the samples [4, 5, 6, 5, 4, 3] drawn from this distribution, find the MLE of $p$. Using this estimate, find the probability of sampling $x \ge 5$ from the distribution.

- 0.289
- 0.325
- 0.417
- 0.366

Answer: 0.366
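A short worked check of this answer: for the geometric p.m.f. above, the MLE is the reciprocal of the sample mean, and $P(x \ge 5) = (1 - p)^4$ because the first four trials must all fail. The variable names are illustrative.

```python
samples = [4, 5, 6, 5, 4, 3]

# MLE for the geometric distribution: p_hat = n / sum(x_i) = 1 / sample mean
p_hat = len(samples) / sum(samples)

# P(x >= 5) means the first four trials are all failures
prob_at_least_5 = (1 - p_hat) ** 4

print(round(p_hat, 4), round(prob_at_least_5, 3))  # 0.2222 0.366
```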

Q7. Consider a Bernoulli distribution with $p = 0.7$ (true value of the parameter). We draw samples from this distribution and compute an MAP estimate of $p$ by assuming a prior distribution over $p$. Which of the following statement(s) is/are true?

- A. If the prior is Beta(2,6), we will likely require fewer samples for converging to the true value than if the prior is Beta(6,2).
- B. If the prior is Beta(6,2), we will likely require fewer samples for converging to the true value than if the prior is Beta(2,6).
- C. With a prior of Beta(2,100), the estimate will never converge to the true value, regardless of the number of samples used.
- D. With a prior of U(0,0.5) (i.e. uniform distribution between 0 and 0.5), the estimate will never converge to the true value, regardless of the number of samples used.

Q8. Which of the following statement(s) about parameter estimation techniques is/are true?

- A. To obtain a distribution over the predicted values for a new data point, we need to compute an integral over the parameter space.
- B. The MAP estimate of the parameter gives a point prediction for a new data point.
- C. The MLE of a parameter gives a distribution of predicted values for a new data point.
- D. We need a point estimate of the parameter to compute a distribution of the predicted values for a new data point.

Answer: A, B


More Nptel Courses: https://progiez.com/nptel-assignment-answers

Session: JULY-DEC 2023

Course Name: Introduction to Machine Learning

Q1. The perceptron learning algorithm is primarily designed for:

- Regression tasks
- Unsupervised learning
- Clustering tasks
- Linearly separable classification tasks
- Non-linear classification tasks

Answer: Linearly separable classification tasks

Q2. The last layer of ANN is linear for ________ and softmax for ________.

- Regression, Regression
- Classification, Classification
- Regression, Classification
- Classification, Regression

Answer: Regression, Classification

Q3. Consider the following statement and answer True/False with corresponding reason: The class outputs of a classification problem with an ANN cannot be treated independently.

- True. Due to cross-entropy loss function
- True. Due to softmax activation
- False. This is the case for regression with single output
- False. This is the case for regression with multiple outputs

Answer: True. Due to softmax activation
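The coupling introduced by softmax can be seen in a few lines: the outputs always sum to 1, so raising one logit lowers every other class probability. This is a minimal sketch with made-up logits.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


before = softmax([1.0, 2.0, 3.0])
after = softmax([1.0, 2.0, 5.0])   # only the third logit changed

print(before, sum(before))
print(after, sum(after))           # the first two probabilities shrink as well
```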

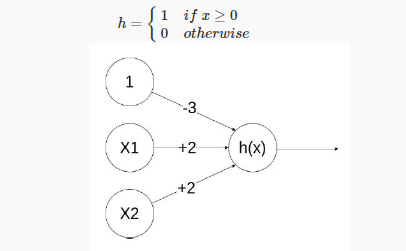

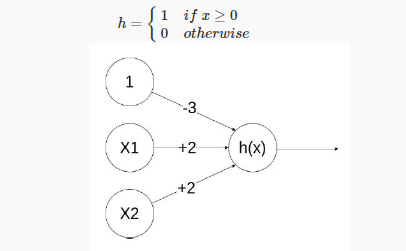

Q4. Given below is a simple ANN with 2 inputs $X_1, X_2 \in \{0, 1\}$ and edge weights $-3, +2, +2$, with activation $h(x) = 1$ if $x \ge 0$ and $0$ otherwise. Which of the following logical functions does it compute?

- XOR
- NOR
- NAND
- AND

Answer: AND
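The truth table can be enumerated directly. The sketch below assumes that −3 is the weight on the bias input and that +2 is the weight on each of X1 and X2; the figure is not reproduced here, so that assignment of weights to edges is an assumption.

```python
def threshold_unit(x1, x2, bias=-3, w1=2, w2=2):
    """Single unit with h(z) = 1 if z >= 0 else 0 (weight layout is assumed)."""
    z = bias + w1 * x1 + w2 * x2
    return 1 if z >= 0 else 0


truth_table = {(a, b): threshold_unit(a, b) for a in (0, 1) for b in (0, 1)}
for inputs, output in truth_table.items():
    print(inputs, output)   # only (1, 1) produces 1
```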

Q5. Using the notations used in class, evaluate the value of the neural network with a 3-3-1 architecture (2-dimensional input with 1 node for the bias term in both the layers). The parameters are as follows: α = [1, 1, 0.4, 0.6, 0.3, 0.5], β = [0.4, 0.6, 0.9]. Using sigmoid function as the activation functions at both the layers, the output of the network for an input of (0.8, 0.7) will be (up to 4 decimal places)

- 0.7275
- 0.0217
- 0.2958
- 0.8213
- 0.7291
- 0.8414
- 0.1760
- 0.7552
- 0.9442
- None of these

Answer: 0.8414
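The forward pass can be sketched as follows. The matrix layout of α was lost in the question text, so this assumes α is a 3×2 matrix whose rows correspond to the bias, x1 and x2 and whose columns correspond to the two hidden units, and that β holds the bias weight followed by the two hidden-unit weights. That layout is an assumption; it is used here because it reproduces the listed answer.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(alpha, beta, x1, x2):
    """Forward pass of a 3-3-1 network with a bias node in both layers."""
    inputs = [1.0, x1, x2]                       # bias node first
    n_hidden = len(alpha[0])
    hidden = [
        sigmoid(sum(inputs[i] * alpha[i][j] for i in range(len(inputs))))
        for j in range(n_hidden)
    ]
    hidden_with_bias = [1.0] + hidden            # bias node for the output layer
    return sigmoid(sum(h * b for h, b in zip(hidden_with_bias, beta)))


alpha = [[1.0, 1.0],   # assumed layout: row = input (bias, x1, x2)
         [0.4, 0.6],   # column = hidden unit
         [0.3, 0.5]]
beta = [0.4, 0.6, 0.9]

print(round(forward(alpha, beta, 0.8, 0.7), 4))  # 0.8414
```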

Q6. If the step size in gradient descent is too large, what can happen?

- Overfitting
- The model will not converge
- We can reach maxima instead of minima
- None of the above

Answer: The model will not converge
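A toy example makes the effect visible. The sketch below runs gradient descent on $f(w) = w^2$ (gradient $2w$), so each step multiplies $w$ by $(1 - 2 \cdot \text{lr})$; the function and the two learning rates are illustrative choices.

```python
def gradient_descent(lr, w0=1.0, steps=50):
    """Minimise f(w) = w**2 starting from w0 with a fixed step size."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w
        w -= lr * grad
    return w


small_step = gradient_descent(lr=0.1)   # factor 0.8 per step: shrinks toward 0
large_step = gradient_descent(lr=1.1)   # factor -1.2 per step: oscillates and grows

print(small_step)
print(large_step)
```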

Q7. On different initializations of your neural network, you get significantly different values of loss. What could be the reason for this?

- Overfitting
- Some problem in the architecture
- Incorrect activation function
- Multiple local minima

Answer: Multiple local minima

Q8. The likelihood L(θ|X) is given by:

- P(θ|X)
- P(X|θ)
- P(X).P(θ)
- P(θ)/P(X)

Answer: P(X|θ)

Q9. Why is proper initialization of neural network weights important?

- To ensure faster convergence during training
- To prevent overfitting
- To increase the model’s capacity
- Initialization doesn’t significantly affect network performance
- To minimize the number of layers in the network

Answer: To ensure faster convergence during training

Q10. Which of these are limitations of the backpropagation algorithm?

- It requires error function to be differentiable
- It requires activation function to be differentiable
- The ith layer cannot be updated before the update of layer i+1 is complete
- All of the above
- (a) and (b) only
- None of these

Answer: All of the above


Session: JAN-APR 2023

Q1. You are given the N samples of input (x) and output (y) as shown in the figure below. What will be the most appropriate model y=f(x)?

a. $y = w \cdot x$ with $w > 0$ b. $y = w \cdot x$ with $w < 0$ c. $y = x^w$ with $w > 0$ d. $y = x^w$ with $w < 0$

Answer: c. $y = x^w$ with $w > 0$

Q2. For training a binary classification model with five independent variables, you choose to use neural networks. You apply one hidden layer with three neurons. What are the number of parameters to be estimated? (Consider the bias term as a parameter) a. 16 b. 21 c. $3^4 = 81$ d. $4^3 = 64$ e. 12 f. 22 g. 25 h. 26 i. 4 j. None of these

Answer: f. 22

Q3. Suppose the marks obtained by randomly sampled students follow a normal distribution with unknown μ. A random sample of 5 marks are 25, 55, 64, 7 and 99. Using the given samples find the maximum likelihood estimate for the mean. a. 54.2 b. 67.75 c. 50 d. Information not sufficient for estimation

Answer: c. 50

Q4. You are given the following neural networks which take two binary valued inputs x1,x2∈{0,1} and the activation function is the threshold function(h(x)=1 if x>0; 0 otherwise). Which of the following logical functions does it compute?

a. OR b. AND c. NAND d. None of the above.

Answer: a. OR

Q5. Using the notations used in class, evaluate the value of the neural network with a 3-3-1 architecture (2-dimensional input with 1 node for the bias term in both the layers). The parameters are as follows: α = [1, −1, 0.2, 0.8, 0.4, 0.5], β = [0.8, 0.4, 0.5]. Using sigmoid function as the activation functions at both the layers, the output of the network for an input of (0.8, 0.7) will be a. 0.6710 b. 0.9617 c. 0.6948 d. 0.7052 e. 0.2023 f. 0.7977 g. 0.2446 h. None of these

Answer: f. 0.7977

Q6. Which of the following statements are true: a. The chances of overfitting decreases with increasing the number of hidden nodes and increasing the number of hidden layers. b. A neural network with one hidden layer can represent any Boolean function given sufficient number of hidden units and appropriate activation functions. c. Two hidden layer neural networks can represent any continuous functions (within a tolerance) as long as the number of hidden units is sufficient and appropriate activation functions used.

Answer: b, c

Q7. We have a function which takes a two-dimensional input $x = (x_1, x_2)$ and has two parameters $w = (w_1, w_2)$ given by $f(x, w) = \sigma(\sigma(x_1 w_1) w_2 + x_2)$ where $\sigma(x) = \frac{1}{1 + e^{-x}}$. We use backpropagation to estimate the right parameter values. We start by setting both the parameters to 1. Assume that we are given a training point $x_2 = 1, x_1 = 0, y = 5$. Given this information answer the next two questions. What is the value of $\frac{\partial f}{\partial w_2}$? a. 0.150 b. -0.25 c. 0.125 d. 0.098 e. 0.0746 f. 0.1604 g. None of these

Answer: e. 0.0746

Q8. If the learning rate is 0.5, what will be the value of w2 after one update using backpropagation algorithm? a. 0.4197 b. -0.4197 c. 0.6881 d. -0.6881 e. 1.3119 f. -1.3119 g. 0.5625 h. -0.5625 i. None of these

Answer: e. 1.3119
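Both answers for this function can be reproduced with the chain rule. The questions do not state the loss, so the sketch below assumes a squared-error loss $(y - f)^2$ without a factor of 1/2; that assumption is the one that matches the listed update of 1.3119.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


x1, x2, y = 0.0, 1.0, 5.0     # training point from the question
w1, w2 = 1.0, 1.0             # both parameters start at 1
learning_rate = 0.5

h = sigmoid(x1 * w1)          # inner sigmoid, equals 0.5 here
f = sigmoid(h * w2 + x2)      # network output

# Chain rule: df/dw2 = sigma'(h*w2 + x2) * h, with sigma' = f * (1 - f)
df_dw2 = f * (1.0 - f) * h

# Assumed loss L = (y - f)**2, so dL/dw2 = -2 * (y - f) * df/dw2
dloss_dw2 = -2.0 * (y - f) * df_dw2
w2_new = w2 - learning_rate * dloss_dw2

print(round(df_dw2, 4), round(w2_new, 4))  # 0.0746 1.3119
```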

Q9. Which of the following are true when comparing ANNs and SVMs? a. ANN error surface has multiple local minima while SVM error surface has only one minima b. After training, an ANN might land on a different minimum each time, when initialized with random weights during each run. c. As shown for Perceptron, there are some classes of functions that cannot be learnt by an ANN. An SVM can learn a hyperplane for any kind of distribution. d. In training, ANN’s error surface is navigated using a gradient descent technique while SVM’s error surface is navigated using convex optimization solvers.

Answer: a, b, d

Q10. Which of the following are correct? a. A perceptron will learn the underlying linearly separable boundary with finite number of training steps. b. XOR function can be modelled by a single perceptron. c. Backpropagation algorithm used while estimating parameters of neural networks actually uses gradient descent algorithm. d. The backpropagation algorithm will always converge to global optimum, which is one of the reasons for impressive performance of neural networks.

Answer: a, c
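Statements (a) and (b) can be illustrated with a small perceptron trainer: it reaches zero mistakes on a linearly separable function such as AND, but never does so on XOR. This is a minimal sketch; the function name, the epoch limit and the learning rate are illustrative choices.

```python
def train_perceptron(data, epochs=100, lr=1.0):
    """Perceptron learning rule; returns (weights, converged)."""
    w = [0.0, 0.0, 0.0]                      # bias weight, then weights for x1 and x2
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), target in data:
            pred = 1 if w[0] + w[1] * x1 + w[2] * x2 > 0 else 0
            error = target - pred
            if error != 0:
                mistakes += 1
                w[0] += lr * error
                w[1] += lr * error * x1
                w[2] += lr * error * x2
        if mistakes == 0:                    # a full pass with no updates
            return w, True
    return w, False


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_converged = train_perceptron(AND)
xor_weights, xor_converged = train_perceptron(XOR)
print(and_converged, xor_converged)
```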


More Nptel courses: https://progiez.com/nptel

Session: JUL-DEC 2022

Course Name: INTRODUCTION TO MACHINE LEARNING


Q1. If the step size in gradient descent is too large, what can happen? a. Overfitting b. The model will not converge c. We can reach maxima instead of minima d. None of the above

Answer: b. The model will not converge

Q2. Recall the XOR(tabulated below) example from class where we did a transformation of features to make it linearly separable. Which of the following transformations can also work?

a. $X'_1 = X_1^2,\ X'_2 = X_2^2$ b. $X'_1 = 1 + X_1,\ X'_2 = 1 - X_2$ c. $X'_1 = X_1 X_2,\ X'_2 = -X_1 X_2$ d. $X'_1 = (X_1 - X_2)^2,\ X'_2 = (X_1 + X_2)^2$

Answer: c. $X'_1 = X_1 X_2,\ X'_2 = -X_1 X_2$

Q3. What is the effect of using activation function $f(x) = x$ for hidden layers in an ANN? a. No effect. It’s as good as any other activation function (sigmoid, tanh etc). b. The ANN is equivalent to doing multi-output linear regression. c. Backpropagation will not work. d. We can model highly complex non-linear functions.

Answer: b. The ANN is equivalent to doing multi-output linear regression.
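The reason is that stacking linear layers only multiplies their weight matrices together. A minimal sketch, with made-up weights and helper names, showing that a two-layer network with identity activation gives the same output as a single linear map:

```python
def matvec(M, v):
    """Matrix-vector product for lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def matmul(A, B):
    """Matrix-matrix product for lists of lists."""
    return [
        [sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
        for i in range(len(A))
    ]


W_A = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]   # hidden layer: 3 units, 2 inputs
W_B = [[1.0, -1.0, 0.5], [2.0, 0.0, 1.0]]     # output layer: 2 outputs, 3 hidden units
x = [0.3, -0.7]

two_layer = matvec(W_B, matvec(W_A, x))       # identity activation in the hidden layer
single_layer = matvec(matmul(W_B, W_A), x)    # one linear map with weights W_B @ W_A

print(two_layer)
print(single_layer)
```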

Q4. Which of the following functions can be used on the last layer of an ANN for classification? a. Softmax b. Sigmoid c. Tanh d. Linear

Q5. Statement: Threshold function cannot be used as activation function for hidden layers. Reason: Threshold functions do not introduce non-linearity. a. Statement is true and reason is false. b. Statement is false and reason is true. c. Both are true and the reason explains the statement. d. Both are true and the reason does not explain the statement.

Answer: a. Statement is true and reason is false.

Q6. We use several techniques to ensure the weights of the neural network are small (such as random initialization around 0 or regularisation). What conclusions can we draw if weights of our ANN are high? a. Model has overfitted. b. It was initialized incorrectly. c. At least one of (a) or (b). d. None of the above.

Answer: c. At least one of (a) or (b).

Q7. On different initializations of your neural network, you get significantly different values of loss. What could be the reason for this? a. Overfitting b. Some problem in the architecture c. Incorrect activation function d. Multiple local minima

Answer: d. Multiple local minima

Q8. The likelihood L(θ|X) is given by: a. P(θ|X) b. P(X|θ) c. P(X).P(θ) d. P(θ)/P(X)

Answer: b. P(X|θ)

Q9. You are trying to estimate the probability of it raining today using maximum likelihood estimation. Given that in $n$ days, it rained $n_r$ times, what is the probability of it raining today? a. $\frac{n_r}{n}$ b. $\frac{n_r}{n_r + n}$ c. $\frac{n}{n_r + n}$ d. None of the above.

Answer: a. $\frac{n_r}{n}$

Q10. Choose the correct statement (multiple may be correct): a. MLE is a special case of MAP when prior is a uniform distribution. b. MLE acts as regularisation for MAP. c. MLE is a special case of MAP when prior is a beta distribution . d. MAP acts as regularisation for MLE.

Answer: a, d
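For a Bernoulli parameter this relationship is easy to see numerically. With a Beta(a, b) prior, the MAP estimate is the mode of the Beta posterior, $(n_r + a - 1)/(n + a + b - 2)$; a uniform Beta(1, 1) prior gives back the MLE $n_r/n$, while a stronger prior pulls the estimate toward the prior mode, much like a regulariser. The counts and prior parameters below are made-up illustration values.

```python
def mle(n_success, n):
    """Maximum likelihood estimate of a Bernoulli parameter."""
    return n_success / n


def map_beta(n_success, n, a, b):
    """MAP estimate under a Beta(a, b) prior (mode of the Beta posterior).

    Valid when a + n_success > 1 and b + n - n_success > 1.
    """
    return (n_success + a - 1) / (n + a + b - 2)


n_r, n = 3, 10
print(mle(n_r, n))                 # plain MLE
print(map_beta(n_r, n, 1, 1))      # uniform prior: identical to the MLE
print(map_beta(n_r, n, 5, 5))      # prior centred on 0.5 pulls the estimate upward
```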

More NPTEL Solutions: https://progiez.com/nptel

NPTEL Introduction To Machine Learning Week 5 Assignment Answer 2023

NPTEL Introduction To Machine Learning Week 5 Assignment Solutions

1. The perceptron learning algorithm is primarily designed for:

- Regression tasks

- Unsupervised learning

- Clustering tasks

- Linearly separable classification tasks

- Non-linear classification tasks

2. The last layer of ANN is linear for ________ and softmax for ________.

- Regression, Regression

- Classification, Classification

- Regression, Classification

- Classification, Regression

3. Consider the following statement and answer True/False with corresponding reason: The class outputs of a classification problem with an ANN cannot be treated independently.

- True. Due to cross-entropy loss function

- True. Due to softmax activation

- False. This is the case for regression with single output

- False. This is the case for regression with multiple outputs

4. Given below is a simple ANN with 2 inputs X1,X2∈{0,1} and edge weights −3,+2,+2

Which of the following logical functions does it compute?

5. Using the notations used in class, evaluate the value of the neural network with a 3-3-1 architecture (2-dimensional input with 1 node for the bias term in both the layers). The parameters are as follows

Using sigmoid function as the activation functions at both the layers, the output of the network for an input of (0.8, 0.7) will be (up to 4 decimal places)

- None of these

6. If the step size in gradient descent is too large, what can happen?

- Overfitting

- The model will not converge

- We can reach maxima instead of minima

- None of the above

7. On different initializations of your neural network, you get significantly different values of loss. What could be the reason for this?

- Some problem in the architecture

- Incorrect activation function

- Multiple local minima

8. The likelihood L(θ|X) is given by:

- P(X).P(θ)

9. Why is proper initialization of neural network weights important?

- To ensure faster convergence during training

- To prevent overfitting

- To increase the model’s capacity

- Initialization doesn’t significantly affect network performance

- To minimize the number of layers in the network

10. Which of these are limitations of the backpropagation algorithm?

- It requires error function to be differentiable

- It requires activation function to be differentiable

- The ith layer cannot be updated before the update of layer i+1 is complete

- All of the above

- (a) and (b) only


Personal Solutions to Programming Assignments on Matlab

koushal95/Coursera-Machine-Learning-Assignments-Personal-Solutions


Exercises are done on Matlab R2017a

This repository consists of my personal solutions to the programming assignments of Andrew Ng's Machine Learning course on Coursera.

Course Schedule

Introduction

Linear Regression with One Variable

Linear Algebra Review

Linear Regression with Multiple Variables

Octave / Matlab Tutorial

Programming Exercise 1

Logistic Regression

Regularization

Programming Exercise 2

Neural Network Representation

Programming Exercise 3

Neural Networks: Learning

Programming Exercise 4

Advice for Applying Machine Learning

Programming Exercise 5

Machine Learning System Design

Support Vector Machines

Programming Exercise 6

Unsupervised Learning

Dimensionality Reduction

Programming Exercise 7

Anomaly Detection

Recommender Systems

Programming Exercise 8

Large Scale Machine Learning

Application Example: Photo OCR

- MATLAB 100.0%

For Earth Day, Try These Green Classroom Activities (Downloadable)


Earth Day is April 22 in the United States and the day the spring equinox occurs in some parts of the world. It’s a day to reflect on the work being done to raise awareness of climate change and the need to protect natural resources for future generations. Protecting the earth can feel like an enormous, distant undertaking to young people. To help them understand that they can play a role by focusing on their backyards or school yards, educators can scale those feelings of enormity to manageable activities that make a difference.

We collected simple ideas for teachers and students to educate, empower, and build a connection with nature so that they may be inspired to respect it and protect it. Classrooms can be the perfect greenhouse to grow future stewards of the environment.




Coursera: Machine Learning (Week 5) [Assignment Solution] - Andrew NG. Back-propagation algorithm for neural networks to the task of hand-written digit recognition. I have recently completed the Machine Learning course from Coursera by Andrew NG. While doing the course we have to go through various quiz and assignments.

The entire code for week 5 Neural network has been uploaded here. If you're a beginner this is the way to go for you. I have tried to solve every question in multiple ways possible and have left a link on how each respective logic was built. Feel free to download and have fun. - GitHub - suhasraju/Coursera-Machine-learning-Week-5-Programming-assignment: The entire code for week 5 Neural ...

Welcome to our NPTEL course on "Machine Learning, ML." In this video, we delve into the key takeaways and answers from Week 5 of the course.Join us on this j...

Practice quiz : Advice for Applying Machine Learning; Practice quiz : Bias and Variance; Practice quiz : Machine Learning Development Process; Programming Assignment. Advice for Applied Machine Learning; Week 4. Practice quiz : Decision Trees; Practice quiz : Decision Trees Learning; Practice quiz : Decision Trees Ensembles; Programming ...

#machinelearning #nptel #swayam #python #ml All week Assignment SolutionIntroduction To Machine Learning - https://www.youtube.com/playlist?list=PL__28a0xF...

by Akshay Daga (APDaga) - April 25, 2021. 4. The complete week-wise solutions for all the assignments and quizzes for the course "Coursera: Machine Learning by Andrew NG" is given below: Recommended Machine Learning Courses: Coursera: Machine Learning. Coursera: Deep Learning Specialization.

Machine Learning (Stanford University) Week 5 assignments - Abhiroyq1/Machine-Learning-Week-5-solutions

Updated Week 5 Intro To ML (IIT Madras): 4. e, 6. d, 7. g. Note: These answers might not be 100% right, please don't copy-paste blindly 🙏 NPTEL Introduction to machine ...

View week 5 assignment solutions.pdf from CS 6-034 at Massachusetts Institute of Technology. Introduction to Machine Learning Assignment‐ Week 5 TYPE OF QUESTION: MCQ Number of questions: 10

The complete week-wise solutions for Coursera Machine Learning All Weeks Solutions assignments and quizzes taught by Andrew Ng. After completing this course you will get a broad idea of Machine learning algorithms.


This repository contains the exercises, labs, and homework assignments for the Introduction to Machine Learning online class taught by Professor Leslie Pack Kaelbling, Professor Tomás Lozano-Pérez, Professor Isaac L. Chuang and Professor Duane S. Boning from the Massachusetts Institute of Technology - denikn/Machine-Learning-MIT-Assignment

For answers or latest updates, join our Telegram channel. These are Introduction to Machine Learning Week 5 Assignment 5 Answers. Q3. Consider a two-layered neural network $y = \sigma(W^{(B)} \sigma(W^{(A)} x))$. Let $h = \sigma(W^{(A)} x)$ denote the hidden layer representation. $W^{(A)}$ and $W^{(B)}$ are arbitrary weights.
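The two-layer computation described in Q3 can be illustrated with a small forward pass. This is a sketch only: the weight values and the helper names (`matvec`, `forward`) are made up for illustration, and the matrices are plain nested lists rather than anything prescribed by the course.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def forward(W_A, W_B, x):
    """Return (h, y) where h = sigmoid(W_A x) and y = sigmoid(W_B h)."""
    h = [sigmoid(z) for z in matvec(W_A, x)]
    y = [sigmoid(z) for z in matvec(W_B, h)]
    return h, y


# Hypothetical weights: 2 inputs -> 2 hidden units -> 1 output.
W_A = [[0.5, -0.5], [1.0, 1.0]]
W_B = [[1.0, -1.0]]
h, y = forward(W_A, W_B, [1.0, 2.0])
print(h, y)
```

Because the hidden representation h passes through the sigmoid, each of its entries lies strictly between 0 and 1, whatever values the weights take.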

#machinelearning #nptel #solutions Introduction to Machine Learning. In this video, we're going to unlock the answers to the Introduction to Machine Learning qu...

15. What is the sigmoid function's role in logistic regression? a. The sigmoid function transforms the input features to a higher-dimensional space. b. The sigmoid function calculates the dot product of input features and weights. c. The sigmoid function defines the learning rate for gradient descent. d.
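As general background on this question, the sketch below shows where the sigmoid usually sits in a logistic regression prediction: the dot product of features and weights is computed first, and the sigmoid then squashes that score into the interval (0, 1). The function name `predict_proba` and the example weights are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(weights, bias, features):
    """Linear score (dot product plus bias) passed through the sigmoid."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(score)


# Hypothetical model with two features.
print(predict_proba([2.0, -1.0], 0.5, [1.0, 3.0]))
```

A score of exactly 0 maps to 0.5, larger scores move the output toward 1, and smaller scores move it toward 0.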

In this course we intend to introduce some of the basic concepts of machine learning from a mathematically well motivated perspective. We will cover the different learning paradigms and some of the more popular algorithms and architectures used in each of these paradigms. ... Assignment 4 data Solution 4; Week 5 - Artificial Neural Networks ...

Programming Assignment Unit 5 Bachelor of Science - Computer Science - University of the People CS 4407: Data Mining and Machine Learning Instructor: Alejandro Lara March 6, 2024. Part 1: Print decision tree a. Setting the working directory, loading the required packages (rpart and mlbench) and then loading the Ionosphere dataset. b.

Andrew Ng Machine Learning Week 5 Assignment: Neural Network Learning. GitHub: hangim/machine-learning-ex4

5. Using the notation used in class, evaluate the value of the neural network with a 3-3-1 architecture (2-dimensional input with 1 node for the bias term in both layers). The parameters are as follows. Using the sigmoid function as the activation function at both layers, the output of the network for an ...
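The question's parameters are not reproduced in this excerpt, so the sketch below only shows the shape of the calculation for a 3-3-1 network with a bias node in the input and hidden layers. The weight values are placeholders, and placing the bias weight first in each row is an assumption, not the course's stated convention.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward_3_3_1(W1, W2, x):
    """W1: two rows of three weights (bias weight first, assumed).
    W2: one row of three weights (bias weight first, assumed).
    x: the 2-dimensional input."""
    a1 = [1.0] + list(x)  # input layer: bias node + 2 inputs
    hidden = [sigmoid(sum(w * a for w, a in zip(row, a1))) for row in W1]
    a2 = [1.0] + hidden  # hidden layer: bias node + 2 hidden units
    return sigmoid(sum(w * a for w, a in zip(W2, a2)))


# Placeholder parameters, not the ones from the assignment.
W1 = [[0.1, 0.2, -0.3], [-0.4, 0.5, 0.6]]
W2 = [0.7, -0.8, 0.9]
print(forward_3_3_1(W1, W2, [1.0, 2.0]))
```

Substituting the actual parameters and input from the assignment into `W1`, `W2` and `x` would give the value the question asks for, provided the bias ordering matches.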

Introduction to Machine Learning Week 5 Assignment Answers || Jan 2024 || NPTEL. 1. Join Telegram channel: https://t.me/doubttown 🚀 Welcome to Doubt Town, yo...

Coursera-Machine-Learning-Assignments-Personal-Solutions: Exercises are done in Matlab R2017a. This repository contains my personal solutions to the programming assignments of Andrew Ng's Machine Learning course on Coursera.
