Research Zeroes In on a Barrier to Reading (Plus, Tips for Teachers)

How much background knowledge is needed to understand a piece of text? New research appears to discover the tipping point.

By now, you’ve probably heard of the baseball experiment. It’s several decades old but has experienced a resurgence in popularity since Natalie Wexler highlighted it in her best-selling new book, The Knowledge Gap.

In the 1980s, researchers Donna Recht and Lauren Leslie asked middle school students to read a passage describing a baseball game, then reenact it with wooden figures on a miniature baseball field. They were surprised by the results: Even the best readers struggled to re-create the events described in the passage.

“Prior knowledge creates a scaffolding for information in memory,” they explained after seeing the results. “Students with high reading ability but low knowledge of baseball were no more capable of recall or summarization than were students with low reading ability and low knowledge of baseball.”

That modest experiment kicked off 30 years of research into reading comprehension, and study after study confirmed Recht and Leslie’s findings: Without background knowledge, even skilled readers labor to make sense of a topic. But those studies left a lot of questions unanswered: How much background knowledge is needed for better comprehension? Is there a way to quantify and measure prior knowledge?

A 2019 study published in Psychological Science is finally shedding light on those mysteries. The researchers discovered a “knowledge threshold” when it comes to reading comprehension: If students were familiar with fewer than 59 percent of the terms in a topic, their ability to understand the text was “compromised.”

In the study, 3,534 high school students were presented with a list of 44 terms and asked to identify whether each was related to the topic of ecology. Researchers then analyzed the student responses to generate a background-knowledge score, which represented their familiarity with the topic.

Without any interventions, students then read about ecosystems and took a test measuring how well they understood what they had read.

Students who scored less than 59 percent on the background-knowledge test also performed relatively poorly on the subsequent test of reading comprehension. But researchers noted a steep improvement in comprehension above the 59 percent threshold—suggesting both that a lack of background knowledge can be an obstacle to reading comprehension, and that there is a baseline of knowledge that rapidly accelerates comprehension.
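
The scoring logic described above can be sketched in a few lines of Python. This is only an illustration, not the researchers' actual scoring procedure: the function names, the sample answers, and the simple percent-correct scoring are assumptions. Only the 44-term list and the 59 percent threshold come from the study as reported here.

```python
THRESHOLD = 0.59  # knowledge threshold reported in the article


def background_knowledge_score(responses, answer_key):
    """Return the fraction of terms a student classified correctly.

    Both arguments are lists of booleans, where True means
    "this term is related to the topic". Percent-correct scoring is
    an assumption made for illustration.
    """
    if len(responses) != len(answer_key):
        raise ValueError("responses and answer_key must have the same length")
    correct = sum(r == k for r, k in zip(responses, answer_key))
    return correct / len(answer_key)


def below_threshold(score, threshold=THRESHOLD):
    """Flag a student whose score falls under the knowledge threshold."""
    return score < threshold


# Hypothetical answer key: 44 terms, the first 30 related to ecology.
answer_key = [True] * 30 + [False] * 14
# Hypothetical student: first 25 terms classified correctly, the rest wrongly.
student = answer_key[:25] + [not k for k in answer_key[25:]]

score = background_knowledge_score(student, answer_key)
print(round(score, 3), below_threshold(score))
```

A teacher could use the same idea informally: a short list of topic terms, a quick tally of how many each student recognizes, and extra background work for anyone who falls well short.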

Why does background knowledge matter? Reading is more than just knowing the words on the page, the researchers point out. It’s also about making inferences about what’s left off the page—and the more background knowledge a reader has, the better able he or she is to make those inferences.

“Collectively, these results may help identify who is likely to have a problem comprehending information on a specific topic and, to some extent, what knowledge is likely required to comprehend information on that topic,” conclude Tenaha O'Reilly, the lead author of the study, and his colleagues.

5 Ways Teachers Can Build Background Knowledge

Spending a few minutes making sure that students meet the knowledge threshold for a topic can yield outsized results. Here’s what teachers can do:

- Mind the gap. You may be an expert in Civil War history, but be mindful that your students will represent a wide range of existing background knowledge on the topic. Similarly, take note of the cultural, social, economic, and racial diversity in your classroom. You may think it’s cool to teach physics using a trebuchet, but not all students have been exposed to the same ideas that you have.

- Identify common terms in the topic. Ask yourself, “What are the main ideas in this topic? Can I connect what we’re learning to other big ideas for students?” If students are learning about earthquakes, for example, take a step back and look at what else they should know about, such as the supercontinent Pangaea or what tectonic plates are. Understanding these concepts can anchor more complex ideas like P and S waves. And don’t forget to go over some broad-stroke ideas, such as history’s biggest earthquakes, so that students are more familiar with the topic.

- Incorporate low-stakes quizzes. Before starting a lesson, use formative assessment strategies such as entry slips or participation cards to quickly identify gaps in knowledge.

- Build concept maps. Consider leading students in the creation of visual models that map out a topic’s big ideas—and connect related ideas that can provide greater context and address knowledge gaps. Visual models provide another way for students to process and encode information, before they dive into reading.

- Sequence and scaffold lessons. When introducing a new topic, try to connect it to previous lessons: Reactivating knowledge the students already possess will serve as a strong foundation for new lessons. Also, consider your sequencing carefully before you start the year to take maximum advantage of this effect.

BRIEF RESEARCH REPORT article

The use of new technologies for improving reading comprehension.

- 1 Department of General Psychology, University of Padova, Padua, Italy

- 2 Azienda Sociosanitaria Ligure 5 Spezzino, La Spezia, Italy

Since the introduction of writing systems, reading comprehension has been a foundation for achievement in several areas of the educational system, as well as a prerequisite for successful participation in most areas of adult life. The increased availability of technologies and web-based resources can provide valuable support, in both educational and clinical settings, for devising training activities that can also be carried out remotely. Studies in the current literature have examined the efficacy of internet-based reading comprehension programs for children with reading comprehension difficulties, but almost none has considered distance rehabilitation programs. The present paper reports data concerning Cloze, a distance program developed in Italy for improving language and reading comprehension. Twenty-eight children from 3rd to 6th grade with comprehension difficulties were involved. These children used the distance program for 15–20 min at least three times a week for about 4 months. The program was presented separately to each child, with a degree of difficulty adapted to his/her characteristics. Text reading comprehension (assessed separately for narrative and informative texts) increased after the intervention. These findings have clinical and educational implications, as they suggest that it is possible to promote reading comprehension with an individualized distance program, sparing the child the travel needed to reach a rehabilitation center.

Introduction

Reading comprehension is a fundamental cognitive ability for children that supports school achievement and, subsequently, participation in most areas of adult life (Hulme and Snowling, 2011). Therefore, children with learning disabilities (LD) and special educational needs who show difficulties in text comprehension, sometimes in association with other problems, may be at increased risk of failure in school and in life (Woolley, 2011). Reading comprehension is, indeed, a complex cognitive ability that involves not only linguistic skills (e.g., vocabulary, grammatical knowledge) but also cognitive skills (such as working memory; De Beni and Palladino, 2000), metacognitive skills (in terms of both knowledge and control; Channa et al., 2015) and, more specifically, higher-order comprehension skills such as the generation of inferences (Oakhill et al., 2003).

Recently, due to the diffusion of technology in many fields of daily life, text comprehension at school, at home during homework, and at work is based on an increasing number of digital reading devices (computers and laptops, e-books, and tablet devices) that can become a fundamental support to improve traditional reading comprehension and learning skills (e.g., inference generation).

Some authors have compared, in children with typical development, reading comprehension of texts presented on screen with that of printed texts (Kerr and Symons, 2006; Rideout et al., 2010; Mangen et al., 2013; Singer and Alexander, 2017; Delgado et al., 2018). Results were consistent and showed worse comprehension of on-screen texts than of printed texts for children (Mangen et al., 2013; Delgado et al., 2018) and for adolescents, who nonetheless preferred digital texts to printed ones (Singer and Alexander, 2017). Regarding children with learning problems, only a few studies have considered the differences between printed texts and digital devices (Chen, 2009; Gonzalez, 2014; Krieger, 2017). They found no significant differences, suggesting that compensatory digital tools could be a valid alternative to traditional written texts for children with a learning difficulty, facilitating their academic and work performance. This conclusion is also supported by a meta-analysis (Moran et al., 2008) on the use of digital tools and learning environments for enhancing literacy acquisition in middle school students, which indicates that technology can improve reading comprehension.

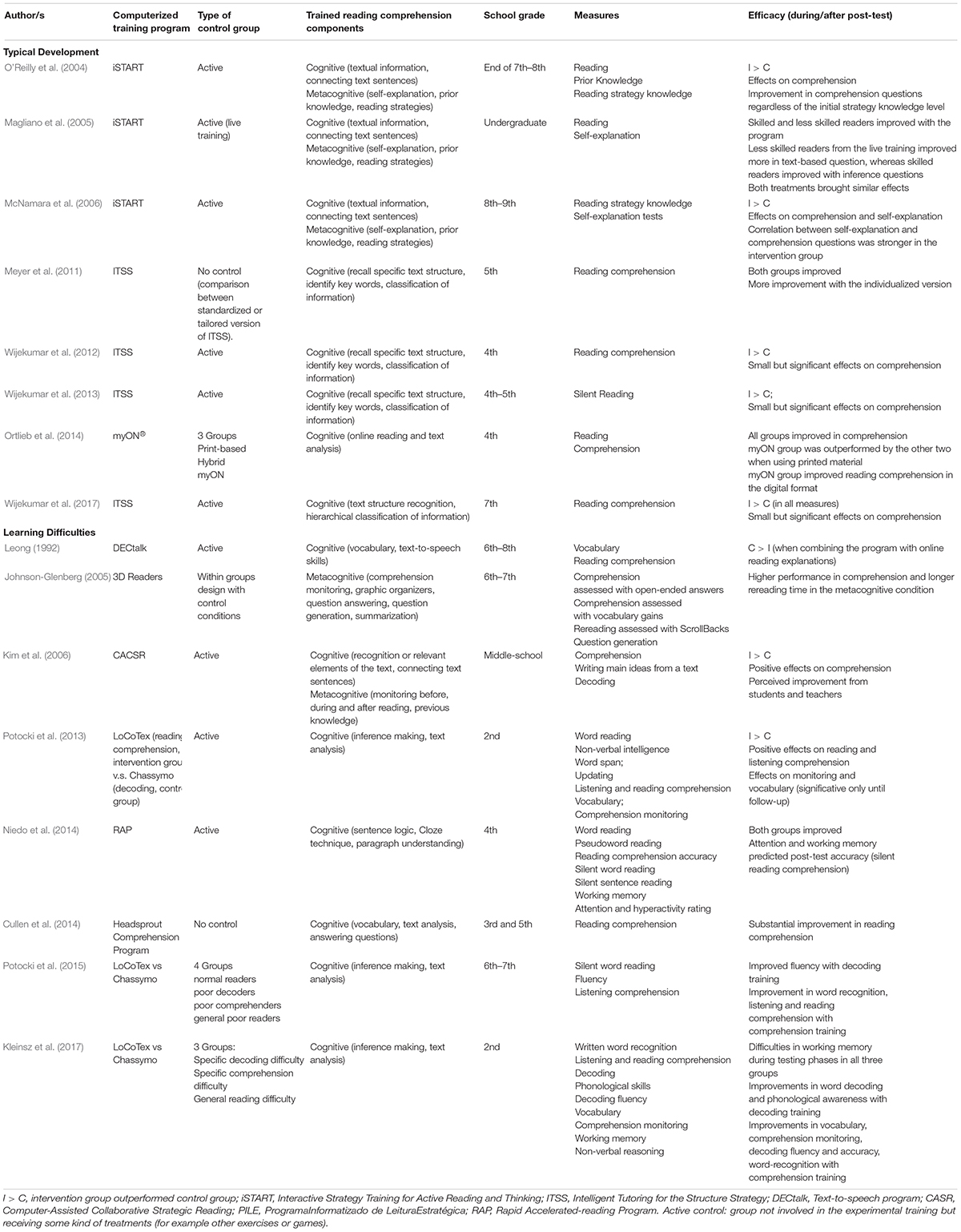

Different procedures and abilities are targeted in the international literature concerning computerized training programs for reading comprehension. In particular, various studies include activities promoting cognitive (e.g., vocabulary, inference making) and metacognitive (e.g., the use of strategies, comprehension monitoring, and identification of relevant parts in a text) components of reading comprehension. Table 1 lists the papers proposing computerized training programs, with a summary of their findings. Participants cover different ages and school grades, the majority being in middle school and high school. The general outcome of the studies is positive: comprehension skills improved significantly after the training programs, with effects that persisted at follow-up; indeed, the majority of participants in the training programs outperformed their peers assigned to comparison groups and maintained their improvements. Specifically, several studies (O’Reilly et al., 2004; Magliano et al., 2005; McNamara et al., 2006) used the iSTART program with adolescents and young adults. This program promotes self-explanation, the use of prior knowledge, and reading strategies to enhance understanding of descriptive scientific texts. Results showed that students who followed the iSTART program benefited more than their peers, improving self-explanation and summarization. Additionally, strategic knowledge was a relevant factor for the outcome in comprehension tasks including multiple-choice questions: students who already possessed good strategic knowledge improved their accuracy when answering bridging-inference questions, whereas students with low strategic knowledge became more accurate on text-based questions.

Another program, ITSS, was used with younger students (Meyer et al., 2011; Wijekumar et al., 2012, 2013, 2017), with the objective of supporting activities based on identifying main parts and key words in a text and classifying information in a hierarchical order. Positive outcomes were also found with this program: students who followed the ITSS program significantly improved their text comprehension compared with their peers in the control group.

Table 1. Synthesis of the main results of the computerized training programs on comprehension present in the literature.

Although most of the literature deals with typical development, students with learning difficulties have also been considered. For example, Potocki et al. (2013) (see also Potocki et al., 2015) examined the effects of two computerized programs with different aims: one focusing on comprehension features, such as inference making and the analysis of text structure, the other on decoding skills. Both training programs brought some benefits to reading comprehension; however, larger effects were found with the program focused on comprehension, with long-lasting effects on listening and reading comprehension (see also Kleinsz et al., 2017). Studies by Johnson-Glenberg (2005) and Kim et al. (2006), using the programs 3D Readers and CACSR, respectively, were able to promote reading comprehension abilities in middle school students through metacognitive activities. Thanks to these programs, students also became more aware of reading strategies and implemented them more successfully during text comprehension. In particular, a study by Niedo et al. (2014) obtained positive results on silent reading in a small group of children struggling with reading using the “cloze” procedure. This procedure proposes exercises in which parts of a text, typically words, are missing and participants are required to complete the text by guessing what is missing.

Thus, computerized programs generally seem to improve reading comprehension skills. However, it should be noted that, in most cases, students were trained at school, without the personalized support of a clinician taking into consideration the cognitive and psychological needs of the child. In particular, to our knowledge, no study has examined the effects of an internet-based distance reading comprehension program that allows the child to be trained at home in a personalized way. A useful aspect of internet-based distance training is that the psychologist can use the application (app) to monitor the child’s results and activities and to send him/her motivational messages, reducing the attrition seen in programs carried out at home under the sole supervision of parents. Literature on distance training is still scarce; however, some evidence suggests that these programs may usefully complement other types of intervention, usually carried out at school, in a rehabilitation center or at home (e.g., Mich et al., 2013).

Therefore, although the data are still preliminary, we think it is relevant to present them for a distance program developed in Italy named Cloze (Cornoldi and Bertolo, 2013), devised for rehabilitation purposes but with potential implications for educational contexts as well. Cloze has been developed to promote inferential abilities at both the sentence and the discourse level using the “cloze” procedure. Several findings in the literature show that abilities such as anticipating text parts and inference making bring improvements in text comprehension (e.g., Yuill and Oakhill, 1988). It has also been shown that one way to promote inferential competence is to improve the ability to predict parts of the text that are missing or that follow, based on the available information: the “cloze” technique appears to be one of the most successful ways of doing so (e.g., Greene, 2001).

In the current study, the effectiveness of this training program was tested on a clinical population who exhibited, for various reasons, difficulties in reading comprehension. Participants were 28 children (16 male and 12 female) attending a private practice for learning difficulties in the city of La Spezia, in the north-west of Italy. They were in 3rd to 6th grade (5 in 3rd, 9 in 4th, 11 in 5th and 3 in 6th grade), with a mean age of 9.79 years (SD = 1.03). Seventeen children had a current or past speech disorder: of these, 10 also had an LD and one was bilingual (speech problems were not due to bilingualism). The other 11 children had an LD or marked learning difficulties, and one of them also had Attention Deficit/Hyperactivity Disorder (ADHD). For the goals of the study, all these children were considered together, as they all presented a severe reading comprehension difficulty as reported by parents and teachers and confirmed by the initial assessment.

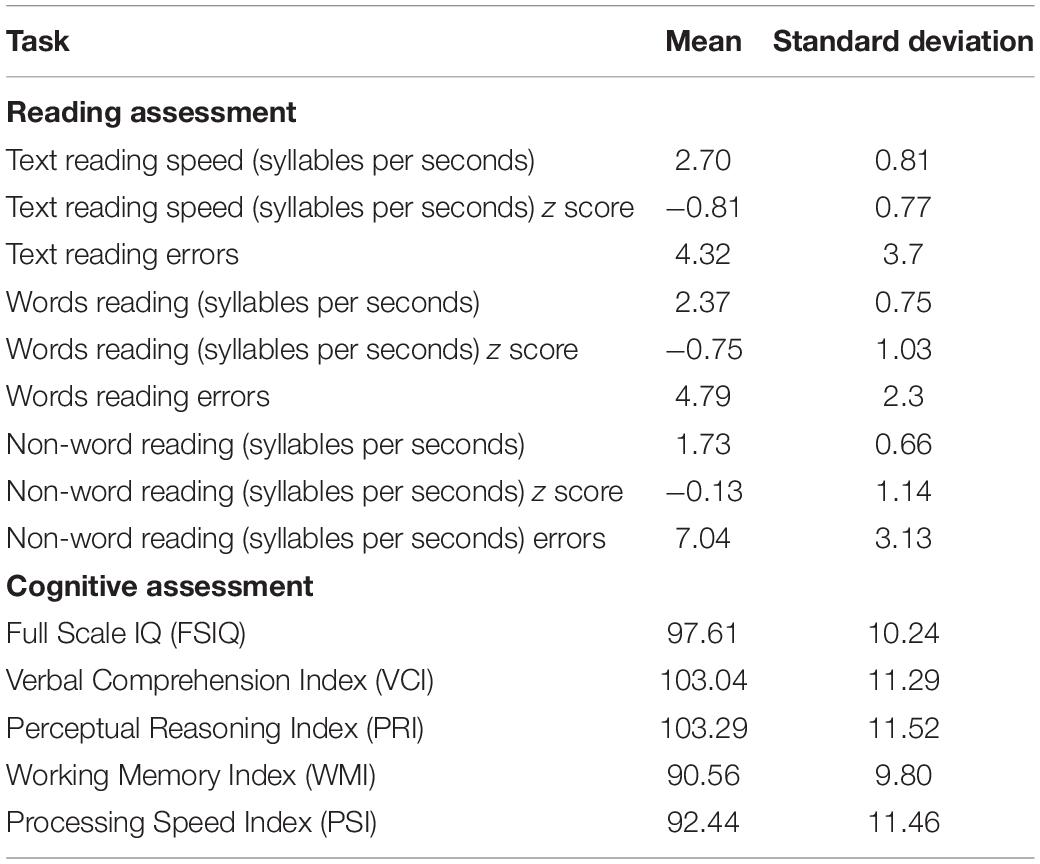

All children had received a comprehensive psychological assessment (see Table 2), adapted to their particular needs and ages. In particular, all children had an IQ > 80 as assessed with the Wechsler Intelligence Scale for Children-IV (WISC-IV; Wechsler, 2003) and did not have anxiety disorders, mood disorders or other developmental disorders, with the exception of the cases with language disorder and the case with ADHD. Children were not receiving any additional treatment, including medication. Written consent was obtained from the children’s parents in the context of the private practice.

Table 2. Main characteristics of the sample in terms of reading and cognitive abilities.

Materials and Methods

Pre-/post-test assessment and procedure of the training.

Each child started a training program through the distance rehabilitation platform Ridinet, using the Cloze app, after an assessment of learning and cognitive abilities that included comprehension assessment with two texts, one narrative and one informative (Cornoldi and Carretti, 2016; Cornoldi et al., 2017). Children had to connect to the Ridinet website to access the app, three or four times a week for about 15–20 min per session. The period of use was 3 months for 6 children and 4 months for 22 children. After this period, children’s comprehension was assessed again. Additionally, parents and children were asked some questions about the app’s utility and pleasantness. Children were asked: “Do you think the program helped you improve your text comprehension skills?” and “Did you like doing this program instead of the same exercises on paper?” Parents were asked: “Was it difficult to start the Cloze activities on days when it had to be done?” and “Compared to the beginning of the treatment, how do you currently judge the ability of your child to understand the texts?” For all questions except the last one, the answer was given on a 5-point scale (1 = not at all, 2 = a little, 3 = enough, 4 = very, 5 = very much). For the last question, the answer was given on a 4-point scale (1 = got worse, 2 = unchanged, 3 = slightly improved, 4 = greatly improved).

Comprehension Tasks

Reading comprehension was assessed with two texts, the first narrative and the other informative, taken from Italian batteries for the assessment of reading ( Cornoldi and Carretti, 2016 ; Cornoldi et al., 2017 ). The texts range between 226 and 455 words in length, and their length increases with school grade (in order to have texts and questions matching the degrees of expertise at different grades the batteries include a different pair of texts for each grade). Students read the text in silence at their own pace, then answer a variable number of multiple-choice questions (depending on school grade), choosing one of four possible answers. There is no time limit, and students can reread the text whenever they wish. The final score is calculated as the total number of correct answers for each text. Alpha coefficients, as reported by the manuals, range between 0.61 and 0.83. For the purposes of the study we decided to use the same two comprehension texts, at pre-test and post-test, as the procedure offered the opportunity of directly examining and showing to parents changes in comprehension and previous evidence had shown the absence of relevant retest effects with this material in a retest carried out after 3 months ( Viola and Carretti, 2019 ).

Distance Rehabilitation Program: Cloze

Cloze (Cornoldi and Bertolo, 2013) is an app for the promotion of text comprehension with the specific aim of recovering processes of lexical and semantic inference. At each work session the child works with texts that lack words and must fill in the empty spaces by choosing the correct alternative from those automatically proposed by the app, so that the text becomes coherent. The program is adaptive, as text complexity and the proportion of missing words vary according to the child’s previous level of response, and is designed for children who have weaknesses in written text comprehension, mainly due to poor skills in lexical and semantic inferential processes. The app also makes it possible to strengthen a set of language skills (phonology, syntax, semantics) that contribute to the fluency of text processing and production. The recommended age range for the use of this program is between 7 and 14 years. In this study the semantic mode (only content words may be missing and no syntactic cues can be used for deciding between the alternatives) was proposed to 21 children and the syntactic mode (where all words may be missing) to 7 children. The mode selected for each child depended on pre-test performance and diagnosis. A clinician, co-author of the present study (LB), monitored each child’s results and activities with the app and sent him/her motivational messages from time to time. These messages were typically sent once a week to congratulate the child on the work done and to check for any problems that had emerged. Training lasted from 3 to 4 months and involved 3 or 4 sessions of 15–20 min per week. The variation in duration depended on the decision of each individual family: children were required to use the software for about 4 months or, in any case, for a minimum of 3 months (the choice made by six families).
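
To make the distinction between the two modes concrete, the following Python sketch builds a gapped text from a sentence. It is a minimal illustration under stated assumptions and does not reproduce the Cloze app: the function `make_cloze`, the tiny `FUNCTION_WORDS` list, the fixed "every n-th eligible word" rule, and the example sentence are all hypothetical, and the multiple-choice alternatives and adaptive difficulty described above are not modeled.

```python
import re

# Hypothetical, deliberately tiny list of function words (an assumption for
# illustration; a real tool would rely on a lexicon or a part-of-speech tagger).
FUNCTION_WORDS = {"the", "a", "an", "and", "or", "but", "of", "to", "in",
                  "on", "at", "is", "was", "it", "he", "she", "they"}


def make_cloze(text, mode="semantic", every=3, gap="____"):
    """Blank out every `every`-th eligible word; return (gapped_text, answers).

    mode="semantic":  only content words (not in FUNCTION_WORDS) may be removed.
    mode="syntactic": any word may be removed.
    A smaller `every` gives a larger proportion of missing words.
    """
    if mode not in ("semantic", "syntactic"):
        raise ValueError("mode must be 'semantic' or 'syntactic'")
    if every < 1:
        raise ValueError("every must be at least 1")

    # Split into alternating runs of word characters and separators so that
    # joining the pieces back together preserves spacing and punctuation.
    tokens = re.findall(r"\w+|\W+", text)
    gapped, answers, eligible_seen = [], [], 0
    for token in tokens:
        is_word = re.fullmatch(r"\w+", token) is not None
        eligible = is_word and (
            mode == "syntactic" or token.lower() not in FUNCTION_WORDS
        )
        if eligible:
            eligible_seen += 1
            if eligible_seen % every == 0:
                answers.append(token)
                gapped.append(gap)
                continue
        gapped.append(token)
    return "".join(gapped), answers


sentence = "The dog chased the cat and the cat climbed a tree."
print(make_cloze(sentence, mode="semantic", every=2))
print(make_cloze(sentence, mode="syntactic", every=2))
```

In this sketch, lowering `every` is the simplest way to raise the proportion of missing words, which is one of the two difficulty dimensions the text mentions.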

Effects on Reading Comprehension of Cloze Training

All analyses were carried out with SPSS 25 (IBM Corp, 2017). A preliminary analysis found that all the examined variables met the assumption of normality (K-S between 0.106 and 0.143, p > 0.05). We then compared the children’s reading comprehension performance before and after the computerized training with Cloze. For this analysis, a repeated-measures analysis of variance (ANOVA) was conducted on comprehension scores to examine the differences in the whole group of children between the scores obtained before and after the training. A significant difference was found for both comprehension texts [F(1,27) = 22.37, p < 0.001, η²p = 0.453 and F(1,27) = 38.90, p < 0.001, η²p = 0.590, respectively]. Possible differences between the two training modalities (semantic vs. syntactic) and between the two training periods (3 months vs. 4 months) were then analyzed; no significant differences emerged between groups in either case [F(1,27) < 1].
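
For readers who wish to cross-check a reported effect size, partial eta squared can be recovered from an F statistic and its degrees of freedom through the standard identity η²p = (F × df_effect) / (F × df_effect + df_error). The short Python sketch below applies it to the first F value reported above; the function name is ours and is not part of the SPSS output.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and degrees of freedom positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# First pre/post comparison reported in the text: F(1,27) = 22.37
print(round(partial_eta_squared(22.37, 1, 27), 3))
```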

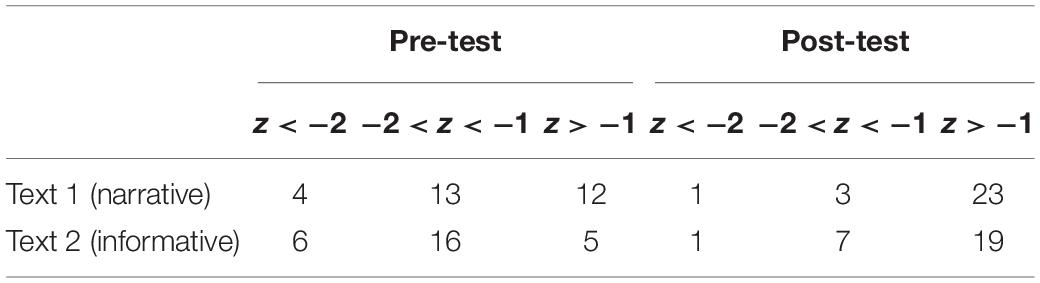

Secondly, to analyze the role of individual differences at pre-test, the standardized training gain score (STG; Jaeggi et al., 2011), computed as the post-test score minus the pre-test score, divided by the SD of the pre-test scores, was calculated for each of the two comprehension texts. Pearson correlations were computed between the STG and the variables collected at pre-test (reading speed and errors, and the WISC-IV Full Scale IQ, Verbal Comprehension, Perceptual Reasoning, Working Memory and Processing Speed indexes). The only significant correlation was between the STG for the narrative text and the Verbal Comprehension Index of the WISC-IV (r = 0.38, p = 0.048). Finally, individual improvements from pre- to post-test were also confirmed by considering changes in performance in terms of standard deviations in relation to norms (provided by the manual). Table 3 shows, for each comprehension text, the number of children who moved from a performance at least 2 standard deviations below the mean, or between 1 and 2 standard deviations below the mean, to a performance higher than 1 standard deviation below the mean.
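
The STG computation can be written out as a short Python sketch. The scores below are invented purely for illustration and are not data from this study, and the use of the sample standard deviation is an assumption, since the text does not specify which SD formula was applied.

```python
from statistics import stdev


def standardized_training_gains(pre, post):
    """Return one STG per child: (post - pre) / SD of the pre-test scores."""
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    if len(pre) < 2:
        raise ValueError("at least two children are needed to compute an SD")
    sd_pre = stdev(pre)  # sample SD (assumption; see the note above)
    if sd_pre == 0:
        raise ValueError("pre-test scores have zero variance")
    return [(after - before) / sd_pre for before, after in zip(pre, post)]


# Invented scores for five hypothetical children (number of correct answers).
pre_scores = [4, 6, 5, 7, 8]
post_scores = [6, 9, 7, 8, 10]

gains = standardized_training_gains(pre_scores, post_scores)
print([round(g, 2) for g in gains])
```

Dividing by the pre-test SD expresses each child's raw gain in units of the group's initial variability, which makes gains on the narrative and informative texts comparable even if the two tests have different score ranges.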

Table 3. Changes in performance in relation to norms (provided by the manual) after the Cloze training program.

Perceived Utility and Pleasantness of Cloze and Improvements Reported by Parents and Children

The answers of parents and children about the utility, pleasantness and self-perceived efficacy of the app were also analyzed. On the first question, addressing children’s perceived improvement in comprehension skills, more than half of the sample chose “very” or “very much” (15 “very” and 5 “very much”), only 1 child answered “a little,” and the others chose “enough.” On the second question, about the pleasure of doing this kind of activity instead of pen-and-paper activities, all children answered “very” or “very much.” Concerning the parents’ questions, on the first question, about the difficulty of starting the Cloze activity, only one parent answered “enough,” a quarter of the sample chose “a little” (seven families), and the other 20 families chose “not at all.” On the last question, about the perceived efficacy of the training for their child’s performance, the large majority of families chose “slightly improved” or “greatly improved,” and only three parents thought their child’s ability had remained unchanged. However, no correlations were found between parents’ and children’s perceived improvements and the STG in reading comprehension.

Discussion

The present study examined the effects of Cloze, a distance rehabilitation program focused on inference skills, on reading comprehension. The hypothesis was that, since inference making is related to reading comprehension at different ages (e.g., Oakhill and Cain, 2012), the training activities should have positive effects on reading comprehension.

Concerning the efficacy of computer-assisted training programs, the literature shows that many training programs are devised for an educational context. Results are generally encouraging, with positive effects on reading comprehension measured with materials different from those practiced during the training. However, few studies have analyzed efficacy in children with specific reading comprehension problems, and no studies have considered the possibility of carrying out training at home under the distance supervision of an expert. These characteristics are what distinguish Cloze from the programs in the existing literature. Cloze is based on an online rehabilitation platform that allows the child to complete personalized training activities several times a week without leaving home, while enabling the clinician to monitor the child’s progress and adjust the characteristics of the activities. The advantage of this procedure is twofold: on the one hand, it increases the potential number of training sessions per week; on the other, it saves the time needed to reach the rehabilitation center and reduces the costs of the intervention.

The preliminary data on Cloze were generally positive: children, working on either of two slightly different versions of the same program, showed a generalized improvement in reading comprehension tasks and, together with their families, expressed appreciation for the pleasantness and the efficacy of the program. Encouraging results also emerged from the analysis of individual improvements with reference to normative scores, as reported in Table 3: most of the children’s performance moved from a markedly low level to an average level.

It is noteworthy that the efficacy of the training was assessed with materials different from those practiced during the training sessions, since the reading comprehension tasks required reading a paper text and answering a series of multiple-choice questions. In future studies it would be interesting to analyze the effects of the program on skills known to be related to text comprehension, such as vocabulary or comprehension monitoring. Since these variables are highly predictive of comprehension skills (and given that training in these skills sometimes improves comprehension; e.g., Beck et al., 1982; see also Hulme and Snowling, 2011), there is good reason to believe that training that specifically targets comprehension might, in turn, lead to improvements in vocabulary or comprehension monitoring skills. Further studies are needed to explore this hypothesis.

A second relevant finding of the present study is the positive correlation between the gain obtained in one of the reading comprehension texts (the narrative one) and the Verbal Comprehension Index (VCI) of the WISC-IV battery, showing that children who started with more resources in verbal intelligence achieved greater improvements in text comprehension through Cloze, at least with one type of text. The activities probably required children to develop some kind of strategy, and for this reason students with greater verbal intellectual resources, who were presumably more able to develop new strategies, had an advantage. Indeed, this amplification effect is usually found when training activities require the development of strategies (von Bastian and Oberauer, 2014). This result has clinical and educational implications, inviting professionals and teachers to consider children’s starting resources and, if necessary, to combine activities conducted through distance rehabilitation programs with personal intervention sessions that could teach strategies and promote a metacognitive approach to reading comprehension.

However, some limitations of the present study must be acknowledged. Firstly, the study did not include a control group, so the findings should be taken with caution. Nonetheless, normative data and previous results obtained with the same test support the robustness of our results: normative data indicate how reading comprehension skills develop in typically developing children without specific training and therefore function as a sort of passive control group. Secondly, the treated group, although characterized by a common reading comprehension difficulty, was partly heterogeneous, as children attended different grades and could have different diagnoses. Unfortunately, the limited number of participants made it impossible to form groups defined by both grade and diagnosis, and therefore to run analyses with grade and diagnosis as between-subjects factors. Future studies should examine a more homogeneous population or consider a larger sample of children, providing more information about the efficacy of the training in different populations of children. Additionally, because the treatment ended with the post-training assessment, it was not possible to examine maintenance effects with a follow-up. Despite these limitations, this study offers evidence concerning the efficacy of new methods based on computer-assisted training programs that could be beneficial in training high-level skills such as comprehension and inference generation. Such tools can be extremely worthwhile for struggling readers who may need further attention in mastering higher-level reading comprehension.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

AC, CC and BC contributed to the design and implementation of the research. LB provided the data. BC organized the database. AC performed the statistical analysis. ED did the literature research and wrote the section about the review of the literature. AC and BC wrote the other sections. CC contributed to the manuscript revision, read and approved the submitted version.

The present work was carried out within the scope of the research program Dipartimenti di Eccellenza (art.1, commi 314-337 legge 232/2016), which was supported by a grant from MIUR to the Department of General Psychology, University of Padua and partially supported by a grant (PRIN 2015, 2015AR52F9_003) to Cesare Cornoldi funded by the Italian Ministry of Research and Education (MIUR).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Beck, I. L., Perfetti, C. A., and McKeown, M. G. (1982). Effects of long-term vocabulary instruction on lexical access and reading comprehension. J. Educ. Psychol. 74, 506–521. doi: 10.1037/0022-0663.74.4.506

CrossRef Full Text | Google Scholar

Channa, M. A., Nordin, Z. S., Siming, I. A., Chandio, A. A., and Koondher, M. A. (2015). Developing reading comprehension through metacognitive strategies: a review of previous studies. Eng. Lang. Teach. 8, 181–186. doi: 10.5539/elt.v8n8p181

Chen, H. (2009). Online reading comprehension strategies among fifth- and sixth-grade general and special education students. Educ. Res. Perspect. 37, 79–109.

Google Scholar

Cornoldi, C., and Bertolo, L. (2013). Cloze Ridinet. Bologna: Anastasis.

Cornoldi, C., and Carretti, B. (2016). Prove MT-3-Clinica. Firenze: Giunti Edu.

Cornoldi, C., Carretti, B., and Colpo, C. (2017). Prove MT-Kit Scuola. Dalla valutazione degli Apprendimenti di Lettura E Comprensione Al Potenziamento. [MT-Kit for the Assessment In The School. From Reading Assessment To Its Enhancement]. Firenze: Giunti Edu.

Cullen, J. M., Alber-Morgan, S. R., Schnell, S. T., and Wheaton, J. E. (2014). Improving reading skills of students with disabilities using headsprout comprehension. Remed. Spec. Educ. 35, 356–365. doi: 10.1177/0741932514534075

De Beni, R., and Palladino, P. (2000). Intrusion errors in working memory tasks: are they related to reading comprehension ability? Learn. Individ. Differ. 12, 131–143. doi: 10.1016/s1041-6080(01)00033-4

Delgado, P., Vargas, C., Ackerman, R., and Salmerón, L. (2018). Don’t throw away your printed books: a meta-analysis on the effects of reading media on reading comprehension. Educ. Res. Rev. 25, 23–38. doi: 10.1016/j.edurev.2018.09.003

Gonzalez, M. (2014). The effect of embedded text-to-speech and vocabulary eBook scaffolds on the comprehension of students with reading disabilities. Intern. J. Spec. Educ. 29, 111–125.

Greene, B. (2001). Testing reading comprehension of theoretical discourse with cloze. J. Res. Read. 24, 82–98. doi: 10.1111/1467-9817.00134

Hulme, C., and Snowling, M. J. (2011). Children’s reading comprehension difficulties: nature, causes, and treatments. Curr. Direct. Psychol. Sci. 20, 139–142. doi: 10.1177/0963721411408673

IBM Corp (2017). IBM SPSS Statistics for Windows, Version 25.0. Armonk, NY: IBM Corp.

Jaeggi, S. M., Buschkuehl, M., Jonides, J., and Shah, P. (2011). Short-and long-term benefits of cognitive training. Proc. Natl. Acad. Sci. U.S.A. 108, 10081–10086. doi: 10.1073/pnas.1103228108

Johnson-Glenberg, M. C. (2005). Web-based training of metacognitive strategies for text comprehension: focus on poor comprehenders. Read. Writ. 18, 755–786. doi: 10.1007/s11145-005-0956-5

Kerr, M. A., and Symons, S. E. (2006). Computerized presentation of text: effects on children’s reading of informational material. Read. Writ. 19, 1–19. doi: 10.1007/s11145-003-8128-y

Kim, A. H., Vaughn, S., Klingner, J. K., Woodruff, A. L., Klein Reutebuch, C., and Kouzekanani, K. (2006). Improving the reading comprehension of middle school students with disabilities through computer-assisted collaborative strategic reading. Remed. Spec. Educ. 27, 235–249. doi: 10.1177/07419325060270040401

Kleinsz, N., Potocki, A., Ecalle, J., and Magnan, A. (2017). Profiles of French poor readers: underlying difficulties and effects of computerized training programs. Learn. Individ. Differ. 57, 45–57. doi: 10.1016/j.lindif.2017.05.009

Krieger, R. (2017). The Effect of Electronic Text Reading on Reading Comprehension Scores of Students with Disabilities. Master thesis, Governors State University, Park, IL.

Leong, C. K. (1992). Enhancing reading comprehension with text-to-speech (DECtalk) computer system. Read. Writ. 4, 205–217. doi: 10.1007/bf01027492

Magliano, J. P., Todaro, S., Millis, K., Wiemer-Hastings, K., Kim, H. J., and McNamara, D. S. (2005). Changes in reading strategies as a function of reading training: a comparison of live and computerized training. J. Educ. Comput. Res. 32, 185–208. doi: 10.2190/1ln8-7bqe-8tn0-m91l

Mangen, A., Walgermo, B. R., and Brønnick, K. (2013). Reading linear texts on paper versus computer screen: effects on reading comprehension. Intern. J. Educ. Res. 58, 61–68. doi: 10.1016/j.ijer.2012.12.002

McNamara, D. S., O’Reilly, T. P., Best, R. M., and Ozuru, Y. (2006). Improving adolescent students’ reading comprehension with iSTART. Intern. J. Educ. Res. 34, 147–171. doi: 10.2190/1ru5-hdtj-a5c8-jvwe

Meyer, B. J., Wijekumar, K. K., and Lin, Y. C. (2011). Individualizing a web-based structure strategy intervention for fifth graders’ comprehension of nonfiction. J. Educ. Psychol. 103, 140–168. doi: 10.1037/a0021606

Mich, O., Pianta, E., and Mana, N. (2013). Interactive stories and exercises with dynamic feedback for improving reading comprehension skills in deaf children. Comput. Educ. 65, 34–44. doi: 10.1016/j.compedu.2013.01.016

Moran, J., Ferdig, R. E., Pearson, P. D., Wardrop, J., and Blomeyer, R. L. Jr. (2008). Technology and reading performance in the middle-school grades: a meta-analysis with recommendations for policy and practice. J. Liter. Res. 40, 6–58. doi: 10.1080/10862960802070483

Niedo, J., Lee, Y. L., Breznitz, Z., and Berninger, V. W. (2014). Computerized silent reading rate and strategy instruction for fourth graders at risk in silent reading rate. Learn. Disabil. Q. 37, 100–110. doi: 10.1177/0731948713507263

Oakhill, J. V., and Cain, K. (2012). The precursors of reading ability in young readers: evidence from a four-year longitudinal study. Sci. Stud. Read. 162, 91–121. doi: 10.1080/10888438.2010.529219

Oakhill, J. V., Cain, K., and Bryant, P. E. (2003). The dissociation of word reading and text comprehension: evidence from component skills. Lang. Cogn. Process. 18, 443–468. doi: 10.1080/01690960344000008

O’Reilly, T. P., Sinclair, G. P., and McNamara, D. S. (2004). “iSTART: A web-based reading strategy intervention that improves students’ science comprehension,” in Proceedings of the IADIS International Conference Cognition and Exploratory Learning in Digital Age: CELDA 2004 , eds D. G. Kinshuk and P. Isaias (Lisbon: IADIS), 173–180.

Ortlieb, E., Sargent, S., and Moreland, M. (2014). Evaluating the efficacy of using a digital reading environment to improve reading comprehension within a reading clinic. Read. Psychol. 35, 397–421. doi: 10.1080/02702711.2012.683236

Potocki, A., Ecalle, J., and Magnan, A. (2013). Effects of computer-assisted comprehension training in less skilled comprehenders in second grade: a one-year follow-up study. Comput. Educ. 63, 131–140. doi: 10.1016/j.compedu.2012.12.011

Potocki, A., Magnan, A., and Ecalle, J. (2015). Computerized trainings in four groups of struggling readers: specific effects on word reading and comprehension. Res. Dev. Disabil. 45, 83–92. doi: 10.1016/j.ridd.2015.07.016

Rideout, V. J., Foehr, U. G., and Roberts, D. F. (2010). Generation M 2: Media in the Lives of 8-to 18-Year-Olds. San Francisco, CA: Henry J. Kaiser Family Foundation.

Singer, L. M., and Alexander, P. A. (2017). Reading on paper and digitally: what the past decades of empirical research reveal. Rev. Educ. Res. 87, 1007–1041. doi: 10.3102/0034654317722961

Viola, F., and Carretti, B. (2019). Cambiamentinelleabilità di letturanelcorso di unostesso anno scolastico [Changes in readingskillsduring the sameschoolyear]. Dislessia 16, 147–159. doi: 10.14605/DIS1621902

von Bastian, C. C., and Oberauer, K. (2014). Effects and mechanisms of working memory training: a review. Psychol. Res. 78, 803–820. doi: 10.1007/s00426-013-0524-526

Wechsler, D. (2003). Wechsler Intelligence Scale For Children–Fourth Edition (WISC-IV). San Antonio, TX: The Psychological Corporation.

Wijekumar, K. K., Meyer, B. J., and Lei, P. (2012). Large-scale randomized controlled trial with 4th graders using intelligent tutoring of the structure strategy to improve nonfiction reading comprehension. Educ. Technol. Res. Dev. 60, 987–1013. doi: 10.1007/s11423-012-9263-4

Wijekumar, K. K., Meyer, B. J., and Lei, P. (2013). High-fidelity implementation of web-based intelligent tutoring system improves fourth and fifth graders content area reading comprehension. Comput. Educ. 68, 366–379. doi: 10.1016/j.compedu.2013.05.021

Wijekumar, K. K., Meyer, B. J., and Lei, P. (2017). Web-based text structure strategy instruction improves seventh graders’ content area reading comprehension. J. Educ. Psychol. 109, 741–760. doi: 10.1037/edu0000168

Woolley, G. (2011). “Reading comprehension,” in Reading Comprehension: Assisting Children With Learning Difficulties , ed Springer Science+Business Media (Dordrecht, NL: Springer), doi: 10.1007/978-94-007-1174-7_2

Yuill, N., and Oakhill, J. (1988). Effects of inference awareness training on poor reading comprehension. Appl. Cogn. Psychol. 2, 33–45. doi: 10.1002/acp.2350020105

Keywords: reading comprehension, training, distance rehabilitation program, digital device, Cloze app

Citation: Capodieci A, Cornoldi C, Doerr E, Bertolo L and Carretti B (2020) The Use of New Technologies for Improving Reading Comprehension. Front. Psychol. 11:751. doi: 10.3389/fpsyg.2020.00751

Received: 20 November 2019; Accepted: 27 March 2020; Published: 23 April 2020.

Copyright © 2020 Capodieci, Cornoldi, Doerr, Bertolo and Carretti. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY) . The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Agnese Capodieci, [email protected] ; Laura Bertolo, [email protected]

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

A Synthesis of Reading Interventions and Effects on Reading Comprehension Outcomes for Older Struggling Readers

This article reports a synthesis of intervention studies conducted between 1994 and 2004 with older students (Grades 6–12) with reading difficulties. Interventions addressing decoding, fluency, vocabulary, and comprehension were included if they measured the effects on reading comprehension. Twenty-nine studies were located and synthesized. Thirteen studies met criteria for a meta-analysis, yielding an effect size (ES) of 0.89 for the weighted average of the difference in comprehension outcomes between treatment and comparison students. Word-level interventions were associated with ES = 0.34 in comprehension outcomes between treatment and comparison students. Implications for comprehension instruction for older struggling readers are described.

Although educators have historically emphasized improving students’ reading proficiency in the elementary school years, reading instruction for secondary students with reading difficulties has been less prevalent. As a result, secondary students with reading difficulties are infrequently provided reading instruction, thus widening the gap between their achievement and that of their grade-level peers. Recent legislation, such as the No Child Left Behind Act ( NCLB; 2002 ), has prompted schools to improve reading instruction for all students, including those in middle and high school. Many secondary students continue to demonstrate difficulties with reading, and educators continue to seek information on best practices for instructing these students.

The National Assessment of Educational Progress (NAEP) administered a reading assessment in 2002 to approximately 343,000 students in Grades 4 and 8. According to the NAEP data, there was no significant change in progress for students between 1992 and 2002, and Grade 8 scores in 2003 actually decreased ( Grigg, Daane, Jin, & Campbell, 2003 ). The NAEP also conducted a long-term trend assessment in reading, which documented performance from 1971 to 2004 for students ages 9, 13, and 17. Although scores for the 9-year-olds showed improvements compared to the scores for this age in 1971 and 1999, this was not the case for the 13- and 17-year-olds. Although the scores at the 75th and 90th percentile for the 13-year-olds significantly improved from 1971 to 2004, there were no significant differences between scores in 1999 and 2004. For the 17-year-olds, there were no significant differences at any of the percentiles selected in 2004, nor were there differences between the 1971 and 1999 scores. These data suggest that the education system is not effectively preparing some adolescents for reading success and that information on effective instructional practices is needed to improve these trends.

Expectations

Secondary students face increasing accountability measures along with a great deal of pressure to meet the demands of more difficult curricula and content ( Swanson & Hoskyn, 2001 ). In the past decade, students have become responsible for learning more complex content at a rapid pace to meet state standards and to pass outcome assessments ( Woodruff, Schumaker, & Deschler, 2002 ).

Our educational system expects that secondary students are able to decode fluently and comprehend material with challenging content ( Alvermann, 2002 ). Some struggling secondary readers, however, lack sufficient advanced decoding, fluency, vocabulary, and comprehension skills to master the complex content ( Kamil, 2003 ).

In a climate where many secondary students continue to struggle with reading and schools face increasingly difficult accountability demands, it is essential to identify the instruction that will benefit struggling secondary readers. Secondary teachers require knowledge of best practices to provide appropriate instruction, prevent students from falling farther behind, and help bring struggling readers closer to reading for knowledge and pleasure.

Comprehension Research

The ultimate goal of reading instruction at the secondary level is comprehension—gaining meaning from text. A number of factors contribute to students’ not being able to comprehend text. Comprehension can break down when students have problems with one or more of the following: (a) decoding words, including structural analysis; (b) reading text with adequate speed and accuracy (fluency); (c) understanding the meanings of words; (d) relating content to prior knowledge; (e) applying comprehension strategies; and (f) monitoring understanding ( Carlisle & Rice, 2002 ; National Institute for Literacy, 2001 ; RAND Reading Study Group, 2002 ).

Because many secondary teachers assume that students who can read words accurately can also comprehend and learn from text simply by reading, they often neglect teaching students how to approach text to better understand the content. In addition, because of increasing accountability, many teachers emphasize the content while neglecting to instruct students on how to read for learning and understanding ( Pressley, 2000 ; RAND Reading Study Group, 2002 ). Finally, the readability level of some text used in secondary classrooms may be too high for below-grade level readers, and the “unfriendliness” of some text can result in comprehension challenges for many students ( Mastropieri, Scruggs, & Graetz, 2003 ).

The RAND Reading Study Group (2002) created a heuristic for conceptualizing reading comprehension. Fundamentally, comprehension occurs through an interaction among three critical elements: the reader, the text, and the activity. The capacity of the reader, the values ascribed to text and text availability, and reader’s activities are among the many variables that are influenced and determined by the sociocultural context that both shapes and is shaped by each of the three elements. This synthesis addresses several critical aspects of this proposed heuristic—the activity or intervention provided for students at risk and, when described in the study, the text that was used. Because the synthesis focuses on intervention research, questions about what elements of interventions were associated with reading comprehension were addressed. This synthesis was not designed to address other critical issues, including the values and background of readers and teachers and the context in which teachers and learners interacted. Many of the social and affective variables associated with improved motivation and interest in text for older readers and how these variables influenced outcomes are part of the heuristic of reading comprehension, but we were unable to address them in this synthesis.

Rationale and Research Question

Many of the instructional practices suggested for poor readers were derived from observing, questioning, and asking good and poor readers to “think aloud” while they read ( Dole, Duffy, Roehler, & Pearson, 1991 ; Heilman, Blair, & Rupley, 1998 ; Jiménez, Garcia, & Pearson, 1995 , 1996 ). These reports described good readers as coordinating a set of highly complex and well-developed skills and strategies before, during, and after reading so that they could understand and learn from text and remember what they read ( Paris, Wasik, & Turner, 1991 ). When compared with good readers, poor readers were considerably less strategic ( Paris, Lipson, & Wixson, 1983 ). Good readers used the following skills and strategies: (a) reading words rapidly and accurately; (b) noting the structure and organization of text; (c) monitoring their understanding while reading; (d) using summaries; (e) making predictions, checking them as they read, and revising and evaluating them as needed; (f) integrating what they know about the topic with new learning; and (g) making inferences and using visualization ( Jenkins, Heliotis, Stein, & Haynes, 1987 ; Kamil, 2003 ; Klingner, Vaughn, & Boardman, 2007 ; Mastropieri, Scruggs, Bakken, & Whedon, 1996 ; Pressley & Afflerbach, 1995 ; Swanson, 1999 ; Wong & Jones, 1982 ).

Previous syntheses have identified critical intervention elements for effective reading instruction for students with disabilities across grade levels (e.g., Gersten, Fuchs, Williams, & Baker, 2001 ; Mastropieri et al., 1996 ; Swanson, 1999 ). For example, we know that explicit strategy instruction yields strong effects for comprehension for students with learning difficulties and disabilities ( Biancarosa & Snow, 2004 ; Gersten et al., 2001 ; National Reading Panel [NRP], 2000 ; RAND Reading Study Group, 2002 ; Swanson, 1999 ). We also know that effective comprehension instruction in the elementary grades teaches students to summarize, use graphic organizers, generate and answer questions, and monitor their comprehension ( Mastropieri et al., 1996 ; Kamil, 2004 ).

However, despite improved knowledge about effective reading comprehension broadly, much less is known regarding effective interventions and reading instruction for students with reading difficulties in the middle and high school grades ( Curtis & Longo, 1999 ). The syntheses previously discussed focused on students identified for special education, examined specific components of reading, and did not present findings for older readers. In recognition of this void in the research, the report on comprehension from the RAND Reading Study Group (2002) cited the need for additional knowledge on how best to organize instruction for low-achieving students. We have conducted the following synthesis to determine the outcome of comprehension, word study, vocabulary, and fluency interventions on reading comprehension of students in Grades 6 through 12. Furthermore, we extended the synthesis to include all struggling readers, not just those with identified learning disabilities. We addressed the following question: How does intervention research on decoding, fluency, vocabulary, and comprehension influence comprehension outcomes for older students (Grades 6 through 12) with reading difficulties or disabilities?

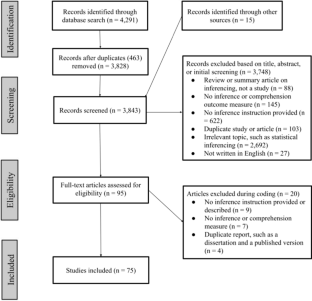

For this synthesis, we conducted a comprehensive search of the literature through a three-step process. The methods described below were developed during prior syntheses conducted by team members ( Kim, Vaughn, Wanzek, & Wei, 2004 ; Wanzek, Vaughn, Wexler, Swanson, & Edmonds, 2006 ). We first conducted a computer search of ERIC and PsycINFO to locate studies published between 1994 and 2004. We selected the last decade of studies to reflect the most current research on this topic. Descriptors or root forms of those descriptors (reading difficult*, learning disab*, LD, mild handi*, mild disab*, reading disab*, at-risk, high-risk, reading delay*, learning delay*, struggle reader, dyslex*, read*, comprehen*, vocabulary, fluen*, word, decod*, English Language Arts) were used in various combinations to capture the greatest possible number of articles. We also searched abstracts from prior syntheses and reviewed reference lists in seminal studies to assure that all studies were identified.

In addition, to assure coverage and because a cumulative review was not located in electronic databases or reference lists, a hand search of 11 major journals from 1998 through 2004 was conducted. Journals examined in this hand search included Annals of Dyslexia, Exceptional Children, Journal of Educational Psychology, Journal of Learning Disabilities, Journal of Special Education, Learning Disability Quarterly, Learning Disabilities Research and Practice, Reading Research Quarterly, Remedial and Special Education , and Scientific Studies of Reading .

Studies were selected if they met all of the following criteria:

- Participants were struggling readers. Struggling readers were defined as low achievers or students with unidentified reading difficulties, with dyslexia, and/or with reading, learning, or speech or language disabilities. Studies also were included if disaggregated data were provided for struggling readers regardless of the characteristics of other students in the study. Only disaggregated data on struggling readers were used in the synthesis.

- Participants were in Grades 6 through 12 (ages 11–21). This grade range was selected because it represents the most common grades describing secondary students. When a sample also included older or younger students and it could be determined that the sample mean age was within the targeted range, the study was accepted.

- The research design was treatment–comparison, single-group, or single-subject.

- Intervention consisted of any type of reading instruction, including word study, fluency, vocabulary, comprehension, or a combination of these.

- The language of instruction was English.

- At least one dependent measure assessed one or more aspects of reading.

- Data for calculating effect sizes were provided in treatment–comparison and single-group studies.

Interrater agreement for article acceptance or rejection was calculated by dividing the number of agreements by the number of agreements plus disagreements and was computed as 95%.
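
The agreement formula above (agreements divided by agreements plus disagreements) can be sketched in a few lines of Python. This is only an illustration: the function name `percent_agreement` and the accept/reject decisions below are hypothetical and are not data from the synthesis.

```python
def percent_agreement(rater_a, rater_b):
    """Percentage agreement between two raters over the same set of items.

    Computed as agreements / (agreements + disagreements) * 100.
    """
    if len(rater_a) != len(rater_b):
        raise ValueError("Both raters must judge the same number of items")
    if not rater_a:
        raise ValueError("At least one item is required")
    agreements = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    disagreements = len(rater_a) - agreements
    return 100.0 * agreements / (agreements + disagreements)


# Hypothetical accept/reject decisions for ten candidate articles.
rater_1 = ["accept", "reject", "accept", "accept", "reject",
           "reject", "accept", "reject", "accept", "accept"]
rater_2 = ["accept", "reject", "accept", "reject", "reject",
           "reject", "accept", "reject", "accept", "accept"]

print(percent_agreement(rater_1, rater_2))
```

Simple percentage agreement does not correct for agreement expected by chance; the sketch follows the formula stated in the text rather than a chance-corrected statistic.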

Data Analysis

Coding procedures.

We employed extensive coding procedures to organize pertinent information from each study. We adapted previously designed code sheets that were developed for past intervention syntheses ( Kim, Vaughn, Wanzek, & Wei, 2004 ). The code sheet included elements specified in the What Works Clearinghouse Design and Implementation Assessment Device ( Institute of Education Sciences, 2003 ), a document used to evaluate the quality of studies.

The code sheet was used to record relevant descriptive criteria as well as results from each study, including data regarding participants (e.g., number, sex, exceptionality type), study design (e.g., number of conditions, assignment to condition), specifications about conditions (e.g., intervention, comparison), clarity of causal inference, and reported findings. Participant information was coded using four forced-choice items (socioeconomic status, risk type, the use of criteria for classifying students with disabilities, and gender) and two open-ended items (age as described in text and risk type as described in text). Similarly, design information was gathered using a combination of forced-choice (e.g., research design, assignment method, fidelity of implementation) and open-ended items (selection criteria). Intervention and comparison information was coded using 10 open-ended items (e.g., site of intervention, role of person implementing intervention, duration of intervention) as well as a written description of the treatment and comparison conditions.

Information on clarity of causal inference was gathered using 11 items for true experimental designs (e.g., sample sizes, attrition, plausibility of intervention contaminants) and 15 items for quasiexperimental designs (e.g., equating procedures, attrition rates). Additional items allowed coders to describe the measures and indicate measurement contaminants. Finally, the precision of outcome for both effect size estimation and statistical reporting was coded using a series of 10 forced-choice yes–no questions, including information regarding assumptions of independence, normality, and equal variance. Effect sizes were calculated using information related to outcome measures, direction of effects, and reading outcome data for each intervention or comparison condition.

After extensive training (more than 10 hr) on the use and interpretation of items from the code sheet, interrater reliability was determined by having six raters independently code a single article. Responses from the six coders were used to calculate the percentage of agreement (i.e., agreements divided by agreements plus disagreements). An interrater reliability of .85 was achieved. Teams of three coded each article, compared results, and resolved any disagreements in coding, with final decisions reached by consensus. To assure even higher reliability than .85 on coding, any item that was ambiguous to coders was discussed until a clear coding response could be determined. Finally, effect sizes for each study were calculated by two raters who had achieved 100% reliability on items related to outcome precision and data.

After the coding had been completed, the studies were summarized in a table format. Table 1 contains information on study design, sample, and intervention implementation (e.g., duration and implementation personnel). In Table 2 , intervention descriptions and effect sizes for reading outcomes are organized by each study’s intervention type and design. Effect sizes and p values are provided when appropriate data were available.

Intervention characteristics

Note. NR = not reported; LD = learning disability; MMR = mild mental retardation; MR = mental retardation; RD = reading disability; ESL = English as a Second Language; EBD = emotional or behavioral disability.

Outcomes by intervention type and design

Note. T = treatment; C = comparison; ES = effect size; PND = percentage of nonoverlapping data; SAT = Stanford Achievement Test; WJRM = Woodcock Johnson Reading Mastery; CBM = curriculum-based measure; WRMT = Woodcock Reading Mastery Test; WRMT-R = Woodcock Reading Mastery Test-Revised; TOWRE = Test of Word Reading Efficiency; CTOPP = Comprehensive Test of Phonological Processing; PPVT = Peabody Picture Vocabulary Test; CRAB = Comprehensive Reading Assessment Battery; SDRT = Stanford Diagnostic Reading Test; SRA = Science Research Associates.

Effect size calculation

Effect sizes were calculated for studies that provided adequate information. For studies lacking data necessary to compute effect sizes, data were summarized using findings from statistical analyses or descriptive statistics. For treatment–comparison design studies, the effect size, d, was calculated as the mean posttest score of the participants in the intervention condition minus the mean posttest score of the participants in the comparison condition, divided by the pooled standard deviation. For studies in this synthesis that employed a treatment–comparison design, effect sizes can be interpreted as follows: d = 0.20 is a small effect, d = 0.50 is a medium effect, and d = 0.80 is a large effect ( Cohen, 1988 ). Effects were adjusted for pretest differences when data were provided. For single-group studies, effect sizes were calculated as the standardized mean change ( Cooper, 1998 ). Outcomes from single-subject studies were calculated as the percentage of nonoverlapping data (PND) ( Scruggs, Mastropieri, & Casto, 1987 ). PND is calculated as the percentage of data points during the treatment phase that are higher than the highest data point from the baseline phase. PND was selected because it offered a more parsimonious means of reporting outcomes for single-subject studies and provided common criteria for comparing treatment impact.
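
To make the two calculations concrete, the following Python sketch computes d and PND as described above. It rests on assumptions that the text does not spell out: the pooled standard deviation uses the common formula that weights each group's sample variance by its degrees of freedom, no pretest adjustment is applied, and the function names and all scores are hypothetical illustrations, not data from the synthesized studies.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(treatment, comparison):
    """Posttest mean difference divided by the pooled standard deviation.

    Assumption: pooled SD weights each sample variance by (n - 1).
    """
    n_t, n_c = len(treatment), len(comparison)
    pooled_var = (
        (n_t - 1) * variance(treatment) + (n_c - 1) * variance(comparison)
    ) / (n_t + n_c - 2)
    return (mean(treatment) - mean(comparison)) / sqrt(pooled_var)


def pnd(baseline, treatment_phase):
    """Percentage of treatment-phase points above the highest baseline point."""
    highest_baseline = max(baseline)
    above = sum(1 for score in treatment_phase if score > highest_baseline)
    return 100.0 * above / len(treatment_phase)


# Hypothetical posttest comprehension scores for two small groups.
treatment_scores = [12, 14, 16, 18, 20]
comparison_scores = [10, 12, 14, 16, 18]
print(round(cohens_d(treatment_scores, comparison_scores), 2))

# Hypothetical single-subject data: baseline phase, then treatment phase.
baseline_points = [2, 3, 4]
treatment_points = [3, 5, 6, 4, 7]
print(pnd(baseline_points, treatment_points))
```

In the hypothetical single-subject series, three of the five treatment-phase points exceed the highest baseline point, so PND is 60%. Points equal to the baseline maximum are not counted, which follows the "higher than" wording in the text.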

Data Analysis Plan

A range of study designs and intervention types was represented in this synthesis. To fully explore the data, we conducted several types of analyses. First, we synthesized study features (e.g., sample size and study design) to highlight similarities, differences, and salient elements across the corpus of studies. Second, we conducted a meta-analysis of a subset of treatment–comparison design studies to determine the overall effect of reading interventions on students’ reading comprehension. In addition to an overall point estimate of reading intervention effects, we reported effects on comprehension by measurement and intervention type. Last, we synthesized trends and results by intervention type across all studies, including single-group and single-subject design studies.

Study Features

A total of 29 intervention studies, all reported in journal articles, met our criteria for inclusion in the synthesis. Studies appeared in a range of journals (as can be seen in the reference list) and were distributed relatively evenly across the years of interest (1994 to 2004). Each study’s design and sample characteristics are described in Table 1 . In the following sections, we summarize information on study features, including sample characteristics, design, and duration of the intervention as well as fidelity of implementation.

Sample characteristics

The 29 studies included 976 students. Sample sizes ranged from 1 to 125, with an average of 51 participants for treatment–comparison studies. The majority of studies targeted middle school students ( n = 19). Five studies focused on high school students, 2 on both middle and high school students, and 3 reported only students’ ages. Although our criteria included interventions for all struggling readers, including those without identified disabilities, only 8 studies included samples of struggling readers without disabilities. The other studies included students with learning or reading disabilities ( n = 17) or a combination of both students with and without disabilities ( n = 4).

Study design

The corpus of studies included 17 treatment–comparison, 9 single-subject, and 3 single-group design studies. The distribution of intervention type by design is displayed in Table 3. The number of treatment–comparison studies with specific design elements that are characteristic of high-quality studies (Institute of Education Sciences, 2003; Raudenbush, 2005; Shadish, 2002) is indicated in Table 4. The three elements in Table 4 were selected because they strengthen the validity of study conclusions when appropriately employed. As indicated, only 2 studies (Abbott & Berninger, 1999; Allinder, Dunse, Brunken, & Obermiller-Krolikowski, 2001) randomly assigned students to conditions, reported implementation fidelity, and measured student outcomes using standardized measures.

Table 3. Type of intervention by study design

Table 4. Quality of treatment–comparison studies

Intervention design and implementation

The number of intervention sessions ranged from 2 to 70. For 11 studies, the number of sessions was not reported and could not be determined from the information provided. Similarly, the frequency and length of sessions were inconsistently reported but are provided in Table 1 when available. For studies that reported the length and number of sessions (n = 12), students were engaged in an average of 23 hr of instruction. For treatment–comparison design studies, the average number of instructional hours provided was 26 (n = 10).

Narrative text was used in most text-level interventions (n = 12). Two studies used both narrative and expository text during the intervention, and 7 used expository text exclusively. For 4 studies, the type of text used was not discernible, and as would be expected, the word-level studies did not include connected text. Roughly equal numbers of interventions were implemented by teachers (n = 13) and researchers (n = 12). Two interventions were implemented by both teachers and researchers, and the person implementing the intervention could not be determined for 2 studies.

Meta-Analysis

To summarize the effect of reading interventions on students’ comprehension, we conducted a meta-analysis of a study subset ( k = 13; Abbott & Berninger, 1999 ; Alfassi, 1998 ; Allinder et al., 2001 ; Anderson, Chan, & Henne, 1995 ; DiCecco & Gleason, 2002 ; L. S. Fuchs, Fuchs, & Kazdan, 1999 ; Hasselbring & Goin, 2004 ; Jitendra, Hoppes, & Xin, 2000 ; Mastropieri et al., 2001 ; Moore & Scevak, 1995 ; Penney, 2002 ; Wilder & Williams, 2001 ; Williams, Brown, Silverstein, & deCani, 1994 ). Studies with theoretically similar contrasts and measures of reading comprehension were included in the meta-analysis. All selected studies compared the effects of a reading intervention with a comparison condition in which the construct of interest was absent. By selecting only studies with contrasts between a treatment condition and a no-treatment comparison condition, we could ensure that the resulting point estimate of the effect could be meaningfully interpreted.

The majority of qualifying studies reported multiple comprehension dependent variables. Thus, we first calculated a composite effect for each study using methods outlined by Rosenthal and Rubin (1986) such that each study contributed only one effect to the aggregate. In these calculations, effects from standardized measures were weighted more heavily (w = 2) than effects from researcher-developed measures. We analyzed a random-effects model with one predictor variable (intervention type) to account for the presence of unexplained variance and to provide a more conservative estimate of effect significance. A weighted average of effects was estimated, and the amount of variance between study effects was calculated using the Q statistic (Shadish & Haddock, 1994). In addition to an overall point estimate of the effect of reading interventions, we also calculated weighted averages to highlight effects of certain intervention characteristics (e.g., using narrative versus expository text). When reporting weighted mean effects, only outcomes from studies with treatment–comparison conditions were included. Effects from single-group studies were excluded because only one study (Mercer, Campbell, Miller, Mercer, & Lane, 2000) provided the information needed to convert the repeated-measures effect size into the same metric as an independent group effect size.
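To make the pooling step concrete, the following is a minimal sketch of a random-effects summary with the Q statistic. It assumes the common DerSimonian-Laird method-of-moments estimator, which the text does not name, and it omits the intervention-type predictor described above. The function name and the input values in the usage note are hypothetical and are not data from the synthesis.

```python
import math


def random_effects_summary(effects, variances):
    """Pool per-study effect sizes (assumed DerSimonian-Laird estimator).

    effects   -- one composite effect size per study
    variances -- the sampling variance of each effect size
    """
    # Fixed-effect (inverse-variance) weights and weighted mean.
    w = [1.0 / v for v in variances]
    fixed_mean = sum(wi * d for wi, d in zip(w, effects)) / sum(w)

    # Q statistic: weighted squared deviations from the fixed-effect mean.
    q = sum(wi * (d - fixed_mean) ** 2 for wi, d in zip(w, effects))
    df = len(effects) - 1

    # Method-of-moments between-study variance, floored at zero.
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random-effects weights add tau2 to each study's sampling variance,
    # which widens the confidence interval when studies disagree.
    w_re = [1.0 / (v + tau2) for v in variances]
    mean = sum(wi * d for wi, d in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))

    return {
        "mean": mean,
        "se": se,
        "ci": (mean - 1.96 * se, mean + 1.96 * se),
        "q": q,
        "tau2": tau2,
    }
```

For example, `random_effects_summary([0.0, 1.0], [0.1, 0.1])` pools two made-up studies; because they disagree, the estimated between-study variance is positive and the interval is wider than a fixed-effect interval would be.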

Overall effect on comprehension

The 13 treatment–comparison studies were included in the meta-analysis because they (a) had theoretically similar contrasts and measures of reading comprehension and (b) examined the effects of a reading intervention with a comparison in which the construct of interest was absent. In 8 studies, the contrast was between the intervention of interest and the school’s current reading instruction. In 5 studies, the comparison condition also received an intervention, but the construct or strategy of interest was absent from that condition. The remaining 4 treatment–comparison studies in the synthesis were eliminated from the meta-analysis because they did not include a comprehension measure ( Bhat, Griffin, & Sindelair, 2003 ; Bhattacharya & Ehri, 2004 ) or they did not include a no-treatment comparison condition ( Chan, 1996 ; Klingner & Vaughn, 1996 ).

A random-effects model was used to provide a more conservative estimate of intervention effect significance. In this model, the weighted average of the difference in comprehension outcomes between students in the treatment conditions and students in the comparison conditions was large (effect size = 0.89; 95% confidence interval (CI) = 0.42, 1.36). That is, students in the treatment conditions scored, on average, nearly nine tenths of a standard deviation higher than students in the comparison conditions on measures of comprehension, and the effect was significantly different from zero.
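The link between the reported interval and the claim of significance can be checked with a short calculation. The sketch below assumes the interval is a symmetric, normal-based 95% CI (critical value 1.96), which the text does not state; the function name is hypothetical.

```python
def se_and_z_from_ci(estimate, lower, upper, z_crit=1.96):
    """Recover the standard error and z statistic implied by a symmetric CI."""
    # A symmetric CI spans z_crit standard errors on each side of the estimate.
    se = (upper - lower) / (2 * z_crit)
    # The z statistic tests the estimate against zero.
    z = estimate / se
    return se, z


# Values reported in the text: effect size = 0.89, 95% CI = 0.42, 1.36.
se, z = se_and_z_from_ci(0.89, 0.42, 1.36)
print(f"implied SE = {se:.3f}, z = {z:.2f}")
```

Under that assumption the implied standard error is about 0.24 and z is well above 1.96, which agrees with the interval excluding zero.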

To examine whether researcher-developed or curriculum-based measures inflated the effect of reading interventions, we also calculated the effect based on standardized measures only. For this analysis, seven studies were included; the other six studies were eliminated from this secondary analysis because they did not include a standardized measure of comprehension. When limited to only studies that included a standardized measure of comprehension, the random-effects model yielded a moderate average effect (effect size = 0.47; 95% CI = 0.12, 0.82). The effect of reading interventions on comprehension was quite large (effect size = 1.19; 95% CI = 1.10, 1.37) when researcher-developed measures were used to estimate the effect ( k = 9).

In a fixed-effects model, intervention type was a significant predictor of effect size variation (Q_between = 22.33, p < .05), which suggests that the effect sizes were not similar across the categories. Weighted average effects for each intervention type (comprehension, fluency, word study, and multicomponent) were calculated and are presented in Table 5. For fluency and word study interventions, the effect was not significant; that is, the average effect on comprehension was not different from zero. For the other intervention types, the effect was significantly different from zero but differed in magnitude. Bonferroni post hoc contrasts showed a significant difference in effects on comprehension between comprehension and multicomponent interventions (p < .025). There was no significant difference between the effects of word study interventions and multicomponent interventions (p > .025).
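As an illustration of how a between-groups Q statistic is typically computed in a fixed-effects subgroup analysis, consider the sketch below. The function names and the example inputs are hypothetical; the study-level values behind the reported Q_between are not given in this section. The Bonferroni helper reflects the assumption that the .025 threshold comes from dividing .05 across two planned contrasts, which is consistent with the text but not stated in it.

```python
def q_between(groups):
    """Between-groups Q for a fixed-effects subgroup analysis.

    groups -- dict mapping a group name to a list of (effect, variance) pairs
    Returns (Q_between, degrees of freedom).
    """
    pooled = {}
    for name, studies in groups.items():
        # Inverse-variance weights within each group.
        weights = [1.0 / var for _, var in studies]
        mean = sum(w * d for w, (d, _) in zip(weights, studies)) / sum(weights)
        pooled[name] = (mean, sum(weights))

    # Grand mean across groups, weighted by each group's total weight.
    total_weight = sum(w_sum for _, w_sum in pooled.values())
    grand_mean = sum(m * w_sum for m, w_sum in pooled.values()) / total_weight

    # Weighted squared deviations of the group means from the grand mean.
    qb = sum(w_sum * (m - grand_mean) ** 2 for m, w_sum in pooled.values())
    return qb, len(groups) - 1


def bonferroni_alpha(alpha, n_contrasts):
    """Per-contrast significance threshold under a Bonferroni correction."""
    return alpha / n_contrasts
```

Q_between is referred to a chi-square distribution with one fewer degrees of freedom than the number of groups, so four intervention types would give 3 degrees of freedom.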

Average weighted effects by measurement and intervention type

We also computed weighted average effects for studies with common characteristics. Whether an intervention was implemented by the researcher ( n = 4, average effect size = 1.15) or the students’ teacher ( n = 8, effect size = 0.77), the effects were large. The 95% CIs for these two conditions did not overlap, suggesting that they are significantly different. Effects on comprehension were different depending on the student population. Moderate average effects were found for samples of struggling readers ( n = 5, effect size = 0.45) or both struggling readers and students with disabilities ( n = 4, effect size = 0.68), but a large effect ( n = 4, effect size = 1.50) was found for studies with samples of only students with disabilities.

Eleven of the 13 studies included in the meta-analysis used reading of connected text as part of the intervention. In an analysis of studies that reported the type of text used, the weighted average effect for interventions using expository text was moderate (n = 3, effect size = 0.53), whereas the average effect for those focusing on narrative text was large (n = 6, effect size = 1.30). Closer examination of the studies with interventions focused on expository text (Alfassi, 1998; DiCecco & Gleason, 2002; Moore & Scevak, 1995) showed that two studies tested the effects of a multicomponent intervention similar in structure to reciprocal teaching and one examined the effects of using graphic organizers.

Intervention Variables

For this synthesis, we examined findings from treatment–comparison design studies first, because the findings from these studies provide the greatest confidence about causal inferences. We then used results from single-group and single-subject design studies to support or refute findings from the treatment–comparison design studies. Findings are summarized by intervention type. Intervention type was defined as the primary reading component addressed by the intervention (i.e., word study, fluency, vocabulary, comprehension). The corpus of studies did not include any vocabulary interventions but did include several studies that addressed multiple components in which vocabulary instruction was represented. Within each summary, findings for different reading outcomes (e.g., fluency, word reading, comprehension) are reported separately to highlight the interventions’ effects on component reading skills.

Comprehension

Nine treatment–comparison studies (Alfassi, 1998; Anderson et al., 1995; Chan, 1996; DiCecco & Gleason, 2002; Jitendra et al., 2000; Klingner & Vaughn, 1996; Moore & Scevak, 1995; Wilder & Williams, 2001; Williams et al., 1994) focused on comprehension. Among these studies, several (Alfassi, 1998; Anderson et al., 1995; Klingner & Vaughn, 1996; Moore & Scevak, 1995) examined interventions in which students were taught a combination of reading comprehension skills and strategies, an approach with evidence of effectiveness in improving students’ general comprehension (NRP, 2000; RAND Reading Study Group, 2002). Two studies (Alfassi, 1998; Klingner & Vaughn, 1996) employed reciprocal teaching (Palincsar, Brown, & Martin, 1987), a model that includes previewing, clarifying, generating questions, and summarizing and has been shown to be highly effective in improving comprehension (for a review, see Rosenshine & Meister, 1994). Klingner and Vaughn (1996) reported mixed results when the grouping structure of a reciprocal teaching intervention was manipulated during student application and practice. On a standardized measure of comprehension, cooperative grouping was the more effective model (effect size = 1.42). On a researcher-developed comprehension measure, the effects were small but favored the peer tutoring group (effect size = 0.35). It is likely that the standardized test outcome is more reliable, suggesting greater effects from the use of cooperative grouping structures, at least for English language learners with reading difficulties. In another study (Alfassi, 1998), effects of reciprocal teaching on comprehension ranged from small to large (effect size = 0.35 to 1.04) when implemented in a remedial high school setting, a context not typically examined in previous studies of reciprocal teaching.

The multiple-strategy intervention in Anderson et al. (1995) resulted in large effects (effect size = 0.80 to 2.08). The repertoire of strategies included previewing and using knowledge of text structure to facilitate understanding. However, another study (Moore & Scevak, 1995), which focused on teaching students to use text structure and features to summarize expository text, reported no positive effects (effect size = −0.57 to 0.07). It should be noted that the intervention in the Anderson et al. (1995) study was conducted for 140 hr (a very extensive intervention). The amount of time for the intervention in the Moore and Scevak (1995) study was not specified, but the study was conducted for only 7 weeks, which suggests a considerably less extensive intervention.

Chan (1996) manipulated both strategy instruction and attribution training and found that poor readers benefited from some attribution training, with the most effective model being attribution training plus successive strategy training (effect size = 1.68). In addition, all three strategy conditions were more effective than the attribution-only condition, which suggests that poor readers also benefit from explicit strategy instruction.

Using graphic organizers is another strategy with demonstrated efficacy in improving comprehension (Kim et al., 2004). One experimental study (DiCecco & Gleason, 2002) and two single-subject studies (Gardhill & Jitendra, 1999; Vallecorsa & deBettencourt, 1997) examined the impact of teaching students to use graphic organizers. In DiCecco and Gleason (2002), the effect of a concept relationship graphic organizer intervention on relational statement production was large (effect size = 1.68). However, the effect was mixed for measures of content knowledge (effect size = 0.08 to 0.50). Other studies also indicated that graphic organizers assisted students in identifying information related to the organizer but were less effective in improving students’ overall understanding of text. For example, in a single-subject study of a story mapping intervention, Gardhill and Jitendra (1999) found mixed results on general comprehension questions (percentage of nonoverlapping data [PND] = 13% to 100%) but consistent improvement compared to baseline on story retell (PND = 100%). Similarly, all three students in a study of explicit story mapping (Vallecorsa & deBettencourt, 1997) increased the number of story elements included in a retell (PND = 67% to 100%).
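The PND values reported for the single-subject studies can be read with the help of a short sketch. It assumes the standard definition of percentage of nonoverlapping data for a behavior expected to increase: the share of treatment-phase data points that exceed the highest baseline data point. The function name and the example scores are hypothetical and are not data from the studies cited above.

```python
def pnd(baseline, treatment):
    """Percentage of nonoverlapping data for an outcome expected to increase."""
    if not baseline or not treatment:
        raise ValueError("both phases need at least one data point")
    # The highest baseline point sets the bar a treatment point must exceed.
    ceiling = max(baseline)
    nonoverlapping = sum(1 for score in treatment if score > ceiling)
    return 100.0 * nonoverlapping / len(treatment)


# Hypothetical retell scores for one student.
print(pnd([2, 3, 4], [5, 6, 7]))  # every treatment point exceeds the baseline maximum
```

A PND of 100% therefore means that no treatment-phase score fell at or below the best baseline score, whereas a low PND, such as the 13% reported for some comprehension questions, means most treatment-phase scores overlapped with baseline performance.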