Criteria for Good Qualitative Research: A Comprehensive Review

- Regular Article

- Open access

- Published: 18 September 2021

- Volume 31, pages 679–689 (2022)

- Drishti Yadav ORCID: orcid.org/0000-0002-2974-0323

This review aims to synthesize a published set of evaluative criteria for good qualitative research. The aim is to shed light on existing standards for assessing the rigor of qualitative research across a range of epistemological and ontological standpoints. Using a systematic search strategy, published journal articles that discuss criteria for rigorous research were identified. Then, the references of relevant articles were surveyed to find noteworthy, distinct, and well-defined pointers to good qualitative research. This review presents an investigative assessment of the pivotal features of qualitative research that can enable readers to judge its quality and to deem it good research when these features are objectively and adequately applied. Overall, this review underlines the crux of qualitative research and accentuates the need to evaluate such research by the very tenets of its being. It also offers some prospects and recommendations to improve the quality of qualitative research. Based on the findings of this review, it is concluded that quality criteria are the product of socio-institutional procedures and existing paradigmatic conventions. Owing to the paradigmatic diversity of qualitative research, a single, specific set of quality criteria is neither feasible nor desirable. Since qualitative research is not a cohesive discipline, researchers need to educate and familiarize themselves with the applicable norms and decisive factors to evaluate qualitative research from within its theoretical and methodological framework of origin.

Introduction

“… It is important to regularly dialogue about what makes for good qualitative research” (Tracy, 2010, p. 837)

What constitutes good qualitative research is highly debatable. Qualitative research encompasses numerous methods grounded in diverse philosophical perspectives. Bryman et al. (2008, p. 262) suggest that “It is widely assumed that whereas quality criteria for quantitative research are well‐known and widely agreed, this is not the case for qualitative research.” Hence, the question of how to evaluate the quality of qualitative research has been continuously debated. These debates have taken place in many areas of science and technology. Examples include areas of psychology, such as general psychology (Madill et al., 2000), counseling psychology (Morrow, 2005), and clinical psychology (Barker & Pistrang, 2005), as well as other social science disciplines, such as social policy (Bryman et al., 2008), health research (Sparkes, 2001), business and management research (Johnson et al., 2006), information systems (Klein & Myers, 1999), and environmental studies (Reid & Gough, 2000). In the literature, these debates are driven by the view that the blanket application of criteria for good qualitative research developed around the positivist paradigm is inappropriate. Such debates stem from the wide range of philosophical backgrounds within which qualitative research is conducted (e.g., Sandberg, 2000; Schwandt, 1996). This methodological diversity has led to the formulation of different sets of criteria applicable to qualitative research.

Among qualitative researchers, the dilemma of determining how to assess research quality is not new, especially when the virtuous triad of objectivity, reliability, and validity (Spencer et al., 2004) is not adequate. Occasionally, the criteria of quantitative research are used to evaluate qualitative research (Cohen & Crabtree, 2008; Lather, 2004). Indeed, Howe (2004) claims that the prevailing paradigm in educational research is scientifically based experimental research. Assumptions about the preeminence of quantitative research can undermine the worth and usefulness of qualitative research by neglecting the importance of matching the research paradigm, the epistemological stance of the researcher, and the choice of methodology to the purpose of the research. Researchers have been cautioned about this in “Paradigmatic controversies, contradictions, and emerging confluences” (Lincoln & Guba, 2000).

In general, qualitative research tends to come from a very different paradigmatic stance and intrinsically demands distinctive criteria for evaluating good research and the varieties of research contributions that can be made. This review attempts to present a series of evaluative criteria for qualitative researchers, arguing that their choice of criteria needs to be compatible with the unique nature of the research in question (its methodology, aims, and assumptions). This review aims to help researchers identify some of the indispensable features or markers of high-quality qualitative research. In a nutshell, the purpose of this systematic literature review is to analyze the existing knowledge on high-quality qualitative research and to verify the existence of research studies dealing with the critical assessment of qualitative research from diverse paradigmatic stances. Unlike existing reviews, this review also suggests some critical directions for improving the quality of qualitative research from different epistemological and ontological perspectives. This review is also intended to provide guidelines for accelerating future developments and dialogue among qualitative researchers on assessing qualitative research.

The rest of this review is structured as follows. Section Methods describes the method followed for performing this review. Section Criteria for Evaluating Qualitative Studies provides a comprehensive description of the criteria for evaluating qualitative studies. It is followed by a summary of strategies to improve the quality of qualitative research in Sect. Improving Quality: Strategies. Section How to Assess the Quality of the Research Findings? explains how to assess the quality of research findings. After that, some quality checklists (as tools to evaluate quality) are discussed in Sect. Quality Checklists: Tools for Assessing the Quality. Finally, the review ends with concluding remarks in Sect. Conclusions, Future Directions, and Outlook, which also presents some prospects for enhancing the quality and usefulness of qualitative research in the social and techno-scientific research community.

Methods

For this review, a comprehensive literature search was performed across several databases using generic search terms such as Qualitative Research, Criteria, etc. The following databases were chosen for the literature search based on the high number of results: IEEE Xplore, ScienceDirect, PubMed, Google Scholar, and Web of Science. The following keywords (and their combinations using the Boolean connectives OR/AND) were adopted for the literature search: qualitative research, criteria, quality, assessment, and validity. Synonyms for these keywords were collected and arranged in a logical structure (see Table 1). All publications in journals and conference proceedings from 1950 to 2021 were considered. Other articles extracted from the references of the papers identified in the electronic search were also included. Because a large number of publications on qualitative research were retrieved during the initial screening, an inclusion criterion was added to the search string to retain only publications focusing mainly on criteria for good qualitative research.
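To make the Boolean combination step concrete, the sketch below ORs the terms inside each concept group and ANDs the groups together, a common way of structuring such searches. It is a minimal illustration only: the grouping of the five keywords into two concepts is a hypothetical choice, the synonyms actually used are those in Table 1 (not reproduced here), the inclusion criterion added to the search string is not modeled, and each database (e.g., PubMed or Web of Science) has its own field tags and syntax that this plain string ignores.

```python
# Minimal sketch: build a Boolean query string by OR-ing terms within
# each concept group and AND-ing the parenthesized groups together.
# The grouping below is HYPOTHETICAL; the real synonyms are in Table 1.


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(groups: list[list[str]]) -> str:
    """OR the terms within each group, then AND the groups."""
    clauses = []
    for group in groups:
        terms = " OR ".join(quote(t) for t in group)
        clauses.append(f"({terms})")
    return " AND ".join(clauses)


if __name__ == "__main__":
    # Hypothetical grouping of the review's five keywords into two concepts.
    groups = [
        ["qualitative research"],
        ["criteria", "quality", "assessment", "validity"],
    ]
    print(build_query(groups))
```

Run as a script, this prints `("qualitative research") AND (criteria OR quality OR assessment OR validity)`. Adding a synonym means appending it to the relevant group, which widens that concept without loosening the AND between concepts.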

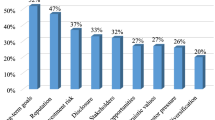

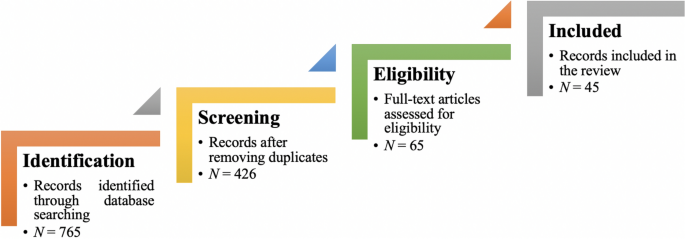

From the selected databases, the search retrieved a total of 765 publications. Duplicate records were then removed. Next, the remaining 426 publications were screened for relevance based on their titles and abstracts, using the inclusion and exclusion criteria given in Table 2. Publications focusing on evaluation criteria for good qualitative research were included, whereas works dealing with theoretical concepts of qualitative research were excluded. After screening and eligibility assessment, 45 research articles were identified that offered explicit criteria for evaluating the quality of qualitative research and were relevant to this review.
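The record counts above can be cross-checked the way a PRISMA flow diagram tallies them: each stage's count is the previous count minus the records removed at that stage. The sketch below derives the implied number of duplicates (765 - 426 = 339) and of records excluded during screening and eligibility (426 - 45 = 381). It assumes that all 45 included articles come from the 426 screened records; because the text notes that articles from reference lists were also added, the true exclusion figure would be larger if some of the 45 came from that route. The split between title/abstract screening and full-text eligibility is not reported, so the two are combined.

```python
from dataclasses import dataclass


@dataclass
class PrismaCounts:
    """Record counts at each stage of the review (figures from the text)."""
    retrieved: int         # records returned by the database searches
    after_duplicates: int  # records left after de-duplication
    included: int          # articles retained for the review

    def duplicates_removed(self) -> int:
        return self.retrieved - self.after_duplicates

    def excluded_after_screening(self) -> int:
        # Title/abstract screening and full-text eligibility are combined,
        # and all included articles are ASSUMED to come from the screened pool.
        return self.after_duplicates - self.included

    def validate(self) -> None:
        """Each stage can only shrink the pool of records."""
        if not (self.retrieved >= self.after_duplicates >= self.included >= 0):
            raise ValueError("stage counts must be non-increasing")


counts = PrismaCounts(retrieved=765, after_duplicates=426, included=45)
counts.validate()
print("Duplicates removed:", counts.duplicates_removed())
print("Excluded at screening/eligibility:", counts.excluded_after_screening())
```

This prints `Duplicates removed: 339` and `Excluded at screening/eligibility: 381`. The `validate` check catches transcription errors in a flow diagram, such as a later stage reporting more records than an earlier one.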

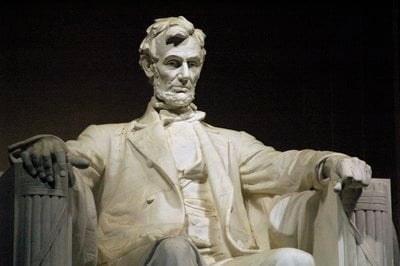

Figure 1 illustrates the complete review process in the form of a PRISMA flow diagram. PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) is used in systematic reviews to improve the quality of reporting.

PRISMA flow diagram illustrating the search and inclusion process. N represents the number of records

Criteria for Evaluating Qualitative Studies

Fundamental Criteria: General Research Quality

Various researchers have put forward criteria for evaluating qualitative research, which are summarized in Table 3. The criteria outlined in Table 4 capture the various approaches to evaluating and assessing the quality of qualitative work. The entries in Table 4 are based on Tracy’s “Eight big‐tent criteria for excellent qualitative research” (Tracy, 2010). Tracy argues that high-quality qualitative work should be judged by criteria focusing on the worthiness, relevance, timeliness, significance, morality, and practicality of the research topic, and on the ethical stance of the research itself. Researchers have also suggested a series of questions as guiding principles for assessing the quality of a qualitative study (Mays & Pope, 2020). Nassaji (2020) argues that good qualitative research should be robust, well informed, and thoroughly documented.

Qualitative Research: Interpretive Paradigms

All qualitative researchers follow highly abstract principles that bring together beliefs about ontology, epistemology, and methodology. These beliefs govern how the researcher perceives and acts. The net that encompasses the researcher’s epistemological, ontological, and methodological premises is referred to as a paradigm, or an interpretive structure: a “basic set of beliefs that guides action” (Guba, 1990). Four major interpretive paradigms structure qualitative research: positivist and postpositivist, constructivist-interpretive, critical (Marxist, emancipatory), and feminist-poststructural. The complexity of these four abstract paradigms increases at the level of concrete, specific interpretive communities. Table 5 presents these paradigms and their assumptions, including their criteria for evaluating research and the typical form that an interpretive or theoretical statement assumes in each paradigm. Moreover, Horsburgh (2003) argues that quantitative conceptualizations of reliability and validity are incompatible with the evaluation of qualitative research. In addition, a series of questions has been put forward in the literature to assist a reviewer (who is proficient in qualitative methods) in the meticulous assessment and endorsement of qualitative research (Morse, 2003). Hammersley (2007) also suggests that guiding principles for qualitative research are advantageous, but that methodological pluralism should not simply be accepted for all qualitative approaches. Seale (1999) also points out the significance of methodological awareness in research studies.

Table 5 reflects that criteria for assessing the quality of qualitative research are the product of socio-institutional practices and existing paradigmatic standpoints. Owing to the paradigmatic diversity of qualitative research, a single set of quality criteria is neither possible nor desirable. Hence, researchers must be reflexive about the criteria they use in the various roles they play within their research community.

Improving Quality: Strategies

Another critical question is: how can qualitative researchers ensure that the abovementioned quality criteria are met? Lincoln and Guba (1986) delineated several strategies to strengthen each criterion of trustworthiness. Other researchers (Merriam & Tisdell, 2016; Shenton, 2004) have also presented such strategies. A brief description of these strategies is given in Table 6.

It is worth mentioning that generalizability is also an integral part of qualitative research (Hays & McKibben, 2021). In general, the guiding principle for generalizability concerns inducing and comprehending knowledge in order to synthesize the interpretive components of an underlying context. Table 7 summarizes the main metasynthesis steps required to establish generalizability in qualitative research.

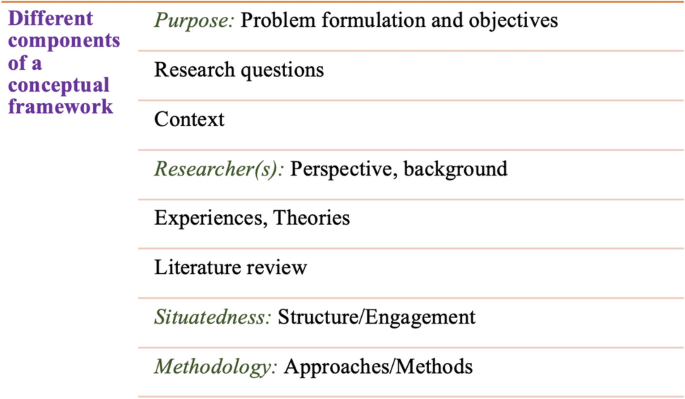

Figure 2 shows the crucial components of a conceptual framework and their contribution to decisions regarding research design, implementation, and the application of results to future thinking, study, and practice (Johnson et al., 2020). The synergy and interrelationship of these components signify their role in the different stages of a qualitative research study.

Essential elements of a conceptual framework

In a nutshell, to assess the rationale of a study, its conceptual framework, and its research question(s), quality criteria must take account of the following: a lucid context for the problem statement in the introduction; well-articulated research problems and questions; a precise conceptual framework; a distinct research purpose; and a clear presentation and investigation of the paradigms. These criteria help improve the quality of qualitative research.

How to Assess the Quality of the Research Findings?

The inclusion of quotes or similar research data enhances confirmability in the write-up of the findings. Expressions such as “80% of all respondents agreed that” or “only one of the interviewees mentioned that” may also be used to quantify qualitative findings (Stenfors et al., 2020). However, Monrouxe and Rees (2020) provide persuasive reasons why such quantification may not strengthen the research. Further, the Discussion and Conclusion sections of an article also serve as robust markers of high-quality qualitative research, as elucidated in Table 8.
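The kind of expression cited from Stenfors et al. (2020), such as “80% of all respondents agreed that”, amounts to counting how many participants were coded with a given theme. The sketch below shows that arithmetic on entirely hypothetical coded interview data (the participant IDs and theme names are invented for illustration). It illustrates the reporting form only; as noted above, Monrouxe and Rees (2020) caution that such quantification may not strengthen the research.

```python
from collections import Counter

# HYPOTHETICAL coded data: participant ID -> set of themes coded in their interview.
coded = {
    "P1": {"workload", "autonomy"},
    "P2": {"workload"},
    "P3": {"autonomy", "recognition"},
    "P4": {"workload", "recognition"},
    "P5": {"workload"},
}


def theme_counts(coding: dict[str, set[str]]) -> Counter:
    """Count how many participants mention each theme (at most once per participant)."""
    counts = Counter()
    for themes in coding.values():
        counts.update(themes)  # a set contributes each theme exactly once
    return counts


def describe(theme: str, coding: dict[str, set[str]]) -> str:
    """Phrase a theme's prevalence as 'k of n participants (p%)'."""
    n = len(coding)
    k = theme_counts(coding)[theme]
    return f"{k} of {n} participants ({100 * k / n:.0f}%) mentioned {theme}"


for theme in ("workload", "recognition"):
    print(describe(theme, coded))
```

The loop prints `4 of 5 participants (80%) mentioned workload` and `2 of 5 participants (40%) mentioned recognition`. Counting each participant at most once per theme keeps the percentage a share of people rather than of mentions; reporting `k of n` alongside the percentage also makes a small denominator visible to the reader.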

Quality Checklists: Tools for Assessing the Quality

Numerous checklists are available to speed up the assessment of the quality of qualitative research. However, if applied uncritically, without regard to the research context, these checklists may be counterproductive. In my view, such lists and guiding principles can help pinpoint the markers of high-quality qualitative research. However, given the enormous variation in authors’ theoretical and philosophical contexts, I would emphasize that heavy reliance on such checklists may say little about whether the findings can be applied in your setting. A combination of such checklists might be appropriate for novice researchers. Some of these checklists are listed below:

The most commonly used framework is the Consolidated Criteria for Reporting Qualitative Research (COREQ) (Tong et al., 2007). Some journals recommend that authors follow this framework when submitting articles.

Standards for Reporting Qualitative Research (SRQR) is another checklist that has been created particularly for medical education (O’Brien et al., 2014 ).

Also, Tracy (2010) and the Critical Appraisal Skills Programme (CASP, 2021) offer criteria for qualitative research that are relevant across methods and approaches.

Further, researchers have also outlined different criteria as hallmarks of high-quality qualitative research. For instance, the “Road Trip Checklist” (Epp & Otnes, 2021) provides a quick reference to specific questions that address different elements of high-quality qualitative research.

Conclusions, Future Directions, and Outlook

This work presents a broad review of the criteria for good qualitative research. In addition, it presents an exploratory analysis of the essential elements of qualitative research that can enable readers of qualitative work to judge it as good research when these elements are objectively and adequately applied. This review highlights some of the essential markers of high-quality qualitative research. I scope them narrowly to achieve rigor in qualitative research and note that they do not fully cover the broader considerations necessary for high-quality research. This review points out that no universal, one-size-fits-all guideline for evaluating the quality of qualitative research exists; in other words, qualitative researchers do not share a common set of guidelines. Accordingly, this review reinforces that each qualitative approach should be treated on its own terms, given its distinctive features across different epistemological and disciplinary positions. Because the worth of qualitative research is sensitive to the specific context and the paradigmatic stance taken, researchers should themselves analyze which approaches can and must be tailored to suit the distinct characteristics of the phenomenon under investigation. Although this article does not claim to offer a magic bullet or a one-stop solution to dilemmas about how, why, or whether to evaluate the “goodness” of qualitative research, it offers a platform to assist researchers in improving their qualitative studies. It provides a set of concerns to reflect on, a series of questions to ask, and multiple sets of criteria to consult when attempting to determine the quality of qualitative research. Overall, this review underlines the crux of qualitative research and accentuates the need to evaluate such research by the very tenets of its being.

Bringing together the vital arguments and delineating the requirements that good qualitative research should satisfy, this review strives to equip researchers as well as reviewers to make well-informed judgments about the worth and significance of the qualitative research under scrutiny. In a nutshell, a comprehensive portrayal of the research process (from the research context, objectives, questions, and design, through the theoretical foundations and approaches to data collection, to the analysis of results and the derivation of inferences) often enhances the quality of qualitative research.

Prospects: A Road Ahead for Qualitative Research

Qualitative research is a vibrant and evolving discipline wherein different epistemological and disciplinary positions have their own characteristics and importance. Not surprisingly, owing to its emerging and varied features, no consensus on quality criteria has been reached to date. Researchers have raised various concerns and proposed several recommendations for editors and reviewers on conducting reviews of critical qualitative research (Levitt et al., 2021; McGinley et al., 2021). The following are some prospects and recommendations for the maturation of qualitative research and its quality evaluation:

In general, most manuscript and grant reviewers are not qualitative experts. Hence, they are more likely to adopt a broad set of criteria. However, researchers and reviewers need to keep in mind that it is inappropriate to apply the same approaches and standards to all qualitative research. Therefore, future work needs to focus on educating researchers and reviewers about the criteria for evaluating qualitative research from within the suitable theoretical and methodological context.

There is an urgent need to renew and expand the critical assessment of some well-known and widely accepted tools (including checklists such as COREQ and SRQR) to interrogate their applicability to different aspects of research (along with their epistemological ramifications).

Efforts should be made to create more space for creativity, experimentation, and dialogue between the diverse traditions of qualitative research. This could help avoid imposing one's own set of quality criteria on the work of others.

Moreover, journal reviewers need to be aware of various methodological practices and philosophical debates.

It is pivotal to highlight the views and considerations of qualitative researchers and to bring them into a more open and transparent dialogue about assessing qualitative research in techno-scientific, academic, sociocultural, and political arenas.

Frequent debates on the use of evaluative criteria are required to resolve some potentially resolvable issues (including the applicability of a single set of criteria across disciplines). Such debates would not only benefit qualitative researchers themselves but also help strengthen the health and vitality of the entire discipline.

To conclude, I speculate that the criteria, and my perspective, may transfer to other methods, approaches, and contexts. I hope that they spark dialogue and debate (about criteria for excellent qualitative research and the underpinnings of the discipline more broadly) and thereby help improve the quality of qualitative studies. Further, I anticipate that this review will help researchers reflect on the quality of their own research and substantiate their research designs, and help reviewers assess qualitative research submitted to journals. On a final note, I highlight the need to formulate a framework (encompassing the prerequisites of a qualitative study) through the cohesive efforts of qualitative researchers from different disciplines with different theoretic-paradigmatic origins. I believe that tailoring such a framework (of guiding principles) paves the way for qualitative researchers to consolidate the status of qualitative research in the wide-ranging open science debate. Dialogue on this issue across different approaches is crucial for the future prospects of socio-techno-educational research.

Amin, M. E. K., Nørgaard, L. S., Cavaco, A. M., Witry, M. J., Hillman, L., Cernasev, A., & Desselle, S. P. (2020). Establishing trustworthiness and authenticity in qualitative pharmacy research. Research in Social and Administrative Pharmacy, 16 (10), 1472–1482.

Barker, C., & Pistrang, N. (2005). Quality criteria under methodological pluralism: Implications for conducting and evaluating research. American Journal of Community Psychology, 35 (3–4), 201–212.

Bryman, A., Becker, S., & Sempik, J. (2008). Quality criteria for quantitative, qualitative and mixed methods research: A view from social policy. International Journal of Social Research Methodology, 11 (4), 261–276.

Caelli, K., Ray, L., & Mill, J. (2003). ‘Clear as mud’: Toward greater clarity in generic qualitative research. International Journal of Qualitative Methods, 2 (2), 1–13.

CASP (2021). CASP checklists. Retrieved May 2021 from https://casp-uk.net/casp-tools-checklists/

Cohen, D. J., & Crabtree, B. F. (2008). Evaluative criteria for qualitative research in health care: Controversies and recommendations. The Annals of Family Medicine, 6 (4), 331–339.

Denzin, N. K., & Lincoln, Y. S. (2005). Introduction: The discipline and practice of qualitative research. In N. K. Denzin & Y. S. Lincoln (Eds.), The SAGE handbook of qualitative research (pp. 1–32). Sage Publications Ltd.

Elliott, R., Fischer, C. T., & Rennie, D. L. (1999). Evolving guidelines for publication of qualitative research studies in psychology and related fields. British Journal of Clinical Psychology, 38 (3), 215–229.

Epp, A. M., & Otnes, C. C. (2021). High-quality qualitative research: Getting into gear. Journal of Service Research. https://doi.org/10.1177/1094670520961445

Guba, E. G. (1990). The paradigm dialog. In Alternative Paradigms Conference, March 1989, Indiana University, School of Education, San Francisco, CA, US. Sage Publications, Inc.

Hammersley, M. (2007). The issue of quality in qualitative research. International Journal of Research and Method in Education, 30 (3), 287–305.

Haven, T. L., Errington, T. M., Gleditsch, K. S., van Grootel, L., Jacobs, A. M., Kern, F. G., & Mokkink, L. B. (2020). Preregistering qualitative research: A Delphi study. International Journal of Qualitative Methods, 19, 1609406920976417.

Hays, D. G., & McKibben, W. B. (2021). Promoting rigorous research: Generalizability and qualitative research. Journal of Counseling and Development, 99 (2), 178–188.

Horsburgh, D. (2003). Evaluation of qualitative research. Journal of Clinical Nursing, 12 (2), 307–312.

Howe, K. R. (2004). A critique of experimentalism. Qualitative Inquiry, 10 (1), 42–46.

Johnson, J. L., Adkins, D., & Chauvin, S. (2020). A review of the quality indicators of rigor in qualitative research. American Journal of Pharmaceutical Education, 84 (1), 7120.

Johnson, P., Buehring, A., Cassell, C., & Symon, G. (2006). Evaluating qualitative management research: Towards a contingent criteriology. International Journal of Management Reviews, 8 (3), 131–156.

Klein, H. K., & Myers, M. D. (1999). A set of principles for conducting and evaluating interpretive field studies in information systems. MIS Quarterly, 23 (1), 67–93.

Lather, P. (2004). This is your father’s paradigm: Government intrusion and the case of qualitative research in education. Qualitative Inquiry, 10 (1), 15–34.

Levitt, H. M., Morrill, Z., Collins, K. M., & Rizo, J. L. (2021). The methodological integrity of critical qualitative research: Principles to support design and research review. Journal of Counseling Psychology, 68 (3), 357.

Lincoln, Y. S., & Guba, E. G. (1986). But is it rigorous? Trustworthiness and authenticity in naturalistic evaluation. New Directions for Program Evaluation, 1986 (30), 73–84.

Lincoln, Y. S., & Guba, E. G. (2000). Paradigmatic controversies, contradictions and emerging confluences. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of qualitative research (2nd ed., pp. 163–188). Sage Publications.

Madill, A., Jordan, A., & Shirley, C. (2000). Objectivity and reliability in qualitative analysis: Realist, contextualist and radical constructionist epistemologies. British Journal of Psychology, 91 (1), 1–20.

Mays, N., & Pope, C. (2020). Quality in qualitative research. Qualitative Research in Health Care. https://doi.org/10.1002/9781119410867.ch15

McGinley, S., Wei, W., Zhang, L., & Zheng, Y. (2021). The state of qualitative research in hospitality: A 5-year review 2014 to 2019. Cornell Hospitality Quarterly, 62 (1), 8–20.

Merriam, S., & Tisdell, E. (2016). Qualitative research: A guide to design and implementation. San Francisco, US.

Meyer, M., & Dykes, J. (2019). Criteria for rigor in visualization design study. IEEE Transactions on Visualization and Computer Graphics, 26 (1), 87–97.

Monrouxe, L. V., & Rees, C. E. (2020). When I say… quantification in qualitative research. Medical Education, 54 (3), 186–187.

Morrow, S. L. (2005). Quality and trustworthiness in qualitative research in counseling psychology. Journal of Counseling Psychology, 52 (2), 250.

Morse, J. M. (2003). A review committee’s guide for evaluating qualitative proposals. Qualitative Health Research, 13 (6), 833–851.

Nassaji, H. (2020). Good qualitative research. Language Teaching Research, 24 (4), 427–431.

O’Brien, B. C., Harris, I. B., Beckman, T. J., Reed, D. A., & Cook, D. A. (2014). Standards for reporting qualitative research: A synthesis of recommendations. Academic Medicine, 89 (9), 1245–1251.

O’Connor, C., & Joffe, H. (2020). Intercoder reliability in qualitative research: Debates and practical guidelines. International Journal of Qualitative Methods, 19, 1609406919899220.

Reid, A., & Gough, S. (2000). Guidelines for reporting and evaluating qualitative research: What are the alternatives? Environmental Education Research, 6 (1), 59–91.

Rocco, T. S. (2010). Criteria for evaluating qualitative studies. Human Resource Development International. https://doi.org/10.1080/13678868.2010.501959

Sandberg, J. (2000). Understanding human competence at work: An interpretative approach. Academy of Management Journal, 43 (1), 9–25.

Schwandt, T. A. (1996). Farewell to criteriology. Qualitative Inquiry, 2 (1), 58–72.

Seale, C. (1999). Quality in qualitative research. Qualitative Inquiry, 5 (4), 465–478.

Shenton, A. K. (2004). Strategies for ensuring trustworthiness in qualitative research projects. Education for Information, 22 (2), 63–75.

Sparkes, A. C. (2001). Myth 94: Qualitative health researchers will agree about validity. Qualitative Health Research, 11 (4), 538–552.

Spencer, L., Ritchie, J., Lewis, J., & Dillon, L. (2004). Quality in qualitative evaluation: A framework for assessing research evidence.

Stenfors, T., Kajamaa, A., & Bennett, D. (2020). How to assess the quality of qualitative research. The Clinical Teacher, 17 (6), 596–599.

Taylor, E. W., Beck, J., & Ainsworth, E. (2001). Publishing qualitative adult education research: A peer review perspective. Studies in the Education of Adults, 33 (2), 163–179.

Tong, A., Sainsbury, P., & Craig, J. (2007). Consolidated criteria for reporting qualitative research (COREQ): A 32-item checklist for interviews and focus groups. International Journal for Quality in Health Care, 19 (6), 349–357.

Tracy, S. J. (2010). Qualitative quality: Eight “big-tent” criteria for excellent qualitative research. Qualitative Inquiry, 16 (10), 837–851.

Open access funding provided by TU Wien (TUW).

Author information

Authors and affiliations.

Faculty of Informatics, Technische Universität Wien, 1040, Vienna, Austria

Drishti Yadav

Corresponding author

Correspondence to Drishti Yadav.

Ethics declarations

Conflict of interest.

The author declares no conflict of interest.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Yadav, D. Criteria for Good Qualitative Research: A Comprehensive Review. Asia-Pacific Edu Res 31, 679–689 (2022). https://doi.org/10.1007/s40299-021-00619-0


Accepted : 28 August 2021

Published : 18 September 2021

Issue Date : December 2022

DOI : https://doi.org/10.1007/s40299-021-00619-0


- Qualitative research

- Evaluative criteria


What Should Be the Characteristics of a Good Research Paper?

by team@blp


When people want answers to particular problems, they search for information on them and then build on what they find in order to confirm or refute it. Research papers are common college assignments.

A research paper follows specific research and writing guidelines to answer a particular question or assigned topic. The writer consults credible research sources and argues the findings in an orderly manner. To be considered good, a research paper should have the following characteristics.

In this Article

- Gives credit to previous research work on the topic

- It’s hooked on a relevant research question

- It must be based on appropriate, systematic research methods

- The information must be accurate and controlled

- It must be verifiable and rigorous

- Be careful with the topic you choose

- Decide the sources you want to use

- Create your thesis statement

- Plan your points

- Write your paper

- Characteristics of a good research paper

Gives credit to previous research work on the topic

Writing a research paper aims to discover new knowledge, but that knowledge must rest on a foundation: the research other scholars have already done. The student must acknowledge this previous research and avoid merely duplicating it.

A college student must do in-depth research to produce a credible research paper. This makes choosing a topic, selecting sources, and developing the paper’s design a lengthy and complex process, and it takes considerable knowledge to piece everything together.

It’s hooked on a relevant research question

All the time a student spends researching multiple sources serves to answer a specific research question. The question must be relevant to current needs, and it guides both the information the student uses and the line of argument they take.

It must be based on appropriate, systematic research methods

The research methodology a student chooses determines the value of the information they gather and present. The methods must be valid and credible to produce reliable outcomes. Whether the student chooses a qualitative, quantitative, or mixed-methods approach, the methods must be appropriate and relevant to the research question.

The information must be accurate and controlled

A good research paper presents specific, scientific information rather than generalizations. That is why it must include references and record tests and information accurately. The information must also be controlled: the writer stays within the topic from the first step of research to the last.

It must be verifiable and rigorous

The student must use information and make arguments that can be verified. If the paper reports a test, another researcher must be able to replicate it. The sources must be verifiable and accurate. Without rigorous, in-depth research strategies, the paper cannot be good. Students must put considerable effort into both the writing and the research processes to ensure the information is credible, clear, concise, original, and precise.

How to write a good research paper

To write a good research paper, you must first understand what kind of question you have been assigned. Then choose the best topic, ideally one you will enjoy writing about. The following points will help you write a good research paper.

Be careful with the topic you choose

Select a topic you enjoy. Choose one that is easy to research and that offers a broad enough area of study.

Decide the sources you want to use

If your instructor doesn’t restrict the sources you may use, keep an open mind so that you don’t limit yourself to a few specific sources of information. Sometimes you will find helpful information in sources you least expected to be useful.

Create your thesis statement

Write a central statement on which to base your position in the research. Make it coherent and debatable, and let it summarize your arguments.

Plan your points

Create an outline that will guide you as you argue your points:

- Start with the most vital points and smooth the flow.

- Pay attention to paragraph structure and let your arguments be clear.

- Finish with a compelling conclusion, and don’t forget to cite your sources.

Characteristics of a good research paper

A research paper requires extensive research to gather solid points that support your position. First, the sources you use must be verifiable by any other researcher. Second, your research work must be original for your paper to be credible. Third, each paragraph should develop its point coherently. Finally, your findings must be tied to the research question and provide answers that apply to today’s society.

Author’s Bio

Helen Birk is an online freelance writer who holds an outstanding record of helping numerous students do their academic assignments. She is an expert in essays and thesis writing, and students simply love her for her high-quality work. In addition, she enjoys cycling, doing pencil sketching, and listening to spiritual podcasts in her free time.


13.1 Formatting a Research Paper

Learning objectives.

- Identify the major components of a research paper written using American Psychological Association (APA) style.

- Apply general APA style and formatting conventions in a research paper.

In this chapter, you will learn how to use APA style, the documentation and formatting style followed by the American Psychological Association, as well as MLA style, from the Modern Language Association. Several major formatting styles are used in academic texts:

- AMA (American Medical Association) for medicine, health, and biological sciences

- APA (American Psychological Association) for education, psychology, and the social sciences

- Chicago—a common style used in everyday publications like magazines, newspapers, and books

- MLA (Modern Language Association) for English, literature, arts, and humanities

- Turabian—another common style designed for its universal application across all subjects and disciplines

While all the formatting and citation styles have their own use and applications, in this chapter we focus our attention on the two styles you are most likely to use in your academic studies: APA and MLA.

If you find that the rules of proper source documentation are difficult to keep straight, you are not alone. Writing a good research paper is, in and of itself, a major intellectual challenge. Having to follow detailed citation and formatting guidelines as well may seem like just one more task to add to an already-too-long list of requirements.

Following these guidelines, however, serves several important purposes. First, it signals to your readers that your paper should be taken seriously as a student’s contribution to a given academic or professional field; it is the literary equivalent of wearing a tailored suit to a job interview. Second, it shows that you respect other people’s work enough to give them proper credit for it. Finally, it helps your reader find additional materials if he or she wishes to learn more about your topic.

Furthermore, producing a letter-perfect APA-style paper need not be burdensome. Yes, it requires careful attention to detail. However, you can simplify the process if you keep these broad guidelines in mind:

- Work ahead whenever you can. Chapter 11 “Writing from Research: What Will I Learn?” includes tips for keeping track of your sources early in the research process, which will save time later on.

- Get it right the first time. Apply APA guidelines as you write, so you will not have much to correct during the editing stage. Again, putting in a little extra time early on can save time later.

- Use the resources available to you. In addition to the guidelines provided in this chapter, you may wish to consult the APA website at http://www.apa.org or the Purdue University Online Writing lab at http://owl.english.purdue.edu , which regularly updates its online style guidelines.

General Formatting Guidelines

This chapter provides detailed guidelines for using the citation and formatting conventions developed by the American Psychological Association, or APA. Writers in disciplines as diverse as astrophysics, biology, psychology, and education follow APA style. The major components of a paper written in APA style are listed in the following box.

These are the major components of an APA-style paper:

- Title page

- Abstract

- Body, which includes the following:

  - Headings and, if necessary, subheadings to organize the content

  - In-text citations of research sources

- References page

All these components must be saved in one document, not as separate documents.

The title page of your paper includes the following information:

- Title of the paper

- Author’s name

- Name of the institution with which the author is affiliated

- Header at the top of the page with the paper title (in capital letters) and the page number (If the title is lengthy, you may use a shortened form of it in the header.)

List the first three elements in the order given in the previous list, centered about one third of the way down from the top of the page. Use the headers and footers tool of your word-processing program to add the header, with the title text at the left and the page number in the upper-right corner. Your title page should look like the following example.

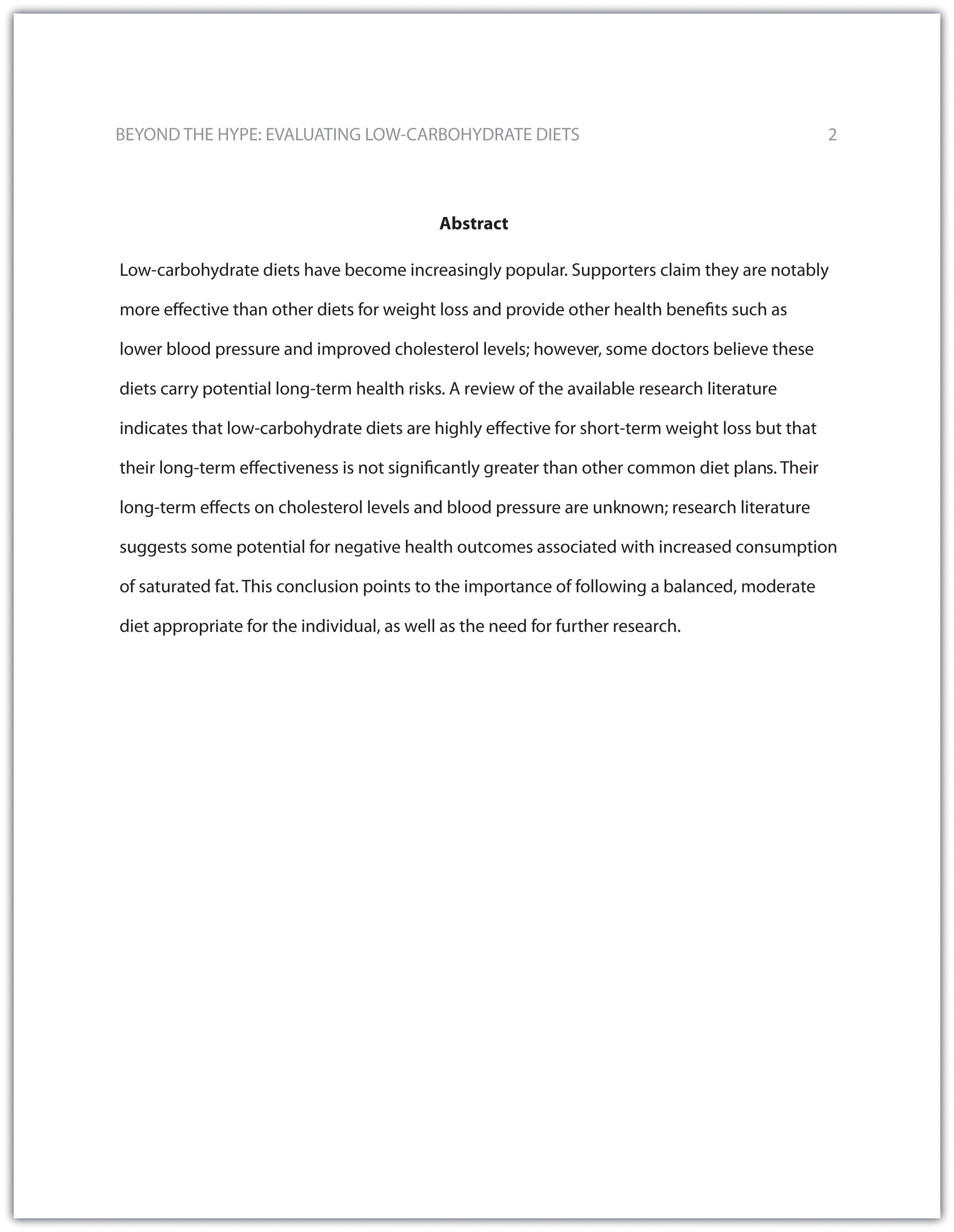

The next page of your paper provides an abstract , or brief summary of your findings. An abstract does not need to be provided in every paper, but an abstract should be used in papers that include a hypothesis. A good abstract is concise—about one hundred fifty to two hundred fifty words—and is written in an objective, impersonal style. Your writing voice will not be as apparent here as in the body of your paper. When writing the abstract, take a just-the-facts approach, and summarize your research question and your findings in a few sentences.

In Chapter 12 “Writing a Research Paper” , you read a paper written by a student named Jorge, who researched the effectiveness of low-carbohydrate diets. Read Jorge’s abstract. Note how it sums up the major ideas in his paper without going into excessive detail.

Write an abstract summarizing your paper. Briefly introduce the topic, state your findings, and sum up what conclusions you can draw from your research. Use the word count feature of your word-processing program to make sure your abstract does not exceed one hundred fifty words.
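If you want to check the length outside your word processor, the following Python sketch counts words by splitting on whitespace and compares the total with the one hundred fifty to two hundred fifty word range given above. The function names are hypothetical, and the whitespace rule is an assumption: word processors may count hyphenated words, numbers, or symbols differently, so treat the result as an approximation.

```python
def abstract_word_count(text: str) -> int:
    """Count words as runs of non-whitespace characters (an approximation)."""
    return len(text.split())


def check_abstract_length(text: str, min_words: int = 150, max_words: int = 250) -> str:
    """Report whether an abstract falls inside the target word range."""
    count = abstract_word_count(text)
    if count < min_words:
        return f"{count} words: shorter than {min_words}"
    if count > max_words:
        return f"{count} words: longer than {max_words}"
    return f"{count} words: within {min_words}-{max_words}"
```

For the stricter limit in this exercise, call `check_abstract_length(abstract, max_words=150)`.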

Depending on your field of study, you may sometimes write research papers that present extensive primary research, such as your own experiment or survey. In your abstract, summarize your research question and your findings, and briefly indicate how your study relates to prior research in the field.

Margins, Pagination, and Headings

APA style requirements also address specific formatting concerns, such as margins, pagination, and heading styles, within the body of the paper. Review the following APA guidelines.

Use these general guidelines to format the paper:

- Set the top, bottom, and side margins of your paper at 1 inch.

- Use double-spaced text throughout your paper.

- Use a standard font, such as Times New Roman or Arial, in a legible size (10- to 12-point).

- Use continuous pagination throughout the paper, including the title page and the references section. Page numbers appear flush right within your header.

- Section headings and subsection headings within the body of your paper use different types of formatting depending on the level of information you are presenting. Additional details from Jorge’s paper are provided.

Begin formatting the final draft of your paper according to APA guidelines. You may work with an existing document or set up a new document if you choose. Include the following:

- Your title page

- The abstract you created in Note 13.8 “Exercise 1”

- Correct headers and page numbers for your title page and abstract

APA style uses section headings to organize information, making it easy for the reader to follow the writer’s train of thought and to know immediately what major topics are covered. Depending on the length and complexity of the paper, its major sections may also be divided into subsections, sub-subsections, and so on. These smaller sections, in turn, use different heading styles to indicate different levels of information. In essence, you are using headings to create a hierarchy of information.

The following heading styles used in APA formatting are listed in order of greatest to least importance:

- Section headings use centered, boldface type. Headings use title case, with important words in the heading capitalized.

- Subsection headings use left-aligned, boldface type. Headings use title case.

- The third level uses left-aligned, indented, boldface type. Headings use a capital letter only for the first word, and they end in a period.

- The fourth level follows the same style used for the previous level, but the headings are boldfaced and italicized.

- The fifth level follows the same style used for the previous level, but the headings are italicized and not boldfaced.

Visually, the hierarchy of information is organized as indicated in Table 13.1 “Section Headings” .

Table 13.1 Section Headings

A college research paper may not use all the heading levels shown in Table 13.1 “Section Headings” , but you are likely to encounter them in academic journal articles that use APA style. For a brief paper, you may find that level 1 headings suffice. Longer or more complex papers may need level 2 headings or other lower-level headings to organize information clearly. Use your outline to craft your major section headings and determine whether any subtopics are substantial enough to require additional levels of headings.

Working with the document you developed in Note 13.11 “Exercise 2” , begin setting up the heading structure of the final draft of your research paper according to APA guidelines. Include your title and at least two to three major section headings, and follow the formatting guidelines provided above. If your major sections should be broken into subsections, add those headings as well. Use your outline to help you.

Because Jorge used only level 1 headings, his Exercise 3 would look like the following:

Citation Guidelines

In-text citations.

Throughout the body of your paper, include a citation whenever you quote or paraphrase material from your research sources. As you learned in Chapter 11 “Writing from Research: What Will I Learn?” , the purpose of citations is twofold: to give credit to others for their ideas and to allow your reader to follow up and learn more about the topic if desired. Your in-text citations provide basic information about your source; each source you cite will have a longer entry in the references section that provides more detailed information.

In-text citations must provide the name of the author or authors and the year the source was published. (When a given source does not list an individual author, you may provide the source title or the name of the organization that published the material instead.) When directly quoting a source, you must also include in your citation the page number where the quote appears.

This information may be included within the sentence or in a parenthetical reference at the end of the sentence, as in these examples.

Epstein (2010) points out that “junk food cannot be considered addictive in the same way that we think of psychoactive drugs as addictive” (p. 137).

Here, the writer names the source author when introducing the quote and provides the publication date in parentheses after the author’s name. The page number appears in parentheses after the closing quotation marks and before the period that ends the sentence.

Addiction researchers caution that “junk food cannot be considered addictive in the same way that we think of psychoactive drugs as addictive” (Epstein, 2010, p. 137).

Here, the writer provides a parenthetical citation at the end of the sentence that includes the author’s name, the year of publication, and the page number separated by commas. Again, the parenthetical citation is placed after the closing quotation marks and before the period at the end of the sentence.

As noted in the book Junk Food, Junk Science (Epstein, 2010, p. 137), “junk food cannot be considered addictive in the same way that we think of psychoactive drugs as addictive.”

Here, the writer chose to mention the source title in the sentence (an optional piece of information to include) and followed the title with a parenthetical citation. Note that the parenthetical citation is placed before the comma that signals the end of the introductory phrase.

David Epstein’s book Junk Food, Junk Science (2010) pointed out that “junk food cannot be considered addictive in the same way that we think of psychoactive drugs as addictive” (p. 137).

Another variation is to introduce the author and the source title in your sentence and include the publication date and page number in parentheses within the sentence or at the end of the sentence. As long as you have included the essential information, you can choose the option that works best for that particular sentence and source.
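The single-author patterns above follow a fixed recipe: name, year, and, for direct quotations, the page number. The Python sketch below assembles the parenthetical and narrative forms from those parts. It is an illustration under stated assumptions, not an official APA tool: the function names are hypothetical, and it covers only a single author or organization name, leaving multiple-author rules to Section 13.2.

```python
from typing import Optional


def apa_parenthetical(author: str, year: int, page: Optional[int] = None) -> str:
    """Build a parenthetical citation such as (Epstein, 2010, p. 137).

    `author` is a surname, or an organization name or title when no
    individual author is listed (hypothetical helper, single author only).
    """
    parts = [author, str(year)]
    if page is not None:  # the page number is required for direct quotations
        parts.append(f"p. {page}")
    return "(" + ", ".join(parts) + ")"


def apa_narrative(author: str, year: int) -> str:
    """Build the narrative form used inside a sentence, e.g. Epstein (2010)."""
    return f"{author} ({year})"
```

For example, `apa_parenthetical("Epstein", 2010, 137)` returns `"(Epstein, 2010, p. 137)"` and `apa_narrative("Epstein", 2010)` returns `"Epstein (2010)"`, matching the examples above.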

Citing a book with a single author is usually a straightforward task. Of course, your research may require that you cite many other types of sources, such as books or articles with more than one author or sources with no individual author listed. You may also need to cite sources available both in print and online, as well as nonprint sources such as websites and personal interviews. Chapter 13 “APA and MLA Documentation and Formatting”, Section 13.2 “Citing and Referencing Techniques” and Section 13.3 “Creating a References Section” provide extensive guidelines for citing a variety of source types.

Writing at Work

APA is just one of several different styles with its own guidelines for documentation, formatting, and language usage. Depending on your field of interest, you may be exposed to additional styles, such as the following:

- MLA style. Determined by the Modern Language Association and used for papers in literature, languages, and other disciplines in the humanities.

- Chicago style. Outlined in the Chicago Manual of Style and sometimes used for papers in the humanities and the sciences; many professional organizations use this style for publications as well.

- Associated Press (AP) style. Used by professional journalists.

References List

The brief citations included in the body of your paper correspond to the more detailed citations provided at the end of the paper in the references section. In-text citations provide basic information—the author’s name, the publication date, and the page number if necessary—while the references section provides more extensive bibliographical information. Again, this information allows your reader to follow up on the sources you cited and do additional reading about the topic if desired.

The specific format of entries in the list of references varies slightly for different source types, but the entries generally include the following information:

- The name(s) of the author(s) or institution that wrote the source

- The year of publication and, where applicable, the exact date of publication

- The full title of the source

- For books, the city of publication

- For articles or essays, the name of the periodical or book in which the article or essay appears

- For magazine and journal articles, the volume number, issue number, and pages where the article appears

- For sources on the web, the URL where the source is located

The references page is double spaced and lists entries in alphabetical order by the author’s last name. If an entry continues for more than one line, the second line and each subsequent line are indented five spaces. Review the following example. ( Chapter 13 “APA and MLA Documentation and Formatting” , Section 13.3 “Creating a References Section” provides extensive guidelines for formatting reference entries for different types of sources.)
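The two mechanical rules in this paragraph, alphabetical order by the author’s last name and a five-space indent for every line after the first, can be sketched in Python with the standard `textwrap` module. This is a hypothetical helper for plain-text drafts: it assumes each entry begins with "Surname, Initials", so the text before the first comma serves as the sort key, and the 70-character line width is arbitrary. Double spacing is left to the word processor.

```python
import textwrap
from typing import List


def surname_key(entry: str) -> str:
    """Sort key: text before the first comma, assumed to be the first author's surname."""
    return entry.split(",", 1)[0].strip().casefold()


def format_reference_list(entries: List[str], width: int = 70) -> str:
    """Alphabetize entries by surname and give each a five-space hanging indent."""
    formatted = []
    for entry in sorted(entries, key=surname_key):
        # First line is flush left; every wrapped line starts with five spaces.
        formatted.append(textwrap.fill(entry, width=width, subsequent_indent=" " * 5))
    return "\n".join(formatted)
```

For example, passing the Tong (2007) and Tracy (2010) entries in either order places Tong first, because "tong" sorts before "tracy".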

In APA style, book and article titles are formatted in sentence case, not title case. Sentence case means that only the first word is capitalized, along with any proper nouns.

Key Takeaways

- Following proper citation and formatting guidelines helps writers ensure that their work will be taken seriously, give proper credit to other authors for their work, and provide valuable information to readers.

- Working ahead and taking care to cite sources correctly the first time are ways writers can save time during the editing stage of writing a research paper.

- APA papers usually include an abstract that concisely summarizes the paper.

- APA papers use a specific headings structure to provide a clear hierarchy of information.

- In APA papers, in-text citations usually include the name(s) of the author(s) and the year of publication.

- In-text citations correspond to entries in the references section, which provide detailed bibliographical information about a source.

Writing for Success Copyright © 2015 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Research Paper

Last updated: 29 December 2023

A research paper is the product of information gathering, analysis, thought, and time. When scholars want answers to questions, they search for information in order to build on, apply, confirm, or refute existing findings. Put simply, a research paper is the written result of that process, prepared according to specific requirements. Scientists also use research to develop and extend theories that advance the social and technological sciences. To write relevant papers, however, writers need to know what a research paper is and understand its structure, characteristics, and types.

Definition of a Research Paper

A research paper is a common assignment. It arises whenever students, scholars, or scientists need to answer specific questions using sources. In a research paper, the writer analyzes a question or topic, looks for secondary sources, and writes on the defined theme. For example, if the assignment is a research paper on the causes of global warming, the writer must prepare a research proposal that analyzes the important points and identifies credible sources. Whereas essays draw on personal knowledge, a research paper analyzes sources according to academic standards and must follow the expected structure of a research paper. Therefore, writers need to analyze their research paper topics, carry out the research, cover the key aspects, work through credible articles, and organize the final study properly.

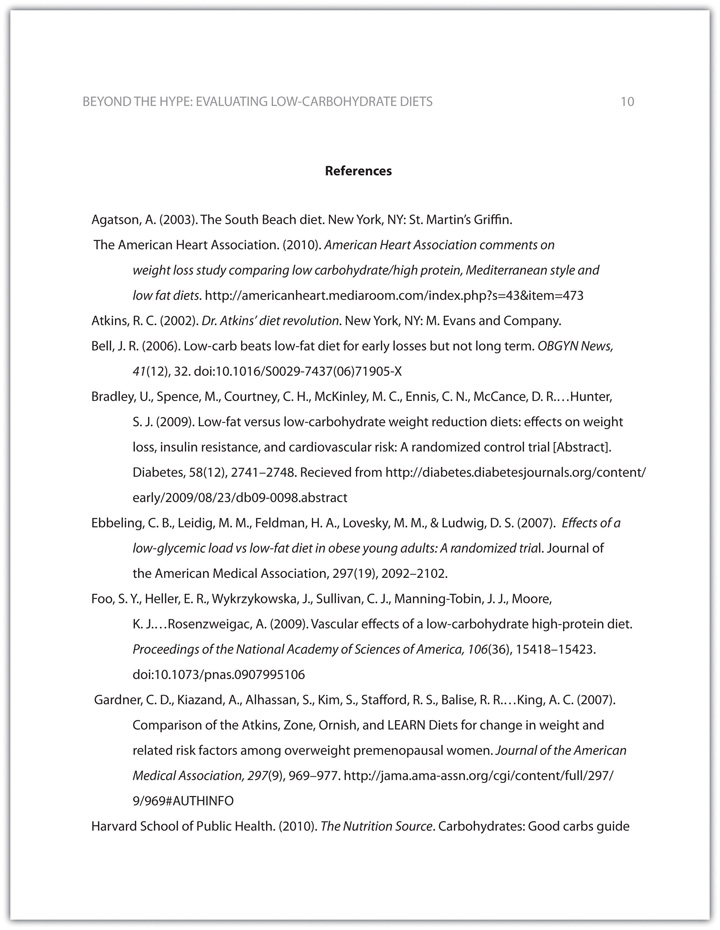

The Structure of a Research Work

The structure of a research paper depends on the assignment requirements. When students receive an assignment and its instructions, they need to analyze the specific research question or topic, find reliable sources, and write the final work. A full research paper consists of an abstract, outline, introduction, literature review, methodology, results, discussion, recommendations, limitations, conclusion, acknowledgments, and references. However, students may omit some of these sections depending on their instructions and the type of research paper. For instance, if the instructions do not call for conducting experiments, the methodology section can be skipped because there is no data to describe. In general, the final work consists of the following parts:


🔸 The First Part of a Research Study

Abstract, or executive summary, is the first section of a research paper. It states the study’s purpose, research questions or hypotheses, and main findings and conclusions. This paragraph of about 150 words should be written after the rest of the work is finished, so that it can describe the key aspects of the study, including the relevance of the findings.

Outline serves as a clear map of the structure of a research study.

Introduction presents the problem statement, indicates the methodology, and previews the important findings and principal conclusion. This section covers the rationale or background research behind the work, explains and defends its importance and relevance, briefly describes the experimental design, and states the research questions, hypotheses, or key aspects.

🔸 Literature Review and Research or Experiment

Literature Review analyzes past studies and scholarly articles so that the writer becomes familiar with the research question or topic. This section summarizes and synthesizes arguments and ideas from scholarly sources without adding new contributions, and it is organized around arguments or ideas, not around individual sources.

Methodology, or Materials and Methods, explains the research design. The techniques used to gather information and other aspects of the experiments must be described. For instance, students and scholars document all specialized materials and general procedures so that other researchers can reuse some or all of the methods in further studies or judge the scientific merit of the work. Scientists should also explain how they conduct their experiments.

Results present the information or data obtained from the research or experiment. Scholars should present and illustrate their findings, and this section may include tables or figures.

🔸 Analysis of Findings

Discussion is the section in which scientists revisit the information from the introduction, evaluate the results, and compare them with past studies. Students and scholars interpret the data or findings in appropriate depth. For example, if the results differ from initial expectations, scientists should explain why that may have happened. If the results agree with the rationale, scientists should describe the theories that the evidence supports.

Recommendations grow out of the discussion section: here scholars propose potential solutions or new ideas based on the results. If scientists have suggestions for improving the research so that other scholars can build on the evidence in further studies, they should state them in this section.

Limitations considers the weaknesses of the research and its results in order to point toward new directions. For instance, if researchers identify limitations that could have affected the experiments, they should report them so that other scholars do not repeat the same mistakes. Scientists should also acknowledge any contradictory results in this section rather than leaving them out.

🔸 The Final Part of a Conducted Research

Conclusion presents the final claims of a research paper based on its findings. This section covers final thoughts and summarizes the whole work. It can also absorb the limitations and recommendations when those would be too short to stand as separate sections; in that case, separate headings for recommendations and limitations are not needed.

Acknowledgments or Appendix may take different forms, from paragraphs to charts. In this section, scholars include additional information on a research paper.

References are the section where students, scholars, or scientists list all sources used, following the required format and academic rules.

Research Characteristics

Any type of work must meet certain standards, and a research paper is no exception. The main characteristics of research papers are length, style, format, and sources. Firstly, the length of a research work determines the number of sources that need to be analyzed. Then, the style must be formal and use impersonal and inclusive language. In turn, the format refers to the academic standards for organizing the final work, including its structure and norms. Finally, the sources and their number define a work as a research paper because of the volume of information analyzed. Hence, these characteristics must be considered while writing research papers.

Types of Research Papers

In general, the length of assignments varies depending on the instructions. There are two main types of research papers: typical and serious works. Firstly, a typical research paper may be definitive, argumentative, interpretive, or another kind of work. Typical papers are 2 to 10 pages long, and in them students analyze research questions or specific topics. Then, a serious research study is an expanded version of a typical work and is longer than 10 pages. Basically, such works involve a serious analysis of many sources. Therefore, typical and serious works are the two types of research papers.

Typical Research Papers

Basically, typical research works depend on the assignment, the number of sources, and the paper's length. A typical research paper is usually a long essay that analyzes evidence. For example, high school and college students get such assignments to learn how to research and analyze topics. In this case, they do not need to conduct serious experiments involving data analysis and calculation. Instead, students use the Internet or libraries to search for credible secondary sources that offer potential answers to specific questions. As a result, students gather information on topics and learn how to take a defined side, present a unique position, or explain new directions. Hence, typical research papers require an analysis of primary and secondary sources without serious experiments or data.

Serious Research Studies

Although long papers require a lot of time for finding and analyzing credible sources, real experiments are an integral part of serious research work. Firstly, scholars at universities need to analyze information from past studies in order to expand on or disprove what is known about a topic. Then, if scholars want to prove specific positions or ideas, they must obtain real evidence. In this case, experiments can be surveys, calculations, or other types of data that scholars collect themselves. Moreover, a dissertation is a typical serious research paper that young scientists write based on an analysis of the literature, data from their own experiments, and conclusions at the end of the work. Thus, serious research papers are studies that take a lot of time and combine an analysis of sources with collected data and an interpretation of the results.

What makes a high quality clinical research paper?

Affiliation: BMJ, London, UK.

- PMID: 20233312

- DOI: 10.1111/j.1601-0825.2010.01663.x

The quality of a research paper depends primarily on the quality of the research study it reports. However, there is also much that authors can do to maximise the clarity and usefulness of their papers. Journals' instructions for authors often focus on the format, style, and length of articles but do not always emphasise the need to clearly explain the work's science and ethics: so this review reminds researchers that transparency is important too. The research question should be stated clearly, along with an explanation of where it came from and why it is important. The study methods must be reported fully and, where appropriate, in line with an evidence based reporting guideline such as the CONSORT statement for randomised controlled trials. If the study was a trial the paper should state where and when the study was registered and state its registration identifier. Finally, any relevant conflicts of interest should be declared.



113 Great Research Paper Topics


One of the hardest parts of writing a research paper can be just finding a good topic to write about. Fortunately, we've done the hard work for you and have compiled a list of 113 interesting research paper topics. They've been organized into ten categories and cover a wide range of subjects so you can easily find the best topic for you.

In addition to the list of good research topics, we've included advice on what makes a good research paper topic and how you can use your topic to start writing a great paper.

What Makes a Good Research Paper Topic?

Not all research paper topics are created equal, and you want to make sure you choose a great topic before you start writing. Below are the three most important factors to consider to make sure you choose the best research paper topics.

#1: It's Something You're Interested In

A paper is always easier to write if you're interested in the topic, and you'll be more motivated to do in-depth research and write a paper that really covers the entire subject. Even if a certain research paper topic is getting a lot of buzz right now or other people seem interested in writing about it, don't feel tempted to make it your topic unless you genuinely have some sort of interest in it as well.

#2: There's Enough Information to Write a Paper

Even if you come up with the absolute best research paper topic and you're so excited to write about it, you won't be able to produce a good paper if there isn't enough research about the topic. This can happen for very specific or specialized topics, as well as topics that are too new to have enough research done on them at the moment. Easy research paper topics will always be topics with enough information to write a full-length paper.

Trying to write a research paper on a topic that doesn't have much research on it is incredibly hard, so before you decide on a topic, do a bit of preliminary searching and make sure you'll have all the information you need to write your paper.

#3: It Fits Your Teacher's Guidelines

Don't get so carried away looking at lists of research paper topics that you forget any requirements or restrictions your teacher may have put on research topic ideas. If you're writing a research paper on a health-related topic, deciding to write about the impact of rap on the music scene probably won't be allowed, but there may be some sort of leeway. For example, if you're really interested in current events but your teacher wants you to write a research paper on a history topic, you may be able to choose a topic that fits both categories, like exploring the relationship between the US and North Korea. No matter what, always get your research paper topic approved by your teacher first before you begin writing.

113 Good Research Paper Topics

Below are 113 good research topics to help you get started on your paper. We've organized them into ten categories to make it easier to find the type of research paper topics you're looking for.

Arts/Culture

- Discuss the main differences in art from the Italian Renaissance and the Northern Renaissance.

- Analyze the impact a famous artist had on the world.

- How is sexism portrayed in different types of media (music, film, video games, etc.)? Has the amount/type of sexism changed over the years?

- How has the music of slaves brought over from Africa shaped modern American music?

- How has rap music evolved in the past decade?

- How has the portrayal of minorities in the media changed?

Current Events

- What have been the impacts of China's one-child policy?

- How have the goals of feminists changed over the decades?

- How has the Trump presidency changed international relations?

- Analyze the history of the relationship between the United States and North Korea.

- What factors contributed to the current decline in the rate of unemployment?

- What have been the impacts of states which have increased their minimum wage?

- How do US immigration laws compare to immigration laws of other countries?

- How have the US's immigration laws changed in the past few years/decades?

- How has the Black Lives Matter movement affected discussions and views about racism in the US?

- What impact has the Affordable Care Act had on healthcare in the US?

- What factors contributed to the UK deciding to leave the EU (Brexit)?

- What factors contributed to China becoming an economic power?

- Discuss the history of Bitcoin or other cryptocurrencies.

- Do students in schools that eliminate grades do better in college and their careers?

- Do students from wealthier backgrounds score higher on standardized tests?

- Do students who receive free meals at school get higher grades compared to when they weren't receiving a free meal?

- Do students who attend charter schools score higher on standardized tests than students in public schools?

- Do students learn better in same-sex classrooms?

- How does giving each student access to an iPad or laptop affect their studies?

- What are the benefits and drawbacks of the Montessori Method?

- Do children who attend preschool do better in school later on?

- What was the impact of the No Child Left Behind Act?

- How does the US education system compare to education systems in other countries?

- What impact do mandatory physical education classes have on students' health?

- Which methods are most effective at reducing bullying in schools?

- Do homeschoolers who attend college do as well as students who attended traditional schools?

- Does offering tenure increase or decrease quality of teaching?

- How does college debt affect future life choices of students?

- Should graduate students be able to form unions?

- What are different ways to lower gun-related deaths in the US?

- How and why have divorce rates changed over time?

- Is affirmative action still necessary in education and/or the workplace?

- Should physician-assisted suicide be legal?

- How has stem cell research impacted the medical field?

- How can human trafficking be reduced in the United States/world?

- Should people be able to donate organs in exchange for money?

- Which types of juvenile punishment have proven most effective at preventing future crimes?

- Has the increase in US airport security made passengers safer?

- Analyze the immigration policies of certain countries and how they are similar and different from one another.

- Several states have legalized recreational marijuana. What positive and negative impacts have they experienced as a result?

- Do tariffs increase the number of domestic jobs?

- Which prison reforms have proven most effective?

- Should governments be able to censor certain information on the internet?

- Which methods/programs have been most effective at reducing teen pregnancy?

- What are the benefits and drawbacks of the Keto diet?

- How effective are different exercise regimes for losing weight and maintaining weight loss?

- How do the healthcare plans of various countries differ from each other?

- What are the most effective ways to treat depression?

- What are the pros and cons of genetically modified foods?

- Which methods are most effective for improving memory?

- What can be done to lower healthcare costs in the US?

- What factors contributed to the current opioid crisis?

- Analyze the history and impact of the HIV/AIDS epidemic.

- Are low-carbohydrate or low-fat diets more effective for weight loss?

- How much exercise should the average adult be getting each week?

- Which methods are most effective to get parents to vaccinate their children?

- What are the pros and cons of clean needle programs?

- How does stress affect the body?

- Discuss the history of the conflict between Israel and the Palestinians.

- What were the causes and effects of the Salem Witch Trials?

- Who was responsible for the Iran-Contra situation?

- How have New Orleans and the government's response to natural disasters changed since Hurricane Katrina?

- What events led to the fall of the Roman Empire?

- What were the impacts of British rule in India?

- Was the atomic bombing of Hiroshima and Nagasaki necessary?

- What were the successes and failures of the women's suffrage movement in the United States?

- What were the causes of the Civil War?

- How did Abraham Lincoln's assassination impact the country and reconstruction after the Civil War?

- Which factors contributed to the colonies winning the American Revolution?

- What caused Hitler's rise to power?

- Discuss how a specific invention impacted history.

- What led to Cleopatra's fall as ruler of Egypt?

- How has Japan changed and evolved over the centuries?

- What were the causes of the Rwandan genocide?

- Why did Martin Luther decide to split with the Catholic Church?

- Analyze the history and impact of a well-known cult (Jonestown, Manson family, etc.)

- How did the sexual abuse scandal impact how people view the Catholic Church?

- How has the Catholic Church's power changed over the past decades/centuries?

- What are the causes behind the rise in atheism/agnosticism in the United States?